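Getting Started with CUDA Programming

Table of Contents

- Getting Started with CUDA Programming

- Matrix Addition Example

- Compiling

- Querying Local GPU Information

- Memory Management Functions

- Dedicated 2D Array Copy Functions

- Reference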

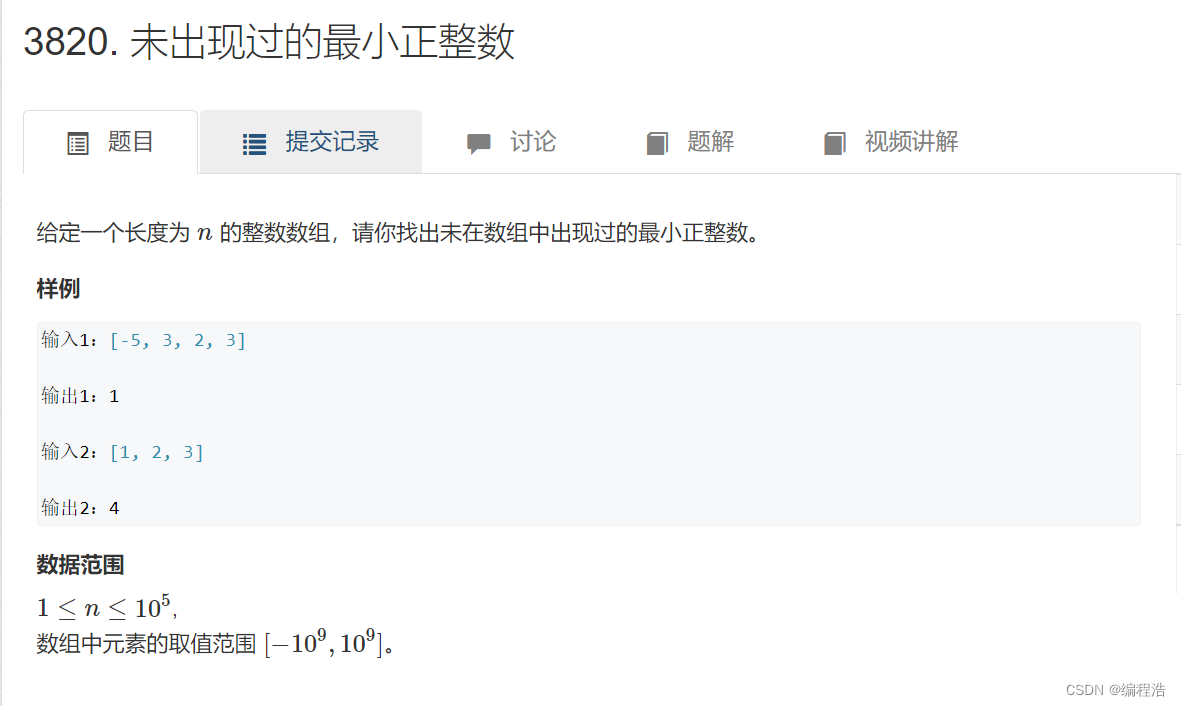

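Matrix Addition Example

CUDA C source files should be named *.cu; .cu files support C/C++ syntax.

This example performs the same matrix addition on the CPU and on the GPU and compares the running time of the two.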

#include <cuda_runtime.h>
#include <stdio.h>
#include <stdlib.h>

// Project helper header; expected to provide CHECK, initDevice, InitialData,
// CpuSecond and checkResult.
#include "cudastart.h"

// CPU reference implementation, used to measure the speedup.
// nx and ny are the matrix width and height.
void SumMatrix2DonCPU(float* Mat_A, float* Mat_B, float* Mat_C, int nx,
                      int ny) {
  float* a = Mat_A;
  float* b = Mat_B;
  float* c = Mat_C;
  for (int j = 0; j < ny; j++) {
    for (int i = 0; i < nx; i++) {
      c[i] = a[i] + b[i];
    }
    // Advance all three pointers to the next row.
    c += nx;
    b += nx;
    a += nx;
  }
}

// Kernel: each thread computes one element of the matrix.
// nx and ny are the matrix width and height.
__global__ void SumMatrix(float* Mat_A, float* Mat_B, float* Mat_C, int nx,
                          int ny) {
  int ix = threadIdx.x + blockDim.x * blockIdx.x;
  int iy = threadIdx.y + blockDim.y * blockIdx.y;
  // Row-major layout: the row stride is the width nx, not ny.
  int idx = ix + iy * nx;
  if (ix < nx && iy < ny) {
    Mat_C[idx] = Mat_A[idx] + Mat_B[idx];
  }
}

// Main function
int main(int argc, char** argv) {
  // Initialize the device.
  printf("Randy strating...\n");
  initDevice(0);

  // Input 2D matrices, 4096 x 4096, single-precision float.
  int nx = 1 << 12;
  int ny = 1 << 12;
  size_t nBytes = (size_t)nx * ny * sizeof(float);

  // malloc: allocate host memory.
  float* A_host = (float*)malloc(nBytes);
  float* B_host = (float*)malloc(nBytes);
  float* C_host = (float*)malloc(nBytes);
  float* C_cal_by_gpu = (float*)malloc(nBytes);
  InitialData(A_host, nx * ny);
  InitialData(B_host, nx * ny);

  // cudaMalloc: allocate device memory.
  float* A_dev = NULL;
  float* B_dev = NULL;
  float* C_dev = NULL;
  CHECK(cudaMalloc((void**)&A_dev, nBytes));
  CHECK(cudaMalloc((void**)&B_dev, nBytes));
  CHECK(cudaMalloc((void**)&C_dev, nBytes));

  // Copy the input data from host memory to device memory.
  CHECK(cudaMemcpy(A_dev, A_host, nBytes, cudaMemcpyHostToDevice));
  CHECK(cudaMemcpy(B_dev, B_host, nBytes, cudaMemcpyHostToDevice));

  // 2D thread block, 32 x 32.
  dim3 block(32, 32);
  // 2D grid, 128 x 128.
  dim3 grid((nx - 1) / block.x + 1, (ny - 1) / block.y + 1);

  // Time the GPU execution (kernel only; the copies are not included).
  double GpuStart = CpuSecond();
  // Launch the kernel on the grid.
  SumMatrix<<<grid, block>>>(A_dev, B_dev, C_dev, nx, ny);
  CHECK(cudaDeviceSynchronize());
  double gpuTime = CpuSecond() - GpuStart;
  printf("GPU Execution Time: %f sec\n", gpuTime);

  // Perform the same task on the CPU.
  double cpuStart = CpuSecond();
  SumMatrix2DonCPU(A_host, B_host, C_host, nx, ny);
  double cpuTime = CpuSecond() - cpuStart;
  printf("CPU Execution Time: %f sec\n", cpuTime);

  // Check that the GPU and CPU results match.
  CHECK(cudaMemcpy(C_cal_by_gpu, C_dev, nBytes, cudaMemcpyDeviceToHost));
  checkResult(C_host, C_cal_by_gpu, nx * ny);

  cudaFree(A_dev);
  cudaFree(B_dev);
  cudaFree(C_dev);
  free(A_host);
  free(B_host);
  free(C_host);
  free(C_cal_by_gpu);
  cudaDeviceReset();
  return 0;
}

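The original author has uploaded the full code to GitHub: https://github.com/ZihaoZhao/CUDA_study/tree/master/Sum_Matrix

The main idea is to initialize the device with initDevice(0); and then set up host and device memory. Two matrices, A_host and B_host, are initialized on the host, and their contents are copied into the device buffers A_dev and B_dev.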
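The listing relies on helpers from cudastart.h that are not shown in this post. As a rough idea of what the data-initialization and result-checking helpers might do, here is a minimal host-only C++ sketch. The names `InitialDataSketch` and `CheckResultSketch`, the value range, and the tolerance are all assumptions made for illustration; the real helpers in the repository may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdio>
#include <cstdlib>

// Hypothetical stand-in for InitialData: fills `data` with pseudo-random
// floats in [0, 25.5]. The real helper may use a different range or seed.
void InitialDataSketch(float* data, std::size_t size) {
  assert(data != nullptr);
  for (std::size_t i = 0; i < size; ++i) {
    data[i] = static_cast<float>(std::rand() & 0xFF) / 10.0f;
  }
}

// Hypothetical stand-in for checkResult: returns true when every pair of
// elements differs by at most `epsilon` (assumed tolerance).
bool CheckResultSketch(const float* host_ref, const float* gpu_ref,
                       std::size_t size, double epsilon = 1.0e-8) {
  for (std::size_t i = 0; i < size; ++i) {
    const double diff = std::fabs(static_cast<double>(host_ref[i]) -
                                  static_cast<double>(gpu_ref[i]));
    if (diff > epsilon) {
      std::printf("Mismatch at %zu: %f (host) vs %f (gpu)\n", i,
                  host_ref[i], gpu_ref[i]);
      return false;
    }
  }
  return true;
}
```

A very small tolerance is reasonable here because the CPU and the GPU each perform a single float addition per element, so the two results are expected to agree essentially exactly.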

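The 2D matrix is $4096 \times 4096$. The grid is $128 \times 128$ blocks and each thread block is $32 \times 32$ threads, giving $4096 \times 4096$ threads in total, so each thread handles exactly one element.

After the GPU finishes, the result is copied from device memory back to the host and compared with the CPU result.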
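The launch configuration can be checked on the host without a GPU. The sketch below is plain C++ that emulates the kernel's index arithmetic: it computes the grid size with the same ceiling-division formula and counts how often each linear index is visited. `CeilDiv` and `CountVisits` are hypothetical names introduced here, and the sketch assumes a row-major matrix whose row stride is the width nx (for a square matrix nx and ny coincide).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Ceiling division: number of blocks needed to cover n elements.
// Same formula as (n - 1) / block + 1 in the launch code, valid for n >= 1.
int CeilDiv(int n, int block) {
  assert(n >= 1 && block >= 1);
  return (n - 1) / block + 1;
}

// Emulates the kernel's index computation on the host for an nx-by-ny
// matrix and returns how many times each linear index was visited.
std::vector<int> CountVisits(int nx, int ny, int block_x, int block_y) {
  std::vector<int> visits(static_cast<std::size_t>(nx) * ny, 0);
  const int grid_x = CeilDiv(nx, block_x);
  const int grid_y = CeilDiv(ny, block_y);
  for (int by = 0; by < grid_y; ++by) {
    for (int bx = 0; bx < grid_x; ++bx) {
      for (int ty = 0; ty < block_y; ++ty) {
        for (int tx = 0; tx < block_x; ++tx) {
          const int ix = tx + block_x * bx;  // threadIdx.x + blockDim.x * blockIdx.x
          const int iy = ty + block_y * by;  // threadIdx.y + blockDim.y * blockIdx.y
          if (ix < nx && iy < ny) {          // same bounds check as the kernel
            ++visits[static_cast<std::size_t>(ix) + static_cast<std::size_t>(iy) * nx];
          }
        }
      }
    }
  }
  return visits;
}
```

`CeilDiv(4096, 32)` gives 128, matching the grid size quoted above. Calling `CountVisits` with a small non-square shape such as 5 by 3 and a 4 by 2 block should report every index visited exactly once, which is the property the bounds check and the nx stride are there to guarantee.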

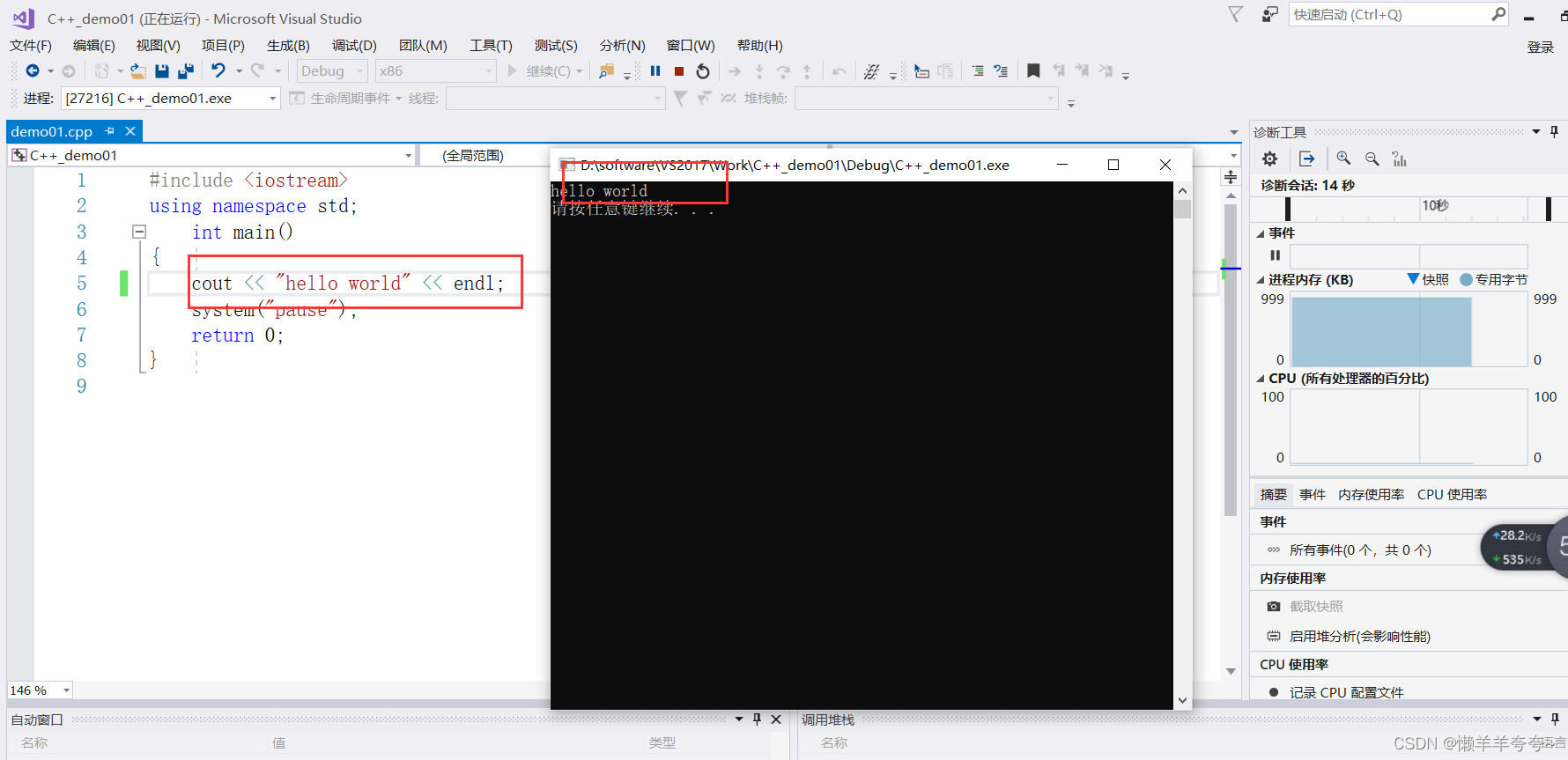

Compiling

CUDA source files use the .cu extension.

To compile a single-file CUDA program:

nvcc -o randy sum_martix.cu

This produces an executable named randy, which can be run with:

./randy

Output:

Randy strating...

Using device 0: NVIDIA GeForce RTX 3070 Laptop GPU

GPU Execution Time: 0.000816 sec

CPU Execution Time: 0.054139 sec

Check result success!

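The results show that the GPU is about $0.054139 \div 0.000816 \approx 66.35$ times faster than the CPU. Note that the GPU time covers only the kernel launch and synchronization; the host-device copies are not included.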
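The arithmetic behind this comparison can be written out explicitly. The following C++ sketch computes the speedup and, as an extra sanity check not in the original post, an effective memory throughput, assuming the element-wise add reads two arrays and writes one. `Speedup` and `EffectiveBandwidthGBs` are hypothetical helper names, and the figures come from a single wall-clock measurement, so they are indicative only.

```cpp
#include <cassert>
#include <cstddef>

// Speedup of the GPU run relative to the CPU run.
double Speedup(double cpu_seconds, double gpu_seconds) {
  assert(gpu_seconds > 0.0);
  return cpu_seconds / gpu_seconds;
}

// Effective throughput in GB/s (1 GB = 1e9 bytes) for an element-wise add.
// Assumption: two float arrays are read and one is written, so
// 3 * num_elements * sizeof(float) bytes are moved in total.
double EffectiveBandwidthGBs(std::size_t num_elements, double seconds) {
  assert(seconds > 0.0);
  const double bytes =
      3.0 * static_cast<double>(num_elements) * sizeof(float);
  return bytes / seconds / 1.0e9;
}
```

With the measured times, `Speedup(0.054139, 0.000816)` is roughly 66.35, and `EffectiveBandwidthGBs(4096 * 4096, 0.000816)` comes out at roughly 247 GB/s for the GPU versus about 3.7 GB/s for the CPU run. Since the kernel does almost no arithmetic per byte, throughput is arguably a more telling figure than the raw speedup.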

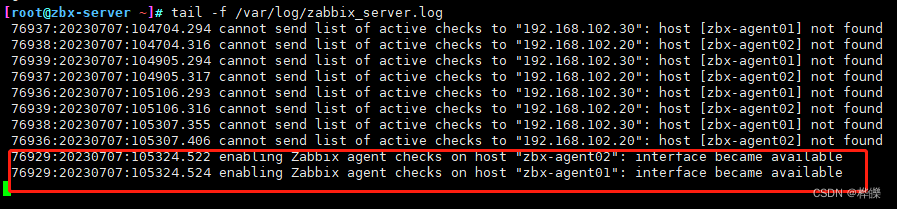

Querying Local GPU Information

#include <cuda.h>
#include <cuda_runtime.h>

#include <iostream>

int main() {
  int dev = 0;
  cudaDeviceProp devProp;
  cudaGetDeviceProperties(&devProp, dev);
  std::cout << "GPU Device Name" << dev << ": " << devProp.name << std::endl;
  std::cout << "SM Count: " << devProp.multiProcessorCount << std::endl;
  std::cout << "Shared Memory Size per Thread Block: "
            << devProp.sharedMemPerBlock / 1024.0 << " KB" << std::endl;
  std::cout << "Threads per Thread Block: " << devProp.maxThreadsPerBlock
            << std::endl;
  std::cout << "Threads per SM: " << devProp.maxThreadsPerMultiProcessor
            << std::endl;
  std::cout << "Warps per SM: " << devProp.maxThreadsPerMultiProcessor / 32
            << std::endl;
  return 0;
}

Output:

GPU Device Name0: NVIDIA GeForce RTX 3070 Laptop GPU

SM Count: 40

Shared Memory Size per Thread Block: 48 KB

Threads per Thread Block: 1024

Threads per SM: 1536

Warps per SM: 48

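This machine has an NVIDIA GeForce RTX 3070 Laptop GPU with 40 streaming multiprocessors (SMs). Each thread block can use up to 48 KB of shared memory and contain at most 1024 threads. Each SM can hold at most 1536 resident threads, which is 48 warps of 32 threads.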
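These limits say something about the 32 × 32 block used in the matrix example. The C++ sketch below works out how many blocks of a given size can be resident on one SM when only the thread limit is considered. This is a simplification: it ignores register use, shared memory, and the per-SM block limit, and `MaxBlocksPerSmByThreads` and `ThreadOccupancyBound` are hypothetical names introduced here.

```cpp
#include <cassert>

// Upper bound on resident blocks per SM from the thread limit alone.
// Assumption: registers, shared memory, and the per-SM block limit are
// ignored, so the real number can be lower.
int MaxBlocksPerSmByThreads(int max_threads_per_sm, int threads_per_block) {
  assert(max_threads_per_sm > 0 && threads_per_block > 0);
  return max_threads_per_sm / threads_per_block;
}

// Fraction of the SM's thread slots that those resident blocks fill.
double ThreadOccupancyBound(int max_threads_per_sm, int threads_per_block) {
  const int blocks =
      MaxBlocksPerSmByThreads(max_threads_per_sm, threads_per_block);
  return static_cast<double>(blocks * threads_per_block) / max_threads_per_sm;
}
```

With 1536 threads per SM, a 1024-thread block leaves room for only one resident block, so at most two thirds of the thread slots are filled. A 256-thread block (for example 16 × 16) would allow six blocks and fill all 1536 slots under the same simplification. Whether that makes the kernel faster depends on the workload and would need to be measured.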

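Memory Management Functions

The host and the device each have their own memory. C manages host memory through the standard library, while CUDA provides an API for managing device memory:

| C | CUDA | Purpose |
|---|---|---|
| malloc | cudaMalloc | Allocate memory |
| memcpy | cudaMemcpy | Copy memory |
| memset | cudaMemset | Set memory |
| free | cudaFree | Free memory |

Data is moved between host and device with cudaMalloc, cudaMemcpy and cudaFree, which were introduced in the previous post. The following shows how they are used in practice.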

#include <cuda.h>
#include <cuda_runtime.h>
#include <math.h>
#include <stdio.h>
#include <sys/time.h>

#include <iostream>
#include <vector>

// Wall-clock time in seconds, with microsecond resolution.
double CpuSecond() {
  struct timeval tp;
  gettimeofday(&tp, NULL);
  return ((double)tp.tv_sec + (double)tp.tv_usec * 1e-6);
}

int main() {
  float mats[6][4] = {
      {23, 33, 43, 53}, {15, 25, 35, 45}, {12, 22, 32, 42},
      {39, 49, 59, 69}, {20, 30, 40, 50}, {8, 18, 28, 38}
  };

  // Copy the data to the GPU.
  std::cout << "mats size: " << sizeof(mats) << std::endl;
  double GpuStart = CpuSecond();
  float *dev_mats;
  cudaError_t err = cudaSuccess;
  err = cudaMalloc((void **)&dev_mats, sizeof(mats));
  if (err != cudaSuccess) {
    printf("Failed to cudaMalloc!");
    return 1;
  }
  cudaMemcpy(dev_mats, mats, sizeof(mats), cudaMemcpyHostToDevice);
  std::cout << "Copied data to GPU.\n";

  // Copy the data back from the GPU into a flat host array.
  float host_mats[sizeof(mats) / sizeof(float)];
  cudaMemcpy(host_mats, dev_mats, sizeof(mats), cudaMemcpyDeviceToHost);
  double gpuTime = CpuSecond() - GpuStart;
  printf("GPU Execution Time: %f sec\n", gpuTime);
  std::cout << "Copied from cuda back to host.\n";
  std::cout << "host_mats size: " << sizeof(host_mats) << std::endl;
  for (size_t i = 0; i < sizeof(mats) / sizeof(float); i++) {
    std::cout << host_mats[i] << " ";
  }
  std::cout << std::endl;
  cudaFree(dev_mats);
  return 0;
}

Output:

mats size: 96

Copied data to GPU.

GPU Execution Time: 1.244266 sec

Copied from cuda back to host.

host_mats size: 96

23 33 43 53 15 25 35 45 12 22 32 42 39 49 59 69 20 30 40 50 8 18 28 38

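Dedicated 2D Array Copy Functions

To avoid the performance penalty that 2D arrays can incur inside kernels, CUDA provides a dedicated allocation function, cudaMallocPitch, and a matching copy function, cudaMemcpy2D, for transferring data to and from such allocations. In both functions the width is given in bytes. Their signatures are:

__host__ cudaError_t cudaMallocPitch ( void** devPtr, size_t* pitch, size_t width, size_t height )

__host__ cudaError_t cudaMemcpy2D ( void* dst, size_t dpitch, const void* src, size_t spitch, size_t width, size_t height, cudaMemcpyKind kind )

Test code: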

// Uses the same includes and CpuSecond() helper as the previous listing.
int main() {
  float mats[6][4] = {{23, 33, 43, 53}, {15, 25, 35, 45}, {12, 22, 32, 42},
                      {39, 49, 59, 69}, {20, 30, 40, 50}, {8, 18, 28, 38}};
  size_t width = 4;   // elements per row
  size_t height = 6;  // number of rows
  size_t pitch;       // row stride in bytes, set by cudaMallocPitch

  std::cout << "mats size: " << sizeof(mats) << std::endl;
  double GpuStart = CpuSecond();
  float *dev_mats;
  cudaError_t err = cudaSuccess;
  err = cudaMallocPitch((void **)&dev_mats, &pitch, sizeof(float) * width,
                        height);
  if (err != cudaSuccess) {
    printf("cudaMallocPitch failed!");
    return 1;
  }

  // Copy the data to the GPU; the host rows are tightly packed.
  cudaMemcpy2D(dev_mats, pitch, mats, sizeof(float) * width,
               sizeof(float) * width, height, cudaMemcpyHostToDevice);
  std::cout << "Copied data to GPU.\n";

  // Copy the data back from the GPU into a flat host array.
  float host_mats[sizeof(mats) / sizeof(float)];
  cudaMemcpy2D(host_mats, sizeof(float) * width, dev_mats, pitch,
               sizeof(float) * width, height, cudaMemcpyDeviceToHost);
  double gpuTime = CpuSecond() - GpuStart;
  printf("GPU Execution Time: %f sec\n", gpuTime);
  std::cout << "Copied from cuda back to host.\n";
  std::cout << "host_mats size: " << sizeof(host_mats) << std::endl;
  for (size_t i = 0; i < width * height; i++) {
    std::cout << host_mats[i] << " ";
  }
  std::cout << std::endl;
  cudaFree(dev_mats);
  return 0;
}

Output:

mats size: 96

Copied data to GPU.

GPU Execution Time: 1.251606 sec

Copied from cuda back to host.

host_mats size: 96

23 33 43 53 15 25 35 45 12 22 32 42 39 49 59 69 20 30 40 50 8 18 28 38

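These two functions can shorten kernel running time, because pitch-aligned rows allow coalesced access to global memory. The alignment itself costs extra time, however, so in this test the total time (1.251606 sec) is slightly higher than without the two functions (1.244266 sec). Both timings also include the one-time CUDA initialization triggered by the first runtime API call, which likely accounts for most of the roughly 1.2 sec.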
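To make the role of the pitch concrete, the following host-only C++ sketch mimics a pitched layout in ordinary memory. It rounds a row width up to an alignment, addresses an element by row and column, and copies row by row with separate source and destination pitches. The helper names and any alignment passed in (such as 512 bytes) are assumptions for illustration only; on a real device the pitch is whatever cudaMallocPitch returns.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Rounds a row width in bytes up to a multiple of `alignment`.
// The alignment is an assumed value; the real pitch must be read from
// the output of cudaMallocPitch.
std::size_t RoundUpPitch(std::size_t width_bytes, std::size_t alignment) {
  assert(alignment > 0);
  return (width_bytes + alignment - 1) / alignment * alignment;
}

// Address of element (row, col) in a pitched float array: step `row`
// pitches in bytes from the base, then `col` floats within the row.
float* PitchedElement(void* base, std::size_t pitch, std::size_t row,
                      std::size_t col) {
  char* row_start = static_cast<char*>(base) + row * pitch;
  return reinterpret_cast<float*>(row_start) + col;
}

// Host-only analogue of a 2D copy: copies `height` rows of `width_bytes`
// bytes each, advancing source and destination by their own pitches.
void Copy2DHost(void* dst, std::size_t dpitch, const void* src,
                std::size_t spitch, std::size_t width_bytes,
                std::size_t height) {
  assert(width_bytes <= dpitch && width_bytes <= spitch);
  for (std::size_t r = 0; r < height; ++r) {
    std::memcpy(static_cast<char*>(dst) + r * dpitch,
                static_cast<const char*>(src) + r * spitch, width_bytes);
  }
}
```

For the 6 × 4 float matrix above, each host row is 16 bytes, so the host pitch is 16 while the padded pitch is larger. Inside a kernel, the same row-then-column addressing as in `PitchedElement` would be applied to the pointer and pitch returned by cudaMallocPitch.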

Reference

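- Introduction to CUDA Programming (3): Getting Started with CUDA through a Matrix Addition Example

- CUDA Tutorial (3): Introduction to CUDA C Programming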