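Creating the Project

The project uses a microservice architecture. First create a parent project, cloud-photo, then create three child modules under it: api, image, and users.

Configuration:

application.yml. If Redis is not available yet, you can comment out the redis block for now. Note, however, that later startup steps require both a successful MySQL connection and a running Redis service.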

spring:
  cloud:
    # nacos config
    nacos:
      discovery:
        username: nacos
        password: nacos
        server-addr: 127.0.0.1:8848
  redis:
    host: 127.0.0.1
    port: 6379
  application:
    name: cloud-photo-api
  datasource:
    url: jdbc:mysql://127.0.0.1:3306/photo?zeroDateTimeBehavior=convertToNull&useSSL=false&allowMultiQueries=true
    username: root
    password: root
    driver-class-name: com.mysql.jdbc.Driver
  kafka:
    bootstrap-servers: 127.0.0.1:9092
    producer: # producer settings
      retries: 0 # number of retries
      acks: 1 # ack level: how many partition replicas must have the record before the producer is acknowledged (0, 1, or all/-1)
      batch-size: 16384 # batch size
      buffer-memory: 33554432 # size of the producer-side buffer
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
    consumer: # consumer settings
      group-id: javagroup # default consumer group ID
      enable-auto-commit: true # whether offsets are committed automatically
      auto-commit-interval: 100 # offset commit delay (how long after receiving a message the offset is committed)
      # earliest: if a partition has a committed offset, consume from it; otherwise consume from the beginning
      # latest: if a partition has a committed offset, consume from it; otherwise consume only newly produced data in that partition
      # none: if every partition of the topic has a committed offset, consume from after it; if any partition lacks one, throw an exception
      auto-offset-reset: latest
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
# service port
server:
  port: 9007

pom.xml configuration

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>cloud-photo-3121004041</artifactId>

<packaging>pom</packaging>

<version>1.0-SNAPSHOT</version>

<modules>

<module>cloud-photo-api</module>

<module>cloud-photo-image</module>

<module>cloud-photo-users</module>

</modules>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<mybatisplus.version>3.5.3</mybatisplus.version>

<spring-boot.version>2.3.12.RELEASE</spring-boot.version>

<spring-cloud.version>Hoxton.SR12</spring-cloud.version>

<spring-cloud-alibaba.version>2.2.10-RC1</spring-cloud-alibaba.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.13.1</version>

<scope>test</scope>

</dependency>

<!-- kafka-->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<!-- redis-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<!-- jedis-->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.0</version>

</dependency>

<!-- command execution -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-exec</artifactId>

<version>1.3</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.47</version>

</dependency>

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>3.5.3</version>

</dependency>

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-generator</artifactId>

<version>3.5.3</version>

</dependency>

<dependency>

<groupId>org.freemarker</groupId>

<artifactId>freemarker</artifactId>

<version>2.3.31</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.20</version>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring-boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring-cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring-cloud-alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

</project>

MySQL

Creating the tables

CREATE TABLE `tb_file_audit` (
  `file_audit_id` varchar(64) NOT NULL DEFAULT '',
  `file_name` varchar(128) DEFAULT NULL,
  `md5` varchar(64) DEFAULT NULL,
  `file_size` int(11) DEFAULT NULL,
  `storage_object_id` varchar(64) DEFAULT NULL,
  `audit_status` int(11) DEFAULT NULL,
  `remark` varchar(128) DEFAULT NULL,
  `create_time` timestamp NULL DEFAULT NULL,
  `user_file_id` varchar(64) DEFAULT NULL,
  PRIMARY KEY (`file_audit_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='File audit list';

CREATE TABLE `tb_file_md5` (
  `file_md5_id` varchar(64) NOT NULL DEFAULT '',
  `md5` varchar(64) DEFAULT NULL COMMENT 'File MD5',
  `file_size` int(11) DEFAULT NULL COMMENT 'File size',
  `storage_object_id` varchar(64) DEFAULT NULL COMMENT 'Storage ID',
  PRIMARY KEY (`file_md5_id`),
  KEY `tb_file_md5_md5_idx` (`md5`,`file_size`) USING BTREE
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='File MD5 list';

CREATE TABLE `tb_file_resize_icon` (
  `file_resize_icon_id` varchar(64) NOT NULL DEFAULT '' COMMENT 'id',
  `storage_object_id` varchar(100) DEFAULT NULL COMMENT 'Image storage ID',
  `icon_code` varchar(20) DEFAULT NULL COMMENT 'Image size (240_240, 600_600)',
  `container_id` varchar(20) DEFAULT NULL COMMENT 'Thumbnail storage bucket',
  `object_id` varchar(64) DEFAULT NULL COMMENT 'Thumbnail storage ID',
  PRIMARY KEY (`file_resize_icon_id`),
  KEY `tb_file_resize_icon_storage_object_id_idx` (`storage_object_id`,`icon_code`) USING BTREE
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='Image thumbnails';

CREATE TABLE `tb_media_info` (
  `media_info_id` varchar(64) DEFAULT NULL,
  `width` int(11) DEFAULT NULL,
  `height` int(11) DEFAULT NULL,
  `shooting_time` varchar(30) DEFAULT NULL COMMENT 'Shooting time',
  `gps_latitude` int(11) DEFAULT NULL,
  `gps_longitude` int(11) DEFAULT NULL,
  `storage_object_id` varchar(64) DEFAULT NULL
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='File media info';

CREATE TABLE `tb_storage_object` (
  `storage_object_id` varchar(64) NOT NULL,
  `storage_provider` varchar(10) DEFAULT NULL COMMENT 'Storage pool provider',
  `container_id` varchar(20) DEFAULT NULL COMMENT 'Bucket ID',
  `object_id` varchar(100) DEFAULT NULL COMMENT 'File ID in the storage pool',
  `md5` varchar(64) DEFAULT NULL COMMENT 'File MD5',
  `object_size` varchar(64) DEFAULT NULL COMMENT 'File size',
  PRIMARY KEY (`storage_object_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='Storage info for files in the resource pool';

CREATE TABLE `tb_user` (
  `user_id` varchar(10) NOT NULL COMMENT 'User ID',
  `user_name` varchar(100) DEFAULT NULL COMMENT 'User name',
  `department` varchar(50) DEFAULT NULL COMMENT 'User region',
  `phone` varchar(20) DEFAULT NULL COMMENT 'User phone number',
  `password` varchar(100) DEFAULT NULL COMMENT 'User password',
  `create_time` datetime DEFAULT NULL COMMENT 'User creation time',
  `update_time` datetime DEFAULT NULL COMMENT 'User last login time',
  `login_count` int(11) DEFAULT NULL COMMENT 'User login count',
  `role` varchar(20) DEFAULT NULL COMMENT 'User role',
  `birth` date DEFAULT NULL COMMENT 'User date of birth',
  `new_column` int(11) DEFAULT NULL,
  PRIMARY KEY (`user_id`),
  UNIQUE KEY `tb_user_user_id_uindex` (`user_id`),
  KEY `actable_idx_idx_user_name` (`user_name`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `tb_user_file` (
  `user_file_id` varchar(64) NOT NULL DEFAULT '',
  `user_id` varchar(64) DEFAULT NULL COMMENT 'User ID',
  `file_name` varchar(200) DEFAULT NULL COMMENT 'File name',
  `parent_id` varchar(64) DEFAULT NULL COMMENT 'Parent directory ID',
  `file_size` bigint(20) DEFAULT NULL COMMENT 'File size',
  `file_status` varchar(2) DEFAULT NULL COMMENT 'File status (1 normal, 2 deleted)',
  `file_type` varchar(10) DEFAULT NULL COMMENT 'File type',
  `is_folder` varchar(2) DEFAULT NULL COMMENT 'Whether this is a directory',
  `create_time` timestamp NULL DEFAULT NULL COMMENT 'Creation time',
  `modify_time` timestamp NULL DEFAULT NULL COMMENT 'Modification time',
  `storage_object_id` varchar(64) DEFAULT NULL COMMENT 'Storage ID',
  `category` int(11) DEFAULT NULL COMMENT 'File category',
  `audit_status` int(11) DEFAULT NULL COMMENT 'Audit status (00 pending, 01 approved, 02 rejected)',
  PRIMARY KEY (`user_file_id`),
  KEY `tb_user_file_user_id_idx` (`user_id`,`file_status`,`create_time`) USING BTREE
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
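The md5 and file_size columns in tb_file_md5 and tb_storage_object suggest that stored files are identified by their MD5 digest. The post does not show how that digest is computed, so the following is only a minimal sketch using the JDK's MessageDigest. The class name Md5Sketch and the method md5Hex are hypothetical and not part of the project.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper for illustration; not part of the original project.
final class Md5Sketch {

    // Returns the MD5 digest of the given bytes as 32 lowercase hex characters,
    // which fits in the varchar(64) md5 columns defined above.
    static String md5Hex(byte[] data) {
        try {
            MessageDigest digest = MessageDigest.getInstance("MD5");
            StringBuilder hex = new StringBuilder(32);
            for (byte b : digest.digest(data)) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // MD5 is one of the algorithms every Java platform must provide
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(md5Hex("hello".getBytes(StandardCharsets.UTF_8)));
    }
}
```

For real uploads one would presumably feed the file through the digest in chunks rather than load it fully into memory; that part is left out here.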

Kafka

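To get Kafka running, first see this article on kkoneone11's CSDN blog: "A Beginner-Friendly Guide to Installing and Starting Kafka, Shutdown Caveats, and Basic Usage" (Kafka的保姆级简易安装启动、关闭注意事项、简单使用).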

Operating Kafka locally

Open a new cmd window to run the commands:

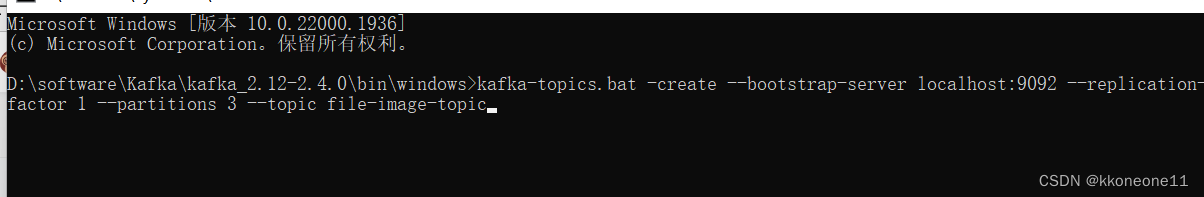

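1. Create a topic named file-image-topic

The command below creates a Kafka topic named "file-image-topic". It runs kafka-topics.bat with the following parameters:

- --bootstrap-server: the address and port of the Kafka cluster, here localhost:9092.

- --replication-factor: the replication factor of the topic, here 1, meaning each partition has a single replica.

- --partitions: the number of partitions, here 3, meaning the topic is split into 3 partitions.

- --topic: the name of the topic to create, here "file-image-topic".

kafka-topics.bat --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3 --topic file-image-topic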
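To make the --partitions 3 setting more concrete, here is a simplified sketch of how a keyed record can be mapped onto one of three partitions. It uses String.hashCode for brevity, whereas Kafka's default partitioner hashes the serialized key with murmur2, so real partition numbers will differ. PartitionSketch and partitionFor are hypothetical names used only for illustration.

```java
// Simplified model of key-based partition assignment; NOT Kafka's actual algorithm.
final class PartitionSketch {

    // Matches the --partitions 3 argument used when creating file-image-topic
    static final int PARTITIONS = 3;

    // Maps a key to a partition index in the range [0, PARTITIONS).
    // floorMod keeps the result non-negative even when hashCode() is negative.
    static int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), PARTITIONS);
    }

    public static void main(String[] args) {
        for (String key : new String[] {"user-1", "user-2", "user-3", "user-4"}) {
            System.out.println(key + " -> partition " + partitionFor(key));
        }
    }
}
```

The point of the sketch is that the same key always lands in the same partition, which is what preserves ordering per key. Records sent without a key are spread across partitions by the producer instead.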

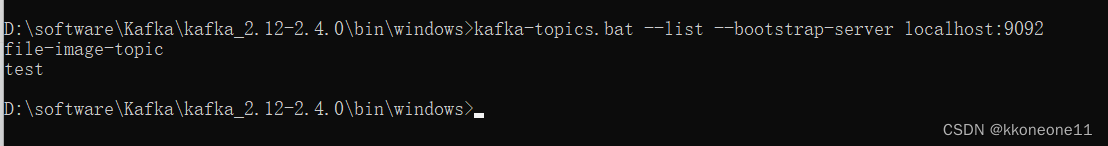

2. List the existing topics

kafka-topics.bat --list --bootstrap-server localhost:9092

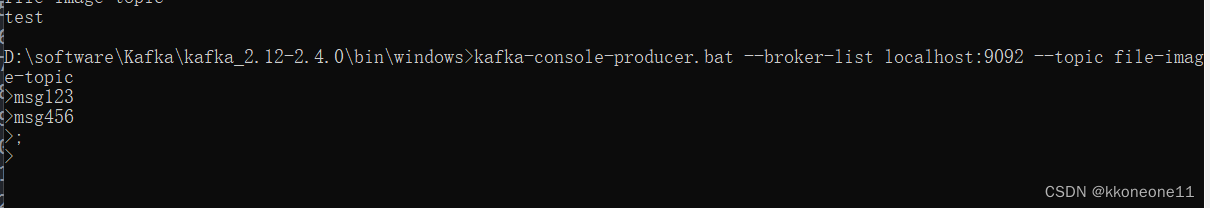

3. Start a console producer to send messages to file-image-topic

kafka-console-producer.bat --broker-list localhost:9092 --topic file-image-topic

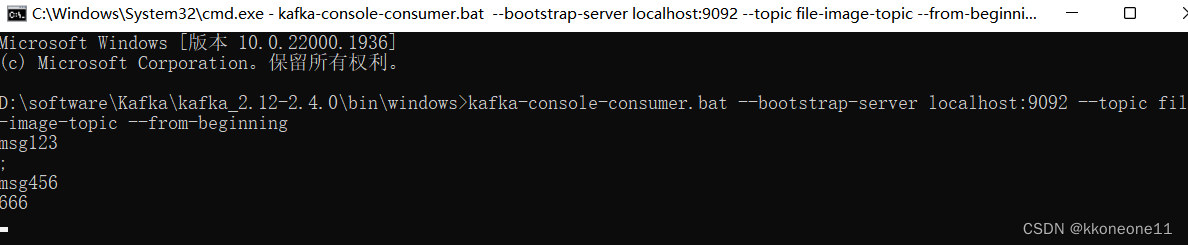

4. Start a console consumer to read file-image-topic from the beginning

kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic file-image-topic --from-beginning

Using Kafka in the Spring Boot project

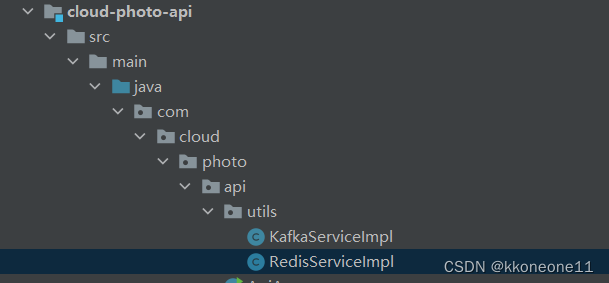

1. Create a KafkaServiceImpl class


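2. KafkaTemplate

The project talks to Kafka through an injected KafkaTemplate. Messages are sent with its send method. To read messages, a @KafkaListener listener is bound to the topic, and each message arrives as a ConsumerRecord<String,String> from which the key and value can be read.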

package com.cloud.photo.api.utils;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Service;

/**
 * @Author:kkoneone11
 * @name:KafkaServiceImpl
 * @Date:2023/7/9 14:20
 */
@Service
public class KafkaServiceImpl {

    private static final String FILE_IMAGE_TOPIC = "file-image-topic";

    // String keys and values, matching the String (de)serializers in application.yml
    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    /**
     * Send a message to Kafka
     */
    public void send(String message) {
        kafkaTemplate.send(FILE_IMAGE_TOPIC, message);
        System.out.println("send message :" + message);
    }

    /**
     * Consume messages from FILE_IMAGE_TOPIC
     */
    @KafkaListener(topics = {FILE_IMAGE_TOPIC})
    public void onMessage(ConsumerRecord<String, String> record) {
        System.out.println("onMessage,key=" + record.key() + ",value=" + record.value());
    }
}

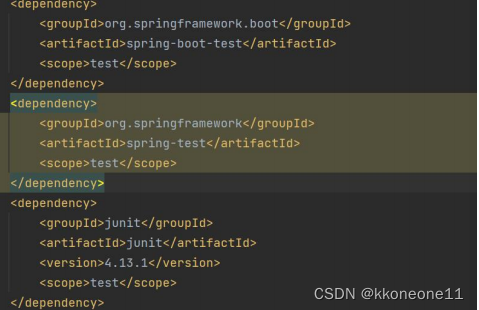

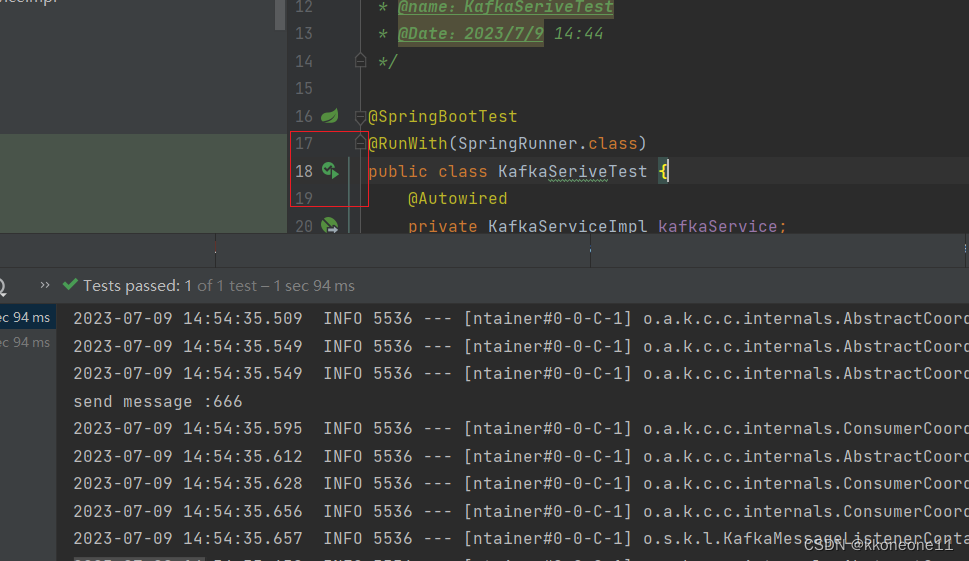

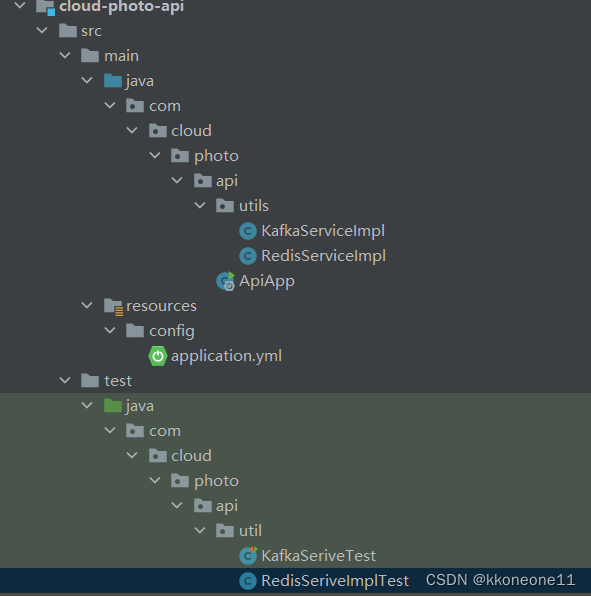

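3. Test the send method

Make sure the pom already includes the JUnit test framework.

First create a KafkaSeriveTest class under the test package.

Note that the class needs two annotations: @SpringBootTest and @RunWith(SpringRunner.class).

In the test class, inject the class under test with @Autowired. This works directly because KafkaServiceImpl is already managed by Spring Boot through @Service. Each test method must be annotated with @Test.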

package com.cloud.photo.api.util;

import com.cloud.photo.api.utils.KafkaServiceImpl;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

/**

* @Author:kkoneone11

* @name:KafkaSeriveTest

* @Date:2023/7/9 14:44

*/

@SpringBootTest

@RunWith(SpringRunner.class)

public class KafkaSeriveTest {

@Autowired

private KafkaServiceImpl kafkaService;

@Test

public void send(){

kafkaService.send("666");

}

}

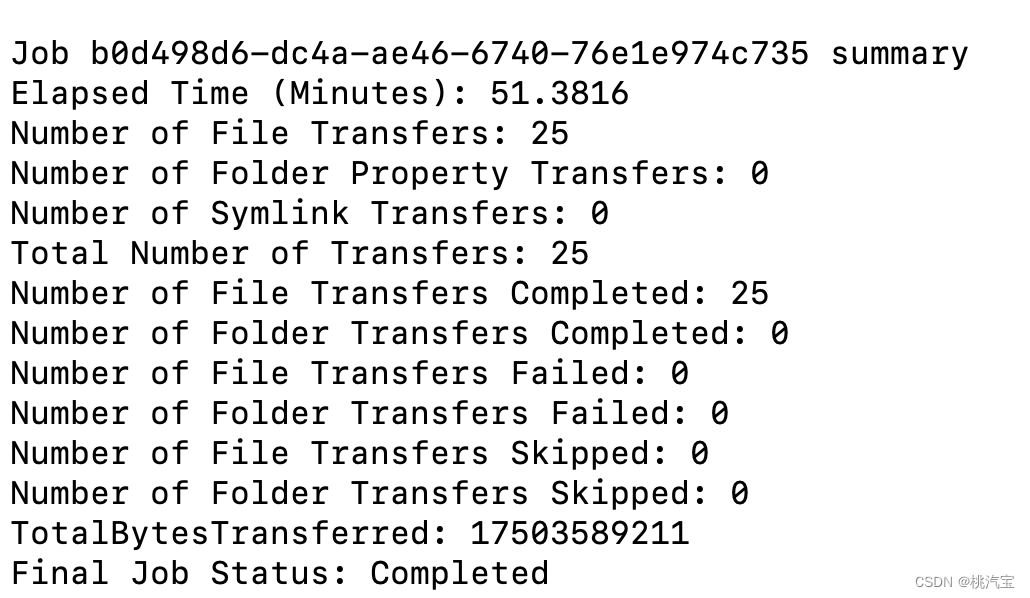

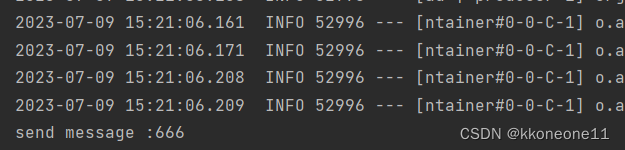

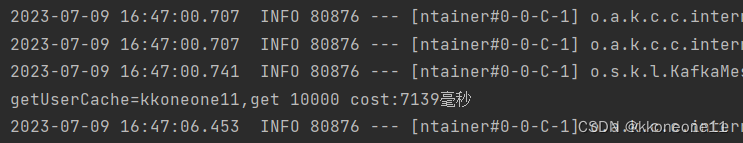

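Then run the test class. If the console shows the "send message" line printed by KafkaServiceImpl, the send succeeded; you can also check in Kafka that the message is there.

4. Test the consumer method

Messages are consumed by the @KafkaListener listener, so this method is tested by starting the application through the ApiApp startup class rather than by calling it from a test.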

package com.cloud.photo.api;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

/**

* @Author:kkoneone11

* @name:ApiApp

* @Date:2023/7/9 10:03

*/

@SpringBootApplication

public class ApiApp {

public static void main(String[] args) {

SpringApplication.run(ApiApp.class,args);

}

}

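The ApiApp main method now keeps running. Next, run the test method again to send a new message to the topic (the earlier 666 message has already been consumed).

The newly sent message:

The ApiApp console shows the message being consumed right away, which means the listener method ran and consumed it.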

Redis

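Redis is used here as a caching middleware. The operations used most are on ValueOperations: its set method stores a key-value pair, and its get method returns the value for a given key.

1. First create a RedisServiceImpl class

Redis is usually accessed through RedisTemplate. RedisTemplate is generic because Redis can store many kinds of objects; here we use StringRedisTemplate, a subclass of RedisTemplate whose keys and values are both Strings, which is all this example needs.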

package com.cloud.photo.api.utils;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.data.redis.core.ValueOperations;
import org.springframework.stereotype.Service;

/**
 * @Author:kkoneone11
 * @name:RedisServiceImpl
 * @Date:2023/7/9 15:30
 */
@Service
public class RedisServiceImpl {

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    private static final String USER_CACHE_PRE = "u_c_";

    /**
     * Write a user name to the cache
     */
    public void setUser2Cache(Long userId, String username) {
        // Prefix the key so cached user entries are easy to identify
        String cacheKey = USER_CACHE_PRE + userId;
        ValueOperations<String, String> valueOps = stringRedisTemplate.opsForValue();
        valueOps.set(cacheKey, username);
    }

    /**
     * Read a user name from the cache
     */
    public String getUserCache(Long userId) {
        String cacheKey = USER_CACHE_PRE + userId;
        ValueOperations<String, String> valueOps = stringRedisTemplate.opsForValue();
        return valueOps.get(cacheKey);
    }
}
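A cache like this is typically read with a "look in the cache first, fall back to the slower source on a miss, then store the result" flow. The sketch below shows that read path with a ConcurrentHashMap standing in for Redis so that it runs without a server. CacheAsideSketch, the loader function, and the loaderCalls counter are hypothetical; only the u_c_ key prefix is taken from RedisServiceImpl.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Illustration of a cache-aside read; an in-memory map stands in for Redis.
final class CacheAsideSketch {

    private static final String USER_CACHE_PRE = "u_c_";

    private final Map<String, String> cache = new ConcurrentHashMap<>();
    // Hypothetical slow lookup, e.g. a database query by user ID
    private final Function<Long, String> loader;
    // Counts how often the slow lookup was needed (for demonstration only)
    int loaderCalls = 0;

    CacheAsideSketch(Function<Long, String> loader) {
        this.loader = loader;
    }

    String getUserName(Long userId) {
        String key = USER_CACHE_PRE + userId;
        String cached = cache.get(key);
        if (cached != null) {
            return cached; // cache hit: the slow source is not touched
        }
        loaderCalls++;
        String loaded = loader.apply(userId);
        if (loaded != null) {
            cache.put(key, loaded); // remember the value for later reads
        }
        return loaded;
    }

    public static void main(String[] args) {
        CacheAsideSketch users = new CacheAsideSketch(id -> "kkoneone11");
        users.getUserName(888888L);
        users.getUserName(888888L);
        System.out.println("loader calls: " + users.loaderCalls);
    }
}
```

In the real service the map operations would be replaced by the set and get calls on ValueOperations shown above.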

2. Test: create a RedisSeriveImplTest class

package com.cloud.photo.api.utils;

import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.context.junit4.SpringRunner;

/**
 * @Author:kkoneone11
 * @name:RedisSeriveImplTest
 * @Date:2023/7/9 16:10
 */
@SpringBootTest
@RunWith(SpringRunner.class)
public class RedisSeriveImplTest {

    @Autowired
    private RedisServiceImpl redisService;

    @Test
    public void setUser2Cache() {
        redisService.setUser2Cache(888888L, "kkoneone11");
    }

    /**
     * Measure the time needed to read the name 10,000 times
     */
    @Test
    public void getUserCache() {
        long beginTime = System.currentTimeMillis();
        String userName = "";
        // i < 10000 so that the loop runs exactly 10,000 times
        for (int i = 0; i < 10000; i++) {
            userName = redisService.getUserCache(888888L);
        }
        System.out.println("getUserCache=" + userName + ",get 10000 cost:" + (System.currentTimeMillis() - beginTime) + " ms");
    }
}

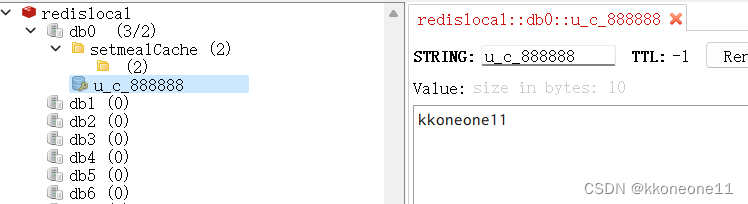

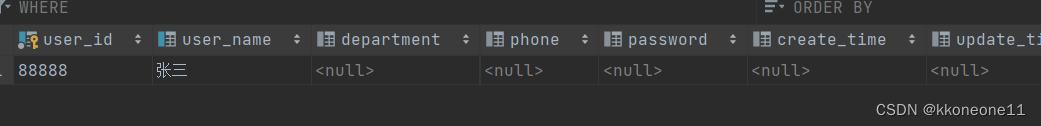

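After running the set method, the corresponding key and value are present in Redis.

After running the get method, you can see that it executed successfully.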

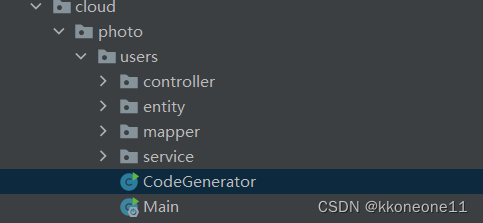

mybatis-plus-generator

1. Once the tables exist in the database and IDEA has connected to it successfully, create a CodeGenerator class in the cloud-photo-users module

package com.cloud.photo.users;

import com.baomidou.mybatisplus.generator.FastAutoGenerator;
import com.baomidou.mybatisplus.generator.config.OutputFile;
import com.baomidou.mybatisplus.generator.engine.FreemarkerTemplateEngine;

import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

/**
 * @Author:kkoneone11
 * @name:CodeGenerator
 * @Date:2023/7/9 16:53
 */
public class CodeGenerator {

    public static void main(String[] args) {
        // Remember to change the username and password to your own, and adjust the output directories below
        String url = "jdbc:mysql://localhost:3306/photo?useUnicode=true&characterEncoding=utf8";
        String username = "root";
        String password = "1234";

        FastAutoGenerator.create(url, username, password)
                .globalConfig(builder -> {
                    builder.author("ltao") // set the author
                            // .enableSwagger() // enable swagger mode
                            .fileOverride() // overwrite previously generated files
                            .disableOpenDir() // do not open the output directory afterwards
                            .outputDir("D:\\cloud\\cloud-photo\\cloud-photo-users" + "/src/main/java"); // output directory
                })
                .packageConfig(builder -> {
                    builder.parent("com.cloud.photo") // parent package name
                            .moduleName("users") // module name under the parent package; adjust as needed
                            .entity("entity") // entity package name
                            // .other("model.dto") // set a DTO package name here if needed
                            .pathInfo(Collections.singletonMap(OutputFile.xml, "D:/peixun/cloud-photo-g/cloud-photo-user"
                                    + "/src/main/java/com/cloud/photo/user/mapper")); // output path for the mapper XML
                })
                .injectionConfig(consumer -> {
                    Map<String, String> customFile = new HashMap<>();
                    // customFile.put("DTO.java", "/templates/entityDTO.java.ftl");
                    consumer.customFile(customFile);
                })
                .strategyConfig(builder -> {
                    builder.addInclude("tb_user") // tables to generate code for
                            .addTablePrefix("tb_"); // table prefix to strip
                })
                .templateEngine(new FreemarkerTemplateEngine()) // use the Freemarker template engine; the default is Velocity
                .execute();
    }
}
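With addTablePrefix("tb_"), the generator strips the prefix before deriving class names, which is why tb_user ends up as an entity named User. The following is a rough illustration of that naming rule written from scratch, not the generator's actual code; NamingSketch and entityName are hypothetical names.

```java
// Rough illustration of "strip prefix, then underscore to camel case" naming.
final class NamingSketch {

    // Derives an entity class name from a table name, e.g. tb_user_file -> UserFile.
    static String entityName(String table, String prefix) {
        // Drop the prefix only when the table name actually starts with it
        String base = table.startsWith(prefix) ? table.substring(prefix.length()) : table;
        StringBuilder name = new StringBuilder();
        for (String part : base.split("_")) {
            if (part.isEmpty()) {
                continue; // skip empty segments caused by doubled underscores
            }
            name.append(Character.toUpperCase(part.charAt(0))).append(part.substring(1));
        }
        return name.toString();
    }

    public static void main(String[] args) {
        System.out.println(entityName("tb_user", "tb_"));
        System.out.println(entityName("tb_user_file", "tb_"));
    }
}
```

To generate code for the other tables, their names would be added to addInclude alongside tb_user.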

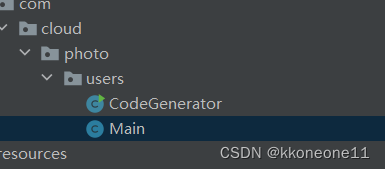

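2. Then create a Main startup class in the same module. Note that CodeGenerator has its own main method and is run directly to generate the code.

What differs from ApiApp is the @MapperScan annotation, which is needed to scan for the Mapper interfaces.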

package com.cloud.photo.users;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

/**

* @Author:kkoneone11

* @name:Main

* @Date:2023/7/9 17:10

*/

@SpringBootApplication

@MapperScan(basePackages = {"com.cloud.photo.users.mapper"})

public class Main {

public static void main(String[] args) {

SpringApplication.run(Main.class,args);

}

}

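3. Then run the main method and confirm that it completes successfully.

Working with MySQL

Testing

To query a user with getOne, first implement the getUserInfoById method in UserServiceImpl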

package com.cloud.photo.users.service.impl;

import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import com.cloud.photo.users.entity.User;
import com.cloud.photo.users.mapper.UserMapper;
import com.cloud.photo.users.service.IUserService;
import com.baomidou.mybatisplus.extension.service.impl.ServiceImpl;
import org.springframework.stereotype.Service;

/**
 * <p>
 * Service implementation class
 * </p>
 *
 * @author ltao
 * @since 2023-07-09
 */
@Service
public class UserServiceImpl extends ServiceImpl<UserMapper, User> implements IUserService {

    public User getUserInfoById(Long userId) {
        // Typed wrapper instead of the raw QueryWrapper type
        QueryWrapper<User> queryWrapper = new QueryWrapper<>();
        // Column name as defined in tb_user
        queryWrapper.eq("user_id", userId);
        return this.getOne(queryWrapper);
    }
}

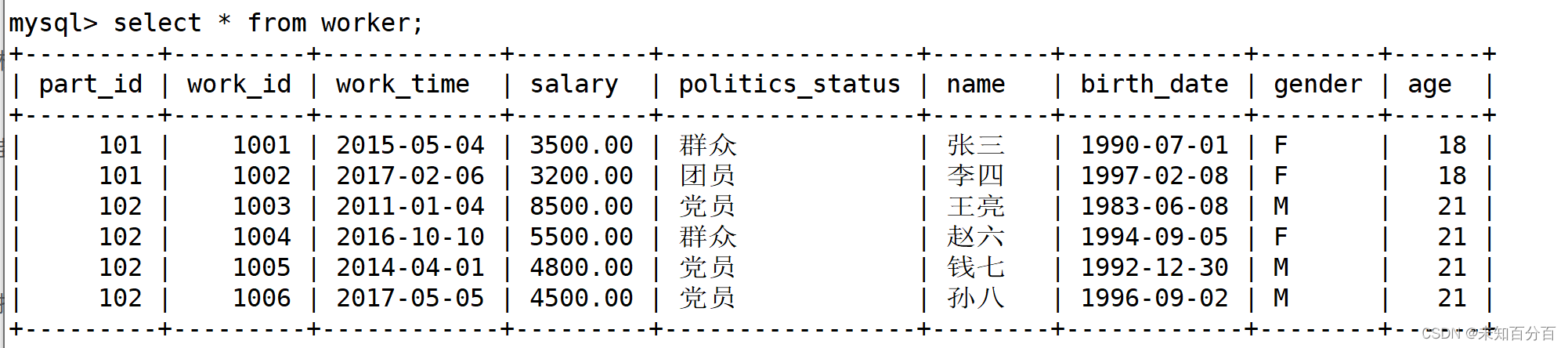

After each method has run successfully:

libvips

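Download: https://github.com/libvips/build-win64-mxe/releases/tag/v8.14.0

After downloading libvips, go back to the cloud-photo-api module and create a VipsUtil class in it.

As before, adjust the configuration to match your own environment.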

package com.cloud.photo.api.utils;

import lombok.extern.slf4j.Slf4j;
import org.apache.commons.exec.CommandLine;
import org.apache.commons.exec.DefaultExecutor;
import org.apache.commons.exec.PumpStreamHandler;

import java.io.ByteArrayOutputStream;
import java.io.IOException;

@Slf4j
public class VipsUtil {

    // Adjust to your environment
    static String windowsCommand = "D:/software/vips-dev-8.14/bin/vipsthumbnail.exe";
    static String linuxCommand = "/usr/local/bin/vipsthumbnail";

    /**
     * Generate a thumbnail by running vipsthumbnail.
     *
     * @param srcPath  path of the source image
     * @param desPath  path of the thumbnail to write
     * @param tarWidth target width
     * @param tarHight target height
     * @param quality  value passed as the Q save option
     * @return true if the command ran successfully, false otherwise
     */
    public static Boolean thumbnail(String srcPath, String desPath, int tarWidth, int tarHight, String quality) {
        // TODO (not implemented): if the source is smaller than the target in both
        // dimensions, read its size and keep the original width and height
        String sizeParam = tarWidth + "x" + tarHight;
        ByteArrayOutputStream stdout = new ByteArrayOutputStream();
        PumpStreamHandler psh = new PumpStreamHandler(stdout);
        log.info("thumbnail() prepare tools info ");
        // The -t argument enables automatic rotation
        String command;
        if (desPath.endsWith(".png")) {
            command = String.format("%s -t %s -s %s -o %s[Q=%s,strip]", windowsCommand, srcPath, sizeParam, desPath, quality);
        } else {
            command = String.format("%s -t %s -s %s -o %s[Q=%s,optimize_coding,strip]", windowsCommand, srcPath, sizeParam, desPath, quality);
        }
        CommandLine cl = CommandLine.parse(command);
        log.info("thumbnail() command = " + command);
        DefaultExecutor exec = new DefaultExecutor();
        exec.setStreamHandler(psh);
        try {
            exec.execute(cl);
            return true;
        } catch (IOException e) {
            // Also covers ExecuteException, which is thrown on a non-zero exit code
            log.warn("thumbnail() exec command failed!", e);
            return false;
        }
    }

    public static void main(String[] args) {
        // Adjust to your environment
        String srcPath = "D:/Cloud_images/img/123.jpg";
        String desPath = "D:/Cloud_images/img/output/123_600.jpg";
        int tarWidth = 600;
        int tarHight = 600;
        String quality = "100";
        thumbnail(srcPath, desPath, tarWidth, tarHight, quality);
    }
}

After running it, the output folder contains the generated thumbnail.
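VipsUtil always uses windowsCommand and builds the command as one formatted string. As a possible variation, the sketch below picks the executable from an os.name value and assembles the arguments as a list, which avoids quoting problems when a path contains spaces. It reuses only the -t, -s and -o options and the [Q=...,strip] suffix that appear in VipsUtil; VipsCommandSketch, executableFor and buildArgs are hypothetical names, and the list is only built here, not executed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Builds the vipsthumbnail argument list without running it.
final class VipsCommandSketch {

    // Same example paths as in VipsUtil; adjust to your environment
    static final String WINDOWS_COMMAND = "D:/software/vips-dev-8.14/bin/vipsthumbnail.exe";
    static final String LINUX_COMMAND = "/usr/local/bin/vipsthumbnail";

    // Chooses the executable from an os.name value such as "Windows 10" or "Linux"
    static String executableFor(String osName) {
        boolean windows = osName.toLowerCase(Locale.ROOT).startsWith("windows");
        return windows ? WINDOWS_COMMAND : LINUX_COMMAND;
    }

    static List<String> buildArgs(String osName, String srcPath, String desPath,
                                  int width, int height, String quality) {
        // PNG output gets no optimize_coding option, mirroring the branch in VipsUtil
        String saveOptions = desPath.endsWith(".png")
                ? "[Q=" + quality + ",strip]"
                : "[Q=" + quality + ",optimize_coding,strip]";
        List<String> args = new ArrayList<>();
        args.add(executableFor(osName));
        args.add("-t");
        args.add(srcPath);
        args.add("-s");
        args.add(width + "x" + height);
        args.add("-o");
        args.add(desPath + saveOptions);
        return args;
    }

    public static void main(String[] args) {
        List<String> command = buildArgs(System.getProperty("os.name"),
                "D:/Cloud_images/img/123.jpg", "D:/Cloud_images/img/output/123_600.jpg",
                600, 600, "100");
        System.out.println(String.join(" ", command));
    }
}
```

Each list element reaches the process as one argument, so the list could be handed to an API such as ProcessBuilder instead of being parsed from a single string.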