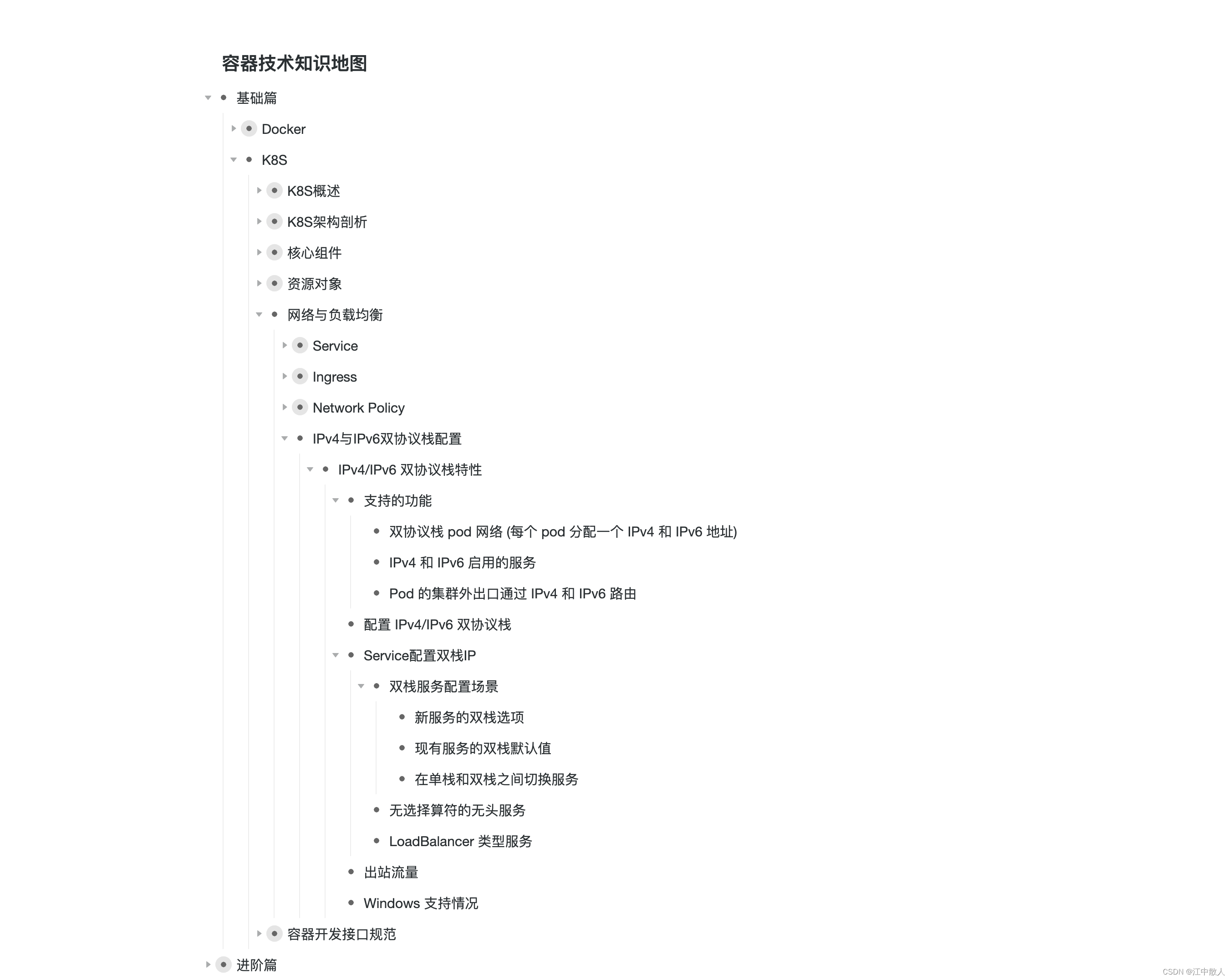

1. Concepts

References:

What Is Optical Flow, by 张年糕慢慢走 (CSDN blog)

Computer Vision: A Brief Introduction to Optical Flow, by T-Jhon (CSDN blog)
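For reference, here is the standard model behind Lucas-Kanade trackers such as calcOpticalFlowPyrLK. It is textbook material, not a summary of the two posts above. The symbols are our own notation: $I$ is image intensity, $I_x, I_y, I_t$ are its partial derivatives, $(u, v)$ is the flow and $W$ is a small window. Assume a pixel keeps its intensity while it moves, then linearize to first order:

$$I(x, y, t) = I(x + \Delta x,\; y + \Delta y,\; t + \Delta t) \;\Rightarrow\; I_x u + I_y v + I_t = 0, \qquad u = \frac{\Delta x}{\Delta t},\; v = \frac{\Delta y}{\Delta t}$$

This gives one equation in two unknowns per pixel. Lucas-Kanade therefore assumes $(u, v)$ is constant over $W$ and solves the least-squares normal equations:

$$\underbrace{\begin{bmatrix} \sum_W I_x^2 & \sum_W I_x I_y \\ \sum_W I_x I_y & \sum_W I_y^2 \end{bmatrix}}_{G} \begin{bmatrix} u \\ v \end{bmatrix} = -\begin{bmatrix} \sum_W I_x I_t \\ \sum_W I_y I_t \end{bmatrix}$$

$G$ is well conditioned only where the gradient varies in two directions, that is, at corners. This is why the demos track points found by goodFeaturesToTrack.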

There are also object-tracking methods based on mean shift:

CamShift:

CamShift in OpenCV 3 Explained (Part 1): A Walkthrough of the camshiftdemo Code, by 夏言谦 (CSDN blog)

MeanShift:

OpenCV: Object Tracking with Mean Shift, by 走过,莫回头 (CSDN blog)
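Both trackers rest on the same mean-shift step. The sketch below is a simplified illustration in plain C++ without OpenCV, built on our own assumptions:

- the weight map is a row-major `std::vector<double>` standing in for a back projection;
- the hypothetical `Window` struct stands in for `cv::Rect`;
- the stopping rule imitates TermCriteria COUNT + EPS.

It is not OpenCV's implementation. CamShift additionally adapts the window size and orientation after the shift.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical window type for this sketch (stands in for cv::Rect).
struct Window { int x, y, w, h; };

// Simplified mean shift on a row-major weight map (e.g. a back projection).
// Each iteration moves the window so that its centre lands on the weighted
// centroid of the pixels it covers. Stops after maxIter iterations or once the
// centroid is less than eps pixels from the centre. The window must start
// inside the map.
void meanShiftSketch(const std::vector<double>& weights, int cols, int rows,
                     Window& win, int maxIter, double eps) {
    assert(win.w <= cols && win.h <= rows);
    for (int iter = 0; iter < maxIter; ++iter) {
        double m00 = 0, m10 = 0, m01 = 0;   // zeroth and first moments
        for (int y = win.y; y < win.y + win.h; ++y)
            for (int x = win.x; x < win.x + win.w; ++x) {
                double wgt = weights[y * cols + x];
                m00 += wgt;
                m10 += wgt * x;
                m01 += wgt * y;
            }
        if (m00 <= 0) return;               // nothing to follow inside the window
        // Offset from the window centre to the weighted centroid
        double dx = m10 / m00 - (win.x + (win.w - 1) / 2.0);
        double dy = m01 / m00 - (win.y + (win.h - 1) / 2.0);
        // Shift the window, keeping it inside the map
        win.x = std::max(0, std::min(cols - win.w, win.x + static_cast<int>(std::lround(dx))));
        win.y = std::max(0, std::min(rows - win.h, win.y + static_cast<int>(std::lround(dy))));
        if (std::hypot(dx, dy) < eps) return;
    }
}
```

Consider a 20x20 map with a 3x3 blob of weight 1 centred at (14, 12) and a 5x5 window starting at (10, 8). The window moves once, then stops at x = 12, y = 10, with its centre on the blob.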

2. API

Sparse optical flow (pyramidal Lucas-Kanade):

```cpp
void cv::calcOpticalFlowPyrLK(InputArray prevImg,
                              InputArray nextImg,
                              InputArray prevPts,
                              InputOutputArray nextPts,
                              OutputArray status,
                              OutputArray err,
                              Size winSize = Size(21, 21),
                              int maxLevel = 3,
                              TermCriteria criteria = TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 30, 0.01),
                              int flags = 0,
                              double minEigThreshold = 1e-4);
```

prevImg: the previous frame (an 8-bit image, or a pyramid built with buildOpticalFlowPyramid).

nextImg: the next frame, with the same size and type as prevImg.

prevPts: vector of points (e.g. corners) in the previous frame whose flow is computed. Coordinates must be single-precision floats (vector<Point2f>). On the first call these points must be detected beforehand, for example with goodFeaturesToTrack.

nextPts: output vector with the new positions of the input points in the next frame.

status: output status vector (of unsigned chars). Each element is set to 1 if the flow for the corresponding feature has been found, otherwise to 0.

err: output vector of per-point errors. The error measure is selected by flags.

winSize: size of the search window at each pyramid level.

maxLevel: 0-based maximal pyramid level number. 0 means no pyramid (a single level), 1 means two levels, and so on.

criteria: termination criteria of the iterative search.

flags: operation flags:

OPTFLOW_USE_INITIAL_FLOW uses the initial estimates stored in nextPts. If the flag is not set, prevPts is copied to nextPts and used as the initial estimate.

OPTFLOW_LK_GET_MIN_EIGENVALS uses minimum eigenvalues as the error measure (see minEigThreshold). If the flag is not set, the error measure is the L1 distance between the patches around the original and the moved point, divided by the number of pixels in the window.

minEigThreshold: the algorithm computes the minimum eigenvalue of the 2x2 normal matrix of the optical flow equations (this matrix is called a spatial gradient matrix in [25]), divided by the number of pixels in the window. If this value is less than minEigThreshold, the corresponding feature is filtered out and its flow is not processed. This removes bad points and gives a performance boost.
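To make minEigThreshold concrete, the following plain C++ sketch (no OpenCV) does three things:

1. builds the spatial gradient matrix from central differences over a square patch;
2. divides it by the number of pixels;
3. returns its smaller eigenvalue.

The derivative operator and scaling are assumptions made for illustration. OpenCV uses its own derivative filter and normalization, so the absolute values will not match its threshold.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Smaller eigenvalue of the symmetric 2x2 matrix [[a, b], [b, c]].
double minEigen2x2(double a, double b, double c) {
    double halfTrace = 0.5 * (a + c);
    double halfDiff = 0.5 * (a - c);
    return halfTrace - std::sqrt(halfDiff * halfDiff + b * b);
}

// Minimum eigenvalue of the spatial gradient matrix of an n x n row-major patch:
// G = sum of [[Ix*Ix, Ix*Iy], [Ix*Iy, Iy*Iy]] over the interior pixels,
// divided by the number of pixels used. Central differences are an assumption.
double patchMinEigen(const std::vector<double>& img, int n) {
    assert(n >= 3 && img.size() == static_cast<std::size_t>(n) * n);
    double sxx = 0, sxy = 0, syy = 0;
    int count = 0;
    for (int y = 1; y < n - 1; ++y)
        for (int x = 1; x < n - 1; ++x) {
            double ix = 0.5 * (img[y * n + x + 1] - img[y * n + x - 1]);
            double iy = 0.5 * (img[(y + 1) * n + x] - img[(y - 1) * n + x]);
            sxx += ix * ix;
            sxy += ix * iy;
            syy += iy * iy;
            ++count;
        }
    return minEigen2x2(sxx / count, sxy / count, syy / count);
}
```

A flat patch or a straight edge gives a minimum eigenvalue of (about) zero, because the gradient varies in at most one direction. A corner gives a clearly positive value (0.05 for the 7x7 step corner in the test). A threshold on this value therefore discards points that cannot be tracked reliably.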

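Defining the termination criteria: stop after at most 10 iterations, or earlier once the estimate changes by less than 0.01 between two successive iterations.

```cpp
TermCriteria criteria = TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 10, 0.01);
```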
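The following plain C++ sketch shows how COUNT + EPS combine: the iteration stops at whichever condition is met first. The hypothetical `Criteria` struct only mirrors the three fields of `cv::TermCriteria` (type, maxCount, epsilon), with the same flag values (COUNT = 1, EPS = 2). Newton's method for a square root stands in for the tracker's iterative search.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for cv::TermCriteria (same three fields, same flag values).
enum CriteriaType { COUNT = 1, EPS = 2 };
struct Criteria { int type; int maxCount; double epsilon; };

// Newton's method for sqrt(v), v > 0, stopped like an OpenCV iterative search:
// with COUNT, after at most maxCount iterations; with EPS, as soon as an update
// changes the estimate by less than epsilon; with both, whichever comes first.
// Returns the number of iterations performed.
int newtonSqrt(double v, const Criteria& c, double& x) {
    assert(v > 0 && (c.type & (COUNT | EPS)) != 0);
    x = v > 1.0 ? v : 1.0;                  // initial guess
    int iter = 0;
    while (!((c.type & COUNT) && iter >= c.maxCount)) {
        double next = 0.5 * (x + v / x);
        double step = std::abs(next - x);
        x = next;
        ++iter;
        if ((c.type & EPS) && step < c.epsilon) break;
    }
    return iter;
}
```

With `{COUNT + EPS, 10, 0.01}`, the square root of 2 stops after 3 iterations, because the third update changes the estimate by about 0.0025, which is below 0.01. With COUNT alone and maxCount = 2, it stops after exactly 2 iterations.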

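Dense optical flow (Farneback):

```cpp
void cv::calcOpticalFlowFarneback(InputArray prev,
                                  InputArray next,
                                  InputOutputArray flow,
                                  double pyr_scale,
                                  int levels,
                                  int winsize,
                                  int iterations,
                                  int poly_n,
                                  double poly_sigma,
                                  int flags);
```

prev, next: the previous and next frames, as 8-bit single-channel images.

flow: output flow field with the same size as prev and type CV_32FC2, holding one (dx, dy) displacement per pixel. The demo in section 3 stores it in a Mat_<Point2f>.

pyr_scale: ratio between the sizes of successive pyramid layers (< 1). It is usually 0.5, so each layer is half the size of the previous one.

levels: number of pyramid layers, including the original image (levels = 1 means no extra layers). It is usually 3.

winsize: averaging window size. Larger values are more robust to noise and to fast motion, but give a blurrier flow field.

iterations: number of iterations at each pyramid level.

poly_n: size of the pixel neighborhood used to find the polynomial expansion at each pixel. It is not the polynomial degree. Typical values are poly_n = 5 or 7.

poly_sigma: standard deviation of the Gaussian used to smooth the derivatives that form the basis of the polynomial expansion. For poly_n = 5 you can set poly_sigma = 1.1; for poly_n = 7, a good value is poly_sigma = 1.5.

flags: 0, or a combination of OPTFLOW_USE_INITIAL_FLOW (use the input flow as the initial estimate) and OPTFLOW_FARNEBACK_GAUSSIAN (use a Gaussian winsize x winsize filter instead of a box filter).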
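The dense demo in section 3 turns each flow vector into a color. This plain C++ sketch (no OpenCV) reproduces that mapping for a single vector, assuming it matches what cartToPolar (angle in radians, in [0, 2π)), the `* 180 / CV_PI / 2.0` scaling and the rounding in convertScaleAbs do per pixel. The direction becomes an 8-bit OpenCV hue in [0, 180]. The magnitude is returned unscaled here; the demo additionally rescales it over the whole frame with NORM_MINMAX.

```cpp
#include <cassert>
#include <cmath>

struct FlowColor { double magnitude; int hue; };

// Magnitude and 8-bit OpenCV hue for one flow vector (fx, fy), following the
// dense demo: angle in [0, 2*pi) as cartToPolar returns it, converted to
// degrees, halved, and rounded.
FlowColor flowToHue(double fx, double fy) {
    assert(std::isfinite(fx) && std::isfinite(fy));
    const double kPi = 3.14159265358979323846;
    double angle = std::atan2(fy, fx);        // (-pi, pi]
    if (angle < 0) angle += 2.0 * kPi;         // shift to [0, 2*pi)
    int hue = static_cast<int>(std::lround(angle * 180.0 / kPi / 2.0));
    return { std::hypot(fx, fy), hue };
}
```

Halving the angle is needed because 8-bit HSV stores hue as degrees / 2, so a full turn fits in one byte. For example, (3, 4) has magnitude 5 and hue 27, and a vector pointing straight up in image coordinates, (0, -1), gets hue 135.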

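CamShift:

```cpp
RotatedRect cv::CamShift(InputArray probImage,
                         Rect& window,
                         TermCriteria criteria);
```

probImage: back projection of the object histogram (see calcBackProject).

window: initial search window. It is updated in place with the new object location.

criteria: stop criteria for the underlying mean-shift iterations.

The returned RotatedRect gives the object's position, size and orientation.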

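MeanShift:

```cpp
int cv::meanShift(InputArray probImage,
                  Rect& window,
                  TermCriteria criteria);
```

The inputs are the same as for CamShift, but only the window position is updated; its size and orientation are not adapted. The return value is the number of iterations meanShift took to converge.

3. Code

Optical flow (basic sparse LK tracking):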

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>
using namespace cv;
using namespace std;

// Basic sparse optical-flow tracking: detect corners once, then follow them frame by frame
void QuickDemo::shade_flow()
{
    VideoCapture capture("https://vd4.bdstatic.com/mda-nm67dtz2afxmwat7/sc/cae_h264/1670390212002064886/mda-nm67dtz2afxmwat7.mp4?v_from_s=hkapp-haokan-hbf&auth_key=1670484546-0-0-792e5c9ffc23b54e025e91106b771e99&bcevod_channel=searchbox_feed&cd=0&pd=1&pt=3&logid=3546818474&vid=6396276202448861552&abtest=104960_2&klogid=3546818474");
    if (!capture.isOpened()) {
        cout << "could not open the video" << endl;
        return;
    }
    // Frame rate, used to pace the display
    int fps = capture.get(CAP_PROP_FPS);
    cout << "fps: " << fps << endl;

    Mat old_frame, old_gray;
    if (!capture.read(old_frame)) return;
    cvtColor(old_frame, old_gray, COLOR_BGR2GRAY);

    // Detect the corners to track in the first frame
    vector<Point2f> feature_pts;
    goodFeaturesToTrack(old_gray, feature_pts, 100, 0.01, 10, Mat(), 3, false);

    Mat frame, gray;
    vector<Point2f> pts[2];     // pts[0]: previous positions, pts[1]: current positions
    pts[0].insert(pts[0].end(), feature_pts.begin(), feature_pts.end());
    vector<uchar> status;
    vector<float> err;
    // Stop after 10 iterations, or once an update changes the estimate by less than 0.01
    TermCriteria criteria = TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 10, 0.01);

    while (true)
    {
        // Read the video frame by frame (read() reports failure, unlike capture >> frame)
        bool ret = capture.read(frame);
        if (!ret) break;
        cvtColor(frame, gray, COLOR_BGR2GRAY);

        // Track pts[0] from the previous gray frame into the current gray frame
        calcOpticalFlowPyrLK(old_gray, gray, pts[0], pts[1], status, err, Size(21, 21), 3, criteria, 0);

        // Keep only the points that were tracked successfully
        size_t k = 0;
        RNG rng(12345);
        for (size_t i = 0; i < pts[1].size(); ++i) {
            if (status[i]) {
                pts[0][k] = pts[0][i];
                pts[1][k++] = pts[1][i];
                int b = rng.uniform(0, 255);
                int g = rng.uniform(0, 255);
                int r = rng.uniform(0, 255);
                circle(frame, pts[1][i], 3, Scalar(b, g, r), 3, 8);
                line(frame, pts[0][i], pts[1][i], Scalar(b, g, r), 3, 8);
            }
        }
        // Shrink the point vectors to the surviving points
        pts[0].resize(k);
        pts[1].resize(k);

        imshow("frame", frame);
        char c = waitKey(fps + 10);
        if (c == 27) break;

        // The current frame and its points become the "previous" ones
        swap(pts[1], pts[0]);
        swap(old_gray, gray);
    }
    capture.release();
}
```
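The loop above drops lost points in place: a write index k trails the read index i. This is a common way to discard entries while keeping pts[0] and pts[1] aligned. Below is a plain C++ sketch of just that step, with a hypothetical `Pt` struct standing in for `cv::Point2f`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };   // hypothetical stand-in for cv::Point2f

// Keep only the entries with a non-zero status, preserving their order and
// keeping prev[k] and next[k] paired. Returns the number of points kept.
std::size_t keepTracked(std::vector<Pt>& prev, std::vector<Pt>& next,
                        const std::vector<unsigned char>& status) {
    assert(prev.size() == next.size() && status.size() == next.size());
    std::size_t k = 0;                                  // write index
    for (std::size_t i = 0; i < next.size(); ++i) {     // read index
        if (!status[i]) continue;
        prev[k] = prev[i];
        next[k] = next[i];
        ++k;
    }
    prev.resize(k);
    next.resize(k);
    return k;
}
```

Because k never overtakes i, each copy reads an element that has not been overwritten yet. This is the same reason the demo can still draw with index i after compacting.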

Sparse optical flow (moving points only, with re-detection):

```cpp
// Keep only points that actually move, draw each track from its starting point,
// and re-detect corners when too few points remain
void QuickDemo::poor_shade_flow()
{
    VideoCapture capture("https://vd4.bdstatic.com/mda-nm67dtz2afxmwat7/sc/cae_h264/1670390212002064886/mda-nm67dtz2afxmwat7.mp4?v_from_s=hkapp-haokan-hbf&auth_key=1670484546-0-0-792e5c9ffc23b54e025e91106b771e99&bcevod_channel=searchbox_feed&cd=0&pd=1&pt=3&logid=3546818474&vid=6396276202448861552&abtest=104960_2&klogid=3546818474");
    if (!capture.isOpened()) {
        cout << "could not open the video" << endl;
        return;
    }
    // Frame rate, used to pace the display
    int fps = capture.get(CAP_PROP_FPS);
    cout << "fps: " << fps << endl;

    Mat old_frame, old_gray;
    if (!capture.read(old_frame)) return;
    cvtColor(old_frame, old_gray, COLOR_BGR2GRAY);

    // Initial corner detection
    vector<Point2f> feature_pts;
    goodFeaturesToTrack(old_gray, feature_pts, 50, 0.01, 50, Mat(), 3, false);
    vector<Point2f> pts[2];
    pts[0].insert(pts[0].end(), feature_pts.begin(), feature_pts.end());
    // Where each track started; kept index-aligned with pts[0] and pts[1]
    vector<Point2f> initial_points(feature_pts);

    Mat frame, gray;
    vector<uchar> status;
    vector<float> err;
    // Stop after 10 iterations, or once an update changes the estimate by less than 0.01
    TermCriteria criteria = TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 10, 0.01);

    while (true)
    {
        bool ret = capture.read(frame);
        if (!ret) break;
        cvtColor(frame, gray, COLOR_BGR2GRAY);

        calcOpticalFlowPyrLK(old_gray, gray, pts[0], pts[1], status, err, Size(21, 21), 3, criteria, 0);

        // Keep points that were tracked AND moved more than 2 px (L1 distance)
        size_t k = 0;
        RNG rng(12345);
        for (size_t i = 0; i < pts[1].size(); ++i) {
            double dist = abs(pts[0][i].x - pts[1][i].x) + abs(pts[0][i].y - pts[1][i].y);
            if (status[i] && dist > 2) {
                initial_points[k] = initial_points[i];   // copy before k is incremented
                pts[0][k] = pts[0][i];
                pts[1][k++] = pts[1][i];
                int b = rng.uniform(0, 255);
                int g = rng.uniform(0, 255);
                int r = rng.uniform(0, 255);
                circle(frame, pts[1][i], 3, Scalar(b, g, r), 3, 8);
                line(frame, pts[0][i], pts[1][i], Scalar(b, g, r), 3, 8);
            }
        }
        pts[0].resize(k);
        pts[1].resize(k);
        initial_points.resize(k);

        // Draw each track from its starting point to its current position
        draw_line(frame, initial_points, pts[1]);
        imshow("frame", frame);
        char c = waitKey(fps + 10);
        if (c == 27) break;

        // The current frame and its points become the "previous" ones
        swap(pts[1], pts[0]);
        swap(old_gray, gray);

        // Sparse tracking loses points over time: re-detect when fewer than 40 remain
        if (pts[0].size() < 40) {
            goodFeaturesToTrack(old_gray, feature_pts, 50, 0.01, 50, Mat(), 3, false);
            pts[0].insert(pts[0].end(), feature_pts.begin(), feature_pts.end());
            initial_points.insert(initial_points.end(), feature_pts.begin(), feature_pts.end());
        }
    }
    capture.release();
}
```
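poor_shade_flow keeps a third vector, initial_points, that must stay index-aligned with pts[0] and pts[1] through both filtering and re-detection. The plain C++ sketch below isolates that bookkeeping. `Pt`, `filterMoving` and `reseed` are hypothetical names; the 2-pixel L1 threshold and the 40-point limit come from the demo.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };   // hypothetical stand-in for cv::Point2f

// Keep track i only if it was found (status[i] != 0) and moved more than
// minMove pixels in L1 distance; start[] is compacted with the same index.
void filterMoving(std::vector<Pt>& start, std::vector<Pt>& prev, std::vector<Pt>& next,
                  const std::vector<unsigned char>& status, float minMove) {
    assert(start.size() == next.size() && prev.size() == next.size() &&
           status.size() == next.size());
    std::size_t k = 0;
    for (std::size_t i = 0; i < next.size(); ++i) {
        float dist = std::abs(prev[i].x - next[i].x) + std::abs(prev[i].y - next[i].y);
        if (!status[i] || dist <= minMove) continue;
        start[k] = start[i];   // all three copies use the same k...
        prev[k] = prev[i];
        next[k] = next[i];
        ++k;                   // ...which is advanced only afterwards
    }
    start.resize(k);
    prev.resize(k);
    next.resize(k);
}

// When fewer than minCount tracks remain, append fresh detections to both the
// current points and the start points so that the two vectors stay aligned.
void reseed(std::vector<Pt>& start, std::vector<Pt>& current,
            const std::vector<Pt>& detected, std::size_t minCount) {
    if (current.size() >= minCount) return;
    current.insert(current.end(), detected.begin(), detected.end());
    start.insert(start.end(), detected.begin(), detected.end());
}
```

Two things keep the vectors aligned: all three copies must use the same k before it is incremented, and re-detection must append to both vectors. If either step is skipped, start[i] no longer belongs to the same track as pts[1][i], and the drawn tracking lines connect unrelated points.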

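```cpp
// Draw a line from pts1[i] to pts2[i], each track in its own random color
void QuickDemo::draw_line(Mat& image, vector<Point2f> pts1, vector<Point2f> pts2)
{
    // One color per track (fixed seed, so colors follow the track index)
    vector<Scalar> lut;
    RNG rng(12345);
    for (size_t i = 0; i < pts1.size(); ++i) {
        int b = rng.uniform(0, 255);
        int g = rng.uniform(0, 255);
        int r = rng.uniform(0, 255);
        lut.push_back(Scalar(b, g, r));
    }
    for (size_t i = 0; i < pts1.size() && i < pts2.size(); ++i) {
        line(image, pts1[i], pts2[i], lut[i], 2, 8);
    }
}
```

Dense optical flow (Farneback):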

```cpp
// Dense optical flow (Farneback), shown in HSV: hue = direction, value = speed
void QuickDemo::dense_shade_flow()
{
    VideoCapture capture("https://vd4.bdstatic.com/mda-nm67dtz2afxmwat7/sc/cae_h264/1670390212002064886/mda-nm67dtz2afxmwat7.mp4?v_from_s=hkapp-haokan-hbf&auth_key=1670484546-0-0-792e5c9ffc23b54e025e91106b771e99&bcevod_channel=searchbox_feed&cd=0&pd=1&pt=3&logid=3546818474&vid=6396276202448861552&abtest=104960_2&klogid=3546818474");
    if (!capture.isOpened()) {
        cout << "error: could not open the video" << endl;
        return;
    }
    namedWindow("frame", WINDOW_FREERATIO);
    namedWindow("result", WINDOW_FREERATIO);
    int fps = capture.get(CAP_PROP_FPS);

    // Current and previous frames, and their grayscale versions
    Mat frame, preframe;
    Mat gray, pregray;
    if (!capture.read(preframe)) return;
    cvtColor(preframe, pregray, COLOR_BGR2GRAY);

    Mat hsv = Mat::zeros(preframe.size(), preframe.type());
    Mat mag, ang;
    // Output flow field: one Point2f (dx, dy) per pixel
    Mat_<Point2f> flow;
    // HSV channel planes
    vector<Mat> mv;
    split(hsv, mv);
    Mat bgr;

    while (true) {
        if (!capture.read(frame)) break;
        cvtColor(frame, gray, COLOR_BGR2GRAY);
        calcOpticalFlowFarneback(pregray, gray, flow, 0.5, 3, 15, 3, 5, 1.2, 0);

        // Separate the x and y components of the flow field
        Mat xy[2];
        split(flow, xy);
        // To polar coordinates: magnitude and angle (radians, [0, 2*pi))
        cartToPolar(xy[0], xy[1], mag, ang);
        // Angle -> 8-bit OpenCV hue range, magnitude -> [0, 255]
        ang = ang * 180 / CV_PI / 2.0;
        normalize(mag, mag, 0, 255, NORM_MINMAX);
        // Round and convert both to 8-bit
        convertScaleAbs(mag, mag);
        convertScaleAbs(ang, ang);

        // H = direction, S = 255, V = speed; merge and convert for display
        mv[0] = ang;
        mv[1] = Scalar(255);
        mv[2] = mag;
        merge(mv, hsv);
        cvtColor(hsv, bgr, COLOR_HSV2BGR);

        imshow("frame", frame);
        imshow("result", bgr);
        char c = waitKey(fps + 5);
        if (c == 27) break;

        // The current frame becomes the previous one
        gray.copyTo(pregray);
    }
    capture.release();
}
```

CamShift:

```cpp
#include <opencv2/opencv.hpp>
#include <vector>
using namespace cv;

Mat frame, gray;                 // source frame and its grayscale version
Mat framecopy;                   // copy of the frame used to draw the selection
Mat framerect, framerecthsv;     // selected region (BGR and HSV)
Rect rect;                       // rectangle selected with the mouse

// Hue histogram (8-bit OpenCV hue lies in [0, 180))
int histSize = 200;
float histR[] = { 0, 180 };
const float* histRange = histR;
int channels[] = { 0, 1 };       // with dims = 1 only channels[0] (hue) is used
Mat dstHist;

// Trajectory of the target
std::vector<Point> pt;

// Mouse state
bool leftButtonDownFlag = false; // true while the left button is held; the video pauses
Point rectstartPoint;            // rectangle start point
Point rectstopPoint;             // rectangle end point

void onMouse(int event, int x, int y, int flags, void* ustc); // mouse callback

void onMouse(int event, int x, int y, int flags, void* ustc)
{
    if (event == EVENT_LBUTTONDOWN)                          // left button pressed
    {
        leftButtonDownFlag = true;
        rectstartPoint = Point(x, y);
        rectstopPoint = rectstartPoint;                      // start and end coincide at first
    }
    else if (event == EVENT_MOUSEMOVE && leftButtonDownFlag) // dragging
    {
        framecopy = frame.clone();
        rectstopPoint = Point(x, y);
        if (rectstartPoint != rectstopPoint)
        {
            // Draw the rectangle on the copy once start and end differ
            rectangle(framecopy, rectstartPoint, rectstopPoint, Scalar(255, 255, 255));
        }
        imshow("srcvideo", framecopy);
    }
    else if (event == EVENT_LBUTTONUP)                       // left button released
    {
        leftButtonDownFlag = false;
        // Selected rectangle, clipped to the frame
        rect = Rect(rectstartPoint, rectstopPoint) & Rect(0, 0, frame.cols, frame.rows);
        if (rect.area() == 0) return;                        // a click without dragging selects nothing
        framerect = frame(rect);
        imshow("selectimg", framerect);
        cvtColor(framerect, framerecthsv, COLOR_BGR2HSV);
        // Hue histogram of the selection: one input image, one dimension
        calcHist(&framerecthsv, 1, channels, Mat(), dstHist, 1, &histSize, &histRange, true, false);
        // Scale to [0, 255] so the back projection is a usable 8-bit weight map
        normalize(dstHist, dstHist, 0, 255, NORM_MINMAX);
    }
}
```
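onMouse builds a hue histogram of the selection. The tracking loop back-projects it, so each pixel's value says how typical its hue is for the target, and CamShift then climbs that weight map. This simplified plain C++ sketch (no OpenCV) shows both steps on flat vectors of 8-bit hue values. The bin indexing, min-max scaling to 255 and rounding are our approximation of calcHist + normalize(..., NORM_MINMAX) + calcBackProject, not their exact implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hue histogram of the selected region, min-max scaled to [0, 255].
// hueRange is 180 for OpenCV's 8-bit HSV.
std::vector<double> hueHistogram(const std::vector<int>& roiHue, int bins, int hueRange) {
    std::vector<double> hist(bins, 0.0);
    for (int h : roiHue) {
        assert(h >= 0 && h < hueRange);
        hist[h * bins / hueRange] += 1.0;
    }
    double lo = *std::min_element(hist.begin(), hist.end());
    double hi = *std::max_element(hist.begin(), hist.end());
    for (double& v : hist)
        v = hi > lo ? (v - lo) / (hi - lo) * 255.0 : 0.0;
    return hist;
}

// Back projection: replace every pixel's hue by the value of its histogram bin,
// rounded and saturated to 8 bits.
std::vector<int> backProject(const std::vector<int>& imageHue,
                             const std::vector<double>& hist, int hueRange) {
    const int bins = static_cast<int>(hist.size());
    std::vector<int> weights;
    weights.reserve(imageHue.size());
    for (int h : imageHue) {
        assert(h >= 0 && h < hueRange);
        double v = std::min(hist[h * bins / hueRange], 255.0);
        weights.push_back(static_cast<int>(std::lround(v)));
    }
    return weights;
}
```

This also shows why the histogram should be scaled to [0, 255] and not [0, 1]. With a [0, 1] histogram, the 8-bit back projection collapses to 0s and 1s, which gives CamShift almost no gradient to follow.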

```cpp
void QuickDemo::object_follow_demo()
{
    VideoCapture cap(0);
    namedWindow("srcvideo", WINDOW_FREERATIO);
    // Mouse events on the video window select the target
    setMouseCallback("srcvideo", onMouse, 0);
    while (true)
    {
        char ch = waitKey(50);
        // Grab a new frame only while the left button is not held down
        if (!leftButtonDownFlag)
        {
            cap >> frame;
        }
        // Stop on an empty frame or when Esc is pressed
        if (frame.empty() || ch == 27)
            break;
        // Track once a region has been selected
        if (rectstartPoint != rectstopPoint && !leftButtonDownFlag && rect.area() > 0 && !dstHist.empty())
        {
            Mat imageHSV;
            Mat calcBackImage;
            cvtColor(frame, imageHSV, COLOR_BGR2HSV);
            // Back projection of the hue histogram (one input image)
            calcBackProject(&imageHSV, 1, channels, dstHist, calcBackImage, &histRange);
            TermCriteria criteria(TermCriteria::MAX_ITER + TermCriteria::EPS, 10, 1);
            CamShift(calcBackImage, rect, criteria);          // rect is updated in place
            rect &= Rect(0, 0, frame.cols, frame.rows);       // keep the window inside the frame
            if (rect.area() > 0)
            {
                // Update the model histogram from the new window
                Mat imageROI = imageHSV(rect);
                framerecthsv = imageHSV(rect);
                calcHist(&imageROI, 1, channels, Mat(), dstHist, 1, &histSize, &histRange);
                normalize(dstHist, dstHist, 0, 255, NORM_MINMAX);  // same scale as in onMouse
                rectangle(frame, rect, Scalar(255, 0, 0), 3);      // draw the target
                // Store the centre of the window and draw the trajectory
                pt.push_back(Point(rect.x + rect.width / 2, rect.y + rect.height / 2));
                for (size_t i = 0; i + 1 < pt.size(); i++)
                {
                    line(frame, pt[i], pt[i + 1], Scalar(0, 255, 0), 2);
                }
            }
        }
        imshow("srcvideo", frame);
    }
    cap.release();
    waitKey(0);
    destroyAllWindows();
}
```