Contents

- Data receive flow

- Driver layer

- Network layer

- ip_local_deliver

- ip_local_deliver_finish

- Transport layer

- tcp_v4_rcv

- tcp_v4_do_rcv

- tcp_rcv_established

- tcp_recvmsg

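Linux kernel source download: https://cdn.kernel.org/pub/linux/kernel/

I downloaded linux-5.11.1.tar.gz. Note that the code excerpts quoted below come from older kernel versions. They still use tcp_prequeue(), CONFIG_NET_DMA and TCP_INC_STATS_BH(), all of which were removed well before 5.11, so they do not match the 5.11.1 sources line for line. The file locations given for each function are still valid.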

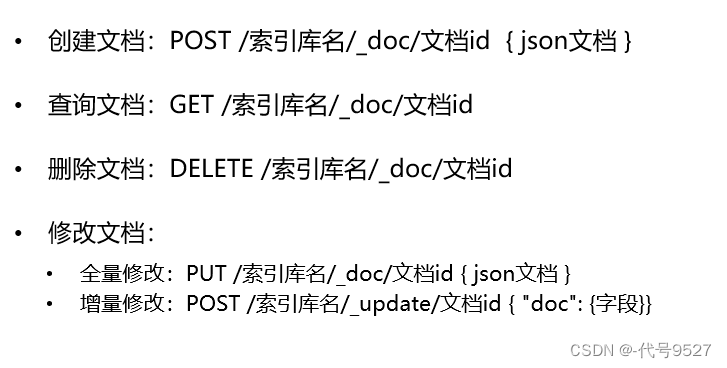

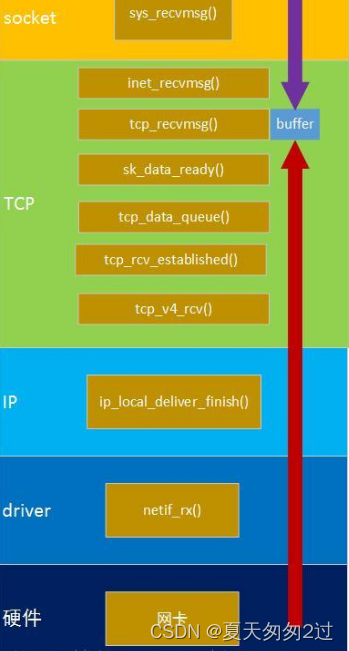

Data receive flow

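1. A NIC normally signals received data with an interrupt. The driver then hands each received packet (an sk_buff) to the kernel through netif_rx(). Drivers that use NAPI call netif_receive_skb() or napi_gro_receive() from their poll routine instead.

2. The packet then reaches the IP layer. There, ip_local_deliver_finish() strips the IP header and passes the payload up to TCP.

3. The TCP layer receives the segment in tcp_v4_rcv(). For an established connection, tcp_rcv_established() then handles it through tcp_v4_do_rcv(). Incoming ACKs are processed there by tcp_ack(), and outgoing ACKs are scheduled by tcp_ack_snd_check().

   If no user process is currently reading, the data is inserted into the receive queue. tcp_queue_rcv() checks whether the queue is empty and, if it is not, tries to merge the skb into the skb at the tail. Finally, tcp_recvmsg() copies the skbs from the receive queue, one after another, into the buffer supplied by the user.

4. When the upper layer receives data with recvmsg() and similar calls, it is reading from that receive queue.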

Call stacks:

1. Bottom up: IP datagram -> tcp_v4_rcv -> tcp_v4_do_rcv -> tcp_rcv_established -> tcp_data_queue -> sk_data_ready

2. Top down: application recvfrom -> SYSCALL_DEFINE6(recvfrom, ...) -> __sys_recvfrom -> sock_recvmsg -> sock_recvmsg_nosec -> inet_recvmsg -> tcp_recvmsg

Driver layer

netif_rx()

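Location: /linux-5.11.1/net/core/dev.c.

This function is the bridge between the NIC driver and the Linux kernel. It inserts a packet received by the NIC (in sk_buff form) into a receive queue that the kernel maintains.

Its main job is to append the frame to the current CPU's input queue, input_pkt_queue. It then raises the NET_RX softirq, which later passes the frame up to the TCP/IP stack.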
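The queueing step can be pictured with a small user-space model. This is a sketch under stated assumptions, not kernel code:

- `toy_netif_rx()` stands in for `netif_rx()`.
- `toy_process_backlog()` stands in for the softirq-side `process_backlog()`.
- `MAX_BACKLOG` stands in for the `net.core.netdev_max_backlog` limit.
- The `softirq_pending` flag stands in for a raised NET_RX softirq.

All of these names are hypothetical. The sketch shows the split of work: the interrupt side only appends and schedules, and the deferred side drains the queue in FIFO order within a per-run budget.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for net.core.netdev_max_backlog. */
#define MAX_BACKLOG 4

enum { RX_SUCCESS = 0, RX_DROP = 1 };   /* cf. NET_RX_SUCCESS / NET_RX_DROP */

struct pkt { int id; };

/* Toy per-CPU input queue (cf. softnet_data.input_pkt_queue). */
struct cpu_queue {
	struct pkt *slots[MAX_BACKLOG];   /* ring buffer */
	size_t head, len;
	bool softirq_pending;             /* stands in for a raised NET_RX softirq */
};

/* Interrupt side: append the packet and schedule deferred processing. */
static int toy_netif_rx(struct cpu_queue *q, struct pkt *p)
{
	if (q->len >= MAX_BACKLOG)
		return RX_DROP;               /* queue full: the packet is dropped */
	q->slots[(q->head + q->len) % MAX_BACKLOG] = p;
	q->len++;
	q->softirq_pending = true;
	return RX_SUCCESS;
}

/* Softirq side: dequeue up to `budget` packets in FIFO order, writing
 * their ids to `out`; returns how many were processed. */
static size_t toy_process_backlog(struct cpu_queue *q, size_t budget, int *out)
{
	size_t done = 0;

	while (q->len > 0 && done < budget) {
		out[done++] = q->slots[q->head]->id;  /* "deliver" up the stack */
		q->head = (q->head + 1) % MAX_BACKLOG;
		q->len--;
	}
	if (q->len == 0)
		q->softirq_pending = false;   /* queue drained: nothing left to schedule */
	return done;
}
```

Bounding the queue is what makes drops under overload cheap: the interrupt handler never blocks or allocates, it just refuses the packet.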

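Network layer

Location: /linux-5.11.1/net/ipv4/ip_input.c.

ip_local_deliver (reassembles fragmented datagrams, then passes the packet through the NF_INET_LOCAL_IN netfilter hook).

ip_local_deliver_finish (strips the IP header and uses its protocol field to find the matching L4 protocol handler, such as TCP or UDP).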

ip_local_deliver

```c
/*
 * Deliver IP Packets to the higher protocol layers.
 */
int ip_local_deliver(struct sk_buff *skb)
{
	/*
	 * Reassemble IP fragments.
	 */
	struct net *net = dev_net(skb->dev);

	/* Fragment reassembly: ip_defrag() returns non-zero while the
	 * datagram is still incomplete (the fragment has been queued) */
	if (ip_is_fragment(ip_hdr(skb))) {
		if (ip_defrag(net, skb, IP_DEFRAG_LOCAL_DELIVER))
			return 0;
	}

	/* Pass through the NF_INET_LOCAL_IN netfilter hook */
	return NF_HOOK(NFPROTO_IPV4, NF_INET_LOCAL_IN,
		       net, NULL, skb, skb->dev, NULL,
		       ip_local_deliver_finish);
}
```
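`ip_is_fragment()` decides whether `ip_defrag()` is needed. Below is a user-space sketch of the same test on a raw IPv4 header; the function names are hypothetical. A datagram is a fragment when the More Fragments bit is set or the fragment offset is non-zero. The Don't Fragment bit does not count.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Masks for the 16-bit frag_off field (host byte order); same values
 * as the kernel's IP_MF and IP_OFFSET. IP_DF (0x4000) is deliberately
 * not part of the test. */
#define IP_MF     0x2000u   /* More Fragments */
#define IP_OFFSET 0x1FFFu   /* fragment offset, in 8-byte units */

/* Read frag_off from bytes 6-7 of a raw IPv4 header (network order). */
static uint16_t ipv4_frag_off(const uint8_t *hdr)
{
	return (uint16_t)((hdr[6] << 8) | hdr[7]);
}

/* Mirrors ip_is_fragment(): MF set or non-zero offset. */
static bool ipv4_is_fragment(const uint8_t *hdr)
{
	return (ipv4_frag_off(hdr) & (IP_MF | IP_OFFSET)) != 0;
}

/* Byte offset of this fragment's payload within the original datagram. */
static unsigned ipv4_frag_byte_offset(const uint8_t *hdr)
{
	return (ipv4_frag_off(hdr) & IP_OFFSET) * 8u;
}
```

The first fragment has MF set and offset 0. The last fragment has MF clear and a non-zero offset. So neither bit alone is enough to recognize a fragment.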

ip_local_deliver_finish

```c
static int ip_local_deliver_finish(struct net *net, struct sock *sk, struct sk_buff *skb)
{
	/* Strip the IP header */
	__skb_pull(skb, skb_network_header_len(skb));

	rcu_read_lock();
	{
		/* L4 protocol number from the IP header */
		int protocol = ip_hdr(skb)->protocol;
		const struct net_protocol *ipprot;
		int raw;

	resubmit:
		/* Raw sockets: deliver a copy to every matching raw socket */
		raw = raw_local_deliver(skb, protocol);

		/* Look up the registered protocol handler */
		ipprot = rcu_dereference(inet_protos[protocol]);
		if (ipprot) {
			int ret;

			if (!ipprot->no_policy) {
				if (!xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb)) {
					kfree_skb(skb);
					goto out;
				}
				nf_reset(skb);
			}
			/* Upper-layer receive handler, e.g. tcp_v4_rcv() */
			ret = ipprot->handler(skb);
			if (ret < 0) {
				/* Negative return: resubmit to protocol -ret */
				protocol = -ret;
				goto resubmit;
			}
			__IP_INC_STATS(net, IPSTATS_MIB_INDELIVERS);
		}
		/* No protocol handler for this packet */
		else {
			/* No raw socket took it either */
			if (!raw) {
				if (xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb)) {
					__IP_INC_STATS(net, IPSTATS_MIB_INUNKNOWNPROTOS);
					/* Send ICMP protocol unreachable */
					icmp_send(skb, ICMP_DEST_UNREACH,
						  ICMP_PROT_UNREACH, 0);
				}
				/* Drop the packet */
				kfree_skb(skb);
			}
			/* A raw socket received it */
			else {
				__IP_INC_STATS(net, IPSTATS_MIB_INDELIVERS);
				/* Release our reference (not counted as a drop) */
				consume_skb(skb);
			}
		}
	}
out:
	rcu_read_unlock();

	return 0;
}
```
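The dispatch in `ip_local_deliver_finish()` is essentially a table lookup keyed by the 8-bit protocol field. It adds a resubmit loop for handlers that return a negative protocol number.

The sketch below models that in user space. Its assumptions:

- `toy_protos`, `toy_local_deliver()` and the two sample handlers are hypothetical.
- Raw sockets and IPsec checks are left out.
- Protocol 253 (reserved for experimentation) stands in for an encapsulating protocol.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical user-space model of inet_protos[] dispatch.
 * A handler returns 0 if it consumed the payload, or -N to ask for
 * resubmission to protocol N (same convention as ipprot->handler()). */
typedef int (*l4_handler)(const unsigned char *payload, size_t len);

enum deliver_result { DELIVERED, PROTO_UNREACHABLE };

static l4_handler toy_protos[256];   /* indexed by the IPv4 protocol field */
static size_t tcp_bytes_seen;        /* bookkeeping for the sample handler */

/* Sample handler standing in for tcp_v4_rcv(). */
static int toy_tcp_rcv(const unsigned char *payload, size_t len)
{
	(void)payload;
	tcp_bytes_seen += len;
	return 0;
}

/* Sample encapsulating handler: asks for resubmission to TCP (6). */
static int toy_encap_rcv(const unsigned char *payload, size_t len)
{
	(void)payload;
	(void)len;
	return -6;
}

/* `pkt` is a complete, already validated IPv4 datagram of `len` bytes. */
static enum deliver_result toy_local_deliver(const unsigned char *pkt, size_t len)
{
	size_t ihl = (size_t)(pkt[0] & 0x0F) * 4;   /* IP header length in bytes */
	int protocol = pkt[9];                      /* like ip_hdr(skb)->protocol */
	const unsigned char *payload = pkt + ihl;   /* like __skb_pull(): skip header */
	size_t plen = len - ihl;

	for (;;) {                                  /* the resubmit loop */
		l4_handler h = toy_protos[protocol & 0xFF];
		if (!h)
			return PROTO_UNREACHABLE;   /* kernel: ICMP protocol unreachable, drop */
		int ret = h(payload, plen);
		if (ret >= 0)
			return DELIVERED;
		protocol = -ret;                    /* resubmit to the inner protocol */
	}
}
```

A flat 256-entry array works because the protocol field is one byte. Lookup is a single index, which is why the kernel can read `inet_protos[protocol]` under RCU without further search.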

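Transport layer

The protocol field of the IP header selects the transport protocol. Taking TCP as the example, the receive function is int tcp_v4_rcv(struct sk_buff *skb).

Location: /linux-5.11.1/net/ipv4/tcp_ipv4.c.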

tcp_v4_rcv

```c
int tcp_v4_rcv(struct sk_buff *skb)
{
	const struct iphdr *iph;
	struct tcphdr *th;
	struct sock *sk;
	int ret;

	/* Drop packets that are not addressed to this host */
	if (skb->pkt_type != PACKET_HOST)
		goto discard_it;

	/* Count it even if it's bad */
	TCP_INC_STATS_BH(TCP_MIB_INSEGS);

	/* Validate the segment length. Besides checking, pskb_may_pull()
	 * has a side effect. If the segment was fragmented in transit and
	 * reassembled by the receiver's IP layer, the skb handed to TCP may
	 * keep part of its data in non-linear fragments; pskb_may_pull()
	 * then pulls the requested bytes into the linear data area.
	 * Here it ensures the linear area holds at least the 20-byte header. */
	if (!pskb_may_pull(skb, sizeof(struct tcphdr)))
		goto discard_it;

	th = tcp_hdr(skb);

	if (th->doff < sizeof(struct tcphdr) / 4)
		goto bad_packet;
	/* Ensure the linear area holds the whole TCP header, options included */
	if (!pskb_may_pull(skb, th->doff * 4))
		goto discard_it;

	/* Checksum: a segment that fails is dropped silently,
	 * without generating any error message */
	/* An explanation is required here, I think.
	 * Packet length and doff are validated by header prediction,
	 * provided case of th->doff==0 is eliminated.
	 * So, we defer the checks. */
	if (!skb_csum_unnecessary(skb) && tcp_v4_checksum_init(skb))
		goto bad_packet;

	/* Fill in the TCP control block kept in skb->cb */
	th = tcp_hdr(skb);
	iph = ip_hdr(skb);
	TCP_SKB_CB(skb)->seq = ntohl(th->seq);
	TCP_SKB_CB(skb)->end_seq = (TCP_SKB_CB(skb)->seq + th->syn + th->fin +
				    skb->len - th->doff * 4);
	TCP_SKB_CB(skb)->ack_seq = ntohl(th->ack_seq);
	TCP_SKB_CB(skb)->when = 0;
	TCP_SKB_CB(skb)->flags = iph->tos;
	TCP_SKB_CB(skb)->sacked = 0;

	/* Look up the socket (TCB) by source/destination address and port,
	 * first in the established hash (ehash), then in the listening hash.
	 * This decides which socket handles the segment; the socket
	 * returned carries an extra reference. */
	sk = __inet_lookup(skb->dev->nd_net, &tcp_hashinfo, iph->saddr,
			   th->source, iph->daddr, th->dest, inet_iif(skb));
	if (!sk)
		goto no_tcp_socket;

process:
	/* TIME_WAIT needs special handling, not covered here */
	if (sk->sk_state == TCP_TIME_WAIT)
		goto do_time_wait;

	/* IPsec policy check */
	if (!xfrm4_policy_check(sk, XFRM_POLICY_IN, skb))
		goto discard_and_relse;
	nf_reset(skb);

	/* Socket filter: if it rejects the packet, stop here */
	if (sk_filter(sk, skb))
		goto discard_and_relse;

	/* The input device no longer matters at the transport layer */
	skb->dev = NULL;

	/* Take the socket spinlock so that process context and other
	 * softirqs cannot touch this TCB meanwhile */
	bh_lock_sock_nested(sk);
	ret = 0;
	/* If no process owns the socket, first offer the segment to the
	 * prequeue. If the prequeue does not take it, process it now so it
	 * ends up in the receive queue. If a process owns the socket, put
	 * the segment on the backlog queue. */
	if (!sock_owned_by_user(sk)) {
		/* NET_DMA support (removed in later kernels); can be ignored */
#ifdef CONFIG_NET_DMA
		struct tcp_sock *tp = tcp_sk(sk);
		if (!tp->ucopy.dma_chan && tp->ucopy.pinned_list)
			tp->ucopy.dma_chan = get_softnet_dma();
		if (tp->ucopy.dma_chan)
			ret = tcp_v4_do_rcv(sk, skb);
		else
#endif
		{
			/* tcp_prequeue() returns 0 if it did not take the
			 * segment; tcp_v4_do_rcv() handles it then */
			if (!tcp_prequeue(sk, skb))
				ret = tcp_v4_do_rcv(sk, skb);
		}
	} else {
		/* Owned by a user process: queue on the backlog */
		sk_add_backlog(sk, skb);
	}
	/* Release the lock */
	bh_unlock_sock(sk);

	/* Drop the reference taken by the lookup */
	sock_put(sk);

	return ret;

no_tcp_socket:
	if (!xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb))
		goto discard_it;

	if (skb->len < (th->doff << 2) || tcp_checksum_complete(skb)) {
bad_packet:
		TCP_INC_STATS_BH(TCP_MIB_INERRS);
	} else {
		tcp_v4_send_reset(NULL, skb);
	}

discard_it:
	/* Discard frame. */
	kfree_skb(skb);
	return 0;

discard_and_relse:
	sock_put(sk);
	goto discard_it;

do_time_wait:
	...
}
```
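The length checks and the `TCP_SKB_CB()` setup above amount to a little header arithmetic. This user-space sketch works on a raw TCP header in network byte order; `toy_tcp_parse()` and `struct toy_tcp_cb` are hypothetical names.

It does two things:

- It rejects a data offset below 5 words, or one pointing past the buffer.
- It computes `end_seq` the same way as the kernel: SYN and FIN each count as one sequence number, and the sum wraps modulo 2^32.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical subset of the fields TCP_SKB_CB() holds. */
struct toy_tcp_cb { uint32_t seq, end_seq, ack_seq; };

/* Read a big-endian 32-bit value. */
static uint32_t rd32(const uint8_t *p)
{
	return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 |
	       (uint32_t)p[2] << 8 | p[3];
}

/* `seg` points at the TCP header; `len` is header plus payload. */
static bool toy_tcp_parse(const uint8_t *seg, size_t len, struct toy_tcp_cb *cb)
{
	if (len < 20)                      /* like pskb_may_pull(skb, sizeof(struct tcphdr)) */
		return false;
	unsigned doff = seg[12] >> 4;      /* header length in 32-bit words */
	if (doff < 5 || len < doff * 4u)   /* th->doff < 5, pskb_may_pull(doff * 4) */
		return false;
	unsigned syn = (seg[13] >> 1) & 1, fin = seg[13] & 1;
	cb->seq = rd32(seg + 4);
	cb->ack_seq = rd32(seg + 8);
	/* SYN and FIN each consume one sequence number, like a data byte;
	 * unsigned arithmetic wraps modulo 2^32 as TCP sequence space does. */
	cb->end_seq = cb->seq + syn + fin + (uint32_t)(len - doff * 4u);
	return true;
}
```

For example, a FIN carrying 20 bytes of payload at sequence 0xFFFFFFF0 ends at 0x00000005, after wrapping.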

tcp_v4_do_rcv

Location: /linux-5.11.1/net/ipv4/tcp_ipv4.c.

```c
int tcp_v4_do_rcv(struct sock *sk, struct sk_buff *skb)
{
	struct sock *rsk;
#ifdef CONFIG_TCP_MD5SIG
	/* We really want to reject the packet as early as possible if :
	 * We're expecting an MD5'd packet and this is no MD5 tcp option.
	 * There is an MD5 option and we're not expecting one.
	 */
	if (tcp_v4_inbound_md5_hash(sk, skb))
		goto discard;
#endif

	/* ESTABLISHED: tcp_rcv_established() handles the segment */
	if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */
		struct dst_entry *dst = sk->sk_rx_dst;

		sock_rps_save_rxhash(sk, skb);
		if (dst) {
			if (inet_sk(sk)->rx_dst_ifindex != skb->skb_iif ||
			    dst->ops->check(dst, 0) == NULL) {
				dst_release(dst);
				sk->sk_rx_dst = NULL;
			}
		}
		/* Receive path for an established connection */
		tcp_rcv_established(sk, skb, tcp_hdr(skb), skb->len);
		return 0;
	}

	/* Check segment length and checksum */
	if (skb->len < tcp_hdrlen(skb) || tcp_checksum_complete(skb))
		goto csum_err;

	/* Listening socket: passive open, covering both an incoming SYN
	 * and the ACK that completes the handshake */
	if (sk->sk_state == TCP_LISTEN) {
		/* Return value:
		 *   NULL:      error, drop the segment
		 *   nsk == sk: a SYN was received
		 *   nsk != sk: an ACK was received (nsk is the new child socket)
		 */
		struct sock *nsk = tcp_v4_hnd_req(sk, skb);
		if (!nsk)
			goto discard;

		if (nsk != sk) { /* ACK received */
			sock_rps_save_rxhash(nsk, skb);
			if (tcp_child_process(sk, nsk, skb)) { /* process the new child socket */
				rsk = nsk;
				goto reset;
			}
			return 0;
		}
	} else
		sock_rps_save_rxhash(sk, skb);

	/* Handles every state except ESTABLISHED and TIME_WAIT, including SYN_SENT */
	if (tcp_rcv_state_process(sk, skb, tcp_hdr(skb), skb->len)) {
		rsk = sk;
		goto reset;
	}
	return 0;

reset:
	tcp_v4_send_reset(rsk, skb); /* Reply with a RST */
discard:
	kfree_skb(skb);
	return 0;

csum_err:
	TCP_INC_STATS_BH(sock_net(sk), TCP_MIB_CSUMERRORS);
	TCP_INC_STATS_BH(sock_net(sk), TCP_MIB_INERRS);
	goto discard;
}
```
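A segment can reach this function in two ways: directly from the softirq, or later when the owning process releases the socket. The second path exists because the socket's backlog is drained through `tcp_v4_do_rcv()`, which is TCP's `backlog_rcv` callback.

The single-threaded model below illustrates that rule. All names are hypothetical, and locking, the prequeue and memory accounting are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define BACKLOG_MAX 16   /* hypothetical backlog limit */

struct toy_sock {
	bool owned_by_user;              /* cf. sock_owned_by_user() */
	int backlog[BACKLOG_MAX];
	size_t backlog_len;
	int processed[BACKLOG_MAX * 2];  /* order in which segments were handled */
	size_t nprocessed;
};

/* Stands in for tcp_v4_do_rcv(): just records the segment. */
static void toy_do_rcv(struct toy_sock *sk, int seg)
{
	assert(sk->nprocessed < sizeof sk->processed / sizeof sk->processed[0]);
	sk->processed[sk->nprocessed++] = seg;
}

/* Softirq side, cf. tcp_v4_rcv(): process now or defer to the backlog. */
static bool toy_v4_rcv(struct toy_sock *sk, int seg)
{
	if (!sk->owned_by_user) {
		toy_do_rcv(sk, seg);
		return true;
	}
	if (sk->backlog_len == BACKLOG_MAX)
		return false;            /* newer kernels also drop past their backlog limit */
	sk->backlog[sk->backlog_len++] = seg;
	return true;
}

/* Process side, cf. lock_sock() / release_sock(). */
static void toy_lock_sock(struct toy_sock *sk) { sk->owned_by_user = true; }

static void toy_release_sock(struct toy_sock *sk)
{
	for (size_t i = 0; i < sk->backlog_len; i++)
		toy_do_rcv(sk, sk->backlog[i]);  /* same handler, arrival order kept */
	sk->backlog_len = 0;
	sk->owned_by_user = false;
}
```

Routing deferred segments through the same receive function means the TCP state machine only ever runs under the socket lock, whichever context delivered the segment.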

tcp_rcv_established

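As tcp_v4_do_rcv() above shows, which function handles a segment depends on the socket state:

1. ESTABLISHED: tcp_rcv_established() handles it.

2. LISTEN: the socket is listening, so this is the passive-open path. It handles both the incoming SYN and the ACK that completes the handshake.

3. Any other state except ESTABLISHED and TIME_WAIT (TIME_WAIT is intercepted earlier, in tcp_v4_rcv()): tcp_rcv_state_process() handles it.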
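The state dispatch condenses into a lookup. This hypothetical sketch returns the name of the kernel function that first handles a segment for a socket in a given state. It follows the old kernels quoted in this article; newer kernels no longer have `tcp_v4_hnd_req()`. Only a subset of states is modelled.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical subset of TCP states, for illustration only. */
enum toy_tcp_state {
	ST_ESTABLISHED, ST_SYN_SENT, ST_FIN_WAIT1, ST_CLOSE_WAIT,
	ST_TIME_WAIT, ST_LISTEN
};

/* Which kernel function first handles a segment for a socket in `state`,
 * following the old tcp_v4_rcv()/tcp_v4_do_rcv() shown above. */
static const char *toy_rx_handler(enum toy_tcp_state state)
{
	switch (state) {
	case ST_TIME_WAIT:
		return "tcp_timewait_state_process"; /* via do_time_wait in tcp_v4_rcv() */
	case ST_ESTABLISHED:
		return "tcp_rcv_established";        /* fast path */
	case ST_LISTEN:
		return "tcp_v4_hnd_req";             /* passive open: SYN or handshake ACK */
	default:
		return "tcp_rcv_state_process";      /* every other state */
	}
}
```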

Location: /linux-5.11.1/net/ipv4/tcp_input.c.

```c
int tcp_rcv_established(struct sock *sk, struct sk_buff *skb,
			struct tcphdr *th, unsigned len)
{
	struct tcp_sock *tp = tcp_sk(sk);
	int res;

	/*
	 *	Header prediction.
	 *	The code loosely follows the one in the famous
	 *	"30 instruction TCP receive" Van Jacobson mail.
	 *
	 *	Van's trick is to deposit buffers into socket queue
	 *	on a device interrupt, to call tcp_recv function
	 *	on the receive process context and checksum and copy
	 *	the buffer to user space. smart...
	 *
	 *	Our current scheme is not silly either but we take the
	 *	extra cost of the net_bh soft interrupt processing...
	 *	We do checksum and copy also but from device to kernel.
	 */

	tp->rx_opt.saw_tstamp = 0;

	/*	pred_flags is 0xS?10 << 16 + snd_wnd
	 *	if header_prediction is to be made
	 *	'S' will always be tp->tcp_header_len >> 2
	 *	'?' will be 0 for the fast path, otherwise pred_flags is 0 to
	 *  turn it off	(when there are holes in the receive
	 *	 space for instance)
	 *	PSH flag is ignored.
	 */

	/* Fast path only if the segment's flags match the predicted flags
	 * (pred_flags), its sequence number is exactly rcv_nxt (in order),
	 * and it does not acknowledge data we have not sent yet */
	if ((tcp_flag_word(th) & TCP_HP_BITS) == tp->pred_flags &&
	    TCP_SKB_CB(skb)->seq == tp->rcv_nxt &&
	    !after(TCP_SKB_CB(skb)->ack_seq, tp->snd_nxt)) {
		int tcp_header_len = tp->tcp_header_len;

		/* Timestamp header prediction: tcp_header_len
		 * is automatically equal to th->doff*4 due to pred_flags
		 * match.
		 */

		/* Check timestamp */
		/* A header of exactly base header + aligned timestamp must
		 * carry the aligned timestamp option; anything else takes the
		 * slow path */
		if (tcp_header_len == sizeof(struct tcphdr) + TCPOLEN_TSTAMP_ALIGNED) {
			/* No? Slow path! */
			if (!tcp_parse_aligned_timestamp(tp, th))
				goto slow_path;

			/* Quick PAWS check on the segment; on failure take the slow path */
			/* If PAWS failed, check it more carefully in slow path */
			if ((s32)(tp->rx_opt.rcv_tsval - tp->rx_opt.ts_recent) < 0)
				goto slow_path;

			/* DO NOT update ts_recent here, if checksum fails
			 * and timestamp was corrupted part, it will result
			 * in a hung connection since we will drop all
			 * future packets due to the PAWS test.
			 */
		}

		/* No payload: len covers only the header (or is too short) */
		if (len <= tcp_header_len) {
			/* Bulk data transfer: sender */
			if (len == tcp_header_len) {
				/* Predicted packet is in window by definition.
				 * seq == rcv_nxt and rcv_wup <= rcv_nxt.
				 * Hence, check seq<=rcv_wup reduces to:
				 */
				if (tcp_header_len ==
				    (sizeof(struct tcphdr) + TCPOLEN_TSTAMP_ALIGNED) &&
				    tp->rcv_nxt == tp->rcv_wup)
					tcp_store_ts_recent(tp);

				/* We know that such packets are checksummed
				 * on entry.
				 */
				tcp_ack(sk, skb, 0);
				__kfree_skb(skb);
				tcp_data_snd_check(sk);
				return 0;
			} else { /* Header too small */
				TCP_INC_STATS_BH(sock_net(sk), TCP_MIB_INERRS);
				goto discard;
			}
		} else {
			int eaten = 0;
			int copied_early = 0;

			/* tp->copied_seq: first byte not yet copied to the user
			 * tp->rcv_nxt:    next sequence number expected
			 * A direct copy is possible only if everything received so
			 * far has been read (copied_seq == rcv_nxt) and the payload
			 * (len - tcp_header_len) fits in the reader's remaining
			 * buffer (tp->ucopy.len) */
			if (tp->copied_seq == tp->rcv_nxt &&
			    len - tcp_header_len <= tp->ucopy.len) {
#ifdef CONFIG_NET_DMA
				if (tcp_dma_try_early_copy(sk, skb, tcp_header_len)) {
					copied_early = 1;
					eaten = 1;
				}
#endif
				/* The current process (current) must be the reader
				 * waiting in tcp_recvmsg() (tp->ucopy.task) and must
				 * own the socket lock */
				if (tp->ucopy.task == current &&
				    sock_owned_by_user(sk) && !copied_early) {
					__set_current_state(TASK_RUNNING);

					/* Copy the payload straight to user space */
					if (!tcp_copy_to_iovec(sk, skb, tcp_header_len))
						eaten = 1;
				}
				/* Copied to user space */
				if (eaten) {
					/* Predicted packet is in window by definition.
					 * seq == rcv_nxt and rcv_wup <= rcv_nxt.
					 * Hence, check seq<=rcv_wup reduces to:
					 */
					if (tcp_header_len ==
					    (sizeof(struct tcphdr) +
					     TCPOLEN_TSTAMP_ALIGNED) &&
					    tp->rcv_nxt == tp->rcv_wup)
						tcp_store_ts_recent(tp);

					tcp_rcv_rtt_measure_ts(sk, skb);

					__skb_pull(skb, tcp_header_len);
					tp->rcv_nxt = TCP_SKB_CB(skb)->end_seq;
					NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_TCPHPHITSTOUSER);
				}
				/* After an early DMA copy, clean up the receive
				 * buffer; this may send an ACK */
				if (copied_early)
					tcp_cleanup_rbuf(sk, skb->len);
			}
			/* Not copied directly */
			if (!eaten) {
				/* Verify the checksum now if it was not verified earlier */
				if (tcp_checksum_complete_user(sk, skb))
					goto csum_error;

				/* Predicted packet is in window by definition.
				 * seq == rcv_nxt and rcv_wup <= rcv_nxt.
				 * Hence, check seq<=rcv_wup reduces to:
				 */
				if (tcp_header_len ==
				    (sizeof(struct tcphdr) + TCPOLEN_TSTAMP_ALIGNED) &&
				    tp->rcv_nxt == tp->rcv_wup)
					tcp_store_ts_recent(tp);

				tcp_rcv_rtt_measure_ts(sk, skb);

				if ((int)skb->truesize > sk->sk_forward_alloc)
					goto step5;

				NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_TCPHPHITS);

				/* Bulk data transfer: receiver */
				/* Strip the TCP header */
				__skb_pull(skb, tcp_header_len);
				/* Append the segment to sk_receive_queue */
				__skb_queue_tail(&sk->sk_receive_queue, skb);
				skb_set_owner_r(skb, sk);
				tp->rcv_nxt = TCP_SKB_CB(skb)->end_seq;
			}

			/* Record the data arrival for delayed-ACK bookkeeping
			 * (e.g. the ATO estimate) */
			tcp_event_data_recv(sk, skb);

			if (TCP_SKB_CB(skb)->ack_seq != tp->snd_una) {
				/* Well, only one small jumplet in fast path... */
				tcp_ack(sk, skb, FLAG_DATA);
				tcp_data_snd_check(sk);
				if (!inet_csk_ack_scheduled(sk))
					goto no_ack;
			}

			/* ACK the received data if needed */
			if (!copied_early || tp->rcv_nxt != tp->rcv_wup)
				__tcp_ack_snd_check(sk, 0);
no_ack:
#ifdef CONFIG_NET_DMA
			if (copied_early)
				__skb_queue_tail(&sk->sk_async_wait_queue, skb);
			else
#endif
			if (eaten)
				__kfree_skb(skb);
			else
				/* Data was queued, not copied: wake up a reader */
				sk->sk_data_ready(sk, 0);
			return 0;
		}
	}

slow_path:
	if (len < (th->doff << 2) || tcp_checksum_complete_user(sk, skb))
		goto csum_error;

	/*
	 *	Standard slow path.
	 */

	res = tcp_validate_incoming(sk, skb, th, 1);
	if (res <= 0)
		return -res;

step5:
	if (th->ack && tcp_ack(sk, skb, FLAG_SLOWPATH) < 0)
		goto discard;

	tcp_rcv_rtt_measure_ts(sk, skb);

	/* Process urgent data. */
	tcp_urg(sk, skb, th);

	/* step 7: process the segment text */
	/* Copy the data to the user or queue it on sk_receive_queue
	 * (out-of-order data goes to the out-of-order queue) */
	tcp_data_queue(sk, skb);

	tcp_data_snd_check(sk);
	tcp_ack_snd_check(sk);
	return 0;

csum_error:
	TCP_INC_STATS_BH(sock_net(sk), TCP_MIB_INERRS);

discard:
	__kfree_skb(skb);
	return 0;
}
```
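The fast-path entry test at the top of `tcp_rcv_established()` combines three checks. The sketch below reproduces them in user space under these assumptions:

- The fourth 32-bit header word is built in host byte order as `doff << 28 | flags << 16 | window`. The kernel instead works on the big-endian word via `tcp_flag_word()`.
- The macro values mirror the kernel's `TCP_FLAG_ACK`, `TCP_FLAG_PSH` and `TCP_RESERVED_BITS` in host order.
- `make_pred_flags()` follows `__tcp_fast_path_on()`.

All function names are hypothetical. The sequence comparisons are written the same way as the kernel's `before()`/`after()`, so they survive 32-bit wraparound.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Wraparound-safe sequence comparisons, as in the kernel's before()/after(). */
static bool seq_before(uint32_t a, uint32_t b) { return (int32_t)(a - b) < 0; }
static bool seq_after(uint32_t a, uint32_t b)  { return seq_before(b, a); }

/* Host-order stand-ins for the kernel's big-endian flag constants. */
#define FLAG_ACK      0x00100000u
#define FLAG_PSH      0x00080000u
#define RESERVED_BITS 0x0F000000u
#define HP_BITS       (~(RESERVED_BITS | FLAG_PSH))   /* cf. TCP_HP_BITS */

/* Assumption: 4th header word = doff(4) | reserved(4) | flags(8) | window(16). */
static uint32_t flag_word(unsigned doff, uint8_t flags, uint16_t window)
{
	return (uint32_t)doff << 28 | (uint32_t)flags << 16 | window;
}

/* Like __tcp_fast_path_on(): header length / 4 in the top nibble (len << 26
 * equals (len / 4) << 28), only ACK set, and the window as it appears in
 * the header (the kernel passes snd_wnd >> snd_wscale). */
static uint32_t make_pred_flags(unsigned tcp_header_len, uint16_t hdr_wnd)
{
	return (uint32_t)tcp_header_len << 26 | FLAG_ACK | hdr_wnd;
}

/* The three-part test guarding the fast path. */
static bool fast_path_ok(uint32_t word, uint32_t pred_flags,
			 uint32_t seq, uint32_t rcv_nxt,
			 uint32_t ack_seq, uint32_t snd_nxt)
{
	return (word & HP_BITS) == pred_flags &&   /* expected flags/length/window */
	       seq == rcv_nxt &&                    /* exactly the next in-order byte */
	       !seq_after(ack_seq, snd_nxt);        /* does not ACK unsent data */
}
```

Masking out PSH means a data segment with ACK|PSH still matches. A SYN, FIN, RST or URG bit, a changed window or a different header length all make the comparison fail and send the segment to the slow path.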

tcp_recvmsg

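A user process that calls recvfrom() to read the socket's receive buffer ends up in tcp_recvmsg(), which copies the data from the kernel address space into the user address space.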
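From the application's side, a single `recv()` call has three outcomes worth handling, which match the exit paths in the loop of `tcp_recvmsg()`:

- It may return fewer bytes than requested.
- It returns `0` once the peer has sent FIN.
- It fails with `EINTR` when a signal interrupts the wait.

Below is a minimal POSIX sketch of a read-exactly helper; `recv_exact()` is a hypothetical name. Passing `MSG_WAITALL` instead asks the kernel to do most of the looping, since it makes `target` equal to `len`. Even then, a signal, an error or EOF can still cut the read short.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Read exactly `len` bytes unless EOF or an error intervenes.
 * Returns the number of bytes read (short only on EOF),
 * or -1 with errno set. */
static ssize_t recv_exact(int fd, void *buf, size_t len)
{
	size_t got = 0;

	while (got < len) {
		ssize_t n = recv(fd, (char *)buf + got, len - got, 0);
		if (n == 0)
			break;                 /* orderly shutdown: peer sent FIN */
		if (n < 0) {
			if (errno == EINTR)
				continue;      /* interrupted by a signal: retry */
			return -1;             /* real error, errno is set */
		}
		got += (size_t)n;              /* partial read: keep going */
	}
	return (ssize_t)got;
}
```

To try it without a network, an `AF_UNIX` stream socket pair works. That goes through the Unix-socket receive path rather than `tcp_recvmsg()`, but the `recv()` contract is the same.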

Location: /linux-5.11.1/net/ipv4/tcp.c.

```c
int tcp_recvmsg(struct kiocb *iocb, struct sock *sk, struct msghdr *msg,
		size_t len, int nonblock, int flags, int *addr_len)
{
	/* Get the TCP socket */
	struct tcp_sock *tp = tcp_sk(sk);
	int copied = 0;
	u32 peek_seq;
	u32 *seq;
	unsigned long used;
	int err;
	int target;		/* Read at least this many bytes */
	long timeo;
	struct task_struct *user_recv = NULL;
	int copied_early = 0;
	struct sk_buff *skb;
	u32 urg_hole = 0;

	/* Lock the socket, which amounts to setting sk->sk_lock.owned = 1.
	 * While it is owned, a softirq running tcp_v4_rcv() for this socket
	 * sees that process context holds it and puts incoming segments on
	 * the backlog queue. */
	lock_sock(sk);

	TCP_CHECK_TIMER(sk);

	err = -ENOTCONN;
	/* A listening socket cannot be read from */
	if (sk->sk_state == TCP_LISTEN)
		goto out;

	/* Receive timeout; 0 in non-blocking mode */
	timeo = sock_rcvtimeo(sk, nonblock);

	/* Urgent data needs to be handled specially. */
	if (flags & MSG_OOB)
		goto recv_urg;

	/* seq points at the first unread byte (copied_seq). With MSG_PEEK a
	 * local copy is advanced instead, so nothing is consumed */
	seq = &tp->copied_seq;
	if (flags & MSG_PEEK) {
		peek_seq = tp->copied_seq;
		seq = &peek_seq;
	}

	/* Minimum number of bytes to return: len with MSG_WAITALL,
	 * otherwise min(sk->sk_rcvlowat, len), and at least 1 */
	target = sock_rcvlowat(sk, flags & MSG_WAITALL, len);

	/* With NET_DMA, a DMA engine may copy data into user space */
#ifdef CONFIG_NET_DMA
	tp->ucopy.dma_chan = NULL;
	preempt_disable();
	skb = skb_peek_tail(&sk->sk_receive_queue);
	{
		int available = 0;

		if (skb)
			available = TCP_SKB_CB(skb)->seq + skb->len - (*seq);
		if ((available < target) &&
		    (len > sysctl_tcp_dma_copybreak) && !(flags & MSG_PEEK) &&
		    !sysctl_tcp_low_latency &&
		    dma_find_channel(DMA_MEMCPY)) {
			preempt_enable_no_resched();
			tp->ucopy.pinned_list =
					dma_pin_iovec_pages(msg->msg_iov, len);
		} else {
			preempt_enable_no_resched();
		}
	}
#endif

	/* Main loop: copy data to user space until len bytes have been
	 * copied or one of the exit conditions below is hit */
	do {
		u32 offset;

		/* Are we at urgent data? Stop if we have read anything or have SIGURG pending. */
		if (tp->urg_data && tp->urg_seq == *seq) {
			if (copied)
				break;
			/* A pending signal (e.g. SIGURG) ends the read */
			if (signal_pending(current)) {
				/* -EINTR/-ERESTARTSYS when blocking, -EAGAIN otherwise */
				copied = timeo ? sock_intr_errno(timeo) : -EAGAIN;
				break;
			}
		}

		/* Next get a buffer. */

		/* Walk the receive queue (sk_receive_queue) */
		skb_queue_walk(&sk->sk_receive_queue, skb) {
			/* Now that we have two receive queues this
			 * shouldn't happen.
			 */
			if (WARN(before(*seq, TCP_SKB_CB(skb)->seq),
				 KERN_INFO "recvmsg bug: copied %X "
					   "seq %X rcvnxt %X fl %X\n", *seq,
				 TCP_SKB_CB(skb)->seq, tp->rcv_nxt,
				 flags))
				break;

			/* offset = first unread byte minus this skb's starting
			 * sequence number. If it is smaller than skb->len, this
			 * skb holds the next byte to read */
			offset = *seq - TCP_SKB_CB(skb)->seq;
			/* A SYN consumes one sequence number but carries no data byte */
			if (tcp_hdr(skb)->syn)
				offset--;
			/* Found the skb: copy from it at found_ok_skb */
			if (offset < skb->len)
				goto found_ok_skb;
			/* A FIN: jump to the FIN handling */
			if (tcp_hdr(skb)->fin)
				goto found_fin_ok;
			WARN(!(flags & MSG_PEEK), KERN_INFO "recvmsg bug 2: "
					"copied %X seq %X rcvnxt %X fl %X\n",
					*seq, TCP_SKB_CB(skb)->seq,
					tp->rcv_nxt, flags);
		}

		/* Well, if we have backlog, try to process it now yet. */

		/* Enough data copied (copied >= target) and the backlog is
		 * empty: leave the loop */
		if (copied >= target && !sk->sk_backlog.tail)
			break;

		if (copied) {
			/* Some data was copied: stop on a socket error, a closed
			 * connection, a shutdown/FIN from the peer, non-blocking
			 * mode, or a pending signal */
			if (sk->sk_err ||
			    sk->sk_state == TCP_CLOSE ||
			    (sk->sk_shutdown & RCV_SHUTDOWN) ||
			    !timeo ||
			    signal_pending(current))
				break;
		} else {
			/* Nothing copied yet. There are three possible reasons:
			 * the connection is finished, there is simply no data, or
			 * an error occurred */
			if (sock_flag(sk, SOCK_DONE))
				break;

			if (sk->sk_err) {
				copied = sock_error(sk);
				break;
			}

			if (sk->sk_shutdown & RCV_SHUTDOWN)
				break;

			if (sk->sk_state == TCP_CLOSE) {
				/* SOCK_DONE is set once a connection has been torn
				 * down; TCP_CLOSE without SOCK_DONE means the socket
				 * was never connected */
				if (!sock_flag(sk, SOCK_DONE)) {
					/* This occurs when user tries to read
					 * from never connected socket.
					 */
					copied = -ENOTCONN;
					break;
				}
				break;
			}

			/* Non-blocking: return -EAGAIN immediately */
			if (!timeo) {
				copied = -EAGAIN;
				break;
			}

			/* Interrupted by a signal: return the matching error */
			if (signal_pending(current)) {
				copied = sock_intr_errno(timeo);
				break;
			}
		}

		/* Clean up the receive buffer for the bytes copied so far;
		 * this may send an ACK / window update to the peer */
		tcp_cleanup_rbuf(sk, copied);

		/* sk_receive_queue has nothing left for us, so handle the
		 * prequeue, which is processed in the user process's context */
		if (!sysctl_tcp_low_latency && tp->ucopy.task == user_recv) {
			/* Install new reader */
			if (!user_recv && !(flags & (MSG_TRUNC | MSG_PEEK))) {
				/* The current process becomes the prequeue reader */
				user_recv = current;
				/* Process that will receive the data */
				tp->ucopy.task = user_recv;
				/* User buffer to copy into */
				tp->ucopy.iov = msg->msg_iov;
			}

			/* Bytes still wanted */
			tp->ucopy.len = len;

			WARN_ON(tp->copied_seq != tp->rcv_nxt &&
				!(flags & (MSG_PEEK | MSG_TRUNC)));

			/* If the prequeue is not empty it must be processed
			 * before the socket is released, otherwise segment order
			 * breaks. Data moves through, in order: segments in
			 * flight, the backlog, the prequeue, sk_receive_queue; a
			 * queue may be processed only once the queues after it
			 * are empty. The prequeue can gain segments again before
			 * the socket is released, hence the jump to do_prequeue */
			if (!skb_queue_empty(&tp->ucopy.prequeue))
				goto do_prequeue;

			/* __ Set realtime policy in scheduler __ */
		}

#ifdef CONFIG_NET_DMA
		if (tp->ucopy.dma_chan)
			dma_async_memcpy_issue_pending(tp->ucopy.dma_chan);
#endif
		/* Copied enough data */
		if (copied >= target) {
			/* Do not sleep, just process backlog. */
			/* release_sock() runs the backlog through
			 * tcp_v4_do_rcv(), moving its segments into
			 * sk_receive_queue */
			release_sock(sk);
			lock_sock(sk);
		} else
			/* Not enough data yet: sleep until data arrives or the
			 * timeout expires. While the reader sleeps, the softirq
			 * puts new segments on the prequeue, and tcp_prequeue()
			 * wakes the process up */
			sk_wait_data(sk, &timeo);

#ifdef CONFIG_NET_DMA
		tcp_service_net_dma(sk, false);  /* Don't block */
		tp->ucopy.wakeup = 0;
#endif

		if (user_recv) {
			int chunk;

			/* __ Restore normal policy in scheduler __ */

			if ((chunk = len - tp->ucopy.len) != 0) {
				NET_ADD_STATS_USER(sock_net(sk), LINUX_MIB_TCPDIRECTCOPYFROMBACKLOG, chunk);
				/* Bytes still to copy */
				len -= chunk;
				/* Bytes copied so far */
				copied += chunk;
			}

			/* rcv_nxt == copied_seq: everything received has been
			 * read, so sk_receive_queue holds no unread data */
			if (tp->rcv_nxt == tp->copied_seq &&
			    !skb_queue_empty(&tp->ucopy.prequeue)) {
do_prequeue:
				/* Process the prequeue */
				tcp_prequeue_process(sk);

				if ((chunk = len - tp->ucopy.len) != 0) {
					NET_ADD_STATS_USER(sock_net(sk), LINUX_MIB_TCPDIRECTCOPYFROMPREQUEUE, chunk);
					/* Bytes still to copy */
					len -= chunk;
					/* Bytes copied so far */
					copied += chunk;
				}
			}
		}
		if ((flags & MSG_PEEK) &&
		    (peek_seq - copied - urg_hole != tp->copied_seq)) {
			if (net_ratelimit())
				printk(KERN_DEBUG "TCP(%s:%d): Application bug, race in MSG_PEEK.\n",
				       current->comm, task_pid_nr(current));
			peek_seq = tp->copied_seq;
		}
		continue;

		/* Copy from an skb found in sk_receive_queue */
found_ok_skb:
		/* Ok so how much can we use? */
		used = skb->len - offset;
		if (len < used)
			used = len;

		/* Do we have urgent data here? */
		/* Without SO_OOBINLINE (SOCK_URGINLINE) the urgent byte is
		 * skipped here, because it is read separately with MSG_OOB */
		if (tp->urg_data) {
			u32 urg_offset = tp->urg_seq - *seq;
			if (urg_offset < used) {
				if (!urg_offset) {
					if (!sock_flag(sk, SOCK_URGINLINE)) {
						++*seq;
						urg_hole++;
						offset++;
						used--;
						if (!used)
							goto skip_copy;
					}
				} else
					used = urg_offset;
			}
		}

		if (!(flags & MSG_TRUNC)) {
#ifdef CONFIG_NET_DMA
			if (!tp->ucopy.dma_chan && tp->ucopy.pinned_list)
				tp->ucopy.dma_chan = dma_find_channel(DMA_MEMCPY);

			if (tp->ucopy.dma_chan) {
				tp->ucopy.dma_cookie = dma_skb_copy_datagram_iovec(
					tp->ucopy.dma_chan, skb, offset,
					msg->msg_iov, used,
					tp->ucopy.pinned_list);

				if (tp->ucopy.dma_cookie < 0) {
					printk(KERN_ALERT "dma_cookie < 0\n");

					/* Exception. Bailout! */
					if (!copied)
						copied = -EFAULT;
					break;
				}

				dma_async_memcpy_issue_pending(tp->ucopy.dma_chan);

				if ((offset + used) == skb->len)
					copied_early = 1;
			} else
#endif
			{
				/* Copy from kernel space into the user buffer */
				err = skb_copy_datagram_iovec(skb, offset,
						msg->msg_iov, used);
				if (err) {
					/* Exception. Bailout! */
					if (!copied)
						copied = -EFAULT;
					break;
				}
			}
		}

		/* Advance the read sequence number */
		*seq += used;
		/* Bytes copied so far */
		copied += used;
		/* Bytes still to copy */
		len -= used;

		/* Receive buffer auto-tuning (adjusts the advertised window) */
		tcp_rcv_space_adjust(sk);

skip_copy:
		if (tp->urg_data && after(tp->copied_seq, tp->urg_seq)) {
			tp->urg_data = 0;
			/* Urgent data consumed: re-enable the fast path */
			tcp_fast_path_check(sk);
		}
		if (used + offset < skb->len)
			continue;

		if (tcp_hdr(skb)->fin)
			goto found_fin_ok;
		if (!(flags & MSG_PEEK)) {
			sk_eat_skb(sk, skb, copied_early);
			copied_early = 0;
		}
		continue;

		/* A FIN was reached */
found_fin_ok:
		/* Process the FIN. */
		/* The FIN occupies one sequence number */
		++*seq;
		if (!(flags & MSG_PEEK)) {
			/* Unlink the skb from the receive queue and free it */
			sk_eat_skb(sk, skb, copied_early);
			copied_early = 0;
		}
		break;
	} while (len > 0);

	/* After the main loop, anything still on the prequeue must be processed */
	if (user_recv) {
		if (!skb_queue_empty(&tp->ucopy.prequeue)) {
			int chunk;

			tp->ucopy.len = copied > 0 ? len : 0;

			tcp_prequeue_process(sk);

			if (copied > 0 && (chunk = len - tp->ucopy.len) != 0) {
				NET_ADD_STATS_USER(sock_net(sk), LINUX_MIB_TCPDIRECTCOPYFROMPREQUEUE, chunk);
				len -= chunk;
				copied += chunk;
			}
		}

		tp->ucopy.task = NULL;
		tp->ucopy.len = 0;
	}

#ifdef CONFIG_NET_DMA
	tcp_service_net_dma(sk, true);  /* Wait for queue to drain */
	tp->ucopy.dma_chan = NULL;

	if (tp->ucopy.pinned_list) {
		dma_unpin_iovec_pages(tp->ucopy.pinned_list);
		tp->ucopy.pinned_list = NULL;
	}
#endif

	/* According to UNIX98, msg_name/msg_namelen are ignored
	 * on connected socket. I was just happy when found this 8) --ANK
	 */

	/* Clean up data we have read: This will do ACK frames. */
	tcp_cleanup_rbuf(sk, copied);

	TCP_CHECK_TIMER(sk);
	release_sock(sk);
	/* uid_stat_tcp_rcv(): per-UID statistics hook found in Android
	 * kernels, not part of mainline Linux */
	if (copied > 0)
		uid_stat_tcp_rcv(current_uid(), copied);
	return copied;

out:
	TCP_CHECK_TIMER(sk);
	release_sock(sk);
	return err;

recv_urg:
	/* Urgent (OOB) data: copy it to user space */
	err = tcp_recv_urg(sk, msg, len, flags);
	if (err > 0)
		uid_stat_tcp_rcv(current_uid(), err);
	goto out;
}
```
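Strip away locking, the prequeue, DMA, urgent data and FIN handling, and the copy loop reduces to walking an in-order queue with a sequence cursor. The user-space model below is a sketch under those assumptions; `struct toy_rcvq`, `toy_recvmsg()` and `toy_rcvlowat()` are hypothetical names.

It shows three things:

- `offset = *seq - skb_seq` locates the first unread byte.
- `MSG_PEEK` advances a private cursor instead of `copied_seq`.
- A fully consumed segment is dequeued, as `sk_eat_skb()` does.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct toy_seg {
	uint32_t seq;          /* sequence number of data[0] */
	size_t len;
	const char *data;
};

struct toy_rcvq {
	struct toy_seg segs[8];
	size_t head, count;    /* unread segments are segs[head .. head + count) */
	uint32_t copied_seq;   /* next byte the application has not read */
};

/* Mirrors sock_rcvlowat(): the whole request with MSG_WAITALL, else
 * min(SO_RCVLOWAT, len), and never less than 1. */
static size_t toy_rcvlowat(size_t rcvlowat, bool waitall, size_t len)
{
	size_t v = waitall ? len : (rcvlowat < len ? rcvlowat : len);
	return v ? v : 1;
}

/* Copy up to `len` bytes into `out`; returns bytes copied. With `peek`,
 * a local cursor is advanced instead of copied_seq and nothing is dequeued. */
static size_t toy_recvmsg(struct toy_rcvq *q, char *out, size_t len, bool peek)
{
	uint32_t peek_seq = q->copied_seq;
	uint32_t *seq = peek ? &peek_seq : &q->copied_seq;
	size_t copied = 0, i = q->head, end = q->head + q->count;

	while (len > 0 && i < end) {
		struct toy_seg *s = &q->segs[i];
		uint32_t offset = *seq - s->seq;   /* where to start in this segment */
		if (offset >= s->len) {            /* already fully read */
			i++;
			continue;
		}
		size_t used = s->len - offset;
		if (used > len)
			used = len;
		memcpy(out + copied, s->data + offset, used);
		*seq += (uint32_t)used;
		copied += used;
		len -= used;
		if (offset + used == s->len) {     /* segment exhausted */
			if (!peek) {               /* like sk_eat_skb() */
				q->head++;
				q->count--;
			}
			i++;
		}
	}
	return copied;
}
```

In the kernel, `copied` is then compared with `target` to decide whether to return or sleep in `sk_wait_data()`. This model never blocks, so that decision is left to the caller.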