PyTorch Example: ResNet34 Model on the Fruits Image Dataset

Contents: Preface, Imports, Data Exploration, Building the Dataset, Building the Model, ResNet34, Model Training, Plotting the Training Curve

This example uses a ResNet34 model for image classification. The data is a fruit image dataset, available on Kaggle as "Fruits Dataset (Images)". A Kaggle Notebook version of this example is published as "PyTorch: ResNet34 Model and Fruits Data". The code follows.

import os
import random

import matplotlib.pyplot as plt
import torch
import tqdm
from PIL import Image
from torch import nn
from torch.nn import functional as F
from torch.utils.data import Dataset, DataLoader
from torchvision import transforms as T
from torchvision.datasets import ImageFolder

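path = "/kaggle/input/fruits-dataset-images/images"  # dataset root; one sub-directory per fruit class
fruit_path = "apple fruit"
apple_files = os.listdir(os.path.join(path, fruit_path))
Image.open(os.path.join(path, fruit_path, apple_files[2]))  # in a notebook cell this displays one apple image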
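Before building the dataset it can help to know how many images each class directory holds. The following is a minimal sketch, not part of the original notebook: the helper name `count_images_per_class` is hypothetical, and the demo runs on a temporary directory with made-up file names rather than on the Kaggle data.

```python
import os
import tempfile


def count_images_per_class(root):
    """Return {class_dir_name: number_of_files} for each sub-directory of root."""
    counts = {}
    for name in sorted(os.listdir(root)):
        class_dir = os.path.join(root, name)
        if os.path.isdir(class_dir):
            counts[name] = len(os.listdir(class_dir))
    return counts


# Demo on a throwaway directory tree (hypothetical file names, empty files).
with tempfile.TemporaryDirectory() as demo_root:
    for fruit, n_files in [("apple fruit", 3), ("cherry fruit", 2)]:
        os.makedirs(os.path.join(demo_root, fruit))
        for k in range(n_files):
            with open(os.path.join(demo_root, fruit, f"img_{k}.jpg"), "w"):
                pass
    demo_counts = count_images_per_class(demo_root)

print(demo_counts)
```

On Kaggle, the same function could be called with the `path` variable defined above.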

def show_images(n_rows, n_cols, x_data):
    """Show the first n_rows * n_cols (image, label) pairs of x_data in a grid."""
    assert n_rows * n_cols <= len(x_data)
    plt.figure(figsize=(n_cols * 1.5, n_rows * 1.5))
    for row in range(n_rows):
        for col in range(n_cols):
            index = row * n_cols + col
            plt.subplot(n_rows, n_cols, index + 1)
            plt.imshow(x_data[index][0], cmap="binary", interpolation="nearest")
            plt.axis("off")
            plt.title(x_data[index][1])
    plt.show()

def show_fruit_imgs(fruit, cols, rows):
    """Show cols * rows randomly chosen images (with replacement) from one fruit directory."""
    files = os.listdir(os.path.join(path, fruit))
    images = []
    for _ in range(cols * rows):
        file = random.choice(files)
        image = Image.open(os.path.join(path, fruit, file))
        label = file.split(".")[0]  # file name up to the first dot
        images.append((image, label))
    # show_images expects (n_rows, n_cols, x_data)
    show_images(rows, cols, images)

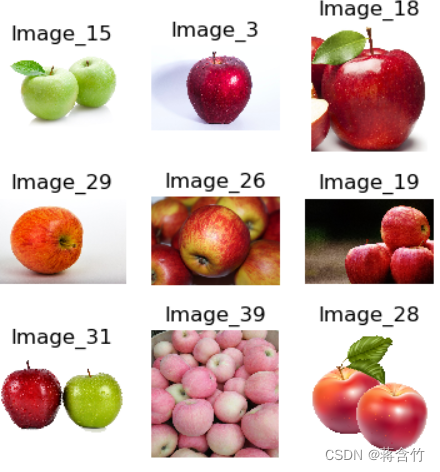

show_fruit_imgs("apple fruit", 3, 3)

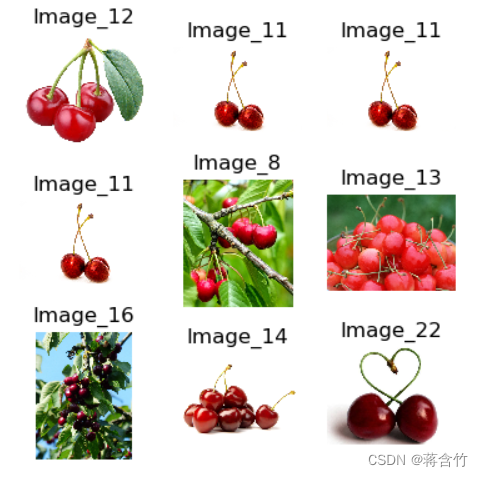

show_fruit_imgs("cherry fruit", 3, 3)
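The image titles come from `file.split(".")[0]`, which keeps only the part of the file name before the first dot. A small sketch of how that differs from `os.path.splitext`, which strips only the final extension; the file name used here is a made-up example, not one taken from the dataset.

```python
import os

filename = "apple.v2.jpg"  # hypothetical file name with more than one dot

label_by_split = filename.split(".")[0]            # everything before the first dot
label_by_splitext = os.path.splitext(filename)[0]  # everything before the last extension

print(label_by_split)
print(label_by_splitext)
```

For file names that contain a single dot, both approaches give the same label.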

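The data is loaded directly with ImageFolder, which derives each fruit class from its directory name.

transforms = T.Compose([
    T.Resize(224),
    T.CenterCrop(224),
    T.ToTensor(),
    # mean=5.0 is what this run used (a white pixel, 1.0, becomes -8.0);
    # mean=0.5 is the more common choice and would map pixels to [-1, 1]
    T.Normalize(mean=[5., 5., 5.], std=[.5, .5, .5]),
])
train_dataset = ImageFolder(path, transform=transforms)
classification = os.listdir(path)  # only its length is used later; train_dataset.classes holds the names in label order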
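ImageFolder assigns integer labels by sorting the class directory names and numbering them from 0. A pure-Python sketch of that mapping follows; the helper name `class_to_idx_sketch` is hypothetical, and only the two directory names that appear in this post are used.

```python
def class_to_idx_sketch(dir_names):
    """Mimic ImageFolder's labeling: sort class directory names, then enumerate them."""
    classes = sorted(dir_names)
    return {name: index for index, name in enumerate(classes)}


demo_mapping = class_to_idx_sketch(["cherry fruit", "apple fruit"])
print(demo_mapping)
```

On the real dataset the mapping is available as `train_dataset.class_to_idx`, and the sorted names as `train_dataset.classes`.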

train_dataset[2]

(tensor([[[-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          ...,
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.]],

         [[-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          ...,
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.]],

         [[-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          ...,
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.],
          [-8., -8., -8.,  ..., -8., -8., -8.]]]),
 0)
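The value -8 in the tensor above follows from how `T.Normalize` works: each channel is transformed as `(value - mean) / std` after `T.ToTensor()` has scaled pixels to the range [0, 1]. A minimal arithmetic sketch, assuming the border pixels of the sample are white (1.0 after `ToTensor`); the helper name `normalize` is hypothetical.

```python
def normalize(value, mean, std):
    """Per-channel arithmetic of T.Normalize: (value - mean) / std."""
    return (value - mean) / std


white = 1.0  # an 8-bit value of 255 after ToTensor scaling
black = 0.0

as_written = normalize(white, mean=5.0, std=0.5)          # settings used in this post
conventional_white = normalize(white, mean=0.5, std=0.5)  # common alternative
conventional_black = normalize(black, mean=0.5, std=0.5)

print(as_written, conventional_white, conventional_black)
```

With `mean=0.5` and `std=0.5` the inputs would lie in [-1, 1]; with `mean=5.0` they lie in [-10, -8], which is unusual but still a valid input to the network.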

class ResidualBlock(nn.Module):
    """Basic residual block: two 3x3 convolutions with batch norm, plus a skip connection."""

    def __init__(self, in_channels, out_channels, stride=1, shortcut=None):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=(3, 3), stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=(3, 3), stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # Optional projection applied to the input when its shape differs from the output
        self.right = shortcut

    def forward(self, X):
        out = self.conv1(X)
        out = self.bn1(out)
        out = F.relu(out, inplace=True)
        out = self.conv2(out)
        out = self.bn2(out)
        # Identity skip unless a projection shortcut was supplied
        residual = self.right(X) if self.right is not None else X
        out += residual
        out = F.relu(out)
        return out

class ResNet34(nn.Module):
    """ResNet34: a stem, four stages of 3, 4, 6 and 3 residual blocks, and a linear classifier."""

    def __init__(self):
        super().__init__()
        # Stem: 7x7 convolution (stride 2, padding 3) followed by 3x3 max pooling (stride 2, padding 1)
        self.pre = nn.Sequential(
            nn.Conv2d(3, 64, 7, 2, 3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2, 1)
        )
        self.layer1 = self._make_layer(64, 64, 3, 1, False)
        self.layer2 = self._make_layer(64, 128, 4, 2, True)
        self.layer3 = self._make_layer(128, 256, 6, 2, True)
        self.layer4 = self._make_layer(256, 512, 3, 2, True)
        self.fc = nn.Linear(512, len(classification))

    def _make_layer(self, in_channels, out_channels, block_num, stride, is_shortcut):
        shortcut = None
        if is_shortcut:
            # 1x1 projection so the skip path matches the new channel count and stride
            shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride, bias=False),
                nn.BatchNorm2d(out_channels)
            )
        layers = []
        # Only the first block of a stage changes the channel count or the resolution
        rb = ResidualBlock(in_channels, out_channels, stride, shortcut)
        layers.append(rb)
        for _ in range(1, block_num):
            layers.append(ResidualBlock(out_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, X):
        out = self.pre(X)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = F.avg_pool2d(out, 7)
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out
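The `F.avg_pool2d(out, 7)` call and the `nn.Linear(512, ...)` layer only line up if the feature map is 7x7 when it leaves `layer4`. The sketch below traces the spatial size through the network with the standard output-size formula `floor((n + 2p - k) / s) + 1`, assuming a 224x224 input as produced by the transforms. The helper name `conv_out` and the `stages` table are illustrative, not part of the model code.

```python
def conv_out(size, kernel, stride, padding):
    """Output width/height of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (stage, kernel, stride, padding) of the layer that sets each stage's resolution.
# Inside a stage, the remaining 3x3 / stride 1 / padding 1 convolutions keep the size.
stages = [
    ("pre: 7x7 conv", 7, 2, 3),
    ("pre: max pool", 3, 2, 1),
    ("layer1", 3, 1, 1),
    ("layer2", 3, 2, 1),
    ("layer3", 3, 2, 1),
    ("layer4", 3, 2, 1),
    ("avg_pool2d(out, 7)", 7, 7, 0),  # stride defaults to the kernel size
]

size = 224
trace = [size]
for name, kernel, stride, padding in stages:
    size = conv_out(size, kernel, stride, padding)
    trace.append(size)
    print(f"{name:>20}: {size} x {size}")

flattened_features = 512 * trace[-1] * trace[-1]
```

Because the pooled map is 1x1 with 512 channels, `out.view(out.size(0), -1)` yields 512 features per image. An input size other than 224 would change the size after `layer4`, so the fixed pooling window of 7 would no longer reduce it to 1x1.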

def pad(num, target) -> str:
    """Zero-pad num so that it is as wide as target."""
    return str(num).zfill(len(str(target)))
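A short usage sketch of `pad`, repeated here so the snippet runs on its own. It shows how the epoch counter in the progress bar keeps a fixed width; the argument values are arbitrary examples.

```python
def pad(num, target) -> str:
    """Zero-pad num so that it is as wide as target."""
    return str(num).zfill(len(str(target)))


padded = [pad(1, 50), pad(50, 50), pad(7, 100)]
print(padded)
```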

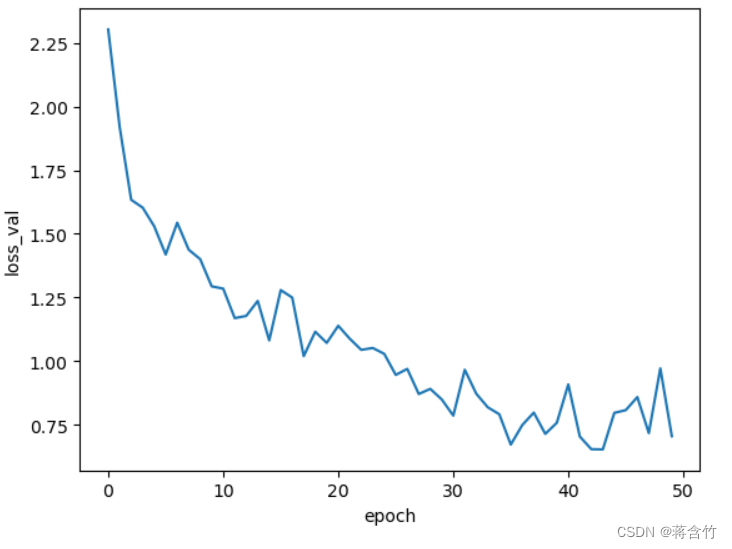

epoch_num = 50
batch_size = 32
learning_rate = 0.0005
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=4)
model = ResNet34().to(device)
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
print(model)

ResNet34(

(pre): Sequential(

(0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

)

(layer1): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(1): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer2): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(right): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResidualBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResidualBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(3): ResidualBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer3): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(right): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResidualBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResidualBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(3): ResidualBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(4): ResidualBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(5): ResidualBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer4): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(right): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResidualBlock(

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResidualBlock(

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(fc): Linear(in_features=512, out_features=9, bias=True)

)
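The printed structure can be cross-checked against the "34" in the model name. By the usual convention, only the stem convolution, the two 3x3 convolutions in each residual block, and the final linear layer are counted; the 1x1 shortcut projections are not. A small sketch of that count, with the block numbers taken from the `_make_layer` calls (the variable names are illustrative):

```python
blocks_per_stage = {"layer1": 3, "layer2": 4, "layer3": 6, "layer4": 3}
convs_per_block = 2  # conv1 and conv2 in ResidualBlock

stem_convs = 1       # the 7x7 convolution in `pre`
classifier = 1       # the final `fc` layer
block_convs = convs_per_block * sum(blocks_per_stage.values())

weighted_layers = stem_convs + block_convs + classifier
print(weighted_layers)
```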

train_loss_list = []
total_step = len(train_loader)
for epoch in range(1, epoch_num + 1):
    model.train()  # training mode, so BatchNorm uses batch statistics
    train_total_loss, train_total, train_correct = 0, 0, 0
    train_progress = tqdm.tqdm(train_loader, desc="Train...")
    for i, (X, y) in enumerate(train_progress, 1):
        X, y = X.to(device), y.to(device)
        out = model(X)
        loss = criterion(out, y)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()
        # Running accuracy and mean loss over the batches seen so far in this epoch
        _, pred = torch.max(out, 1)
        train_total += y.size(0)
        train_correct += (pred == y).sum().item()
        train_total_loss += loss.item()
        train_progress.set_description(f"Train... [epoch {pad(epoch, epoch_num)}/{epoch_num}, loss {(train_total_loss / i):.4f}, accuracy {train_correct / train_total:.4f}]")
    # Mean loss of the epoch, used for the training curve
    train_loss_list.append(train_total_loss / total_step)

Train... [epoch 01/50, loss 2.3034, accuracy 0.2006]: 100%|██████████| 12/12 [00:15<00:00, 1.32s/it]

Train... [epoch 02/50, loss 1.9193, accuracy 0.3064]: 100%|██████████| 12/12 [00:16<00:00, 1.36s/it]

Train... [epoch 03/50, loss 1.6338, accuracy 0.3482]: 100%|██████████| 12/12 [00:15<00:00, 1.30s/it]

Train... [epoch 04/50, loss 1.6031, accuracy 0.3649]: 100%|██████████| 12/12 [00:16<00:00, 1.38s/it]

Train... [epoch 05/50, loss 1.5298, accuracy 0.4401]: 100%|██████████| 12/12 [00:15<00:00, 1.31s/it]

Train... [epoch 06/50, loss 1.4189, accuracy 0.4429]: 100%|██████████| 12/12 [00:16<00:00, 1.34s/it]

Train... [epoch 07/50, loss 1.5439, accuracy 0.4708]: 100%|██████████| 12/12 [00:15<00:00, 1.31s/it]

Train... [epoch 08/50, loss 1.4378, accuracy 0.4596]: 100%|██████████| 12/12 [00:16<00:00, 1.36s/it]

Train... [epoch 09/50, loss 1.4005, accuracy 0.5348]: 100%|██████████| 12/12 [00:15<00:00, 1.32s/it]

Train... [epoch 10/50, loss 1.2937, accuracy 0.5599]: 100%|██████████| 12/12 [00:16<00:00, 1.34s/it]

......

Train... [epoch 45/50, loss 0.7966, accuracy 0.7354]: 100%|██████████| 12/12 [00:15<00:00, 1.27s/it]

Train... [epoch 46/50, loss 0.8075, accuracy 0.7660]: 100%|██████████| 12/12 [00:15<00:00, 1.33s/it]

Train... [epoch 47/50, loss 0.8587, accuracy 0.7131]: 100%|██████████| 12/12 [00:15<00:00, 1.27s/it]

Train... [epoch 48/50, loss 0.7171, accuracy 0.7604]: 100%|██████████| 12/12 [00:16<00:00, 1.35s/it]

Train... [epoch 49/50, loss 0.9715, accuracy 0.7047]: 100%|██████████| 12/12 [00:15<00:00, 1.27s/it]

Train... [epoch 50/50, loss 0.7050, accuracy 0.7855]: 100%|██████████| 12/12 [00:15<00:00, 1.33s/it]
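The `12/12` in each progress bar is the number of batches per epoch. With the default `drop_last=False`, a DataLoader yields `ceil(num_samples / batch_size)` batches, so 12 batches of size 32 imply a dataset of between 353 and 384 images. A minimal sketch of that arithmetic; the helper name `steps_per_epoch` is hypothetical.

```python
import math


def steps_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a DataLoader yields per epoch for a map-style dataset."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


# Dataset sizes consistent with 12 batches of up to 32 samples each.
sizes_with_12_steps = [n for n in range(1, 1000) if steps_per_epoch(n, 32) == 12]
print(sizes_with_12_steps[0], sizes_with_12_steps[-1])
```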

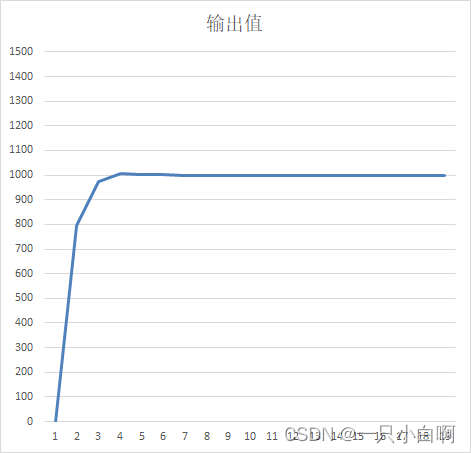

plt.plot(range(len(train_loss_list)), train_loss_list)
plt.xlabel("epoch")
plt.ylabel("loss_val")
plt.show()
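When reading the loss curve, a useful reference point is the cross-entropy of a classifier that assigns equal probability to every class, which is `ln(num_classes)`. The sketch below computes it for the 9 classes reported in the model printout (`out_features=9`); the helper name `uniform_cross_entropy` is hypothetical.

```python
import math


def uniform_cross_entropy(num_classes):
    """Cross-entropy (natural log) of a uniform prediction over num_classes classes."""
    return -math.log(1.0 / num_classes)


baseline = uniform_cross_entropy(9)
print(f"{baseline:.4f}")
```

The first-epoch loss of 2.3034 in the log sits close to this value, which is what one would expect from a freshly initialized network, and the later values fall well below it.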