Contents

- Deep learning model optimizer error:

- Cause of the error:

- Solution:

Deep learning model optimizer error:

ValueError: decay is deprecated in the new Keras optimizer, pleasecheck the docstring for valid arguments, or use the legacy optimizer, e.g., tf.keras.optimizers.legacy.Adam.

Cause of the error:

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[10], line 15

13 model = Model(input, output)

14 model.summary() # print the model summary

---> 15 opt = Adam(lr=0.0001, beta_1=0.9, beta_2=0.999, decay=0.01) # configure the optimizer

16 model.compile(optimizer=opt, # optimizer

17 loss = 'binary_crossentropy', # binary cross-entropy loss

18 metrics=['accuracy'])

File D:\anaconda3\lib\site-packages\keras\optimizers\adam.py:104, in Adam.__init__(self, learning_rate, beta_1, beta_2, epsilon, amsgrad, weight_decay, clipnorm, clipvalue, global_clipnorm, use_ema, ema_momentum, ema_overwrite_frequency, jit_compile, name, **kwargs)

86 def __init__(

87 self,

88 learning_rate=0.001,

(...)

102 **kwargs

103 ):

--> 104 super().__init__(

105 name=name,

106 weight_decay=weight_decay,

107 clipnorm=clipnorm,

108 clipvalue=clipvalue,

109 global_clipnorm=global_clipnorm,

110 use_ema=use_ema,

111 ema_momentum=ema_momentum,

112 ema_overwrite_frequency=ema_overwrite_frequency,

113 jit_compile=jit_compile,

114 **kwargs

115 )

116 self._learning_rate = self._build_learning_rate(learning_rate)

117 self.beta_1 = beta_1

File D:\anaconda3\lib\site-packages\keras\optimizers\optimizer.py:1087, in Optimizer.__init__(self, name, weight_decay, clipnorm, clipvalue, global_clipnorm, use_ema, ema_momentum, ema_overwrite_frequency, jit_compile, **kwargs)

1072 def __init__(

1073 self,

1074 name,

(...)

1083 **kwargs,

1084 ):

1085 """Create a new Optimizer."""

-> 1087 super().__init__(

1088 name,

1089 weight_decay,

1090 clipnorm,

1091 clipvalue,

1092 global_clipnorm,

1093 use_ema,

1094 ema_momentum,

1095 ema_overwrite_frequency,

1096 jit_compile,

1097 **kwargs,

1098 )

1099 self._distribution_strategy = tf.distribute.get_strategy()

File D:\anaconda3\lib\site-packages\keras\optimizers\optimizer.py:105, in _BaseOptimizer.__init__(self, name, weight_decay, clipnorm, clipvalue, global_clipnorm, use_ema, ema_momentum, ema_overwrite_frequency, jit_compile, **kwargs)

103 self._variables = []

104 self._create_iteration_variable()

--> 105 self._process_kwargs(kwargs)

File D:\anaconda3\lib\site-packages\keras\optimizers\optimizer.py:134, in _BaseOptimizer._process_kwargs(self, kwargs)

132 for k in kwargs:

133 if k in legacy_kwargs:

--> 134 raise ValueError(

135 f"{k} is deprecated in the new Keras optimizer, please"

136 "check the docstring for valid arguments, or use the "

137 "legacy optimizer, e.g., "

138 f"tf.keras.optimizers.legacy.{self.__class__.__name__}."

139 )

140 else:

141 raise TypeError(

142 f"{k} is not a valid argument, kwargs should be empty "

143 " for `optimizer_experimental.Optimizer`."

144 )

ValueError: decay is deprecated in the new Keras optimizer, pleasecheck the docstring for valid arguments, or use the legacy optimizer, e.g., tf.keras.optimizers.legacy.Adam.

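The traceback shows that the error originates at line 15:

opt = Adam(lr=0.0001, beta_1=0.9, beta_2=0.999, decay=0.01) # configure the optimizer

The new Keras optimizer no longer accepts the decay argument, so this call raises a ValueError. As the error message suggests, one fix is to switch to the legacy optimizer, tf.keras.optimizers.legacy.Adam, which still accepts decay.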

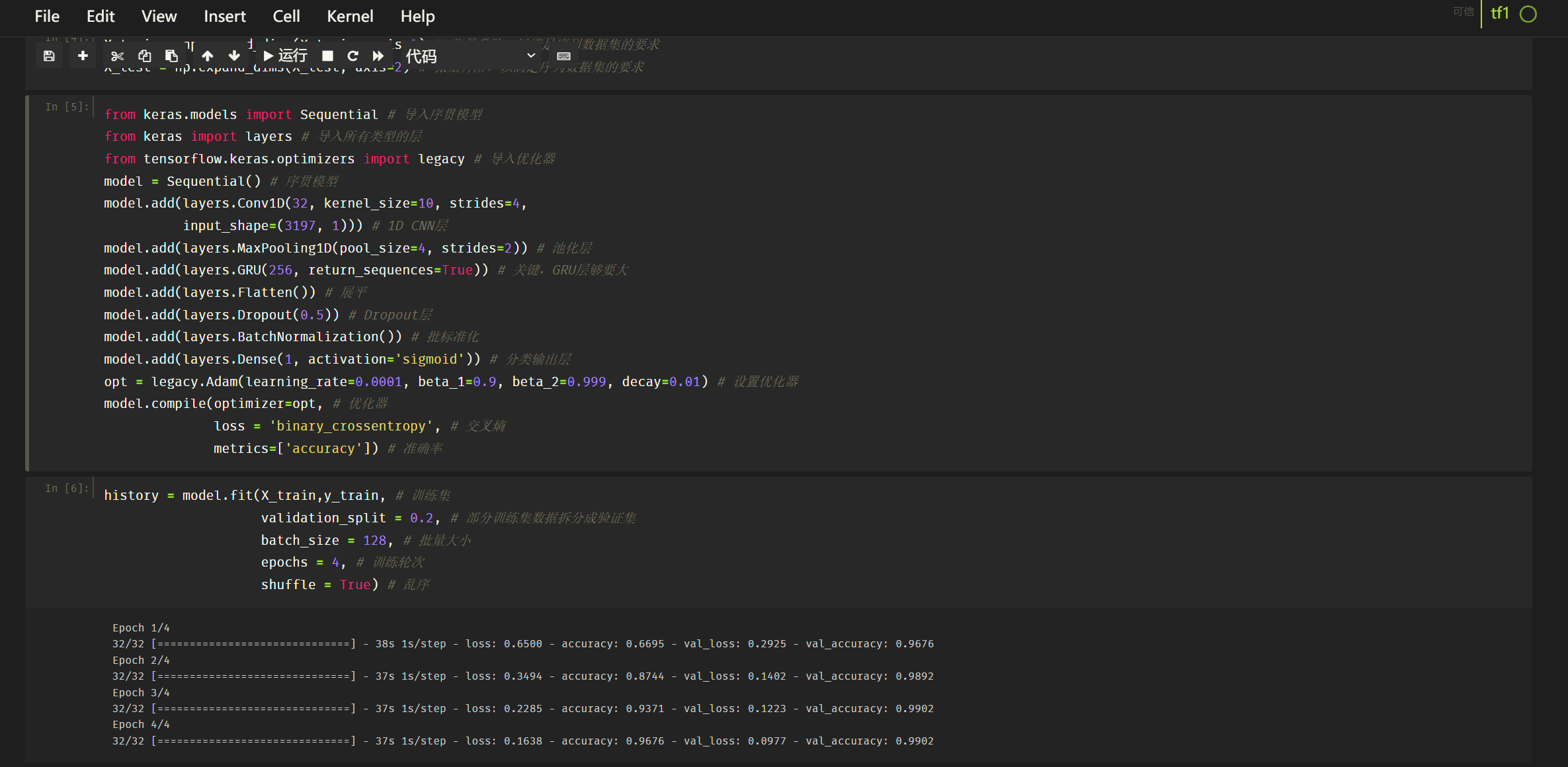
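To make the failure easier to follow, here is a minimal pure-Python sketch of the keyword check that the traceback shows in _BaseOptimizer._process_kwargs. It is a simplified imitation, not the Keras source: the function name process_kwargs and the contents of LEGACY_KWARGS are assumptions made for illustration (the traceback does not show the real set). In the traceback, the missing space in "pleasecheck" appears to come from two adjacent string literals being joined without a separator; the sketch adds the space.

```python
# Simplified imitation of the check seen in the traceback (not the Keras source).
# Assumption: the real set of legacy keyword names is not shown in the traceback;
# only "decay" is known from the error message.
LEGACY_KWARGS = {"decay"}


def process_kwargs(optimizer_name: str, kwargs: dict) -> None:
    """Reject leftover keyword arguments the way the traceback suggests."""
    for key in kwargs:
        if key in LEGACY_KWARGS:
            # Known legacy argument: point the user at the legacy optimizer.
            raise ValueError(
                f"{key} is deprecated in the new Keras optimizer, please "
                "check the docstring for valid arguments, or use the "
                f"legacy optimizer, e.g., tf.keras.optimizers.legacy.{optimizer_name}."
            )
        # Anything else is simply an unknown argument.
        raise TypeError(f"{key} is not a valid argument, kwargs should be empty.")


if __name__ == "__main__":
    try:
        process_kwargs("Adam", {"decay": 0.01})
    except ValueError as exc:
        print("ValueError:", exc)
```

The point of the sketch is that decay never reaches Adam's own parameters: it falls through into **kwargs, and the base class rejects it there.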

Solution:

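1. Change the optimizer import to from tensorflow.keras.optimizers import legacy

2. Replace the Adam() call with legacy.Adam(), and rename the learning-rate argument from lr to learning_rate, the current name of that argument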

from keras import layers # import the layer classes

from keras.models import Model # import the Model class

from tensorflow.keras.optimizers import legacy # import the legacy optimizers (provides legacy.Adam)

input = layers.Input(shape=(3197, 1)) # Input

# Build the model with the functional API

x = layers.Conv1D(32, kernel_size=10, strides=4)(input)

x = layers.MaxPooling1D(pool_size=4, strides=2)(x)

x = layers.GRU(256, return_sequences=True)(x)

x = layers.Flatten()(x)

x = layers.Dropout(0.5)(x)

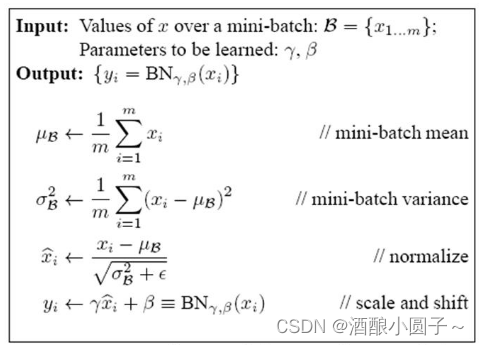

x = layers.BatchNormalization()(x)

output = layers.Dense(1, activation='sigmoid')(x) # Output

model = Model(input, output)

model.summary() # print the model summary

opt = legacy.Adam(learning_rate=0.0001, beta_1=0.9, beta_2=0.999, decay=0.01) # configure the legacy optimizer

model.compile(optimizer=opt, # optimizer

loss='binary_crossentropy', # binary cross-entropy loss

metrics=['accuracy']) # accuracy

With these changes, the model compiles without the error and training can proceed normally.
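For readers who would rather stay on the non-legacy optimizer, the following is a hedged sketch of an alternative, not something the original fix uses. It assumes that the legacy decay argument applies inverse time decay per optimizer step, lr / (1 + decay * step), which is my understanding of the legacy behaviour. The helper names legacy_decayed_lr and make_adam_with_schedule are hypothetical. The second helper imports TensorFlow lazily and relies on tf.keras.optimizers.schedules.InverseTimeDecay being available in the installed version.

```python
def legacy_decayed_lr(initial_lr: float, decay: float, step: int) -> float:
    """Learning rate at a given step under inverse time decay (assumed legacy behaviour)."""
    return initial_lr / (1.0 + decay * step)


def make_adam_with_schedule(initial_lr: float = 0.0001, decay: float = 0.01):
    """Build a non-legacy Adam whose schedule mirrors the assumed legacy decay."""
    # Imported lazily so the pure-Python helper above runs without TensorFlow.
    import tensorflow as tf

    schedule = tf.keras.optimizers.schedules.InverseTimeDecay(
        initial_learning_rate=initial_lr,
        decay_steps=1,  # decay once per optimizer step
        decay_rate=decay,
    )
    return tf.keras.optimizers.Adam(
        learning_rate=schedule, beta_1=0.9, beta_2=0.999
    )


if __name__ == "__main__":
    # Show how quickly decay=0.01 shrinks the learning rate from the article.
    for step in (0, 100, 1000):
        print(step, legacy_decayed_lr(0.0001, 0.01, step))
```

Under that assumption, decay=0.01 halves the learning rate after 100 steps, so it is worth checking that such a fast decay is intended before carrying the value over. If it is, opt = make_adam_with_schedule() could be passed to model.compile in place of legacy.Adam.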
