```python
from torch.optim.lr_scheduler import MultiStepLR
```

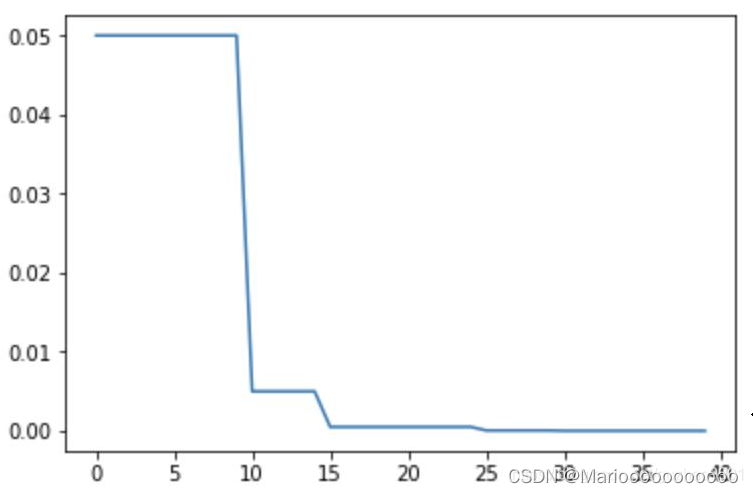

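In short, it adjusts the learning rate in stages: each time the epoch count reaches one of the given milestones, the learning rate is multiplied by `gamma`.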
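Written as a formula, this is a minimal sketch that assumes `scheduler.step()` is called once per epoch and the milestones are distinct. Here $t$ is the epoch index, $\mathrm{lr}_0$ the initial learning rate and $\mathcal{M}$ the set of milestones. The second part works through the values used in the usage example ($\mathrm{lr}_0 = 0.05$, $\gamma = 0.1$, $\mathcal{M} = \{10, 15, 25, 30\}$):

$$
\mathrm{lr}_t = \mathrm{lr}_0 \cdot \gamma^{\,\left|\{\, m \in \mathcal{M} : m \le t \,\}\right|}
$$

$$
\mathrm{lr}_t =
\begin{cases}
0.05, & 0 \le t < 10 \\
0.005, & 10 \le t < 15 \\
0.0005, & 15 \le t < 25 \\
0.00005, & 25 \le t < 30 \\
0.000005, & t \ge 30
\end{cases}
$$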

- Usage:

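```python
from torch import optim
from torch.optim import lr_scheduler

model = ANet(classes=5)  # load the model (ANet is the author's own network, not defined here)

optimizer = optim.SGD(params=model.parameters(), lr=0.05)  # use SGD as the optimizer

# At the specified epochs, here [10, 15, 25, 30], decay the learning rate: lr = lr * gamma
scheduler = lr_scheduler.MultiStepLR(optimizer, milestones=[10, 15, 25, 30], gamma=0.1)
```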
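The snippet above only constructs the scheduler. The following is a minimal, hedged sketch of how it might be driven in a training loop. It assumes a hypothetical stand-in model (`nn.Linear(10, 5)` in place of `ANet`), random dummy data, and 35 epochs with one optimizer step per epoch. Following current PyTorch convention, `scheduler.step()` is called once per epoch, after `optimizer.step()`. The list `lrs` records the learning rate actually used in each epoch.

```python
import torch
from torch import nn, optim
from torch.optim import lr_scheduler

# Hypothetical stand-in for ANet(classes=5): any nn.Module works here
model = nn.Linear(10, 5)
optimizer = optim.SGD(model.parameters(), lr=0.05)
scheduler = lr_scheduler.MultiStepLR(optimizer, milestones=[10, 15, 25, 30], gamma=0.1)

loss_fn = nn.CrossEntropyLoss()
lrs = []  # learning rate used during each epoch

for epoch in range(35):
    lrs.append(optimizer.param_groups[0]["lr"])
    # One dummy step on random data, standing in for a real epoch of training
    x = torch.randn(8, 10)
    y = torch.randint(0, 5, (8,))
    optimizer.zero_grad()
    loss = loss_fn(model(x), y)
    loss.backward()
    optimizer.step()
    # Advance the schedule once per epoch, after optimizer.step()
    scheduler.step()
```

Reading the learning rate from `optimizer.param_groups` shows the value the optimizer really used. `scheduler.get_last_lr()` reports the same value after each `step()`.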

The figure shows this more intuitively.
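The staircase shape of the schedule can also be listed as text. The helper below is a hypothetical pure-Python sketch, not part of PyTorch. It assumes distinct, positive milestones and returns each constant stretch of the schedule as `(first_epoch, last_epoch, lr)` for a run of `num_epochs` epochs.

```python
def lr_segments(base_lr, milestones, gamma, num_epochs):
    """Return (first_epoch, last_epoch, lr) for each constant stretch of a MultiStepLR-style schedule.

    Hypothetical helper; assumes milestones are distinct positive integers.
    """
    # Segment boundaries: epoch 0, every milestone inside the run, and the end of the run
    bounds = [0] + sorted(m for m in milestones if 0 < m < num_epochs) + [num_epochs]
    segments = []
    for k, (start, stop) in enumerate(zip(bounds, bounds[1:])):
        # After k milestones have been passed, the rate is base_lr * gamma**k
        segments.append((start, stop - 1, base_lr * gamma ** k))
    return segments


for first, last, lr in lr_segments(0.05, [10, 15, 25, 30], 0.1, 35):
    print(f"epochs {first:>2}-{last:>2}: lr = {lr:.0e}")
```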

Reference:

https://blog.csdn.net/guzhao9901/article/details/116484887