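Case Demonstrations

Case demonstration: Syslogtcp + Mem + Logger

Syslogtcp: syslog is widely used for system logging. Syslog messages can be written to a local file or sent over the network to a server that receives syslog. Such a server can store the syslog messages of many devices in one place, or parse their content and act on it. This source receives syslog data over a TCP port.

mem: events are buffered in a memory channel.

logger: the output destination is the logger sink.

Configuration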

[root@qianfeng01 flumeconf]# vi syslogtcp-logger.conf

a1.sources = r1

a1.channels = c1

a1.sinks = s1

a1.sources.r1.type=syslogtcp

a1.sources.r1.host = qianfeng01

a1.sources.r1.port = 6666

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.channels.c1.keep-alive=3

a1.channels.c1.byteCapacityBufferPercentage=20

a1.channels.c1.byteCapacity=800000

a1.sinks.s1.type=logger

a1.sinks.s1.maxBytesToLog=16

a1.sources.r1.channels=c1

a1.sinks.s1.channel=c1
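
The memory channel refuses to start when `transactionCapacity` is larger than `capacity`, so it can be worth comparing the two values before launching the agent. The following is a minimal sketch, assuming the properties file uses the `key=value` layout shown above; `get_prop` and `check_channel_capacity` are hypothetical helper names, not part of Flume.

```shell
# Hypothetical helper: print the value of one key from a Flume properties file.
# Accepts both "key=value" and "key = value"; the last occurrence wins.
get_prop() {
  # $1 = properties file, $2 = key
  awk -F= -v key="$2" '
    {
      k = $1
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      if (k == key) {
        v = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", v)
        found = v
      }
    }
    END { if (found != "") print found }
  ' "$1"
}

# Hypothetical check: succeed only if transactionCapacity <= capacity
# for the given agent and channel names.
check_channel_capacity() {
  # $1 = properties file, $2 = agent name, $3 = channel name
  cap=$(get_prop "$1" "$2.channels.$3.capacity")
  tx=$(get_prop "$1" "$2.channels.$3.transactionCapacity")
  if [ -z "$cap" ] || [ -z "$tx" ]; then
    echo "capacity or transactionCapacity not set; Flume defaults apply"
    return 0
  fi
  if [ "$tx" -le "$cap" ]; then
    echo "ok: transactionCapacity=$tx <= capacity=$cap"
  else
    echo "error: transactionCapacity=$tx > capacity=$cap"
    return 1
  fi
}
```

With the file from this case, the call would be `check_channel_capacity ./syslogtcp-logger.conf a1 c1`.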

Start the agent

[root@qianfeng01 flumeconf]# flume-ng agent -c ../conf -f ./syslogtcp-logger.conf -n a1 -Dflume.root.logger=INFO,console

Test (nc must be installed first)

[root@qianfeng01 ~]# yum -y install nmap-ncat

[root@qianfeng01 ~]# echo "hello world" | nc qianfeng01 6666

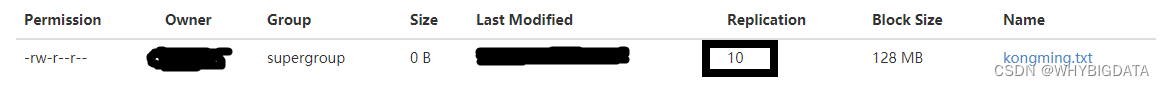
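
The test above sends a bare string rather than a syslog-formatted line, so Flume may log a warning that the event was built from invalid syslog data while still delivering it. For comparison, here is a sketch of how an RFC 3164-style line could be assembled; its header starts with `<PRI>`, where PRI is facility * 8 + severity. The helper names `syslog_pri` and `syslog_line` are hypothetical, and the timestamp is passed in as an argument so the output is predictable.

```shell
# Priority value as defined by the syslog protocol: facility * 8 + severity.
syslog_pri() {
  # $1 = facility number (1 = user-level), $2 = severity number (5 = notice)
  echo $(( $1 * 8 + $2 ))
}

# Hypothetical helper: build one RFC 3164-style line.
syslog_line() {
  # $1 = facility, $2 = severity, $3 = timestamp ("Mmm dd hh:mm:ss"),
  # $4 = hostname, $5 = tag, $6 = message text
  printf '<%s>%s %s %s: %s\n' "$(syslog_pri "$1" "$2")" "$3" "$4" "$5" "$6"
}
```

A call such as `syslog_line 1 5 "$(LC_ALL=C date '+%b %e %H:%M:%S')" qianfeng01 demo "hello world" | nc qianfeng01 6666` would then send a line beginning with `<13>` to the source configured above.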

Case demonstration: Syslogtcp + Mem + HDFS

Syslogtcp: same as above

mem: events pass through a memory channel

hdfs: the destination is HDFS

Configuration

[root@qianfeng01 flumeconf]# vi syslogtcp-hdfs.conf

a1.sources = r1

a1.channels = c1

a1.sinks = s1

a1.sources.r1.type=syslogtcp

a1.sources.r1.host = qianfeng01

a1.sources.r1.port = 6666

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.channels.c1.keep-alive=3

a1.channels.c1.byteCapacityBufferPercentage=20

a1.channels.c1.byteCapacity=800000

a1.sinks.s1.type=hdfs

a1.sinks.s1.hdfs.path=hdfs://qianfeng01:8020/flume/%Y/%m/%d/%H%M

a1.sinks.s1.hdfs.filePrefix=flume-hdfs

a1.sinks.s1.hdfs.fileSuffix=.log

a1.sinks.s1.hdfs.inUseSuffix=.tmp

a1.sinks.s1.hdfs.rollInterval=60

a1.sinks.s1.hdfs.rollSize=1024

a1.sinks.s1.hdfs.rollCount=10

a1.sinks.s1.hdfs.idleTimeout=0

a1.sinks.s1.hdfs.batchSize=100

a1.sinks.s1.hdfs.fileType=DataStream

a1.sinks.s1.hdfs.writeFormat=Text

a1.sinks.s1.hdfs.round=true

a1.sinks.s1.hdfs.roundValue=1

a1.sinks.s1.hdfs.roundUnit=second

a1.sinks.s1.hdfs.useLocalTimeStamp=true

a1.sources.r1.channels=c1

a1.sinks.s1.channel=c1
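
With the three roll settings above, the HDFS sink closes the current file as soon as any one threshold is reached; a value of 0 disables that trigger. The decision can be sketched as follows. `should_roll` is a hypothetical helper written for illustration only; it is not a Flume command.

```shell
# Thresholds copied from the configuration above.
ROLL_INTERVAL=60   # seconds a file may stay open
ROLL_SIZE=1024     # bytes written to the file
ROLL_COUNT=10      # events written to the file

# Succeeds (exit status 0) when the open file should be rolled.
should_roll() {
  # $1 = file age in seconds, $2 = bytes written, $3 = events written
  [ "$ROLL_INTERVAL" -gt 0 ] && [ "$1" -ge "$ROLL_INTERVAL" ] && return 0
  [ "$ROLL_SIZE" -gt 0 ] && [ "$2" -ge "$ROLL_SIZE" ] && return 0
  [ "$ROLL_COUNT" -gt 0 ] && [ "$3" -ge "$ROLL_COUNT" ] && return 0
  return 1
}
```

A single short test event stays well below the size and count thresholds, so in this setup the file is closed by the 60-second interval, at which point the `.tmp` in-use suffix is dropped.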

Start the agent

[root@qianfeng01 flumeconf]# flume-ng agent -c ../conf -f ./syslogtcp-hdfs.conf -n a1 -Dflume.root.logger=INFO,console

Test

[root@qianfeng01 ~]# echo "hello world hello qianfeng" | nc qianfeng01 6666
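
Because `hdfs.useLocalTimeStamp` is true, the `%Y/%m/%d/%H%M` part of `hdfs.path` is filled in from the agent's local clock when the event is written. To find the directory an event landed in, the same pattern can be evaluated with `date`. This is a sketch under assumptions: `flume_dir_for` is a hypothetical helper, and it expects GNU `date` (`-d @SECONDS`), falling back to the BSD form (`-r SECONDS`).

```shell
# Hypothetical helper: print the HDFS directory that the path pattern
# /flume/%Y/%m/%d/%H%M resolves to for a given Unix timestamp.
flume_dir_for() {
  # $1 = Unix timestamp in seconds
  date -d "@$1" '+/flume/%Y/%m/%d/%H%M' 2>/dev/null ||
    date -r "$1" '+/flume/%Y/%m/%d/%H%M'
}
```

For the current minute, `hdfs dfs -ls "$(flume_dir_for "$(date +%s)")"` lists the files written so far. Rounding down to one second, as configured, does not change a path whose finest unit is the minute.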

Case demonstration: Syslogtcp + File + HDFS

Syslogtcp: same as above

file: a file channel is used as the transport medium

hdfs: the destination is HDFS

Configuration

[root@qianfeng01 flumeconf]# vi syslogtcp-fh.conf

a1.sources = r1

a1.channels = c1

a1.sinks = s1

a1.sources.r1.type=syslogtcp

a1.sources.r1.host = qianfeng01

a1.sources.r1.port = 6666

a1.channels.c1.type=file

a1.channels.c1.dataDirs=/root/flumedata/filechannel/data

a1.channels.c1.checkpointDir=/root/flumedata/filechannel/point

a1.channels.c1.transactionCapacity=10000

a1.channels.c1.checkpointInterval=30000

a1.channels.c1.capacity=1000000

a1.channels.c1.keep-alive=3

a1.sinks.s1.type=hdfs

a1.sinks.s1.hdfs.path=hdfs://qianfeng01:8020/flume/%Y/%m/%d/%H%M

a1.sinks.s1.hdfs.filePrefix=flume-hdfs

a1.sinks.s1.hdfs.fileSuffix=.log

a1.sinks.s1.hdfs.inUseSuffix=.tmp

a1.sinks.s1.hdfs.rollInterval=60

a1.sinks.s1.hdfs.rollSize=1024

a1.sinks.s1.hdfs.rollCount=10

a1.sinks.s1.hdfs.idleTimeout=0

a1.sinks.s1.hdfs.batchSize=100

a1.sinks.s1.hdfs.fileType=DataStream

a1.sinks.s1.hdfs.writeFormat=Text

a1.sinks.s1.hdfs.round=true

a1.sinks.s1.hdfs.roundValue=1

a1.sinks.s1.hdfs.roundUnit=second

a1.sinks.s1.hdfs.useLocalTimeStamp=true

a1.sources.r1.channels=c1

a1.sinks.s1.channel=c1
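
The file channel keeps its events and checkpoints on disk, so the two directories named above must be writable by the user running the agent. A minimal sketch, assuming you prefer to create them up front so that permission problems surface before the agent starts; `prepare_file_channel_dirs` is a hypothetical helper, and the `data` and `point` subdirectory names simply mirror the configuration above.

```shell
# Hypothetical helper: create the file channel's data and checkpoint
# directories under a base directory and confirm both are writable.
prepare_file_channel_dirs() {
  # $1 = base directory, e.g. /root/flumedata/filechannel
  for sub in data point; do
    dir="$1/$sub"
    mkdir -p "$dir" || return 1
    [ -w "$dir" ] || { echo "not writable: $dir"; return 1; }
    echo "ready: $dir"
  done
}
```

For this case the call would be `prepare_file_channel_dirs /root/flumedata/filechannel`.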

Start the agent

[root@qianfeng01 flumeconf]# flume-ng agent -c ../conf -f ./syslogtcp-fh.conf -n a1 -Dflume.root.logger=INFO,console

Test

[root@qianfeng01 ~]# echo "hello world hello qianfeng" | nc qianfeng01 6666

Case demonstration: Taildir + Memory + HDFS

Configuration

[root@qianfeng01 flumeconf]# vi taildir-hdfs.conf

a1.sources = r1

a1.channels = c1

a1.sinks = s1

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /usr/local/flume-1.9.0/flumeconf/taildir_position.json

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /usr/local/flume-1.9.0/flumedata/tails/.*log.*

a1.sources.r1.fileHeader = true

a1.sources.r1.maxBatchCount = 1000

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.sinks.s1.type=hdfs

a1.sinks.s1.hdfs.path=hdfs://qianfeng01:9820/flume/taildir/

a1.sinks.s1.hdfs.filePrefix=flume-hdfs

a1.sinks.s1.hdfs.fileSuffix=.log

a1.sinks.s1.hdfs.inUseSuffix=.tmp

a1.sinks.s1.hdfs.rollInterval=60

a1.sinks.s1.hdfs.rollSize=1024

a1.sinks.s1.hdfs.rollCount=10

a1.sinks.s1.hdfs.idleTimeout=0

a1.sinks.s1.hdfs.batchSize=100

a1.sinks.s1.hdfs.fileType=DataStream

a1.sinks.s1.hdfs.writeFormat=Text

a1.sinks.s1.hdfs.round=true

a1.sinks.s1.hdfs.roundValue=1

a1.sinks.s1.hdfs.roundUnit=second

a1.sinks.s1.hdfs.useLocalTimeStamp=true

a1.sources.r1.channels=c1

a1.sinks.s1.channel=c1
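
In a long properties file, a property key that names a source, channel, or sink which was never declared is easy to overlook. The sketch below lists such keys; it assumes the `agent.kind.name.property` key layout used above, and `find_undeclared_components` is a hypothetical helper, not a Flume tool.

```shell
# Hypothetical helper: list property keys that refer to a source, channel,
# or sink name not declared in the agent's "sources", "channels", or
# "sinks" line. Prints nothing when every name is declared.
find_undeclared_components() {
  # $1 = properties file, $2 = agent name
  awk -F= -v agent="$2" '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    {
      key = trim($1)
      n = split(key, part, ".")
      if (n < 2 || part[1] != agent) next
      kind = part[2]
      if (kind != "sources" && kind != "channels" && kind != "sinks") next
      if (n == 2) {
        # Declaration line such as "a1.sources = r1 r2".
        m = split(trim(substr($0, index($0, "=") + 1)), name, /[ \t]+/)
        for (i = 1; i <= m; i++) declared[kind "." name[i]] = 1
      } else {
        # Property line such as "a1.sources.r1.type = ...".
        seen[++count] = key
        owner[count] = kind "." part[3]
      }
    }
    END {
      for (i = 1; i <= count; i++)
        if (!(owner[i] in declared)) print seen[i]
    }
  ' "$1"
}
```

Running `find_undeclared_components ./taildir-hdfs.conf a1` before starting the agent prints any key whose component name does not appear in the declarations.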

Start the agent

[root@qianfeng01 flumeconf]# flume-ng agent -c ../conf -f ./taildir-hdfs.conf -n a1 -Dflume.root.logger=INFO,console

Test

[root@qianfeng01 tails]# echo "hello world" >>a1.log

[root@qianfeng01 tails]# echo "hello world" >>a1.log

[root@qianfeng01 tails]# echo "hello world" >>a1.log

[root@qianfeng01 tails]# echo "hello world" >>a1.log

[root@qianfeng01 tails]# echo "hello world123" >>a1.log

[root@qianfeng01 tails]# echo "hello world123" >>a2.log

[root@qianfeng01 tails]# echo "hello world123" >>a3.log

[root@qianfeng01 tails]# echo "hello world123" >>a3.csv

[root@qianfeng01 tails]# echo "hello world123" >>a3.log
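
Only the file-name part of the filegroup path is treated as a regular expression, so of the files written above, `a3.csv` is not picked up. The sketch below approximates the match with `grep -Ex` (whole-name match, extended regular expressions); Flume itself uses Java regular expressions, which behave the same for a pattern as simple as `.*log.*`. `matches_filegroup` is a hypothetical helper.

```shell
# Hypothetical helper: report whether a file name matches a filegroup
# pattern as a whole.
matches_filegroup() {
  # $1 = regular expression, $2 = file name (without directory)
  if printf '%s\n' "$2" | grep -Eqx -- "$1"; then
    echo "$2: tailed"
  else
    echo "$2: ignored"
  fi
}

# The file names used in the test above.
for f in a1.log a2.log a3.log a3.csv; do
  matches_filegroup '.*log.*' "$f"
done
```

The loop reports the three `.log` files as tailed and `a3.csv` as ignored.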
