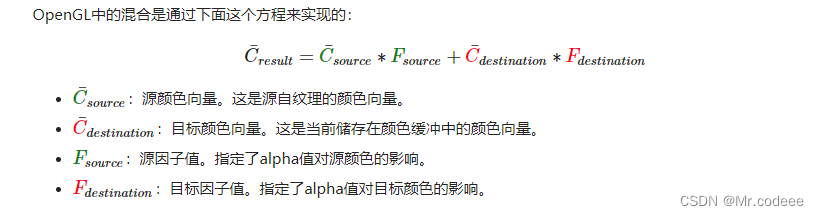

1. Introduction

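Blending is the technique commonly used to make objects transparent. A transparent object (or a transparent part of one) is not a solid color. Its color is a combination, at varying intensities, of the object's own color and the colors of the objects behind it.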

2. Discarding Fragments

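Sometimes we only want to show some parts of a texture and ignore the rest, as with grass. The grass texture below is exactly like that. Every texel is either fully opaque (alpha 1.0) or fully transparent (alpha 0.0), with nothing in between.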

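So when we add vegetation such as grass to a scene, we don't want to see the square image of the grass. We want to show only the grass itself and see through the rest of the image. To do that, we discard the fragments that show the transparent parts of the texture, so that they are never stored in the color buffer.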

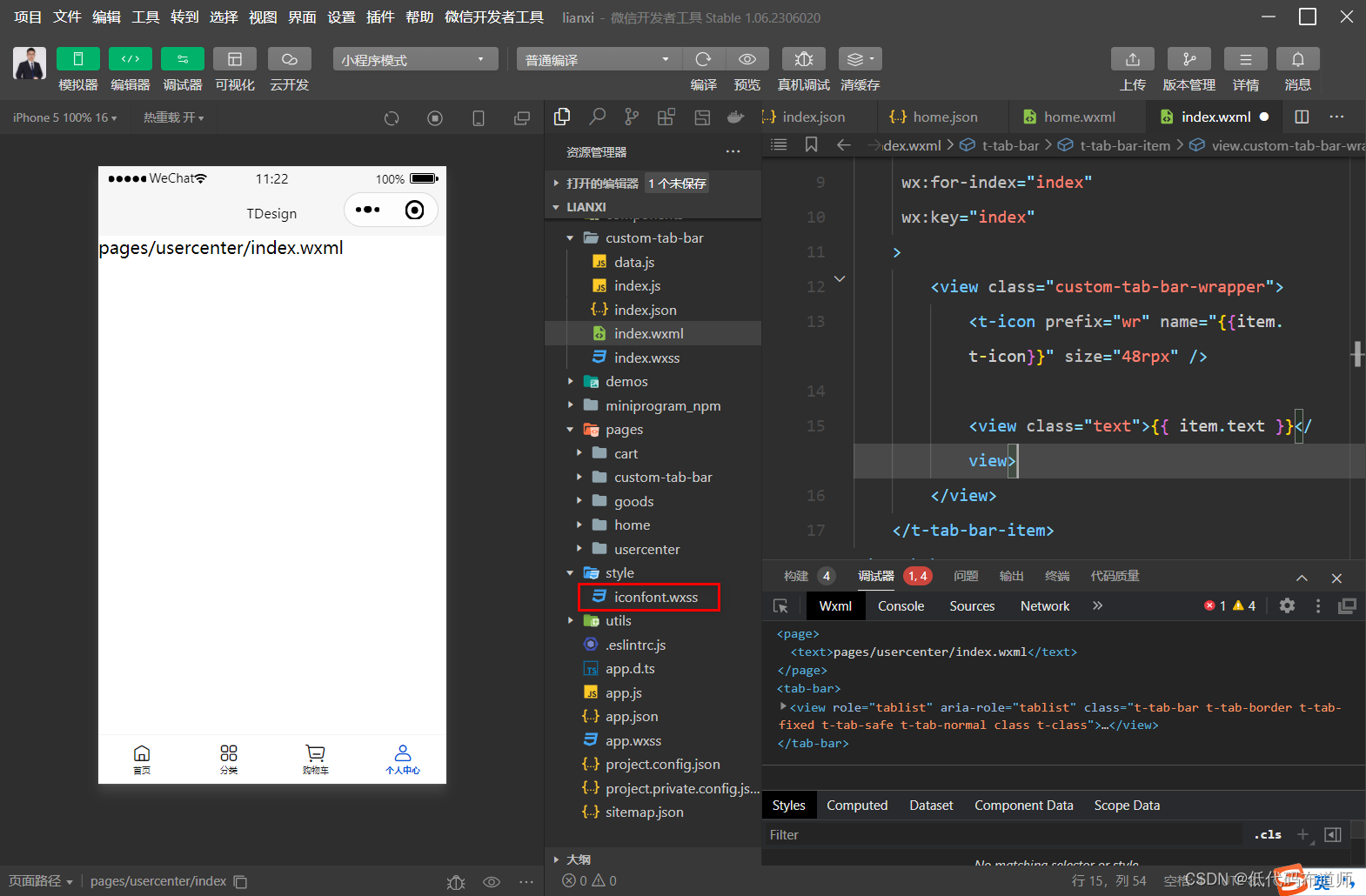

Rendered without any alpha handling, the program looks like this:

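This happens because, by default, OpenGL does not know what to do with alpha values, let alone when to discard fragments. We have to handle this ourselves. GLSL provides the `discard` command. Once it is called, the fragment is guaranteed not to be processed any further, so it never reaches the color buffer.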

```glsl
#version 330 core
out vec4 FragColor;

in vec2 TexCoords;

uniform sampler2D texture1;

void main()
{
    vec4 texColor = texture(texture1, TexCoords);
    // Throw away (almost) fully transparent texels instead of writing them
    if (texColor.a < 0.1)
        discard;
    FragColor = texColor;
}
```
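To make the effect of `discard` concrete, here is a CPU-side C++ sketch (not OpenGL code) that applies the same alpha test as the shader to a row of fragments. The `RGBA` type and the function names are hypothetical and chosen only for illustration. The 0.1 threshold is taken from the shader above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal RGBA color, components in [0, 1] (illustrative type).
struct RGBA { float r, g, b, a; };

// Mirrors the shader's "if (texColor.a < 0.1) discard;":
// returns true if the fragment survives and may be written.
inline bool passesAlphaTest(const RGBA& texColor, float threshold = 0.1f) {
    return !(texColor.a < threshold);
}

// Writes every surviving fragment i into colorBuffer[i]; discarded fragments
// leave the previous buffer contents untouched (as discard does).
// Assumes colorBuffer.size() >= fragments.size().
inline int shadeFragments(const std::vector<RGBA>& fragments,
                          std::vector<RGBA>& colorBuffer) {
    int written = 0;
    for (std::size_t i = 0; i < fragments.size(); ++i) {
        if (!passesAlphaTest(fragments[i])) continue;  // "discard"
        colorBuffer[i] = fragments[i];
        ++written;
    }
    return written;
}
```

The fully transparent grass texels never overwrite the background, which is why the quad's square outline disappears.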

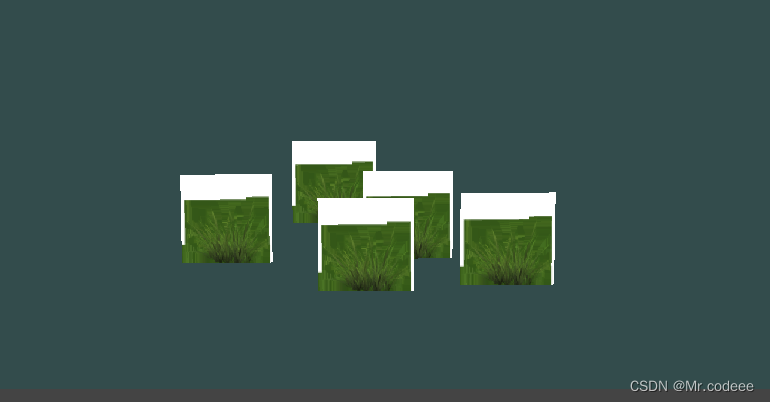

3. Blending

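Discarding fragments works well, but it cannot render semi-transparent images: a fragment is either drawn completely or discarded completely. To render images with multiple levels of transparency, we need to enable blending.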

Blending is turned on by enabling `GL_BLEND`:

```cpp
glEnable(GL_BLEND);
```

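With blending enabled, OpenGL combines two colors: the color output by the fragment shader (the source color) and the color already stored in the color buffer (the destination color). With the default blend equation the result is:

$$\bar{C}_{result} = \bar{C}_{source} \cdot F_{source} + \bar{C}_{destination} \cdot F_{destination}$$

Here $F_{source}$ and $F_{destination}$ are the source and destination factors. The following function takes two parameters that set these factors: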

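```cpp
glBlendFunc(GLenum sfactor, GLenum dfactor)
```

| Option | Factor value |
|---|---|
| `GL_ZERO` | $0$ |
| `GL_ONE` | $1$ |
| `GL_SRC_COLOR` | the source color vector $\bar{C}_{source}$ |
| `GL_ONE_MINUS_SRC_COLOR` | $1 - \bar{C}_{source}$ |
| `GL_DST_COLOR` | the destination color vector $\bar{C}_{destination}$ |
| `GL_ONE_MINUS_DST_COLOR` | $1 - \bar{C}_{destination}$ |
| `GL_SRC_ALPHA` | the alpha component of $\bar{C}_{source}$ |
| `GL_ONE_MINUS_SRC_ALPHA` | $1 -$ the alpha component of $\bar{C}_{source}$ |
| `GL_DST_ALPHA` | the alpha component of $\bar{C}_{destination}$ |
| `GL_ONE_MINUS_DST_ALPHA` | $1 -$ the alpha component of $\bar{C}_{destination}$ |
| `GL_CONSTANT_COLOR` | the constant color vector $\bar{C}_{constant}$ (set with `glBlendColor`) |
| `GL_ONE_MINUS_CONSTANT_COLOR` | $1 - \bar{C}_{constant}$ |
| `GL_CONSTANT_ALPHA` | the alpha component of $\bar{C}_{constant}$ |
| `GL_ONE_MINUS_CONSTANT_ALPHA` | $1 -$ the alpha component of $\bar{C}_{constant}$ |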
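To see how the factors in the table plug into the additive blend equation, here is a small CPU-side C++ sketch that evaluates it for a subset of the options. `Factor` and `RGBA` are hypothetical illustration types, not the real OpenGL enums. The destination-color and constant-color factors, as well as clamping, are omitted to keep it short.

```cpp
#include <cassert>
#include <cmath>

struct RGBA { float r, g, b, a; };

// Hypothetical stand-ins for a subset of the glBlendFunc options.
enum class Factor { Zero, One, SrcColor, OneMinusSrcColor,
                    SrcAlpha, OneMinusSrcAlpha, DstAlpha, OneMinusDstAlpha };

// Returns the per-channel weight that a factor represents.
inline RGBA factorValue(Factor f, const RGBA& src, const RGBA& dst) {
    switch (f) {
        case Factor::Zero:             return {0.0f, 0.0f, 0.0f, 0.0f};
        case Factor::One:              return {1.0f, 1.0f, 1.0f, 1.0f};
        case Factor::SrcColor:         return src;
        case Factor::OneMinusSrcColor: return {1 - src.r, 1 - src.g, 1 - src.b, 1 - src.a};
        case Factor::SrcAlpha:         return {src.a, src.a, src.a, src.a};
        case Factor::OneMinusSrcAlpha: { float k = 1 - src.a; return {k, k, k, k}; }
        case Factor::DstAlpha:         return {dst.a, dst.a, dst.a, dst.a};
        case Factor::OneMinusDstAlpha: { float k = 1 - dst.a; return {k, k, k, k}; }
    }
    return {0.0f, 0.0f, 0.0f, 0.0f};
}

// Additive blend equation: result = src * Fsrc + dst * Fdst (no clamping).
inline RGBA blend(const RGBA& src, const RGBA& dst, Factor sf, Factor df) {
    RGBA fs = factorValue(sf, src, dst);
    RGBA fd = factorValue(df, src, dst);
    return {src.r * fs.r + dst.r * fd.r,
            src.g * fs.g + dst.g * fd.g,
            src.b * fs.b + dst.b * fd.b,
            src.a * fs.a + dst.a * fd.a};
}
```

Take a green source with alpha 0.6 over an opaque red destination. With `GL_SRC_ALPHA` / `GL_ONE_MINUS_SRC_ALPHA` the result is 0.6 × green + 0.4 × red, i.e. (0.4, 0.6, 0.0). The alpha channel is blended with the same factors: 0.6 × 0.6 + 1.0 × 0.4 = 0.76.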

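For ordinary alpha blending, such as drawing a semi-transparent square over the square behind it, we use the alpha of the source color vector as the source factor and $1 - \alpha$ as the destination factor. This gives the following `glBlendFunc` call: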

```cpp
glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);
```

4. Example

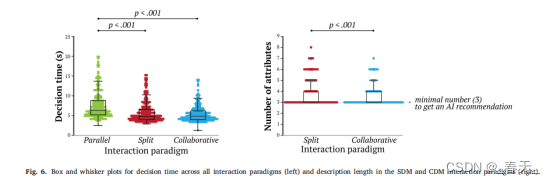

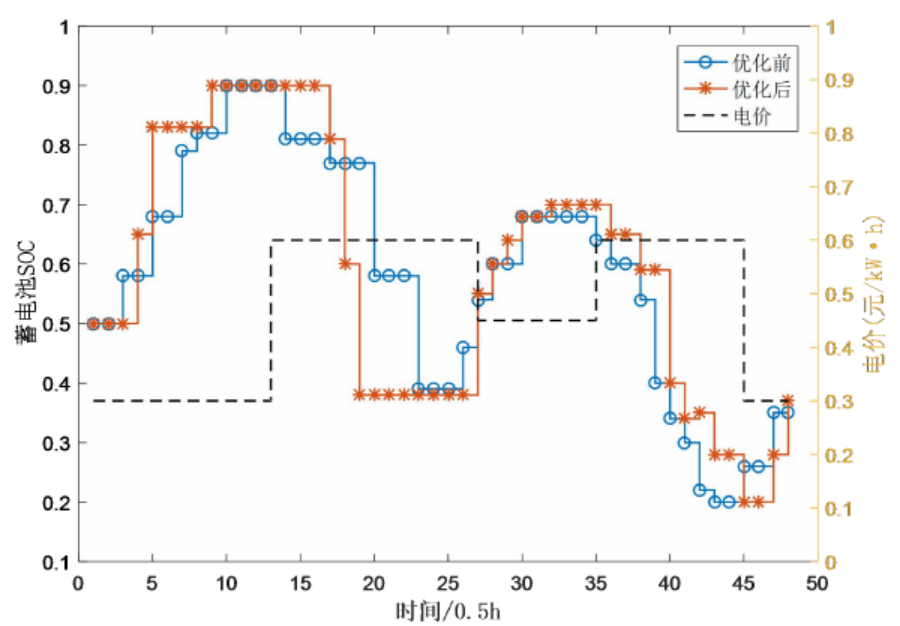

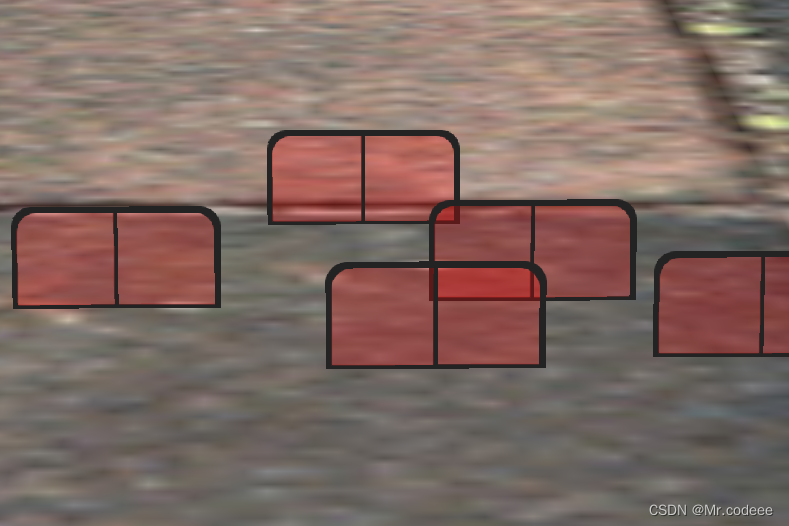

The transparent parts of the frontmost window hide the windows behind it.

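This happens because depth testing and blending do not mix well. When a fragment is written to the depth buffer, the depth test does not care whether the fragment is transparent. Transparent parts are therefore written to the depth buffer just like any other value, and the window's entire quad takes part in the depth test regardless of transparency. So even where the transparent parts should reveal the windows behind them, the depth test discards those windows' fragments.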
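The problem can be reproduced for a single pixel. The C++ sketch below simulates depth testing (`GL_LESS`, depth writes enabled) together with `GL_SRC_ALPHA` / `GL_ONE_MINUS_SRC_ALPHA` blending. The `Fragment` type and `drawPixel` function are hypothetical. The simulation shows that the result depends on draw order: if the near, semi-transparent window is drawn first, its depth value causes the far window's fragment to be rejected.

```cpp
#include <cassert>
#include <vector>

struct RGBA { float r, g, b, a; };

// One fragment covering the pixel: its color and its depth (smaller = nearer).
struct Fragment { RGBA color; float depth; };

// Simulates GL_LESS depth testing with depth writes, plus
// glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA), for one pixel.
inline RGBA drawPixel(RGBA clearColor, const std::vector<Fragment>& drawOrder) {
    RGBA color = clearColor;
    float depth = 1.0f;  // depth buffer cleared to 1.0
    for (const Fragment& f : drawOrder) {
        if (!(f.depth < depth)) continue;  // depth test fails: fragment dropped
        float a = f.color.a;
        color = {f.color.r * a + color.r * (1 - a),
                 f.color.g * a + color.g * (1 - a),
                 f.color.b * a + color.b * (1 - a),
                 f.color.a * a + color.a * (1 - a)};
        depth = f.depth;  // written even though the fragment is semi-transparent
    }
    return color;
}
```

Drawing the half-transparent glass before the red window behind it leaves only a grey tint over the background. Drawing back-to-front gives the expected red seen through the glass.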

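So we cannot render the windows in arbitrary order and expect the depth buffer to sort everything out. This is what makes blending a little tricky. To make sure each window shows the windows behind it, the windows farther back must be drawn first. In other words, we have to sort the windows ourselves, from farthest to nearest, and render them in that order.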

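```cpp
// Key: distance from the camera. std::map keeps its keys in ascending order,
// so iterating it in reverse yields the windows from farthest to nearest.
map<float, QVector3D> sorted;
foreach (auto item, windows) {
    float distance = m_camera.Position.distanceToPoint(item);
    sorted[distance] = item;
}
```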
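Because the camera moves, this ordering has to be rebuilt whenever the camera position changes. Below is a standalone sketch of the same back-to-front sort using only the standard library. `Vec3`, `distanceBetween` and `backToFront` are hypothetical names. It uses `std::multimap` instead of `std::map` on purpose: with `std::map`, two windows at exactly the same distance share one key, and one of them is silently dropped.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <vector>

struct Vec3 { float x, y, z; };

inline float distanceBetween(const Vec3& a, const Vec3& b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Returns the window positions ordered farthest-to-nearest, i.e. the order
// in which they should be drawn with blending enabled.
inline std::vector<Vec3> backToFront(const std::vector<Vec3>& windows, const Vec3& camera) {
    std::multimap<float, Vec3> sorted;  // ascending by distance, duplicates kept
    for (const Vec3& w : windows)
        sorted.emplace(distanceBetween(camera, w), w);
    std::vector<Vec3> order;
    for (auto it = sorted.rbegin(); it != sorted.rend(); ++it)  // farthest first
        order.push_back(it->second);
    return order;
}
```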

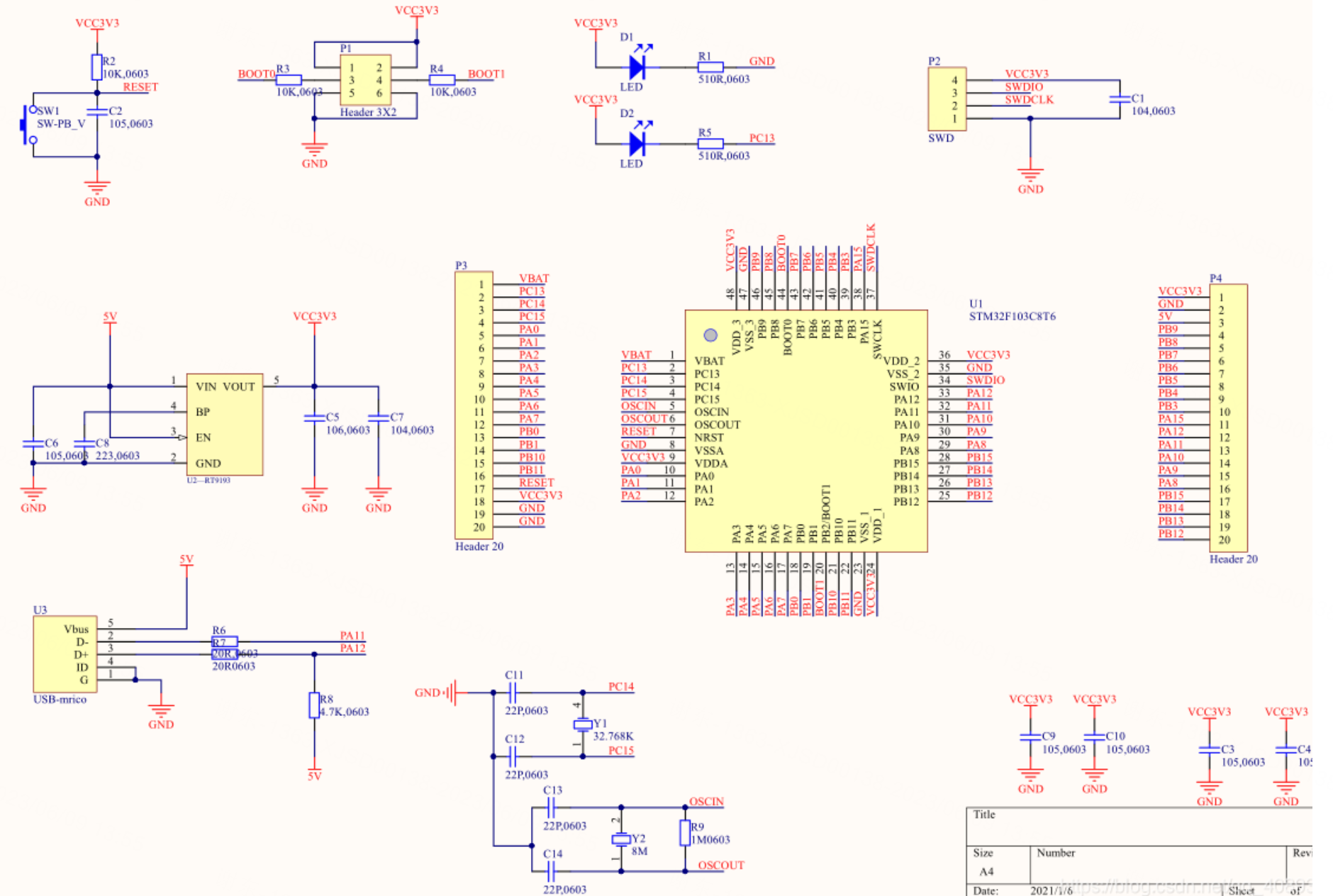

5. Source Code

```cpp
#include "axbopemglwidget.h"
#include "vertices.h"

const unsigned int timeOutmSec = 50;
QVector3D viewInitPos(0.0f, 5.0f, 20.0f);
float _near = 0.1f, _far = 100.0f;
QMatrix4x4 model;
QMatrix4x4 view;
QMatrix4x4 projection;
QPoint lastPos;
vector<QVector3D> windows;
map<float, QVector3D> sorted;
```

```cpp
AXBOpemglWidget::AXBOpemglWidget(QWidget *parent) : QOpenGLWidget(parent)
{
    connect(&m_timer, SIGNAL(timeout()), this, SLOT(on_timeout()));
    m_timer.start(timeOutmSec);
    m_time.start();
    m_camera.Position = viewInitPos;
    setFocusPolicy(Qt::StrongFocus);
    setMouseTracking(true);

    windows.push_back(QVector3D(-1.5f, 0.0f, -0.48f));
    windows.push_back(QVector3D( 1.5f, 0.0f,  0.51f));
    windows.push_back(QVector3D( 0.0f, 0.0f,  0.7f));
    windows.push_back(QVector3D(-0.3f, 0.0f, -2.3f));
    windows.push_back(QVector3D( 0.5f, 0.0f, -0.6f));

    foreach (auto item, windows) {
        float distance = m_camera.Position.distanceToPoint(item);
        sorted[distance] = item;
    }
}
```

```cpp
AXBOpemglWidget::~AXBOpemglWidget()
{
    for (auto iter = m_Models.begin(); iter != m_Models.end(); iter++) {
        ModelInfo *modelInfo = &iter.value();
        delete modelInfo->model;
    }
}

void AXBOpemglWidget::loadModel(string path)
{
    static int i = 0;
    makeCurrent();
    Model *_model = new Model(QOpenGLContext::currentContext()->versionFunctions<QOpenGLFunctions_3_3_Core>(),
                              path.c_str());
    //m_camera.Position=cameraPosInit(_model->m_maxY,_model->m_minY);
    // Increment the counter only once, so the map key and the display name share the same index
    int index = i++;
    m_Models["Julian" + QString::number(index)] =
        ModelInfo{_model, QVector3D(0, 0 - _model->m_minY, 0),
                  0.0, 0.0, 0.0, false, QString::fromLocal8Bit("张三") + QString::number(index)};
    doneCurrent();
}
```

```cpp
void AXBOpemglWidget::initializeGL()
{
    initializeOpenGLFunctions();
    // Compile and link the shader program
    bool success;
    m_ShaderProgram.addShaderFromSourceFile(QOpenGLShader::Vertex, ":/shaders/shaders/shapes.vert");
    m_ShaderProgram.addShaderFromSourceFile(QOpenGLShader::Fragment, ":/shaders/shaders/shapes.frag");
    success = m_ShaderProgram.link();
    if (!success) qDebug() << "ERR:" << m_ShaderProgram.log();

    m_BoxDiffuseTex = new QOpenGLTexture(QImage(":/images/images/container2.png").mirrored());
    m_WindowDiffuseTex = new QOpenGLTexture(QImage(":/images/images/blending_transparent_window.png"));
    m_PlaneDiffuseTex = new QOpenGLTexture(QImage(":/images/images/wall.jpg").mirrored());

    // Standard alpha blending: result = src * srcAlpha + dst * (1 - srcAlpha)
    glEnable(GL_BLEND);
    glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

    m_PlaneMesh = processMesh(planeVertices, 6, m_PlaneDiffuseTex->textureId());
    m_WindowMesh = processMesh(transparentVertices, 6, m_WindowDiffuseTex->textureId());
}

void AXBOpemglWidget::resizeGL(int w, int h)
{
    Q_UNUSED(w);
    Q_UNUSED(h);
}
```

```cpp
void AXBOpemglWidget::paintGL()
{
    model.setToIdentity();
    view.setToIdentity();
    projection.setToIdentity();
    // float time=m_time.elapsed()/50.0;
    projection.perspective(m_camera.Zoom, (float)width() / height(), _near, _far);
    view = m_camera.GetViewMatrix();

    glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
    glEnable(GL_DEPTH_TEST);
    glDepthFunc(GL_LESS);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

    m_ShaderProgram.bind();
    m_ShaderProgram.setUniformValue("projection", projection);
    m_ShaderProgram.setUniformValue("view", view);
    //model.rotate(time, 1.0f, 1.0f, 0.5f);
    m_ShaderProgram.setUniformValue("viewPos", m_camera.Position);
    // light properties
    m_ShaderProgram.setUniformValue("light.ambient", 0.7f, 0.7f, 0.7f);
    m_ShaderProgram.setUniformValue("light.diffuse", 0.9f, 0.9f, 0.9f);
    m_ShaderProgram.setUniformValue("light.specular", 1.0f, 1.0f, 1.0f);
    // material properties
    m_ShaderProgram.setUniformValue("material.shininess", 32.0f);
    // directional light
    m_ShaderProgram.setUniformValue("light.direction", -0.2f, -1.0f, -0.3f);

    // Opaque geometry first: the floor plane...
    m_ShaderProgram.setUniformValue("model", model);
    m_PlaneMesh->Draw(m_ShaderProgram);

    // ...then the loaded models
    foreach (auto modelInfo, m_Models) {
        model.setToIdentity();
        model.translate(modelInfo.worldPos);
        model.rotate(modelInfo.pitch, QVector3D(1.0, 0.0, 0.0));
        model.rotate(modelInfo.yaw, QVector3D(0.0, 1.0, 0.0));
        model.rotate(modelInfo.roll, QVector3D(0.0, 0.0, 1.0));
        m_ShaderProgram.setUniformValue("model", model);
        modelInfo.model->Draw(m_ShaderProgram);
    }

    // Transparent windows last. The camera moves, so re-sort by distance every frame
    sorted.clear();
    foreach (auto item, windows) {
        float distance = m_camera.Position.distanceToPoint(item);
        sorted[distance] = item;
    }
    // Walk the map in reverse: farthest window first, nearest last
    for (map<float, QVector3D>::reverse_iterator riter = sorted.rbegin();
         riter != sorted.rend(); riter++) {
        model.setToIdentity();
        model.translate(riter->second);
        m_ShaderProgram.setUniformValue("model", model);
        m_WindowMesh->Draw(m_ShaderProgram);
    }
}
```

```cpp
void AXBOpemglWidget::wheelEvent(QWheelEvent *event)
{
    m_camera.ProcessMouseScroll(event->angleDelta().y() / 120);
}

void AXBOpemglWidget::keyPressEvent(QKeyEvent *event)
{
    float deltaTime = timeOutmSec / 1000.0f;
    switch (event->key()) {
    case Qt::Key_W: m_camera.ProcessKeyboard(FORWARD, deltaTime); break;
    case Qt::Key_S: m_camera.ProcessKeyboard(BACKWARD, deltaTime); break;
    case Qt::Key_D: m_camera.ProcessKeyboard(RIGHT, deltaTime); break;
    case Qt::Key_A: m_camera.ProcessKeyboard(LEFT, deltaTime); break;
    case Qt::Key_Q: m_camera.ProcessKeyboard(DOWN, deltaTime); break;
    case Qt::Key_E: m_camera.ProcessKeyboard(UP, deltaTime); break;
    case Qt::Key_Space: m_camera.Position = viewInitPos; break;
    default: break;
    }
}
```

```cpp
void AXBOpemglWidget::mouseMoveEvent(QMouseEvent *event)
{
    makeCurrent();
    if (m_modelMoving) {
        for (auto iter = m_Models.begin(); iter != m_Models.end(); iter++) {
            ModelInfo *modelInfo = &iter.value();
            if (!modelInfo->isSelected) continue;
            modelInfo->worldPos =
                QVector3D(worldPosFromViewPort(event->pos().x(), event->pos().y()));
        }
    } else if (event->buttons() & Qt::RightButton
               || event->buttons() & Qt::LeftButton
               || event->buttons() & Qt::MiddleButton) {
        auto currentPos = event->pos();
        QPoint deltaPos = currentPos - lastPos;
        lastPos = currentPos;
        if (event->buttons() & Qt::RightButton)
            m_camera.ProcessMouseMovement(deltaPos.x(), -deltaPos.y());
        else
            for (auto iter = m_Models.begin(); iter != m_Models.end(); iter++) {
                ModelInfo *modelInfo = &iter.value();
                if (!modelInfo->isSelected) continue;
                if (event->buttons() & Qt::MiddleButton) {
                    modelInfo->roll += deltaPos.x();
                } else if (event->buttons() & Qt::LeftButton) {
                    modelInfo->yaw += deltaPos.x();
                    modelInfo->pitch += deltaPos.y();
                }
            }
    }
    doneCurrent();
}
```

```cpp
void AXBOpemglWidget::mousePressEvent(QMouseEvent *event)
{
    bool hasSelected = false;
    makeCurrent();
    lastPos = event->pos();
    if (event->buttons() & Qt::LeftButton) {
        QVector4D worldPosition = worldPosFromViewPort(event->pos().x(),
                                                       event->pos().y());
        mousePickingPos(QVector3D(worldPosition));
        // Select the first model whose center is closer to the picked point
        // than half the model's height
        for (QMap<QString, ModelInfo>::iterator iter = m_Models.begin(); iter != m_Models.end(); iter++) {
            ModelInfo *modelInfo = &iter.value();
            float r = (modelInfo->model->m_maxY - modelInfo->model->m_minY) / 2;
            if (modelInfo->worldPos.distanceToPoint(QVector3D(worldPosition)) < r
                && !hasSelected) {
                modelInfo->isSelected = true;
                hasSelected = true;
            } else
                modelInfo->isSelected = false;
            // qDebug() << modelInfo->worldPos.distanceToPoint(QVector3D(worldPosition))
            //          << "<" << r << "=" << modelInfo->isSelected;
        }
    }
    doneCurrent();
}

void AXBOpemglWidget::mouseDoubleClickEvent(QMouseEvent *event)
{
    Q_UNUSED(event);
    if (m_modelMoving) {
        // A second double-click stops moving the model
        m_modelMoving = false;
    } else
        foreach (auto modelInfo, m_Models) {
            // Double-clicking a selected model starts moving it
            if (modelInfo.isSelected == true)
                m_modelMoving = true;
            qDebug() << modelInfo.name << modelInfo.isSelected;
        }
}
```

```cpp
void AXBOpemglWidget::on_timeout()
{
    update();
}

QVector3D AXBOpemglWidget::cameraPosInit(float maxY, float minY)
{
    QVector3D temp = {0, 0, 0};
    float height = maxY - minY;
    temp.setZ(1.5 * height);
    if (minY >= 0)
        temp.setY(height / 2.0);
    viewInitPos = temp;
    return temp;
}
```

```cpp
Mesh* AXBOpemglWidget::processMesh(float *vertices, int size, unsigned int textureId)
{
    vector<Vertex> _vertices;
    vector<unsigned int> _indices;
    vector<Texture> _textures;
    // Each vertex in the input array is 5 floats: position (x, y, z) then texture coordinates (u, v)
    for (int i = 0; i < size; i++) {
        Vertex vert;
        vert.Position[0] = vertices[i * 5 + 0];
        vert.Position[1] = vertices[i * 5 + 1];
        vert.Position[2] = vertices[i * 5 + 2];
        vert.TexCoords[0] = vertices[i * 5 + 3];
        vert.TexCoords[1] = vertices[i * 5 + 4];
        _vertices.push_back(vert);
        _indices.push_back(i);
    }
    Texture tex;
    tex.id = textureId;
    tex.type = "texture_diffuse";
    _textures.push_back(tex);
    return new Mesh(QOpenGLContext::currentContext()->versionFunctions<QOpenGLFunctions_3_3_Core>(),
                    _vertices, _indices, _textures);
}
```

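```cpp
QVector4D AXBOpemglWidget::worldPosFromViewPort(int posX, int posY)
{
    // Read the depth under the cursor. OpenGL's window origin is the bottom-left
    // pixel and Qt's is the top-left, so row posY maps to row height() - 1 - posY.
    float winZ;
    glReadPixels(posX, this->height() - 1 - posY, 1, 1,
                 GL_DEPTH_COMPONENT, GL_FLOAT, &winZ);
    // Window coordinates -> normalized device coordinates
    float x = (2.0f * posX) / this->width() - 1.0f;
    float y = 1.0f - (2.0f * posY) / this->height();
    float z = winZ * 2.0f - 1.0f;
    // Recover clip-space w (the linear eye-space depth) from the NDC depth
    float w = (2.0f * _near * _far) / (_far + _near - z * (_far - _near));
    //float w= _near*_far/(_near*winZ-_far*winZ+_far);
    // NDC * w gives clip coordinates; undo the projection, then the view
    QVector4D worldPosition(x, y, z, 1);
    worldPosition = w * worldPosition;
    return view.inverted() * projection.inverted() * worldPosition;
}
```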
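`worldPosFromViewPort` turns the depth value read back with `glReadPixels` into a linear depth before unprojecting. The C++ sketch below isolates that formula. It checks the formula against the forward mapping of a standard OpenGL perspective projection (the kind `QMatrix4x4::perspective` builds), assuming the default depth range [0, 1]. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Forward: depth-buffer value produced by a standard OpenGL perspective
// projection for a point at eye-space distance d (near n, far f).
inline float depthBufferValue(float d, float n, float f) {
    float zNdc = (f + n) / (f - n) - (2.0f * f * n) / ((f - n) * d);
    return zNdc * 0.5f + 0.5f;  // NDC [-1, 1] -> window depth [0, 1]
}

// Inverse: the same formula as worldPosFromViewPort, recovering the eye-space
// distance (clip-space w) from a value read back from the depth buffer.
inline float linearizeDepth(float winZ, float n, float f) {
    float zNdc = winZ * 2.0f - 1.0f;
    return (2.0f * n * f) / (f + n - zNdc * (f - n));
}
```

Because depth precision is concentrated near the near plane, the round trip is noticeably less exact for distant points. This is also why picking far objects from the depth buffer gets coarser.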