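This post records in detail how I installed Hive on a virtual machine. Hadoop must already be installed before you start; if it is not, see my earlier post on the Hadoop installation.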

Installing MySQL

# Import the repository GPG key

rpm --import https://repo.mysql.com/RPM-GPG-KEY-mysql-2022

# Install the MySQL yum repository

rpm -Uvh http://repo.mysql.com//mysql57-community-release-el7-7.noarch.rpm

# Install MySQL with yum

yum -y install mysql-community-server

# Start MySQL and enable it at boot

systemctl start mysqld

systemctl enable mysqld

# Check the MySQL service status

systemctl status mysqld

# On its first start, MySQL writes a random password for the root user to its log file; use the command below to find it

grep 'temporary password' /var/log/mysqld.log
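If you would rather not copy the password out of the log line by hand, the lookup can be wrapped in a small helper. This is a minimal sketch, assuming the MySQL 5.7 log line ends with `root@localhost: <password>`; the function name `extract_temp_password` is made up for this post.

```shell
#!/usr/bin/env bash
# Print the most recent temporary root password recorded in the MySQL log.
# Assumption: the password is the last whitespace-separated field of the line.
extract_temp_password() {
    local log_file="${1:-/var/log/mysqld.log}"
    grep 'temporary password' "$log_file" | tail -n 1 | awk '{print $NF}'
}
```

Calling `extract_temp_password` with no argument reads /var/log/mysqld.log; pass another path if your log lives elsewhere.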

# Log in with the password you found

mysql -u root -p

# To use a simple password, first lower MySQL's password policy

set global validate_password_policy=LOW; # lowest password policy level

set global validate_password_length=4; # minimum password length of 4

# Change the password

ALTER USER 'root'@'localhost' IDENTIFIED BY '123456';

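# After that a simple password is accepted (simple passwords are used here for convenience; do not do this in production). Whichever password you settle on, javax.jdo.option.ConnectionPassword in hive-site.xml must match it

ALTER USER 'root'@'localhost' IDENTIFIED BY 'root';

# The same change from the shell instead of the mysql prompt (add -p if root already has a password)

/usr/bin/mysqladmin -u root password 'root'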

grant all privileges on *.* to root@"%" identified by 'root' with grant option;

flush privileges;

# Create the hive database; it is needed later

CREATE DATABASE hive CHARSET UTF8;

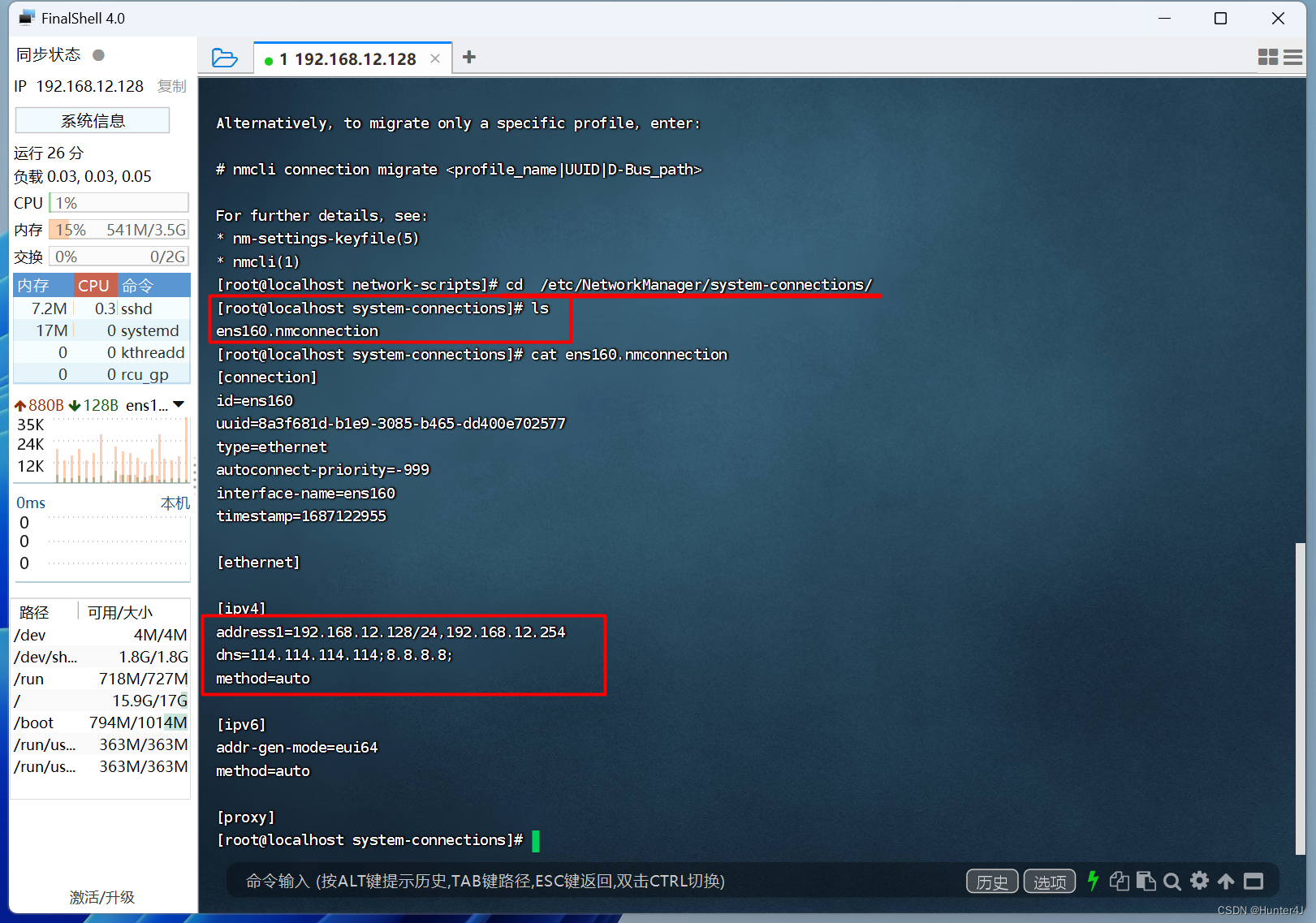

Configuring Hadoop's core-site.xml

After editing Hadoop's core-site.xml, remember to restart the cluster.

<!-- Proxy-user settings for Hive integration -->

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

Installing Hive

- Download the Hive release package:

http://archive.apache.org/dist/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz

- Extract it into /export/server/ on the node1 server

tar -zxvf apache-hive-3.1.3-bin.tar.gz -C /export/server/

- Create a symbolic link

ln -s /export/server/apache-hive-3.1.3-bin /export/server/hive

- Download the MySQL driver jar:

https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.34/mysql-connector-java-5.1.34.jar

- Move the downloaded driver jar into the lib directory of the Hive installation

mv mysql-connector-java-5.1.34.jar /export/server/hive/lib/

- In Hive's conf directory, configure hive-env.sh

export HADOOP_HOME=/export/server/hadoop-3.3.0

export HIVE_CONF_DIR=/export/server/hive/conf

export HIVE_AUX_JARS_PATH=/export/server/hive/lib

- In Hive's conf directory (/export/server/hive/conf), configure hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://node1:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false&amp;useUnicode=true&amp;characterEncoding=UTF-8</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>node1</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://node1:9083</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

</configuration>
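An XML parser rejects a raw `&` that does not start an entity reference, so the query-string separators of a JDBC URL inside hive-site.xml have to be written as `&amp;`. The helper below is a rough sketch for spotting leftovers before running schematool. The name `find_unescaped_amp` is made up for this post, and the check is purely textual, so it will also flag an `&` inside a comment or CDATA section, where it would actually be legal.

```shell
#!/usr/bin/env bash
# Print "line-number:content" for every line that still contains a raw '&'
# once all well-formed entity references (&amp; &lt; &#38; ...) are stripped.
# Exit status 0 means at least one suspicious line was found.
find_unescaped_amp() {
    local xml_file="$1"
    sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9A-Fa-f]+);//g' "$xml_file" | grep -n '&'
}
```

Running `find_unescaped_amp /export/server/hive/conf/hive-site.xml` should print nothing when every separator is escaped.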

- Initialize the metastore database

cd /export/server/hive

bin/schematool -initSchema -dbType mysql -verbose

- Create a log directory for Hive

mkdir /export/server/hive/logs

- Start the metastore service (required; Hive will not work without it)

cd /export/server/hive

Foreground: bin/hive --service metastore

Background: nohup bin/hive --service metastore >> logs/metastore.log 2>&1 &
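When the metastore is started in the background, it can take a few seconds before it accepts connections on port 9083 (the port configured in hive.metastore.uris). A small polling helper avoids starting the client too early. This is only a sketch: `wait_for_port` is a made-up name, and it relies on bash's /dev/tcp pseudo-device, so it needs bash rather than plain sh.

```shell
#!/usr/bin/env bash
# Poll a TCP port once per second until it accepts a connection
# or the timeout (in seconds, default 30) runs out.
wait_for_port() {
    local host="$1" port="$2" timeout="${3:-30}"
    local waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # The subshell opens the connection and closes it again on exit.
        if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}
```

For example, `wait_for_port node1 9083 60 && bin/hive` starts the client only once the metastore is reachable.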

- Start the client

bin/hive

That completes the Hive installation!

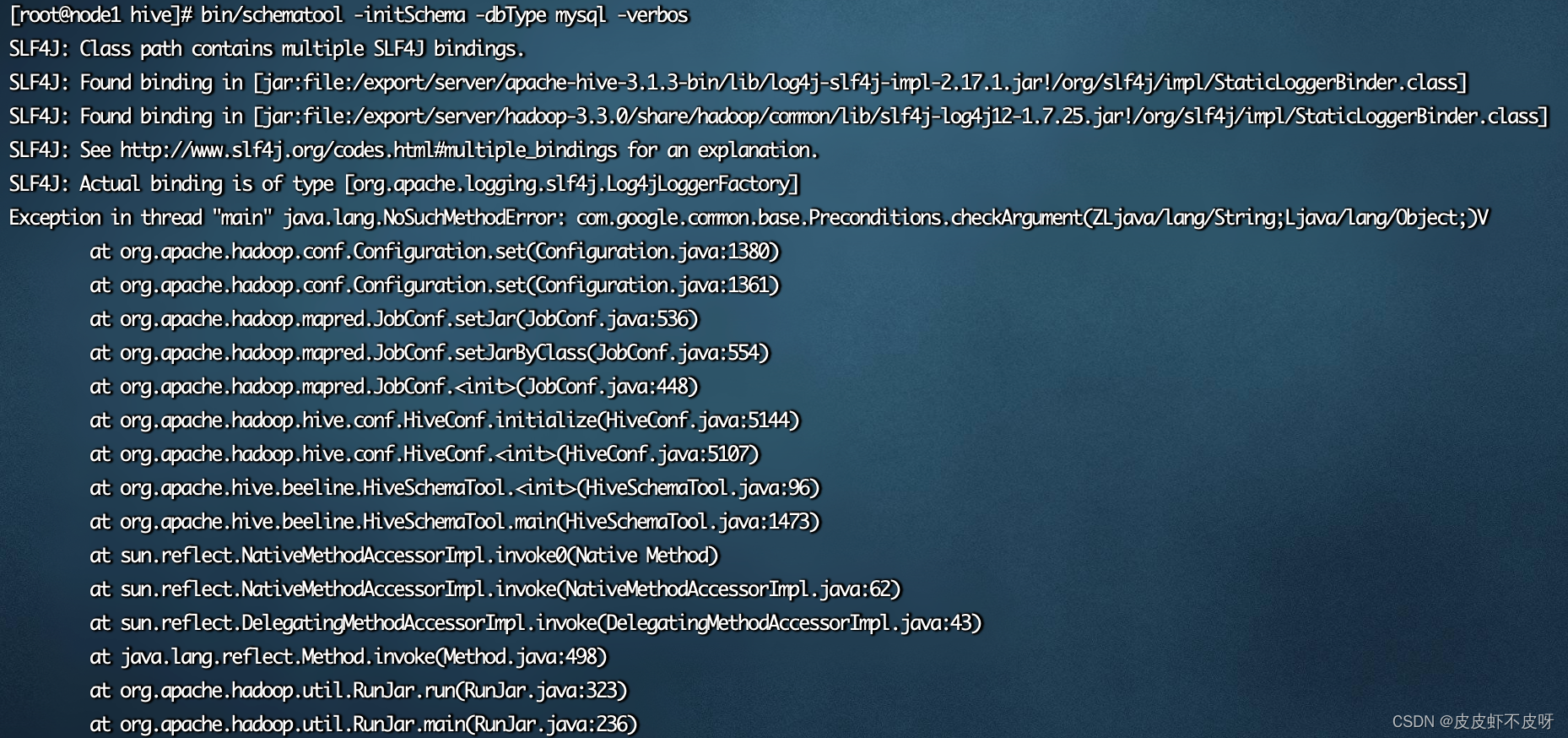

Problems encountered

Hive failed during schema initialization with: Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.

Cause: Hadoop and Hive ship different versions of guava.jar.

In the Hadoop directory /export/server/hadoop/share/hadoop/common/lib I found guava-27.0-jre.jar,

while the Hive directory /export/server/hive/lib/ contained guava-19.0.jar.

Solution

Delete guava-19.0.jar from Hive's lib directory and copy Hadoop's guava-27.0-jre.jar into it.
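The fix can be scripted roughly as follows. This is a sketch under the assumption that each lib directory holds its guava jar under a `guava-*.jar` name; `sync_guava` is a made-up name, and both paths are passed in rather than hard-coded.

```shell
#!/usr/bin/env bash
# Replace Hive's bundled guava jar(s) with the one shipped by Hadoop.
# $1 = Hadoop lib directory, $2 = Hive lib directory.
sync_guava() {
    local hadoop_lib="$1" hive_lib="$2"
    # Refuse to delete anything unless Hadoop actually provides a guava jar.
    ls "$hadoop_lib"/guava-*.jar >/dev/null 2>&1 || return 1
    rm -f "$hive_lib"/guava-*.jar
    cp "$hadoop_lib"/guava-*.jar "$hive_lib"/
}
```

With the paths from this post the call would be `sync_guava /export/server/hadoop/share/hadoop/common/lib /export/server/hive/lib`, after which the schema initialization can be run again.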