Back to MLP: A Simple Baseline for Human Motion Prediction


Introduction

| paper | code |
|---|---|
| https://arxiv.org/abs/2207.01567v2 | https://github.com/dulucas/siMLPe |

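Back to MLP is a new baseline that uses only MLPs and reaches state-of-the-art results. The paper tackles human motion prediction, that is, predicting future body poses from a sequence of past observations. It shows that, combined with a set of standard practices such as applying the Discrete Cosine Transform (DCT), predicting the residual displacement of joints, and optimizing velocity as an auxiliary loss, a lightweight network based on multi-layer perceptrons (MLPs) with only 0.14 million parameters can surpass state-of-the-art performance. An exhaustive evaluation on the Human3.6M (note: this dataset is actually over 100 MB in size), AMASS, and 3DPW datasets shows that the method, named siMLPe, consistently outperforms all other approaches. The authors hope that this simple method can serve as a strong baseline for the community and prompt a rethink of the human motion prediction problem.

- Open question: introduce the ground truth during training and use its hidden representation as an intermediate target to guide pose prediction?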
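Two of the practices named in the summary, predicting residual displacements and using velocity as an auxiliary loss, can be illustrated with a minimal NumPy sketch. The function names `residual_to_pose` and `pose_and_velocity_loss`, the unweighted sum of the two loss terms, and the 22 x 3 joint layout behind the 66 dimensions are assumptions made for illustration, not code taken from the repository.

```python
import numpy as np

def residual_to_pose(last_input_frame, predicted_offsets):
    """Turn predicted displacements into absolute poses.

    last_input_frame: (batch, 1, dim); predicted_offsets: (batch, t, dim).
    Assumption: every offset is measured from the last observed frame.
    """
    return last_input_frame + predicted_offsets

def velocity(motion):
    """Frame-to-frame difference along the time axis."""
    return motion[:, 1:] - motion[:, :-1]

def pose_and_velocity_loss(pred, target, joints=22):
    """Mean per-joint L2 error on positions plus the same error on velocities."""
    b, t, _ = pred.shape
    pred = pred.reshape(b, t, joints, 3)
    target = target.reshape(b, t, joints, 3)
    pos_loss = np.linalg.norm(pred - target, axis=-1).mean()
    vel_loss = np.linalg.norm(velocity(pred) - velocity(target), axis=-1).mean()
    return pos_loss + vel_loss

rng = np.random.default_rng(0)
last_frame = rng.normal(size=(4, 1, 66))            # last observed pose
offsets = rng.normal(scale=0.01, size=(4, 10, 66))  # stand-in for network output
target = rng.normal(size=(4, 10, 66))               # synthetic ground truth
pred = residual_to_pose(last_frame, offsets)
print(pred.shape, pose_and_velocity_loss(pred, target))
```

A prediction that is off by a constant shift in every frame has zero velocity error, so the auxiliary term only penalizes errors in how the pose changes over time.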

About the model

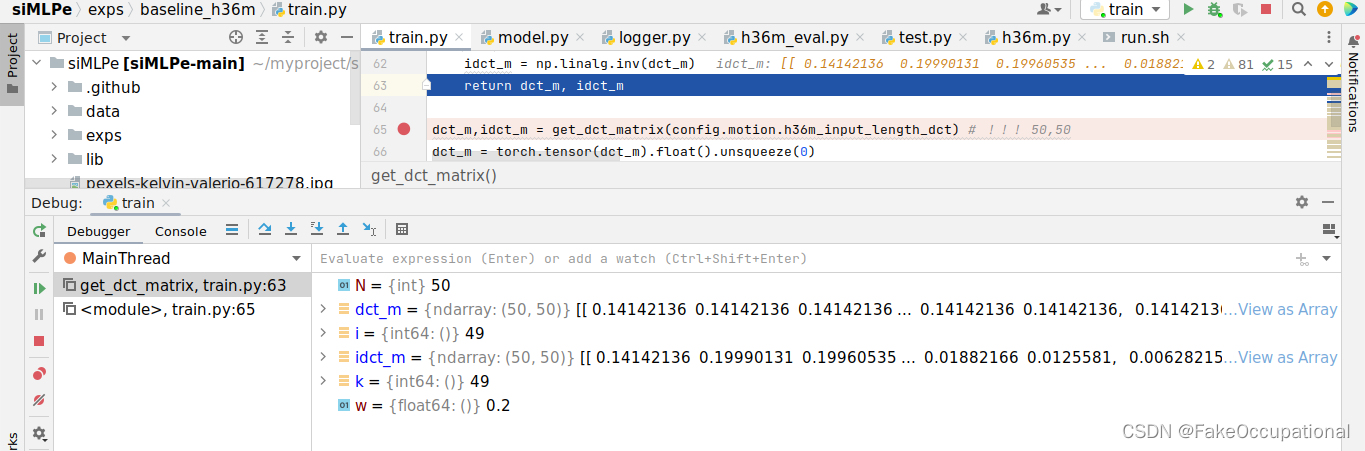

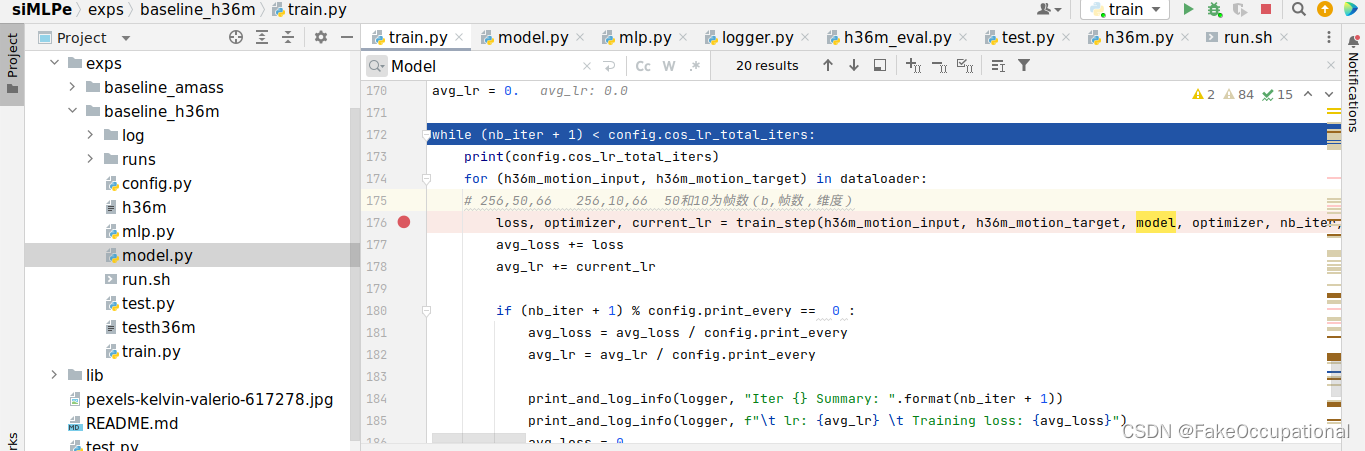

- The entry point is **/myproject/siMLPe/exps/baseline_h36m/train.py

DCT & IDCT

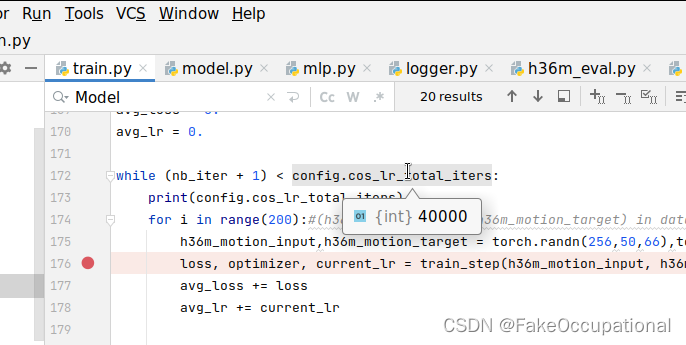

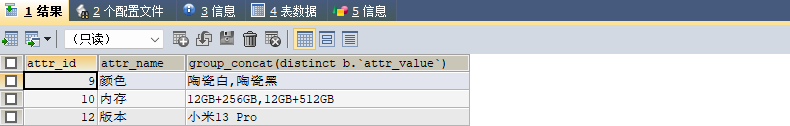

dct_m, idct_m = get_dct_matrix(config.motion.h36m_input_length_dct)

- N = 50, i.e. the parameter config.motion.h36m_input_length_dct = 50, which is set on line 51 of **/myproject/siMLPe/exps/baseline_h36m/config.py:

C.motion.h36m_input_length_dct = 50
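For reference, an orthonormal DCT-II matrix of size N and its inverse can be built as in the sketch below. It is written from the standard DCT-II definition and is only an assumption about what get_dct_matrix returns; the repository's function may differ in details such as normalization.

```python
import numpy as np

def get_dct_matrix(n):
    """Return (dct_m, idct_m): an orthonormal DCT-II matrix and its inverse."""
    k = np.arange(n).reshape(-1, 1)   # frequency index (rows)
    i = np.arange(n).reshape(1, -1)   # time index (columns)
    dct_m = np.sqrt(2.0 / n) * np.cos(np.pi * (i + 0.5) * k / n)
    dct_m[0, :] = np.sqrt(1.0 / n)    # the k = 0 row uses a smaller weight
    idct_m = np.linalg.inv(dct_m)
    return dct_m, idct_m

dct_m, idct_m = get_dct_matrix(50)

# Apply the transform along the time axis of a (batch, frames, dim) tensor.
motion = np.random.default_rng(0).normal(size=(2, 50, 66))
coeffs = np.einsum("kt,btd->bkd", dct_m, motion)
restored = np.einsum("tk,bkd->btd", idct_m, coeffs)
print(np.allclose(restored, motion))
```

Because the matrix is orthonormal, the inverse equals the transpose, so the round trip through DCT and IDCT recovers the input up to floating-point error.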

Model setup

- Taking the following two statements in main as the entry point, this is all the model setup requires:

model = Model(config)  # from model import siMLPe as Model

model.train()

{'seed': 304, 'abs_dir': '/home/fly100/myproject/siMLPe/exps/baseline_h36m', 'this_dir': 'baseline_h36m', 'repo_name': 'siMLPe', 'root_dir': '/home/fly100/myproject/siMLPe', 'log_dir': '/home/fly100/myproject/siMLPe/exps/baseline_h36m/log', 'snapshot_dir': '/home/fly100/myproject/siMLPe/exps/baseline_h36m/log/snapshot', 'log_file': '/home/fly100/myproject/siMLPe/exps/baseline_h36m/log/log_2022_10_18_20_47_09.log', 'link_log_file': '/home/fly100/myproject/siMLPe/exps/baseline_h36m/log/log_last.log', 'val_log_file': '/home/fly100/myproject/siMLPe/exps/baseline_h36m/log/val_2022_10_18_20_47_09.log', 'link_val_log_file': '/home/fly100/myproject/siMLPe/exps/baseline_h36m/log/val_last.log', 'h36m_anno_dir': '/home/fly100/myproject/siMLPe/data/h36m/', 'motion': {'h36m_input_length': 50, 'h36m_input_length_dct': 50, 'h36m_target_length_train': 10, 'h36m_target_length_eval': 25, 'dim': 66}, 'data_aug': True, 'deriv_input': True, 'deriv_output': True, 'use_relative_loss': True, 'pre_dct': False, 'post_dct': False, 'motion_mlp': {'hidden_dim': 66, 'seq_len': 50, 'num_layers': 64, 'with_normalization': False, 'spatial_fc_only': False, 'norm_axis': 'spatial'}, 'motion_fc_in': {'in_features': 66, 'out_features': 66, 'with_norm': False, 'activation': 'relu', 'init_w_trunc_normal': False, 'temporal_fc': False}, 'motion_fc_out': {'in_features': 66, 'out_features': 66, 'with_norm': False, 'activation': 'relu', 'init_w_trunc_normal': True, 'temporal_fc': False}, 'batch_size': 256, 'num_workers': 8, 'cos_lr_max': 1e-05, 'cos_lr_min': 5e-08, 'cos_lr_total_iters': 40000, 'weight_decay': 0.0001, 'model_pth': None, 'shift_step': 1, 'print_every': 100, 'save_every': 5000}

siMLPe initialization

    import copy

    from einops.layers.torch import Rearrange
    from torch import nn

    # build_mlps is defined elsewhere in the repository.


    class siMLPe(nn.Module):
        def __init__(self, config):
            self.config = copy.deepcopy(config)
            super(siMLPe, self).__init__()
            self.motion_mlp = build_mlps(self.config.motion_mlp)  # stack of MLP blocks
            self.motion_fc_in = nn.Linear(self.config.motion.dim, self.config.motion.dim)  # 66 -> 66
            self.motion_fc_out = nn.Linear(self.config.motion.dim, self.config.motion.dim)  # 66 -> 66
            self.reset_parameters()  # weights -> Xavier, biases -> 0
            # Note: self.config.motion_mlp.seq_len is 50
            self.arr0 = Rearrange('b n d -> b d n')
            self.arr1 = Rearrange('b d n -> b n d')

Stacked MLP layers

    class TransMLP(nn.Module):
        def __init__(self, dim, seq, use_norm, use_spatial_fc, num_layers, layernorm_axis):
            super().__init__()
            # num_layers identical MLPblocks applied one after another
            self.mlps = nn.Sequential(*[
                MLPblock(dim, seq, use_norm, use_spatial_fc, layernorm_axis)
                for i in range(num_layers)])

        def forward(self, x):
            x = self.mlps(x)
            return x

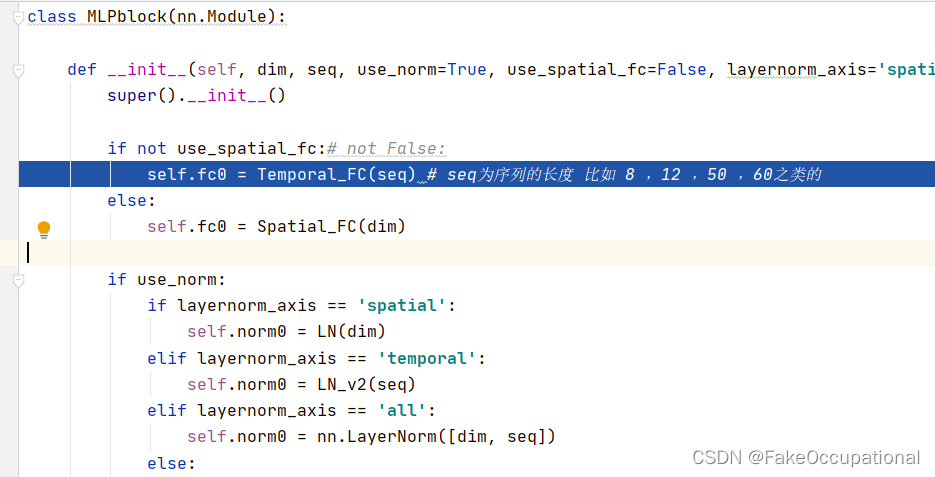

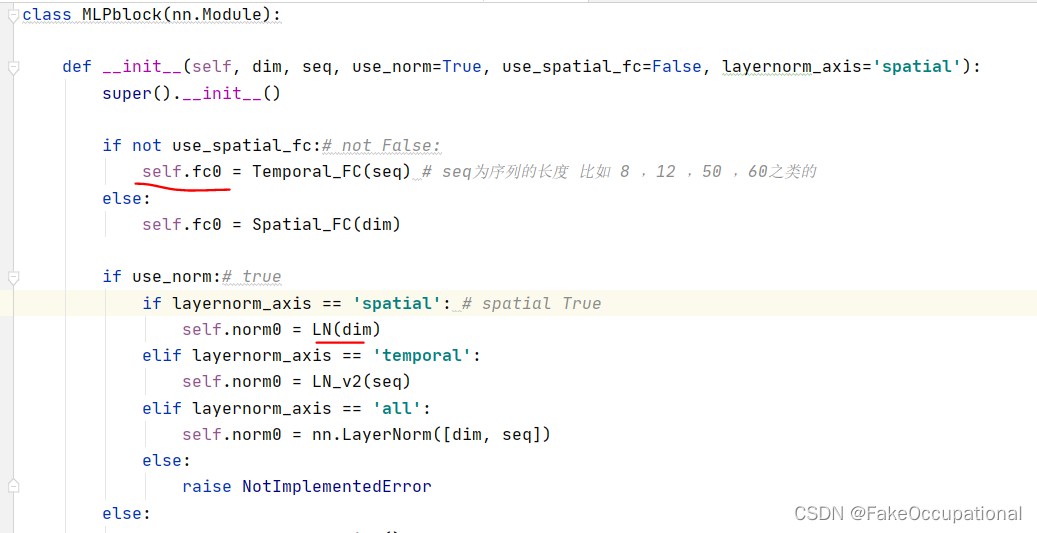

The Temporal_FC or Spatial_FC inside each block

    class Temporal_FC(nn.Module):
        def __init__(self, dim):
            super(Temporal_FC, self).__init__()
            # Maps sequence length to sequence length;
            # Spatial_FC below does the same on the transposed input.
            self.fc = nn.Linear(dim, dim)

        def forward(self, x):
            x = self.fc(x)
            return x


    class Spatial_FC(nn.Module):
        def __init__(self, dim):
            super(Spatial_FC, self).__init__()
            self.fc = nn.Linear(dim, dim)
            self.arr0 = Rearrange('b n d -> b d n')
            self.arr1 = Rearrange('b d n -> b n d')

        def forward(self, x):
            x = self.arr0(x)  # swap the last two axes
            x = self.fc(x)
            x = self.arr1(x)  # swap them back
            return x
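The only difference between the two layers is which axis the linear map mixes. The sketch below replaces nn.Linear with a plain NumPy matrix product (an assumption made to keep the example dependency-free) and assumes that the input to a block has shape (batch, 66, 50), which is what a Linear(50, 50) acting on the last axis implies.

```python
import numpy as np

def linear(x, weight, bias):
    """NumPy stand-in for nn.Linear: acts on the last axis of x."""
    return x @ weight.T + bias

def temporal_fc(x, weight, bias):
    """Mix information across frames; x has shape (batch, dim, frames)."""
    return linear(x, weight, bias)

def spatial_fc(x, weight, bias):
    """Mix information across pose features by transposing around the linear map."""
    x = np.swapaxes(x, 1, 2)          # (batch, frames, dim)
    x = linear(x, weight, bias)
    return np.swapaxes(x, 1, 2)       # back to (batch, dim, frames)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 66, 50))
w_t, b_t = rng.normal(size=(50, 50)), np.zeros(50)
w_s, b_s = rng.normal(size=(66, 66)), np.zeros(66)

print(temporal_fc(x, w_t, b_t).shape, spatial_fc(x, w_s, b_s).shape)
```

Both calls keep the input shape. With the temporal layer, changing one feature channel only affects that channel's output; with the spatial layer, changing one frame only affects that frame's output.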

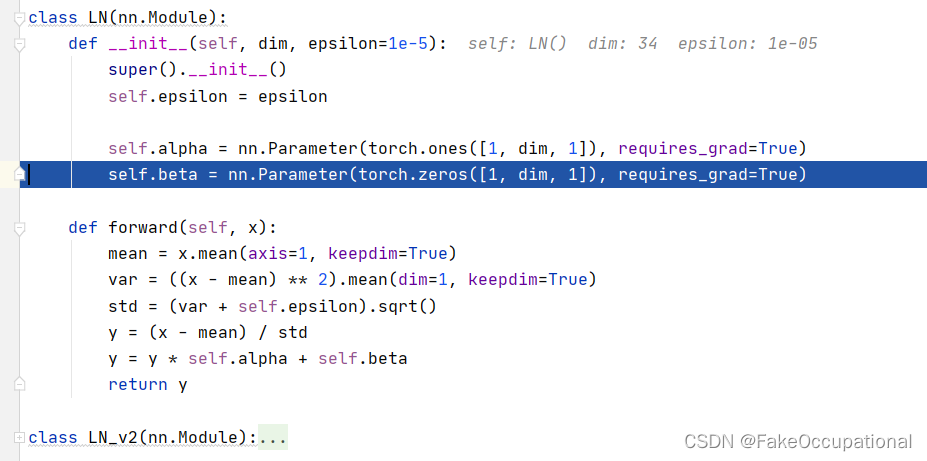

The authors implemented layer normalization (LN) themselves.

Then comes the forward pass of a block:

    def forward(self, x):
        x_ = self.fc0(x)      # Temporal_FC or Spatial_FC
        x_ = self.norm0(x_)   # LN, or Identity when normalization is off
        x = x + x_            # residual connection
        return x
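Putting the pieces together, one block computes x + norm(fc(x)). The NumPy sketch below assumes a hand-written layer normalization over the feature axis with eps = 1e-5 and no learned scale or shift, which may differ from the authors' LN. With normalization disabled the norm step is the identity, matching the norm0: Identity() entries in the printed model further down.

```python
import numpy as np

def layer_norm(x, axis=1, eps=1e-5):
    """Normalize x to zero mean and unit variance along one axis."""
    mean = x.mean(axis=axis, keepdims=True)
    var = ((x - mean) ** 2).mean(axis=axis, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def mlp_block(x, weight, bias, use_norm=False):
    """Residual block: x + norm(fc(x)), with fc acting on the last (frame) axis."""
    y = x @ weight.T + bias
    if use_norm:
        y = layer_norm(y, axis=1)
    return x + y

def trans_mlp(x, params, use_norm=False):
    """Apply the blocks one after another, like nn.Sequential."""
    for weight, bias in params:
        x = mlp_block(x, weight, bias, use_norm)
    return x

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 66, 50))
params = [(rng.normal(scale=0.01, size=(50, 50)), np.zeros(50)) for _ in range(4)]
print(trans_mlp(x, params).shape)
```

Because of the residual connection, a stack whose weights and biases are all zero passes its input through unchanged, which is one reason such deep stacks of small layers remain easy to optimize.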

Model training

    siMLPe(
      (arr0): Rearrange('b n d -> b d n')
      (arr1): Rearrange('b d n -> b n d')
      (motion_mlp): TransMLP(
        (mlps): Sequential(
          (0-63): 64 x MLPblock(
            (fc0): Temporal_FC(
              (fc): Linear(in_features=50, out_features=50, bias=True)
            )
            (norm0): Identity()
          )
        )
      )
      (motion_fc_in): Linear(in_features=66, out_features=66, bias=True)
      (motion_fc_out): Linear(in_features=66, out_features=66, bias=True)
    )
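The size of the printed model can be checked by hand from the layer shapes alone. The short calculation below counts weights and biases for the 64 Temporal_FC layers and the two 66 -> 66 linear layers; the helper name `linear_params` is introduced here only for illustration.

```python
def linear_params(in_features, out_features, bias=True):
    """Number of weights (and biases) in one fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)

num_blocks = 64                       # MLPblock 0..63 in the printout
per_block = linear_params(50, 50)     # Temporal_FC; Identity has no parameters
in_out = 2 * linear_params(66, 66)    # motion_fc_in and motion_fc_out
total = num_blocks * per_block + in_out
print(per_block, in_out, total)       # 2550 8844 172044
```

This 64-block configuration without normalization therefore has about 172k parameters, somewhat more than the 0.14 million quoted in the introduction, which presumably refers to a different configuration.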

Code

Environment setup

conda create -n simpmlp python=3.8

conda activate simpmlp

conda install pytorch==1.9.0 torchvision==0.10.0 torchaudio==0.9.0 cpuonly -c pytorch

- PyTorch >= 1.5

- Numpy

- CUDA >= 10.1

- Easydict: conda install -c conda-forge easydict

- pickle: ships with Python, so it does not need to be installed separately

- einops

- pip install

- six

- pip install tb-nightly (see https://blog.csdn.net/weixin_47166887/article/details/121384701)

- https://blog.csdn.net/weixin_46133643/article/details/125344874
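After installing, a quick way to see which of the packages above are still missing is to ask Python whether each module can be found. This is a small helper written for these notes, not part of the repository; the import names (torch for PyTorch, tensorboard for tb-nightly) are assumptions about how each package is imported.

```python
import importlib.util

def check_dependencies(modules):
    """Map each top-level module name to True if it can be found, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}

# Import names assumed for the packages listed above.
required = ["torch", "numpy", "easydict", "einops", "six", "tensorboard", "pickle"]
for name, found in check_dependencies(required).items():
    print(f"{name:12s} {'ok' if found else 'MISSING'}")
```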

Data preparation

- Unzip the data

(base) ┌──(fly100㉿kali)-[~/myproject/siMLPe-main/data]

└─$ unzip h3.6m\ \(1\).zip

- Rename the folder, changing 3.6 to 36 (the config expects data/h36m/)

- Move the files out of the nested folder


- Reason

# H3.6M

cd exps/baseline_h36m/

sh run.sh

# Baseline 48

CUBLAS_WORKSPACE_CONFIG=:4096:8 python train.py --seed 888 --exp-name baseline.txt --layer-norm-axis spatial --with-normalization --num 48


Details

Data

- Data augmentation when loading data: each sequence is reversed in time with 50% probability

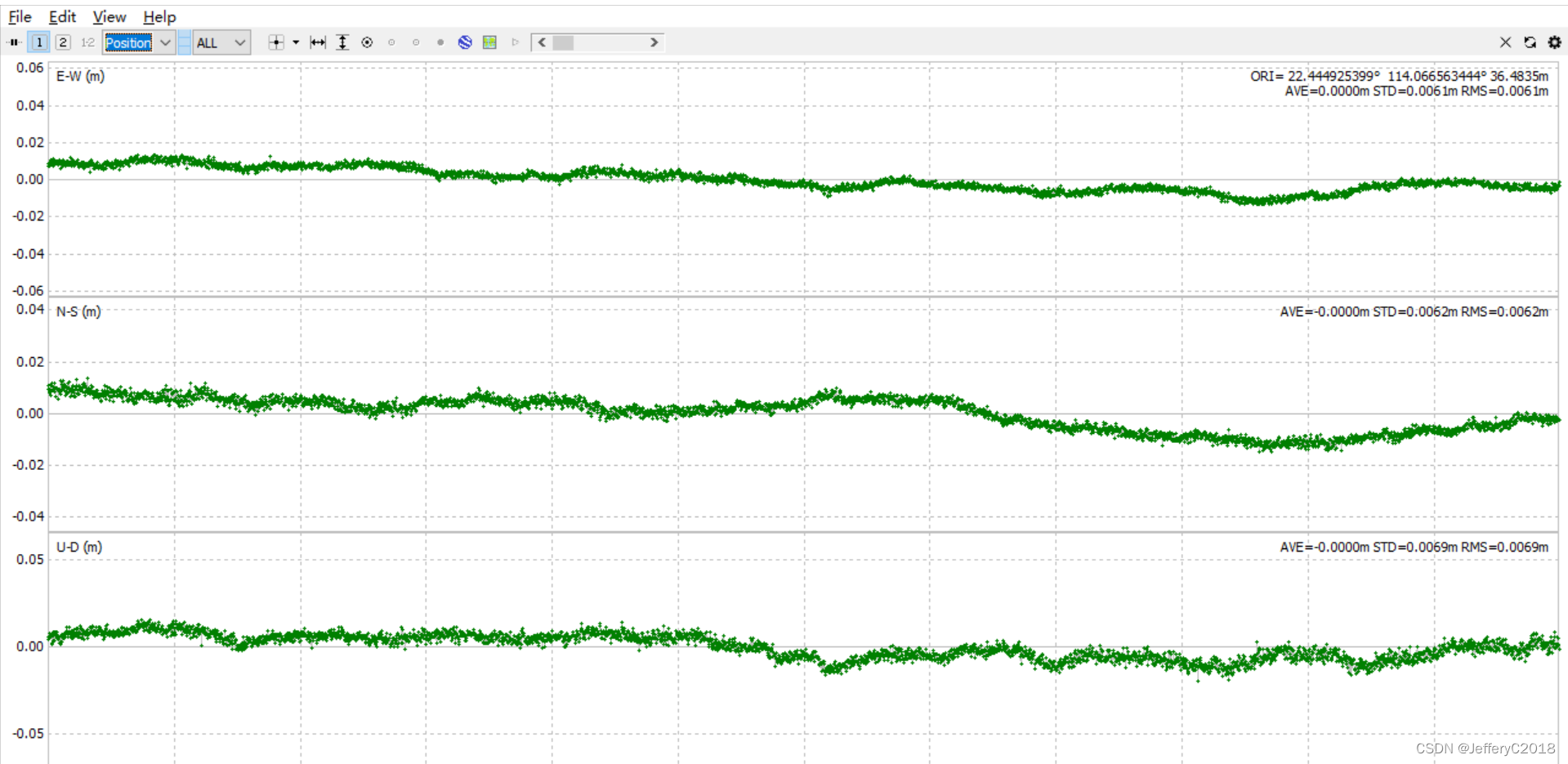

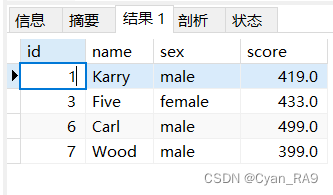

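- Each sample fetches 60 consecutive frames at once, with shape (60, 66): the first 50 frames are the input and the last 10 are the target. Each frame is a 66-dimensional vector.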
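The two data steps above, cutting a 60-frame window and reversing it half of the time, can be sketched as follows. The function name `sample_window`, the random choice of start frame, and the synthetic sequence are assumptions for illustration; the repository's dataset class may enumerate windows differently.

```python
import numpy as np

def sample_window(sequence, rng, input_len=50, target_len=10, augment=True):
    """Cut one (input, target) pair out of a (frames, 66) motion sequence."""
    total = input_len + target_len
    start = rng.integers(0, sequence.shape[0] - total + 1)
    window = sequence[start:start + total]          # (60, 66)
    if augment and rng.random() < 0.5:
        window = window[::-1]                       # play the clip backwards
    return window[:input_len], window[input_len:]

rng = np.random.default_rng(0)
sequence = rng.normal(size=(300, 66))               # synthetic stand-in for one clip
motion_input, motion_target = sample_window(sequence, rng)
print(motion_input.shape, motion_target.shape)
```

Reversing the whole window before splitting keeps input and target contiguous in time, so a reversed sample is still a valid "past then future" pair.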

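Training

- Does training really run for 40000 epochs??? The config value is cos_lr_total_iters = 40000, so this is more likely 40000 iterations than 40000 epochs.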