I ran into trouble setting up the environment during an assessment. These notes are a bit dated, but they are still worth a look.

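1. Clone CentOS

First check the IP of the first machine

ifconfig

Edit the other two machines

Go to the root directory

cd /

Edit

vim /etc/sysconfig/network-scripts/ifcfg-ens33

Setting to change

IPADDR=192.168.181.4 # set this to the static IP address you want

Reboot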
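The same edit can be scripted instead of done in vim. This is a minimal sketch, assuming the interface file uses the usual KEY=value layout; `set_ifcfg_key` is a helper name I made up, not a CentOS tool. The notes above only change IPADDR, so that is all the usage line touches.

```shell
# set_ifcfg_key FILE KEY VALUE
# Drop any existing KEY=... line from FILE and append KEY=VALUE.
# (Hypothetical helper; the key ends up on the last line of the file.)
set_ifcfg_key() {
    local file=$1 key=$2 value=$3 tmp
    tmp=$(mktemp)
    # grep -v exits non-zero when nothing is left to print, hence "|| true"
    grep -v "^${key}=" "$file" > "$tmp" || true
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    # Overwrite in place so the original file keeps its permissions
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage on one of the cloned machines (run as root):
#   set_ifcfg_key /etc/sysconfig/network-scripts/ifcfg-ens33 IPADDR 192.168.181.4
```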

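2. Connect to the three machines over SSH with MobaXterm

3. Rename the three machines

hostnamectl set-hostname master

hostnamectl set-hostname slave1

hostnamectl set-hostname slave2

bash

Start a new shell to check whether the change took effect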

4. Edit the network file (doing this on master is enough)

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=master

5. Install tools (all three machines)

yum install -y net-tools

bash

6. Edit the hosts file (master)

vi /etc/hosts

Change these to your own IP addresses

192.168.181.3 master master.root

192.168.181.4 slave1 slave1.root

192.168.181.5 slave2 slave2.root

scp /etc/hosts root@slave1:/etc/

scp /etc/hosts root@slave2:/etc/
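Copying a file to both slaves comes up again and again in these notes, so it can be wrapped in a loop. A rough sketch, assuming the slaves are always named slave1 and slave2 and you log in as root; `push_to_slaves` and the `DRY_RUN` switch are names I invented for illustration.

```shell
# push_to_slaves PATH [HOST...]
# Copy PATH into the same parent directory on every host.
# With DRY_RUN=1 (the default in this sketch) the scp commands are only printed.
push_to_slaves() {
    local path=$1
    shift
    local hosts=("$@")
    if [ "${#hosts[@]}" -eq 0 ]; then
        hosts=(slave1 slave2)   # assumed host names from /etc/hosts above
    fi
    local dest host
    dest=$(dirname "$path")/
    for host in "${hosts[@]}"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "scp -r $path root@$host:$dest"
        else
            scp -r "$path" "root@$host:$dest"
        fi
    done
}

# Usage:
#   push_to_slaves /etc/hosts              # print what would be copied
#   DRY_RUN=0 push_to_slaves /etc/hosts    # actually copy
```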

7. Turn off the firewall (all three machines)

Stop the firewall: systemctl stop firewalld

Check the status: systemctl status firewalld

The output should show dead
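Note that `systemctl stop` only lasts until the next reboot. If the firewall should stay off, `systemctl disable firewalld` keeps it from starting at boot. The sketch below bundles both and checks the result; `firewall_off` and the `SYSTEMCTL_BIN` override are my own names, added only so the function can be tried without touching a real service.

```shell
# firewall_off
# Stop firewalld, disable it at boot, then confirm it is no longer active.
# SYSTEMCTL_BIN is a hypothetical override; by default the real systemctl is used.
firewall_off() {
    local ctl=${SYSTEMCTL_BIN:-systemctl}
    "$ctl" stop firewalld
    "$ctl" disable firewalld
    # "is-active --quiet" exits 0 only while the unit is running
    if "$ctl" is-active --quiet firewalld; then
        echo "firewalld is still running"
        return 1
    fi
    echo "firewalld is stopped"
}

# Usage (as root, on each of the three machines):
#   firewall_off
```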

8. Change the time zone (all three machines)

tzselect

5

9

1

1

9. Set up a scheduled job

crontab -e

*/10 * * * * /usr/sbin/ntpdate master

10. Install the NTP service

yum install -y ntp

11. Configure the NTP file

Run on master

vi /etc/ntp.conf

server 127.127.1.0

fudge 127.127.1.0 stratum 10

scp /etc/ntp.conf root@slave1:/etc/

scp /etc/ntp.conf root@slave2:/etc/

/bin/systemctl restart ntpd.service

Run on slave1 and slave2

ntpdate master
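The scheduled sync from step 9 can also be installed without opening an editor. A small sketch, assuming the cron line is the one from step 9 with the full /usr/sbin/ntpdate path; `append_once` is a hypothetical helper that keeps the line from being added twice.

```shell
# append_once FILE LINE
# Add LINE to FILE only if that exact line is not already present.
append_once() {
    local file=$1 line=$2
    touch "$file"
    grep -Fxq -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage on slave1 and slave2:
#   crontab -l > /tmp/cron.txt 2>/dev/null
#   append_once /tmp/cron.txt '*/10 * * * * /usr/sbin/ntpdate master'
#   crontab /tmp/cron.txt
```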

12. Set up passwordless login under ~/.ssh

All three machines

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

On master

cd .ssh/

cat id_dsa.pub >> authorized_keys

ssh master

exit

On slave1 and slave2

cd .ssh/

scp master:~/.ssh/id_dsa.pub ./master_dsa.pub

cat master_dsa.pub >> authorized_keys

Finally, connect from master

ssh slave1

ssh slave2

Leave each session with exit
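Instead of logging in by hand, the key setup can be checked in one go. This is only a sketch: `check_passwordless` and the `SSH_BIN` override are names I made up. It relies on the ssh option BatchMode=yes, which makes ssh fail rather than ask for a password.

```shell
# check_passwordless HOST...
# Try a non-interactive login to each host and report the result.
# SSH_BIN is a hypothetical override so the function can be tried without a cluster.
check_passwordless() {
    local host failed=0
    for host in "$@"; do
        if "${SSH_BIN:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$host" true \
            </dev/null >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Usage on master:
#   check_passwordless master slave1 slave2
```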

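13. Install the software

Go to /opt and create a directory named soft

cd /opt/

mkdir soft

Open the software packages and upload the files into it

14. Install the JDK

All three machines

mkdir -p /usr/java

tar -zxvf /opt/soft/jdk-8u171-linux-x64.tar.gz -C /usr/java/

Configuration file (master)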

vi /etc/profile

export JAVA_HOME=/usr/java/jdk1.8.0_171

export CLASSPATH=$JAVA_HOME/lib/

export PATH=$PATH:$JAVA_HOME/bin

export PATH JAVA_HOME CLASSPATH

scp -r /etc/profile slave1:/etc/profile

scp -r /etc/profile slave2:/etc/profile

Apply the environment variables

source /etc/profile

java -version

15. ZooKeeper

mkdir /usr/zookeeper

tar -zxvf /opt/soft/zookeeper-3.4.10.tar.gz -C/usr/zookeeper/

cd /usr/zookeeper/zookeeper-3.4.10/conf

cp zoo_sample.cfg zoo.cfg

vi zoo.cfg

Change these two settings

dataDir=/usr/zookeeper/zookeeper-3.4.10/zkdata

dataLogDir=/usr/zookeeper/zookeeper-3.4.10/zkdatalog

Append the following at the end

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888

cd ..

mkdir zkdata

mkdir zkdatalog

cd zkdata

vi myid

1

scp -r /usr/zookeeper root@slave1:/usr/

scp -r /usr/zookeeper root@slave2:/usr/

On slave1 and slave2

cd /usr/zookeeper/zookeeper-3.4.10/zkdata

vi myid

On slave1, change it to 2

On slave2, change it to 3
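The number in myid has to match the N of that machine's server.N line in zoo.cfg, so it can be looked up rather than typed. A minimal sketch, assuming the host names in zoo.cfg are exactly what `hostname` prints; `myid_for` is a hypothetical helper.

```shell
# myid_for HOST CFG
# Print N from the line "server.N=HOST:..." in CFG; return 1 if HOST is not listed.
myid_for() {
    local host=$1 cfg=$2 id
    id=$(sed -n "s/^server\.\([0-9][0-9]*\)=${host}:.*/\1/p" "$cfg" | head -n 1)
    [ -n "$id" ] || return 1
    echo "$id"
}

# Usage on any of the three machines:
#   id=$(myid_for "$(hostname)" /usr/zookeeper/zookeeper-3.4.10/conf/zoo.cfg) &&
#       echo "$id" > /usr/zookeeper/zookeeper-3.4.10/zkdata/myid
```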

/usr/zookeeper/zookeeper-3.4.10/bin/zkServer.sh start

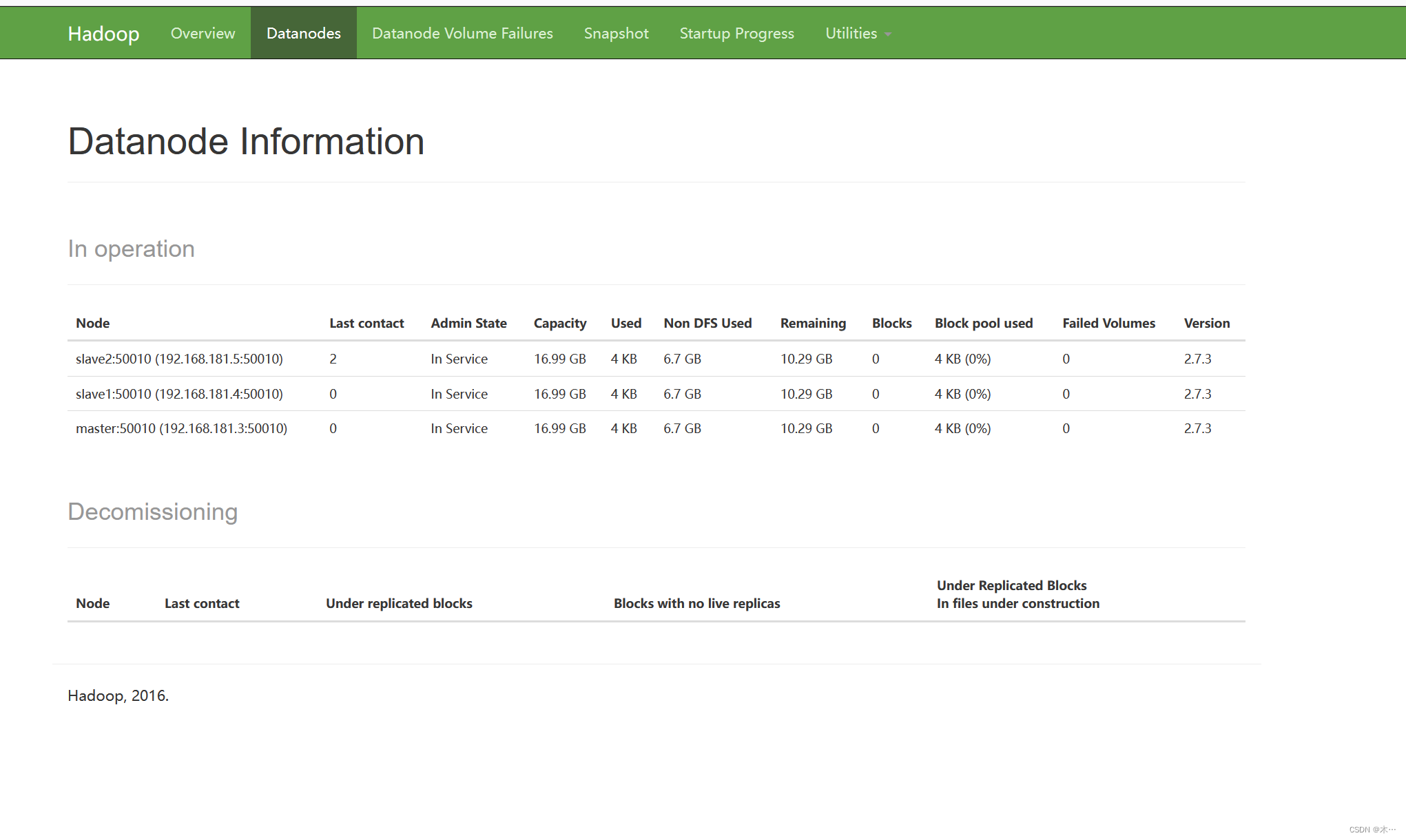

/usr/zookeeper/zookeeper-3.4.10/bin/zkServer.sh status

16. Hadoop

mkdir -p /usr/hadoop

tar -zxvf /opt/soft/hadoop-2.7.3.tar.gz -C /usr/hadoop/

vi /etc/profile

#HADOOP

export HADOOP_HOME=/usr/hadoop/hadoop-2.7.3

export CLASSPATH=$CLASSPATH:$HADOOP_HOME/lib

export PATH=$PATH:$HADOOP_HOME/bin

scp -r /etc/profile slave1:/etc/profile

scp -r /etc/profile slave2:/etc/profile

source /etc/profile

cd /usr/hadoop/hadoop-2.7.3/etc/hadoop/

vi hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_171

17. The four configuration files

vi core-site.xml

Between the two tags

<configuration>

</configuration>

add the following

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/hadoop/hadoop-2.7.3/hdfs/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>fs.checkpoint.period</name>

<value>60</value>

</property>

<property>

<name>fs.checkpoint.size</name>

<value>67108864</value>

</property>
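To make it clear where the properties sit, here is what the finished core-site.xml would look like as a whole, written out by a shell function. This is a sketch using only the values above; `write_core_site` is a made-up helper name, and the target directory is passed in so nothing is overwritten by accident.

```shell
# write_core_site CONF_DIR
# Write a complete core-site.xml into CONF_DIR using the values from step 17.
# (write_core_site is a hypothetical helper name.)
write_core_site() {
    local conf_dir=$1
    cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/hadoop/hadoop-2.7.3/hdfs/tmp</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>fs.checkpoint.period</name>
    <value>60</value>
  </property>
  <property>
    <name>fs.checkpoint.size</name>
    <value>67108864</value>
  </property>
</configuration>
EOF
}

# Usage:
#   write_core_site /usr/hadoop/hadoop-2.7.3/etc/hadoop
```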

vi yarn-site.xml

<property>

<name>yarn.resourcemanager.address</name>

<value>master:18040</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:18030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:18088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:18025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:18141</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

vi hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/hadoop/hadoop-2.7.3/hdfs/name</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/hadoop/hadoop-2.7.3/hdfs/data</value>

<final>true</final>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Edit the slaves file (this is where things went wrong last time)

vi slaves

slave1

slave2

vi master

master

scp -r /usr/hadoop root@slave1:/usr/

scp -r /usr/hadoop root@slave2:/usr/

hadoop namenode -format

The format is only correct if it exits with status 0

/usr/hadoop/hadoop-2.7.3/sbin/start-all.sh

Visit (master IP):50070

Check the status

jps

Five processes should be listed
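Counting lines by eye is easy to get wrong, so the jps output can be compared with a list of expected daemons. In this sketch `missing_daemons` is a hypothetical helper, and the daemon names in the usage line are my guess at the five entries on master (four daemons plus Jps itself); the notes do not list them.

```shell
# missing_daemons NAME...
# Read jps output ("PID Name" per line) on stdin and print every expected NAME
# that does not appear. The exit status is 0 only when nothing is missing.
missing_daemons() {
    local running name missing=0
    running=$(awk '{print $2}')
    for name in "$@"; do
        if ! printf '%s\n' "$running" | grep -Fxq -- "$name"; then
            echo "$name"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on master (the daemon list is an assumption):
#   jps | missing_daemons NameNode SecondaryNameNode ResourceManager QuorumPeerMain
```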

18. HBase

master

mkdir -p /usr/hbase

tar -zxvf /opt/soft/hbase-1.2.4-bin.tar.gz -C/usr/hbase/

cd /usr/hbase/hbase-1.2.4/conf/

vi /etc/profile

#hbase

export HBASE_HOME=/usr/hbase/hbase-1.2.4

export PATH=$PATH:$HBASE_HOME/bin

PATH=$PATH:$ZOOKEEPER_HOME/bin

scp -r /etc/profile slave1:/etc/profile

scp -r /etc/profile slave2:/etc/profile

source /etc/profile

vi hbase-site.xml

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master:2181,slave1:2181,slave2:2181</value>

</property>

<property>

<name>hbase.coprocessor.master.classes</name>

<value>org.apache.hadoop.hbase.security.access.AccessController</value>

</property>

<property>

<name>hbase.coprocessor.region.classes</name>

<value>org.apache.hadoop.hbase.security.token.TokenProvider,org.apache.hadoop.hbase.security.access.AccessController,org.apache.hadoop.hbase.security.access.SecureBulkLoadEndpoint</value>

</property>

vi hbase-env.sh

export HBASE_MANAGES_ZK=false

export JAVA_HOME=/usr/java/jdk1.8.0_171

export HBASE_CLASSPATH=/usr/hadoop/hadoop-2.7.3/etc/hadoop

vi regionservers

master

slave1

slave2

scp -r /usr/hbase/hbase-1.2.4 slave1:/usr/hbase/

scp -r /usr/hbase/hbase-1.2.4 slave2:/usr/hbase/

start-hbase.sh

19. Hive

mkdir -p /usr/hive

tar -zxvf /opt/soft/apache-hive-2.1.1-bin.tar.gz -C /usr/hive/

sudo vim /etc/profile

export HIVE_HOME=/usr/hive/apache-hive-2.1.1-bin

export PATH=$HIVE_HOME/bin:$PATH

scp -r /etc/profile slave1:/etc/profile

scp -r /etc/profile slave2:/etc/profile

cd /usr/hive/apache-hive-2.1.1-bin/conf/

cp hive-env.sh.template hive-env.sh

vim hive-env.sh

HADOOP_HOME=/usr/hadoop/hadoop-2.7.3
