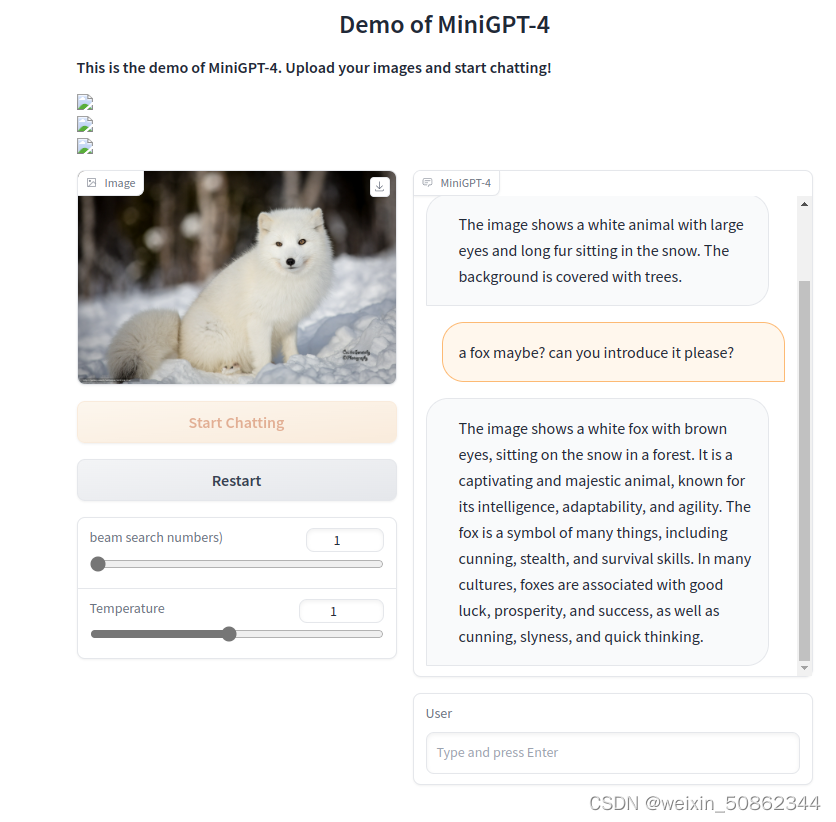

MiniGPT-4

https://github.com/vision-cair/minigpt-4

1 Environment setup

1.1 Install the environment

git lfs install

If this fails with "git: 'lfs' is not a git command. See 'git --help'.", install git-lfs and try again:

sudo apt-get install git-lfs

git lfs install
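Before cloning, you can check whether git-lfs is actually usable. Below is a minimal Python sketch that looks for the git-lfs executable and runs "git lfs version". The function name git_lfs_available is hypothetical and is not part of MiniGPT-4.

```python
import shutil
import subprocess


def git_lfs_available() -> bool:
    """Return True if 'git lfs' can be run on this machine (hypothetical helper)."""
    # git runs 'git lfs' by finding a 'git-lfs' executable, so both must be on PATH
    if shutil.which("git") is None or shutil.which("git-lfs") is None:
        return False
    # 'git lfs version' exits with status 0 when the extension works
    result = subprocess.run(["git", "lfs", "version"], capture_output=True, text=True)
    return result.returncode == 0


if __name__ == "__main__":
    if git_lfs_available():
        print("git-lfs found; run: git lfs install")
    else:
        print("git-lfs missing; try: sudo apt-get install git-lfs")
```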

1.2 Prepare the Vicuna weights

(1) Download Vicuna's delta weights

git lfs install

git clone https://huggingface.co/lmsys/vicuna-13b-delta-v0 # more powerful; needs at least 24 GB of GPU memory

# or

git clone https://huggingface.co/lmsys/vicuna-7b-delta-v0 # smaller; needs 12 GB of GPU memory
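If you're not sure which delta to clone, the GPU-memory figures above can be turned into a small helper. This is only a sketch. pick_vicuna_delta is a hypothetical name. The 24 GB / 12 GB thresholds are simply the ones quoted in the comments above, not measured limits.

```python
def pick_vicuna_delta(gpu_mem_gb: float) -> str:
    """Pick a Vicuna v0 delta repo from available GPU memory (hypothetical helper).

    Thresholds are the figures quoted in this guide: 13B needs at least 24 GB,
    7B needs 12 GB.
    """
    if gpu_mem_gb >= 24:
        return "https://huggingface.co/lmsys/vicuna-13b-delta-v0"
    if gpu_mem_gb >= 12:
        return "https://huggingface.co/lmsys/vicuna-7b-delta-v0"
    raise ValueError(f"{gpu_mem_gb} GB is below the 12 GB quoted for the 7B model")
```

For example, a 16 GB card gets the 7B delta, while a 24 GB card gets the 13B one.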

(2) Fill out the form to get the original LLaMA-7B or LLaMA-13B weights

You don't really have to go by the book here: pulling the weights straight from Hugging Face works fine.

(3) Install a compatible version of the FastChat library

pip install git+https://github.com/lm-sys/FastChat.git@v0.1.10
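The version pin matters because step (4) uses the apply_delta flags of this release. As far as I know, later FastChat releases renamed them (e.g. --base-model-path). Here is a quick sketch to check what is installed. It assumes the package is registered under the pip distribution name fschat. installed_version is a hypothetical helper.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Assumption: FastChat is installed under the distribution name "fschat"
    version = installed_version("fschat")
    if version != "0.1.10":
        print(f"Expected FastChat 0.1.10, found {version!r}; reinstall the pinned version.")
    else:
        print("FastChat 0.1.10 is installed.")
```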

(4) Create the final weights

python -m fastchat.model.apply_delta --base /path/to/llama-13bOR7b-hf/ --target /path/to/save/working/vicuna/weight/ --delta /path/to/vicuna-13bOR7b-delta-v0/
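Conceptually, the delta weights are differences from the original LLaMA weights, so merging them is an element-wise addition per parameter. The toy sketch below illustrates that idea on plain Python lists. It is not FastChat's code, which works on full model tensors. It also shows why a 13B delta has to be paired with a 13B base: parameter names and shapes must match. The function and the toy values are hypothetical.

```python
from typing import Dict, List

# Toy "state dict": parameter name -> flat list of weights (hypothetical values)
StateDict = Dict[str, List[float]]


def apply_delta(base: StateDict, delta: StateDict) -> StateDict:
    """Return target weights where each parameter is base + delta, element-wise."""
    target: StateDict = {}
    for name, delta_values in delta.items():
        if name not in base:
            raise KeyError(f"parameter {name!r} missing from base model")
        base_values = base[name]
        if len(base_values) != len(delta_values):
            # e.g. a 7B base paired with a 13B delta
            raise ValueError(f"shape mismatch for {name!r}")
        target[name] = [b + d for b, d in zip(base_values, delta_values)]
    return target
```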

Then set llama_model in minigpt4/configs/models/minigpt4.yaml to the path of the merged weights (the --target directory above).
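If you prefer to script the config change, a plain text edit leaves the rest of the YAML (comments, ordering) untouched. This is a minimal sketch. set_yaml_scalar is a hypothetical helper, and it assumes the llama_model key appears exactly once in the file.

```python
import re


def set_yaml_scalar(yaml_text: str, key: str, value: str) -> str:
    """Replace the value on the single 'key: ...' line, keeping its indentation.

    Only that one line changes; anything else in the file is left as-is.
    Any trailing comment on the edited line is dropped.
    """
    # [ \t]* (not \s*) so the match never runs across line breaks
    pattern = re.compile(rf"^([ \t]*{re.escape(key)}:[ \t]*).*$", re.MULTILINE)
    # A function replacement avoids backslash escapes in Windows-style paths
    new_text, count = pattern.subn(lambda m: f'{m.group(1)}"{value}"', yaml_text)
    if count != 1:
        raise ValueError(f"expected exactly one '{key}:' line, found {count}")
    return new_text
```

Usage, for example: path = Path("minigpt4/configs/models/minigpt4.yaml"), then path.write_text(set_yaml_scalar(path.read_text(), "llama_model", "/path/to/save/working/vicuna/weight/")).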

If you get this error:

Tokenizer class LLaMATokenizer does not exist or is not currently imported.

follow issue #59: in llama-13b-hf/tokenizer_config.json, change "tokenizer_class": "LLaMATokenizer" to "tokenizer_class": "LlamaTokenizer".
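Here is the same fix as a small script, useful if you have more than one model directory to patch. It assumes tokenizer_config.json is a plain JSON file at the top of the model directory. fix_tokenizer_class and fix_tokenizer.py are hypothetical names.

```python
import json
import sys
from pathlib import Path


def fix_tokenizer_class(model_dir: str) -> bool:
    """Rename LLaMATokenizer to LlamaTokenizer in tokenizer_config.json.

    Returns True if the file was changed, False if there was nothing to fix.
    """
    config_path = Path(model_dir) / "tokenizer_config.json"
    config = json.loads(config_path.read_text(encoding="utf-8"))
    if config.get("tokenizer_class") != "LLaMATokenizer":
        return False  # already fixed, or a different tokenizer class
    config["tokenizer_class"] = "LlamaTokenizer"
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return True


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python fix_tokenizer.py /path/to/llama-13b-hf")
    else:
        changed = fix_tokenizer_class(sys.argv[1])
        print("updated" if changed else "nothing to change")
```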

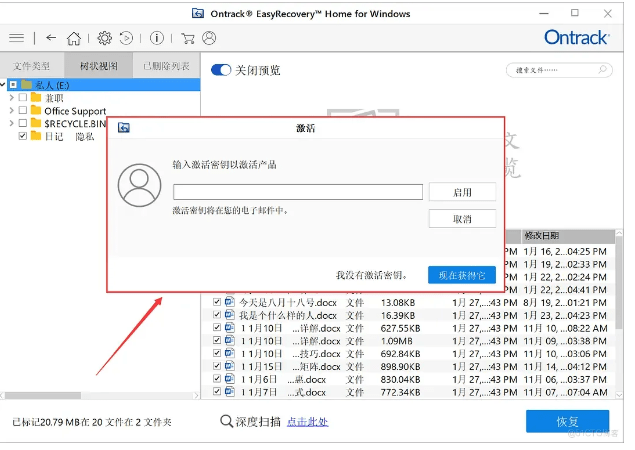

1.3 Download the pretrained checkpoint

Baidu Netdisk: Vicuna 7B:

Google Drive: Vicuna 13B:

https://drive.google.com/file/d/1a4zLvaiDBr-36pasffmgpvH5P7CKmpze/view

Vicuna 7B:

https://drive.google.com/file/d/1RY9jV0dyqLX-o38LrumkKRh6Jtaop58R/view

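Then set the checkpoint path in the eval config (eval_configs/minigpt4_eval.yaml) to where you saved the downloaded file.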
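A quick way to catch a config you forgot to edit is to search it for leftover placeholder paths. This sketch assumes the unedited values start with /path/to/, which is how the placeholders in this guide look. find_placeholders is a hypothetical helper.

```python
from pathlib import Path
from typing import List, Tuple

# Assumption: unedited config values still contain this placeholder prefix
PLACEHOLDER = "/path/to/"


def find_placeholders(config_paths: List[str]) -> List[Tuple[str, int, str]]:
    """Return (file, line number, stripped line) for every line still holding a placeholder."""
    hits = []
    for path in config_paths:
        lines = Path(path).read_text(encoding="utf-8").splitlines()
        for lineno, line in enumerate(lines, start=1):
            if PLACEHOLDER in line:
                hits.append((path, lineno, line.strip()))
    return hits
```

For example, find_placeholders(["eval_configs/minigpt4_eval.yaml", "minigpt4/configs/models/minigpt4.yaml"]) should return an empty list once both files point at real paths.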

2. Try it out

python demo.py --cfg-path eval_configs/minigpt4_eval.yaml --gpu-id 0

3. Fine-tuning

If you're GPU-poor, forget about pretraining; and even fine-tuning needs at least 16 GB of GPU memory.

Settings used in train_configs/minigpt4_stage2_finetune.yaml:

weight_decay: 0.05
max_epoch: 5
iters_per_epoch: 20
batch_size_train: 1 # originally 12
batch_size_eval: 1 # originally 12
num_workers: 2
warmup_steps: 200
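Some quick arithmetic on these settings: 5 epochs x 20 iterations is only 100 iterations in total, fewer than warmup_steps: 200. The sketch below computes that. It assumes batch_size_train is per GPU and that warmup_steps counts iterations. How the scheduler actually behaves when warmup is longer than the run depends on its implementation, so check it before relying on this. plan_training and TrainPlan are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TrainPlan:
    total_iters: int          # optimizer iterations over the whole run
    samples_seen: int         # training samples consumed across all GPUs
    warmup_exceeds_run: bool  # True if warmup_steps > total_iters


def plan_training(max_epoch: int, iters_per_epoch: int, batch_size_train: int,
                  num_gpus: int, warmup_steps: int) -> TrainPlan:
    total_iters = max_epoch * iters_per_epoch
    # Assumption: batch_size_train is per GPU, so each iteration consumes
    # batch_size_train * num_gpus samples
    samples_seen = total_iters * batch_size_train * num_gpus
    # Assumption: warmup_steps is counted in the same iterations
    return TrainPlan(total_iters, samples_seen, warmup_steps > total_iters)
```

With the values above on one GPU, plan_training(5, 20, 1, 1, 200) gives 100 iterations and 100 samples, with warmup longer than the run. With the original batch size of 12 it would be 1200 samples.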

Update two paths:

(1) In train_configs/minigpt4_stage2_finetune.yaml, point the checkpoint path to the stage-1 training checkpoint.

(2) In minigpt4/configs/datasets/cc_sbu/align.yaml, set the path to your dataset.

The official repository provides both the training set and the stage-1 pretrained model.

The training set format looks like this:

{"annotations": [{"image_id": "2", "caption": "The image shows a man fishing on a lawn next to a river with a bridge in the background. Trees can be seen on the other side of the river, and the sky is cloudy."}, ...]}
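Here is a sketch of reading this format and pairing each caption with its image. The loader is hypothetical. The <image_id>.jpg naming inside an image directory is an assumption, so adjust it to match how your copy of the dataset is laid out.

```python
import json
import os
from typing import List, Tuple


def load_align_annotations(ann_path: str, image_dir: str) -> List[Tuple[str, str]]:
    """Return (image_path, caption) pairs from an annotations file in the format above.

    Assumption: each image is stored as <image_dir>/<image_id>.jpg.
    """
    with open(ann_path, encoding="utf-8") as f:
        data = json.load(f)
    pairs = []
    for ann in data["annotations"]:
        image_path = os.path.join(image_dir, f"{ann['image_id']}.jpg")
        if not os.path.isfile(image_path):
            raise FileNotFoundError(image_path)  # caption without a matching image
        pairs.append((image_path, ann["caption"]))
    return pairs
```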

Corresponding image:

Then start fine-tuning, replacing NUM_GPU with the number of GPUs you have:

torchrun --nproc-per-node NUM_GPU train.py --cfg-path train_configs/minigpt4_stage2_finetune.yaml
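If you'd rather not fill in NUM_GPU by hand, you can build the command from the GPU count reported by "nvidia-smi -L". count_gpus and torchrun_command are hypothetical helpers. Note that nvidia-smi lists every GPU on the machine regardless of CUDA_VISIBLE_DEVICES.

```python
import shlex
import subprocess
from typing import List


def count_gpus() -> int:
    """Count GPUs listed by 'nvidia-smi -L'; return 0 if it is unavailable."""
    try:
        result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    except FileNotFoundError:
        return 0  # nvidia-smi not installed
    if result.returncode != 0:
        return 0
    # Each GPU appears on its own line starting with "GPU <index>:"
    return sum(1 for line in result.stdout.splitlines() if line.startswith("GPU "))


def torchrun_command(num_gpus: int,
                     cfg_path: str = "train_configs/minigpt4_stage2_finetune.yaml") -> List[str]:
    """Build the fine-tuning command from this guide with NUM_GPU filled in."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return ["torchrun", "--nproc-per-node", str(num_gpus),
            "train.py", "--cfg-path", cfg_path]


if __name__ == "__main__":
    n = count_gpus()
    if n == 0:
        print("No GPU found via nvidia-smi; set NUM_GPU by hand.")
    else:
        print(shlex.join(torchrun_command(n)))
```

The list form can also be passed directly to subprocess.run(cmd, check=True) instead of printing it.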