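About the K8S cluster we are going to build:

- Docker version: docker-ce-19.03.9;

- K8S version: 1.20.2;

- Three nodes: master, node1, node2 (static IPs);

- Container runtime: still Docker rather than containerd;

- Pod network: Calico instead of Flannel for Pod-to-Pod connectivity, which supports larger clusters;

- Cluster bootstrap tool: kubeadm (not much to say about this one);

About the network configuration:

- All machines use NAT networking;

- Each virtual machine uses a static IP address;

- The VMware NAT gateway shared by all virtual machines: 192.168.32.2;

The IP addresses are assigned as follows: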

| Hostname | Hardware | IP |
|---|---|---|
| master | 4 CPU cores / 4 GB RAM | 192.168.32.200 |
| node1 | 4 CPU cores / 4 GB RAM | 192.168.32.201 |
| node2 | 4 CPU cores / 4 GB RAM | 192.168.32.202 |

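① Install the CentOS image

First, download the CentOS-7-x86_64-Minimal ISO from a mirror site, i.e. the smallest image;

Then install it in VMware; this machine will serve as master;

The installation itself is straightforward, so it is not covered in detail here;

Only the VM configuration is listed:

- 1 processor with 4 cores

- 4 GB memory

- 40 GB SCSI disk

- Network: NAT

Partitions:

- /boot: 256M

- swap: 2G

- /: the rest

During the CentOS installation you may skip creating a user, but you must set a root password;

The one I set here is:

123456;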

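② Configure the network

Here we give the virtual machine a static IP address rather than letting DHCP assign one dynamically.

First edit /etc/sysconfig/network:

$ vi /etc/sysconfig/network

# Add the following settings

+ NETWORKING=yes

+ HOSTNAME=master

On CentOS 7 the persistent hostname is read from /etc/hostname, so also set it with hostnamectl:

$ hostnamectl set-hostname master

Then edit /etc/sysconfig/network-scripts/ifcfg-ens33: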

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

-BOOTPROTO=dhcp

+BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

-UUID=XXXX-XXXX-XXXX

-ONBOOT=no

+ONBOOT=yes

+IPADDR=192.168.32.200

+NETMASK=255.255.255.0

+GATEWAY=192.168.32.2

Configure hosts:

$ vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

+ 192.168.32.200 master

+ 192.168.32.201 node1

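+ 192.168.32.202 node2

After the configuration is complete, reboot;

After the reboot, log in and ping sites such as Baidu or QQ; a successful reply means the network configuration works: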

ping www.qq.com

PING ins-r23tsuuf.ias.tencent-cloud.net (221.198.70.47) 56(84) bytes of data.

64 bytes from www47.asd.tj.cn (221.198.70.47): icmp_seq=1 ttl=128 time=61.0 ms

64 bytes from www47.asd.tj.cn (221.198.70.47): icmp_seq=2 ttl=128 time=61.0 ms

64 bytes from www47.asd.tj.cn (221.198.70.47): icmp_seq=3 ttl=128 time=61.2 ms
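
The three /etc/hosts entries above are needed on every machine, so it can be convenient to add them with a small script that is safe to run more than once. The following is only a minimal sketch and not part of the original setup: the `add_host` helper and the `HOSTS_FILE` variable are names made up for illustration, and the file defaults to a scratch file so nothing under /etc is touched unless you point it there as root.

```shell
#!/usr/bin/env bash
# Append cluster host entries to a hosts file, skipping names that already exist.
# HOSTS_FILE (hypothetical variable) defaults to a scratch file; set it to
# /etc/hosts and run as root to apply the entries for real.
set -euo pipefail

HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
  local ip="$1" name="$2"
  # -w matches the hostname as a whole word, so "node1" does not match "node10".
  if ! grep -qw -- "$name" "$HOSTS_FILE"; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}

add_host 192.168.32.200 master
add_host 192.168.32.201 node1
add_host 192.168.32.202 node2

cat "$HOSTS_FILE"
```

Running the script a second time against the same file should leave it unchanged, because each name is checked before it is appended.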

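③ System configuration

System configuration mainly consists of stopping the firewall, disabling SELinux and swap, and configuring the yum repositories;

Ⅰ. Stop the firewall and disable SELinux

Stop the firewall. CentOS 7 uses firewalld by default; the iptables commands only apply if the iptables service is installed:

$ systemctl stop firewalld

$ systemctl disable firewalld

$ service iptables stop

$ systemctl disable iptables

Disable SELinux:

# Check the SELinux mode

$ getenforce

Enforcing

# Disable it (takes effect after a reboot; `setenforce 0` switches to permissive mode immediately)

$ vim /etc/selinux/config

# Change to: disabled

SELINUX=disabled

SSH login configuration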

$ vim /etc/ssh/sshd_config

# Change the following

UseDNS no

# Allow root login

PermitRootLogin yes

# Do not allow login with an empty password

PermitEmptyPasswords no

# Allow password authentication

PasswordAuthentication yes

Disable swap

[root@master ~]# swapoff -a

[root@master ~]# sed -i '/swap/ s/^/# /' /etc/fstab

[root@master ~]# free -m

total used free shared buff/cache available

Mem: 3770 1265 1304 12 1200 2267

Swap: 0 0 0

Configure bridged traffic

[root@master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

# Apply the settings

[root@master ~]# sysctl --system

Configure the yum repositories

# Use the Aliyun mirror

# Back up

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

# Configure

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

# Build the cache

yum makecache

# Install the EPEL repository

yum -y install epel-release

yum -y update

④ Download and configure software

Ⅰ. Time synchronization with ntp

Install ntp:

yum install -y ntp

# Start the service

$ service ntpd start

# Start at boot

$ systemctl enable ntpd

Ⅱ. Install Docker

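Install the required system tools:

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the repository:

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Point the download source at the Aliyun mirror:

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Refresh the cache

yum makecache fast

List the installable versions:

yum list docker-ce --showduplicates | sort -r

Install the chosen version:

yum -y install docker-ce-19.03.9

Enable Docker at boot and start it:

systemctl enable docker

systemctl start docker

Configure registry mirrors: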

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors" : [

"http://hub-mirror.c.163.com",

"http://registry.docker-cn.com",

"http://docker.mirrors.ustc.edu.cn"

]

}

EOF

Restart Docker for the change to take effect:

[root@master ~]# systemctl restart docker

[root@master ~]# docker info | grep 'Server Version'

Server Version: 19.03.9

All of the steps above must be carried out on all three machines.
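
Because these steps are repeated on every machine, it may help to have a variant of the earlier swap edit to /etc/fstab that can be re-run without stacking extra `#` characters. The sketch below is an illustration under assumptions, not the original procedure: `disable_swap_entries` and the `FSTAB` variable are hypothetical names, GNU sed is assumed, and the sample fstab content is invented so the script can be tried on a scratch file.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file without touching
# lines that are already commented. FSTAB (hypothetical variable) defaults to
# a scratch file filled with sample content, so nothing real is modified.
set -euo pipefail

FSTAB="${FSTAB:-$(mktemp)}"
if [ ! -s "$FSTAB" ]; then
  cat > "$FSTAB" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
fi

disable_swap_entries() {
  # Only lines that do not start with '#' and contain "swap" as a field change.
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$1"
}

disable_swap_entries "$FSTAB"
disable_swap_entries "$FSTAB"   # the second run finds nothing left to change
cat "$FSTAB"
```

On a real host this would be run as root with `FSTAB=/etc/fstab`, together with `swapoff -a` for the running system.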

Ⅲ. Install kubeadm, kubelet and kubectl

Because kubeadm's dependencies already include kubectl and kubelet, kubectl does not need to be installed separately;

Configure the repository:

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

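EOF

Install kubeadm. Its kubelet and kubectl dependencies are otherwise resolved to the newest available versions, so all three are pinned to the same version:

yum install -y kubelet-1.20.2 kubeadm-1.20.2 kubectl-1.20.2

Enable kubelet at boot:

systemctl enable kubelet

At this point all configuration is done and all software is installed;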

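Create a snapshot and clone master

Choose VM → Snapshot → Take Snapshot to capture the current state of the virtual machine;

When the snapshot is done, select it, click Clone, choose the existing snapshot as the source, choose a full clone, then change the name and finish;

Modify the clones and test connectivity

Clone two virtual machines from the snapshot and name them node1 and node2;

Adjust the configuration on each clone, taking node1 as the example: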

$ vi /etc/sysconfig/network

NETWORKING=yes

- HOSTNAME=master

+ HOSTNAME=node1

$ vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

- IPADDR=192.168.32.200

+ IPADDR=192.168.32.201

NETMASK=255.255.255.0

GATEWAY=192.168.32.2

NAME=ens33

DEVICE=ens33

ONBOOT=yes
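
Since the clones differ from master only in hostname and IP address, the IPADDR edit above can also be scripted. The following is a rough sketch under assumptions: `set_static_ip` is a made-up helper name, GNU sed is assumed, and the demo works on a scratch file rather than on the real ifcfg-ens33.

```shell
#!/usr/bin/env bash
# Rewrite IPADDR in an ifcfg-style file for a cloned VM.
# set_static_ip is an illustrative helper; on a real clone the target would be
# /etc/sysconfig/network-scripts/ifcfg-ens33 (run as root).
set -euo pipefail

set_static_ip() {
  local file="$1" ip="$2"
  if grep -q '^IPADDR=' "$file"; then
    # Replace the existing address in place.
    sed -i "s/^IPADDR=.*/IPADDR=${ip}/" "$file"
  else
    # No address configured yet: append one.
    printf 'IPADDR=%s\n' "$ip" >> "$file"
  fi
}

# Demo on a scratch copy holding the master's values.
ifcfg="$(mktemp)"
printf '%s\n' 'BOOTPROTO=static' 'IPADDR=192.168.32.200' \
  'NETMASK=255.255.255.0' 'GATEWAY=192.168.32.2' > "$ifcfg"

set_static_ip "$ifcfg" 192.168.32.201   # node1; node2 would use .202
cat "$ifcfg"
```

On the clone itself this would typically be followed by `hostnamectl set-hostname node1` and a reboot so that both the new hostname and the new address are picked up.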

The node2 virtual machine is handled the same way. Finally, test connectivity, for example by pinging the other nodes from master:

[root@master ~]# ping node1

PING node1 (192.168.32.201) 56(84) bytes of data.

64 bytes from node1 (192.168.32.201): icmp_seq=1 ttl=64 time=0.183 ms

64 bytes from node1 (192.168.32.201): icmp_seq=2 ttl=64 time=0.192 ms

64 bytes from node1 (192.168.32.201): icmp_seq=3 ttl=64 time=0.175 ms

^C

--- node1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.175/0.183/0.192/0.013 ms

[root@master ~]# ping node2

PING node2 (192.168.32.202) 56(84) bytes of data.

64 bytes from node2 (192.168.32.202): icmp_seq=1 ttl=64 time=0.274 ms

64 bytes from node2 (192.168.32.202): icmp_seq=2 ttl=64 time=0.235 ms

64 bytes from node2 (192.168.32.202): icmp_seq=3 ttl=64 time=0.199 ms

^C

--- node2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.199/0.236/0.274/0.030 ms

Create the Kubernetes cluster

Initialize the master node

Run on the master node:

[root@master ~]# kubeadm init \

--apiserver-advertise-address=192.168.32.200 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.20.2 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

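--ignore-preflight-errors=all

Here you may see the "Initial timeout of 40s passed" message or a failed deployment. This is related to the Docker configuration (the cgroup driver); see these references:

Docker driver issue: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". - 简书

The HTTP call equal to ‘curl -sSL http://localhost:10248/healthz‘ failed with error: Get “http://loc_the http call equl_king config的博客-CSDN博客

After making the change, it is best to reboot.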
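
The change those references point at is usually switching Docker to the systemd cgroup driver. As a hedged sketch, assuming that is the fix needed here, the daemon.json from the Docker step could be extended with the `exec-opts` entry shown below. `DAEMON_JSON` is a hypothetical variable that defaults to a scratch file, so the real /etc/docker/daemon.json is not touched by the example.

```shell
#!/usr/bin/env bash
# Write a Docker daemon.json that selects the systemd cgroup driver and keeps
# the registry mirrors configured earlier.
# DAEMON_JSON (hypothetical variable) defaults to a scratch file. On a real
# host the path is /etc/docker/daemon.json, followed by
# `systemctl restart docker` as root.
set -euo pipefail

DAEMON_JSON="${DAEMON_JSON:-$(mktemp)}"

cat > "$DAEMON_JSON" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "http://hub-mirror.c.163.com",
    "http://registry.docker-cn.com",
    "http://docker.mirrors.ustc.edu.cn"
  ]
}
EOF

cat "$DAEMON_JSON"
```

After restarting Docker, `docker info | grep -i 'cgroup driver'` can be used to confirm which driver is active. If `kubeadm init` had already been attempted, `kubeadm reset` before retrying is a common way to clear the partial state.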

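After a while the initialization finishes. Following the printed instructions, copy the credentials file:

# Copy the kubeconfig that kubectl uses to connect to the cluster to its default path

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

The output also contains a join command with a bootstrap token, similar to: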

kubeadm join 192.168.32.200:6443 --token w2mfe2.3pwfhv6nm9yueb4d \

--discovery-token-ca-cert-hash sha256:88b9219498210b9ac2f394e32b06a21ae58af887ff6566fa53f30fc9a9dd1ef3 --v=6

This is the command used later to join the worker nodes to the master, so note it down;
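
When the join command is copied by hand, a truncated token or hash is an easy mistake to make. The sketch below checks the two values against their documented shapes before they are used; the helper names `is_valid_token` and `is_valid_ca_hash` are invented for this example, and the values are the sample ones printed above.

```shell
#!/usr/bin/env bash
# Sanity-check a saved join token and CA hash before running kubeadm join.
set -euo pipefail

is_valid_token() {
  # kubeadm bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

is_valid_ca_hash() {
  # The discovery hash is "sha256:" followed by 64 hex digits.
  [[ "$1" =~ ^sha256:[0-9a-f]{64}$ ]]
}

token='w2mfe2.3pwfhv6nm9yueb4d'
ca_hash='sha256:88b9219498210b9ac2f394e32b06a21ae58af887ff6566fa53f30fc9a9dd1ef3'

if is_valid_token "$token" && is_valid_ca_hash "$ca_hash"; then
  # Only print the command here; it is meant to be run on the worker nodes.
  echo "kubeadm join 192.168.32.200:6443 --token $token --discovery-token-ca-cert-hash $ca_hash"
else
  echo "token or hash looks malformed" >&2
fi
```

This only validates the format. Whether the token is still accepted by the API server depends on its expiry.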

Now check the status of the master node:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 2m15s v1.20.2

At this point the master node is in the NotReady state;

This is because no CNI (Container Network Interface) plugin has been installed for Kubernetes yet;

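Install the Calico plugin

Download the Calico manifest with wget:

wget https://docs.projectcalico.org/manifests/calico.yaml

If the download fails, look for a copy via a search engine, or open the URL in a browser, paste the content into a new file and name it calico.yaml.

Set the Pod network (CALICO_IPV4POOL_CIDR) to the same value passed to kubeadm init via --pod-network-cidr;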

#

vim calico.yaml

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

-# - name: CALICO_IPV4POOL_CIDR

-# value: "10.244.0.0/16"

+ - name: CALICO_IPV4POOL_CIDR

+ value: "10.244.0.0/16"

# Disable file logging so `kubectl logs` works.

Finally, start the service from the manifest:

kubectl apply -f calico.yaml

After a while, check the Pod status:

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6d7b4db76c-pkdfp 1/1 Running 1 18h

calico-node-5vmrs 1/1 Running 2 18h

calico-node-95x84 1/1 Running 1 18h

calico-node-tpx7f 1/1 Running 2 18h

coredns-7f89b7bc75-lr8ch 1/1 Running 1 18h

coredns-7f89b7bc75-z5j77 1/1 Running 1 18h

etcd-master 1/1 Running 2 18h

kube-apiserver-master 1/1 Running 2 18h

kube-controller-manager-master 1/1 Running 2 18h

kube-proxy-5wtj8 1/1 Running 2 18h

kube-proxy-b7h4t 1/1 Running 2 18h

kube-proxy-kxhrs 1/1 Running 2 18h

kube-scheduler-master 1/1 Running 2 18h

Also check the node status:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

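master Ready control-plane,master 19h v1.20.2

Apart from `kubeadm init` and applying the Calico manifest, which run on master only, the host-level changes above must also be made on node1 and node2.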
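
The CALICO_IPV4POOL_CIDR edit made in vim earlier can also be done non-interactively, which is handy if the manifest has to be downloaded again. This is a sketch under assumptions rather than the original method: it assumes GNU sed and the two-line commented layout found in the upstream manifest, `set_calico_pool_cidr` is a made-up helper name, and the commented default of 192.168.0.0/16 in the demo excerpt is assumed from upstream rather than taken from this article.

```shell
#!/usr/bin/env bash
# Uncomment CALICO_IPV4POOL_CIDR in a Calico manifest and set it to the Pod CIDR.
# Assumes GNU sed and that the value line directly follows the name line.
set -euo pipefail

POD_CIDR="${POD_CIDR:-10.244.0.0/16}"

set_calico_pool_cidr() {
  local manifest="$1" cidr="$2"
  # On the commented name line: drop the "# ", move to the next line (n),
  # then uncomment the value line and substitute the CIDR.
  sed -i -e '/^ *# - name: CALICO_IPV4POOL_CIDR$/{
    s/# - name:/- name:/
    n
    s|#   value: ".*"|  value: "'"$cidr"'"|
  }' "$manifest"
}

# Demo on a scratch excerpt; on master the argument would be calico.yaml.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
EOF

set_calico_pool_cidr "$manifest" "$POD_CIDR"
cat "$manifest"
```

Removing exactly the `# ` prefix keeps the two lines aligned with the neighbouring `- name:` / `value:` entries, which matters because YAML indentation is significant.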

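Join the worker nodes to the master

Run the join command saved earlier on node1 and node2:

kubeadm join 192.168.32.200:6443 --token w2mfe2.3pwfhv6nm9yueb4d \

--discovery-token-ca-cert-hash sha256:88b9219498210b9ac2f394e32b06a21ae58af887ff6566fa53f30fc9a9dd1ef3 --v=6

The cluster is now created!

Note: a token is valid for 24 hours by default and can no longer be used once it expires;

In that case a new token has to be created, as follows:

kubeadm token create --print-join-command

This command generates a new token together with the full join command;

If problems occur here, this reference may help: error execution phase preflight: couldn‘t validate the identity of the API Server: abort connecting_彭宇栋的博客-CSDN博客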

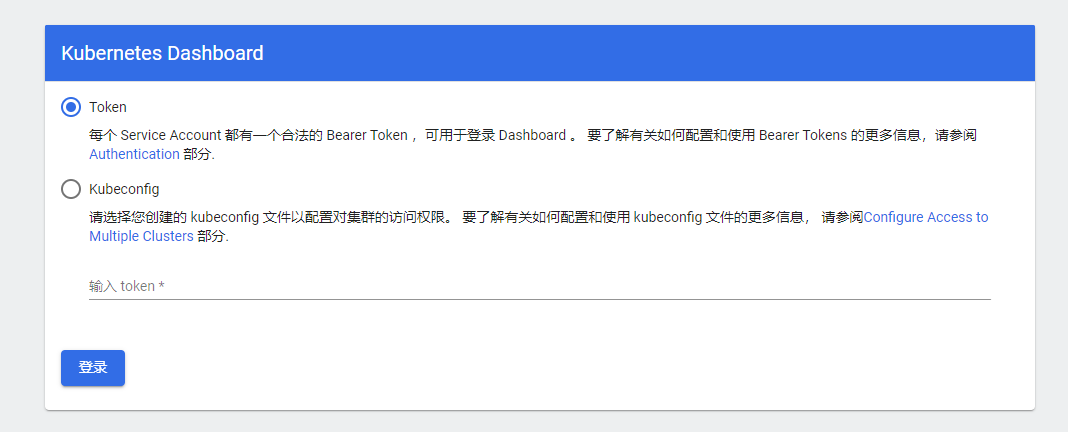

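Deploy the web UI (Dashboard)

① Download and deploy

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.1.0/aio/deploy/recommended.yaml -O dashboard.yaml

This URL may not be reachable from every network and may require a proxy; copies of the manifest are also easy to find online.

By default the Dashboard is reachable only from inside the cluster, so the Service has to be changed to type NodePort to expose it externally;

vi dashboard.yaml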

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

+ type: NodePort

  ports:

    - port: 443

      targetPort: 8443

+     nodePort: 30001

  selector:

    k8s-app: kubernetes-dashboard

Then apply the manifest:

kubectl apply -f dashboard.yaml

After the service is deployed, check it:

[root@master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-79c5968bdc-ldvd7 1/1 Running 1 19h

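kubernetes-dashboard-7448ffc97b-gpsv5 1/1 Running 1 19h

Open it in a browser (use Firefox rather than Chrome or IE; this pitfall cost me a lot of time):

- https://192.168.32.200:30001/

When the login page appears, the Dashboard has been deployed successfully;

② Create a user and role

Next, on the master node, create a service account and bind it to the built-in cluster-admin cluster role;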

Create the user:

[root@master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

Grant permissions to the user:

[root@master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

Get the user's token:

[root@master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-bbsrb

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 9a01a52d-04a5-4ea6-b4f8-afdc22b1b9c6

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Inpvc2Y0dmREN3p1SU5GWUhuWWVNek92NDJzX2JFQm94N09Dd1Nwa1lWUnMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tYmJzcmIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiOWEwMWE1MmQtMDRhNS00ZWE2LWI0ZjgtYWZkYzIyYjFiOWM2Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.oAN9GWZlj6_HKdG_2KOLzjfysXpVBl6lcfarQThZYs-TaEtVzOfKqvAPe4e7yE93uunV-4ddr1fdyGDV3iwPPwpGF9B65IDn6XlM268agEwb2efNjlbwYku4NZt8RCgH_tf-IdvuwEiuYolaGvfYLGw1sQ6-Hphi4kw-G9KZgCAUYwcqhijGSwcZwP7GwMEsthqXLJE84mUHpqRj6QZoRV_vx3G54PyIplLrp04gkuLZArqcxxkY7Y9gibafbhKKbNbxY1v32lYIzG1VjwHb3vmLx_FABEilztYtU1alXfgtdvuiGBpfuzgXgOCgLyElRqUK04dWRCSIRHM3Ai9aRg

Use the token obtained above to log in to the Dashboard;

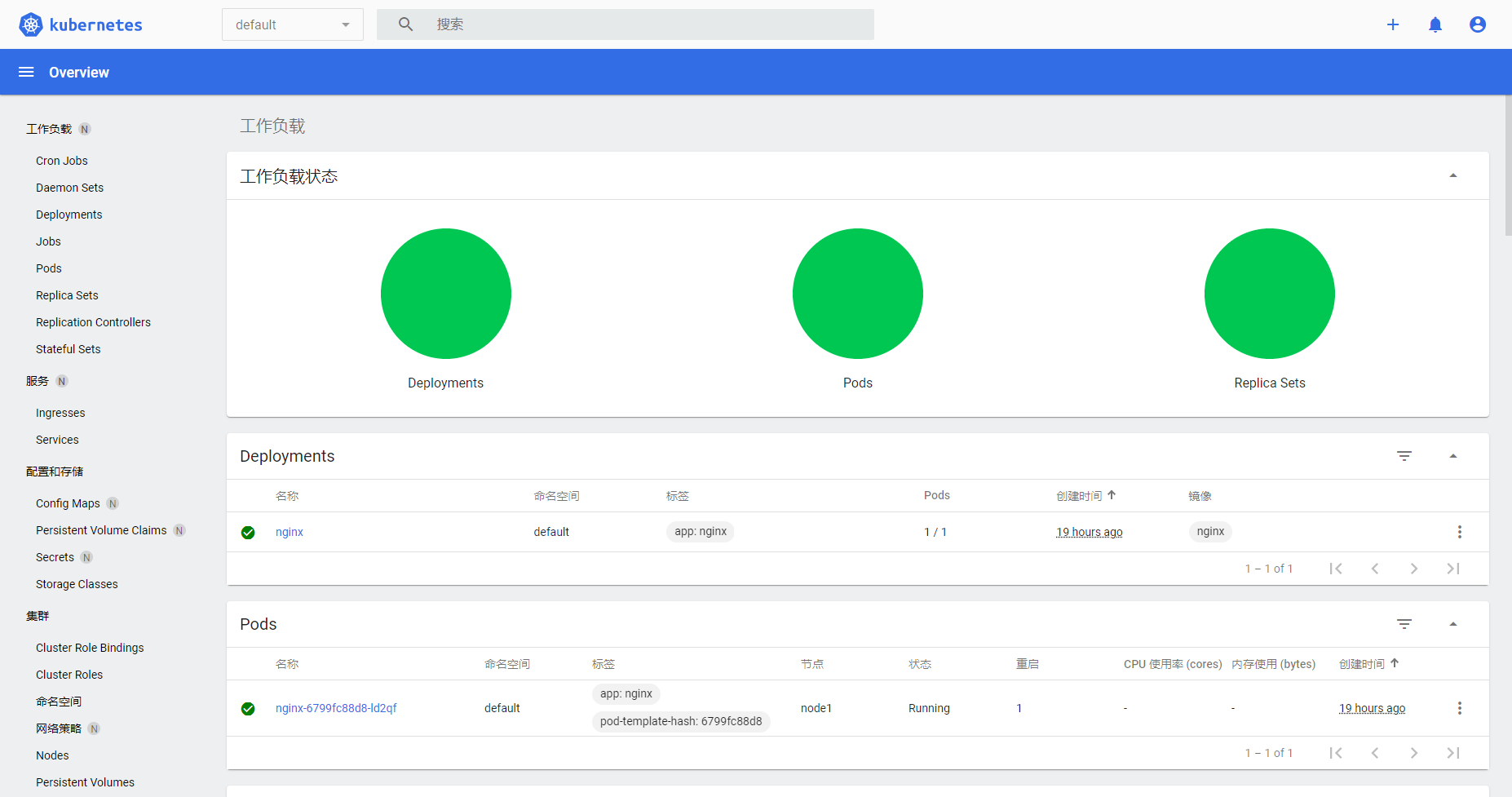

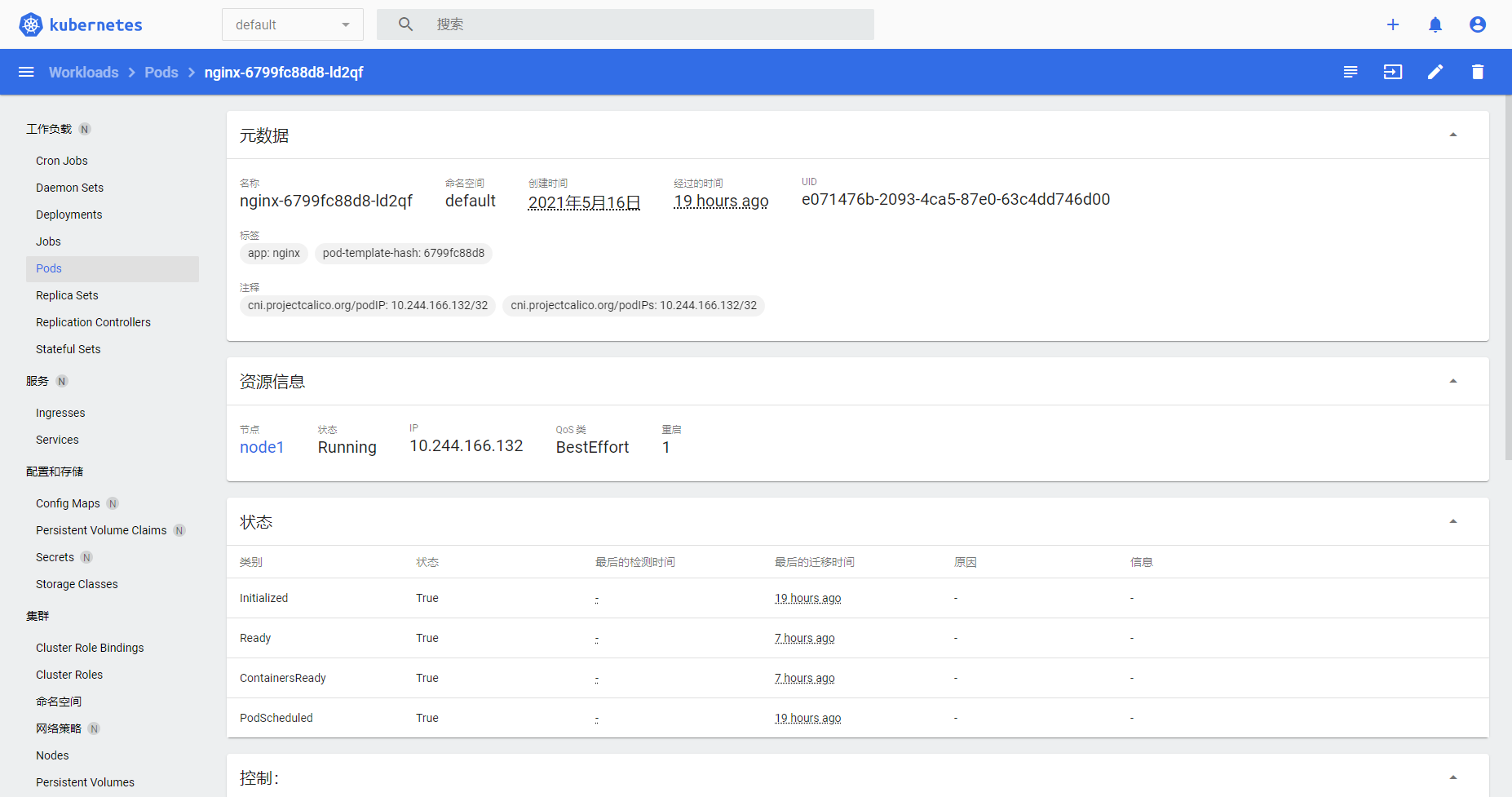

The page after logging in

Test the Kubernetes cluster

Create a Deployment:

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

Expose the Nginx service:

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

Check the Pod and Service status:

[root@master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-ld2qf 1/1 Running 1 19h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19h

service/nginx NodePort 10.98.182.12 <none> 80:32182/TCP 19h

Access Nginx from master:

[root@master ~]# curl 10.98.182.12

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

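</html>

Success!

The Nginx service is also visible in the Dashboard:

At this point our K8S cluster has been installed successfully.

I read many tutorials and kept searching Google and Baidu to solve problems before the cluster finally came up. Thanks to the authors of the following tutorials.

References:

在VMWare中部署你的K8S集群 - 张小凯的博客

Kubernetes(一) 跟着官方文档从零搭建K8S - 掘金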