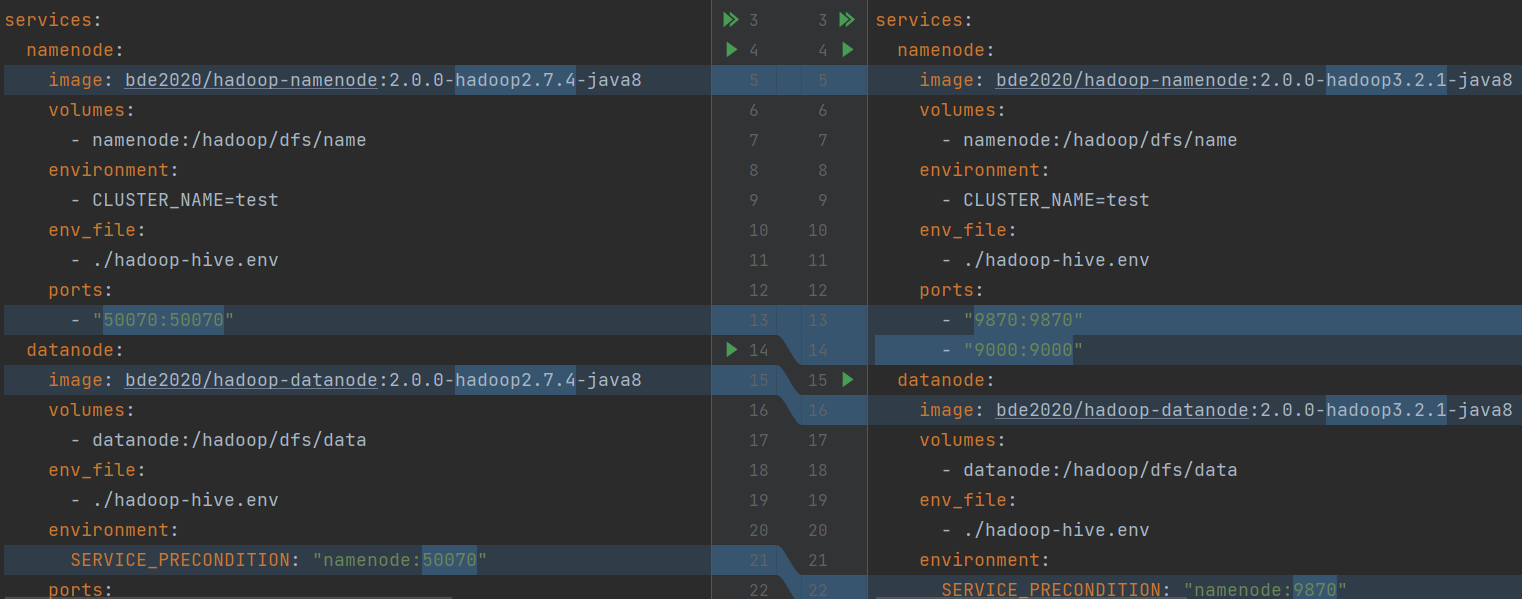

1. Changing the version numbers

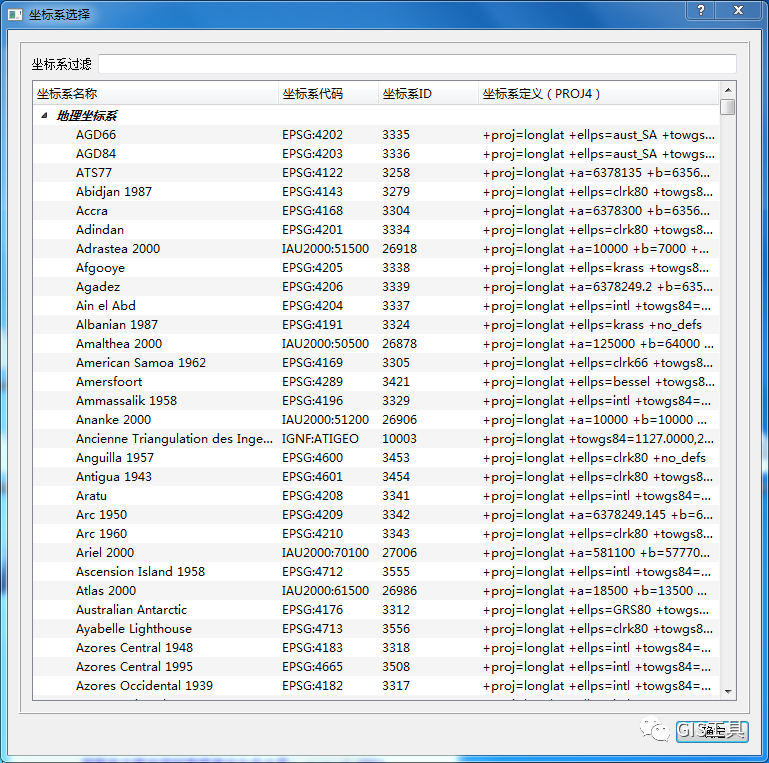

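For the upgrade, the first thing to check is which newer versions are already available on Docker Hub. It also helps to know the main differences between Hadoop 2 and Hadoop 3. The first one to note is that the default port numbers changed, as shown below.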

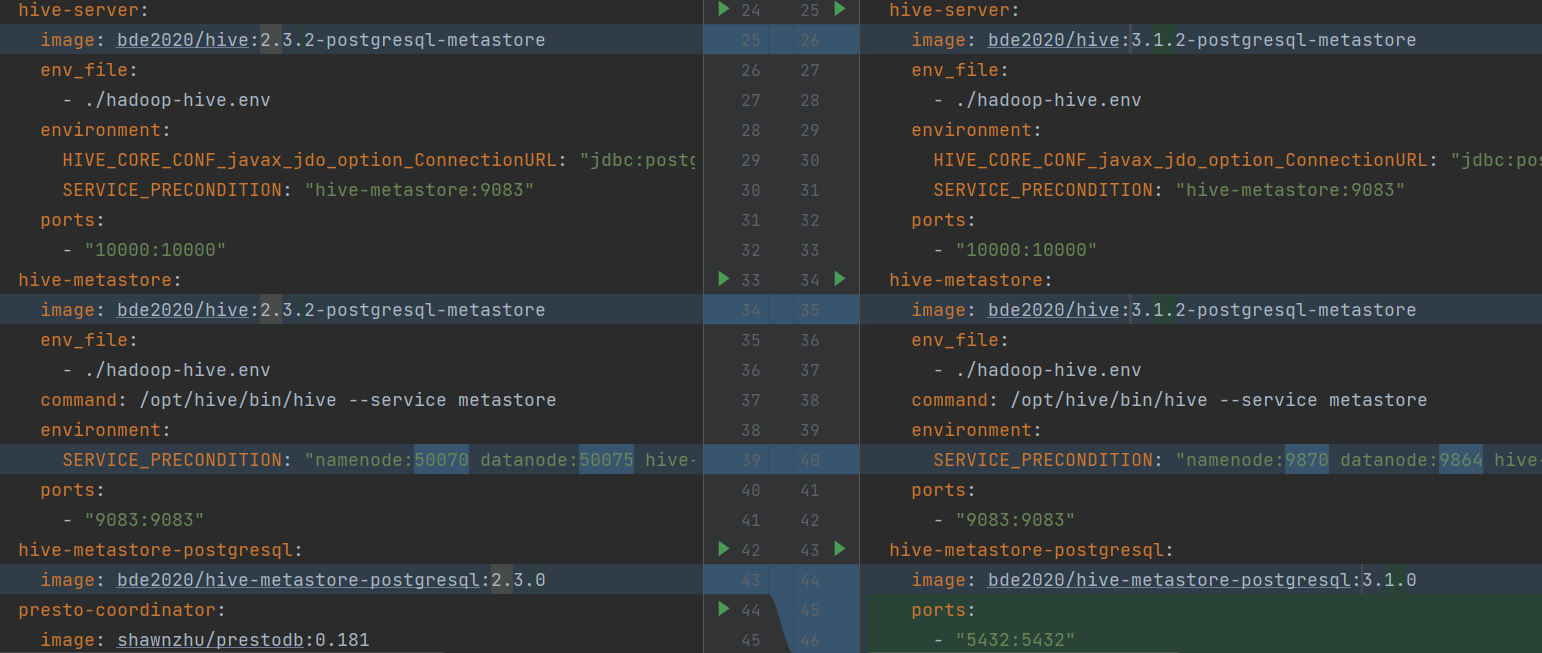

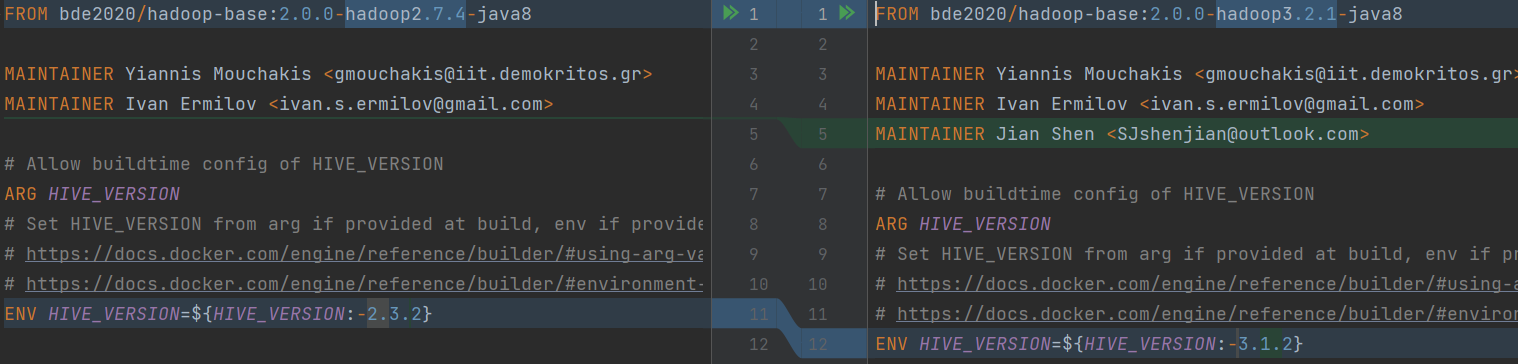
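As a quick reference for the port change, the sketch below maps a few Hadoop 2 default ports to their Hadoop 3 defaults. The function name `hadoop3_port` is made up for illustration, and the table covers only the common NameNode, Secondary NameNode and DataNode ports, so compare it against your own docker-compose.yml before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: print the Hadoop 3 default port for a Hadoop 2 default port.
hadoop3_port() {
  case "$1" in
    50070) echo 9870 ;;  # NameNode web UI (HTTP)
    50470) echo 9871 ;;  # NameNode web UI (HTTPS)
    50090) echo 9868 ;;  # Secondary NameNode web UI (HTTP)
    50010) echo 9866 ;;  # DataNode data transfer
    50020) echo 9867 ;;  # DataNode IPC
    50075) echo 9864 ;;  # DataNode web UI (HTTP)
    *)     echo "$1" ;;  # not in this table: printed unchanged
  esac
}

hadoop3_port 50070
hadoop3_port 50075
```

Any port mapping or health-check URL that still points at a 500xx port is a likely candidate for an update.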

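2. Building the Hive image

We just changed the Hive image to bde2020/hive:3.1.2-postgresql-metastore, but that tag does not exist in the registry, so we have to modify the Dockerfile and build the image ourselves.

Now we can try building the image and starting it: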

docker build -t bde2020/hive:3.1.2-postgresql-metastore .

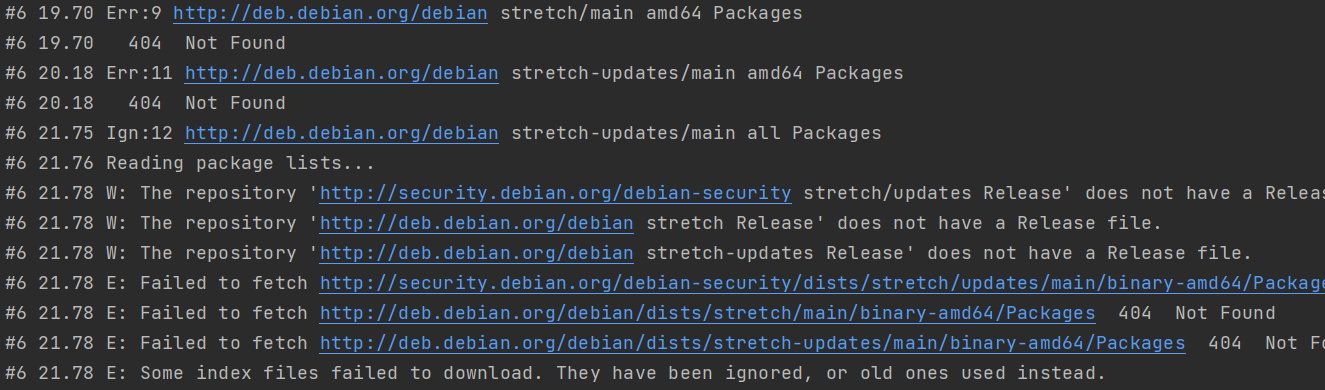

3. apt-get update 404 Not Found

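Whoops, the build fails.

Cause: the package sources in /etc/apt/sources.list are no longer valid. Replacing them with sources that are currently available fixes the problem: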

RUN sed -i 's/^.*$/deb http:\/\/deb.debian.org\/debian\/ buster main\ndeb-src http:\/\/deb.debian.org\/debian\/ buster main\ndeb http:\/\/deb.debian.org\/debian-security\/ buster\/updates main\ndeb-src http:\/\/deb.debian.org\/debian-security\/ buster\/updates main/g' /etc/apt/sources.list
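Note that the `sed` expression above replaces every existing line with the same four entries, so a sources.list with several lines ends up with repeated entries. A minimal alternative sketch, assuming the same buster entries are still the ones you want, is to overwrite the file once. `write_sources_list` is a hypothetical helper name, and the demo writes to a temporary file rather than to /etc/apt/sources.list.

```shell
#!/bin/sh
# Hypothetical helper: overwrite the given file with exactly four apt entries.
write_sources_list() {
  cat > "$1" <<'EOF'
deb http://deb.debian.org/debian/ buster main
deb-src http://deb.debian.org/debian/ buster main
deb http://deb.debian.org/debian-security/ buster/updates main
deb-src http://deb.debian.org/debian-security/ buster/updates main
EOF
}

# Demo on a temporary file; in the image the target would be /etc/apt/sources.list.
tmp_sources=$(mktemp)
write_sources_list "$tmp_sources"
cat "$tmp_sources"
```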

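4. entrypoint.sh not found

Building the image again and starting it produced another error.

Opening entrypoint.sh shows the problem flagged in the file; adding the comment that the hint suggests is enough to fix it.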

5. Version conflict and line-ending errors

/opt/hive/conf/hive-env.sh: line 37: $'\r': command not found

/opt/hive/conf/hive-env.sh: line 45: $'\r': command not found

/opt/hive/conf/hive-env.sh: line 46: $'\r': command not found

/opt/hive/conf/hive-env.sh: line 49: $'\r': command not found

/opt/hive/conf/hive-env.sh: line 52: $'\r': command not found

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop-3.2.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1357)

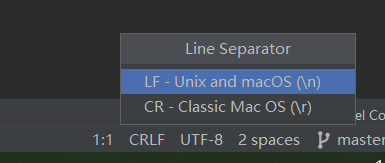

The $'\r' errors come from Windows (CRLF) line endings, so convert hive-env.sh to LF (Unix) line endings.

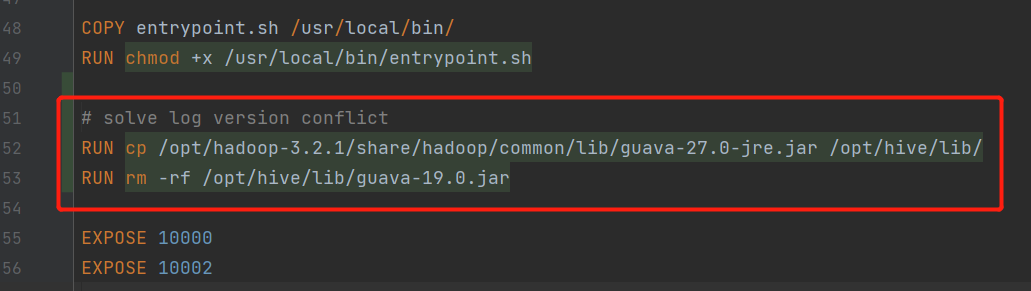
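If no editor is at hand, the same conversion can be done from a shell. This is a minimal sketch, assuming the file is a plain text script in which every carriage return is unwanted. `to_lf` is a hypothetical helper name, the sample line is only placeholder content, and the demo works on a temporary file rather than the real hive-env.sh.

```shell
#!/bin/sh
# Hypothetical helper: strip all carriage returns from a file in place.
to_lf() {
  tmp=$(mktemp)
  # Write the cleaned text to a temp file, then copy it back so the
  # original file keeps its permissions.
  tr -d '\r' < "$1" > "$tmp" && cat "$tmp" > "$1"
  rm -f "$tmp"
}

# Demo: build a small CRLF file, convert it, then count remaining CR characters.
demo=$(mktemp)
printf 'export HADOOP_HEAPSIZE=1024\r\n\r\n' > "$demo"
to_lf "$demo"
cr_left=$(tr -cd '\r' < "$demo" | wc -c | tr -d ' ')
echo "carriage returns left: $cr_left"
```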

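The SLF4J lines are only a warning. The actual failure is the NoSuchMethodError on Preconditions.checkArgument, which comes from Hive shipping an older Guava (19.0) than Hadoop 3.2.1 (27.0-jre). Add these two instructions to the Dockerfile to resolve the conflict:

# solve guava version conflict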

RUN cp /opt/hadoop-3.2.1/share/hadoop/common/lib/guava-27.0-jre.jar /opt/hive/lib/

RUN rm -rf /opt/hive/lib/guava-19.0.jar
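Before copying and deleting jars, it can help to confirm which Guava versions each side ships. This is a small sketch, assuming the jars follow the usual guava-<version>.jar naming. `list_guava` is a hypothetical helper, and the demo uses throwaway directories with empty placeholder files in place of the real lib directories.

```shell
#!/bin/sh
# Hypothetical helper: print the Guava jar file names found in each directory given.
list_guava() {
  for dir in "$@"; do
    for jar in "$dir"/guava-*.jar; do
      # Skip the unexpanded pattern when a directory has no match.
      if [ -e "$jar" ]; then
        basename "$jar"
      fi
    done
  done
}

# Demo with empty placeholder files standing in for the real jars.
hive_lib=$(mktemp -d)
hadoop_lib=$(mktemp -d)
touch "$hive_lib/guava-19.0.jar" "$hadoop_lib/guava-27.0-jre.jar"
guava_found=$(list_guava "$hive_lib" "$hadoop_lib")
echo "$guava_found"
```

Inside the container the call would be `list_guava /opt/hive/lib /opt/hadoop-3.2.1/share/hadoop/common/lib`.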

Build again and try running it.

6. Invalid metastore URL

The startup logs now look normal, but every Hive operation fails with the following error:

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

FAILED: HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

The console does not print a detailed error, so check whether a hive.log exists somewhere else:

# find / -name hive.log

/tmp/root/hive.log
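Java logs can be long, so it is usually quicker to jump straight to the `Caused by:` lines. This is a minimal sketch. `root_causes` is a hypothetical helper, and the demo runs on a two-line sample assembled from messages quoted in this post instead of the real log file.

```shell
#!/bin/sh
# Hypothetical helper: print the numbered "Caused by:" lines of a log file.
root_causes() {
  grep -n 'Caused by:' "$1"
}

# Demo on a small sample; inside the container the argument would be /tmp/root/hive.log.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
FAILED: HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
Caused by: org.apache.hadoop.hive.metastore.api.MetaException: Got exception: java.net.URISyntaxException Illegal character in hostname at index 49: thrift://docker-hive-hive-metastore-1.docker-hive_default:9083
EOF
causes=$(root_causes "$sample_log")
echo "$causes"
```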

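The log shows that the URL is invalid. When Docker Compose creates the default network, it names it after the project with a _default suffix, and the underscore is not a legal character in a hostname, so the metastore URI is rejected: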

Caused by: org.apache.hadoop.hive.metastore.api.MetaException: Got exception: java.net.URISyntaxException Illegal character in hostname at index 49: thrift://docker-hive-hive-metastore-1.docker-hive_default:9083

at org.apache.hadoop.hive.metastore.utils.MetaStoreUtils.logAndThrowMetaException(MetaStoreUtils.java:168) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.resolveUris(HiveMetaStoreClient.java:267) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:182) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) ~[hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_232]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_232]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_232]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_232]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.session.SessionState.setAuthorizerV2Config(SessionState.java:960) ~[hive-exec-3.1.2.jar:3.1.2]

... 16 more
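The index in the message can be checked by hand: position 49 of the URI (counting from 0) is the underscore. The sketch below shows this, together with a simplified hostname check that accepts only letters, digits, hyphens and dots. It illustrates the idea and is not Java's actual URI parser; `is_valid_hostname` is a hypothetical name.

```shell
#!/bin/sh
uri='thrift://docker-hive-hive-metastore-1.docker-hive_default:9083'

# Everything before the first underscore; its length is the underscore's 0-based index.
before_underscore=${uri%%_*}
echo "underscore index: ${#before_underscore}"

# Simplified check: succeed only if the name is non-empty and has no
# character outside letters, digits, dots and hyphens.
is_valid_hostname() {
  case "$1" in
    ''|*[!A-Za-z0-9.-]*) return 1 ;;
    *) return 0 ;;
  esac
}

for host in docker-hive_default docker-hive-default; do
  if is_valid_hostname "$host"; then
    echo "$host: ok"
  else
    echo "$host: invalid"
  fi
done
```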

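The fix is to edit docker-compose.yaml and give the network an explicit name without an underscore. A custom network name requires Compose file format 3.5 or later.

# version changed from 3 to 3.5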

version: "3.5"

# solve java.net.URISyntaxException Illegal character in hostname at index 49: thrift://docker-hive-hive-metastore-1.docker-hive_default:9083

networks:
  default:
    name: docker-hive-default

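After bringing the stack down and then up again, show databases; works, and the network docker-hive-default appears in the list. The old docker-hive_default network is still listed because it was created by the earlier runs.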

(base) PS D:\Java\新建文件夹\docker-hive> docker network ls

NETWORK ID NAME DRIVER SCOPE

b53167dc0bb1 bridge bridge local

6ae196a5dfd6 docker-hive-default bridge local

1582f3dcbd8b docker-hive_default bridge local

3e85deffd6b1 host host local

8740d8865907 none null local

Connecting through beeline now works as well. Done.

Feel free to follow the WeChat official account 算法小生 to get in touch with me.