I. Create a pod

[root@master01 ~]# kubectl create ns prod

[root@master01 ~]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: prod
  labels:
    app: myapp
spec:
  containers:
  - name: test1
    image: busybox:latest
    command:
    - "/bin/sh"
    - "-c"
    - "sleep 100000000000"
  - name: test2
    image: busybox:latest
    args: ["sleep","100000000000000"]

[root@master01 ~]# kubectl create -f pod.yaml ### create the pod

[root@master01 ~]# kubectl describe pod pod-demo -n prod ### view pod details and events

[root@master01 ~]# kubectl get pod pod-demo -n prod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-demo 2/2 Running 0 4m41s 10.0.0.70 master03 <none> <none>

[root@master01 ~]# kubectl -n prod exec -it pod/pod-demo -- sh ### enter the container (defaults to test1; add -c test2 to pick the other one)

Defaulted container "test1" out of: test1, test2

/ #

[root@master01 ~]# kubectl delete -f pod.yaml ### delete the pod

II. Using labels

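Precedence: nodeName -> nodeSelector -> taints -> affinity. nodeName binds the pod to a node directly and bypasses the scheduler; the other constraints are evaluated by the scheduler, and every hard (required) one must hold at the same time.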

1. Create httpd

[root@master01 ~]# cat web-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: web
  labels:
    app: as
    rel: stable
spec:
  containers:
  - name: web
    image: httpd:latest
    ports:
    - containerPort: 80

[root@master01 ~]# kubectl apply -f web-pod.yaml

2. Common label operations

[root@master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 75s

[root@master01 ~]# kubectl get pod --show-labels ### show all labels

NAME READY STATUS RESTARTS AGE LABELS

web 1/1 Running 0 87s app=as,rel=stable

[root@master01 ~]# kubectl get pod -l app=as ### pods whose app label equals as

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 2m

[root@master01 ~]# kubectl get pod -l app!=test ### pods whose app label is not test

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 2m23s

[root@master01 ~]# kubectl get pod -l 'app in (test,as)' ### pods whose app label is test or as

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 3m7s

[root@master01 ~]# kubectl get pod -l 'app notin (test,as)' ### pods whose app label is neither test nor as

No resources found in default namespace.

[root@master01 ~]# kubectl get pod -L app ### show the value of the app label as an extra column

NAME READY STATUS RESTARTS AGE APP

web 1/1 Running 0 3m34s as

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

web 1/1 Running 0 4m21s app=as,rel=stable

[root@master01 ~]# kubectl label pod web rel=canary --overwrite ### change a label

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

web 1/1 Running 0 4m49s app=as,rel=canary

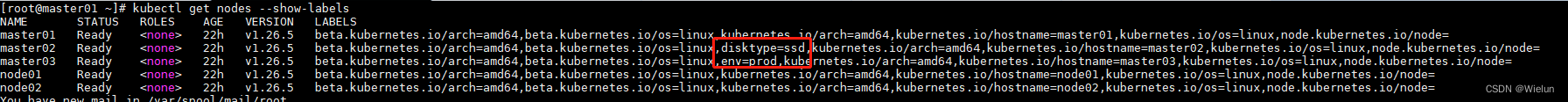

[root@master01 ~]# kubectl label nodes master02 disktype=ssd ### label a node

[root@master01 ~]# kubectl label nodes master03 env=prod

[root@master01 ~]# kubectl get nodes --show-labels

3. Using nodeSelector

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
      nodeSelector:
        disktype: ssd

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

deployment.apps/nginx-deployment created

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-697c567f87-gddsh 1/1 Running 0 9s 10.0.1.77 master02 <none> <none>

nginx-deployment-697c567f87-lltln 1/1 Running 0 9s 10.0.1.144 master02 <none> <none>

web 1/1 Running 0 25m 10.0.0.38 master03 <none> <none>

4. Using nodeName

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
      nodeName: master02

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

III. Taints and tolerations

1. View taints

[root@master01 ~]# kubectl describe node master01|grep Taints

Taints: <none>

[root@master01 ~]# kubectl taint node master01 node-role.kubernetes.io/master="":NoSchedule

[root@master01 ~]# kubectl describe node master01|grep Taints

Taints: node-role.kubernetes.io/master:NoSchedule

[root@master01 ~]# kubectl taint node master01 node-role.kubernetes.io/master- ### remove the taint

[root@master01 ~]# kubectl get pod -A -owide

[root@master01 ~]# kubectl describe pod cilium-operator-58bf55d99b-zxmxp -n kube-system|grep Tolerations

Tolerations: op=Exists
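
The `op=Exists` entry above appears to be how `kubectl describe` renders a toleration that sets only an operator. As a minimal sketch (a pod-spec fragment, not taken from the cilium manifest itself), a toleration with `operator: Exists` and no key or effect matches every taint, which is why such a pod can run on any tainted node:

```yaml
# Pod-spec fragment: place under spec: of a Pod,
# or under spec.template.spec: of a Deployment.
tolerations:
- operator: Exists   # no key and no effect given: tolerates all taints
```

This is usually reserved for system components; ordinary workloads are better off tolerating specific keys.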

2. Add a taint

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl taint node master02 node-type=production:NoSchedule

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

# A NoSchedule taint does not evict pods that were already running on master02

[root@master01 ~]# kubectl get pod -A -owide|grep nginx

default nginx-deployment-66b9f7ff85-6hxqf 1/1 Running 0 22s 10.0.4.110 master01 <none> <none>

default nginx-deployment-66b9f7ff85-d8jsz 1/1 Running 0 22s 10.0.2.168 node01 <none> <none>

default nginx-deployment-66b9f7ff85-dmptx 1/1 Running 0 22s 10.0.0.223 master03 <none> <none>

default nginx-deployment-66b9f7ff85-h5wts 1/1 Running 0 22s 10.0.4.201 master01 <none> <none>

default nginx-deployment-66b9f7ff85-jxjw4 0/1 ContainerCreating 0 22s <none> node02 <none> <none>

3. Tolerating taints

(1) Tolerating NoSchedule

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:
      - key: node-type
        operator: Equal
        value: production
        effect: NoSchedule
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -A -owide|grep nginx

default nginx-deployment-7cffd544c8-7d677 1/1 Running 0 11s 10.0.2.15 node01 <none> <none>

default nginx-deployment-7cffd544c8-nszsr 1/1 Running 0 11s 10.0.0.229 master03 <none> <none>

default nginx-deployment-7cffd544c8-v4cw2 1/1 Running 0 11s 10.0.4.192 master01 <none> <none>

default nginx-deployment-7cffd544c8-v75dd 1/1 Running 0 11s 10.0.1.52 master02 <none> <none>

default nginx-deployment-7cffd544c8-x9mdm 1/1 Running 0 11s 10.0.3.93 node02 <none> <none>

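(2) Tolerating NoExecute

Once master03 gets a NoExecute taint, pods on it that do not tolerate the taint are evicted immediately and recreated on other nodes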

[root@master01 ~]# kubectl get pod -A -owide|grep nginx

default nginx-deployment-7cffd544c8-7d677 1/1 Running 0 4m10s 10.0.2.15 node01 <none> <none>

default nginx-deployment-7cffd544c8-nszsr 1/1 Running 0 4m10s 10.0.0.229 master03 <none> <none>

default nginx-deployment-7cffd544c8-v4cw2 1/1 Running 0 4m10s 10.0.4.192 master01 <none> <none>

default nginx-deployment-7cffd544c8-v75dd 1/1 Running 0 4m10s 10.0.1.52 master02 <none> <none>

default nginx-deployment-7cffd544c8-x9mdm 1/1 Running 0 4m10s 10.0.3.93 node02 <none> <none>

[root@master01 ~]# kubectl taint node master03 node-type=test:NoExecute

[root@master01 ~]# kubectl get pod -A -owide|grep nginx

default nginx-deployment-7cffd544c8-7d677 1/1 Running 0 4m41s 10.0.2.15 node01 <none> <none>

default nginx-deployment-7cffd544c8-fpn9l 1/1 Running 0 6s 10.0.4.252 master01 <none> <none>

default nginx-deployment-7cffd544c8-v4cw2 1/1 Running 0 4m41s 10.0.4.192 master01 <none> <none>

default nginx-deployment-7cffd544c8-v75dd 1/1 Running 0 4m41s 10.0.1.52 master02 <none> <none>

default nginx-deployment-7cffd544c8-x9mdm 1/1 Running 0 4m41s 10.0.3.93 node02 <none> <none>
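
The pod on master03 was evicted because the Deployment tolerates only `node-type=production:NoSchedule`. As a hedged sketch, adding a toleration that matches the `node-type=test:NoExecute` taint would let pods stay on master03; the 60-second `tolerationSeconds` is an arbitrary value chosen for illustration and can be omitted to tolerate the taint indefinitely:

```yaml
# Pod-spec fragment for spec.template.spec of the nginx Deployment.
tolerations:
- key: node-type
  operator: Equal
  value: test
  effect: NoExecute
  tolerationSeconds: 60   # assumed value: stay bound for 60s after the taint appears, then get evicted
```

`tolerationSeconds` only has meaning for the NoExecute effect.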

(3) Setting tolerationSeconds

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:
      - key: node-type
        operator: Equal
        value: production
        effect: NoSchedule
      - effect: NoExecute
        key: node.kubernetes.io/not-ready
        operator: Exists
        tolerationSeconds: 300
      - effect: NoExecute
        key: node.kubernetes.io/unreachable
        operator: Exists
        tolerationSeconds: 30
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -A -owide|grep nginx

default nginx-deployment-5c5996fdc-7fjl9 1/1 Running 0 9m47s 10.0.1.124 master02 <none> <none>

default nginx-deployment-5c5996fdc-8qh2r 1/1 Running 0 9m47s 10.0.4.109 master01 <none> <none>

default nginx-deployment-5c5996fdc-b2nbz 1/1 Running 0 9m47s 10.0.3.200 node02 <none> <none>

default nginx-deployment-5c5996fdc-hdnsl 1/1 Running 0 9m47s 10.0.2.251 node01 <none> <none>

default nginx-deployment-5c5996fdc-j78nd 1/1 Running 0 9m47s 10.0.4.135 master01 <none> <none>

[root@master01 ~]# kubectl describe pod nginx-deployment-5c5996fdc-7fjl9 |grep -A 3 Tolerations

Tolerations: node-type=production:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 30s

(4) Remove the earlier taints

[root@master01 ~]# kubectl taint node master02 node-type-

[root@master01 ~]# kubectl taint node master03 node-type-

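IV. Affinity

1. Node affinity

With requiredDuringSchedulingIgnoredDuringExecution, pods are scheduled only onto nodes that match the rule (here master02, labeled gpu=true). If master02 runs out of capacity, the remaining pods stay Pending; only the preferred form falls back to other nodes.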

[root@master01 ~]# kubectl label nodes master02 gpu=true

node/master02 labeled

[root@master01 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master01 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master01,kubernetes.io/os=linux,node.kubernetes.io/node=

master02 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,gpu=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=master02,kubernetes.io/os=linux,node.kubernetes.io/node=

master03 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,env=prod,kubernetes.io/arch=amd64,kubernetes.io/hostname=master03,kubernetes.io/os=linux,node.kubernetes.io/node=

node01 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node01,kubernetes.io/os=linux,node.kubernetes.io/node=

node02 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node02,kubernetes.io/os=linux,node.kubernetes.io/node=

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: gpu
                operator: In
                values:
                - "true"
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -owide|grep nginx

nginx-deployment-596d5dfbb-6v9wc 1/1 Running 0 19s 10.0.1.49 master02 <none> <none>

nginx-deployment-596d5dfbb-gzsx7 1/1 Running 0 19s 10.0.1.213 master02 <none> <none>

nginx-deployment-596d5dfbb-ktwhw 1/1 Running 0 19s 10.0.1.179 master02 <none> <none>

nginx-deployment-596d5dfbb-r96cz 1/1 Running 0 19s 10.0.1.183 master02 <none> <none>

nginx-deployment-596d5dfbb-tznqh 1/1 Running 0 19s 10.0.1.210 master02 <none> <none>

2. preferredDuringSchedulingIgnoredDuringExecution

[root@master01 ~]# kubectl label node master02 available-zone=zone1

[root@master01 ~]# kubectl label node master03 available-zone=zone2

[root@master01 ~]# kubectl label nodes master02 share-type=dedicated

[root@master01 ~]# kubectl label nodes master03 share-type=shared

[root@master01 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master01 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master01,kubernetes.io/os=linux,node.kubernetes.io/node=

master02 Ready <none> 24h v1.26.5 available-zone=zone1,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,gpu=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=master02,kubernetes.io/os=linux,node.kubernetes.io/node=,share-type=dedicated

master03 Ready <none> 24h v1.26.5 available-zone=zone2,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,env=prod,kubernetes.io/arch=amd64,kubernetes.io/hostname=master03,kubernetes.io/os=linux,node.kubernetes.io/node=,share-type=shared

node01 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node01,kubernetes.io/os=linux,node.kubernetes.io/node=

node02 Ready <none> 24h v1.26.5 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node02,kubernetes.io/os=linux,node.kubernetes.io/node=

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 10
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80
            preference:
              matchExpressions:
              - key: available-zone
                operator: In
                values:
                - zone1
          - weight: 20
            preference:
              matchExpressions:
              - key: share-type
                operator: In
                values:
                - dedicated
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -A -o wide|grep nginx

default nginx-deployment-5794574555-22xqh 1/1 Running 0 13s 10.0.1.28 master02 <none> <none>

default nginx-deployment-5794574555-5b8kq 1/1 Running 0 13s 10.0.1.168 master02 <none> <none>

default nginx-deployment-5794574555-6fm9h 1/1 Running 0 13s 10.0.1.152 master02 <none> <none>

default nginx-deployment-5794574555-7gqm7 1/1 Running 0 13s 10.0.3.163 node02 <none> <none>

default nginx-deployment-5794574555-7mp2p 1/1 Running 0 13s 10.0.1.19 master02 <none> <none>

default nginx-deployment-5794574555-8rrmw 1/1 Running 0 13s 10.0.4.107 master01 <none> <none>

default nginx-deployment-5794574555-c6s7z 1/1 Running 0 13s 10.0.1.52 master02 <none> <none>

default nginx-deployment-5794574555-f94p6 1/1 Running 0 13s 10.0.0.125 master03 <none> <none>

default nginx-deployment-5794574555-hznds 1/1 Running 0 13s 10.0.1.163 master02 <none> <none>

default nginx-deployment-5794574555-vx85n 1/1 Running 0 13s 10.0.2.179 node01 <none> <none>
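
The required and preferred forms of node affinity can be combined in one spec. The following is a sketch only, reusing the gpu=true and available-zone=zone1 labels applied earlier in this walkthrough: the required term filters nodes, and the preferred term merely ranks the nodes that passed the filter.

```yaml
# Pod-spec fragment for spec.template.spec of a Deployment.
affinity:
  nodeAffinity:
    # Hard rule: only nodes labeled gpu=true are candidates.
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: gpu
          operator: In
          values:
          - "true"
    # Soft rule: among the candidates, favor zone1.
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 80
      preference:
        matchExpressions:
        - key: available-zone
          operator: In
          values:
          - zone1
```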

3. Pod affinity

(1) Create a busybox pod

The pod is scheduled onto master03

[root@master01 ~]# kubectl run backend -l app=backend --image busybox -- sleep 99999999

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

backend 1/1 Running 0 7m35s 10.0.0.242 master03 <none> <none>

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

backend 1/1 Running 0 45s app=backend

(2) nginx affinity to busybox (method 1)

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: "kubernetes.io/hostname"
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - backend
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

(3) nginx affinity to busybox (method 2)

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: "kubernetes.io/hostname"
            labelSelector:
              matchLabels:
                app: backend
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

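(4) Preferred (weighted) pod affinity

Pods are placed on nodes that satisfy the rule when possible, and elsewhere otherwise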

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 5
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80
            podAffinityTerm:
              topologyKey: "kubernetes.io/hostname"
              labelSelector:
                matchLabels:
                  app: backend
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

backend 1/1 Running 0 19m 10.0.0.242 master03 <none> <none>

nginx-deployment-6b6b6646cf-2g2nt 1/1 Running 0 30s 10.0.0.158 master03 <none> <none>

nginx-deployment-6b6b6646cf-62xlh 1/1 Running 0 30s 10.0.0.4 master03 <none> <none>

nginx-deployment-6b6b6646cf-6nhl4 1/1 Running 0 30s 10.0.0.123 master03 <none> <none>

nginx-deployment-6b6b6646cf-gfbxt 1/1 Running 0 30s 10.0.0.125 master03 <none> <none>

nginx-deployment-6b6b6646cf-sg6bx 1/1 Running 0 30s 10.0.4.206 master01 <none> <none>

(5) Pod anti-affinity

Each of the 5 nodes already runs one replica, so the sixth replica cannot be scheduled

[root@master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  selector:
    matchLabels:
      app: frontend
  replicas: 6
  template:
    metadata:
      labels:
        app: frontend
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: "kubernetes.io/hostname"
            labelSelector:
              matchLabels:
                app: frontend
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

backend 1/1 Running 0 32m 10.0.0.242 master03 <none> <none>

frontend-84bdd684b-825hs 1/1 Running 0 74s 10.0.1.145 master02 <none> <none>

frontend-84bdd684b-b8vd9 0/1 Pending 0 74s <none> <none> <none> <none>

frontend-84bdd684b-m7d79 1/1 Running 0 74s 10.0.2.20 node01 <none> <none>

frontend-84bdd684b-pj9vv 1/1 Running 0 74s 10.0.4.42 master01 <none> <none>

frontend-84bdd684b-qn6lb 1/1 Running 0 74s 10.0.3.91 node02 <none> <none>

frontend-84bdd684b-tl84p 1/1 Running 0 74s 10.0.0.214 master03 <none> <none>

[root@master01 ~]# kubectl describe pod frontend-84bdd684b-b8vd9

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 102s (x2 over 103s) default-scheduler 0/5 nodes are available: 5 node(s) didn't match pod anti-affinity rules. preemption: 0/5 nodes are available: 5 No preemption victims found for incoming pod..
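
The sixth replica stays Pending because the anti-affinity rule is a hard requirement. If spreading is desirable but not mandatory, a preferred rule is one alternative. The fragment below is a sketch under that assumption; the weight of 100 is an arbitrary choice. With it, the scheduler would still try to place one frontend pod per node, but could co-locate the extra replica instead of leaving it unscheduled.

```yaml
# Pod-spec fragment for spec.template.spec of the frontend Deployment.
affinity:
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 100               # assumed weight; valid range is 1-100
      podAffinityTerm:
        topologyKey: "kubernetes.io/hostname"
        labelSelector:
          matchLabels:
            app: frontend
```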

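V. RC and RS

ReplicationController (RC) and ReplicaSet (RS) suit applications that do not need to be updated; their biggest limitation is that they do not support rolling updates

Compared with RC, the main addition in RS is set-based label selectors (matchExpressions)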
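
For workloads that do need rolling updates, a Deployment (which manages ReplicaSets on your behalf) is the usual choice. The manifest below is a minimal sketch, not part of the original walkthrough: the name httpd-rolling and the maxSurge/maxUnavailable values are assumptions picked for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpd-rolling          # hypothetical name
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1              # assumed: at most one extra pod during an update
      maxUnavailable: 0        # assumed: never drop below the desired count
  selector:
    matchLabels:
      app: httpd-rolling
  template:
    metadata:
      labels:
        app: httpd-rolling
    spec:
      containers:
      - name: httpd
        image: httpd:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
```

Changing the pod template (for example the image tag) and re-applying the file makes the Deployment replace pods gradually through a new ReplicaSet.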

1. Trying out RC

[root@master01 ~]# cat rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: rc-test
spec:
  replicas: 3
  selector:
    app: rc-pod
  template:
    metadata:
      labels:
        app: rc-pod
    spec:
      containers:
      - name: rc-test
        image: httpd:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f rc.yaml

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rc-test-bjrqn 1/1 Running 0 35s 10.0.0.244 master03 <none> <none>

rc-test-srbfn 1/1 Running 0 35s 10.0.4.207 master01 <none> <none>

rc-test-tjrmf 1/1 Running 0 35s 10.0.0.67 master03 <none> <none>

[root@master01 ~]# kubectl get rc

NAME DESIRED CURRENT READY AGE

rc-test 3 3 3 94s

[root@master01 ~]# kubectl describe rc rc-test|grep -i Replicas

Replicas: 3 current / 3 desired

2. First look at RS

[root@master01 ~]# cat rs.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: rs-test
  labels:
    app: guestbool
    tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
      - name: rs-test
        image: httpd:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f rs.yaml

[root@master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rs-test-7dbz8 1/1 Running 0 2m18s 10.0.4.245 master01 <none> <none>

rs-test-m8tqv 1/1 Running 0 2m18s 10.0.1.202 master02 <none> <none>

rs-test-pp6b6 1/1 Running 0 2m18s 10.0.0.93 master03 <none> <none>

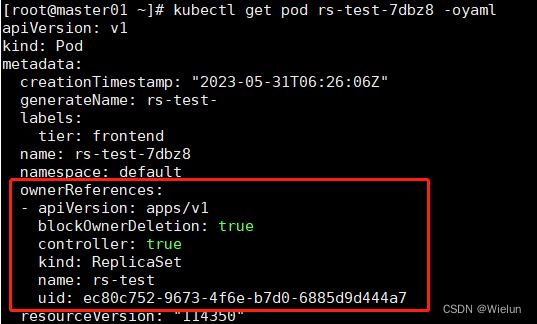

[root@master01 ~]# kubectl get pod rs-test-7dbz8 -oyaml # ownership by the ReplicaSet is tracked by uid (see metadata.ownerReferences)

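3. RS adopting existing pods

Note: a bare pod is adopted only if its labels satisfy every requirement of the RS selector. If such pods are created while the RS is already running at its desired replica count, they are terminated right away, so the RS is deleted first here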

[root@master01 ~]# cat pod-rs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod1
  labels:
    tier: frontend
spec:
  containers:
  - name: test1
    image: httpd:latest
    imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Pod
metadata:
  name: pod2
  labels:
    tier: frontend
spec:
  containers:
  - name: test2
    image: httpd:latest
    imagePullPolicy: IfNotPresent

[root@master01 ~]# kubectl delete -f rs.yaml

[root@master01 ~]# kubectl apply -f pod-rs.yaml

[root@master01 ~]# kubectl apply -f rs.yaml

[root@master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod1 1/1 Running 0 102s

pod2 1/1 Running 0 102s

rs-test-zw2sj 1/1 Running 0 3s

[root@master01 ~]# kubectl delete -f rs.yaml

4. Relabeling test

[root@master01 ~]# kubectl apply -f rs.yaml

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

rs-test-cn6cc 1/1 Running 0 11s tier=frontend

rs-test-jxwfb 1/1 Running 0 11s tier=frontend

rs-test-sztrt 1/1 Running 0 11s tier=frontend

[root@master01 ~]# kubectl label pod rs-test-cn6cc tier=canary --overwrite

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

rs-test-cn6cc 1/1 Running 0 60s tier=canary

rs-test-jxwfb 1/1 Running 0 60s tier=frontend

rs-test-qw5fj 1/1 Running 0 14s tier=frontend

rs-test-sztrt 1/1 Running 0 60s tier=frontend

[root@master01 ~]# kubectl get pod -l tier=frontend

NAME READY STATUS RESTARTS AGE

rs-test-jxwfb 1/1 Running 0 3m30s

rs-test-qw5fj 1/1 Running 0 2m44s

rs-test-sztrt 1/1 Running 0 3m30s

[root@master01 ~]# kubectl get pod -l 'tier in (canary,frontend)'

NAME READY STATUS RESTARTS AGE

rs-test-cn6cc 1/1 Running 0 3m48s

rs-test-jxwfb 1/1 Running 0 3m48s

rs-test-qw5fj 1/1 Running 0 3m2s

rs-test-sztrt 1/1 Running 0 3m48s

[root@master01 ~]# kubectl get pod -l 'tier notin (canary)'

NAME READY STATUS RESTARTS AGE

rs-test-jxwfb 1/1 Running 0 4m2s

rs-test-qw5fj 1/1 Running 0 3m16s

rs-test-sztrt 1/1 Running 0 4m2s

5. Multiple labels

(1) Test the RS

[root@master01 ~]# cat rs.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: rs-test
  labels:
    app: guestbool
    tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
    matchExpressions:
    - key: tier
      operator: In
      values:
      - frontend
      - canary
    - {key: app, operator: In, values: [guestbool, test]}
  template:
    metadata:
      labels:
        tier: frontend
        app: guestbool
    spec:
      containers:
      - name: rs-test
        image: httpd:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl delete -f rs.yaml

[root@master01 ~]# kubectl apply -f rs.yaml

replicaset.apps/rs-test created

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

rs-test-2srdx 1/1 Running 0 3s app=guestbool,tier=frontend

rs-test-ftzsk 1/1 Running 0 3s app=guestbool,tier=frontend

rs-test-v2nlj 0/1 ContainerCreating 0 3s app=guestbool,tier=frontend

(2) Test pod-rs

[root@master01 ~]# kubectl label pod rs-test-2srdx tier=canary --overwrite

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

rs-test-2srdx 1/1 Running 0 2m46s app=guestbool,tier=canary

rs-test-4t548 1/1 Running 0 4s app=guestbool,tier=frontend

rs-test-ftzsk 1/1 Running 0 2m46s app=guestbool,tier=frontend

rs-test-v2nlj 1/1 Running 0 2m46s app=guestbool,tier=frontend

[root@master01 ~]# cat pod-rs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod1
  labels:
    tier: canary
spec:
  containers:
  - name: test1
    image: httpd:latest
    imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Pod
metadata:
  name: pod2
  labels:
    tier: frontend
spec:
  containers:
  - name: test2
    image: httpd:latest
    imagePullPolicy: IfNotPresent

[root@master01 ~]# kubectl apply -f pod-rs.yaml

[root@master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 0 9s tier=canary

pod2 1/1 Running 0 9s tier=frontend

rs-test-2srdx 1/1 Running 0 3m49s app=guestbool,tier=canary

rs-test-4t548 1/1 Running 0 67s app=guestbool,tier=frontend

rs-test-ftzsk 1/1 Running 0 3m49s app=guestbool,tier=frontend

rs-test-v2nlj 1/1 Running 0 3m49s app=guestbool,tier=frontend
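
In the listing above, pod1 and pod2 keep running outside the ReplicaSet, presumably because neither carries an `app` label and so both fail the `app In (guestbool, test)` expression. By contrast, a bare pod like the sketch below (the name pod3 is hypothetical) would satisfy every selector requirement and be adopted. Since the ReplicaSet already has 3 replicas, one matching pod would then be terminated to return to the desired count.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod3                # hypothetical name
  labels:
    tier: frontend          # satisfies matchLabels and the tier expression
    app: guestbool          # satisfies the app expression
spec:
  containers:
  - name: test3
    image: httpd:latest
    imagePullPolicy: IfNotPresent
```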