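Customer: Why doesn't it autoplay when I've clearly set it to autoplay?

Me: #@$%^#~

Customer: Why does it autoplay in other browsers but not in Chrome? Something is wrong with your product...

Me: ^$&*^(*&^(*%

Well, I finally found the root cause, and I'm sharing it with everyone: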

----

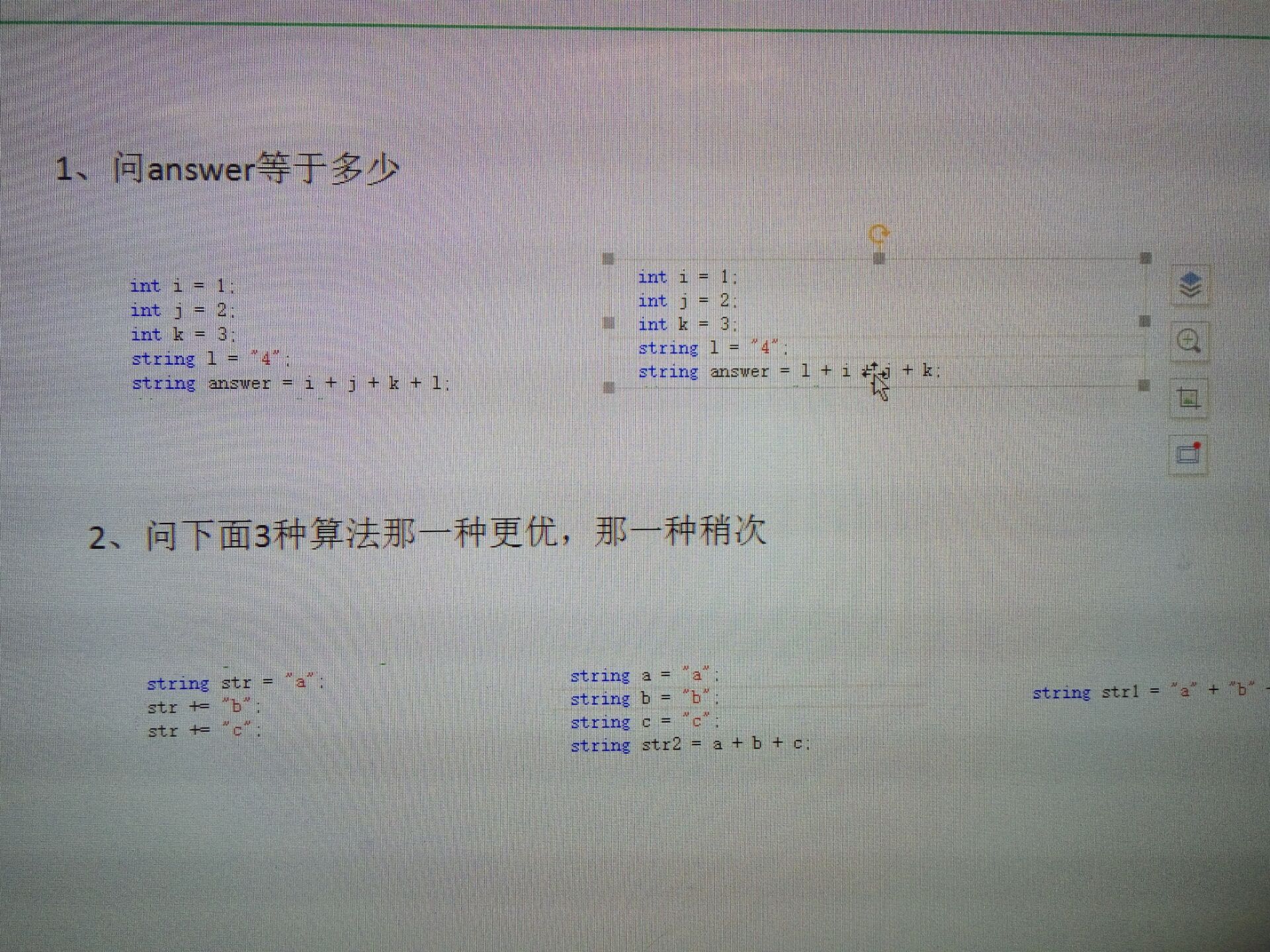

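Excerpt 1: Why Chrome restricts autoplay

As you may have noticed, web browsers are moving toward stricter autoplay policies in order to improve the user experience, minimize the incentive to install ad blockers, and reduce expensive data consumption. These changes are intended to give users greater control over playback and to benefit video publishers with legitimate use cases.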

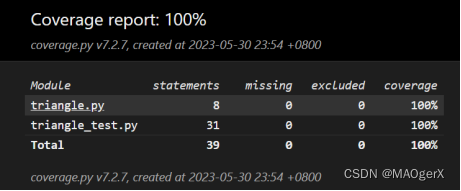

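Excerpt 2: Chrome's autoplay policy

- Muted autoplay is always allowed.
- Autoplay with sound is allowed if:
  - The user has interacted with the domain (click, tap, etc.).
  - On desktop, the user's Media Engagement Index (MEI) threshold has been crossed, meaning the user has previously played video with sound.
  - On mobile, the user has added the site to their home screen.
- Top frames can delegate autoplay permission to their iframes to allow autoplay with sound.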

Autoplay policy in Chrome

Improved user experience, minimized incentives to install ad blockers, and reduced data consumption

Published on Wednesday, September 13, 2017 • Updated on Tuesday, May 25, 2021

François Beaufort

The Autoplay Policy launched in Chrome 66 for audio and video elements and is effectively blocking roughly half of unwanted media autoplays in Chrome. For the Web Audio API, the autoplay policy launched in Chrome 71. This affects web games, some WebRTC applications, and other web pages using audio features. More details can be found in the Web Audio API section below.

Chrome's autoplay policies changed in April of 2018 and I'm here to tell you why and how this affects video playback with sound. Spoiler alert: users are going to love it!

Internet memes tagged "autoplay" found on Imgflip and Imgur.

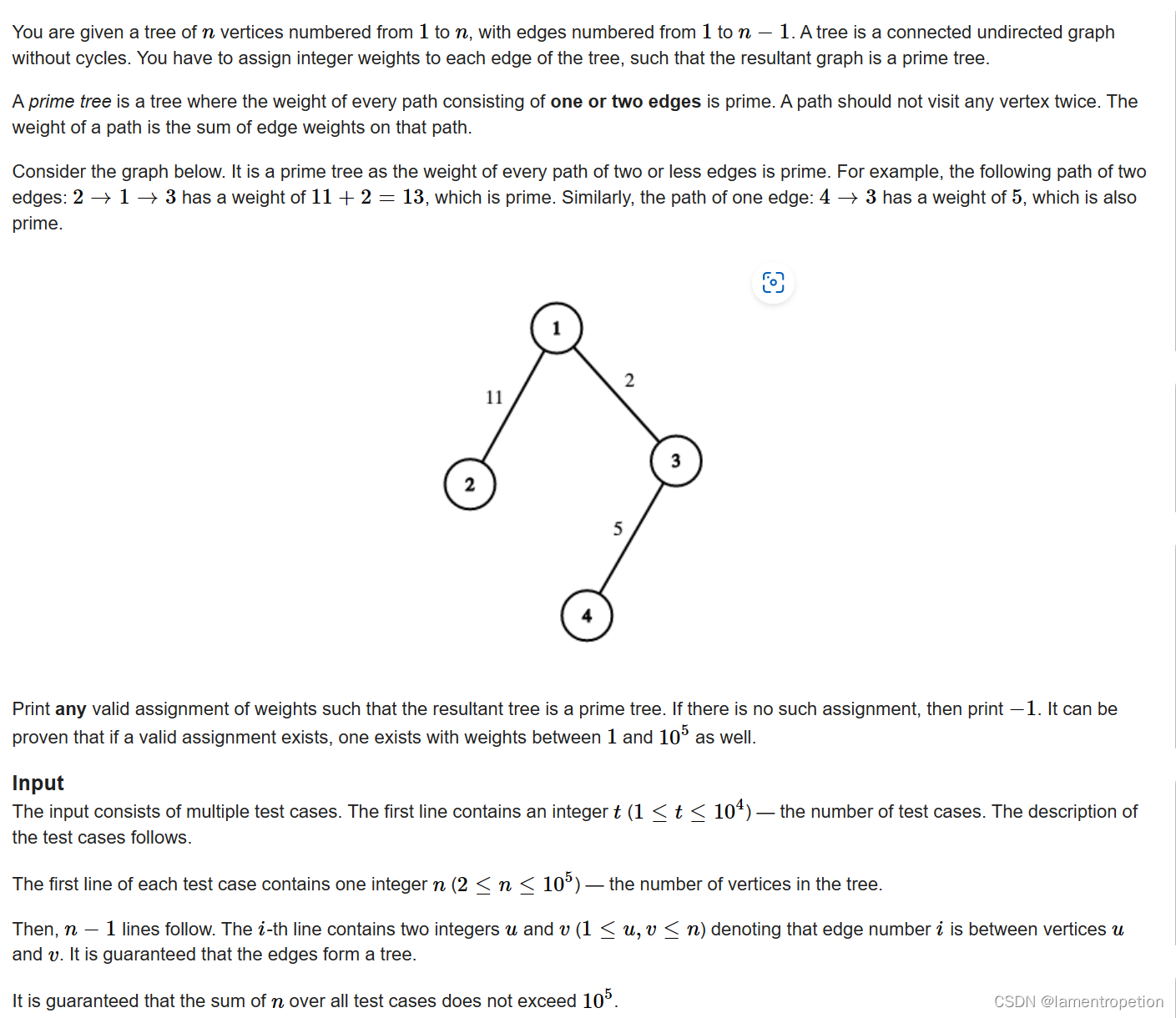

# New behaviors

As you may have noticed, web browsers are moving towards stricter autoplay policies in order to improve the user experience, minimize incentives to install ad blockers, and reduce data consumption on expensive and/or constrained networks. These changes are intended to give greater control of playback to users and to benefit publishers with legitimate use cases.

Chrome's autoplay policies are simple:

- Muted autoplay is always allowed.
- Autoplay with sound is allowed if:
  - The user has interacted with the domain (click, tap, etc.).
  - On desktop, the user's Media Engagement Index threshold has been crossed, meaning the user has previously played video with sound.
  - The user has added the site to their home screen on mobile or installed the PWA on desktop.
- Top frames can delegate autoplay permission to their iframes to allow autoplay with sound.
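To make the rules above concrete, here is a minimal sketch that encodes them as a single decision function. It is not Chrome's implementation: every field name on `ctx` (`muted`, `userInteractedWithDomain`, `isDesktop`, `meiThresholdCrossed`, `installedOrOnHomeScreen`, `inCrossOriginIframe`, `delegatedByTopFrame`) is hypothetical, and the function follows the list literally, treating iframe delegation as a condition on autoplay with sound only.

```javascript
// Illustrative model of the autoplay rules listed above; NOT Chrome's
// actual implementation. All field names on `ctx` are hypothetical.
function isAutoplayAllowed(ctx) {
  // Muted autoplay is always allowed.
  if (ctx.muted) return true;

  // A cross-origin iframe needs the top frame to delegate autoplay
  // (allow="autoplay") before it can autoplay with sound.
  if (ctx.inCrossOriginIframe && !ctx.delegatedByTopFrame) return false;

  // Otherwise, any one of these conditions allows autoplay with sound.
  return Boolean(
    ctx.userInteractedWithDomain ||
      (ctx.isDesktop && ctx.meiThresholdCrossed) ||
      ctx.installedOrOnHomeScreen
  );
}
```

For instance, a blog that embeds a cross-origin trailer corresponds to `{ userInteractedWithDomain: true, inCrossOriginIframe: true }`, which this model only allows once `delegatedByTopFrame` is also `true`.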

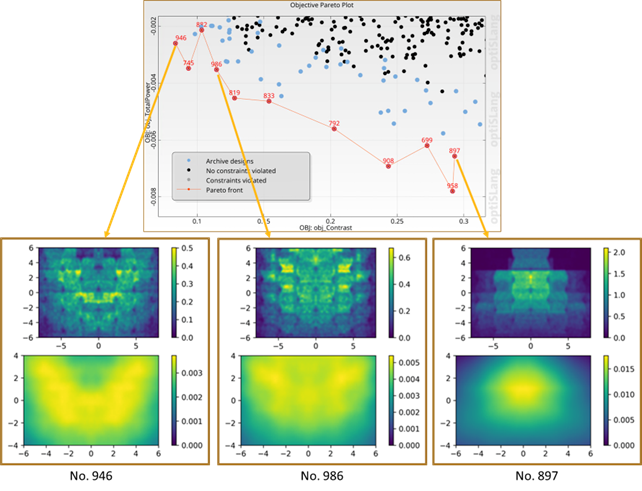

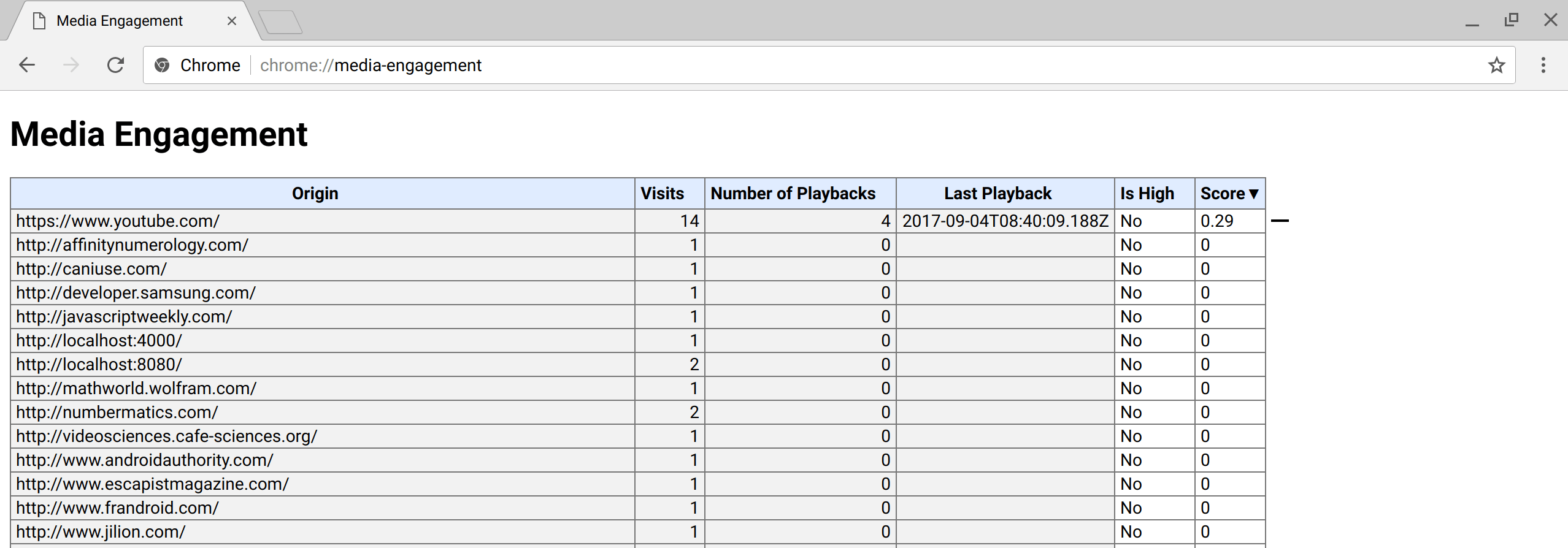

# Media Engagement Index

The Media Engagement Index (MEI) measures an individual's propensity to consume media on a site. Chrome's approach is a ratio of visits to significant media playback events per origin:

- The media (audio/video) must be played for more than seven seconds.

- Audio must be present and unmuted.

- The tab with the video is active.

- The video must be larger than 200x140 px.

From that, Chrome calculates a media engagement score, which is highest on sites where media is played on a regular basis. When it is high enough, media is allowed to autoplay on desktop only.
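The criteria and the per-origin ratio can be sketched as follows, for intuition only. The four checks mirror the list above; the scoring formula (visits with at least one significant playback divided by total visits) and `HYPOTHETICAL_THRESHOLD` are assumptions, not Chrome's actual constants, and the shapes of the `visits` and playback objects are made up for this example.

```javascript
// Rough model of the Media Engagement Index described above.
// HYPOTHETICAL_THRESHOLD is a placeholder, not Chrome's real value.
const HYPOTHETICAL_THRESHOLD = 0.3;

// A playback counts as "significant" only if all four criteria hold.
function isSignificantPlayback(p) {
  return (
    p.playedSeconds > 7 &&          // played for more than seven seconds
    p.hasAudio && !p.muted &&       // audio present and unmuted
    p.tabActive &&                  // the tab with the video is active
    p.width > 200 && p.height > 140 // larger than 200x140 px
  );
}

// `visits` is a list of visits to ONE origin; each visit lists the
// playbacks that happened during it (hypothetical data shape).
function mediaEngagementScore(visits) {
  if (visits.length === 0) return 0;
  const significantVisits = visits.filter((v) =>
    v.playbacks.some(isSignificantPlayback)
  ).length;
  return significantVisits / visits.length;
}

// Per the text, a high score only unlocks autoplay on desktop.
function meiAllowsAutoplay(visits, isDesktop) {
  return isDesktop && mediaEngagementScore(visits) >= HYPOTHETICAL_THRESHOLD;
}
```

The point of the ratio is that a site where users regularly watch media with sound (Example 1 below) scores high, while a site visited mostly for text (Example 2) stays low even if users occasionally watch a video.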

A user's MEI is available at the about://media-engagement internal page.

Screenshot of the about://media-engagement internal page in Chrome.

# Developer switches

As a developer, you may want to change Chrome autoplay policy behavior locally to test your website for different levels of user engagement.

- You can disable the autoplay policy entirely by using a command-line flag: `chrome.exe --autoplay-policy=no-user-gesture-required`. This allows you to test your website as if the user were strongly engaged with your site, so autoplay would always be allowed.

- You can also make sure MEI never grants autoplay by disabling MEI, as well as the preloaded data that lets sites with the highest overall MEI autoplay by default for new users. Do this with the following flag, passed as a single argument with no space after the comma: `chrome.exe --disable-features=PreloadMediaEngagementData,MediaEngagementBypassAutoplayPolicies`.
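If you switch between these two modes often, they can be scripted from a Node.js test harness. This is a minimal sketch: the mode names `'always-allow'` and `'no-mei'` are hypothetical labels, and `chromePath` must point to your own Chrome binary. Only the two flags come from the text.

```javascript
// Sketch: launch a local Chrome in one of the autoplay test modes above.
// Mode names are hypothetical; chromePath is whatever binary you use.
const { spawn } = require('child_process');

function autoplayFlags(mode) {
  switch (mode) {
    case 'always-allow':
      return ['--autoplay-policy=no-user-gesture-required'];
    case 'no-mei':
      // One argument, comma-separated, with no space after the comma.
      return ['--disable-features=PreloadMediaEngagementData,MediaEngagementBypassAutoplayPolicies'];
    default:
      throw new Error(`unknown mode: ${mode}`);
  }
}

function launchChrome(chromePath, mode, url) {
  // Inherit stdio so Chrome's console output shows up in the terminal.
  return spawn(chromePath, [...autoplayFlags(mode), url], { stdio: 'inherit' });
}
```

For example, `launchChrome('/path/to/chrome', 'always-allow', 'http://localhost:8080/')` (placeholder path and URL). If Chrome is already running with the same profile, the new flags may not take effect; launching with a separate profile via `--user-data-dir` avoids that.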

# Iframe delegation

A permissions policy allows developers to selectively enable and disable browser features and APIs. Once an origin has received autoplay permission, it can delegate that permission to cross-origin iframes with the permissions policy for autoplay. Note that autoplay is allowed by default on same-origin iframes.

```html
<!-- Autoplay is allowed. -->
<iframe src="https://cross-origin.com/myvideo.html" allow="autoplay"></iframe>

<!-- Autoplay and Fullscreen are allowed. -->
<iframe src="https://cross-origin.com/myvideo.html" allow="autoplay; fullscreen"></iframe>
```

When the permissions policy for autoplay is disabled, calls to `play()` without a user gesture will reject the promise with a `NotAllowedError` DOMException, and the `autoplay` attribute will also be ignored.

Warning

Older articles incorrectly recommend using the attribute `gesture=media`, which is not supported.
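As a small illustration of the same-origin versus cross-origin rule, the sketch below adds `allow="autoplay"` only when the iframe's origin differs from the embedding page's. The helper names `needsAutoplayDelegation`, `escapeAttr`, and `buildIframe` are hypothetical; only the `allow` attribute syntax (semicolon-separated features) comes from the text.

```javascript
// Same-origin iframes get autoplay by default; cross-origin ones need
// explicit delegation. Relative iframe URLs resolve against the page URL.
function needsAutoplayDelegation(pageUrl, iframeSrc) {
  return new URL(pageUrl).origin !== new URL(iframeSrc, pageUrl).origin;
}

// Minimal escaping so values are safe inside a double-quoted attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

function buildIframe(pageUrl, iframeSrc, extraFeatures = []) {
  const features = [...extraFeatures];
  if (needsAutoplayDelegation(pageUrl, iframeSrc)) features.unshift('autoplay');
  // Features in the allow attribute are separated by semicolons.
  const allow = features.length ? ` allow="${escapeAttr(features.join('; '))}"` : '';
  return `<iframe src="${escapeAttr(iframeSrc)}"${allow}></iframe>`;
}
```

With a placeholder page URL, `buildIframe('https://myblog.example/review', 'https://cross-origin.com/myvideo.html', ['fullscreen'])` returns the second `<iframe>` from the example above, while a same-origin path such as `'/trailer.html'` gets no `allow` attribute at all.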

# Examples

Example 1: Every time a user visits VideoSubscriptionSite.com on their laptop they watch a TV show or a movie. As their media engagement score is high, autoplay is allowed.

Example 2: GlobalNewsSite.com has both text and video content. Most users go to the site for text content and watch videos only occasionally. Users' media engagement score is low, so autoplay wouldn't be allowed if a user navigates directly from a social media page or search.

Example 3: LocalNewsSite.com has both text and video content. Most people enter the site through the homepage and then click on the news articles. Autoplay on the news article pages would be allowed because of user interaction with the domain. However, care should be taken to make sure users aren't surprised by autoplaying content.

Example 4: MyMovieReviewBlog.com embeds an iframe with a movie trailer to go with a review. Users interacted with the domain to get to the blog, so autoplay is allowed. However, the blog needs to explicitly delegate that privilege to the iframe in order for the content to autoplay.

# Chrome enterprise policies

It is possible to change the autoplay behavior with Chrome enterprise policies for use cases such as kiosks or unattended systems. Check out the Policy List help page to learn how to set the autoplay-related enterprise policies:

- The `AutoplayAllowed` policy controls whether autoplay is allowed or not.

- The `AutoplayAllowlist` policy allows you to specify an allowlist of URL patterns where autoplay will always be enabled.

# Best practices for web developers

# Audio/Video elements

Here's the one thing to remember: Don't ever assume a video will play, and don't show a pause button when the video is not actually playing. It is so important that I'm going to write it one more time below for those who simply skim through this post.

Important

Don't assume a video will play, and don't show a pause button when the video is not actually playing.

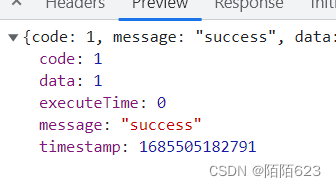

You should always look at the Promise returned by the play function to see if it was rejected:

```javascript
var promise = document.querySelector('video').play();

if (promise !== undefined) {
  promise.then(_ => {
    // Autoplay started!
  }).catch(error => {
    // Autoplay was prevented.
    // Show a "Play" button so that user can start playback.
  });
}
```

Caution

Don't play interstitial ads without showing any media controls as they may not autoplay and users will have no way of starting playback.

One cool way to engage users is to use muted autoplay and let them choose to unmute. (See the example below.) Some websites already do this effectively, including Facebook, Instagram, Twitter, and YouTube.

```html
<video id="video" muted autoplay></video>
<button id="unmuteButton">Unmute</button>

<script>
  var video = document.getElementById('video');
  var unmuteButton = document.getElementById('unmuteButton');

  unmuteButton.addEventListener('click', function() {
    video.muted = false;
  });
</script>
```

Events that trigger user activation are still to be defined consistently across browsers. I'd recommend you stick to "click" for the time being. See GitHub issue whatwg/html#3849.
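The promise check and the muted-autoplay approach above can be combined into one helper. This is a sketch of a common fallback pattern rather than code from the original post: it tries to play with sound, retries muted if the rejection is a `NotAllowedError`, and only then asks the page to show a "Play" button. `startPlayback` and the `showPlayButton` callback are hypothetical names, and any object with a `muted` property and a `play()` method works as `video`.

```javascript
// Try autoplay with sound, fall back to muted autoplay, and finally to a
// visible "Play" button. Resolves to a string describing what happened.
async function startPlayback(video, showPlayButton) {
  try {
    // play() returns a Promise in current browsers; await also tolerates
    // older browsers that return undefined.
    await video.play();
    return video.muted ? 'playing-muted' : 'playing-with-sound';
  } catch (err) {
    // Only an autoplay-policy rejection should trigger the fallback;
    // other errors (e.g. an unsupported source) are passed on.
    if (err.name !== 'NotAllowedError') throw err;
  }

  // Muted autoplay is always allowed, so retry without sound.
  video.muted = true;
  try {
    await video.play();
    return 'playing-muted'; // a good moment to show an "Unmute" button
  } catch (err) {
    showPlayButton(); // never pretend the video is playing
    return 'blocked';
  }
}
```

Returning a status string instead of toggling UI directly keeps the helper easy to test and lets the caller decide between showing "Unmute" and "Play" controls.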

# Web Audio

The Web Audio API has been covered by autoplay since Chrome 71. There are a few things to know about it. First, it is good practice to wait for a user interaction before starting audio playback so that users are aware of something happening. Think of a "play" button or "on/off" switch for instance. You can also add an "unmute" button depending on the flow of the app.

If an AudioContext is created before the document receives a user gesture, it will be created in the "suspended" state, and you will need to call resume() after the user gesture.

If you create your AudioContext on page load, you'll have to call resume() at some time after the user interacted with the page (e.g., after a user clicks a button). Alternatively, the AudioContext will be resumed after a user gesture if start() is called on any attached node.

```javascript
// Existing code, essentially unchanged: `context` is declared at the top
// level so that the click handler below can reach it.
var context;

window.onload = function() {
  context = new AudioContext();
  // Setup all nodes
  // ...
};

// One-liner to resume playback when user interacted with the page.
document.querySelector('button').addEventListener('click', function() {
  context.resume().then(() => {
    console.log('Playback resumed successfully');
  });
});
```

You may also create the AudioContext only when the user interacts with the page.

```javascript
document.querySelector('button').addEventListener('click', function() {
  var context = new AudioContext();
  // Setup all nodes
  // ...
});
```

To detect whether the browser requires a user interaction to play audio, check AudioContext.state after you've created it. If playing is allowed, it should immediately switch to running. Otherwise it will be suspended. If you listen to the statechange event, you can detect changes asynchronously.
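The state check and the statechange listener can be wrapped together as follows. This is a minimal sketch: `watchAudioContext` and its `onRunning` callback are hypothetical names. Because the switch to "running" may be reported asynchronously, the helper does not rely on the initial state alone; it also waits for a statechange to "running", for example after `resume()` is called from a click handler.

```javascript
// Report whether an AudioContext started out suspended (usually a sign
// that a user gesture is needed) and call onRunning once it is running.
function watchAudioContext(context, onRunning) {
  if (context.state !== 'suspended') {
    onRunning();
    return false; // nothing to ask the user for
  }
  const handler = () => {
    if (context.state === 'running') {
      // Stop listening once playback has actually started.
      context.removeEventListener('statechange', handler);
      onRunning();
    }
  };
  context.addEventListener('statechange', handler);
  return true; // caller should show a "play" or "unmute" control
}
```

In a page, you would call `watchAudioContext(new AudioContext(), hidePlayButton)` (with your own `hidePlayButton`) and show the control whenever it returns `true`; the existing click handler that calls `context.resume()` then triggers the callback.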

To see an example, check out the small Pull Request that fixes Web Audio playback for these autoplay policy rules for https://airhorner.com.

You can find a summary of Chrome's autoplay feature on the Chromium site.