Table of Contents

- Matrix Operations

- Preface

- 1. Matrix Multiplication and Differentiation

- Summary

Matrix Operations

Preface

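These are notes on the new, step-by-step course "hand-writing autonomous-driving CV from scratch" released by 手写AI (link). They are my personal study notes, kept for my own reference.

This lesson covers the basics of matrix operations. The goal is to use matrices to express several linear regression models at once.

The course outline is shown in the mind map below.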

1. Matrix Multiplication and Differentiation

First, a quick review of some matrix basics.

Definition of matrix multiplication:

$$\begin{bmatrix}a&b\\ d&e\end{bmatrix}\times\begin{bmatrix}1&3\\ 2&4\end{bmatrix}=\begin{bmatrix}a\cdot1+b\cdot2&a\cdot3+b\cdot4\\ d\cdot1+e\cdot2&d\cdot3+e\cdot4\end{bmatrix}$$

Mnemonic: C[r][c] = multiply-accumulate(row r of A, column c of B), that is, $C_{rc} = \sum_k A_{rk} B_{kc}$.

Reference: https://www.cnblogs.com/ljy-endl/p/11411665.html
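The row-times-column rule above can be sketched in plain Python. NumPy is left out to keep the example dependency-free. `matmul` is a hypothetical helper name. The example numbers substitute a=1, b=2, d=3, e=4 into the definition:

```python
def matmul(A, B):
    """Naive matrix product: C[r][c] = sum over k of A[r][k] * B[k][c]."""
    rows, inner, cols = len(A), len(B), len(B[0])
    # The number of columns of A must equal the number of rows of B
    if any(len(row) != inner for row in A):
        raise ValueError("shape mismatch: A's column count must equal B's row count")
    C = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # multiply-accumulate row r of A with column c of B
            C[r][c] = sum(A[r][k] * B[k][c] for k in range(inner))
    return C

# Same layout as the definition above, with a=1, b=2, d=3, e=4
A = [[1, 2], [3, 4]]
B = [[1, 3], [2, 4]]
print(matmul(A, B))  # → [[5, 11], [11, 25]]
```

The triple loop mirrors the mnemonic directly. It is meant for understanding, not speed.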

Matrix differentiation:

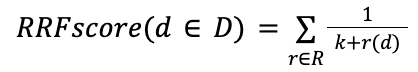

For $A\cdot B = C$, let $L$ be a loss function of $C$, and let

$$G = \dfrac{\partial L}{\partial C}$$

If we differentiate $C$ directly with respect to $A$, then $G$ is taken to be an all-ones matrix with the same shape as $C$. This is equivalent to choosing $L = \sum_{i,j} C_{ij}$. The gradients are then:

$$\dfrac{\partial L}{\partial A}=G\cdot B^T \qquad \dfrac{\partial L}{\partial B}=A^T \cdot G$$
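Here is a quick numerical check of these two formulas, written as a minimal sketch. It takes $L = \sum_{i,j} C_{ij}$, so $G$ is all ones. It uses small non-square matrices with arbitrary values so that the transposes matter. It then compares $G\cdot B^T$ and $A^T\cdot G$ against central finite differences. The helper names (`matmul`, `transpose`, `loss_sum`, `numeric_grad`) are my own and do not come from the course:

```python
def matmul(X, Y):
    """Naive matrix product: result[r][c] = sum_k X[r][k] * Y[k][c]."""
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]

def transpose(M):
    return [list(col) for col in zip(*M)]

def loss_sum(A, B):
    # L = sum of every entry of C = A·B, so G = dL/dC is all ones
    return sum(sum(row) for row in matmul(A, B))

def numeric_grad(f, M, eps=1e-6):
    """Central-difference estimate of df/dM[r][c]; M is perturbed in place and restored."""
    grad = [[0.0] * len(M[0]) for _ in M]
    for r in range(len(M)):
        for c in range(len(M[0])):
            old = M[r][c]
            M[r][c] = old + eps
            f_plus = f()
            M[r][c] = old - eps
            f_minus = f()
            M[r][c] = old  # restore the original entry
            grad[r][c] = (f_plus - f_minus) / (2 * eps)
    return grad

# Arbitrary non-square shapes: A is 2x3, B is 3x2, so C is 2x2
A = [[1.0, 2.0, 0.5], [3.0, -1.0, 2.0]]
B = [[0.5, 1.0], [2.0, -3.0], [1.5, 4.0]]
G = [[1.0, 1.0], [1.0, 1.0]]  # all-ones, same shape as C

dA = matmul(G, transpose(B))  # dL/dA = G · B^T  (2x3, same shape as A)
dB = matmul(transpose(A), G)  # dL/dB = A^T · G  (3x2, same shape as B)
num_dA = numeric_grad(lambda: loss_sum(A, B), A)
num_dB = numeric_grad(lambda: loss_sum(A, B), B)

# Largest disagreement between the formula and the finite-difference estimate
err = max(abs(p - q)
          for exact, approx in ((dA, num_dA), (dB, num_dB))
          for row_e, row_a in zip(exact, approx)
          for p, q in zip(row_e, row_a))
print(dA)  # → [[1.5, -1.0, 5.5], [1.5, -1.0, 5.5]]
print(dB)  # → [[4.0, 4.0], [1.0, 1.0], [2.5, 2.5]]
print("max abs difference:", err)
```

With an all-ones $G$, each row of $\partial L/\partial A$ is just the row sums of $B$. Likewise, each column of $\partial L/\partial B$ is the column sums of $A$, which makes the result easy to check by hand.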

Derivation of the matrix derivative:

- Consider the matrix product $A \cdot B = C$.

- Consider the loss function $L = \sum_{i=1}^{m}\sum_{j=1}^{n}(C_{ij} - p)^2$.

- Consider the derivative of $L$ with respect to each entry of $C$: $\triangledown C_{ij} = \dfrac{\partial L}{\partial C_{ij}}$.

- Consider the case where $A$, $B$, and $C$ are all 2x2 matrices, and define $G$ as the derivative of $L$ with respect to $C$:

  $$A = \begin{bmatrix} a & b\\ c & d \end{bmatrix} \quad B = \begin{bmatrix} e & f \\ g & h \end{bmatrix} \quad C = \begin{bmatrix} i & j \\ k & l \end{bmatrix} \quad G = \frac{\partial L}{\partial C} = \begin{bmatrix} \frac{\partial L}{\partial i} & \frac{\partial L}{\partial j} \\ \frac{\partial L}{\partial k} & \frac{\partial L}{\partial l} \end{bmatrix} = \begin{bmatrix} w & x \\ y & z \end{bmatrix}$$

- Expand the left-hand side $A \cdot B$:

  $$C = \begin{bmatrix} i = ae + bg & j = af + bh\\ k = ce + dg & l = cf + dh \end{bmatrix}$$

- Take the derivative of $L$ with respect to each entry of $A$, $\triangledown A_{ij} = \dfrac{\partial L}{\partial A_{ij}}$, using the chain rule. Entries $a$ and $b$ appear only in $i$ and $j$ (the first row of $C$). Entries $c$ and $d$ appear only in $k$ and $l$:

  $$\begin{aligned} \frac{\partial L}{\partial a} &= \frac{\partial L}{\partial i} \cdot \frac{\partial i}{\partial a} + \frac{\partial L}{\partial j} \cdot \frac{\partial j}{\partial a} \\ \frac{\partial L}{\partial b} &= \frac{\partial L}{\partial i} \cdot \frac{\partial i}{\partial b} + \frac{\partial L}{\partial j} \cdot \frac{\partial j}{\partial b} \\ \frac{\partial L}{\partial c} &= \frac{\partial L}{\partial k} \cdot \frac{\partial k}{\partial c} + \frac{\partial L}{\partial l} \cdot \frac{\partial l}{\partial c} \\ \frac{\partial L}{\partial d} &= \frac{\partial L}{\partial k} \cdot \frac{\partial k}{\partial d} + \frac{\partial L}{\partial l} \cdot \frac{\partial l}{\partial d} \end{aligned}$$

  Now substitute the entries of $G$ ($w, x, y, z$) and the partial derivatives of the expansion above (for example $\partial i/\partial a = e$ and $\partial j/\partial a = f$). This gives:

  $$\begin{aligned} \frac{\partial L}{\partial a} &= we + xf \\ \frac{\partial L}{\partial b} &= wg + xh \\ \frac{\partial L}{\partial c} &= ye + zf \\ \frac{\partial L}{\partial d} &= yg + zh \end{aligned}$$

- Therefore the derivative with respect to $A$ is

  $$\frac{\partial L}{\partial A} = \begin{bmatrix} we + xf & wg + xh\\ ye + zf & yg + zh \end{bmatrix} = \begin{bmatrix} w & x\\ y & z \end{bmatrix} \begin{bmatrix} e & g\\ f & h \end{bmatrix}$$

  $$\frac{\partial L}{\partial A} = G \cdot B^T$$

- Similarly, the derivative with respect to $B$ is

  $$\begin{aligned} \frac{\partial L}{\partial e} &= wa + yc \\ \frac{\partial L}{\partial f} &= xa + zc \\ \frac{\partial L}{\partial g} &= wb + yd \\ \frac{\partial L}{\partial h} &= xb + zd \end{aligned}$$

  $$\frac{\partial L}{\partial B} = \begin{bmatrix} wa + yc & xa + zc\\ wb + yd & xb + zd \end{bmatrix} = \begin{bmatrix} a & c\\ b & d \end{bmatrix} \begin{bmatrix} w & x\\ y & z \end{bmatrix}$$

  $$\frac{\partial L}{\partial B} = A^T \cdot G$$
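The next sketch checks that the entry-by-entry results of the derivation agree with the matrix forms $G\cdot B^T$ and $A^T\cdot G$. It plugs arbitrary numbers into $a,\dots,h$ and $p$. It assumes the squared-error loss above, for which each entry of $G$ is $\partial L/\partial C_{ij} = 2(C_{ij}-p)$. The values are arbitrary, and `matmul`/`transpose` are hypothetical helpers:

```python
# Arbitrary values for the 2x2 entries used in the derivation
a, b, c, d = 1.0, 2.0, 3.0, 4.0
e, f, g, h = 0.5, -1.0, 2.0, 1.5
p = 1.0  # target value p in L = sum (C_ij - p)^2

A = [[a, b], [c, d]]
B = [[e, f], [g, h]]

def matmul(X, Y):
    """Naive matrix product: result[r][col] = sum_k X[r][k] * Y[k][col]."""
    return [[sum(X[r][k] * Y[k][col] for k in range(len(Y))) for col in range(len(Y[0]))]
            for r in range(len(X))]

def transpose(M):
    return [list(col) for col in zip(*M)]

C = matmul(A, B)  # [[i, j], [k, l]]
# For L = sum (C_ij - p)^2, each entry of G is dL/dC_ij = 2 (C_ij - p)
G = [[2 * (C[r][col] - p) for col in range(2)] for r in range(2)]
(w, x), (y, z) = G

# Entry-by-entry results from the chain-rule expansion
dA_by_hand = [[w * e + x * f, w * g + x * h],
              [y * e + z * f, y * g + z * h]]
dB_by_hand = [[w * a + y * c, x * a + z * c],
              [w * b + y * d, x * b + z * d]]

# Matrix-form results
dA_matrix = matmul(G, transpose(B))  # G · B^T
dB_matrix = matmul(transpose(A), G)  # A^T · G
print(dA_by_hand == dA_matrix, dB_by_hand == dB_matrix)  # → True True
```

The values are chosen so that every intermediate result is exactly representable in floating point. That makes exact `==` comparison safe here. For general inputs, compare with a tolerance instead.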

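Summary

This lesson covered matrix differentiation. When we later implement multiple linear regression or logistic regression models, we can express them in matrix form.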
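Here is a rough illustration of that idea: a minimal sketch of multiple linear regression trained by gradient descent with the formula $\partial L/\partial B = A^T\cdot G$. In this setting, $A$ is the data matrix $X$ and $B$ is the weight column $W$. The toy data, learning rate, and iteration count are assumptions chosen for illustration and do not come from the course:

```python
def matmul(X, Y):
    """Naive matrix product: result[r][c] = sum_k X[r][k] * Y[k][c]."""
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]

def transpose(M):
    return [list(col) for col in zip(*M)]

# Toy data (hypothetical): y = 2*x1 - 3*x2 + 1; the last column of ones carries the bias
X = [[1.0, 2.0, 1.0],
     [2.0, 0.0, 1.0],
     [0.0, 1.0, 1.0],
     [3.0, 1.0, 1.0],
     [1.0, -1.0, 1.0]]
true_W = [[2.0], [-3.0], [1.0]]
Y = matmul(X, true_W)  # N x 1 targets, noise-free

W = [[0.0], [0.0], [0.0]]  # parameters to learn, 3 x 1
lr = 0.01                  # learning rate (assumed)
for step in range(2000):
    pred = matmul(X, W)                                         # C = X · W
    G = [[2 * (pred[i][0] - Y[i][0])] for i in range(len(X))]   # dL/dC for L = sum (C - Y)^2
    dW = matmul(transpose(X), G)                                # dL/dW = X^T · G
    W = [[W[j][0] - lr * dW[j][0]] for j in range(len(W))]      # gradient-descent step

print([round(wj[0], 3) for wj in W])  # → [2.0, -3.0, 1.0]
```

Here `X·W` plays the role of $C = A\cdot B$, so only the weight gradient $X^T\cdot G$ is needed. The data is fixed, so the gradient with respect to $X$ is never used.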