I. Official documentation:

https://mmtracking.readthedocs.io/zh_CN/latest/install.html#

Version compatibility among MMTracking, MMCV, and MMDetection:

| MMTracking version | MMCV version | MMDetection version |
|---|---|---|
| master | mmcv-full>=1.3.17, \<2.0.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.14.0 | mmcv-full>=1.3.17, \<2.0.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.13.0 | mmcv-full>=1.3.17, \<1.6.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.12.0 | mmcv-full>=1.3.17, \<1.5.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.11.0 | mmcv-full>=1.3.17, \<1.5.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.10.0 | mmcv-full>=1.3.17, \<1.5.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.9.0 | mmcv-full>=1.3.17, \<1.5.0 | MMDetection>=2.19.1, \<3.0.0 |
| 0.8.0 | mmcv-full>=1.3.8, \<1.4.0 | MMDetection>=2.14.0, \<3.0.0 |
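
The ranges in the table can be checked mechanically before installing anything. The following is a minimal sketch, not part of MMTracking: the names `COMPAT`, `parse`, `in_range`, and `is_compatible` are hypothetical helpers, and the version strings passed in at the bottom are only sample inputs. It assumes plain numeric versions and would need extending for pre-release tags such as `rc1`.

```python
# Ranges copied from the compatibility table:
# MMTracking version -> ((mmcv-full min, max exclusive), (mmdet min, max exclusive))
COMPAT = {
    "0.14.0": (("1.3.17", "2.0.0"), ("2.19.1", "3.0.0")),
    "0.13.0": (("1.3.17", "1.6.0"), ("2.19.1", "3.0.0")),
    "0.12.0": (("1.3.17", "1.5.0"), ("2.19.1", "3.0.0")),
    "0.11.0": (("1.3.17", "1.5.0"), ("2.19.1", "3.0.0")),
    "0.10.0": (("1.3.17", "1.5.0"), ("2.19.1", "3.0.0")),
    "0.9.0": (("1.3.17", "1.5.0"), ("2.19.1", "3.0.0")),
    "0.8.0": (("1.3.8", "1.4.0"), ("2.14.0", "3.0.0")),
}


def parse(version):
    """Turn '1.3.17' into (1, 3, 17); a local suffix such as '+cu113' is dropped."""
    return tuple(int(part) for part in version.split("+")[0].split(".")[:3])


def in_range(version, low, high):
    """True if low <= version < high, compared numerically rather than as strings."""
    return parse(low) <= parse(version) < parse(high)


def is_compatible(mmtrack, mmcv, mmdet):
    """Check an mmcv-full / mmdet pair against the row for one MMTracking release."""
    (mmcv_lo, mmcv_hi), (mmdet_lo, mmdet_hi) = COMPAT[mmtrack]
    return in_range(mmcv, mmcv_lo, mmcv_hi) and in_range(mmdet, mmdet_lo, mmdet_hi)


print(is_compatible("0.14.0", "1.7.1", "2.28.2"))  # True
print(is_compatible("0.13.0", "1.7.1", "2.28.2"))  # False: mmcv-full must be < 1.6.0
```

Comparing tuples of integers rather than raw strings matters here: as strings, "1.3.8" would sort after "1.3.17".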

II. Installation:

- Create and activate the mmtrack environment with conda:

conda create -n mmtrack python=3.8 pip numpy -y

conda activate mmtrack

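- Check the CUDA version:

nvcc -V or nvidia-smi

Note:

CUDA has a Runtime API and a Driver API, and each has its own CUDA version:

nvcc -V shows the CUDA version of the Runtime API,

while nvidia-smi shows the CUDA version of the Driver API.

In general it is enough that the Runtime API version is <= the Driver API version.

What software calls at run time should be the Runtime API.

cat /usr/local/cuda/version.txt shows the CUDA version that the /usr/local/cuda symlink points to.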

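Copyright notice: the note above comes from an original article by CSDN blogger 「图形学挖掘机」, released under the CC 4.0 BY-SA license. Reposts must include a link to the original source and this notice.

Original link: https://blog.csdn.net/weixin_42416791/article/details/115720181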
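
The runtime-versus-driver rule from the note can be checked with a short script. This is a sketch under stated assumptions: the function names are hypothetical, and the two sample strings are abbreviated illustrations of what `nvcc -V` and `nvidia-smi` print, not output captured from the author's machine.

```python
import re


def nvcc_cuda_version(nvcc_output):
    """Extract (major, minor) from `nvcc -V` text such as '... release 11.3, V11.3.109'."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def driver_cuda_version(smi_output):
    """Extract (major, minor) from the `nvidia-smi` header field 'CUDA Version: 12.0'."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def runtime_fits_driver(runtime, driver):
    """The rule of thumb from the note: Runtime API version <= Driver API version."""
    return runtime <= driver


# Illustrative sample lines (assumed, shortened); on a real machine, capture them with
# subprocess.run(["nvcc", "-V"], capture_output=True, text=True).stdout and likewise
# for ["nvidia-smi"].
sample_nvcc = "Cuda compilation tools, release 11.3, V11.3.109"
sample_smi = "| NVIDIA-SMI 525.60.13   Driver Version: 525.60.13   CUDA Version: 12.0 |"

runtime = nvcc_cuda_version(sample_nvcc)
driver = driver_cuda_version(sample_smi)
print(runtime, driver, runtime_fits_driver(runtime, driver))  # (11, 3) (12, 0) True
```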

- Install PyTorch and its companion packages:

pip install torch==1.12.0+cu113 torchvision==0.13.0+cu113 torchaudio==0.12.0 --extra-index-url https://download.pytorch.org/whl/cu113

Note: my machine has CUDA 11.3, so I took the command above from the CUDA 11.3 entry on the official page:

https://pytorch.org/get-started/previous-versions/

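- Install mmcv:

pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.12.0/index.html

Note: the CUDA and torch versions in the URL must match those installed in the previous step. I have cu113 and torch 1.12.0, so the URL contains cu113 and torch1.12.0.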
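
To avoid mistyping the index URL, it can be derived from the torch version string. The helper below is a hypothetical sketch that only reproduces the URL pattern used in the command above. It assumes the installed torch is a CUDA build whose version looks like `1.12.0+cu113` (the value of `torch.__version__`), and it does not verify that an index page exists for every torch and CUDA combination.

```python
def mmcv_find_links(torch_version):
    """Build the mmcv-full wheel index URL from a version such as '1.12.0+cu113'."""
    release, _, local = torch_version.partition("+")
    if not local.startswith("cu"):
        raise ValueError(
            f"expected a CUDA build such as '1.12.0+cu113', got {torch_version!r}"
        )
    return f"https://download.openmmlab.com/mmcv/dist/{local}/torch{release}/index.html"


# With torch installed, pass torch.__version__ instead of the literal string.
print(mmcv_find_links("1.12.0+cu113"))
# https://download.openmmlab.com/mmcv/dist/cu113/torch1.12.0/index.html
```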

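- Install mmdet and mmtrack by following the official docs: the "Dependencies" page of the MMTracking 0.14.0 documentation.

- Install MMDetection:

pip install mmdet

Or, if you want to modify the code, you can build MMDetection from source:

git clone https://github.com/open-mmlab/mmdetection.git

cd mmdetection

Note: the default branch you get from the clone is version 3.0.0, which mmtrack does not support yet, so check out the 2.x branch before building.

Checkout command:

git checkout origin/2.x

Build and install commands:

pip install -r requirements/build.txt

pip install -v -e . # or "python setup.py develop"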

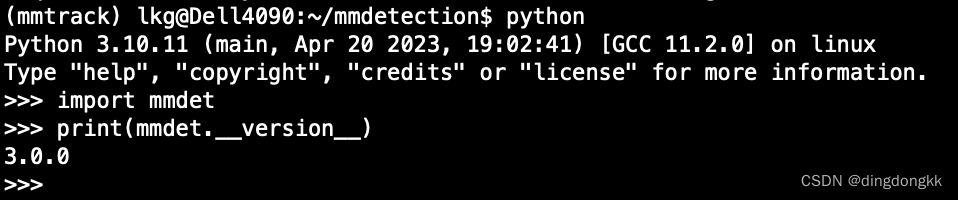

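Verify that the installation succeeded:

import mmdet

print(mmdet.__version__)

# Expected output: a 2.x version number, because MMTracking requires MMDetection < 3.0.0

Further reference:

the "Get Started" page of the MMDetection 3.0.0 documentation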

- Clone the MMTracking repository:

git clone https://github.com/open-mmlab/mmtracking.git

cd mmtracking

- Install the build dependencies first, then install MMTracking:

pip install -r requirements/build.txt

pip install -v -e . # or "python setup.py develop"

- Install the extra dependencies:

  - For MOTChallenge evaluation:

pip install git+https://github.com/JonathonLuiten/TrackEval.git

  - For LVIS evaluation:

pip install git+https://github.com/lvis-dataset/lvis-api.git

  - For TAO evaluation:

pip install git+https://github.com/TAO-Dataset/tao.git

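Verification

To verify that MMTracking and the required environment are installed correctly, we can run the demo scripts for MOT, VID, and SOT.

Running the MOT demo script should produce a video file named mot.mp4:

python demo/demo_mot_vis.py configs/mot/deepsort/sort_faster-rcnn_fpn_4e_mot17-private.py --input demo/demo.mp4 --output mot.mp4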
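
Before running the demo, it can help to list which versions actually ended up in the environment. The sketch below uses only the standard library. The distribution names in `PACKAGES` are my assumption of what pip registers for each project (for example `mmcv-full` rather than `mmcv`), and `installed_versions` is a hypothetical helper name.

```python
from importlib import metadata

# Distribution names as pip is assumed to register them (not import names).
PACKAGES = ["torch", "torchvision", "mmcv-full", "mmdet", "mmtrack"]


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name:12s} {version or 'NOT INSTALLED'}")
```

The printed versions can then be compared by hand against the compatibility table in section I.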

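III. Pitfalls I ran into

1. Error:

The detected CUDA version (12.0) mismatches the version that was used to compile PyTorch (11.8). Please make sure to use the same CUDA versions.

Cause: PyTorch 2.0.1 does not support CUDA 12.0. The installed build was compiled against CUDA 11.8, while the local CUDA toolkit is 12.0.

Fix: install CUDA 11.8.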

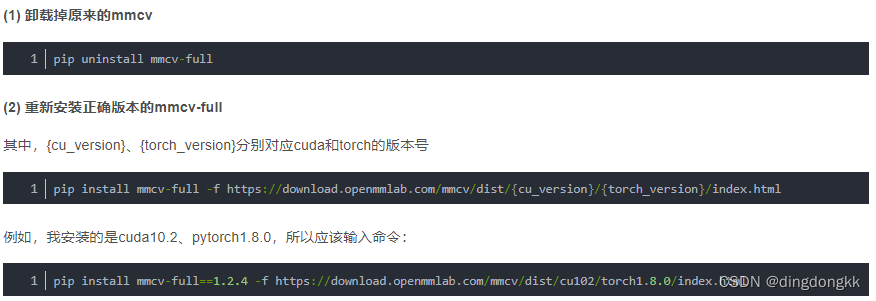

2. Installing mmcv with mim install "mmcv>=2.0.0" instead of pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.12.0/index.html

Error:

nvcc fatal : Unsupported gpu architecture 'compute_89'

error: command '/usr/local/cuda-11.4/bin/nvcc' failed with exit code 1

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: legacy-install-failure

× Encountered error while trying to install package.

╰─> mmcv

note: This is an issue with the package mentioned above, not pip.

hint: See above for output from the failure.

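Cause: compute capability differs from GPU to GPU. Here the build targets compute capability 8.9 (compute_89), which the nvcc from CUDA 11.4 shown in the log does not recognize. Compute capability reference: https://en.wikipedia.org/wiki/CUDA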

| Compute capability (version) | Micro-architecture | GPUs | GeForce |
|---|---|---|---|
| 1.0 | Tesla | G80 | GeForce 8800 Ultra, GeForce 8800 GTX, GeForce 8800 GTS(G80) |
| 1.1 | Tesla | G92, G94, G96, G98, G84, G86 | GeForce GTS 250, GeForce 9800 GX2, GeForce 9800 GTX, GeForce 9800 GT, GeForce 8800 GTS(G92), GeForce 8800 GT, GeForce 9600 GT, GeForce 9500 GT, GeForce 9400 GT, GeForce 8600 GTS, GeForce 8600 GT, GeForce 8500 GT, GeForce G110M, GeForce 9300M GS, GeForce 9200M GS, GeForce 9100M G, GeForce 8400M GT, GeForce G105M |
| 1.2 | Tesla | GT218, GT216, GT215 | GeForce GT 340*, GeForce GT 330*, GeForce GT 320*, GeForce 315*, GeForce 310*, GeForce GT 240, GeForce GT 220, GeForce 210, GeForce GTS 360M, GeForce GTS 350M, GeForce GT 335M, GeForce GT 330M, GeForce GT 325M, GeForce GT 240M, GeForce G210M, GeForce 310M, GeForce 305M |
| 1.3 | Tesla | GT200, GT200b | GeForce GTX 295, GTX 285, GTX 280, GeForce GTX 275, GeForce GTX 260 |
| 2.0 | Fermi | GF100, GF110 | GeForce GTX 590, GeForce GTX 580, GeForce GTX 570, GeForce GTX 480, GeForce GTX 470, GeForce GTX 465, GeForce GTX 480M |
| 2.1 | Fermi | GF104, GF106 GF108, GF114, GF116, GF117, GF119 | GeForce GTX 560 Ti, GeForce GTX 550 Ti, GeForce GTX 460, GeForce GTS 450, GeForce GTS 450*, GeForce GT 640 (GDDR3), GeForce GT 630, GeForce GT 620, GeForce GT 610, GeForce GT 520, GeForce GT 440, GeForce GT 440*, GeForce GT 430, GeForce GT 430*, GeForce GT 420*, GeForce GTX 675M, GeForce GTX 670M, GeForce GT 635M, GeForce GT 630M, GeForce GT 625M, GeForce GT 720M, GeForce GT 620M, GeForce 710M, GeForce 610M, GeForce 820M, GeForce GTX 580M, GeForce GTX 570M, GeForce GTX 560M, GeForce GT 555M, GeForce GT 550M, GeForce GT 540M, GeForce GT 525M, GeForce GT 520MX, GeForce GT 520M, GeForce GTX 485M, GeForce GTX 470M, GeForce GTX 460M, GeForce GT 445M, GeForce GT 435M, GeForce GT 420M, GeForce GT 415M, GeForce 710M, GeForce 410M |
| 3.0 | Kepler | GK104, GK106, GK107 | GeForce GTX 770, GeForce GTX 760, GeForce GT 740, GeForce GTX 690, GeForce GTX 680, GeForce GTX 670, GeForce GTX 660 Ti, GeForce GTX 660, GeForce GTX 650 Ti BOOST, GeForce GTX 650 Ti, GeForce GTX 650, GeForce GTX 880M, GeForce GTX 870M, GeForce GTX 780M, GeForce GTX 770M, GeForce GTX 765M, GeForce GTX 760M, GeForce GTX 680MX, GeForce GTX 680M, GeForce GTX 675MX, GeForce GTX 670MX, GeForce GTX 660M, GeForce GT 750M, GeForce GT 650M, GeForce GT 745M, GeForce GT 645M, GeForce GT 740M, GeForce GT 730M, GeForce GT 640M, GeForce GT 640M LE, GeForce GT 735M, GeForce GT 730M |
| 3.5 | Kepler | GK110, GK208 | GeForce GTX Titan Z, GeForce GTX Titan Black, GeForce GTX Titan, GeForce GTX 780 Ti, GeForce GTX 780, GeForce GT 640 (GDDR5), GeForce GT 630 v2, GeForce GT 730, GeForce GT 720, GeForce GT 710, GeForce GT 740M (64-bit, DDR3), GeForce GT 920M |
| 5.0 | Maxwell | GM107, GM108 | GeForce GTX 750 Ti, GeForce GTX 750, GeForce GTX 960M, GeForce GTX 950M, GeForce 940M, GeForce 930M, GeForce GTX 860M, GeForce GTX 850M, GeForce 845M, GeForce 840M, GeForce 830M |
| 5.2 | Maxwell | GM200, GM204, GM206 | GeForce GTX Titan X, GeForce GTX 980 Ti, GeForce GTX 980, GeForce GTX 970, GeForce GTX 960, GeForce GTX 950, GeForce GTX 750 SE, GeForce GTX 980M, GeForce GTX 970M, GeForce GTX 965M |
| 6.1 | Pascal | GP102, GP104, GP106, GP107, GP108 | Nvidia TITAN Xp, Titan X, GeForce GTX 1080 Ti, GTX 1080, GTX 1070 Ti, GTX 1070, GTX 1060, GTX 1050 Ti, GTX 1050, GT 1030, GT 1010, MX350, MX330, MX250, MX230, MX150, MX130, MX110 |
| 7.0 | Volta | GV100 | NVIDIA TITAN V |
| 7.5 | Turing | TU102, TU104, TU106, TU116, TU117 | NVIDIA TITAN RTX, GeForce RTX 2080 Ti, RTX 2080 Super, RTX 2080, RTX 2070 Super, RTX 2070, RTX 2060 Super, RTX 2060 12GB, RTX 2060, GeForce GTX 1660 Ti, GTX 1660 Super, GTX 1660, GTX 1650 Super, GTX 1650, MX550, MX450 |
| 8.6 | Ampere | GA102, GA103, GA104, GA106, GA107 | GeForce RTX 3090 Ti, RTX 3090, RTX 3080 Ti, RTX 3080 12GB, RTX 3080, RTX 3070 Ti, RTX 3070, RTX 3060 Ti, RTX 3060, RTX 3050, RTX 3050 Ti(mobile), RTX 3050(mobile), RTX 2050(mobile), MX570 |
| 8.9 | Ada Lovelace | AD102, AD103, AD104, AD106, AD107 | GeForce RTX 4090, RTX 4080, RTX 4070 Ti, RTX 4070 |
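
The 'compute_89' failure comes down to whether the local nvcc is new enough for the GPU's compute capability. The lookup below is a rough sketch: the minimum toolkit versions are my own recollection of NVIDIA's release notes, not something taken from this post, so treat them as assumptions and verify them. `MIN_CUDA_FOR_CAPABILITY` and `nvcc_supports` are hypothetical names.

```python
# Earliest CUDA toolkit whose nvcc accepts each compute capability.
# ASSUMPTION: values recalled from NVIDIA release notes; verify before relying on them.
MIN_CUDA_FOR_CAPABILITY = {
    "7.0": (9, 0),   # Volta
    "7.5": (10, 0),  # Turing
    "8.6": (11, 1),  # Ampere (GA10x)
    "8.9": (11, 8),  # Ada Lovelace
}


def nvcc_supports(toolkit, capability):
    """True if a toolkit given as (major, minor) can compile for the capability."""
    return toolkit >= MIN_CUDA_FOR_CAPABILITY[capability]


# The failing build used /usr/local/cuda-11.4 and targeted compute_89.
print(nvcc_supports((11, 4), "8.9"))  # False
print(nvcc_supports((11, 8), "8.9"))  # True
```

With PyTorch installed, `torch.cuda.get_device_capability()` returns the (major, minor) pair for the current GPU, which can be formatted into the key used here.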

3. Error when running pip install git+https://github.com/JonathonLuiten/TrackEval.git:

fatal: unable to access 'https://github.com/JonathonLuiten/TrackEval.git': GnuTLS recv error (-110): The TLS connection was non-properly terminated.

error: subprocess-exited-with-error

× git clone --filter=blob:none --quiet https://github.com/JonathonLuiten/TrackEval.git /tmp/pip-req-build-ex0bgbo7 did not run successfully.

│ exit code: 128

╰─> See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

error: subprocess-exited-with-error

× git clone --filter=blob:none --quiet https://github.com/JonathonLuiten/TrackEval.git /tmp/pip-req-build-ex0bgbo7 did not run successfully.

│ exit code: 128

╰─> See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

Cause: probably an unstable connection to GitHub.

Fix: rerun the command a few times until it succeeds.
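
Rerunning by hand works, but the retry can also be scripted. The following is a minimal sketch of a retry loop. `run_with_retries` is a hypothetical helper, and the `runner` parameter exists only so the loop can be tried without touching the network; by default it calls `subprocess.run`.

```python
import subprocess
import time


def run_with_retries(command, attempts=3, delay=5.0, runner=subprocess.run):
    """Run `command` until it exits with code 0; return the attempt number that succeeded."""
    for attempt in range(1, attempts + 1):
        result = runner(command)
        if result.returncode == 0:
            return attempt
        if attempt < attempts:
            time.sleep(delay)  # brief pause before retrying a flaky connection
    raise RuntimeError(f"command failed after {attempts} attempts: {command}")


# Example call (commented out so that importing this snippet installs nothing):
# run_with_retries(
#     ["pip", "install", "git+https://github.com/JonathonLuiten/TrackEval.git"]
# )
```

A fixed number of attempts keeps the loop from spinning forever when the failure is not a network glitch.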

4. ModuleNotFoundError: No module named 'mmcv._ext'

pip install mmcv-full==1.3.17 -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.12.0/index.html

Note: the CUDA version and torch version in the URL must match the ones you have installed.

Reference: "Solution to ModuleNotFoundError: No module named 'mmcv._ext'", from AI 菌's blog on CSDN.