Prerequisites

1. Plan two hosts on which to install Prometheus. Both carry the label `node=prometheus`:

```
# kubectl get nodes --show-labels | grep prometheus
nm-foot-gxc-proms01   Ready   worker   62d   v1.23.6   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=proms01,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,node=prometheus
nm-foot-gxc-proms02   Ready   worker   62d   v1.23.6   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=proms02,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,node=prometheus
```

2. On each planned host, create a directory to hold the monitoring data:

```
# mkdir -p /data/prometheus-data
```

Procedure

1. Edit `prometheus-prometheus.yaml` and add the `retention` parameter:

```
# cat prometheus-prometheus.yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    # ...
  name: k8s
  namespace: monitoring
spec:
  retention: 90d
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: alertmanager-main
      namespace: monitoring
      port: web
  enableFeatures: []
  externalLabels: {}
  image: quay.io/prometheus/prometheus:v2.36.1
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: local-storage
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 150Gi
  nodeSelector:
    #kubernetes.io/os: linux
    node: prometheus
  # ...
```

2. Create a `storageclass.yaml` file:

```
# cat storageclass.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```

Run the following command to create the StorageClass:

```
# kubectl create -f storageclass.yaml
```

3. Delete the prometheus-k8s pods by deleting the Prometheus resource, and confirm that they are gone:

```
# kubectl delete -f prometheus-prometheus.yaml
# kubectl get pod -n monitoring | grep prometheus-k8s
```

4. Create `pv.yaml`:

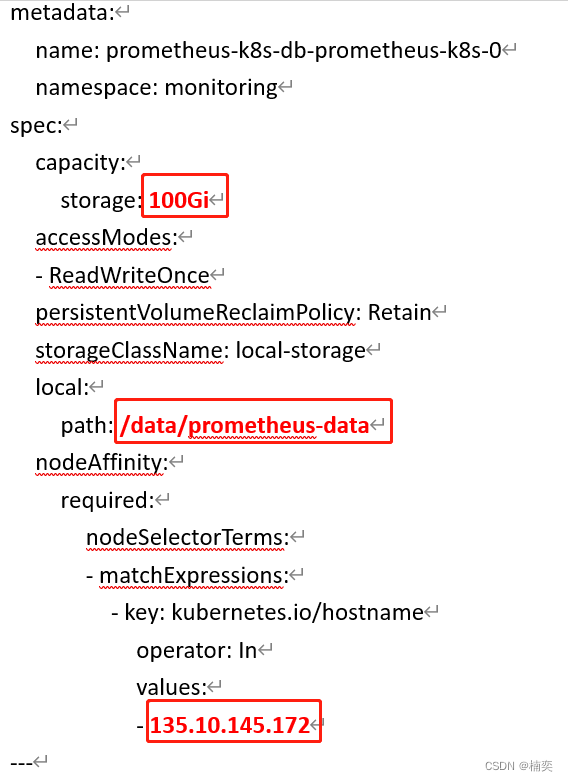

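Only the three places marked in the image need attention. The `values` entry depends on how the nodes were registered with the cluster. If they were registered by IP, the value is the node's IP; if they were registered by hostname, the value is the hostname. Either way, it must match the node's `kubernetes.io/hostname` label, which is `proms01` and `proms02` here.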
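The PV/PVC pairs in the `pv.yaml` below differ only in the ordinal suffix and the hostname value. With more replicas or hosts, it can be less error-prone to generate them. The following is a minimal sketch under these assumptions:

- The name pattern `prometheus-k8s-db-prometheus-k8s-<ordinal>` from `pv.yaml`.
- Ordinal `i` is pinned to the `i`-th host in the list.
- The output is a `v1` `List` in JSON, which `kubectl create -f` can read.

The function name `local_pv_manifests` is hypothetical. Unlike `pv.yaml`, it sets no namespace on the PVs, because PVs are cluster-scoped.

```python
import json


def local_pv_manifests(hosts, path="/data/prometheus-data", size="150Gi",
                       storage_class="local-storage", namespace="monitoring"):
    """Build one PV/PVC pair per host, mirroring the layout of pv.yaml.

    Hypothetical helper: ordinal i (0, 1, ...) is pinned to hosts[i], and each
    entry in `hosts` must equal that node's kubernetes.io/hostname label value.
    """
    items = []
    for i, host in enumerate(hosts):
        # PV and PVC share the same name, following the pattern used in pv.yaml.
        name = f"prometheus-k8s-db-prometheus-k8s-{i}"
        items.append({
            "apiVersion": "v1",
            "kind": "PersistentVolume",
            # PVs are cluster-scoped, so no namespace is set here.
            "metadata": {"name": name},
            "spec": {
                "capacity": {"storage": size},
                "accessModes": ["ReadWriteOnce"],
                "persistentVolumeReclaimPolicy": "Retain",
                "storageClassName": storage_class,
                "local": {"path": path},
                # Pin the volume to exactly one node by its hostname label.
                "nodeAffinity": {"required": {"nodeSelectorTerms": [{
                    "matchExpressions": [{
                        "key": "kubernetes.io/hostname",
                        "operator": "In",
                        "values": [host],
                    }],
                }]}},
            },
        })
        items.append({
            "apiVersion": "v1",
            "kind": "PersistentVolumeClaim",
            "metadata": {
                "name": name,
                "namespace": namespace,
                "labels": {"app": "prometheus", "prometheus": "k8s"},
            },
            "spec": {
                "accessModes": ["ReadWriteOnce"],
                "resources": {"requests": {"storage": size}},
                "storageClassName": storage_class,
            },
        })
    # Wrap everything in a v1 List so a single file holds all objects.
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(local_pv_manifests(["proms01", "proms02"]), indent=2))
```

For example, redirect the output to a file such as `pv.json` (a hypothetical name) and pass that file to `kubectl create -f`.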

```
# cat pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-k8s-db-prometheus-k8s-0
  namespace: monitoring
spec:
  capacity:
    storage: 150Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/prometheus-data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - proms01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-k8s-db-prometheus-k8s-1
  namespace: monitoring
spec:
  capacity:
    storage: 150Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/prometheus-data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - proms02
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: prometheus-k8s-db-prometheus-k8s-0
  namespace: monitoring
  labels:
    app: prometheus
    prometheus: k8s
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 150Gi
  storageClassName: local-storage
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: prometheus-k8s-db-prometheus-k8s-1
  namespace: monitoring
  labels:
    app: prometheus
    prometheus: k8s
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 150Gi
  storageClassName: local-storage
```

After editing all the files, create the PVs:

```
# kubectl create -f pv.yaml
```

5. Deploy Prometheus:

```
# kubectl create -f prometheus-prometheus.yaml
```

6. Check the pod status:

```
# kubectl get pod -n monitoring | grep "prometheus-k8s"
```

If the prometheus-k8s pods are Running and a `prometheus-db` directory has appeared under the planned storage directory, Prometheus data persistence is working.
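The Running check above can also be scripted, for example as a post-deploy gate. This is a minimal sketch under these assumptions:

- The default `kubectl get pod` columns (NAME, READY, STATUS, RESTARTS, AGE).
- Two replicas, one per planned host.

The helper name `prometheus_pods_ready` is hypothetical. It treats a pod as healthy only if STATUS is `Running` and all of its containers are ready.

```python
def prometheus_pods_ready(kubectl_output, prefix="prometheus-k8s-", expected=2):
    """Return True if exactly `expected` pods named with `prefix` are Running
    with every container ready (READY column "n/n").

    Assumes the default `kubectl get pod` column order: NAME READY STATUS ...
    A header line, if present, is skipped because "NAME" does not match prefix.
    """
    healthy = 0
    for line in kubectl_output.splitlines():
        cols = line.split()
        if len(cols) < 3 or not cols[0].startswith(prefix):
            continue
        ready, status = cols[1], cols[2]
        if "/" not in ready:
            continue
        have, want = ready.split("/", 1)
        if status == "Running" and have == want:
            healthy += 1
    return healthy == expected
```

To use it, pass in the text printed by `kubectl get pod -n monitoring`. For example, capture it with `subprocess.run([...], capture_output=True, text=True).stdout`.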

On each host, check that the data directory has been created:

```
# ls /data/prometheus-data/
prometheus-db
```
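As far as I know, Kubernetes does not stop a directory-backed local PV from growing past its declared 150Gi. The capacity is used for PV/PVC matching, and the real limit is the host filesystem. With 90 days of retention, it may therefore be worth tracking how full `prometheus-db` is.

The following is a minimal sketch, roughly equivalent to `du`, that sums file sizes under the directory and compares them with the declared capacity. The helper names and the 150 GiB default are assumptions taken from this setup.

```python
import os

GIB = 1024 ** 3


def dir_usage_bytes(root):
    """Sum the apparent sizes of regular files under root (symlinks skipped)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for fname in filenames:
            fpath = os.path.join(dirpath, fname)
            if os.path.isfile(fpath) and not os.path.islink(fpath):
                total += os.path.getsize(fpath)
    return total


def usage_report(root="/data/prometheus-data/prometheus-db", capacity_gib=150):
    """Return (bytes used, percent of the declared PV capacity) for root."""
    used = dir_usage_bytes(root)
    percent = 100.0 * used / (capacity_gib * GIB)
    return used, percent


if __name__ == "__main__":
    used, percent = usage_report()
    print(f"{used / GIB:.2f} GiB used ({percent:.1f}% of declared capacity)")
```

Run it on each Prometheus host, because each replica writes to its own local directory.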