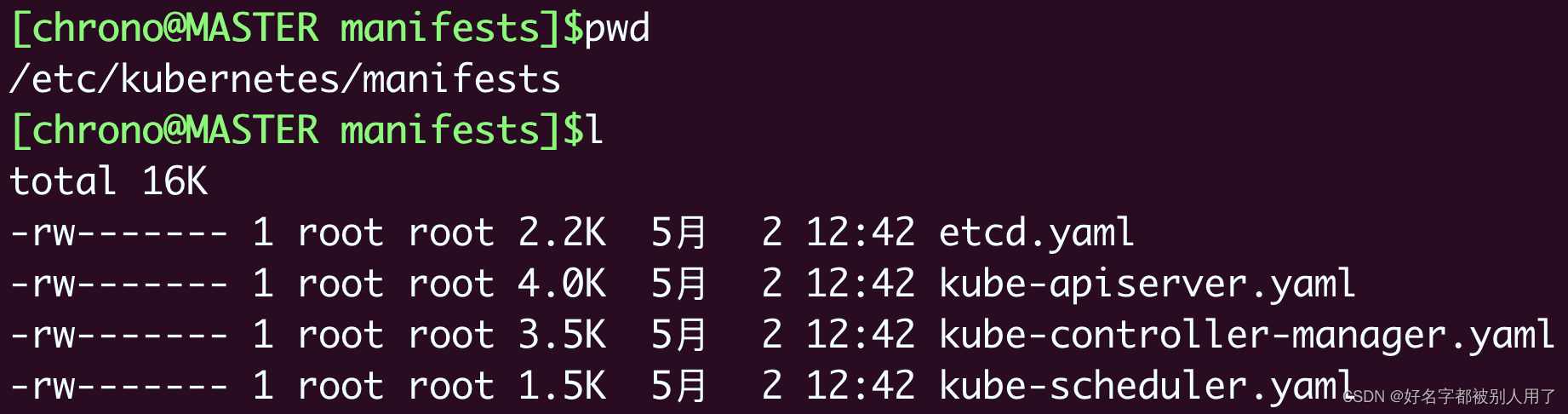

- 安装octomap_ros和rviz插件

sudo apt-get install ros-indigo-octomap* - 源码安装:turtlebot_exploration_3d(本机为Ubuntu16对应的ros版本为kinetic,但是无对应的版本,用的是ubuntu14的indigo,版本向前兼容,故可以运行)

-

cd turtlebot_ws/src git clone https://github.com/RobustFieldAutonomyLab/turtlebot_exploration_3d.git catkin_make - deb包安装:

-

sudo apt-get update sudo apt-get install ros-indigo-turtlebot-exploration-3d运行:

- 主机端,新终端,执行:

-

$ roslaunch turtlebot_exploration_3d minimal_explo.launch $ roslaunch turtlebot_exploration_3d turtlebot_gmapping.launch $ rosrun turtlebot_exploration_3d turtlebot_exploration_3d - 从机端,新终端,执行:

-

roslaunch turtlebot_exploration_3d exploration_rviz.launch

对应的脚本信息如下:

minimal_explo.launch:

<launch>

<!-- Turtlebot -->

<arg name="base" default="$(env TURTLEBOT_BASE)"/> <!-- create, roomba -->

<arg name="battery" default="$(env TURTLEBOT_BATTERY)"/> <!-- /proc/acpi/battery/BAT0 in 2.6 or earlier kernels-->

<arg name="stacks" default="$(env TURTLEBOT_STACKS)"/> <!-- circles, hexagons -->

<arg name="3d_sensor" default="$(env TURTLEBOT_3D_SENSOR)"/> <!-- kinect, asus_xtion_pro -->

<arg name="simulation" default="$(env TURTLEBOT_SIMULATION)"/>

<arg name="serialport" default="$(env TURTLEBOT_SERIAL_PORT)"/> <!-- /dev/ttyUSB0, /dev/ttyS0 -->

<arg name="robot_name" default="$(env TURTLEBOT_NAME)"/>

<arg name="robot_type" default="$(env TURTLEBOT_TYPE)"/>

<param name="/use_sim_time" value="$(arg simulation)"/>

<include file="$(find turtlebot_bringup)/launch/includes/robot.launch.xml">

<arg name="base" value="$(arg base)" />

<arg name="stacks" value="$(arg stacks)" />

<arg name="3d_sensor" value="$(arg 3d_sensor)" />

</include>

<include file="$(find turtlebot_bringup)/launch/includes/mobile_base.launch.xml">

<arg name="base" value="$(arg base)" />

<arg name="serialport" value="$(arg serialport)" />

</include>

<include file="$(find turtlebot_bringup)/launch/includes/netbook.launch.xml">

<arg name="battery" value="$(arg battery)" />

</include>

<!-- Rapp Manager -->

<arg name="auto_rapp_installation" default="false"/> <!-- http://wiki.ros.org/rocon_app_manager/Tutorials/indigo/Automatic Rapp Installation -->

<arg name="auto_start_rapp" default=""/> <!-- autostart a rapp, e.g. rocon_apps/talker -->

<arg name="rapp_package_whitelist" default="$(env TURTLEBOT_RAPP_PACKAGE_WHITELIST)"/> <!-- comma separated list of package names -->

<arg name="rapp_package_blacklist" default="$(env TURTLEBOT_RAPP_PACKAGE_BLACKLIST)"/>

<arg name="robot_icon" default="turtlebot_bringup/turtlebot2.png"/>

<arg name="screen" default="true"/> <!-- verbose output from running apps -->

<!-- ***************************** Rocon Master Info ************************** -->

<arg name="robot_description" default="Kick-ass ROS turtle"/>

<!-- Capabilities -->

<arg name="capabilities" default="true"/> <!-- enable/disable a capability server -->

<arg name="capabilities_server_name" default="capability_server"/>

<arg name="capabilities_nodelet_manager_name" default="capability_server_nodelet_manager" />

<arg name="capabilities_parameters" default="$(find turtlebot_bringup)/param/capabilities/defaults_tb2.yaml" /> <!-- defaults_tb.yaml, defaults_tb2.yaml -->

<arg name="capabilities_package_whitelist" default="[kobuki_capabilities, std_capabilities, turtlebot_capabilities]" /> <!-- get capabilities from these packages only -->

<arg name="capabilities_blacklist" default="['std_capabilities/Navigation2D', 'std_capabilities/MultiEchoLaserSensor']" /> <!-- blacklist specific capabilities -->

<!-- Interactions -->

<arg name="interactions" default="true"/>

<arg name="interactions_list" default="$(optenv INTERACTIONS_LIST [turtlebot_bringup/admin.interactions, turtlebot_bringup/documentation.interactions, turtlebot_bringup/pairing.interactions])"/>

<!-- Zeroconf -->

<arg name="zeroconf" default="true"/>

<arg name="zeroconf_name" default="$(arg robot_name)"/>

<arg name="zeroconf_port" default="11311"/>

<!-- Rapp Manager -->

<include file="$(find rocon_app_manager)/launch/standalone.launch">

<!-- Rapp Manager -->

<arg name="robot_name" value="$(arg robot_name)" />

<arg name="robot_type" value="$(arg robot_type)" />

<arg name="robot_icon" value="$(arg robot_icon)" />

<arg name="rapp_package_whitelist" value="$(arg rapp_package_whitelist)" />

<arg name="rapp_package_blacklist" value="$(arg rapp_package_blacklist)" />

<arg name="auto_start_rapp" value="$(arg auto_start_rapp)" />

<arg name="screen" value="$(arg screen)" />

<arg name="auto_rapp_installation" value="$(arg auto_rapp_installation)" />

<!-- Rocon Master Info -->

<arg name="robot_description" value="$(arg robot_description)" />

<!-- Capabilities -->

<arg name="capabilities" value="$(arg capabilities)" />

<arg name="capabilities_blacklist" value="$(arg capabilities_blacklist)" />

<arg name="capabilities_nodelet_manager_name" value="$(arg capabilities_nodelet_manager_name)" />

<arg name="capabilities_server_name" value="$(arg capabilities_server_name)" />

<arg name="capabilities_package_whitelist" value="$(arg capabilities_package_whitelist)" />

<arg name="capabilities_parameters" value="$(arg capabilities_parameters)" />

<!-- Interactions -->

<arg name="interactions" value="$(arg interactions)"/>

<arg name="interactions_list" value="$(arg interactions_list)"/>

<!-- Zeroconf -->

<arg name="zeroconf" value="$(arg zeroconf)"/>

<arg name="zeroconf_name" value="$(arg zeroconf_name)"/>

<arg name="zeroconf_port" value="$(arg zeroconf_port)"/>

</include>

</launch>

turtlebot_gmapping.launch:

<launch>

<!-- <node pkg="hokuyo_node" type="hokuyo_node" name="hokuyo" />

-->

<include file="$(find turtlebot_bringup)/launch/3dsensor.launch">

<!-- <arg name="rgb_processing" value="true" />

<arg name="depth_registration" value="true" />

<arg name="depth_processing" value="true" />

<arg name="scan_processing" value="true" /> -->

<!-- We must specify an absolute topic name because if not it will be prefixed by "$(arg camera)".

Probably is a bug in the nodelet manager: https://github.com/ros/nodelet_core/issues/7 -->

<arg name="scan_topic" value="/scan_kinect" />

</include>

<node pkg="tf" type="static_transform_publisher" name="base_footprint_to_laser" args="0 0 0.35 0 0 0 base_footprint laser 50" />

<arg name="scan_topic" default="scan_kinect" />

<arg name="base_frame" default="base_footprint"/>

<arg name="odom_frame" default="odom"/>

<node pkg="gmapping" type="slam_gmapping" name="slam_gmapping" output="screen">

<param name="scan_topic" value="$(arg scan_topic)"/>

<param name="base_frame" value="$(arg base_frame)"/>

<param name="odom_frame" value="$(arg odom_frame)"/>

<param name="map_update_interval" value="5.0"/>

<param name="maxUrange" value="7.9"/>

<param name="maxRange" value="8.0"/>

<param name="sigma" value="0.05"/>

<param name="kernelSize" value="1"/>

<param name="lstep" value="0.05"/>

<param name="astep" value="0.05"/>

<param name="iterations" value="5"/>

<param name="lsigma" value="0.075"/>

<param name="ogain" value="3.0"/>

<param name="lskip" value="0"/>

<param name="minimumScore" value="200"/>

<param name="srr" value="0.01"/>

<param name="srt" value="0.02"/>

<param name="str" value="0.01"/>

<param name="stt" value="0.02"/>

<param name="linearUpdate" value="0.5"/>

<param name="angularUpdate" value="0.436"/>

<param name="temporalUpdate" value="-1.0"/>

<param name="resampleThreshold" value="0.5"/>

<param name="particles" value="80"/>

<!--

<param name="xmin" value="-50.0"/>

<param name="ymin" value="-50.0"/>

<param name="xmax" value="50.0"/>

<param name="ymax" value="50.0"/>

make the starting size small for the benefit of the Android client's memory...

-->

<param name="xmin" value="-1.0"/>

<param name="ymin" value="-1.0"/>

<param name="xmax" value="1.0"/>

<param name="ymax" value="1.0"/>

<param name="delta" value="0.01"/>

<param name="llsamplerange" value="0.01"/>

<param name="llsamplestep" value="0.01"/>

<param name="lasamplerange" value="0.005"/>

<param name="lasamplestep" value="0.005"/>

<remap from="scan" to="$(arg scan_topic)"/>

</node>

<include file="$(find turtlebot_navigation)/launch/includes/move_base.launch.xml"/>

<node pkg="turtlebot_exploration_3d" type="scan_to_pcl" name="scan_to_pcl" />

</launch>

turtlebot_exploration_3d.cpp:

// Related headers:

#include "exploration.h"

#include "navigation_utils.h"

#include "gpregressor.h"

#include "covMaterniso3.h"

//C library headers:

#include <iostream>

#include <fstream>

// #include <chrono>

// #include <iterator>

// #include <ctime>

//C++ library headers: NONE

#include <visualization_msgs/Marker.h>

#include <visualization_msgs/MarkerArray.h>

//other library headers: NONE

using namespace std;

int main(int argc, char **argv) {

ros::init(argc, argv, "turtlebot_exploration_3d");

ros::NodeHandle nh;

// Initialize time

time_t rawtime;

struct tm * timeinfo;

char buffer[80];

time (&rawtime);

timeinfo = localtime(&rawtime);

// strftime(buffer,80,"Trajectory_%R_%S_%m%d_DA.txt",timeinfo);

// std::string logfilename(buffer);

// std::cout << logfilename << endl;

strftime(buffer,80,"Octomap3D_%m%d_%R_%S.ot",timeinfo);

octomap_name_3d = buffer;

ros::Subscriber kinect_sub = nh.subscribe<sensor_msgs::PointCloud2>("/camera/depth_registered/points", 1, kinectCallbacks);// need to change##########

ros::Publisher GoalMarker_pub = nh.advertise<visualization_msgs::Marker>( "Goal_Marker", 1 );

ros::Publisher Candidates_pub = nh.advertise<visualization_msgs::MarkerArray>("Candidate_MIs", 1);

ros::Publisher Frontier_points_pub = nh.advertise<visualization_msgs::Marker>("Frontier_points", 1);

ros::Publisher pub_twist = nh.advertise<geometry_msgs::Twist>("/cmd_vel_mux/input/teleop", 1);

ros::Publisher Octomap_pub = nh.advertise<octomap_msgs::Octomap>("octomap_3d",1);

tf_listener = new tf::TransformListener();

tf::StampedTransform transform;

tf::Quaternion Goal_heading; // robot's heading direction

visualization_msgs::MarkerArray CandidatesMarker_array;

visualization_msgs::Marker Frontier_points_cubelist;

geometry_msgs::Twist twist_cmd;

ros::Time now_marker = ros::Time::now();

// Initialize parameters

int max_idx = 0;

point3d Sensor_PrincipalAxis(1, 0, 0);

octomap::OcTreeNode *n;

octomap::OcTree new_tree(octo_reso);

octomap::OcTree new_tree_2d(octo_reso);

cur_tree = &new_tree;

cur_tree_2d = &new_tree_2d;

point3d next_vp;

bool got_tf = false;

bool arrived;

// Update the initial location of the robot

for(int i =0; i < 6; i++){

// Update the pose of the robot

got_tf = false;

while(!got_tf){

try{

tf_listener->lookupTransform("/map", "/camera_rgb_frame", ros::Time(0), transform);// need to change tf of kinect###############

kinect_orig = point3d(transform.getOrigin().x(), transform.getOrigin().y(), transform.getOrigin().z());

got_tf = true;

}

catch (tf::TransformException ex) {

ROS_WARN("Wait for tf: Kinect frame");

}

ros::Duration(0.05).sleep();

}

// Take a Scan

ros::spinOnce();

// Rotate another 60 degrees

twist_cmd.linear.x = twist_cmd.linear.y = twist_cmd.angular.z = 0;

ros::Time start_turn = ros::Time::now();

ROS_WARN("Rotate 60 degrees");

while (ros::Time::now() - start_turn < ros::Duration(2.6)){ // turning duration - second

twist_cmd.angular.z = 0.6; // turning speed

// turning angle = turning speed * turning duration / 3.14 * 180

pub_twist.publish(twist_cmd);

ros::Duration(0.05).sleep();

}

// stop

twist_cmd.angular.z = 0;

pub_twist.publish(twist_cmd);

}

// steps robot taken, counter

int robot_step_counter = 0;

while (ros::ok())

{

vector<vector<point3d>> frontier_groups=extractFrontierPoints(cur_tree);

//frontier_groups.clear();//in the next line

unsigned long int o = 0;

for(vector<vector<point3d>>::size_type e = 0; e < frontier_groups.size(); e++) {

o = o+frontier_groups[e].size();

}

Frontier_points_cubelist.points.resize(o);

ROS_INFO("frontier points %ld", o);

now_marker = ros::Time::now();

Frontier_points_cubelist.header.frame_id = "map";

Frontier_points_cubelist.header.stamp = now_marker;

Frontier_points_cubelist.ns = "frontier_points_array";

Frontier_points_cubelist.id = 0;

Frontier_points_cubelist.type = visualization_msgs::Marker::CUBE_LIST;

Frontier_points_cubelist.action = visualization_msgs::Marker::ADD;

Frontier_points_cubelist.scale.x = octo_reso;

Frontier_points_cubelist.scale.y = octo_reso;

Frontier_points_cubelist.scale.z = octo_reso;

Frontier_points_cubelist.color.a = 1.0;

Frontier_points_cubelist.color.r = (double)255/255;

Frontier_points_cubelist.color.g = 0;

Frontier_points_cubelist.color.b = (double)0/255;

Frontier_points_cubelist.lifetime = ros::Duration();

unsigned long int t = 0;

int l = 0;

geometry_msgs::Point q;

for(vector<vector<point3d>>::size_type n = 0; n < frontier_groups.size(); n++) {

for(vector<point3d>::size_type m = 0; m < frontier_groups[n].size(); m++){

q.x = frontier_groups[n][m].x();

q.y = frontier_groups[n][m].y();

q.z = frontier_groups[n][m].z()+octo_reso;

Frontier_points_cubelist.points.push_back(q);

}

t++;

}

ROS_INFO("Publishing %ld frontier_groups", t);

Frontier_points_pub.publish(Frontier_points_cubelist); //publish frontier_points

Frontier_points_cubelist.points.clear();

// Generate Candidates

vector<pair<point3d, point3d>> candidates = extractCandidateViewPoints(frontier_groups, kinect_orig, num_of_samples);

std::random_shuffle(candidates.begin(),candidates.end()); // shuffle to select a subset

vector<pair<point3d, point3d>> gp_test_poses = candidates;

ROS_INFO("Candidate View Points: %luGenereated, %d evaluating...", candidates.size(), num_of_samples_eva);

int temp_size = candidates.size()-3;

if (temp_size < 1) {

ROS_ERROR("Very few candidates generated, maybe finishing with exploration...");

nh.shutdown();

return 0;

}

// Generate Testing poses

candidates.resize(min(num_of_samples_eva,temp_size));

frontier_groups.clear();

// Evaluate MI for every candidate view points

vector<double> MIs(candidates.size());

double before = countFreeVolume(cur_tree);

// int max_idx = 0;

double begin_mi_eva_secs, end_mi_eva_secs;

begin_mi_eva_secs = ros::Time::now().toSec();

#pragma omp parallel for

for(int i = 0; i < candidates.size(); i++)

{

auto c = candidates[i];

// Evaluate Mutual Information

Sensor_PrincipalAxis = point3d(1.0, 0.0, 0.0);

Sensor_PrincipalAxis.rotate_IP(c.second.roll(), c.second.pitch(), c.second.yaw() );

octomap::Pointcloud hits = castSensorRays(cur_tree, c.first, Sensor_PrincipalAxis);

// Considering pure MI for decision making

MIs[i] = calc_MI(cur_tree, c.first, hits, before);

// Normalize the MI with distance

// MIs[i] = calc_MI(cur_tree, c.first, hits, before) /

// sqrt(pow(c.first.x()-kinect_orig.x(),2) + pow(c.first.y()-kinect_orig.y(),2));

// Pick the Candidate view point with max MI

// if (MIs[i] > MIs[max_idx])

// {

// max_idx = i;

// }

}

// Bayesian Optimization for actively selecting candidate

double train_time, test_time;

GPRegressor g(100, 3, 0.01);

for (int bay_itr = 0; bay_itr < num_of_bay; bay_itr++) {

//Initialize gp regression

MatrixXf gp_train_x(candidates.size(), 2), gp_train_label(candidates.size(), 1), gp_test_x(gp_test_poses.size(), 2);

for (int i=0; i< candidates.size(); i++){

gp_train_x(i,0) = candidates[i].first.x();

gp_train_x(i,1) = candidates[i].first.y();

gp_train_label(i) = MIs[i];

}

for (int i=0; i< gp_test_poses.size(); i++){

gp_test_x(i,0) = gp_test_poses[i].first.x();

gp_test_x(i,1) = gp_test_poses[i].first.y();

}

// Perform GP regression

MatrixXf gp_mean_MI, gp_var_MI;

train_time = ros::Time::now().toSec();

g.train(gp_train_x, gp_train_label);

train_time = ros::Time::now().toSec() - train_time;

test_time = ros::Time::now().toSec();

g.test(gp_test_x, gp_mean_MI, gp_var_MI);

test_time = ros::Time::now().toSec() - test_time;

ROS_INFO("GP: Train(%zd) took %f secs , Test(%zd) took %f secs", candidates.size(), train_time, gp_test_poses.size(), test_time);

// Get Acquisition function

double beta = 2.4;

vector<double> bay_acq_fun(gp_test_poses.size());

for (int i = 0; i < gp_test_poses.size(); i++) {

bay_acq_fun[i] = gp_mean_MI(i) + beta*gp_var_MI(i);

}

vector<int> idx_acq = sort_MIs(bay_acq_fun);

// evaluate MI, add to the candidate

auto c = gp_test_poses[idx_acq[0]];

Sensor_PrincipalAxis = point3d(1.0, 0.0, 0.0);

Sensor_PrincipalAxis.rotate_IP(c.second.roll(), c.second.pitch(), c.second.yaw() );

octomap::Pointcloud hits = castSensorRays(cur_tree, c.first, Sensor_PrincipalAxis);

candidates.push_back(c);

MIs.push_back(calc_MI(cur_tree, c.first, hits, before));

}

end_mi_eva_secs = ros::Time::now().toSec();

ROS_INFO("Mutual Infomation Eva took: %3.3f Secs.", end_mi_eva_secs - begin_mi_eva_secs);

// Normalize the MI with distance

for(int i = 0; i < candidates.size(); i++) {

auto c = candidates[i];

MIs[i] = MIs[i] /

sqrt(pow(c.first.x()-kinect_orig.x(),2) + pow(c.first.y()-kinect_orig.y(),2));

}

// sort vector MIs, with idx_MI, descending

vector<int> idx_MI = sort_MIs(MIs);

// Publish the candidates as marker array in rviz

tf::Quaternion MI_heading;

MI_heading.setRPY(0.0, -PI/2, 0.0);

MI_heading.normalize();

CandidatesMarker_array.markers.resize(candidates.size());

for (int i = 0; i < candidates.size(); i++)

{

CandidatesMarker_array.markers[i].header.frame_id = "map";

CandidatesMarker_array.markers[i].header.stamp = ros::Time::now();

CandidatesMarker_array.markers[i].ns = "candidates";

CandidatesMarker_array.markers[i].id = i;

CandidatesMarker_array.markers[i].type = visualization_msgs::Marker::ARROW;

CandidatesMarker_array.markers[i].action = visualization_msgs::Marker::ADD;

CandidatesMarker_array.markers[i].pose.position.x = candidates[i].first.x();

CandidatesMarker_array.markers[i].pose.position.y = candidates[i].first.y();

CandidatesMarker_array.markers[i].pose.position.z = candidates[i].first.z();

CandidatesMarker_array.markers[i].pose.orientation.x = MI_heading.x();

CandidatesMarker_array.markers[i].pose.orientation.y = MI_heading.y();

CandidatesMarker_array.markers[i].pose.orientation.z = MI_heading.z();

CandidatesMarker_array.markers[i].pose.orientation.w = MI_heading.w();

CandidatesMarker_array.markers[i].scale.x = (double)2.0*MIs[i]/MIs[idx_MI[0]];

CandidatesMarker_array.markers[i].scale.y = 0.2;

CandidatesMarker_array.markers[i].scale.z = 0.2;

CandidatesMarker_array.markers[i].color.a = (double)MIs[i]/MIs[idx_MI[0]];

CandidatesMarker_array.markers[i].color.r = 0.0;

CandidatesMarker_array.markers[i].color.g = 1.0;

CandidatesMarker_array.markers[i].color.b = 0.0;

}

Candidates_pub.publish(CandidatesMarker_array);

CandidatesMarker_array.markers.clear();

candidates.clear();

// loop in the idx_MI, if the candidate with max MI cannot be achieved,

// switch to a sub-optimal MI.

arrived = false;

int idx_ptr = 0;

while (!arrived) {

// Setup the Goal

next_vp = point3d(candidates[idx_MI[idx_ptr]].first.x(),candidates[idx_MI[idx_ptr]].first.y(),candidates[idx_MI[idx_ptr]].first.z());

Goal_heading.setRPY(0.0, 0.0, candidates[idx_MI[idx_ptr]].second.yaw());

Goal_heading.normalize();

ROS_INFO("Max MI : %f , @ location: %3.2f %3.2f %3.2f", MIs[idx_MI[idx_ptr]], next_vp.x(), next_vp.y(), next_vp.z() );

// Publish the goal as a Marker in rviz

visualization_msgs::Marker marker;

marker.header.frame_id = "map";

marker.header.stamp = ros::Time();

marker.ns = "goal_marker";

marker.id = 0;

marker.type = visualization_msgs::Marker::ARROW;

marker.action = visualization_msgs::Marker::ADD;

marker.pose.position.x = next_vp.x();

marker.pose.position.y = next_vp.y();

marker.pose.position.z = 1.0;

marker.pose.orientation.x = Goal_heading.x();

marker.pose.orientation.y = Goal_heading.y();

marker.pose.orientation.z = Goal_heading.z();

marker.pose.orientation.w = Goal_heading.w();

marker.scale.x = 0.5;

marker.scale.y = 0.1;

marker.scale.z = 0.1;

marker.color.a = 1.0; // Don't forget to set the alpha!

marker.color.r = 1.0;

marker.color.g = 1.0;

marker.color.b = 0.0;

GoalMarker_pub.publish( marker );

// Send the Robot

arrived = goToDest(next_vp, Goal_heading);

if(arrived)

{

// Update the initial location of the robot

got_tf = false;

while(!got_tf){

try{

tf_listener->lookupTransform("/map", "/camera_rgb_frame", ros::Time(0), transform);// need to change tf of kinect###############

kinect_orig = point3d(transform.getOrigin().x(), transform.getOrigin().y(), transform.getOrigin().z());

got_tf = true;

}

catch (tf::TransformException ex) {

ROS_WARN("Wait for tf: Kinect frame");

}

ros::Duration(0.05).sleep();

}

// Update Octomap

ros::spinOnce();

ROS_INFO("Succeed, new Map Free Volume: %f", countFreeVolume(cur_tree));

robot_step_counter++;

// prepare octomap msg

octomap_msgs::binaryMapToMsg(*cur_tree, msg_octomap);

msg_octomap.binary = 1;

msg_octomap.id = 1;

msg_octomap.resolution = octo_reso;

msg_octomap.header.frame_id = "/map";

msg_octomap.header.stamp = ros::Time::now();

Octomap_pub.publish(msg_octomap);

ROS_INFO("Octomap updated in RVIZ");

// // Send out results to file.

// explo_log_file.open(logfilename, std::ofstream::out | std::ofstream::app);

// explo_log_file << "DA Step ," << robot_step_counter << ", Current Entropy ," << countFreeVolume(cur_tree) << ", time, " << ros::Time::now().toSec() << endl;

// explo_log_file.close();

}

else

{

ROS_WARN("Failed to drive to the %d th goal, switch to the sub-optimal..", idx_ptr);

idx_ptr++;

if(idx_ptr > MIs.size()) {

ROS_ERROR("None of the goal is valid for path planning, shuting down the node");

nh.shutdown();

}

}

}

// r.sleep();

}

nh.shutdown();

return 0;

}

exploration_rviz.launch:

<launch>

<node name="rviz" pkg="rviz" type="rviz" args="-d $(find turtlebot_exploration_3d)/launch/turtlebot_explo.rviz" />

</launch>

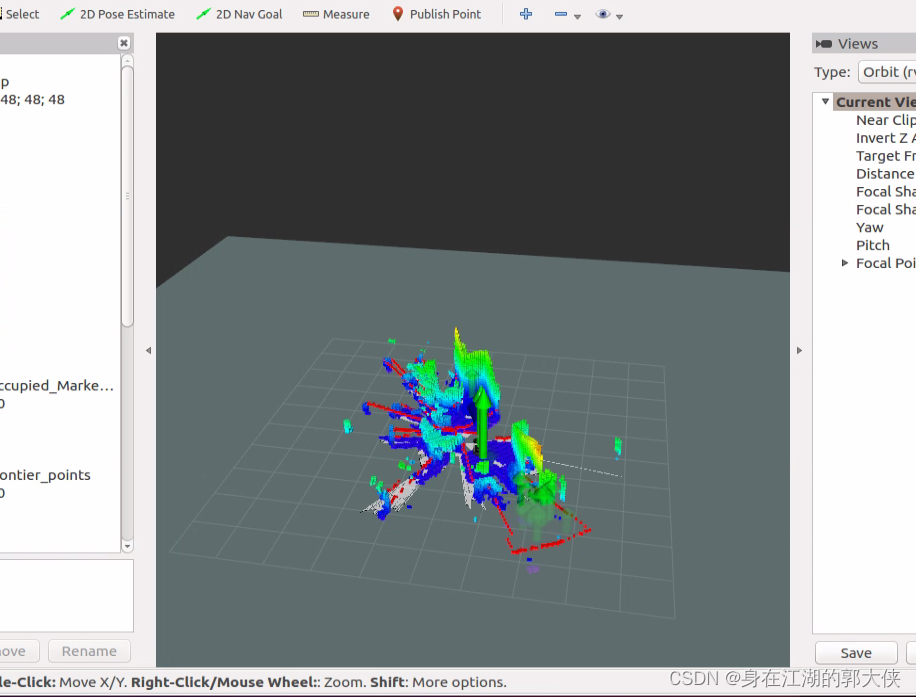

- 自动建图进行会比较慢,会显示octomap图,同时也实现了gmapping的建图

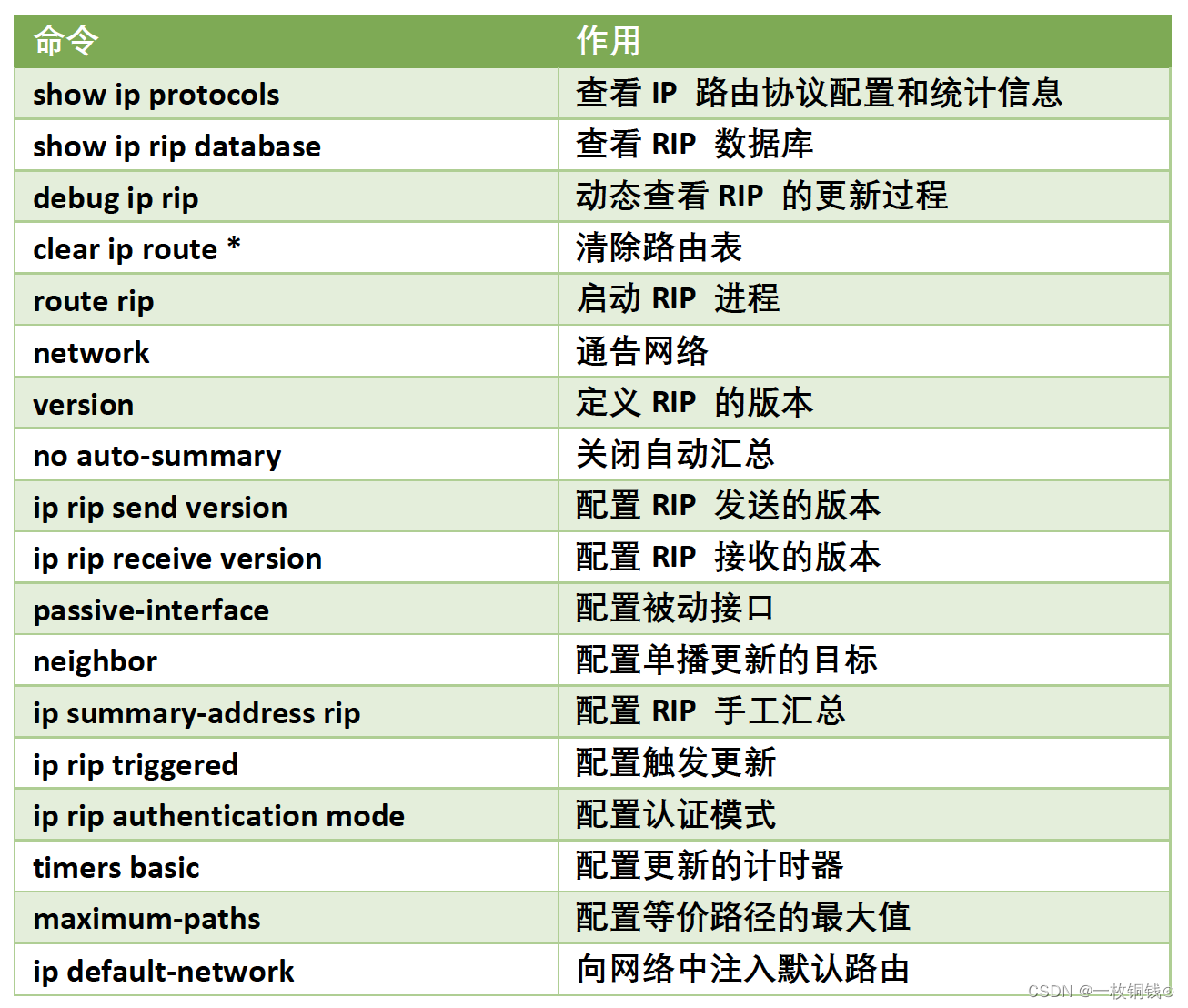

image: /home/ubuntu/map/zhizaokongjian.pgm

resolution: 0.050000

origin: [-12.200000, -12.200000, 0.000000]

negate: 0

occupied_thresh: 0.65

free_thresh: 0.196

image: 含占用信息的image文件的路径;可以是绝对路径,也可以是到YAML文件的相对路径。

resolution:地图的分辨率,meters/pixel

origin: 机器人相对地图原点的位姿,(x,y,yaw),yaw逆时针旋转(yaw=0表示没有旋转)。

occupied_thresh:单元占用的概率大于这个阈值则认为完全占用。

free_thresh: 单元占用的概率小于这个阈值则认为完全自由。

negate: 不论白色/黑色,自由/占用,semantics(语义/符号)应该被反转(阈值的解释不受影响)。-

保存地图:

- 新建目录:

-

mkdir ~/map - 保存地图:

-

rosrun map_server map_saver -f ~/map1 -

得到两个文件如下:

- map1.pgm 地图

- map1.yaml 配置

-

map1.yaml样例:

- 必填的字节:

![[附源码]计算机毕业设计springboot家庭医生签约服务管理系统](https://img-blog.csdnimg.cn/7d39b4d997f14cafb34b19e7ab549a7b.png)

![[附源码]SSM计算机毕业设计亿上汽车在线销售管理系统JAVA](https://img-blog.csdnimg.cn/0b8a5c37dfb14e57af1341bf66b3d91d.png)