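This post explains the liveness detection code of the face recognition system.

Index of all posts in this series: Face Recognition System - Blog Index

Project GitHub address:

Note: before reading this post, please read the following posts first

Tool installation and environment setup: Face Recognition System - Introduction

UI design: Face Recognition System - UI Design

UI event handling: Face Recognition System - UI Event Handling

Camera display: Face Recognition System - Camera Display

After this post, you can continue with:

User-side logic:

- Face recognition: Python | Face Recognition System: Face Recognition

- Background blur: Python | Face Recognition System: Background Blur

- Pose detection: Python | Face Recognition System: Pose Detection

- Face comparison: Python | Face Recognition System: Face Comparison

- User operations: Python | Face Recognition System: User Operations

Administrator-side logic:

- Administrator operations:

- User operations: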

一、基本思路

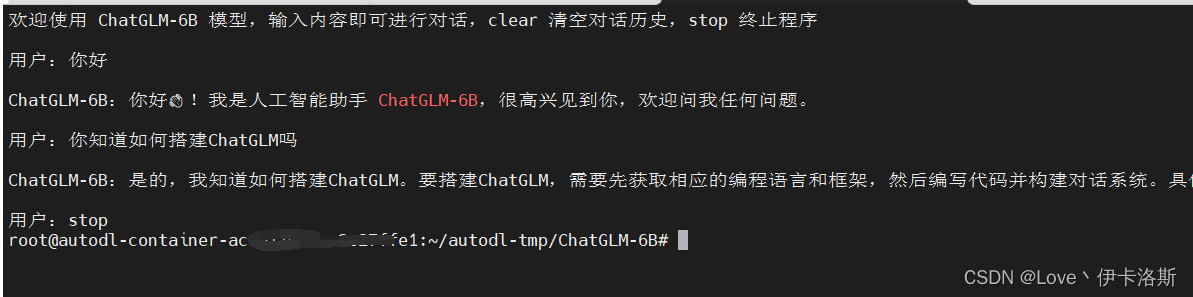

代码使用 静默活体检测+交互活体检测 结合判断。

静默活体检测使用百度API,通过接口返回的置信度,判断是否通过。

交互活体检测通过要求用户完成一定动作,判断是否通过。
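To make the confidence-based decision concrete, here is a minimal sketch of comparing a liveness score against a threshold. The response shape mirrors the fields the Baidu API code later in this post reads (`error_msg`, `result.face_liveness`, `result.thresholds`). The function name `passes_silent_check`, the choice of `frr_1e-3` as the default level, and the sample values are assumptions made for illustration.

```python
def passes_silent_check(result: dict, level: str = "frr_1e-3") -> bool:
    """Return True when the liveness score reaches the chosen threshold.

    `result` is assumed to be the parsed JSON of the liveness response.
    """
    if result.get("error_msg") != "SUCCESS":
        return False  # a failed request never counts as a pass
    body = result["result"]
    return body["face_liveness"] >= body["thresholds"][level]


# Hypothetical response values, for illustration only.
sample = {
    "error_msg": "SUCCESS",
    "result": {
        "face_liveness": 0.95,
        "thresholds": {"frr_1e-4": 0.05, "frr_1e-3": 0.3, "frr_1e-2": 0.9},
    },
}
print(passes_silent_check(sample))
```

Picking a stricter level such as `frr_1e-2` rejects more spoofed images at the cost of rejecting more genuine users.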

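II. Initialization

Initialize the isFaceDetection_flag flag, which tracks whether liveness detection is currently running.

Bind the button to the liveness detection judge method detect_face_judge.

The remaining attributes are covered later.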

def __init__(self, parent=None):
    super(UserMainWindow, self).__init__(parent)
    self.setupUi(self)

    self.isFaceDetection_flag = False  # whether liveness detection is running
    self.biopsy_testing_button.clicked.connect(self.detect_face_judge)  # liveness detection button

    self.detector = None  # face detector
    self.predictor = None  # facial landmark predictor

    # Action thresholds (EAR for blinking, MAR for mouth opening)
    self.EAR_THRESH = None
    self.MOUTH_THRESH = None

    # Completed-action counters
    self.eye_flash_counter = None
    self.mouth_open_counter = None
    self.turn_left_counter = None
    self.turn_right_counter = None

    # Consecutive-frame thresholds
    self.EAR_CONSTANT_FRAMES = None
    self.MOUTH_CONSTANT_FRAMES = None
    self.LEFT_CONSTANT_FRAMES = None
    self.RIGHT_CONSTANT_FRAMES = None

    # Consecutive-frame counters
    self.eye_flash_continuous_frame = 0
    self.mouth_open_continuous_frame = 0
    self.turn_left_continuous_frame = 0
    self.turn_right_continuous_frame = 0

    # Text color
    self.text_color = (255, 0, 0)

    # Baidu API utility module
    self.api = BaiduApiUtil

III. The Judge

# Liveness detection judge
def detect_face_judge(self):
    if not self.cap.isOpened():
        QMessageBox.information(self, "Notice", self.tr("Please turn on the camera first"))
    else:
        if not self.isFaceDetection_flag:
            # Start detection, then restore the button once it finishes
            self.isFaceDetection_flag = True
            self.biopsy_testing_button.setText("Stop liveness detection")
            self.detect_face()
            self.biopsy_testing_button.setText("Liveness detection")
            self.isFaceDetection_flag = False
        elif self.isFaceDetection_flag:
            # Detection is running: stop it and go back to the plain camera view
            self.isFaceDetection_flag = False
            self.remind_label.setText("")
            self.biopsy_testing_button.setText("Liveness detection")
            self.show_camera()

IV. The Detector

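First check whether the machine is online (the connectivity check lives in the BaiduApiUtil utility class, whose code is shown below). If it is online, run silent liveness detection followed by interactive liveness detection (networked detection); otherwise run interactive liveness detection alone (local detection).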

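# Baidu API utility module
self.api = BaiduApiUtil

... ...

# Overall liveness detection
def detect_face(self):
    # When online, the silent (networked) check has to pass first
    if self.api.network_connect_judge():
        if not self.detect_face_network():
            return False
    # The interactive (local) check always runs
    if not self.detect_face_local():
        return False
    return True

# Networked liveness detection
def detect_face_network(self):
    ... ...

# Local liveness detection
def detect_face_local(self):
    ... ...

1. Silent Liveness Detection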

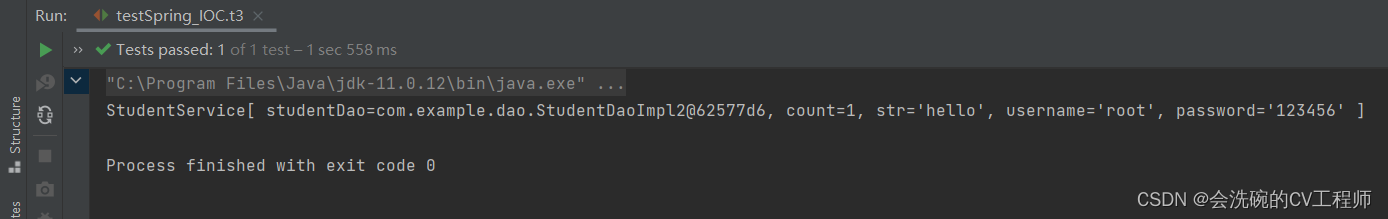

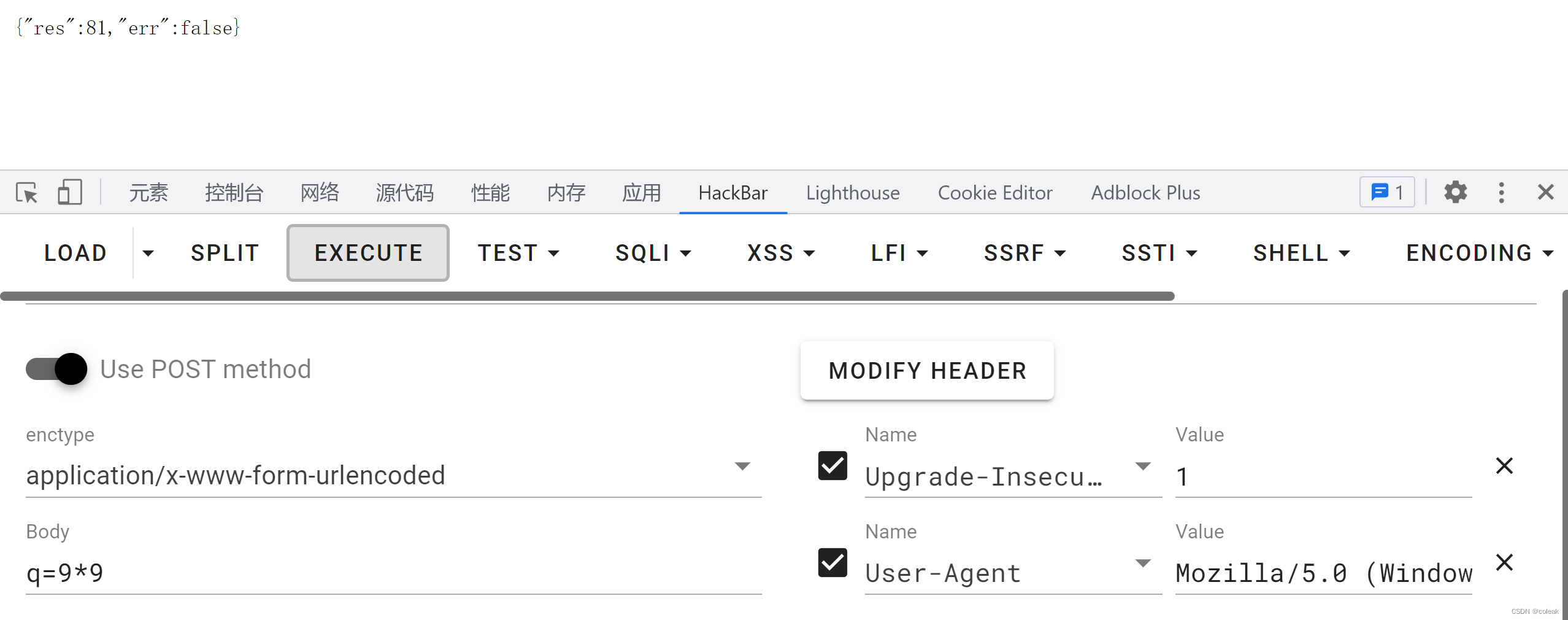

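Silent liveness detection uses a Baidu AI Cloud endpoint. We create a utility class, BaiduApiUtil, which holds the code for checking the network connection, sending the request, and parsing the result, and then call it from the user-interface logic.

For endpoint details, see Baidu AI Cloud - API Details

For sample code, see Baidu AI Cloud - Code Samples

Note: before use, register a Baidu AI Cloud account and apply for the endpoint (it is free) to obtain your own API_KEY and SECRET_KEY

(1) Utility class BaiduApiUtil

a. Connectivity check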

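import os


def network_connect_judge():
    """
    Connectivity check

    :return: whether the machine is online
    """
    # "-n 1" sends a single packet; this flag is the Windows form of ping
    ret = os.system("ping baidu.com -n 1")
    return ret == 0

b. Obtaining the access token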

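Save the API_KEY and the other parameters obtained from Baidu in a .conf configuration file (located in the project's conf directory), then read them with ConfigParser. Note that the code reads the API key from the app_id entry.

[baidu_config]
app_id = XXXXXXXXXXXXXXXXXXXXXXXX
secret_key = XXXXXXXXXXXXXXXXXXXXXXXX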
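As a small standalone illustration of reading such a file, the sketch below writes a throwaway config with the same section and keys and reads it back with `ConfigParser`. The helper name `read_baidu_config`, the demo values, and the UTF-8 encoding are assumptions for this example; the project's own code reads `conf\setting.conf` with GBK encoding.

```python
import os
import tempfile
from configparser import ConfigParser


def read_baidu_config(conf_path):
    """Return (api_key, secret_key) from the [baidu_config] section."""
    conf = ConfigParser()
    # ConfigParser.read() returns the list of files it managed to parse
    if not conf.read(conf_path, encoding="utf-8"):
        raise FileNotFoundError(conf_path)
    return conf.get("baidu_config", "app_id"), conf.get("baidu_config", "secret_key")


# Build a temporary conf/setting.conf so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "conf", "setting.conf")
    os.makedirs(os.path.dirname(demo_path))
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("[baidu_config]\napp_id = demo-id\nsecret_key = demo-secret\n")
    demo_values = read_baidu_config(demo_path)

print(demo_values)
```

Building the path with `os.path.join` rather than a hard-coded `\\` separator keeps the lookup portable across operating systems.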

import os
from configparser import ConfigParser

import requests


def get_access_token():
    """
    Obtain the access token

    :return: the access token
    """
    conf = ConfigParser()
    path = os.path.dirname(__file__)
    # Walk up from the util folder to the project root, then into conf
    conf.read(path[:path.rindex('util')] + "conf\\setting.conf", encoding='gbk')
    API_KEY = conf.get('baidu_config', 'app_id')
    SECRET_KEY = conf.get('baidu_config', 'secret_key')
    url = "https://aip.baidubce.com/oauth/2.0/token"
    params = {"grant_type": "client_credentials", "client_id": API_KEY, "client_secret": SECRET_KEY}
    return str(requests.post(url, params=params).json().get("access_token"))

c. Calling the endpoint

Note: the API expects the image as a base64 string, while our input is a JPG file, so the file's bytes are converted with base64.b64encode() before upload.
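The conversion itself is small enough to show in isolation. This sketch encodes raw image bytes into the text form a JSON payload can carry; the helper name `image_bytes_to_base64` and the placeholder bytes are assumptions for illustration, not part of the project.

```python
import base64


def image_bytes_to_base64(img_data: bytes) -> str:
    """Encode raw image bytes as a base64 string suitable for a JSON body."""
    # b64encode returns bytes; decode to str so json.dumps can serialize it
    return base64.b64encode(img_data).decode("utf-8")


# Placeholder bytes standing in for the contents of a JPG file.
fake_jpg = b"\xff\xd8\xff\xe0demo"
encoded = image_bytes_to_base64(fake_jpg)
print(encoded)
```

Decoding `encoded` with `base64.b64decode` gives back the original bytes, which is a quick way to check the round trip.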

import base64
import json

import requests


def face_api_invoke(path):
    """
    Call the face API

    :param path: path of the image to check
    :return: whether the image passes silent liveness detection
    """
    with open(path, 'rb') as f:
        img_data = f.read()
    # The API expects the image as a base64 string
    base64_data = base64.b64encode(img_data)
    base64_str = base64_data.decode('utf-8')
    url = "https://aip.baidubce.com/rest/2.0/face/v3/faceverify?access_token=" + get_access_token()
    headers = {'Content-Type': 'application/json'}
    payload = json.dumps([{
        "image": base64_str,
        "image_type": "BASE64"
    }])
    response = requests.request("POST", url, headers=headers, data=payload)
    print(response)
    result = json.loads(response.text)
    if result["error_msg"] == "SUCCESS":
        frr_1e_4 = result["result"]["thresholds"]["frr_1e-4"]
        frr_1e_3 = result["result"]["thresholds"]["frr_1e-3"]
        frr_1e_2 = result["result"]["thresholds"]["frr_1e-2"]
        face_liveness = result["result"]["face_liveness"]
        if face_liveness >= frr_1e_2:
            return True
        elif frr_1e_3 <= face_liveness <= frr_1e_2:
            return True
        elif face_liveness <= frr_1e_4:
            return False
    # Any other case (a score between frr_1e-4 and frr_1e-3, or a failed request) counts as not passed
    return False

(2) Calling it from the user main-window logic

import os
from datetime import datetime

# Directory of this file
curPath = os.path.abspath(os.path.dirname(__file__))

# Project root path
rootPath = curPath[:curPath.rindex('logic')]  # logic is the folder holding the user-interface logic code

# Configuration folder path
CONF_FOLDER_PATH = rootPath + 'conf\\'

# Photo folder path
PHOTO_FOLDER_PATH = rootPath + 'photo\\'

# Data folder path
DATA_FOLDER_PATH = rootPath + 'data\\'

... ...

# Networked liveness detection
def detect_face_network(self):
    while self.cap.isOpened():
        ret, frame = self.cap.read()
        frame_location = face_recognition.face_locations(frame)
        if len(frame_location) == 0:
            QApplication.processEvents()
            self.remind_label.setText("No face detected")
        else:
            # Save the current frame to disk so it can be uploaded
            shot_path = PHOTO_FOLDER_PATH + datetime.now().strftime("%Y%m%d%H%M%S") + ".jpg"
            self.show_image.save(shot_path)
            QApplication.processEvents()
            self.remind_label.setText("Initializing\nPlease wait")
            # Run liveness detection through the Baidu API
            QApplication.processEvents()
            if not self.api.face_api_invoke(shot_path):
                os.remove(shot_path)
                QMessageBox.about(self, 'Warning', 'Liveness detection failed')
                self.remind_label.setText("")
                return False
            else:
                os.remove(shot_path)
                return True
        # Show the current frame in the UI
        show_video = cv2.cvtColor(cv2.resize(frame, (self.WIN_WIDTH, self.WIN_HEIGHT)), cv2.COLOR_BGR2RGB)
        self.show_image = QImage(show_video.data, show_video.shape[1], show_video.shape[0], QImage.Format_RGB888)
        self.camera_label.setPixmap(QPixmap.fromImage(self.show_image))

2. Interactive Liveness Detection

(1)基本原理

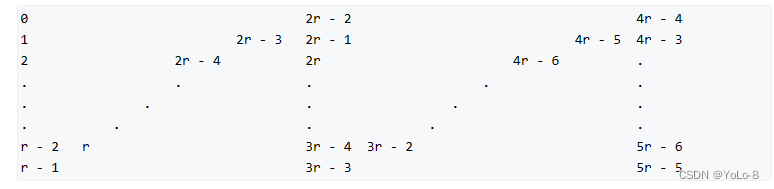

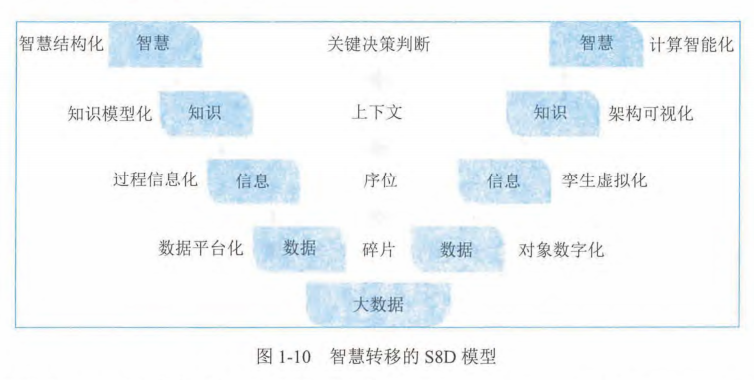

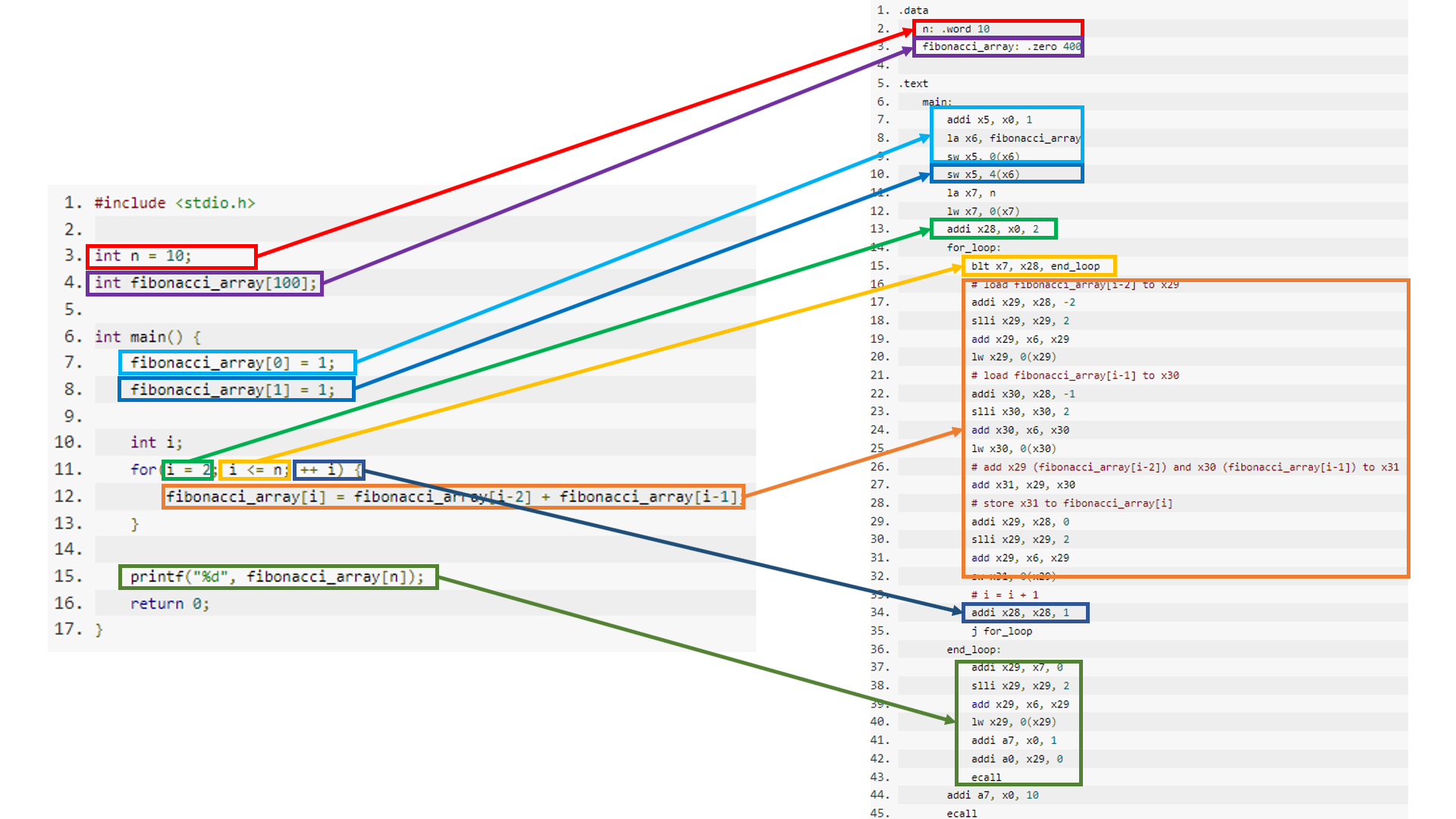

采用开源框架dlib的shape_predictor_68_face_landmarks模型,对人脸的68个特征点进行检测定位。本系统活体检测主要检测人脸左摇头、右摇头、眨眼、张嘴、点头等多个动作,故需要鼻子[32,36]、左眼[37,42]、右眼[43,48]、上嘴唇内边缘[66,68]等多个部分的特征点集合。

眨眼检测的基本原理是计算眼睛长宽比EAR(Eye Aspect Ratio)值。当人眼睁开时,EAR在某个值上下波动。当人眼闭合时,EAR将迅速下降,理论上接近于零,实际上一般波动于0.25上下,故本系统设置阈值在0.25。

EAR的计算公式如下:

其中,p1~p5为当前眼睛的6个标记点,图示如下:
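A short worked example may help with the EAR computation. The sketch below uses the same point pairing as the `count_EAR` method shown later in this post (vertical pairs p2/p6 and p3/p5, horizontal pair p1/p4), but with the standard library's `math.dist` instead of the project's distance helper. The function name `eye_aspect_ratio` and the two sets of coordinates are made up for illustration.

```python
import math


def eye_aspect_ratio(eye):
    """eye: six (x, y) points ordered p1..p6 around the eye."""
    a = math.dist(eye[1], eye[5])  # vertical distance |p2 - p6|
    b = math.dist(eye[2], eye[4])  # vertical distance |p3 - p5|
    c = math.dist(eye[0], eye[3])  # horizontal distance |p1 - p4|
    return (a + b) / (2.0 * c)


# Hypothetical landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (2, 2), (4, 2), (6, 0), (4, -2), (2, -2)]
closed_eye = [(0, 0), (2, 0.2), (4, 0.2), (6, 0), (4, -0.2), (2, -0.2)]

print(eye_aspect_ratio(open_eye))
print(eye_aspect_ratio(closed_eye))
```

For the open eye the two vertical distances are 4 and the horizontal distance is 6, so the ratio is 8 / 12, well above 0.25; for the nearly closed eye the vertical distances shrink to 0.4 and the ratio falls well below 0.25.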

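(2) How it is implemented

Each frame from the camera is read in turn and its EAR value is computed. While the EAR is below the threshold, the consecutive-frame counter is incremented. Once the counter has reached at least 2 frames and the EAR then rises back above the threshold, the action is counted as one blink.

Mouth opening and left and right head turns are handled in the same way. The landmarks of the relevant facial part are obtained from dlib, a ratio is computed from them, and the ratio is compared with a threshold preset by the system. While the ratio is on the triggering side of the threshold (below it for the eyes, above it for the mouth), the consecutive-frame counter is incremented. When the counter has reached the required number of frames and the ratio then returns to its normal range, the action is recorded as one valid action.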
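The consecutive-frame logic can be sketched on a plain list of per-frame EAR values, independent of the camera and the UI. The defaults (threshold 0.25, at least 2 frames) follow the values used in this post; the function name `count_blinks` and the sample sequence are assumptions for illustration.

```python
def count_blinks(ear_values, thresh=0.25, min_frames=2):
    """Count blinks in a sequence of per-frame EAR values."""
    blinks = 0
    run = 0  # consecutive frames with the eye considered closed
    for ear in ear_values:
        if ear < thresh:
            run += 1
        else:
            # The eye reopened: count a blink only if it stayed closed long enough
            if run >= min_frames:
                blinks += 1
            run = 0
    return blinks


# Hypothetical EAR trace: a 2-frame dip, a 1-frame dip (noise), a 3-frame dip.
ears = [0.30, 0.31, 0.20, 0.18, 0.29, 0.30, 0.22, 0.31, 0.19, 0.17, 0.16, 0.33]
print(count_blinks(ears))
```

The single-frame dip is ignored, which is the point of the minimum frame count: it filters out landmark jitter. A dip that has not yet ended is also not counted, because a blink is only registered when the eye reopens.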

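Because the user has to perform a series of actions, printed or on-screen photos are very unlikely to pass this check.

Video is a different matter: an attacker could play back a pre-recorded video of someone performing the actions in a fixed order to fool the system. Our team counters this as follows:

The system requires the user to complete four actions: turn the head left, turn the head right, blink, and open the mouth. The order of these actions is shuffled randomly, and the required numbers of blinks and mouth openings are random as well.

As a result, the required sequence and the blink and mouth-opening counts generally differ from one interactive check to the next. If the user does not finish within the time the system allows, the check is treated as failed. If liveness detection fails more than 3 times during login, the system treats the account as at risk and locks it; a locked user can only be unlocked by an administrator through the administrator system.

With these measures, the system can also resist video replay attacks.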
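The randomized challenge described above can be sketched as a small generator. The count ranges (4 to 6 blinks, 2 to 4 mouth openings) match the `random.randint` calls in this post's code; the action labels, the function name `make_challenge`, and the dictionary layout are assumptions for illustration.

```python
import random

ACTIONS = ["turn_left", "turn_right", "open_mouth", "blink"]


def make_challenge(rng=None):
    """Return a random action order plus random blink and mouth-opening counts."""
    if rng is None:
        rng = random.Random()
    order = ACTIONS[:]  # copy so the module-level list stays untouched
    rng.shuffle(order)
    return {
        "order": order,
        "blink_count": rng.randint(4, 6),
        "mouth_count": rng.randint(2, 4),
    }


challenge = make_challenge()
print(challenge)
```

With 24 possible orders and 3 choices for each count, a single pre-recorded video matches a given challenge only about once in 216 attempts, and the lockout after repeated failures limits how many attempts an attacker gets.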

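(3) Code walkthrough

a. Initialization

The parameters to initialize are: the landmark predictor self.predictor, the face detector self.detector, the action thresholds, the action counters, the consecutive-frame thresholds, the consecutive-frame counters, the total frame count, the random challenge values, and the facial landmark indices.

Landmark predictor: dlib's shape_predictor_68_face_landmarks model locates 68 facial landmarks, and the model has to be loaded first. Loading is slow, so a check is added: on every use after the first, the already loaded attribute is reused, which shortens initialization.

Facial landmark indices: the index ranges of the landmarks of the current user's face.

Action thresholds and consecutive-frame counters: the EAR threshold for blinking and the MAR threshold for mouth opening. When the user's action in the current frame crosses its threshold, the consecutive-frame counter is incremented.

Consecutive-frame thresholds: once the consecutive-frame counter has reached this number of frames and the ratio then returns past the threshold, the action is counted as one blink or one mouth opening.

Action counters: how many times the user has completed each action so far, compared against the number required.

Total frame count: the number of frames processed since liveness detection started; when it exceeds the limit set by the system, liveness detection fails.

Random challenge values: the random numbers of blinks and mouth openings, plus a random action order, such as (turn right, blink, open mouth, turn left), (turn right, blink, turn left, open mouth), or (blink, open mouth, turn right, turn left).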
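The load-once idea described for the landmark predictor is a general lazy-initialization pattern, sketched here without dlib so it runs anywhere. The class `LazyModel` and the stand-in `slow_loader` are hypothetical; in the project the same effect comes from checking whether `self.predictor` is `None` before loading.

```python
class LazyModel:
    """Load an expensive object on first use and reuse it afterwards."""

    def __init__(self, loader):
        self._loader = loader
        self._model = None
        self.load_calls = 0  # how many times the loader actually ran

    def get(self):
        if self._model is None:
            self._model = self._loader()
            self.load_calls += 1
        return self._model


def slow_loader():
    # Stand-in for an expensive call such as loading a model file from disk
    return object()


holder = LazyModel(slow_loader)
first = holder.get()
second = holder.get()
print(holder.load_calls)
```

Both calls return the same object and the loader runs only once, so only the first liveness check pays the loading cost.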

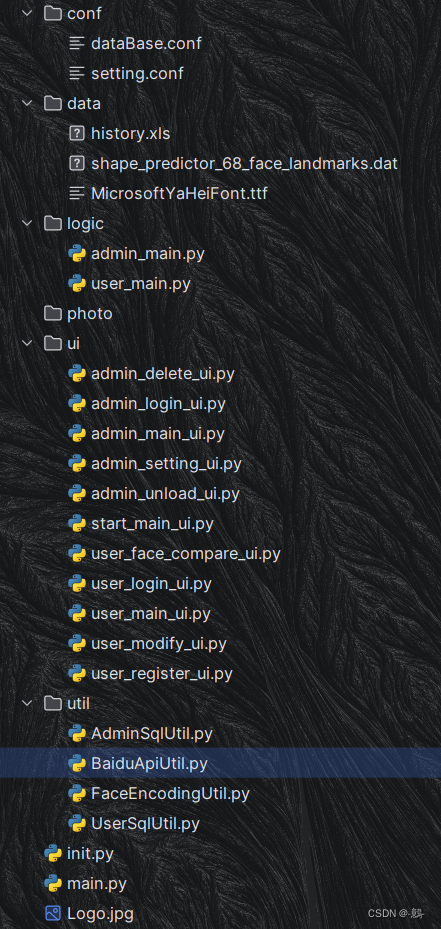

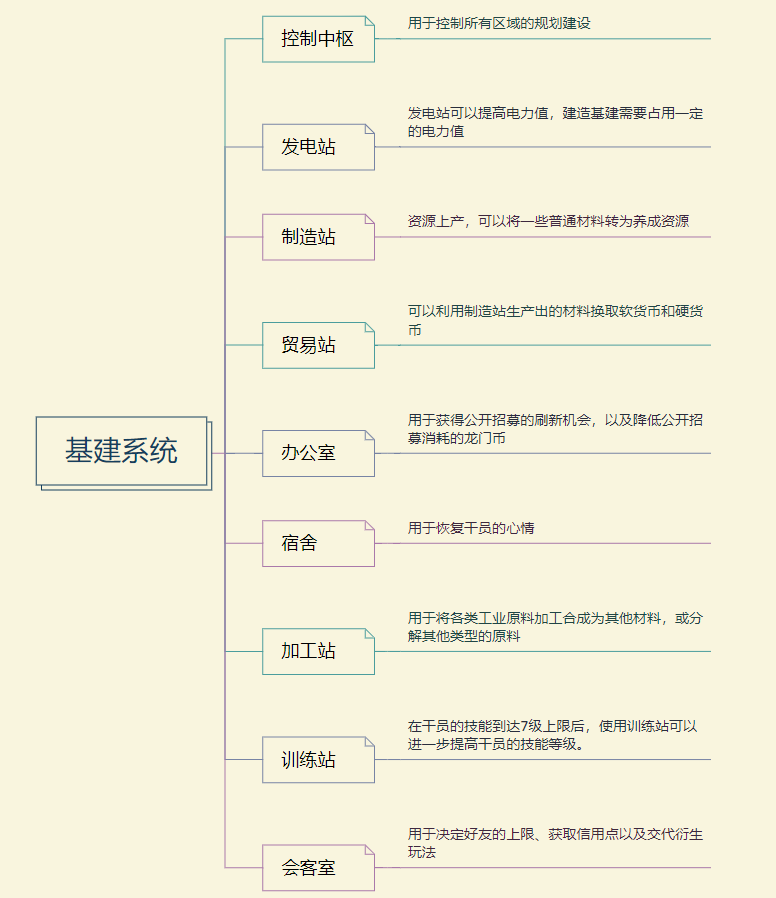

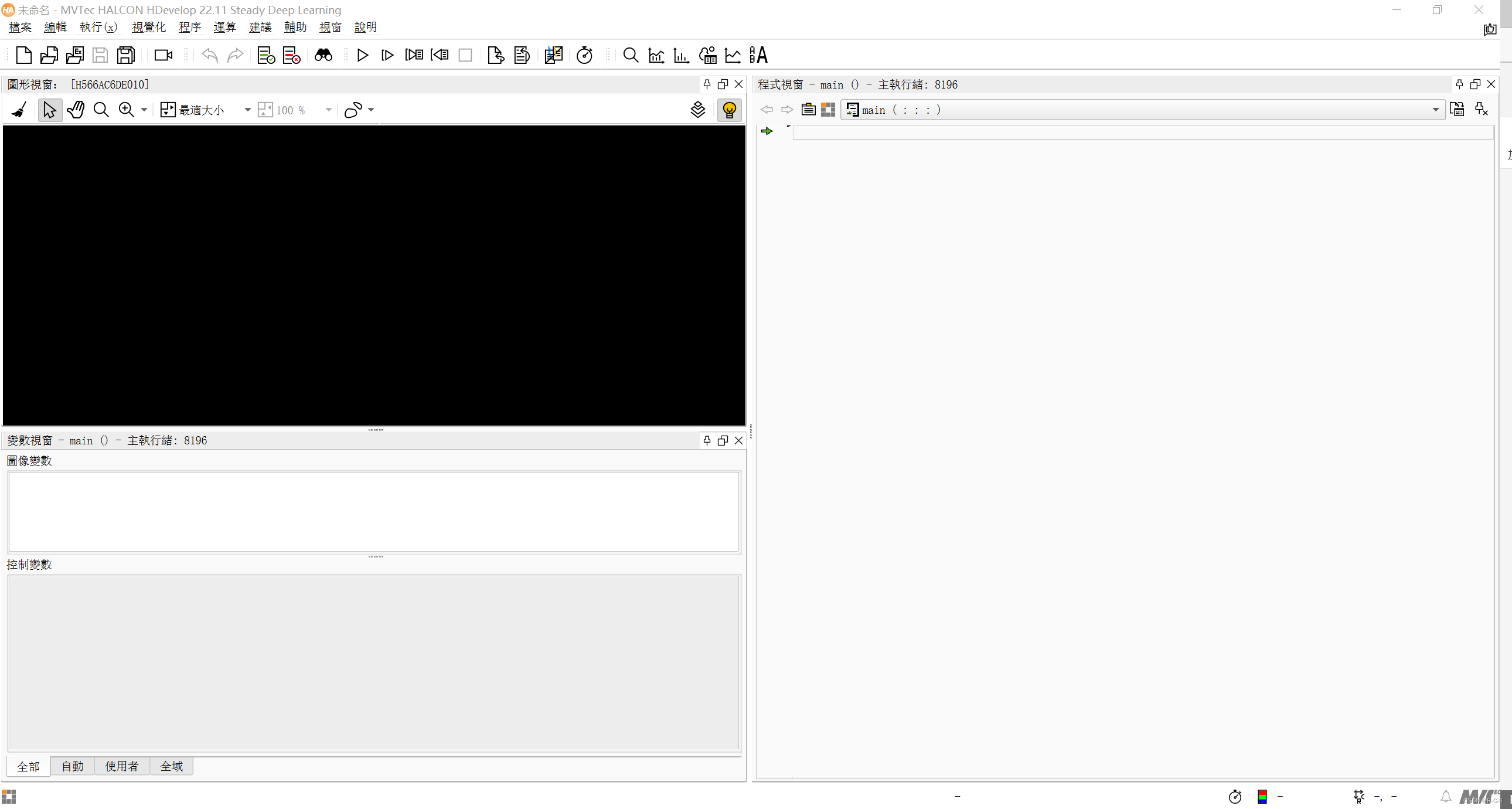

The project structure is as follows:

The shape_predictor_68_face_landmarks.dat file is in the project's data directory.

import random
from time import time

import dlib
from imutils import face_utils


# Local liveness detection
def detect_face_local(self):
    self.detect_start_time = time()
    QApplication.processEvents()
    self.remind_label.setText("Initializing\nPlease wait")

    # The detector and predictor are slow to load the first time, so load them once and reuse them afterwards
    if self.detector is None:
        self.detector = dlib.get_frontal_face_detector()
    if self.predictor is None:
        self.predictor = dlib.shape_predictor('../data/shape_predictor_68_face_landmarks.dat')

    # Action thresholds
    self.EAR_THRESH = 0.25
    self.MOUTH_THRESH = 0.7

    # Completed-action counters
    self.eye_flash_counter = 0
    self.mouth_open_counter = 0
    self.turn_left_counter = 0
    self.turn_right_counter = 0

    # Consecutive-frame thresholds
    self.EAR_CONSTANT_FRAMES = 2
    self.MOUTH_CONSTANT_FRAMES = 2
    self.LEFT_CONSTANT_FRAMES = 4
    self.RIGHT_CONSTANT_FRAMES = 4

    # Consecutive-frame counters
    self.eye_flash_continuous_frame = 0
    self.mouth_open_continuous_frame = 0
    self.turn_left_continuous_frame = 0
    self.turn_right_continuous_frame = 0

    print("Liveness detection init time:", time() - self.detect_start_time)

    # Total frame count so far
    total_frame_counter = 0

    # Random challenge values
    now_flag = 0  # index of the action currently being checked
    random_type = [0, 1, 2, 3]  # 0: turn left, 1: turn right, 2: open mouth, 3: blink
    random.shuffle(random_type)
    random_eye_flash_number = random.randint(4, 6)
    random_mouth_open_number = random.randint(2, 4)

    QMessageBox.about(self, 'Notice', 'Please follow the on-screen instructions')
    self.remind_label.setText("")

    # Index ranges of the facial landmarks
    (lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
    (rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]
    (mStart, mEnd) = face_utils.FACIAL_LANDMARKS_IDXS["mouth"]

b. Computing EAR, MAR, and the other ratios

Taking the eyes as an example: take the eye's landmark points, plug them into the EAR formula, and obtain the EAR value.

# dist is assumed to be SciPy's distance module
from scipy.spatial import distance as dist


# Compute the eye aspect ratio (EAR)
@staticmethod
def count_EAR(eye):
    A = dist.euclidean(eye[1], eye[5])  # vertical distance
    B = dist.euclidean(eye[2], eye[4])  # vertical distance
    C = dist.euclidean(eye[0], eye[3])  # horizontal distance
    EAR = (A + B) / (2.0 * C)
    return EAR

# Compute the mouth aspect ratio (MAR)
@staticmethod
def count_MAR(mouth):
    A = dist.euclidean(mouth[1], mouth[11])
    B = dist.euclidean(mouth[2], mouth[10])
    C = dist.euclidean(mouth[3], mouth[9])
    D = dist.euclidean(mouth[4], mouth[8])
    E = dist.euclidean(mouth[5], mouth[7])
    F = dist.euclidean(mouth[0], mouth[6])  # horizontal Euclidean distance
    ratio = (A + B + C + D + E) / (5.0 * F)
    return ratio

# Compute the left/right face turn ratio (FR)
@staticmethod
def count_FR(face):
    # Distances from one side of the face outline to the face's center line
    rightA = dist.euclidean(face[0], face[27])
    rightB = dist.euclidean(face[2], face[30])
    rightC = dist.euclidean(face[4], face[48])
    # The same distances measured from the other side
    leftA = dist.euclidean(face[16], face[27])
    leftB = dist.euclidean(face[14], face[30])
    leftC = dist.euclidean(face[12], face[54])
    ratioA = rightA / leftA
    ratioB = rightB / leftB
    ratioC = rightC / leftC
    ratio = (ratioA + ratioB + ratioC) / 3
    return ratio

c. Detecting user actions

def check_eye_flash(self, average_EAR):
    if average_EAR < self.EAR_THRESH:
        # Eyes closed in this frame
        self.eye_flash_continuous_frame += 1
    else:
        # Eyes reopened: count a blink if they stayed closed long enough
        if self.eye_flash_continuous_frame >= self.EAR_CONSTANT_FRAMES:
            self.eye_flash_counter += 1
        self.eye_flash_continuous_frame = 0

def check_mouth_open(self, mouth_MAR):
    if mouth_MAR > self.MOUTH_THRESH:
        # Mouth open in this frame
        self.mouth_open_continuous_frame += 1
    else:
        if self.mouth_open_continuous_frame >= self.MOUTH_CONSTANT_FRAMES:
            self.mouth_open_counter += 1
        self.mouth_open_continuous_frame = 0

def check_right_turn(self, leftRight_FR):
    if leftRight_FR <= 0.5:
        self.turn_right_continuous_frame += 1
    else:
        if self.turn_right_continuous_frame >= self.RIGHT_CONSTANT_FRAMES:
            self.turn_right_counter += 1
        self.turn_right_continuous_frame = 0

def check_left_turn(self, leftRight_FR):
    if leftRight_FR >= 2.0:
        self.turn_left_continuous_frame += 1
    else:
        if self.turn_left_continuous_frame >= self.LEFT_CONSTANT_FRAMES:
            self.turn_left_counter += 1
        self.turn_left_continuous_frame = 0

d. Liveness decision loop

While the camera is open, the liveness decision loop runs. It exits only when the user passes liveness detection or the check times out.

    # (continues inside detect_face_local)
    while self.cap.isOpened():
        ret, frame = self.cap.read()
        total_frame_counter += 1
        frame = imutils.resize(frame)
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        rects = self.detector(gray, 0)

        if len(rects) == 1:
            QApplication.processEvents()
            shape = self.predictor(gray, rects[0])
            shape = face_utils.shape_to_np(shape)

            # Extract the facial coordinates
            left_eye = shape[lStart:lEnd]
            right_eye = shape[rStart:rEnd]
            mouth = shape[mStart:mEnd]

            # Compute the ratios
            left_EAR = self.count_EAR(left_eye)
            right_EAR = self.count_EAR(right_eye)
            mouth_MAR = self.count_MAR(mouth)
            leftRight_FR = self.count_FR(shape)
            average_EAR = (left_EAR + right_EAR) / 2.0

            # Compute the convex hulls of the left eye, right eye, and mouth
            left_eye_hull = cv2.convexHull(left_eye)
            right_eye_hull = cv2.convexHull(right_eye)
            mouth_hull = cv2.convexHull(mouth)

            # Visualize
            cv2.drawContours(frame, [left_eye_hull], -1, (0, 255, 0), 1)
            cv2.drawContours(frame, [right_eye_hull], -1, (0, 255, 0), 1)
            cv2.drawContours(frame, [mouth_hull], -1, (0, 255, 0), 1)

            # All four actions completed
            if now_flag >= 4:
                self.remind_label.setText("")
                QMessageBox.about(self, 'Notice', 'Liveness detection passed')
                self.turn_right_counter = 0
                self.mouth_open_counter = 0
                self.eye_flash_counter = 0
                return True

            if random_type[now_flag] == 0:
                if self.turn_left_counter > 0:
                    now_flag += 1
                else:
                    self.remind_label.setText("Please turn your head left")
                    self.check_left_turn(leftRight_FR)
                    # Ignore the other actions while this one is being checked
                    self.turn_right_counter = 0
                    self.mouth_open_counter = 0
                    self.eye_flash_counter = 0
            elif random_type[now_flag] == 1:
                if self.turn_right_counter > 0:
                    now_flag += 1
                else:
                    self.remind_label.setText("Please turn your head right")
                    self.check_right_turn(leftRight_FR)
                    self.turn_left_counter = 0
                    self.mouth_open_counter = 0
                    self.eye_flash_counter = 0
            elif random_type[now_flag] == 2:
                if self.mouth_open_counter >= random_mouth_open_number:
                    now_flag += 1
                else:
                    self.remind_label.setText("Mouth opened {} time(s)\n{} more needed".format(
                        self.mouth_open_counter, random_mouth_open_number - self.mouth_open_counter))
                    self.check_mouth_open(mouth_MAR)
                    self.turn_right_counter = 0
                    self.turn_left_counter = 0
                    self.eye_flash_counter = 0
            elif random_type[now_flag] == 3:
                if self.eye_flash_counter >= random_eye_flash_number:
                    now_flag += 1
                else:
                    self.remind_label.setText("Blinked {} time(s)\n{} more needed".format(
                        self.eye_flash_counter, random_eye_flash_number - self.eye_flash_counter))
                    self.check_eye_flash(average_EAR)
                    self.turn_right_counter = 0
                    self.turn_left_counter = 0
                    self.mouth_open_counter = 0
        elif len(rects) == 0:
            QApplication.processEvents()
            self.remind_label.setText("No face detected!")
        elif len(rects) > 1:
            QApplication.processEvents()
            self.remind_label.setText("More than one face detected!")

        # Show the current frame in the UI
        show_video = cv2.cvtColor(cv2.resize(frame, (self.WIN_WIDTH, self.WIN_HEIGHT)), cv2.COLOR_BGR2RGB)
        self.show_image = QImage(show_video.data, show_video.shape[1], show_video.shape[0], QImage.Format_RGB888)
        self.camera_label.setPixmap(QPixmap.fromImage(self.show_image))

        # Frame budget exhausted: treat as a timeout
        if total_frame_counter >= 1000.0:
            QMessageBox.about(self, 'Warning', 'Timed out, liveness detection failed')
            self.remind_label.setText("")
            return False

(4) Full code

import random
from time import time

import cv2
import dlib
import imutils
from imutils import face_utils
# dist is assumed to be SciPy's distance module; the Qt imports are omitted here
from scipy.spatial import distance as dist


# Compute the eye aspect ratio (EAR)
@staticmethod
def count_EAR(eye):
    A = dist.euclidean(eye[1], eye[5])
    B = dist.euclidean(eye[2], eye[4])
    C = dist.euclidean(eye[0], eye[3])
    EAR = (A + B) / (2.0 * C)
    return EAR

# Compute the mouth aspect ratio (MAR)
@staticmethod
def count_MAR(mouth):
    A = dist.euclidean(mouth[1], mouth[11])
    B = dist.euclidean(mouth[2], mouth[10])
    C = dist.euclidean(mouth[3], mouth[9])
    D = dist.euclidean(mouth[4], mouth[8])
    E = dist.euclidean(mouth[5], mouth[7])
    F = dist.euclidean(mouth[0], mouth[6])  # horizontal Euclidean distance
    ratio = (A + B + C + D + E) / (5.0 * F)
    return ratio

# Compute the left/right face turn ratio (FR)
@staticmethod
def count_FR(face):
    rightA = dist.euclidean(face[0], face[27])
    rightB = dist.euclidean(face[2], face[30])
    rightC = dist.euclidean(face[4], face[48])
    leftA = dist.euclidean(face[16], face[27])
    leftB = dist.euclidean(face[14], face[30])
    leftC = dist.euclidean(face[12], face[54])
    ratioA = rightA / leftA
    ratioB = rightB / leftB
    ratioC = rightC / leftC
    ratio = (ratioA + ratioB + ratioC) / 3
    return ratio

# Local liveness detection
def detect_face_local(self):
    self.detect_start_time = time()
    QApplication.processEvents()
    self.remind_label.setText("Initializing\nPlease wait")

    # The detector and predictor are slow to load the first time, so load them once and reuse them afterwards
    if self.detector is None:
        self.detector = dlib.get_frontal_face_detector()
    if self.predictor is None:
        self.predictor = dlib.shape_predictor('../data/shape_predictor_68_face_landmarks.dat')

    # Action thresholds
    self.EAR_THRESH = 0.25
    self.MOUTH_THRESH = 0.7

    # Completed-action counters
    self.eye_flash_counter = 0
    self.mouth_open_counter = 0
    self.turn_left_counter = 0
    self.turn_right_counter = 0

    # Consecutive-frame thresholds
    self.EAR_CONSTANT_FRAMES = 2
    self.MOUTH_CONSTANT_FRAMES = 2
    self.LEFT_CONSTANT_FRAMES = 4
    self.RIGHT_CONSTANT_FRAMES = 4

    # Consecutive-frame counters
    self.eye_flash_continuous_frame = 0
    self.mouth_open_continuous_frame = 0
    self.turn_left_continuous_frame = 0
    self.turn_right_continuous_frame = 0

    print("Liveness detection init time:", time() - self.detect_start_time)

    # Total frame count so far
    total_frame_counter = 0

    # Random challenge values
    now_flag = 0  # index of the action currently being checked
    random_type = [0, 1, 2, 3]  # 0: turn left, 1: turn right, 2: open mouth, 3: blink
    random.shuffle(random_type)
    random_eye_flash_number = random.randint(4, 6)
    random_mouth_open_number = random.randint(2, 4)

    QMessageBox.about(self, 'Notice', 'Please follow the on-screen instructions')
    self.remind_label.setText("")

    # Index ranges of the facial landmarks
    (lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
    (rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]
    (mStart, mEnd) = face_utils.FACIAL_LANDMARKS_IDXS["mouth"]

    while self.cap.isOpened():
        ret, frame = self.cap.read()
        total_frame_counter += 1
        frame = imutils.resize(frame)
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        rects = self.detector(gray, 0)

        if len(rects) == 1:
            QApplication.processEvents()
            shape = self.predictor(gray, rects[0])
            shape = face_utils.shape_to_np(shape)

            # Extract the facial coordinates
            left_eye = shape[lStart:lEnd]
            right_eye = shape[rStart:rEnd]
            mouth = shape[mStart:mEnd]

            # Compute the ratios
            left_EAR = self.count_EAR(left_eye)
            right_EAR = self.count_EAR(right_eye)
            mouth_MAR = self.count_MAR(mouth)
            leftRight_FR = self.count_FR(shape)
            average_EAR = (left_EAR + right_EAR) / 2.0

            # Compute the convex hulls of the left eye, right eye, and mouth
            left_eye_hull = cv2.convexHull(left_eye)
            right_eye_hull = cv2.convexHull(right_eye)
            mouth_hull = cv2.convexHull(mouth)

            # Visualize
            cv2.drawContours(frame, [left_eye_hull], -1, (0, 255, 0), 1)
            cv2.drawContours(frame, [right_eye_hull], -1, (0, 255, 0), 1)
            cv2.drawContours(frame, [mouth_hull], -1, (0, 255, 0), 1)

            # All four actions completed
            if now_flag >= 4:
                self.remind_label.setText("")
                QMessageBox.about(self, 'Notice', 'Liveness detection passed')
                self.turn_right_counter = 0
                self.mouth_open_counter = 0
                self.eye_flash_counter = 0
                return True

            if random_type[now_flag] == 0:
                if self.turn_left_counter > 0:
                    now_flag += 1
                else:
                    self.remind_label.setText("Please turn your head left")
                    self.check_left_turn(leftRight_FR)
                    # Ignore the other actions while this one is being checked
                    self.turn_right_counter = 0
                    self.mouth_open_counter = 0
                    self.eye_flash_counter = 0
            elif random_type[now_flag] == 1:
                if self.turn_right_counter > 0:
                    now_flag += 1
                else:
                    self.remind_label.setText("Please turn your head right")
                    self.check_right_turn(leftRight_FR)
                    self.turn_left_counter = 0
                    self.mouth_open_counter = 0
                    self.eye_flash_counter = 0
            elif random_type[now_flag] == 2:
                if self.mouth_open_counter >= random_mouth_open_number:
                    now_flag += 1
                else:
                    self.remind_label.setText("Mouth opened {} time(s)\n{} more needed".format(
                        self.mouth_open_counter, random_mouth_open_number - self.mouth_open_counter))
                    self.check_mouth_open(mouth_MAR)
                    self.turn_right_counter = 0
                    self.turn_left_counter = 0
                    self.eye_flash_counter = 0
            elif random_type[now_flag] == 3:
                if self.eye_flash_counter >= random_eye_flash_number:
                    now_flag += 1
                else:
                    self.remind_label.setText("Blinked {} time(s)\n{} more needed".format(
                        self.eye_flash_counter, random_eye_flash_number - self.eye_flash_counter))
                    self.check_eye_flash(average_EAR)
                    self.turn_right_counter = 0
                    self.turn_left_counter = 0
                    self.mouth_open_counter = 0
        elif len(rects) == 0:
            QApplication.processEvents()
            self.remind_label.setText("No face detected!")
        elif len(rects) > 1:
            QApplication.processEvents()
            self.remind_label.setText("More than one face detected!")

        # Show the current frame in the UI
        show_video = cv2.cvtColor(cv2.resize(frame, (self.WIN_WIDTH, self.WIN_HEIGHT)), cv2.COLOR_BGR2RGB)
        self.show_image = QImage(show_video.data, show_video.shape[1], show_video.shape[0], QImage.Format_RGB888)
        self.camera_label.setPixmap(QPixmap.fromImage(self.show_image))

        # Frame budget exhausted: treat as a timeout
        if total_frame_counter >= 1000.0:
            QMessageBox.about(self, 'Warning', 'Timed out, liveness detection failed')
            self.remind_label.setText("")
            return False

def check_eye_flash(self, average_EAR):
    if average_EAR < self.EAR_THRESH:
        self.eye_flash_continuous_frame += 1
    else:
        if self.eye_flash_continuous_frame >= self.EAR_CONSTANT_FRAMES:
            self.eye_flash_counter += 1
        self.eye_flash_continuous_frame = 0

def check_mouth_open(self, mouth_MAR):
    if mouth_MAR > self.MOUTH_THRESH:
        self.mouth_open_continuous_frame += 1
    else:
        if self.mouth_open_continuous_frame >= self.MOUTH_CONSTANT_FRAMES:
            self.mouth_open_counter += 1
        self.mouth_open_continuous_frame = 0

def check_right_turn(self, leftRight_FR):
    if leftRight_FR <= 0.5:
        self.turn_right_continuous_frame += 1
    else:
        if self.turn_right_continuous_frame >= self.RIGHT_CONSTANT_FRAMES:
            self.turn_right_counter += 1
        self.turn_right_continuous_frame = 0

def check_left_turn(self, leftRight_FR):
    if leftRight_FR >= 2.0:
        self.turn_left_continuous_frame += 1
    else:
        if self.turn_left_continuous_frame >= self.LEFT_CONSTANT_FRAMES:
            self.turn_left_counter += 1
        self.turn_left_continuous_frame = 0

Note: the code above is for reference only. To run it, see the complete source code in the project's GitHub repository: