1.读取数据与数据处理

为什么不对数据进行标准化?

我们制作的评分卡,评分卡是要给医务人员们使用的基于病人的化验结果打分的一张卡片,而为了制作这张卡片,我们需要对我们的数据进行一个“分档”,比如说,年龄20-30岁为一档,年龄30-50岁为一档每档的分数不同。

一旦我们将数据统一量纲,或者标准化了之后,数据大小和范围都会改变,统计结果是漂亮了,但是对于医务人员来说,他们完全无法理解,标准化后的年龄在0.00328~0.00467之间为一档是什么含义。并且,病人的各种化验结果,天生就是量纲不统一的,我们的确可以将所有的信息录入之后,统一进行标准化,然后导入算法计算,但是最终落到医务人员手上去判断的时候,他们会完全不理解为什么录入的信息变成了一串统计上很美但实际上根本看不懂的数字。所以在制作评分卡的时候,我们要尽量保持数据的原貌,年龄就是8-110的数字即便量纲不统一,我们也不对数据进行标准化处理。

import pandas as pd

#读取数据和只保留8个因子

pre_pyh_df=pd.read_csv('data/mimic3811_jueduizhi.csv')

# # 'dongwuqixue'第一类,

Lactate = pre_pyh_df.pop('Lactate')

pH = pre_pyh_df.pop('pH')

Anion_Gap = pre_pyh_df.pop('Anion Gap')

Bicarbonate = pre_pyh_df.pop('Bicarbonate')

Calcium = pre_pyh_df.pop('Calcium')

Chloride = pre_pyh_df.pop('Chloride')

Glucose = pre_pyh_df.pop('Glucose')

Potassium = pre_pyh_df.pop('Potassium')

Sodium = pre_pyh_df.pop('Sodium')

Hematocrit = pre_pyh_df.pop('Hematocrit')

Hemoglobin = pre_pyh_df.pop('Hemoglobin')

# # 'shengongneng'第二类,

Creatinine = pre_pyh_df.pop('Creatinine')

UreaNitogen = pre_pyh_df.pop('UreaNitogen')

Magnesium = pre_pyh_df.pop('Magnesium')

Phosphate = pre_pyh_df.pop('Phosphate')

# #

# # 'ningxuegongneng'第三类,

PT = pre_pyh_df.pop('PT')

# PTT = pre_pyh_df.pop('PTT')

# INR = pre_pyh_df.pop('INR(PT)')

#

# # 'yanzhengmianyi'第四类,

# Lymphocytes = pre_pyh_df.pop('Lymphocytes')

# Monocytes = pre_pyh_df.pop('Monocytes')

# Neutrophil = pre_pyh_df.pop('Neutrophil')

# White_Blood_Cells = pre_pyh_df.pop('White_Blood_Cells')

Basophils = pre_pyh_df.pop('Basophils')

Eosinophils = pre_pyh_df.pop('Eosinophils')

# Platelet_Count = pre_pyh_df.pop('Platelet_Count')

#

# # 'gangongneng',第五类

AlanineAminotransferase = pre_pyh_df.pop('AlanineAminotransferase(ALT)')

AlkalinePhosphatase = pre_pyh_df.pop('AlkalinePhosphatase')

AspirateAminotransferase = pre_pyh_df.pop('AspirateAminotransferase(AST)')

Albumin = pre_pyh_df.pop('Albumin')

Bilirubin = pre_pyh_df.pop('Bilirubin')

# #

# # # 'qita'其他

MCH = pre_pyh_df.pop('MCH')

MCHC = pre_pyh_df.pop('MCHC')

MCV = pre_pyh_df.pop('MCV')

RDW = pre_pyh_df.pop('RDW')

Red_Blood_Cells = pre_pyh_df.pop('Red _Blood_Cells')

# patients_age = pre_pyh_df.pop('patients_age')

data=pre_pyh_df

data_num=pre_pyh_df

data1=pre_pyh_df

print(data.shape,data.columns)

2.分箱

(一)等频分箱(对单列数据-age)

#等频分箱

#pd.qcut,基于分位数的分箱函数,本质是将连续型变量离散化 只能够处理一维数据。返回箱子的上限和下限

#参数q:要分箱的个数

#参数retbins=True来要求同时返回结构为索引为样本索引,元素为分到的箱子的Series 现在返回两个值:每个样本属于哪个箱子,以及所有箱子的上限和下限

import pandas as pd

data["qcut"], updown = pd.qcut(data["patients_age"], retbins=True, q=5)

#在这里时让model_data新添加一列叫做“分箱”,这一列其实就是每个样本所对应的箱子

data["qcut"]

# 统计每个分箱中0和1的数量

# 这里使用了数据透视表的功能groupby

coount_y0 = data[data["dead"] == 0].groupby(by="qcut").count()["dead"]

#得到每个箱子里标签为0的样本量的个数

coount_y1 = data[data["dead"] == 1].groupby(by="qcut").count()["dead"]

#得到每个箱子里标签为1的样本量的个数

print(coount_y0)

print('-'*15)

print(coount_y1)

#num_bins值分别为每个区间的上界,下界,0出现的次数,1出现的次数

num_bins = [*zip(updown, updown[1:],coount_y0 ,coount_y1 )]

print(num_bins)

(二)确保每个箱中都有0和1

for i in range(5):

#如果第一个组没有包含正样本或负样本,向后合并

if 0 in num_bins[0][2:]:

num_bins[0:2] = [(

num_bins[0][0],

num_bins[1][1],

num_bins[0][2]+num_bins[1][2],

num_bins[0][3]+num_bins[1][3])]

continue

for i in range(len(num_bins)):

if 0 in num_bins[i][2:]:

[(num_bins)[i-1: i+1]] = ([

num_bins[i-1][0],

num_bins[i][1],

num_bins[i-1][2]+num_bins[i][2],

num_bins[i-1][3]+num_bins[i][3]])

break

else:

break

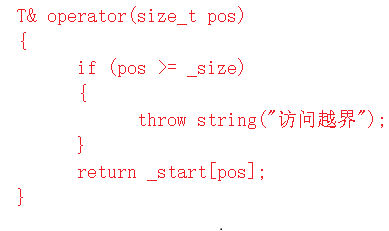

(三)定义WOE和IV函数

columns = ['min', 'max', 'count_0', 'count_1']

df = pd.DataFrame(num_bins,columns=columns)

print(df.count_0+df.count_1)

#计算WOE和BAD RATE

#BAD RATE与bad%不是一个东西

#BAD RATE是一个箱中,坏的样本所占的比例 (bad/total)

#而bad%是一个箱中的坏样本占整个特征中的坏样本的比例

import numpy as np

def get_woe(num_bins):

# 通过 num_bins 数据计算 woe

columns = ["min","max","count_0","count_1"]

df = pd.DataFrame(num_bins,columns=columns)

df["total"] = df.count_0 + df.count_1

df["percentage"] = df.total / df.total.sum()

df["bad_rate"] = df.count_1 / df.total

df["good%"] = df.count_0/df.count_0.sum()

df["bad%"] = df.count_1/df.count_1.sum()

df["woe"] = np.log(df["good%"] / df["bad%"])

return df

#计算IV值

def get_iv(df):

rate = df["good%"] - df["bad%"]

iv = np.sum(rate * df.woe)

return iv

(四)卡方检验,合并箱体,画出IV曲线

num_bins_ = num_bins.copy()

import matplotlib.pyplot as plt

import scipy

IV = []

axisx = []

while len(num_bins_) > 2:

pvs = []

# 获取 num_bins_两两之间的卡方检验的置信度(或卡方值)

for i in range(len(num_bins_)-1):

x1 = num_bins_[i][2:]

x2 = num_bins_[i+1][2:]

# 0 返回 chi2 值,1 返回 p 值。

pv = scipy.stats.chi2_contingency([x1,x2])[1]

# chi2 = scipy.stats.chi2_contingency([x1,x2])[0]

pvs.append(pv)

#通过p值处理,合并p值最大的两组

i = pvs.index(max(pvs))

num_bins_[i:i+2] = [(

num_bins_[i][0],

num_bins_[i+1][1],

num_bins_[i][2]+num_bins_[i+1][2],

num_bins_[i][3]+num_bins_[i+1][3])]

bins_df = get_woe(num_bins_)

axisx.append(len(num_bins_))

IV.append(get_iv(bins_df))

plt.figure()

plt.plot(axisx, IV)

plt.xticks(axisx)

plt.xlabel("number of box")

plt.ylabel("IV")

plt.show()

(五)用最佳分箱个数分箱,并验证分箱结果

def get_bin(num_bins_,n):

while len(num_bins_) > n:

pvs = []

for i in range(len(num_bins_)-1):

x1 = num_bins_[i][2:]

x2 = num_bins_[i+1][2:]

pv = scipy.stats.chi2_contingency([x1,x2])[1]

# chi2 = scipy.stats.chi2_contingency([x1,x2])[0]

pvs.append(pv)

i = pvs.index(max(pvs))

num_bins_[i:i+2] = [(

num_bins_[i][0],

num_bins_[i+1][1],

num_bins_[i][2]+num_bins_[i+1][2],

num_bins_[i][3]+num_bins_[i+1][3])]

return num_bins_

afterbins = get_bin(num_bins,4)

print(afterbins)

(六)将选取最佳分箱个数的过程包装为函数,对所有特征进行分箱选择

def graphforbestbin(DF, X, Y, n=5,q=20,graph=True):

"""

自动最优分箱函数,基于卡方检验的分箱

参数:

DF: 需要输入的数据

X: 需要分箱的列名

Y: 分箱数据对应的标签 Y 列名

n: 保留分箱个数

q: 初始分箱的个数

graph: 是否要画出IV图像

区间为前开后闭 (]

"""

DF = DF[[X,Y]].copy()

DF["qcut"],bins = pd.qcut(DF[X], retbins=True, q=q,duplicates="drop")

coount_y0 = DF.loc[DF[Y]==0].groupby(by="qcut").count()[Y]

coount_y1 = DF.loc[DF[Y]==1].groupby(by="qcut").count()[Y]

num_bins = [*zip(bins,bins[1:],coount_y0,coount_y1)]

for i in range(q):

if 0 in num_bins[0][2:]:

num_bins[0:2] = [(

num_bins[0][0],

num_bins[1][1],

num_bins[0][2]+num_bins[1][2],

num_bins[0][3]+num_bins[1][3])]

continue

for i in range(len(num_bins)):

if 0 in num_bins[i][2:]:

num_bins[i-1:i+1] = [(

num_bins[i-1][0],

num_bins[i][1],

num_bins[i-1][2]+num_bins[i][2],

num_bins[i-1][3]+num_bins[i][3])]

break

else:

break

def get_woe(num_bins):

columns = ["min","max","count_0","count_1"]

df = pd.DataFrame(num_bins,columns=columns)

df["total"] = df.count_0 + df.count_1

df["percentage"] = df.total / df.total.sum()

df["bad_rate"] = df.count_1 / df.total

df["good%"] = df.count_0/df.count_0.sum()

df["bad%"] = df.count_1/df.count_1.sum()

df["woe"] = np.log(df["good%"] / df["bad%"])

return df

def get_iv(df):

rate = df["good%"] - df["bad%"]

iv = np.sum(rate * df.woe)

return iv

IV = []

axisx = []

while len(num_bins) > n:

pvs = []

for i in range(len(num_bins)-1):

x1 = num_bins[i][2:]

x2 = num_bins[i+1][2:]

pv = scipy.stats.chi2_contingency([x1,x2])[1]

pvs.append(pv)

i = pvs.index(max(pvs))

num_bins[i:i+2] = [(

num_bins[i][0],

num_bins[i+1][1],

num_bins[i][2]+num_bins[i+1][2],

num_bins[i][3]+num_bins[i+1][3])]

bins_df = pd.DataFrame(get_woe(num_bins))

axisx.append(len(num_bins))

IV.append(get_iv(bins_df))

if graph:

plt.figure()

plt.plot(axisx,IV)

plt.xticks(axisx)

plt.show()

return bins_df

data.columns

for i in data.columns[0:8]:

print(i)

graphforbestbin(data,i,"dead",n=2,q=20)

auto_col_bins = {"INR(PT)":6,

"PTT":5,

"Lymphocytes":4,

"Monocytes":3,

"Neutrophil":5,

"White_Blood_Cells":5,

"Platelet_Count":5,

"patients_age":5}

#不能使用自动分箱的变量,这部分主要是针对数据中只有几个固定的值

# hand_bins = {"patients_age":[0,40,60,80]

# }

#保证区间覆盖使用 np.inf替换最大值,用-np.inf替换最小值

# hand_bins = {k:[-np.inf,*v[:-1],np.inf] for k,v in hand_bins.items()}

bins_of_col = {}

# 生成自动分箱的分箱区间和分箱后的 IV 值

for col in auto_col_bins:

bins_df = graphforbestbin(data,col

,"dead"

,n=auto_col_bins[col]

#使用字典的性质来取出每个特征所对应的箱的数量

,q=20

,graph=False)

bins_list = sorted(set(bins_df["min"]).union(bins_df["max"]))

#保证区间覆盖使用 np.inf 替换最大值 -np.inf 替换最小值

bins_list[0],bins_list[-1] = -np.inf,np.inf

bins_of_col[col] = bins_list

#合并手动分箱数据

# bins_of_col.update(hand_bins)

bins_of_col

output:

{‘INR(PT)’: [-inf, 1.0, 1.293934734530496, 1.3, 1.5, 1.7, inf],

‘PTT’: [-inf, 23.4, 25.8, 30.9, 39.09021282009289, inf],

‘Lymphocytes’: [-inf,

1.8724525058772297,

2.153362490193166,

2.732900000000007,

inf],

‘Monocytes’: [-inf, 0.4676, 0.9481735938491223, inf],

‘Neutrophil’: [-inf,

1.5190000000000001,

8.4112,

10.136775615962982,

21.6273253954346,

inf],

‘White_Blood_Cells’: [-inf,

10.032206982762704,

10.8,

12.703728336029313,

25.9,

inf],

‘Platelet_Count’: [-inf,

2.115540934553256,

2.51054501,

2.541579244,

2.719331287,

inf],

‘patients_age’: [-inf, 41.0, 63.0, 72.3911909242274, 83.0, inf]}

bins_df

(七)计算各箱的WOE并映射到数据中

# data_num.pop('dead')

data_num.pop('qc

def get_woe(df,col,y,bins):

df = df[[col,y]].copy()

# df = df[col,y].copy()

df["cut"] = pd.cut(df[col],bins)

bins_df = df.groupby("cut")[y].value_counts().unstack()

woe = bins_df["woe"] = np.log((bins_df[0]/bins_df[0].sum())/(bins_df[1]/bins_df[1].sum()))

return woe

#将所有特征的WOE存储到字典当中

woeall = {}

for col in bins_of_col:

woeall[col] = get_woe(data_num,col,"dead",bins_of_col[col])

woeall

Output

Output exceeds the size limit. Open the full output data in a text editor{‘INR(PT)’: cut

(-inf, 1.0] 1.349146

(1.0, 1.294] 0.332691

(1.294, 1.3] 0.886254

(1.3, 1.5] -0.323383

(1.5, 1.7] -0.721225

(1.7, inf] -0.475794

dtype: float64,

‘PTT’: cut

(-inf, 23.4] 1.290972

(23.4, 25.8] 0.433930

(25.8, 30.9] 0.109695

(30.9, 39.09] -0.025172

(39.09, inf] -0.519744

dtype: float64,

‘Lymphocytes’: cut

(-inf, 1.872] -0.033571

(1.872, 2.153] 0.321251

(2.153, 2.733] -0.132417

(2.733, inf] 0.385898

dtype: float64,

‘Monocytes’: cut

(-inf, 0.468] 0.109710

(0.468, 0.948] -0.065385

(0.948, inf] -0.273483

…

(41.0, 63.0] 0.311843

(63.0, 72.391] -0.037377

(72.391, 83.0] -0.258544

(83.0, inf] -0.686025

dtype: float64}

(八)接下来,把所有的WOE映射到原始数据中

#不希望覆盖掉原本的数据,创建一个新的DataFrame,索引和原始数据model_data一模一样

model_woe = pd.DataFrame(index=data1.index)

#将原数据分箱后,按箱的结果把WOE结构用map函数映射到数据中

model_woe["patients_age"]=pd.cut(data["patients_age"], bins_of_col["patients_age"]).map(woeall["patients_age"])

model_woe["patients_age"]

#对所有特征都能这么写

for col in bins_of_col:

model_woe[col] = pd.cut(data[col], bins_of_col[col]).map(woeall[col])

#将标签补充到数据中

model_woe["dead"] = data["dead"]

model_woe

3.建模与模型验证

#处理测试集

vali_woe = pd.DataFrame(index=data.index)

for col in bins_of_col:

vali_woe[col]=pd.cut(data[col], bins_of_col[col]).map(woeall[col])

vali_woe["dead"]=data["dead"]

vali_x = vali_woe.iloc[:, :-1]

vali_y = vali_woe.iloc[:, -1]

x = model_woe.iloc[:, :-1]

y = model_woe.iloc[:, -1]

from sklearn.linear_model import LogisticRegression as LR

lr = LR().fit(x, y)

lr.score(vali_x, vali_y)

#0.6483862503279979

输出0.6483862503279979

画c学习曲线

c_1 = np.linspace(0.01,1,20)

c_2 = np.linspace(0.01,0.2,20)

score = []

for i in c_2:

lr = LR(solver='liblinear',C=i).fit(x,y)

score.append(lr.score(vali_x,vali_y))

plt.figure()

plt.plot(c_2,score)

plt.show()

lr.n_iter_ #查看迭代次数

#array([], dtype=int32) 迭代次数为

score=[]

for i in [1, 2, 3, 4, 5, 6]:

lr=LR(solver='liblinear', C=0.025, max_iter=i).fit(x,y)

score.append(lr.score(vali_x, vali_y))

plt.figure()

plt.plot([1,2,3,4,5,6], score)

plt.show()

import scikitplot as skplt

vali_proba_df = pd.DataFrame(lr.predict_proba(vali_x))

skplt.metrics.plot_roc(vali_y, vali_proba_df,

plot_micro=False,figsize=(6,6),

plot_macro=False)

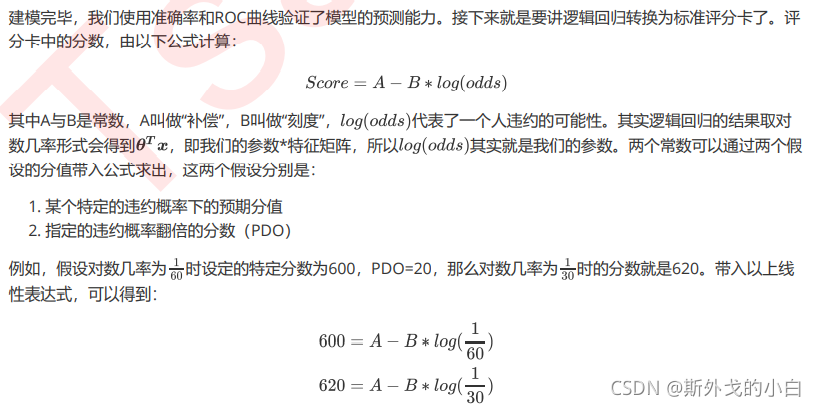

4.制作评分卡

B = 20/np.log(2)

A = 600 + B*np.log(1/60)

B,A

#(28.85390081777927, 481.8621880878296)

base_score = A - B*lr.intercept_

print(base_score)

#array([482.0125967])

score_age = woeall["patients_age"] * (-B*lr.coef_[0][0])

print(score_age)

file = r"ScoreData.csv"

with open(file,"w") as fdata:

fdata.write("base_score,{}\n".format(base_score))

for i,col in enumerate(x.columns):

score = woeall[col] * (-B*lr.coef_[0][i])

score.name = "Score"

score.index.name = col

score.to_csv(file,header=True,mode="a")

print(score)

评分卡的输出,详细可看周报上的

目前存在问题

1.分箱的个数的确定?使用最佳分箱个数or自定义分箱区间

2.评分卡制作中部分常数的确定。

![「欧拉定理」[SDOI2008]仪仗队](https://img-blog.csdnimg.cn/img_convert/6e21f1e1efc4be1c3f6f034ba8e38b9e.png)