Berkeley AUTOLAB’s GQCNN Package — GQCNN 1.1.0 documentation (berkeleyautomation.github.io)

Robot Grasping (6): Grasp Point Detection (Grasp Pose Estimation), gqcnn Code Testing and Walkthrough (zxxRobot's blog, CSDN)

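GQ-CNN models are sensitive to the following parameters used when generating the dataset:

- The robot gripper

- The depth camera

- The distance between the camera and the workspace.

As a result, we cannot guarantee the performance of our pre-trained models on other physical setups. For more information about the pre-trained models and the sample inputs for the example policy, see Pre-trained Models and Sample Inputs.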

💚Prerequisites

Python

The gqcnn package has only been tested with Python 3.5, Python 3.6, and Python 3.7.

Ubuntu

The gqcnn package has only been tested with Ubuntu 12.04, Ubuntu 14.04 and Ubuntu 16.04.

Virtualenv

We highly recommend using a Python environment management system, in particular Virtualenv, with the Pip and ROS installations. Note: Several users have encountered problems with dependencies when using Conda.
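The prerequisites above can be checked from Python before installing. The following is a minimal sketch, not part of gqcnn: the helper names `is_tested_python` and `in_virtual_environment` are hypothetical, and the virtual environment test is only a heuristic based on `sys.prefix`.

```python
import sys

# (major, minor) versions the text says gqcnn has been tested with.
TESTED_PYTHON_VERSIONS = {(3, 5), (3, 6), (3, 7)}


def is_tested_python(version_info=None):
    """Return True if the interpreter version is one of the tested ones."""
    info = sys.version_info if version_info is None else version_info
    return (info[0], info[1]) in TESTED_PYTHON_VERSIONS


def in_virtual_environment():
    """Heuristic: a venv/virtualenv changes sys.prefix or sets sys.real_prefix."""
    if hasattr(sys, "real_prefix"):  # set by older virtualenv releases
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("tested Python version:", is_tested_python())
    print("inside a virtual environment:", in_virtual_environment())
```

An untested Python version does not necessarily mean the package will fail, only that the text makes no claim about it.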

🎈Pip Installation

The pip installation is intended for users who are only interested in

1) Training GQ-CNNs or

2) Grasp planning on saved RGBD images, not interfacing with a physical robot.

If you have intentions of using GQ-CNNs for grasp planning on a physical robot, we suggest you install as a ROS package.

1. Clone the repository

$ git clone https://github.com/BerkeleyAutomation/gqcnn.git

2. Run pip installation

Change directories into the gqcnn repository and run the pip installation.

This will install gqcnn in your current virtual environment.

$ pip install .

🎈ROS Installation

Installation as a ROS package is intended for users who wish to use GQ-CNNs to plan grasps on a physical robot.

1. Clone the repository

Clone or download the project from Github.

$ cd <PATH_TO_YOUR_CATKIN_WORKSPACE>/src
$ git clone https://github.com/BerkeleyAutomation/gqcnn.git

2. Build the catkin package

Build the catkin package.

$ cd <PATH_TO_YOUR_CATKIN_WORKSPACE>
$ catkin_make

Then re-source devel/setup.bash for the package to be available through Python.
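After either installation route, one quick way to confirm that the package is visible to the current interpreter is to ask Python whether it can locate it. This is a small sketch using only the standard library; the helper name `is_importable` is hypothetical, and the top-level package name `gqcnn` is assumed from the repository name.

```python
import importlib.util


def is_importable(package_name):
    """Return True if Python can locate the top-level package (without importing it)."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    # "gqcnn" is assumed to be the importable package name.
    if is_importable("gqcnn"):
        print("gqcnn is available in this environment")
    else:
        print("gqcnn not found: activate the virtualenv or re-source devel/setup.bash")
```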

💚Overview

There are two main use cases of the gqcnn package:

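1. Training a GQ-CNN model on an offline dataset, then planning grasps on RGBD images

2. Planning grasps on RGBD images with a pre-trained GQ-CNN model

Prerequisites

First download the example models and datasets: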

$ cd /path/to/your/gqcnn
$ ./scripts/downloads/download_example_data.sh
$ ./scripts/downloads/models/download_models.sh

🎈Running Python Scripts

Scripts are run from the top level of the gqcnn repository:

$ cd /path/to/your/gqcnn
$ python /path/to/script.py

💚Training

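The gqcnn package can be used to train a Dex-Net 4.0 GQ-CNN model on a custom offline Dex-Net dataset. Training from scratch is time-consuming, so the most efficient way to train a new network is to fine-tune the weights of a pre-trained GQ-CNN model. The Dex-Net 4.0 GQ-CNN model has already been trained on millions of images.

To fine-tune a GQ-CNN, run: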

$ python tools/finetune.py <training_dataset_path> <pretrained_network_name> --config_filename <config_filename> --name <model_name>

The args are:

- training_dataset_path: Path to the training dataset.

- pretrained_network_name: Name of pre-trained GQ-CNN.

- config_filename: Name of the config file to use.

- model_name: Name for the model.

The training command differs depending on the gripper type.

To train a GQ-CNN for a parallel jaw gripper on a sample dataset, run the fine-tuning script:

$ python tools/finetune.py data/training/example_pj/ GQCNN-4.0-PJ --config_filename cfg/finetune_example_pj.yaml --name gqcnn_example_pj

To train a GQ-CNN for a suction gripper run:

$ python tools/finetune.py data/training/example_suction/ GQCNN-4.0-SUCTION --config_filename cfg/finetune_example_suction.yaml --name gqcnn_example_suction
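The two fine-tuning commands above differ only in their four arguments, so they can be assembled programmatically. The sketch below only builds the argument lists; `build_finetune_command` and `EXAMPLE_RUNS` are hypothetical names, and the paths and flags are copied from the commands in the text.

```python
import shlex


def build_finetune_command(dataset_path, pretrained_name, config_filename, model_name):
    """Assemble the argument list for tools/finetune.py as shown in the text."""
    return [
        "python", "tools/finetune.py",
        dataset_path, pretrained_name,
        "--config_filename", config_filename,
        "--name", model_name,
    ]


# The two example runs from the text, keyed by a hypothetical short label.
EXAMPLE_RUNS = {
    "pj": ("data/training/example_pj/", "GQCNN-4.0-PJ",
           "cfg/finetune_example_pj.yaml", "gqcnn_example_pj"),
    "suction": ("data/training/example_suction/", "GQCNN-4.0-SUCTION",
                "cfg/finetune_example_suction.yaml", "gqcnn_example_suction"),
}

if __name__ == "__main__":
    for label in sorted(EXAMPLE_RUNS):
        cmd = build_finetune_command(*EXAMPLE_RUNS[label])
        # Print the shell-equivalent command; to launch it from the repository
        # root, pass the list to subprocess.run(cmd, check=True) instead.
        print(" ".join(shlex.quote(arg) for arg in cmd))
```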

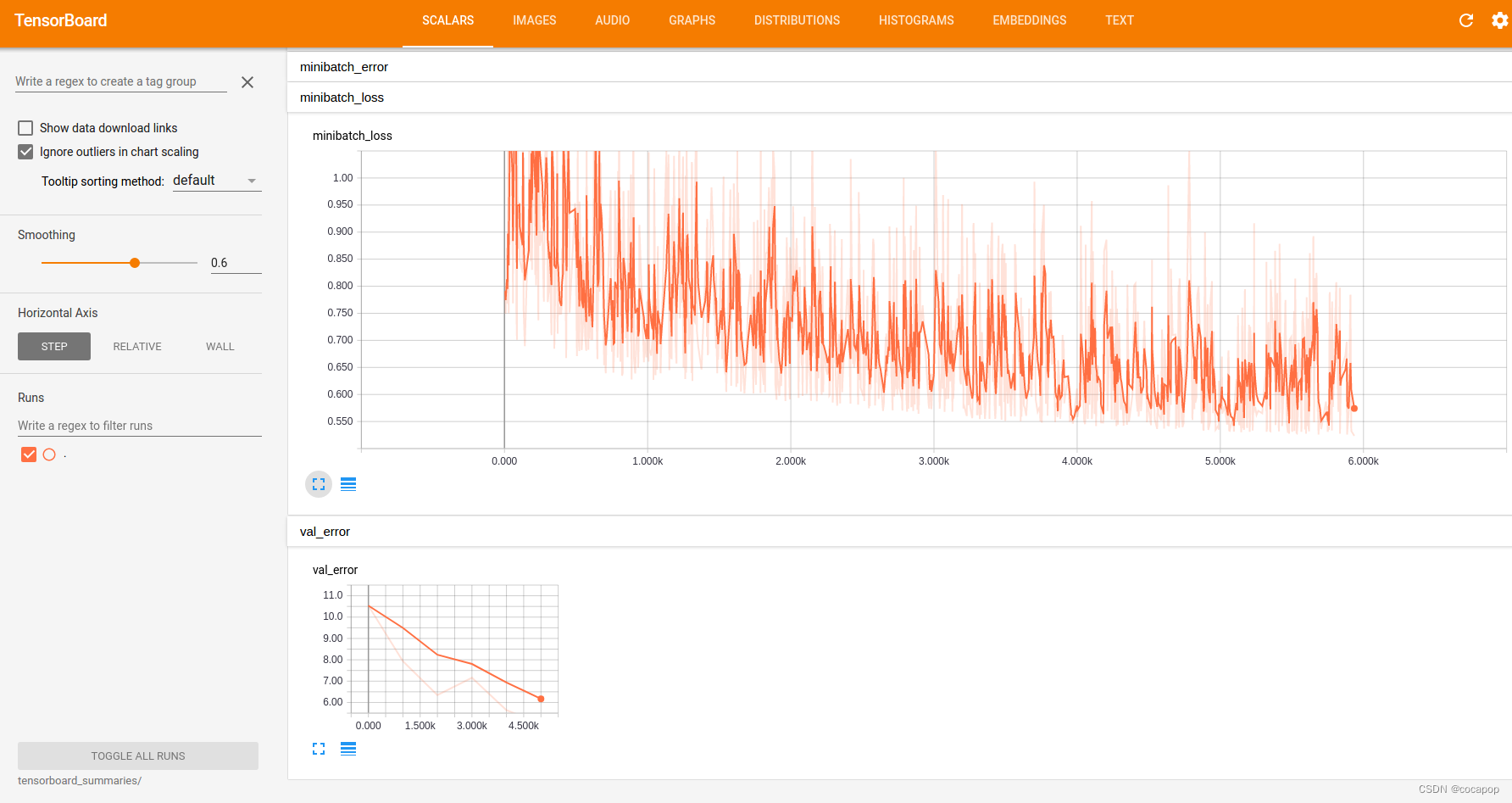

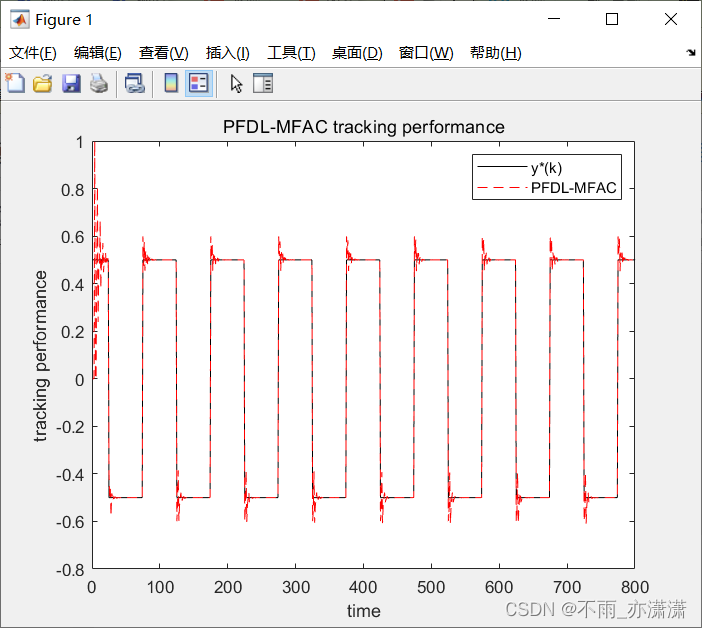

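🎈Visualizing Training

gqcnn includes support for visualizing training progress through Tensorboard. Tensorboard is launched automatically when the training script runs and can be accessed by navigating to localhost:6006 in a web browser.

There you will find something like the following:

It shows useful training statistics such as validation error, minibatch loss, and learning rate.

The Tensorflow summaries are stored in model/<model_name>/tensorboard_summaries/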

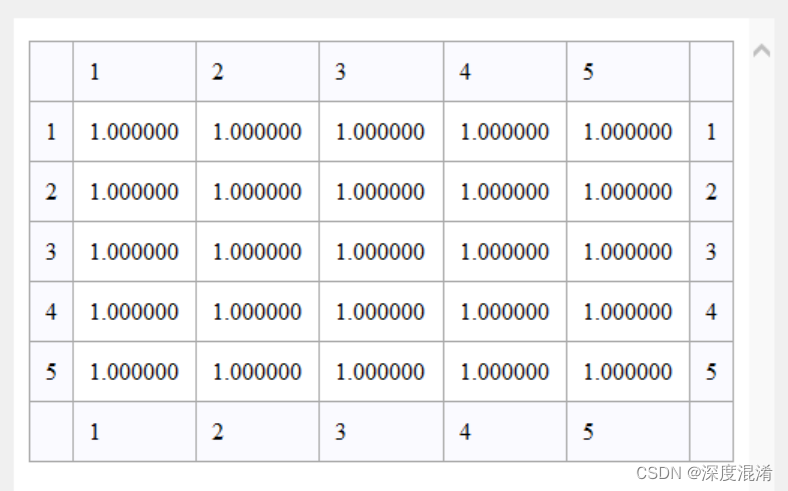

💚Analysis

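Inspecting the training and validation loss and the classification error helps confirm that the network trained successfully. To analyze the performance of a trained GQ-CNN, run: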

$ python tools/analyze_gqcnn_performance.py <model_name>

The args are:

- model_name: Name of a trained model.

To analyze the networks we just trained, run:

$ python tools/analyze_gqcnn_performance.py gqcnn_example_pj
$ python tools/analyze_gqcnn_performance.py gqcnn_example_suction

💚Grasp Planning

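Grasp planning involves searching for the grasp with the highest predicted probability of success given a point cloud. In the gqcnn package this is implemented as policies that map an RGBD image to a 6-DOF grasp pose by maximizing the output of a GQ-CNN. The maximization can be implemented with iterative methods such as the Cross Entropy Method (CEM), which is used in Dex-Net 2.0, Dex-Net 2.1, Dex-Net 3.0, and Dex-Net 4.0, or with faster fully convolutional networks, which are used in the FC-GQ-CNN.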
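To make the idea behind CEM concrete, here is a toy one-dimensional sketch. It is not the gqcnn policy implementation: `toy_quality` is a hypothetical stand-in for the GQ-CNN output, the search variable is a single number rather than a grasp pose, and the sample counts are arbitrary assumptions. Each iteration samples candidates from a Gaussian, keeps the highest-scoring "elite" fraction, and refits the Gaussian to those elites.

```python
import random
import statistics


def cross_entropy_maximize(score, mean, std, n_samples=100, n_elite=10,
                           n_iters=20, seed=0):
    """Toy 1-D CEM: repeatedly refit a Gaussian to the best-scoring samples."""
    rng = random.Random(seed)
    for _ in range(n_iters):
        samples = [rng.gauss(mean, std) for _ in range(n_samples)]
        # Keep the n_elite candidates with the highest score.
        elite = sorted(samples, key=score, reverse=True)[:n_elite]
        mean = statistics.mean(elite)
        # Floor the spread so the Gaussian never collapses to zero width.
        std = max(statistics.pstdev(elite), 1e-6)
    return mean


def toy_quality(x):
    """Hypothetical stand-in for a predicted grasp quality; peaks at x = 0.7."""
    return -(x - 0.7) ** 2


if __name__ == "__main__":
    best = cross_entropy_maximize(toy_quality, mean=0.0, std=1.0)
    print("estimated maximizer:", best)
```

In a real policy the candidates would be grasps sampled from the depth image and the score would come from the network, which is why a fully convolutional evaluation of the whole action space can replace this iterative loop.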

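We provide example policies in examples/. In particular, we provide an example Python policy and an example ROS policy. Note that the ROS policy requires the ROS gqcnn installation (see ROS Installation above). We highly recommend using the Python policy unless you need to plan grasps on a physical robot using ROS.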

Sample Inputs

Sample inputs from our experimental setup are provided with the repo:

- data/examples/clutter/phoxi/dex-net_4.0: Set of example images from a PhotoNeo PhoXi S containing objects used in Dex-Net 4.0 experiments arranged in heaps.

- data/examples/clutter/phoxi/fcgqcnn: Set of example images from a PhotoNeo PhoXi S containing objects in FC-GQ-CNN experiments arranged in heaps.

- data/examples/single_object/primesense/: Set of example images from a Primesense Carmine containing objects used in Dex-Net 2.0 experiments in singulation.

- data/examples/clutter/primesense/: Set of example images from a Primesense Carmine containing objects used in Dex-Net 2.1 experiments arranged in heaps.

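Note that when trying these sample inputs, you must make sure that the GQ-CNN model you are using was trained for the corresponding camera and input type (singulation/clutter).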

Pre-trained Models

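The pre-trained Dex-Net 4.0 parallel jaw and suction models are downloaded automatically when the gqcnn package is installed. If you wish to try models from older results (or our experimental FC-GQ-CNN models), all pre-trained models can be downloaded with: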

$ ./scripts/downloads/models/download_models.sh

The models are:

- GQCNN-2.0: For Dex-Net 2.0, trained on images of objects in singulation with parameters for a Primesense Carmine.

- GQCNN-2.1: For Dex-Net 2.1, a Dex-Net 2.0 model fine-tuned on images of objects in clutter with parameters for a Primesense Carmine.

- GQCNN-3.0: For Dex-Net 3.0, trained on images of objects in clutter with parameters for a Primesense Carmine.

- GQCNN-4.0-PJ: For Dex-Net 4.0, trained on images of objects in clutter with parameters for a PhotoNeo PhoXi S.

- GQCNN-4.0-SUCTION: For Dex-Net 4.0, trained on images of objects in clutter with parameters for a PhotoNeo PhoXi S.

- FC-GQCNN-4.0-PJ: For FC-GQ-CNN, trained on images of objects in clutter with parameters for a PhotoNeo PhoXi S.

- FC-GQCNN-4.0-SUCTION: For FC-GQ-CNN, trained on images of objects in clutter with parameters for a PhotoNeo PhoXi S.

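Note that GQ-CNN models are sensitive to the parameters used during dataset generation, specifically: 1) the gripper geometry (all of our pre-trained models use an ABB YuMi parallel jaw gripper); 2) the camera intrinsics (all of our pre-trained models use either a Primesense Carmine or a PhotoNeo PhoXi S, see above for which); and 3) the distance between the camera and the workspace during rendering (50-70 cm for all of our pre-trained models). As a result, we cannot guarantee the performance of our pre-trained models on other physical setups. If you have a specific use case in mind, please reach out to us. We are actively researching how to generate more robust datasets that can generalize across robots, cameras, and viewpoints!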
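Because each pre-trained model is tied to one camera and one input type, a small lookup table can guard against pairing a model with the wrong sample inputs. The table below is transcribed from the model list above; the names `PRETRAINED_MODELS` and `models_for` are hypothetical and this helper is not part of gqcnn.

```python
# model name -> (camera, input type), as described in the model list.
PRETRAINED_MODELS = {
    "GQCNN-2.0": ("Primesense Carmine", "singulation"),
    "GQCNN-2.1": ("Primesense Carmine", "clutter"),
    "GQCNN-3.0": ("Primesense Carmine", "clutter"),
    "GQCNN-4.0-PJ": ("PhotoNeo PhoXi S", "clutter"),
    "GQCNN-4.0-SUCTION": ("PhotoNeo PhoXi S", "clutter"),
    "FC-GQCNN-4.0-PJ": ("PhotoNeo PhoXi S", "clutter"),
    "FC-GQCNN-4.0-SUCTION": ("PhotoNeo PhoXi S", "clutter"),
}


def models_for(camera, input_type):
    """Return the sorted names of models trained for this camera and input type."""
    return sorted(
        name for name, (cam, kind) in PRETRAINED_MODELS.items()
        if cam == camera and kind == input_type
    )


if __name__ == "__main__":
    print(models_for("Primesense Carmine", "singulation"))
    print(models_for("PhotoNeo PhoXi S", "clutter"))
```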

Python Policy

The example Python policy can be queried on saved images using:

$ python examples/policy.py <model_name> --depth_image <depth_image_filename> --segmask <segmask_filename> --camera_intr <camera_intr_filename>

The args are:

- model_name: Name of the GQ-CNN model to use.

- depth_image_filename: Path to a depth image (float array in .npy format).

- segmask_filename: Path to an object segmentation mask (binary image in .png format).

- camera_intr_filename: Path to a camera intrinsics file (.intr file generated with BerkeleyAutomation’s perception package).

To evaluate the pre-trained Dex-Net 4.0 parallel jaw network on sample images of objects in heaps run:

$ python examples/policy.py GQCNN-4.0-PJ --depth_image data/examples/clutter/phoxi/dex-net_4.0/depth_0.npy --segmask data/examples/clutter/phoxi/dex-net_4.0/segmask_0.png --camera_intr data/calib/phoxi/phoxi.intr

To evaluate the pre-trained Dex-Net 4.0 suction network on sample images of objects in heaps run:

$ python examples/policy.py GQCNN-4.0-SUCTION --depth_image data/examples/clutter/phoxi/dex-net_4.0/depth_0.npy --segmask data/examples/clutter/phoxi/dex-net_4.0/segmask_0.png --camera_intr data/calib/phoxi/phoxi.intr
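Since the three input files are easy to swap by accident, a cheap pre-flight check on file extensions can catch mistakes before the policy is launched. This is a sketch under the assumption that the extensions listed in the argument descriptions (.npy, .png, .intr) are the only ones in use; `check_policy_inputs` is a hypothetical helper, not something examples/policy.py provides.

```python
import os

# (flag name, expected extension), taken from the argument descriptions.
EXPECTED_EXTENSIONS = [
    ("depth_image", ".npy"),
    ("segmask", ".png"),
    ("camera_intr", ".intr"),
]


def check_policy_inputs(depth_image, segmask, camera_intr):
    """Return a list of problem messages; an empty list means the extensions match."""
    given = {"depth_image": depth_image, "segmask": segmask,
             "camera_intr": camera_intr}
    problems = []
    for flag, expected in EXPECTED_EXTENSIONS:
        ext = os.path.splitext(given[flag])[1].lower()
        if ext != expected:
            problems.append("--{} should end in {}, got {!r}".format(
                flag, expected, given[flag]))
    return problems


if __name__ == "__main__":
    print(check_policy_inputs(
        "data/examples/clutter/phoxi/dex-net_4.0/depth_0.npy",
        "data/examples/clutter/phoxi/dex-net_4.0/segmask_0.png",
        "data/calib/phoxi/phoxi.intr",
    ))
```

The check only looks at file names; it does not open the files or verify that the camera matches the model.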

FC-GQ-CNN Policy

Our most recent research result, the FC-GQ-CNN, combines novel fully convolutional network architectures with our prior work on GQ-CNNs to increase policy rate and reliability. Instead of relying on the Cross Entropy Method (CEM) to iteratively search over the policy action space for the best grasp, the FC-GQ-CNN densely and efficiently evaluates the entire action space in parallel. It is thus able to consider 5000x more grasps in 0.625s, resulting in an MPPH (Mean Picks Per Hour) of 296, compared to the prior 250 MPPH of Dex-Net 4.0.

FC-GQ-CNN architecture.
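To put the two throughput figures quoted above in perspective, the short calculation below derives the relative gain and the average time per pick. Only the values 296 and 250 MPPH come from the text; the function names are hypothetical and the derived quantities are plain arithmetic, not reported results.

```python
FC_GQCNN_MPPH = 296   # Mean Picks Per Hour quoted for the FC-GQ-CNN
DEX_NET_4_MPPH = 250  # Mean Picks Per Hour quoted for Dex-Net 4.0


def relative_gain_percent(new, old):
    """Percentage increase of `new` over `old`."""
    return 100.0 * (new - old) / old


def seconds_per_pick(mpph):
    """Average wall-clock seconds per pick implied by a picks-per-hour rate."""
    return 3600.0 / mpph


if __name__ == "__main__":
    print("relative gain (%):", relative_gain_percent(FC_GQCNN_MPPH, DEX_NET_4_MPPH))
    print("s/pick, FC-GQ-CNN:", seconds_per_pick(FC_GQCNN_MPPH))
    print("s/pick, Dex-Net 4.0:", seconds_per_pick(DEX_NET_4_MPPH))
```

The gain works out to 18.4%, or roughly 12.2 s per pick versus 14.4 s.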

You can download the pre-trained FC-GQ-CNN parallel jaw and suction models along with the other pre-trained models:

$ ./scripts/downloads/models/download_models.sh

Then run the Python policy with the --fully_conv flag.

To evaluate the pre-trained FC-GQ-CNN parallel jaw network on sample images of objects in heaps run:

$ python examples/policy.py FC-GQCNN-4.0-PJ --fully_conv --depth_image data/examples/clutter/phoxi/fcgqcnn/depth_0.npy --segmask data/examples/clutter/phoxi/fcgqcnn/segmask_0.png --camera_intr data/calib/phoxi/phoxi.intr

To evaluate the pre-trained FC-GQ-CNN suction network on sample images of objects in heaps run:

$ python examples/policy.py FC-GQCNN-4.0-SUCTION --fully_conv --depth_image data/examples/clutter/phoxi/fcgqcnn/depth_0.npy --segmask data/examples/clutter/phoxi/fcgqcnn/segmask_0.png --camera_intr data/calib/phoxi/phoxi.intr

💚Replicating Results

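There are two ways to replicate results:

1. Use a pre-trained model: download a pre-trained GQ-CNN model and run an example policy.

2. Train from scratch: download the raw dataset, train a GQ-CNN model, and run an example policy using the model you just trained.

We encourage method 1, because method 2 is computationally expensive and the raw datasets are very large.

Method 1: Using a Pre-trained Model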

First download the pre-trained models.

$ ./scripts/downloads/models/download_models.sh

Dex-Net 2.0

Evaluate the pre-trained GQ-CNN model.

$ ./scripts/policies/run_all_dex-net_2.0_examples.sh

Dex-Net 2.1

Evaluate the pre-trained GQ-CNN model.

$ ./scripts/policies/run_all_dex-net_2.1_examples.sh

Dex-Net 3.0

Evaluate the pre-trained GQ-CNN model.

$ ./scripts/policies/run_all_dex-net_3.0_examples.sh

Dex-Net 4.0

To evaluate the pre-trained parallel jaw GQ-CNN model:

$ ./scripts/policies/run_all_dex-net_4.0_pj_examples.sh

To evaluate the pre-trained suction GQ-CNN model:

$ ./scripts/policies/run_all_dex-net_4.0_suction_examples.sh

FC-GQ-CNN

To evaluate the pre-trained parallel jaw FC-GQ-CNN model:

$ ./scripts/policies/run_all_dex-net_4.0_fc_pj_examples.sh

To evaluate the pre-trained suction FC-GQ-CNN model:

$ ./scripts/policies/run_all_dex-net_4.0_fc_suction_examples.sh

Method 2: Training from Scratch

Dex-Net 2.0

First download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_2.0.sh

Then train a GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_2.0.sh

Finally, evaluate the trained GQ-CNN.

$ ./scripts/policies/run_all_dex-net_2.0_examples.sh

Dex-Net 2.1

First download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_2.1.sh

Then train a GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_2.1.sh

Finally, evaluate the trained GQ-CNN.

$ ./scripts/policies/run_all_dex-net_2.1_examples.sh

Dex-Net 3.0

First download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_3.0.sh

Then train a GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_3.0.sh

Finally, evaluate the trained GQ-CNN.

$ ./scripts/policies/run_all_dex-net_3.0_examples.sh

Dex-Net 4.0

To replicate the Dex-Net 4.0 parallel jaw results, first download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_4.0_pj.sh

Then train a GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_4.0_pj.sh

Finally, evaluate the trained GQ-CNN.

$ ./scripts/policies/run_all_dex-net_4.0_pj_examples.sh

To replicate the Dex-Net 4.0 suction results, first download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_4.0_suction.sh

Then train a GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_4.0_suction.sh

Finally, evaluate the trained GQ-CNN.

$ ./scripts/policies/run_all_dex-net_4.0_suction_examples.sh

FC-GQ-CNN

To replicate the FC-GQ-CNN parallel jaw results, first download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_4.0_fc_pj.sh

Then train a FC-GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_4.0_fc_pj.sh

Finally, evaluate the trained FC-GQ-CNN.

$ ./scripts/policies/run_all_dex-net_4.0_fc_pj_examples.sh

To replicate the FC-GQ-CNN suction results, first download the appropriate dataset.

$ ./scripts/downloads/datasets/download_dex-net_4.0_fc_suction.sh

Then train a FC-GQ-CNN from scratch.

$ ./scripts/training/train_dex-net_4.0_fc_suction.sh

Finally, evaluate the trained FC-GQ-CNN.

$ ./scripts/policies/run_all_dex-net_4.0_fc_suction_examples.sh
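All of the from-scratch recipes above follow the same download, train, evaluate pattern, with only a variant suffix changing in the script names. The sketch below generates the three script paths for a given variant; the naming pattern is inferred from the commands listed in this section, and `VARIANTS` and `replication_scripts` are hypothetical names.

```python
# Variant suffixes that appear in the script names listed in this section.
VARIANTS = ["2.0", "2.1", "3.0", "4.0_pj", "4.0_suction",
            "4.0_fc_pj", "4.0_fc_suction"]


def replication_scripts(variant):
    """Return the download, training, and evaluation script paths for a variant."""
    if variant not in VARIANTS:
        raise ValueError("unknown variant: {!r}".format(variant))
    return [
        "./scripts/downloads/datasets/download_dex-net_{}.sh".format(variant),
        "./scripts/training/train_dex-net_{}.sh".format(variant),
        "./scripts/policies/run_all_dex-net_{}_examples.sh".format(variant),
    ]


if __name__ == "__main__":
    for variant in VARIANTS:
        print(variant, "->", " && ".join(replication_scripts(variant)))
```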

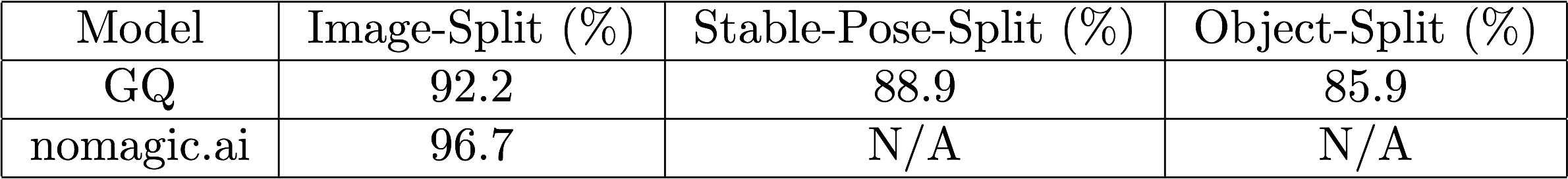

💚Benchmarks

Dex-Net 2.0

Below are the highest classification accuracies achieved on the Dex-Net 2.0 dataset on a randomized 80-20 train-validation split using various splitting rules:

The current leader is a ConvNet submitted by nomagic.ai.

GQ is our best GQ-CNN for Dex-Net 2.0.
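For readers unfamiliar with the evaluation protocol, a randomized 80-20 train-validation split can be sketched as follows. This is a generic illustration, not the benchmark's actual splitting code: `train_val_split` is a hypothetical helper, and the assumption is that a "splitting rule" decides what the items are (for example image indices or object identifiers) before the same shuffle-and-cut step is applied.

```python
import random


def train_val_split(items, train_fraction=0.8, seed=0):
    """Shuffle the items reproducibly and cut them into train and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    # Splitting 100 hypothetical image indices.
    train, val = train_val_split(range(100))
    print(len(train), "training items,", len(val), "validation items")
```

Splitting over object identifiers instead of image indices keeps every image of a given object on one side of the split, which is a stricter test of generalization.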

API Documentation

GQ-CNN — GQCNN 1.1.0 documentation
