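Picking up where the previous post, [Deploying RuoYi Microservices the Traditional Way], left off, we now migrate everything to k8s.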

Environment

Hosts: 31 master, 32 node1, 33 node2

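Migration approach

Delivery approach:

It is essentially the same as delivering to Linux hosts. The only difference is that every microservice is packaged as a Docker image.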

1. Data layer: MySQL, Redis;

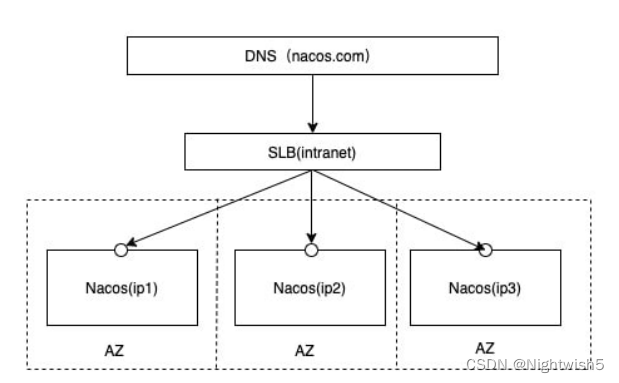

2. Service-governance layer: Nacos, Sentinel, SkyWalking...

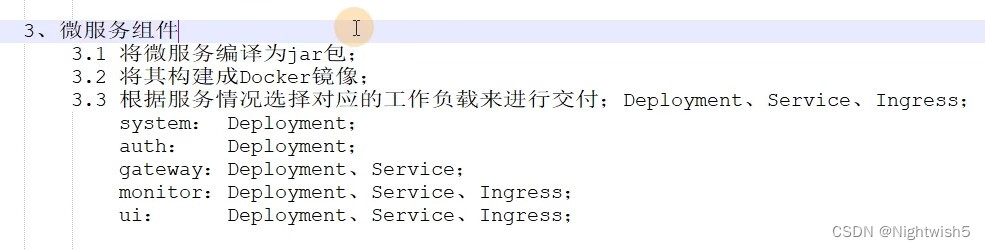

3. Microservice components

3.1 Compile each microservice into a jar;

3.2 Build the jar into a Docker image;

3.3 Deliver each service with the workload types it needs (Deployment, Service, Ingress):

system: Deployment;

auth: Deployment;

gateway: Deployment, Service;

monitor: Deployment, Service, Ingress;

ui: Deployment, Service, Ingress (nginx/haproxy)

01-mysql (Service, StatefulSet)

```shell
kubectl create ns dev
```
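If you prefer to keep everything as manifests, you can also create the namespace declaratively. This minimal Namespace is equivalent to the command above and is applied with `kubectl apply -f`:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: dev   # every RuoYi component in this post lives in the dev namespace
```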

01-mysql-ruoyi-sts-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-ruoyi-svc
  namespace: dev
spec:
  clusterIP: None
  selector:
    app: mysql
    role: ruoyi
  ports:
  - port: 3306
    targetPort: 3306
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-ruoyi
  namespace: dev
spec:
  serviceName: "mysql-ruoyi-svc"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
      role: ruoyi
  template:
    metadata:
      labels:
        app: mysql
        role: ruoyi
    spec:
      containers:
      - name: db
        image: mysql:5.7
        args:
        - "--character-set-server=utf8"
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: oldxu
        - name: MYSQL_DATABASE
          value: ry-cloud
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql/
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteMany"]
      storageClassName: "nfs"
      resources:
        requests:
          storage: 6Gi
```

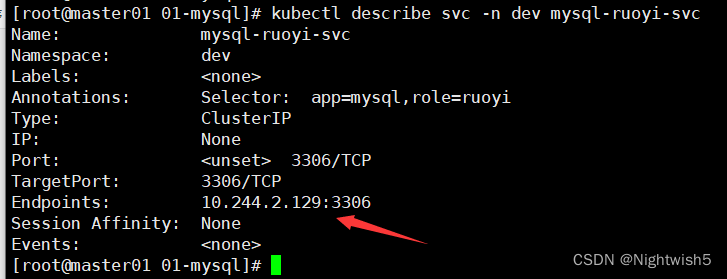

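Resolve the IP of the MySQL Pod. A StatefulSet Pod behind a headless Service gets a stable DNS name of the form:

${statefulSetName}-${ordinal}.${headlessServiceName}.${namespace}.svc.cluster.local

```shell
[root@master01 01-mysql]# dig @10.96.0.10 mysql-ruoyi-0.mysql-ruoyi-svc.dev.svc.cluster.local +short
10.244.2.129
```

Connect to MySQL and import the SQL file. The Pod IP changes whenever the Pod is recreated (a later check in this post returns a different IP), so re-resolve it before connecting.

```shell
yum install -y mysql
mysql -uroot -poldxu -h10.244.2.129
mysql -uroot -poldxu -h10.244.2.129 -B ry-cloud < ry_20220814.sql
```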

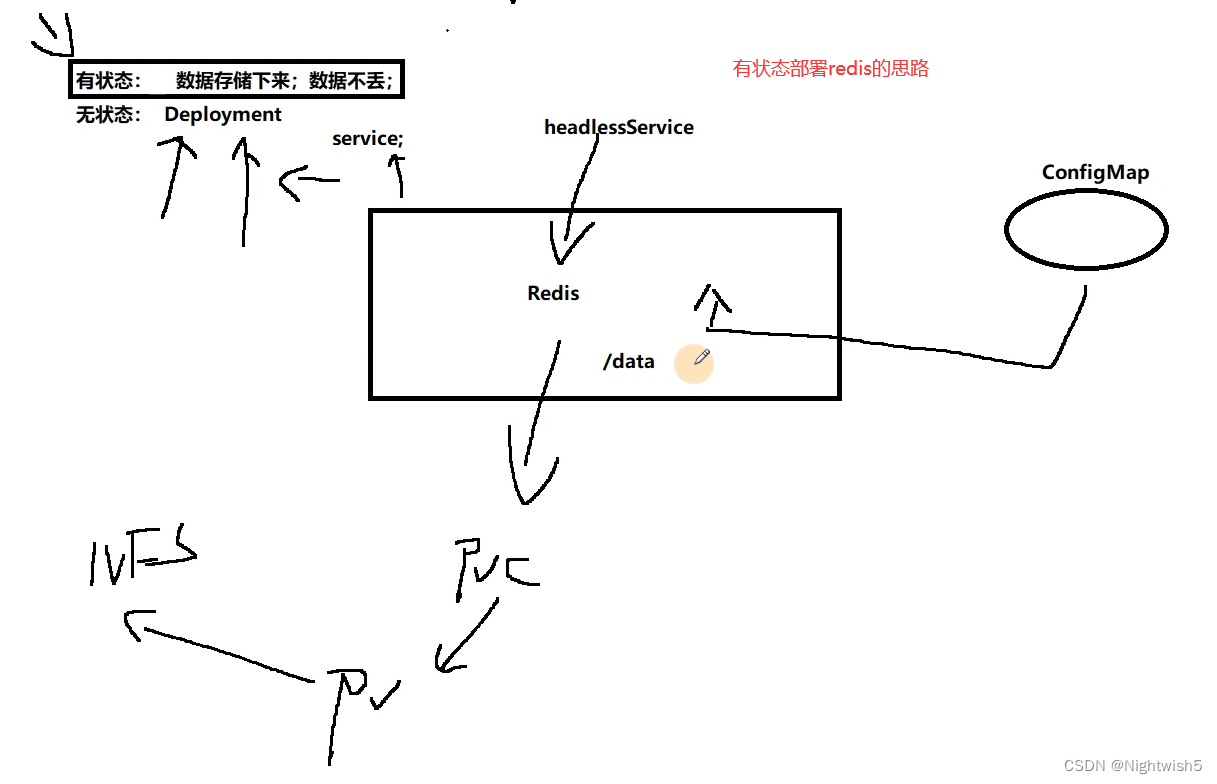

02-redis/

(Redis is deployed here as a stateless Deployment because it only serves as a cache. Depending on your needs, you can instead run it as a stateful service, following the MySQL deployment above.)

01-redis-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-server
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - name: cache
        image: redis
        ports:
        - containerPort: 6379
```

02-redis-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: redis-svc
  namespace: dev
spec:
  selector:
    app: redis
  ports:
  - port: 6379
    targetPort: 6379
```

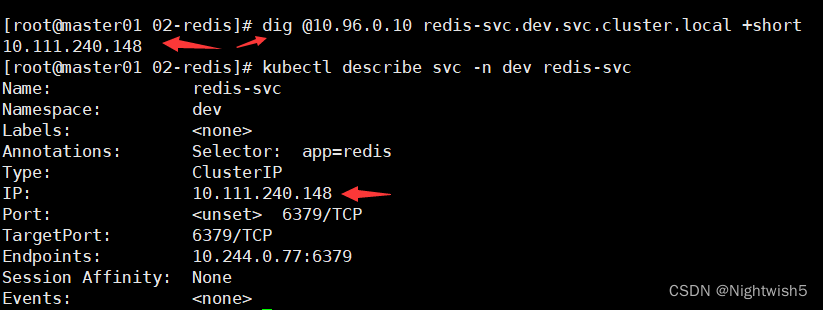
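For the stateful option mentioned above, here is a minimal sketch that mirrors the MySQL StatefulSet. It enables AOF persistence and keeps `/data` (the data directory of the official redis image) on an `nfs` volume. It is meant to replace `redis-server`, not to run next to it. Because the Pods still carry `app: redis`, the existing `redis-svc` keeps selecting them and the application configuration does not change. The names `redis-headless` and `redis-sts` and the 2Gi size are assumptions.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: redis-headless          # hypothetical headless Service for the StatefulSet
  namespace: dev
spec:
  clusterIP: None
  selector:
    app: redis
  ports:
  - port: 6379
    targetPort: 6379
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-sts               # hypothetical name
  namespace: dev
spec:
  serviceName: "redis-headless"
  replicas: 1
  selector:
    matchLabels:
      app: redis                # same label, so redis-svc still routes here
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - name: cache
        image: redis
        args: ["--appendonly", "yes"]   # the image entrypoint prepends redis-server to flag-style args
        ports:
        - containerPort: 6379
        volumeMounts:
        - name: data
          mountPath: /data
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: "nfs"
      resources:
        requests:
          storage: 2Gi          # assumed size
```

Delete the Deployment (`kubectl delete deploy redis-server -n dev`) before applying this. Otherwise `redis-svc` load-balances across two independent Redis instances.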

Verify Redis

```shell
[root@master01 02-redis]# dig @10.96.0.10 redis-svc.dev.svc.cluster.local +short
10.111.240.148

kubectl describe svc -n dev redis-svc

# install redis-cli on the host
sudo yum install -y epel-release
sudo yum install -y redis

[root@master01 02-redis]# redis-cli -h 10.111.240.148
```

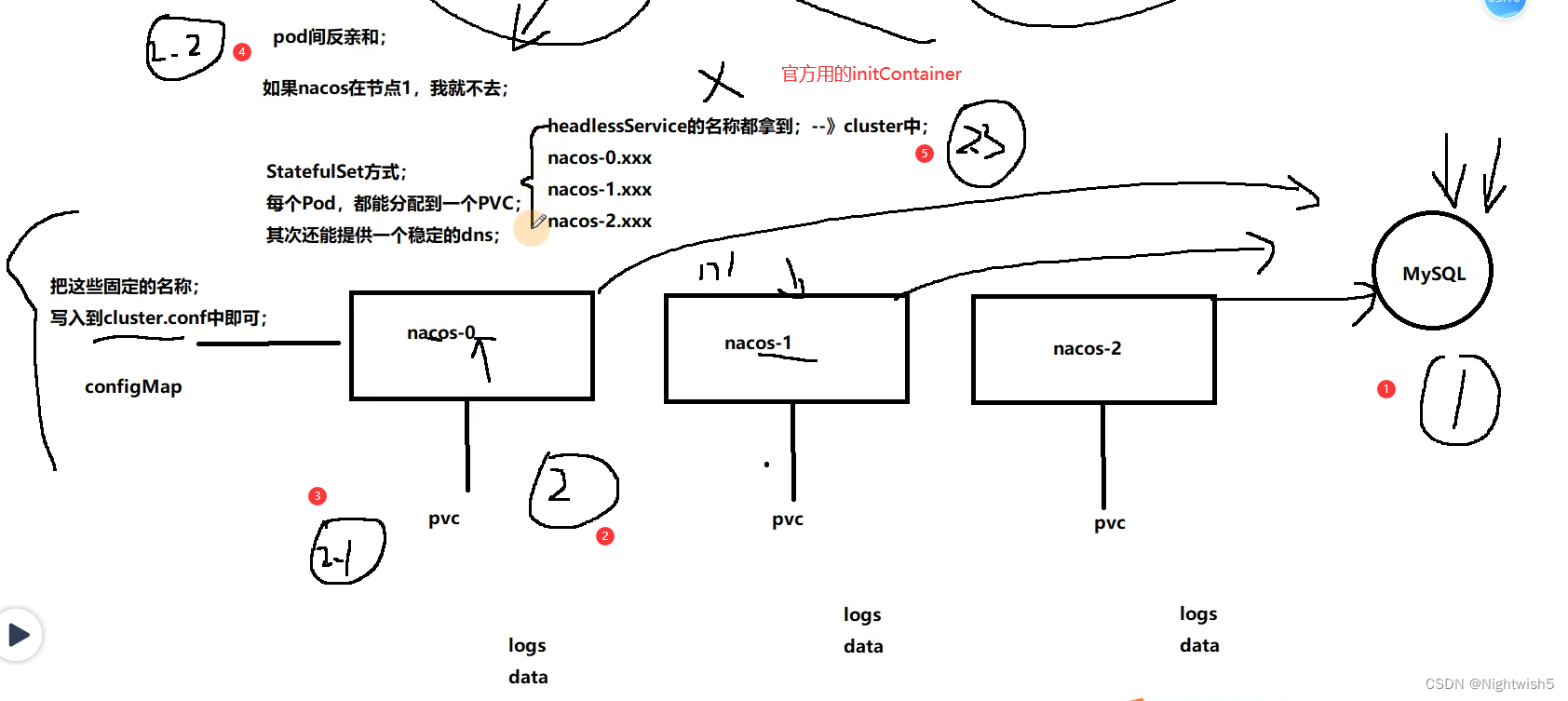

03-nacos/

Official Nacos-on-Kubernetes reference: https://github.com/nacos-group/nacos-k8s/blob/master/README-CN.md

Migration approach:

First install the MySQL database that Nacos uses.

01-mysql-nacos-sts-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-nacos-svc
  namespace: dev
spec:
  clusterIP: None
  selector:
    app: mysql
    role: nacos
  ports:
  - port: 3306
    targetPort: 3306
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-nacos-sts
  namespace: dev
spec:
  serviceName: "mysql-nacos-svc"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
      role: nacos
  template:
    metadata:
      labels:
        app: mysql
        role: nacos
    spec:
      containers:
      - name: db
        image: mysql:5.7
        args:
        - "--character-set-server=utf8"
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: oldxu
        - name: MYSQL_DATABASE
          value: ry-config
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql/
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteMany"]
      storageClassName: "nfs"
      resources:
        requests:
          storage: 6Gi
```

```shell
[root@master01 03-nacos]# dig @10.96.0.10 mysql-nacos-sts-0.mysql-nacos-svc.dev.svc.cluster.local +short
10.244.2.130
```

Import the config SQL file:

```shell
mysql -uroot -poldxu -h10.244.2.130 -B ry-config < ry_config_20220510.sql
```

02-nacos-configmap.yaml

A ConfigMap holding the database host, name, port, username and password:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nacos-cm
  namespace: dev
data:
  mysql.host: "mysql-nacos-sts-0.mysql-nacos-svc.dev.svc.cluster.local"
  mysql.db.name: "ry-config"
  mysql.port: "3306"
  mysql.user: "root"
  mysql.password: "oldxu"
```
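A ConfigMap is stored and displayed in plain text, so the password could live in a Secret instead. A minimal sketch, where the Secret name `nacos-db-secret` is an assumption:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: nacos-db-secret   # hypothetical name
  namespace: dev
type: Opaque
stringData:               # plain-text input; the API server stores it base64-encoded under data
  mysql.password: "oldxu"
```

The `MYSQL_SERVICE_PASSWORD` entry in the Nacos StatefulSet would then use `secretKeyRef` (with `name: nacos-db-secret` and `key: mysql.password`) instead of `configMapKeyRef`, and `mysql.password` can be dropped from `nacos-cm`.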

03-nacos-sts-deploy-svc.yaml

You can pull the images in advance, since they are over 1 GB:

```shell
docker pull nacos/nacos-peer-finder-plugin:1.1
docker pull nacos/nacos-server:v2.1.1
```

Key points of this manifest:

- PVs are provisioned automatically from the PVC templates.
- Pod anti-affinity keeps one Pod per node.
- The initContainer installs the peer-finder plugin, which discovers the IPs of the Nacos cluster members.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nacos-svc
  namespace: dev
spec:
  clusterIP: None
  selector:
    app: nacos
  ports:
  - name: server
    port: 8848
    targetPort: 8848
  - name: client-rpc
    port: 9848
    targetPort: 9848
  - name: raft-rpc
    port: 9849
    targetPort: 9849
  - name: old-raft-rpc
    port: 7848
    targetPort: 7848
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
  namespace: dev
spec:
  serviceName: "nacos-svc"
  replicas: 3
  selector:
    matchLabels:
      app: nacos
  template:
    metadata:
      labels:
        app: nacos
    spec:
      affinity:            # keep the Nacos Pods on different nodes
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values: ["nacos"]
            topologyKey: "kubernetes.io/hostname"
      initContainers:
      - name: peer-finder-plugin-install
        image: nacos/nacos-peer-finder-plugin:1.1
        imagePullPolicy: Always
        volumeMounts:
        - name: datan
          mountPath: /home/nacos/plugins/peer-finder
          subPath: peer-finder
      containers:
      - name: nacos
        image: nacos/nacos-server:v2.1.1
        resources:
          requests:
            memory: "800Mi"
            cpu: "500m"
        ports:
        - name: client-port
          containerPort: 8848
        - name: client-rpc
          containerPort: 9848
        - name: raft-rpc
          containerPort: 9849
        - name: old-raft-rpc
          containerPort: 7848
        env:
        - name: MODE
          value: "cluster"
        - name: NACOS_VERSION
          value: "2.1.1"
        - name: NACOS_REPLICAS
          value: "3"
        - name: SERVICE_NAME
          value: "nacos-svc"
        - name: DOMAIN_NAME
          value: "cluster.local"
        - name: NACOS_SERVER_PORT
          value: "8848"
        - name: NACOS_APPLICATION_PORT
          value: "8848"
        - name: PREFER_HOST_MODE
          value: "hostname"
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: MYSQL_SERVICE_HOST
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.host
        - name: MYSQL_SERVICE_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.db.name
        - name: MYSQL_SERVICE_PORT
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.port
        - name: MYSQL_SERVICE_USER
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.user
        - name: MYSQL_SERVICE_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.password
        volumeMounts:
        - name: datan
          mountPath: /home/nacos/plugins/peer-finder
          subPath: peer-finder
        - name: datan
          mountPath: /home/nacos/data
          subPath: data
        - name: datan
          mountPath: /home/nacos/logs
          subPath: logs
  volumeClaimTemplates:
  - metadata:
      name: datan
    spec:
      storageClassName: "nfs"
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 20Gi
```

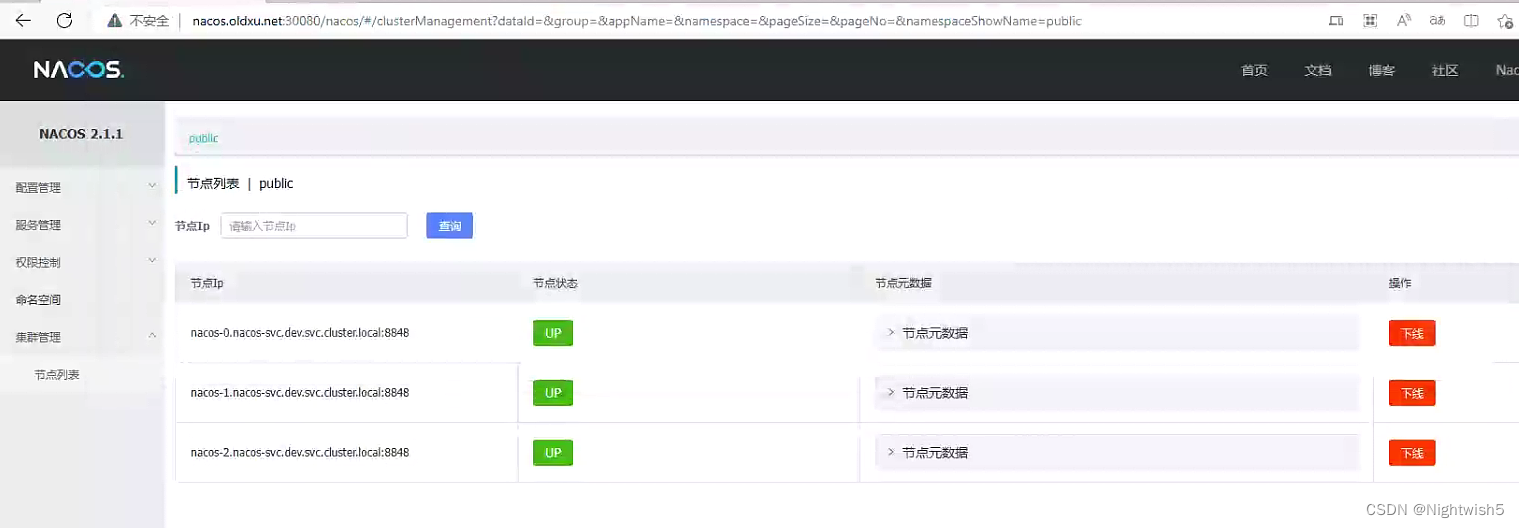

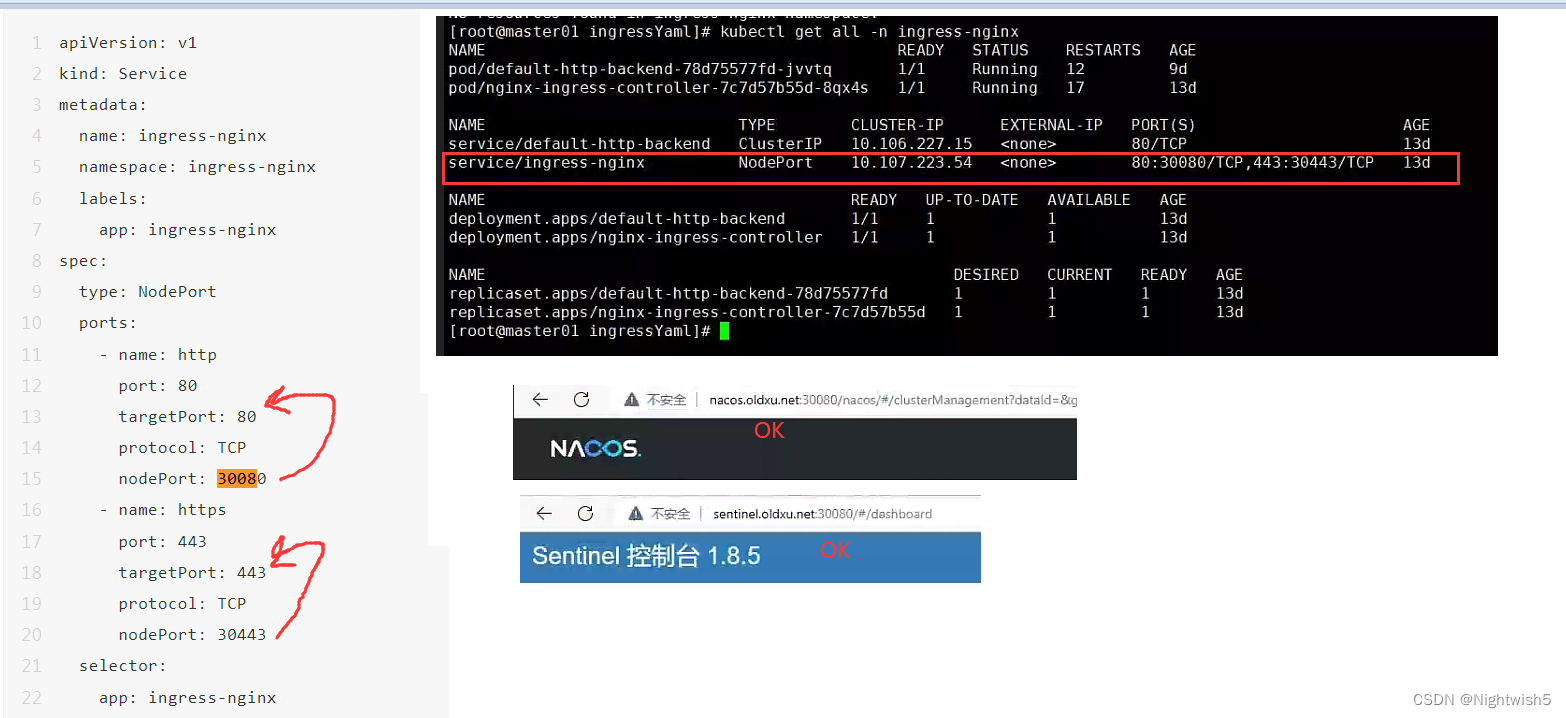

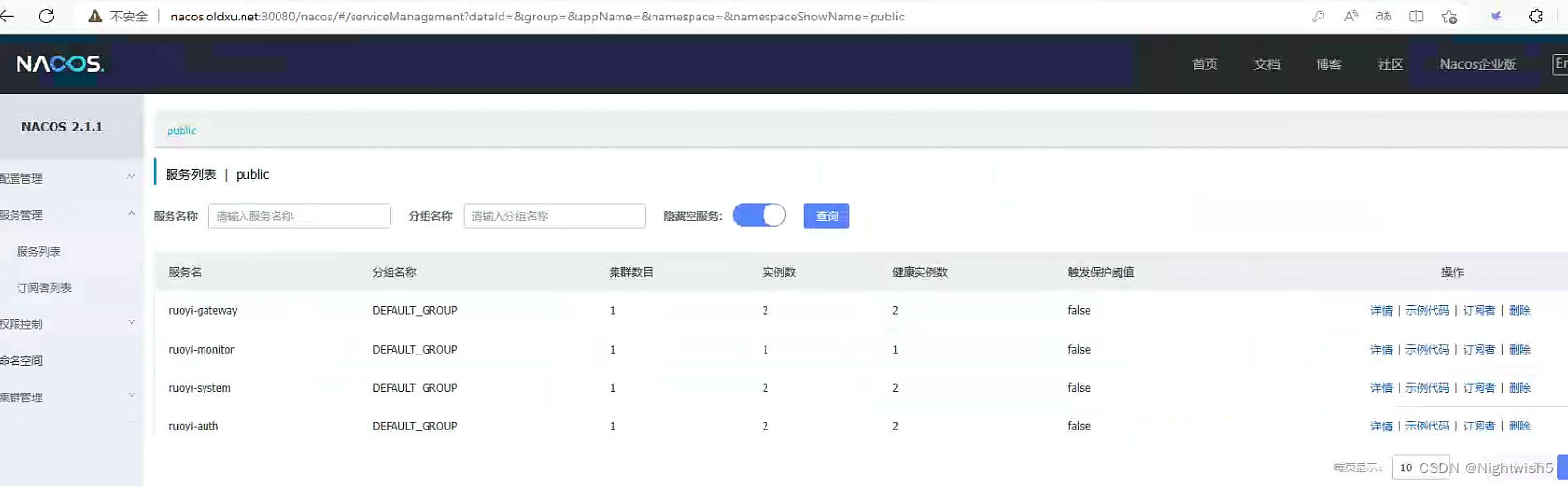

Verify access:

http://nacos.oldxu.net:30080/nacos/

04-nacos-ingress.yaml

The commented-out (#) lines show the syntax for newer Kubernetes versions (networking.k8s.io/v1).

```yaml
#apiVersion: networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nacos-ingress
  namespace: dev
spec:
  ingressClassName: "nginx"
  rules:
  - host: nacos.oldxu.net
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          serviceName: nacos-svc
          servicePort: 8848
          #service:
          #  name: nacos-svc
          #  port:
          #    name: server
```
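For reference, assembling the commented lines gives the complete networking.k8s.io/v1 manifest. That API is available from Kubernetes 1.19, and the extensions/v1beta1 Ingress API was removed in 1.22, so clusters on 1.22 or later need this form:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nacos-ingress
  namespace: dev
spec:
  ingressClassName: "nginx"
  rules:
  - host: nacos.oldxu.net
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nacos-svc
            port:
              name: server   # refers to the named port "server" (8848) of nacos-svc
```

The same conversion (`serviceName`/`servicePort` become `service.name`/`service.port`) applies to the Sentinel, SkyWalking, monitor and ui Ingresses.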

04-sentinel/

Sentinel migration approach

1. Write the Dockerfile and entrypoint.sh;

2. Push the image to the Harbor registry;

3. Run the image with a Deployment;

4. Expose it externally with a Service and an Ingress.

Write the Sentinel Dockerfile

```shell
# download the dashboard jar
wget https://linux.oldxu.net/sentinel-dashboard-1.8.5.jar

docker login harbor.oldxu.net
```

Dockerfile and entrypoint.sh

Dockerfile

```dockerfile
FROM openjdk:8-jre-alpine
COPY ./sentinel-dashboard-1.8.5.jar /sentinel-dashboard.jar
COPY ./entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh
EXPOSE 8718 8719
CMD ["/bin/sh","-c","/entrypoint.sh"]
```

entrypoint.sh

```shell
#!/bin/sh
JAVA_OPTS="-Dserver.port=8718 \
-Dcsp.sentinel.dashboard.server=localhost:8718 \
-Dproject.name=sentinel-dashboard \
-Dcsp.sentinel.api.port=8719 \
-Xms${XMS_OPTS:-150m} \
-Xmx${XMX_OPTS:-150m}"

# exec replaces the script's shell with the JVM
exec java ${JAVA_OPTS} -jar /sentinel-dashboard.jar
```

Build and push the image:

```shell
[root@master01 04-sentinel]# ls
Dockerfile  entrypoint.sh  sentinel-dashboard-1.8.5.jar

docker build -t harbor.oldxu.net/springcloud/sentinel-dashboard:v1.0 .
docker push harbor.oldxu.net/springcloud/sentinel-dashboard:v1.0
```

01-sentinel-deploy.yaml

First create the Secret that lets the cluster pull images from Harbor:

```shell
kubectl create secret docker-registry harbor-admin \
  --docker-username=admin \
  --docker-password=Harbor12345 \
  --docker-server=harbor.oldxu.net \
  -n dev
```
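You can also keep the same Secret as a manifest. Below is a sketch of the equivalent object; running the command above with `--dry-run=client -o yaml` prints a comparable one. The `auth` field is the base64 encoding of `admin:Harbor12345`.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: harbor-admin
  namespace: dev
type: kubernetes.io/dockerconfigjson
stringData:
  # auth = base64("admin:Harbor12345")
  .dockerconfigjson: |
    {"auths":{"harbor.oldxu.net":{"username":"admin","password":"Harbor12345","auth":"YWRtaW46SGFyYm9yMTIzNDU="}}}
```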

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sentinel-server
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sentinel
  template:
    metadata:
      labels:
        app: sentinel
    spec:
      imagePullSecrets:
      - name: harbor-admin
      containers:
      - name: sentinel
        image: harbor.oldxu.net/springcloud/sentinel-dashboard:v1.0   # the tag built and pushed above
        ports:
        - name: server
          containerPort: 8718
        - name: api
          containerPort: 8719
```

02-sentinel-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sentinel-svc
  namespace: dev
spec:
  selector:
    app: sentinel
  ports:
  - name: server
    port: 8718
    targetPort: 8718
  - name: api
    port: 8719
    targetPort: 8719
```

03-sentinel-ingress.yaml

The commented-out (#) lines show the syntax for newer Kubernetes versions.

```yaml
#apiVersion: networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: sentinel-ingress
  namespace: dev
spec:
  ingressClassName: "nginx"
  rules:
  - host: sentinel.oldxu.net
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          serviceName: sentinel-svc
          servicePort: 8718
          #service:
          #  name: sentinel-svc
          #  port:
          #    name: server
```

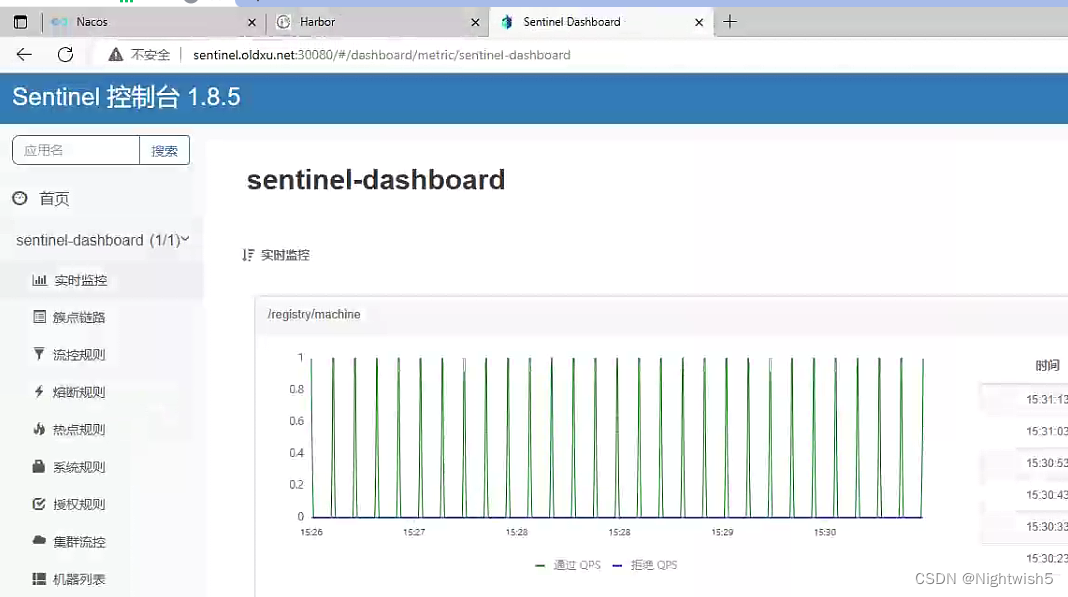

Open http://sentinel.oldxu.net:30080/#/dashboard/metric/sentinel-dashboard

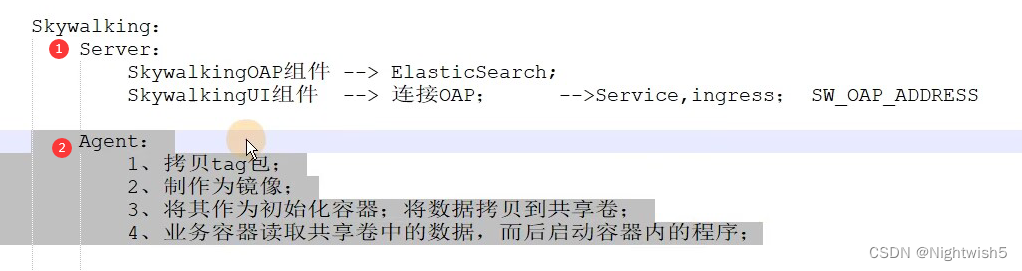

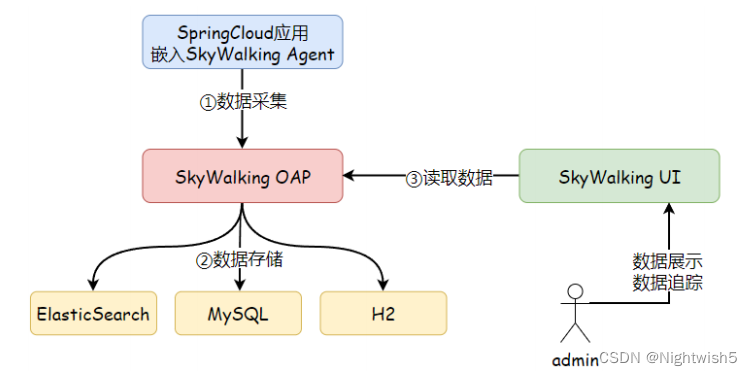

05-skywalking/

Migration approach

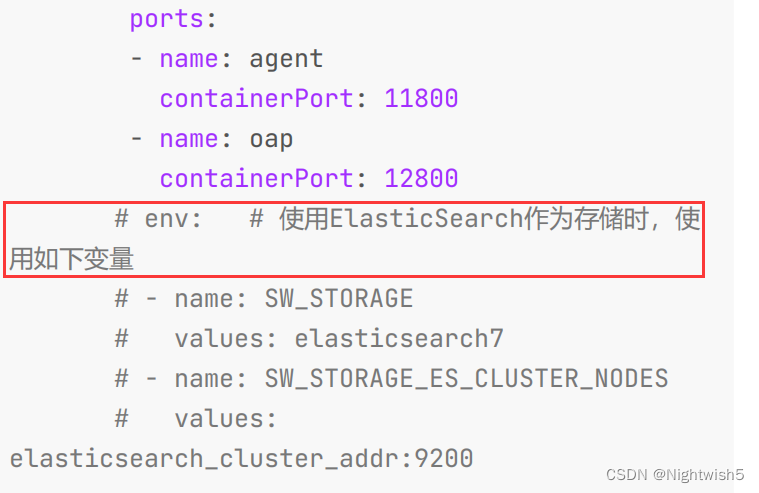

Here SkyWalking uses its built-in H2 database for storage. Elasticsearch can be used as the data store instead.
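Switching to Elasticsearch is mainly a matter of the OAP container's environment. The sketch below assumes the `apache/skywalking-oap-server` 8.9.x image, whose storage selector accepts `elasticsearch`, and a hypothetical Elasticsearch Service named `elasticsearch-svc`. The fragment goes into the OAP container of 01-skywalking-oap-deploy.yaml:

```yaml
        env:
        - name: SW_STORAGE                    # storage selector, defaults to h2
          value: "elasticsearch"
        - name: SW_STORAGE_ES_CLUSTER_NODES   # host:port list of the ES cluster
          value: "elasticsearch-svc.dev.svc.cluster.local:9200"   # hypothetical Service
```

By default H2 runs in memory inside the OAP Pod, so traces are lost when the Pod restarts. An external Elasticsearch keeps them.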

01-skywalking-oap-deploy.yaml

02-skywalking-ui-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: skywalking-ui
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sky-ui
  template:
    metadata:
      labels:
        app: sky-ui
    spec:
      containers:
      - name: ui
        image: apache/skywalking-ui:8.9.1
        ports:
        - containerPort: 8080
        env:
        - name: SW_OAP_ADDRESS
          value: "http://skywalking-oap-svc:12800"
---
apiVersion: v1
kind: Service
metadata:
  name: skywalking-ui-svc
  namespace: dev
spec:
  selector:
    app: sky-ui
  ports:
  - name: ui
    port: 8080
    targetPort: 8080
```

```shell
[root@master01 05-skywalking]# dig @10.96.0.10 skywalking-oap-svc.dev.svc.cluster.local +short
10.111.30.115
```

03-skywalking-ingress.yaml

The commented-out (#) lines show the syntax for newer Kubernetes versions.

```yaml
apiVersion: extensions/v1beta1
#apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: skywalking-ingress
  namespace: dev
spec:
  ingressClassName: "nginx"
  rules:
  - host: sky.oldxu.net
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          serviceName: skywalking-ui-svc
          servicePort: 8080
          #service:
          #  name: skywalking-ui-svc
          #  port:
          #    name: ui
```

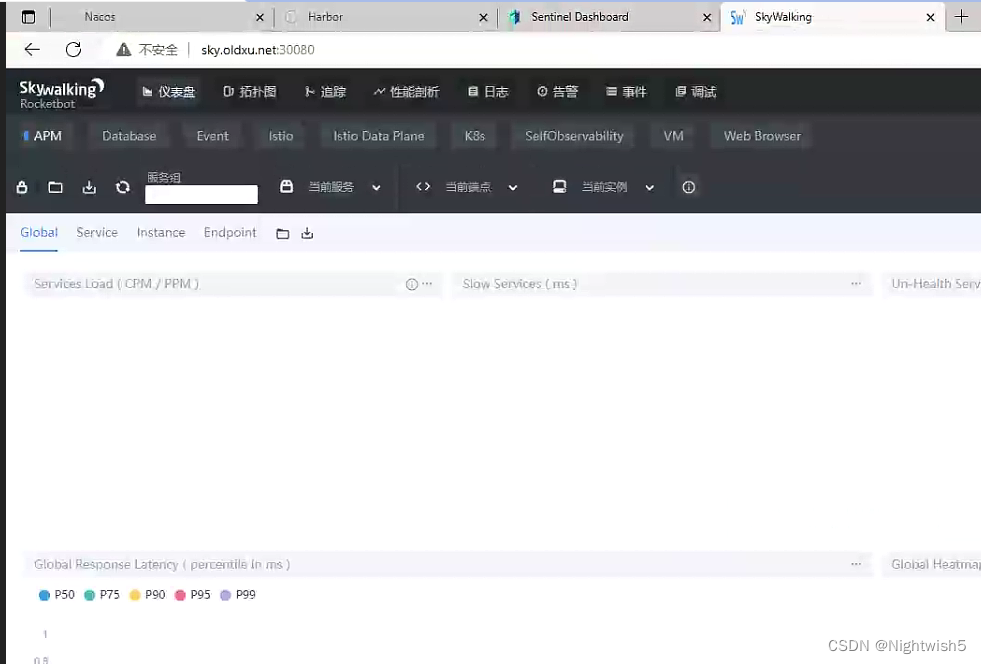

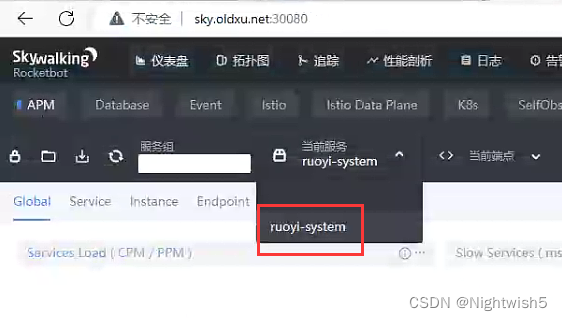

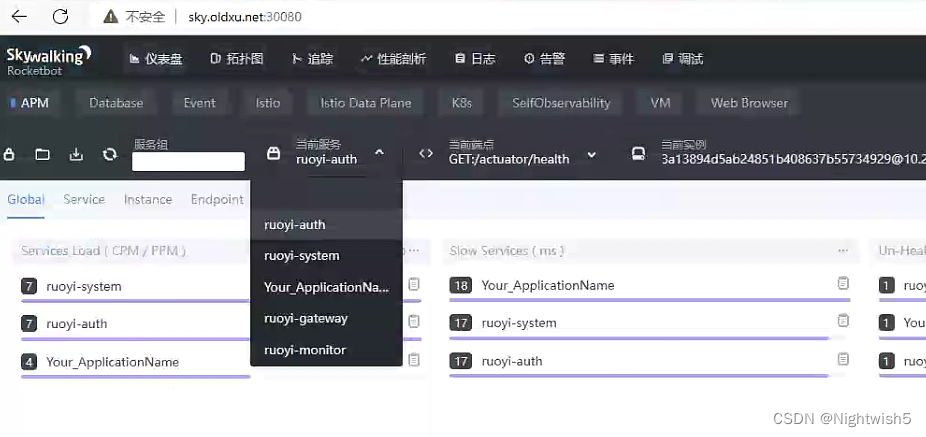

Open sky.oldxu.net:30080

04-skywalking-agent-demo.yaml (client demo)

Package the SkyWalking agent as a Docker image. Business containers then mount the agent in sidecar style.

Download the agent, write the Dockerfile, then build and push the image. `ADD` unpacks a local .tgz archive, so the image ends up containing the /skywalking-agent directory.

```shell
wget https://linux.oldxu.net/apache-skywalking-java-agent-8.8.0.tgz

[root@master01 04-skywalking-agent-demo]# cat Dockerfile
FROM alpine
ADD ./apache-skywalking-java-agent-8.8.0.tgz /

[root@master01 04-skywalking-agent-demo]# ls
apache-skywalking-java-agent-8.8.0.tgz  Dockerfile

docker build -t harbor.oldxu.net/springcloud/skywalking-java-agent:8.8 .
docker push harbor.oldxu.net/springcloud/skywalking-java-agent:8.8
```

This follows the sidecar idea (similar to how ELK collects Pod logs). The business container mounts the files from the prepared skywalking-agent image through a shared volume.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: skywalking-agent-demo
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo
    spec:
      imagePullSecrets:
      - name: harbor-admin
      volumes:                  # shared volume
      - name: skywalking-agent
        emptyDir: {}
      initContainers:           # init container: copies the agent from its image into the shared volume
      - name: init-skywalking-agent
        image: harbor.oldxu.net/springcloud/skywalking-java-agent:8.8
        command:
        - 'sh'
        - '-c'
        - 'mkdir -p /agent; cp -r /skywalking-agent/* /agent;'
        volumeMounts:
        - name: skywalking-agent
          mountPath: /agent
      containers:
      - name: web
        image: nginx
        volumeMounts:
        - name: skywalking-agent
          mountPath: /skywalking-agent/
```

06-service-all/ (RuoYi business services: system, auth, gateway, monitor, ui)

Migration approach

6.1 Migrating the ruoyi-system microservice

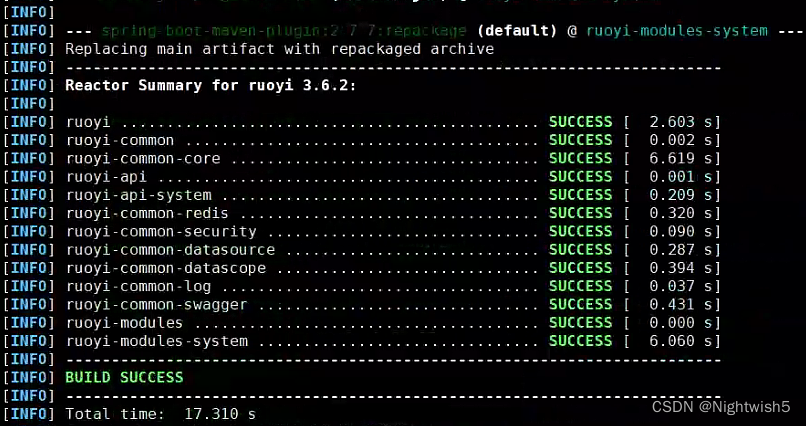

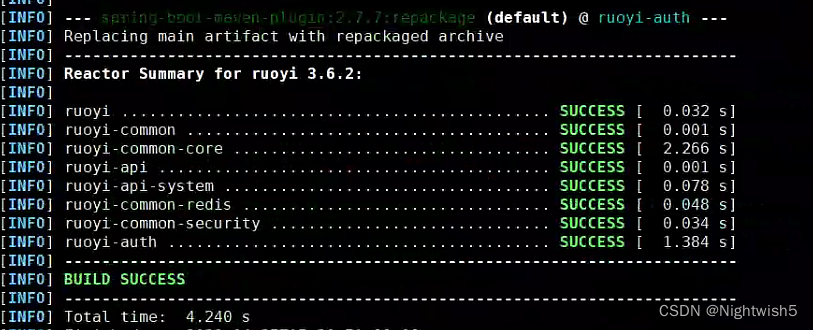

1 Build the system module with Maven

Paths and files:

```shell
cd /root/k8sFile/project/danji-ruoyi/guanWang
[root@node4 guanWang]# ls
logs  note.txt  RuoYi-Cloud  skywalking-agent  startServer.sh
[root@node4 guanWang]# ls RuoYi-Cloud/
bin  docker  LICENSE  pom.xml  README.md  ruoyi-api  ruoyi-auth  ruoyi-common  ruoyi-gateway  ruoyi-modules  ruoyi-ui  ruoyi-visual  sql
[root@node4 RuoYi-Cloud]# pwd
/root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud

# build ruoyi-system plus the modules it depends on (-am), skipping tests
[root@node4 RuoYi-Cloud]# mvn package -Dmaven.test.skip=true -pl ruoyi-modules/ruoyi-system/ -am
```

2 Write the Dockerfile

```shell
vim ruoyi-modules/ruoyi-system/Dockerfile
```

```dockerfile
FROM openjdk:8-jre-alpine
COPY ./target/*.jar /ruoyi-modules-system.jar
COPY ./entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh
EXPOSE 8080
CMD ["/bin/sh","-c","/entrypoint.sh"]
```

3 Write entrypoint.sh

First, recall the command that started system in the traditional deployment:

```shell
# start ruoyi-system
nohup java -javaagent:./skywalking-agent/skywalking-agent.jar \
  -Dskywalking.agent.service_name=ruoyi-system \
  -Dskywalking.collector.backend_service=192.168.79.35:11800 \
  -Dspring.profiles.active=dev \
  -Dspring.cloud.nacos.config.file-extension=yml \
  -Dspring.cloud.nacos.discovery.server-addr=192.168.79.35:8848 \
  -Dspring.cloud.nacos.config.server-addr=192.168.79.35:8848 \
  -jar RuoYi-Cloud/ruoyi-modules/ruoyi-system/target/ruoyi-modules-system.jar &>/var/log/system.log &
```

The container version turns the hard-coded addresses into environment variables with defaults (`${VAR:-default}`):

```shell
[root@node4 ruoyi-system]# cat entrypoint.sh
#!/bin/sh
# port (defaults to 8080)
PARAMS="--server.port=${Server_Port:-8080}"

# JVM heap size
JAVA_OPTS="-Xms${XMS_OPTS:-150m} -Xmx${XMX_OPTS:-150m}"

# Nacos options
NACOS_OPTS=" \
-Djava.security.egd=file:/dev/./urandom \
-Dfile.encoding=utf8 \
-Dspring.profiles.active=${Nacos_Active:-dev} \
-Dspring.cloud.nacos.config.file-extension=yml \
-Dspring.cloud.nacos.discovery.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848} \
-Dspring.cloud.nacos.config.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848}
"

# SkyWalking options
# the sidecar-style initContainer has already copied skywalking-agent.jar into the Pod
SKY_OPTS="-javaagent:/skywalking-agent/skywalking-agent.jar \
-Dskywalking.agent.service_name=ruoyi-system \
-Dskywalking.collector.backend_service=${Sky_Server_Addr:-localhost:11800}
"

# start command (SkyWalking options, Nacos options, JVM heap options, the jar, then PARAMS)
exec java ${SKY_OPTS} ${NACOS_OPTS} ${JAVA_OPTS} -jar /ruoyi-modules-system.jar ${PARAMS}
```

Paths and files:

```shell
[root@node4 ruoyi-system]# ls
Dockerfile  entrypoint.sh  pom.xml  src  target
```

4 Build and push the image (from the ruoyi-modules/ruoyi-system directory)

```shell
docker build -t harbor.oldxu.net/springcloud/ruoyi-system:v1.0 .
docker push harbor.oldxu.net/springcloud/ruoyi-system:v1.0
```

5 Update the system configuration

Before running system on Kubernetes, log in to Nacos and edit ruoyi-system-dev.yml. Change the Redis address, add the sentinel section, and change the MySQL address:

```yaml
# spring config
spring:
  cloud:
    sentinel:
      eager: true
      transport:
        dashboard: sentinel-svc.dev.svc.cluster.local:8718
  redis:
    host: redis-svc.dev.svc.cluster.local
    port: 6379
    password:
  datasource:
    druid:
      stat-view-servlet:
        enabled: true
        loginUsername: admin
        loginPassword: 123456
    dynamic:
      druid:
        initial-size: 5
        min-idle: 5
        maxActive: 20
        maxWait: 60000
        timeBetweenEvictionRunsMillis: 60000
        minEvictableIdleTimeMillis: 300000
        validationQuery: SELECT 1 FROM DUAL
        testWhileIdle: true
        testOnBorrow: false
        testOnReturn: false
        poolPreparedStatements: true
        maxPoolPreparedStatementPerConnectionSize: 20
        filters: stat,slf4j
        connectionProperties: druid.stat.mergeSql\=true;druid.stat.slowSqlMillis\=5000
      datasource:
        # primary (master) datasource
        master:
          driver-class-name: com.mysql.cj.jdbc.Driver
          url: jdbc:mysql://mysql-ruoyi-svc.dev.svc.cluster.local:3306/ry-cloud?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=true&serverTimezone=GMT%2B8
          username: root
          password: oldxu
        # replica (slave) datasource
        # slave:
        #   username:
        #   password:
        #   url:
        #   driver-class-name:
      # seata: true  # enable the Seata proxy; once enabled every datasource is proxied by default, disable it individually where not needed

# seata config
seata:
  # disabled by default; to enable it, spring.datasource.dynamic.seata must be enabled as well
  enabled: false
  # Seata application ID, defaults to ${spring.application.name}
  application-id: ${spring.application.name}
  # Seata transaction group, used as the TC cluster name
  tx-service-group: ${spring.application.name}-group
  # disable automatic datasource proxying
  enable-auto-data-source-proxy: false
  # service settings
  service:
    # mapping from virtual group to cluster
    vgroup-mapping:
      ruoyi-system-group: default
  config:
    type: nacos
    nacos:
      serverAddr: 127.0.0.1:8848
      group: SEATA_GROUP
      namespace:
  registry:
    type: nacos
    nacos:
      application: seata-server
      server-addr: 127.0.0.1:8848
      namespace:

# mybatis config
mybatis:
  # package to scan for type aliases
  typeAliasesPackage: com.ruoyi.system
  # mapper scan location: all mapper.xml files
  mapperLocations: classpath:mapper/**/*.xml

# swagger config
swagger:
  title: 系统模块接口文档   # "System module API docs"
  license: Powered By ruoyi
  licenseUrl: https://ruoyi.vip
```

Verify that redis, mysql and sentinel-svc resolve:

```shell
[root@master01 bin]# dig @10.96.0.10 redis-svc.dev.svc.cluster.local +short
10.111.240.148
[root@master01 bin]# dig @10.96.0.10 sentinel-svc.dev.svc.cluster.local +short
10.111.31.36
[root@master01 bin]# dig @10.96.0.10 mysql-ruoyi-svc.dev.svc.cluster.local +short
10.244.1.130
```

01-system-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ruoyi-system
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: system
  template:
    metadata:
      labels:
        app: system
    spec:
      imagePullSecrets:
      - name: harbor-admin
      volumes:
      - name: skywalking-agent
        emptyDir: {}
      initContainers:
      - name: init-sky-java-agent
        image: harbor.oldxu.net/springcloud/skywalking-java-agent:8.8
        command:
        - 'sh'
        - '-c'
        - 'mkdir -p /agent; cp -r /skywalking-agent/* /agent/;'
        volumeMounts:
        - name: skywalking-agent
          mountPath: /agent
      containers:
      - name: system
        image: harbor.oldxu.net/springcloud/ruoyi-system:v1.0
        env:
        - name: Nacos_Active
          value: dev
        - name: Nacos_Server_Addr
          value: "nacos-svc.dev.svc.cluster.local:8848"
        - name: Sky_Server_Addr
          value: "skywalking-oap-svc.dev.svc.cluster.local:11800"
        - name: XMS_OPTS
          value: 200m
        - name: XMX_OPTS
          value: 200m
        ports:
        - containerPort: 8080
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 10
          timeoutSeconds: 10
        volumeMounts:
        - name: skywalking-agent
          mountPath: /skywalking-agent/
```

Verify Nacos and skywalking-oap:

```shell
[root@master01 bin]# dig @10.96.0.10 nacos-svc.dev.svc.cluster.local +short
10.244.1.129
10.244.0.143
10.244.2.154
[root@master01 bin]# dig @10.96.0.10 skywalking-oap-svc.dev.svc.cluster.local +short
10.111.30.115
```

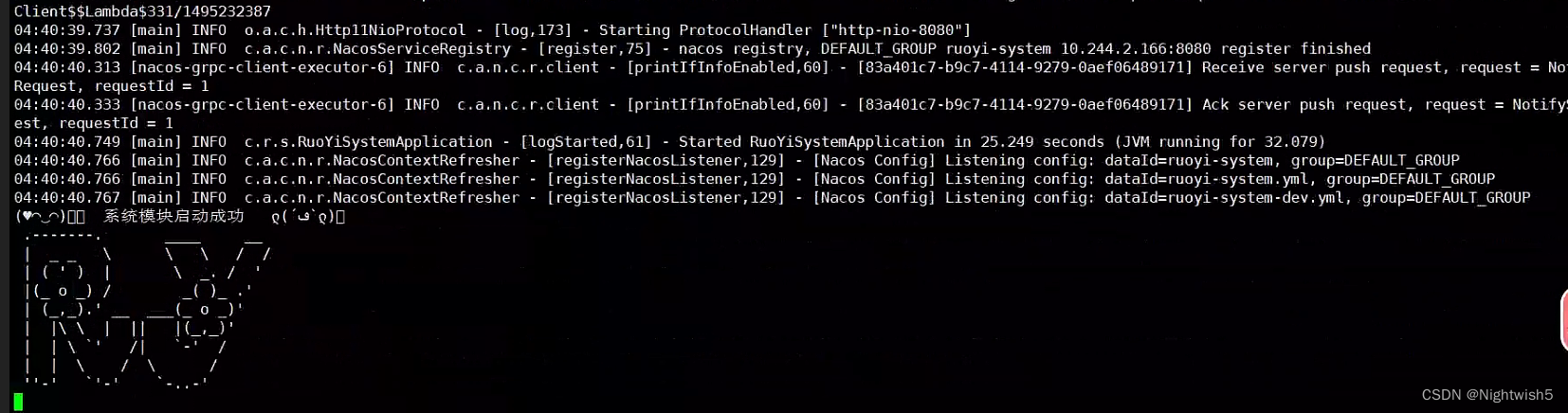

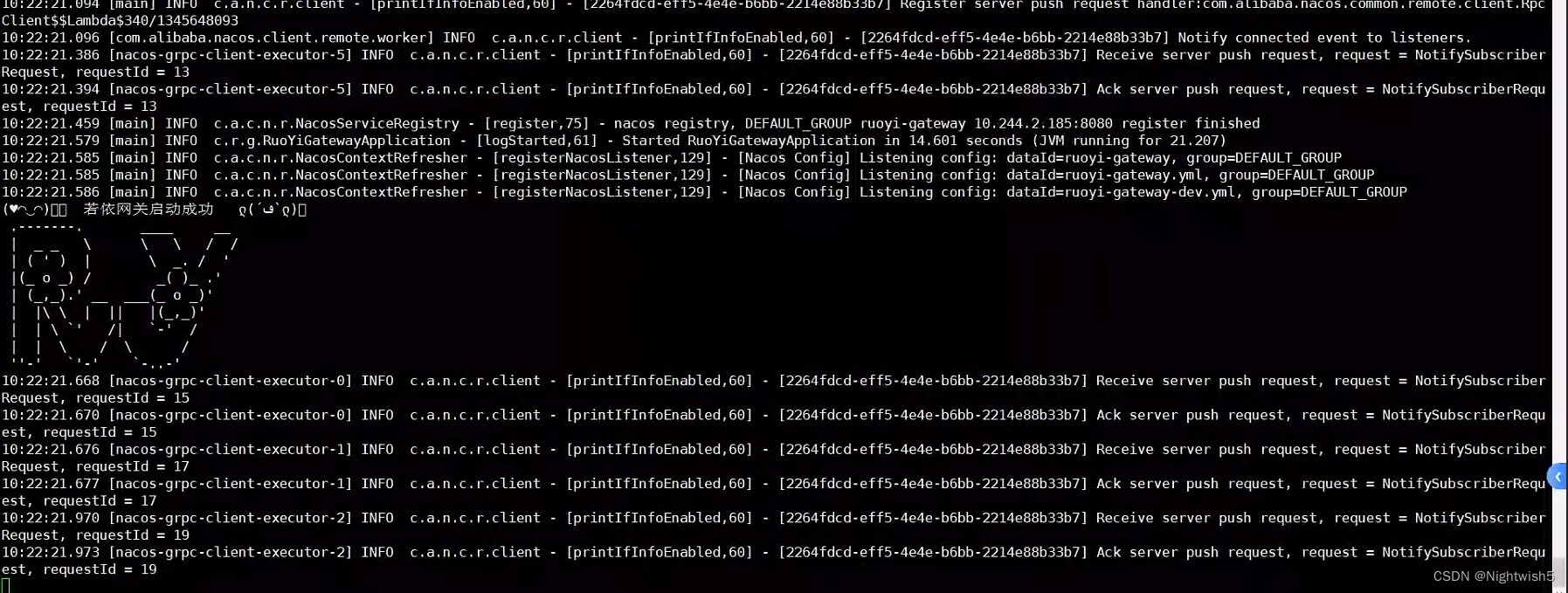

Log in to Nacos, Sentinel and SkyWalking to check the status:

nacos:

sentinel:

skywalking:

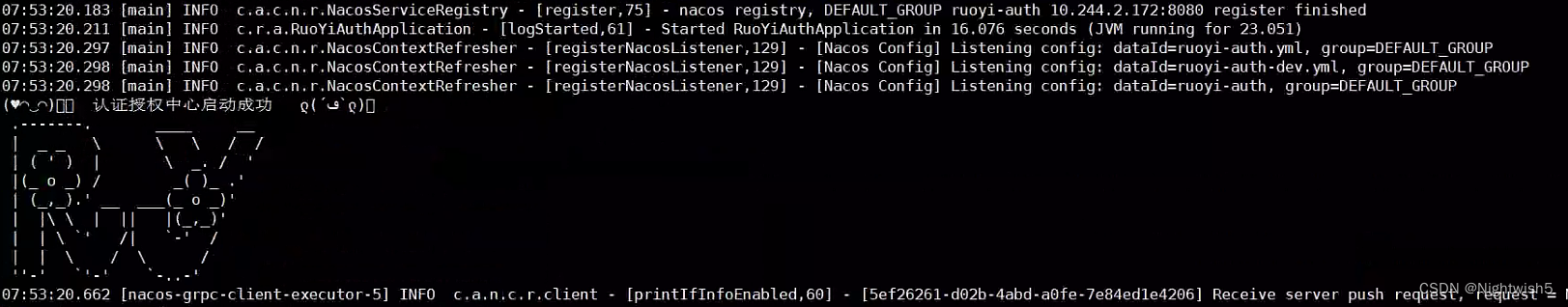

6.2 Migrating the ruoyi-auth microservice

1. Build the auth module

```shell
[root@node4 RuoYi-Cloud]# pwd
/root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud
mvn package -Dmaven.test.skip=true -pl ruoyi-auth/ -am
```

2. Write the Dockerfile and entrypoint.sh

```shell
[root@node4 ruoyi-auth]# pwd
/root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud/ruoyi-auth
[root@node4 ruoyi-auth]# ls
Dockerfile  entrypoint.sh  pom.xml  src  target
```

Dockerfile

```dockerfile
FROM openjdk:8-jre-alpine
COPY ./target/*.jar /ruoyi-auth.jar
COPY ./entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh
EXPOSE 8080
CMD ["/bin/sh","-c","/entrypoint.sh"]
```

entrypoint.sh

```shell
[root@node4 ruoyi-auth]# cat entrypoint.sh
#!/bin/sh
# port; defaults to 8080 when Server_Port is not set
PARAMS="--server.port=${Server_Port:-8080}"

# JVM heap size (the unit suffix is required: -Xmx100 would mean 100 bytes)
JAVA_OPTS="-Xms${XMS_OPTS:-100m} -Xmx${XMX_OPTS:-100m}"

# Nacos options
NACOS_OPTS="
-Djava.security.egd=file:/dev/./urandom \
-Dfile.encoding=utf8 \
-Dspring.profiles.active=${Nacos_Active:-dev} \
-Dspring.cloud.nacos.config.file-extension=yml \
-Dspring.cloud.nacos.discovery.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848} \
-Dspring.cloud.nacos.config.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848}
"

# SkyWalking options
SKY_OPTS="
-javaagent:/skywalking-agent/skywalking-agent.jar \
-Dskywalking.agent.service_name=ruoyi-auth \
-Dskywalking.collector.backend_service=${Sky_Server_Addr:-localhost:11800}
"

# start command (SkyWalking options, Nacos options, JVM heap options, the jar, then PARAMS)
exec java ${SKY_OPTS} ${NACOS_OPTS} ${JAVA_OPTS} -jar /ruoyi-auth.jar ${PARAMS}
```

3. Build and push the image

```shell
docker build -t harbor.oldxu.net/springcloud/ruoyi-auth:v1.0 .
docker push harbor.oldxu.net/springcloud/ruoyi-auth:v1.0
```

4. Update ruoyi-auth-dev.yml in Nacos

Before running auth on Kubernetes, edit the corresponding ruoyi-auth-dev.yml in Nacos:

```yaml
spring:
  cloud:
    sentinel:
      eager: true
      transport:
        dashboard: sentinel-svc.dev.svc.cluster.local:8718
  redis:
    host: redis-svc.dev.svc.cluster.local
    port: 6379
    password:
```

5. 02-auth-deploy.yaml

Run the auth service:

```shell
[root@master01 06-all-service]# cat 02-auth-deploy.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ruoyi-auth
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: auth
  template:
    metadata:
      labels:
        app: auth
    spec:
      imagePullSecrets:
      - name: harbor-admin
      volumes:
      - name: skywalking-agent
        emptyDir: {}
      initContainers:
      - name: init-sky-java-agent
        image: harbor.oldxu.net/springcloud/skywalking-java-agent:8.8
        command:
        - 'sh'
        - '-c'
        - 'mkdir -p /agent; cp -r /skywalking-agent/* /agent/;'
        volumeMounts:
        - name: skywalking-agent
          mountPath: /agent
      containers:
      - name: auth
        image: harbor.oldxu.net/springcloud/ruoyi-auth:v1.0
        env:
        - name: Nacos_Active
          value: dev
        - name: Nacos_Server_Addr
          value: "nacos-svc.dev.svc.cluster.local:8848"
        - name: Sky_Server_Addr
          value: "skywalking-oap-svc.dev.svc.cluster.local:11800"
        - name: XMS_OPTS
          value: 200m
        - name: XMX_OPTS
          value: 200m
        ports:
        - containerPort: 8080
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 10
          timeoutSeconds: 10
        volumeMounts:
        - name: skywalking-agent
          mountPath: /skywalking-agent/
```

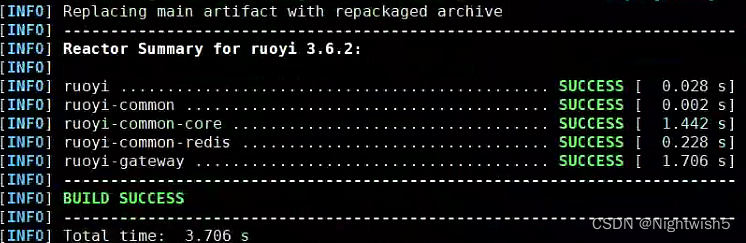

6.3 Migrating the ruoyi-gateway microservice

1. Build the gateway module

```shell
[root@node4 RuoYi-Cloud]# pwd
/root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud
[root@node4 RuoYi-Cloud]# ls
bin  docker  LICENSE  pom.xml  README.md  ruoyi-api  ruoyi-auth  ruoyi-common  ruoyi-gateway  ruoyi-modules  ruoyi-ui  ruoyi-visual  sql

mvn package -Dmaven.test.skip=true -pl ruoyi-gateway/ -am
```

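2. Write the Dockerfile and entrypoint.sh

```shell
[root@node4 ruoyi-gateway]# ls
Dockerfile  entrypoint.sh  pom.xml  src  target
```

Dockerfile

```dockerfile
# With the alpine image (openjdk:8-jre-alpine), requests fail with "[网关异常处理]请求路径:/code"
# ("[gateway exception handling] request path: /code"), most likely because captcha image
# generation needs font libraries that alpine lacks, so the full openjdk:8-jre image is used.
FROM openjdk:8-jre
COPY ./target/*.jar /ruoyi-gateway.jar
COPY ./entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh
EXPOSE 8080
CMD ["/bin/sh","-c","/entrypoint.sh"]
```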

entrypoint.sh

```shell
#!/bin/sh
# port; defaults to 8080 when Server_Port is not set
PARAMS="--server.port=${Server_Port:-8080}"

# JVM heap size
JAVA_OPTS="-Xms${XMS_OPTS:-100m} -Xmx${XMX_OPTS:-100m}"

# Nacos options
NACOS_OPTS="
-Djava.security.egd=file:/dev/./urandom \
-Dfile.encoding=utf8 \
-Dspring.profiles.active=${Nacos_Active:-dev} \
-Dspring.cloud.nacos.config.file-extension=yml \
-Dspring.cloud.nacos.discovery.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848} \
-Dspring.cloud.nacos.config.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848}
"

# SkyWalking options
SKY_OPTS="
-javaagent:/skywalking-agent/skywalking-agent.jar \
-Dskywalking.agent.service_name=ruoyi-gateway \
-Dskywalking.collector.backend_service=${Sky_Server_Addr:-localhost:11800}
"

# start command (SkyWalking options, Nacos options, JVM heap options, the jar, then PARAMS)
exec java ${SKY_OPTS} ${NACOS_OPTS} ${JAVA_OPTS} -jar /ruoyi-gateway.jar ${PARAMS}
```

3. Build the image and push it to the registry

```shell
docker build -t harbor.oldxu.net/springcloud/ruoyi-gateway:v1.0 .
docker push harbor.oldxu.net/springcloud/ruoyi-gateway:v1.0
```

4. Update the gateway configuration (ruoyi-gateway-dev.yml)

Before running gateway on Kubernetes, edit the corresponding ruoyi-gateway-dev.yml in Nacos. Sentinel settings live under `spring.cloud.sentinel`, and the Nacos address needs the full `.svc.cluster.local` suffix:

```yaml
spring:
  redis:
    host: redis-svc.dev.svc.cluster.local
    port: 6379
    password:
  cloud:
    sentinel:
      eager: true
      transport:
        dashboard: sentinel-svc.dev.svc.cluster.local:8718
      datasource:
        ds1:
          nacos:
            server-addr: nacos-svc.dev.svc.cluster.local:8848
            dataId: sentinel-ruoyi-gateway
            groupId: DEFAULT_GROUP
            data-type: json
            rule-type: flow
    nacos:
      discovery:
        server-addr: nacos-svc.dev.svc.cluster.local:8848
      config:
        server-addr: nacos-svc.dev.svc.cluster.local:8848
    gateway:
      discovery:
        locator:
          lowerCaseServiceId: true
          enabled: true
      routes:
        # auth center
        - id: ruoyi-auth
          uri: lb://ruoyi-auth
          predicates:
            - Path=/auth/**
          filters:
            # captcha handling
            - CacheRequestFilter
            - ValidateCodeFilter
            - StripPrefix=1
        # code generator
        - id: ruoyi-gen
          uri: lb://ruoyi-gen
          predicates:
            - Path=/code/**
          filters:
            - StripPrefix=1
        # scheduled jobs
        - id: ruoyi-job
          uri: lb://ruoyi-job
          predicates:
            - Path=/schedule/**
          filters:
            - StripPrefix=1
        # system module
        - id: ruoyi-system
          uri: lb://ruoyi-system
          predicates:
            - Path=/system/**
          filters:
            - StripPrefix=1
        # file service
        - id: ruoyi-file
          uri: lb://ruoyi-file
          predicates:
            - Path=/file/**
          filters:
            - StripPrefix=1

# security settings
security:
  # captcha
  captcha:
    enabled: true
    type: math
  # XSS protection
  xss:
    enabled: true
    excludeUrls:
      - /system/notice
  # whitelist (paths that skip the token check)
  ignore:
    whites:
      - /auth/logout
      - /auth/login
      - /auth/register
      - /*/v2/api-docs
      - /csrf
```

5. 03-gateway-deploy-svc.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ruoyi-gateway
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: gateway
  template:
    metadata:
      labels:
        app: gateway
    spec:
      imagePullSecrets:
      - name: harbor-admin
      volumes:
      - name: skywalking-agent
        emptyDir: {}
      initContainers:
      - name: init-sky-java-agent
        image: harbor.oldxu.net/springcloud/skywalking-java-agent:8.8
        command:
        - 'sh'
        - '-c'
        - 'mkdir -p /agent; cp -r /skywalking-agent/* /agent/;'
        volumeMounts:
        - name: skywalking-agent
          mountPath: /agent
      containers:
      - name: gateway
        image: harbor.oldxu.net/springcloud/ruoyi-gateway:v1.0   # the tag built and pushed above
        env:
        - name: Nacos_Active
          value: dev
        - name: Nacos_Server_Addr
          value: "nacos-svc.dev.svc.cluster.local:8848"
        - name: Sky_Server_Addr
          value: "skywalking-oap-svc.dev.svc.cluster.local:11800"
        - name: XMS_OPTS
          value: 500m
        - name: XMX_OPTS
          value: 500m
        ports:
        - containerPort: 8080
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 10
          timeoutSeconds: 10
        volumeMounts:
        - name: skywalking-agent
          mountPath: /skywalking-agent/
---
apiVersion: v1
kind: Service
metadata:
  name: gateway-svc
  namespace: dev
spec:
  selector:
    app: gateway
  ports:
  - port: 8080
    targetPort: 8080
```

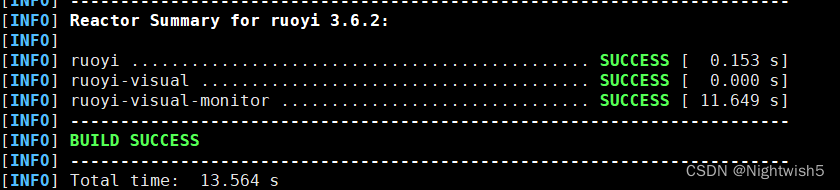
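gateway-svc only sends traffic to Pods that are Ready. Without a readinessProbe, a gateway Pod counts as Ready as soon as its container starts, typically well before Spring Boot is listening on 8080. Below is a sketch of a probe that mirrors the existing livenessProbe; the ui Deployment later uses the same pattern. The timings are assumptions.

```yaml
        # inside the gateway container, next to livenessProbe (timings are assumptions)
        readinessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
```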

6.4 Migrating the ruoyi-monitor microservice

1 Build the monitor module

```shell
[root@node4 RuoYi-Cloud]# pwd
/root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud
[root@node4 RuoYi-Cloud]# ls
bin  docker  LICENSE  pom.xml  README.md  ruoyi-api  ruoyi-auth  ruoyi-common  ruoyi-gateway  ruoyi-modules  ruoyi-ui  ruoyi-visual  sql
[root@node4 RuoYi-Cloud]# mvn package -Dmaven.test.skip=true -pl ruoyi-visual/ruoyi-monitor/ -am
```

2 Write the Dockerfile and entrypoint.sh

```shell
cd /root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud/ruoyi-visual/ruoyi-monitor
[root@node4 ruoyi-monitor]# ls
Dockerfile  entrypoint.sh  pom.xml  src  target
```

Dockerfile

```dockerfile
FROM openjdk:8-jre-alpine
COPY ./target/*.jar /ruoyi-monitor.jar
COPY ./entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh
EXPOSE 8080
CMD ["/bin/sh", "-c", "/entrypoint.sh"]
```

entrypoint.sh

```shell
#!/bin/sh
# port; defaults to 8080 when Server_Port is not set
PARAMS="--server.port=${Server_Port:-8080}"

# JVM heap size
JAVA_OPTS="-Xms${XMS_OPTS:-100m} -Xmx${XMX_OPTS:-100m}"

# Nacos options
NACOS_OPTS="
-Djava.security.egd=file:/dev/./urandom \
-Dfile.encoding=utf8 \
-Dspring.profiles.active=${Nacos_Active:-dev} \
-Dspring.cloud.nacos.config.file-extension=yml \
-Dspring.cloud.nacos.discovery.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848} \
-Dspring.cloud.nacos.config.server-addr=${Nacos_Server_Addr:-127.0.0.1:8848}
"

# SkyWalking options (same agent jar path and host:port default as the other services)
SKY_OPTS="
-javaagent:/skywalking-agent/skywalking-agent.jar \
-Dskywalking.agent.service_name=ruoyi-monitor \
-Dskywalking.collector.backend_service=${Sky_Server_Addr:-localhost:11800}
"

# start command (SkyWalking options, Nacos options, JVM heap options, the jar, then PARAMS)
exec java ${SKY_OPTS} ${NACOS_OPTS} ${JAVA_OPTS} -jar /ruoyi-monitor.jar ${PARAMS}
```

3 Build the image and push it to the registry

```shell
docker build -t harbor.oldxu.net/springcloud/ruoyi-monitor:v1.0 .
docker push harbor.oldxu.net/springcloud/ruoyi-monitor:v1.0
```

4 Update the monitor configuration (ruoyi-monitor-dev.yml)

Before running monitor on Kubernetes, edit the corresponding ruoyi-monitor-dev.yml in Nacos:

```yaml
# spring
spring:
  cloud:
    sentinel:
      eager: true
      transport:
        dashboard: sentinel-svc.dev.svc.cluster.local:8718
  security:
    user:
      name: ruoyi
      password: 123456
  boot:
    admin:
      ui:
        title: 若依服务状态监控   # "RuoYi service status monitor"
```

5. 04-monitor-deploy-svc-ingress.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ruoyi-monitor
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: monitor
  template:
    metadata:
      labels:
        app: monitor
    spec:
      imagePullSecrets:
      - name: harbor-admin
      volumes:
      - name: skywalking-agent
        emptyDir: {}
      initContainers:
      - name: init-sky-java-agent
        image: harbor.oldxu.net/springcloud/skywalking-java-agent:8.8
        command:
        - 'sh'
        - '-c'
        - 'mkdir -p /agent; cp -r /skywalking-agent/* /agent/;'
        volumeMounts:
        - name: skywalking-agent
          mountPath: /agent
      containers:
      - name: monitor
        image: harbor.oldxu.net/springcloud/ruoyi-monitor:v1.0   # the tag built and pushed above
        env:
        - name: Nacos_Active
          value: dev
        - name: Nacos_Server_Addr
          value: "nacos-svc.dev.svc.cluster.local:8848"
        - name: Sky_Server_Addr
          value: "skywalking-oap-svc.dev.svc.cluster.local:11800"
        - name: XMS_OPTS
          value: 200m
        - name: XMX_OPTS
          value: 200m
        ports:
        - containerPort: 8080
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 10
          timeoutSeconds: 10
        volumeMounts:
        - name: skywalking-agent
          mountPath: /skywalking-agent/
---
apiVersion: v1
kind: Service
metadata:
  name: monitor-svc
  namespace: dev
spec:
  selector:
    app: monitor
  ports:
  - port: 8080
    targetPort: 8080
---
#apiVersion: networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: monitor-ingress
  namespace: dev
spec:
  ingressClassName: "nginx"
  rules:
  - host: "monitor.oldxu.net"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          serviceName: monitor-svc
          servicePort: 8080
          #service:
          #  name: monitor-svc
          #  port:
          #    number: 8080
```

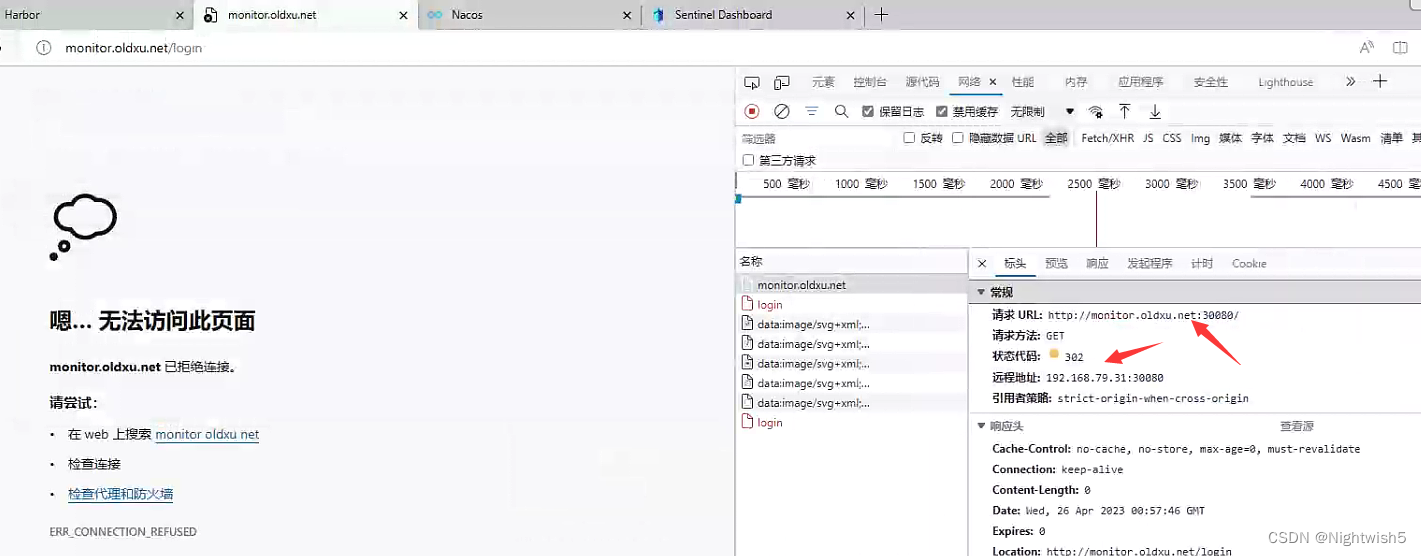

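One annoying issue remains open. Requesting monitor.oldxu.net:30080 redirects to monitor.oldxu.net/login, dropping the port, and it is not yet clear where in the monitor application that redirect is produced.

In the same way, the static js and css files are requested on port 80 instead of 30080.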

6.5 Migrating the ruoyi-ui frontend

1 Update the frontend configuration in ruoyi-ui/vue.config.js

```shell
[root@node4 ruoyi-ui]# pwd
/root/k8sFile/project/danji-ruoyi/guanWang/RuoYi-Cloud/ruoyi-ui
```

Change the gateway address. This devServer proxy is only used by `npm run dev`. In the production image, the /prod-api/ requests are proxied by the nginx configuration from step 5.

```javascript
  devServer: {
    host: '0.0.0.0',
    port: port,
    open: true,
    proxy: {
      // detail: https://cli.vuejs.org/config/#devserver-proxy
      [process.env.VUE_APP_BASE_API]: {
        target: `http://gateway-svc.dev.svc.cluster.local:8080`,
        changeOrigin: true,
        pathRewrite: {
          ['^' + process.env.VUE_APP_BASE_API]: ''
        }
      }
    },
    disableHostCheck: true
  },
  css: {
```

```shell
[root@master01 06-all-service]# dig @10.96.0.10 gateway-svc.dev.svc.cluster.local +short
10.97.133.31
```

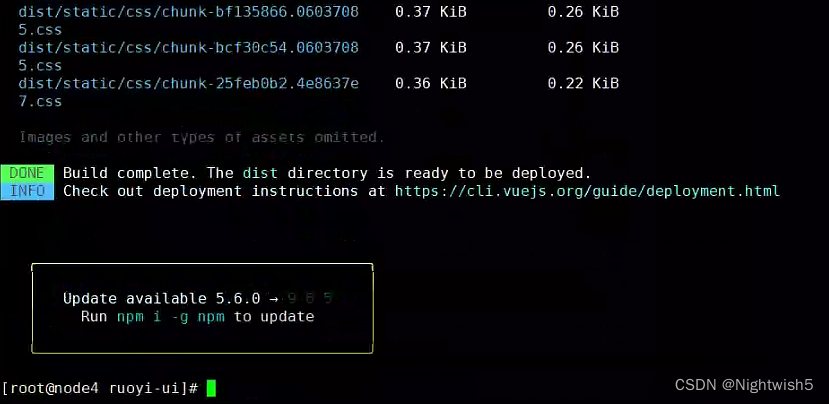

2 Build the frontend

```shell
npm install --registry=https://registry.npmmirror.com
npm run build:prod
```

3 Write the Dockerfile

```shell
[root@node4 ruoyi-ui]# ls
babel.config.js  bin  build  dist  Dockerfile  node_modules  package.json  package-lock.json  public  README.md  src  vue.config.js  vue.config.js.bak  vue.config.js-danji
[root@node4 ruoyi-ui]# cat Dockerfile
FROM nginx
COPY ./dist /code/
```

4 Build the image and push it to the registry

```shell
docker build -t harbor.oldxu.net/springcloud/ruoyi-ui:v1.0 .
docker push harbor.oldxu.net/springcloud/ruoyi-ui:v1.0
```

5 Create the ConfigMap (ruoyi.oldxu.net.conf)

ruoyi.oldxu.net.conf

```nginx
server {
    listen 80;
    server_name ruoyi.oldxu.net;
    charset utf-8;
    root /code;

    location / {
        try_files $uri $uri/ /index.html;
        index index.html index.htm;
    }

    location /prod-api/ {
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header REMOTE-HOST $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://gateway-svc.dev.svc.cluster.local:8080/;
    }
}
```

```shell
kubectl create configmap ruoyi-ui-conf --from-file=ruoyi.oldxu.net.conf -n dev
```
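`--from-file` stores the file under a key named after the file. The ConfigMap created above is therefore equivalent to the manifest below, which is handy for keeping it in version control. The ui Deployment mounts this ConfigMap over /etc/nginx/conf.d/, so that directory then contains only ruoyi.oldxu.net.conf and hides the image's default.conf.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ruoyi-ui-conf
  namespace: dev
data:
  # key = file name, so the mounted file is /etc/nginx/conf.d/ruoyi.oldxu.net.conf
  ruoyi.oldxu.net.conf: |
    server {
        listen 80;
        server_name ruoyi.oldxu.net;
        charset utf-8;
        root /code;

        location / {
            try_files $uri $uri/ /index.html;
            index index.html index.htm;
        }

        location /prod-api/ {
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header REMOTE-HOST $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_pass http://gateway-svc.dev.svc.cluster.local:8080/;
        }
    }
```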

6. 05-ui-dp-svc-ingress.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ruoyi-ui
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ui
  template:
    metadata:
      labels:
        app: ui
    spec:
      imagePullSecrets:
      - name: harbor-admin
      containers:
      - name: ui
        image: harbor.oldxu.net/springcloud/ruoyi-ui:v1.0
        ports:
        - containerPort: 80
        readinessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 60
          periodSeconds: 10
          timeoutSeconds: 10
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 60
          periodSeconds: 10
          timeoutSeconds: 10
        volumeMounts:
        - name: ngxconfs
          mountPath: /etc/nginx/conf.d/
      volumes:
      - name: ngxconfs
        configMap:
          name: ruoyi-ui-conf
---
apiVersion: v1
kind: Service
metadata:
  name: ui-svc
  namespace: dev
spec:
  selector:
    app: ui
  ports:
  - port: 80
    targetPort: 80
---
#apiVersion: networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ui-ingress
  namespace: dev
spec:
  ingressClassName: "nginx"
  rules:
  - host: "ruoyi.oldxu.net"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          serviceName: ui-svc
          servicePort: 80
          #service:
          #  name: ui-svc
          #  port:
          #    number: 80
```

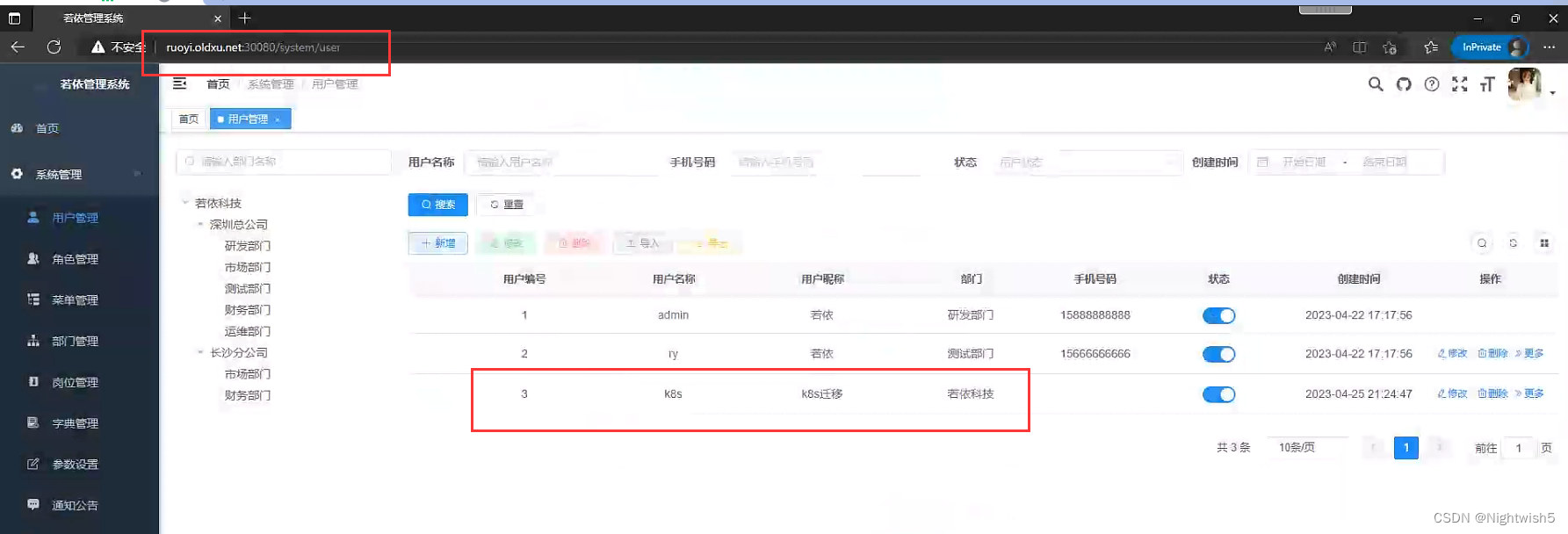

Access:

END

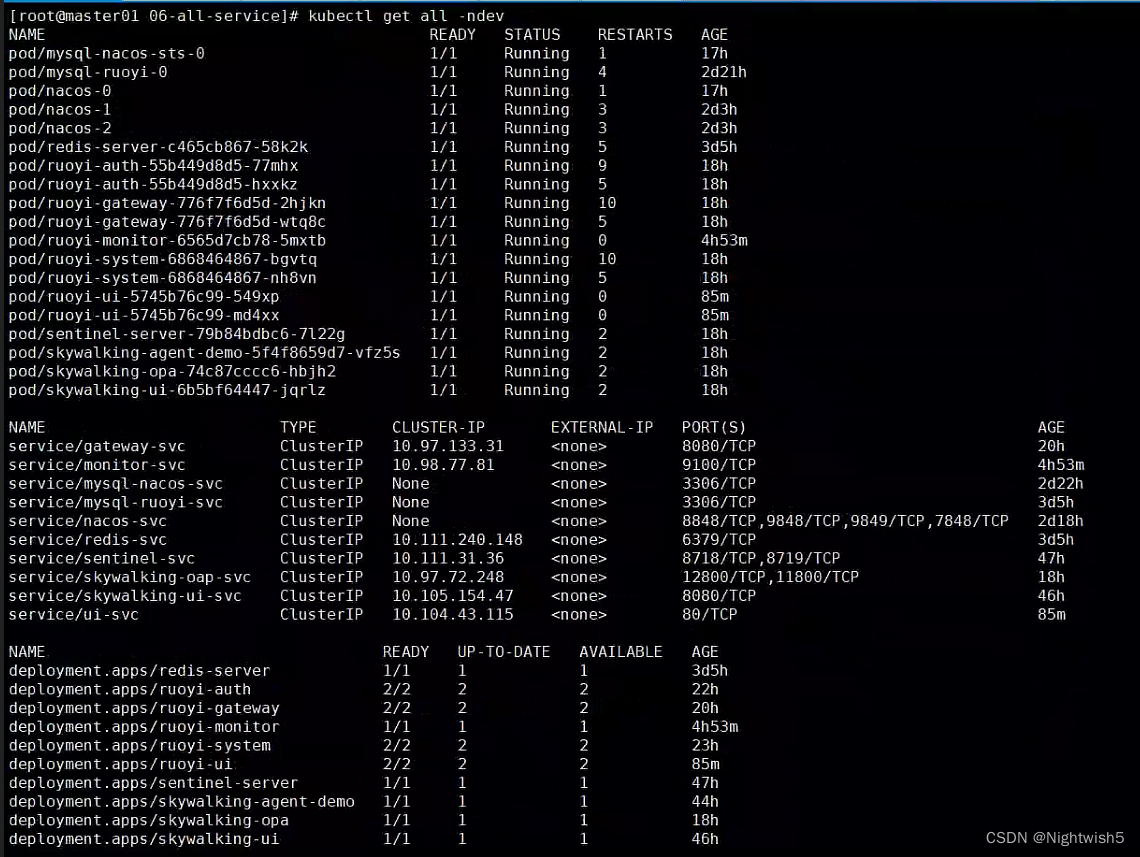

Other notes / migration summary

```shell
# troubleshooting: list Pods sorted by memory usage
kubectl top pod --sort-by=memory --all-namespaces
```
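When reading these numbers, keep in mind that each entrypoint.sh caps only the Java heap (`-Xmx` via `XMX_OPTS`). The process also needs metaspace, thread stacks and the SkyWalking agent on top of that. Below is a sketch of matching requests/limits for the ruoyi-system container (heap 200m). The values are assumptions to tune against the `kubectl top` output, which requires metrics-server in the cluster.

```yaml
        # inside the ruoyi-system container, next to env/ports (values are assumptions)
        resources:
          requests:
            cpu: "250m"
            memory: "400Mi"   # well above XMX_OPTS=200m to leave room for non-heap memory
          limits:
            memory: "512Mi"   # a container exceeding this limit is OOM-killed
```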