目录

简介

原理

任务管理

编辑同步拓扑示例

使用场景

限制

硬件配置

安装&升级

部署

生成配置文件

生成配置文件模板

更具自身的机器修改

部署

启动集群&查看集群

实战

上游数据库前提

配置mysql的相关配置

编写DM的MySQL相关配置

把MySQL和DM master 产生关系

加入第二个MySQL(更具自己的情况选做)

查看数据源的连接信息

下游数据库前提

查看下上游要同步的数据库

编写同步配置问题

启动下游任务

查询任务

查看下DM状态

结果

MySQL1

MySQL2

TiDB

性能优化

结论

相关任务操作

简介

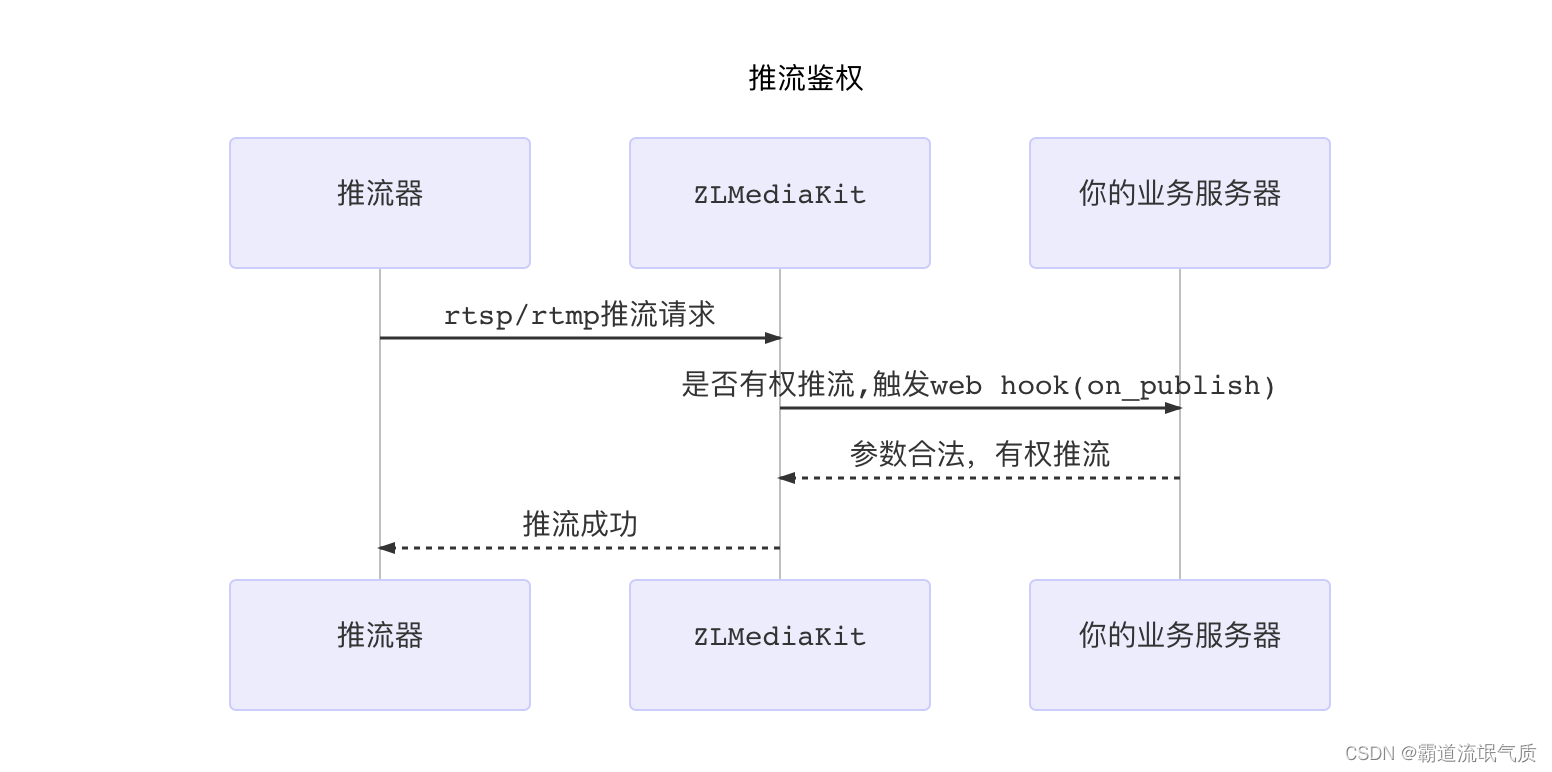

使用Data Migration (DM) 同步数据。

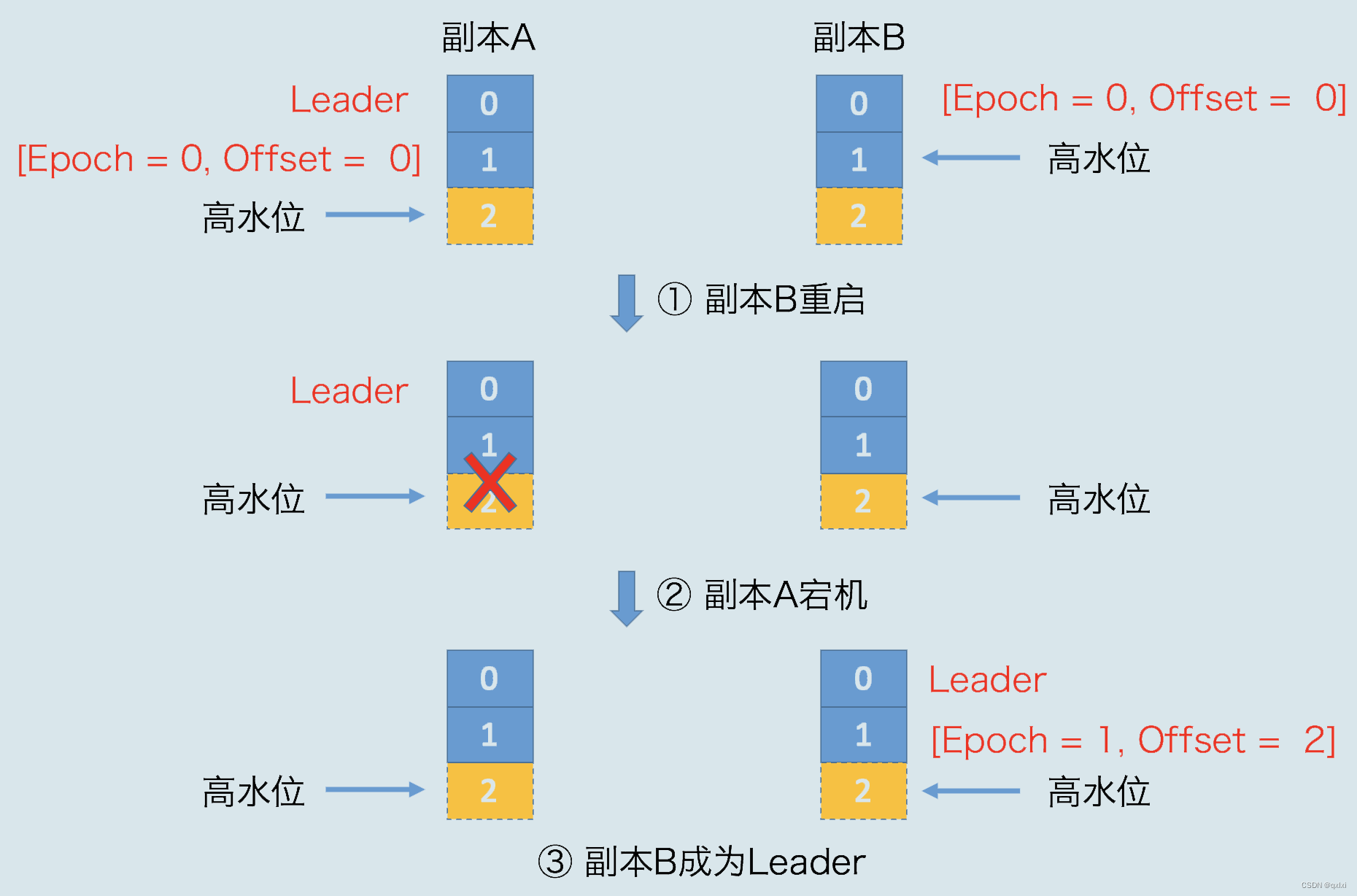

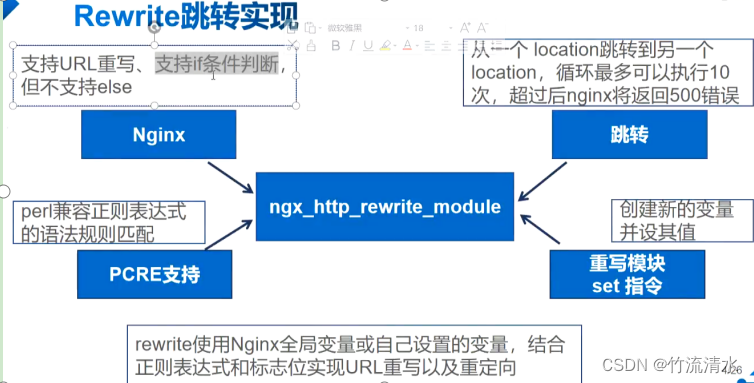

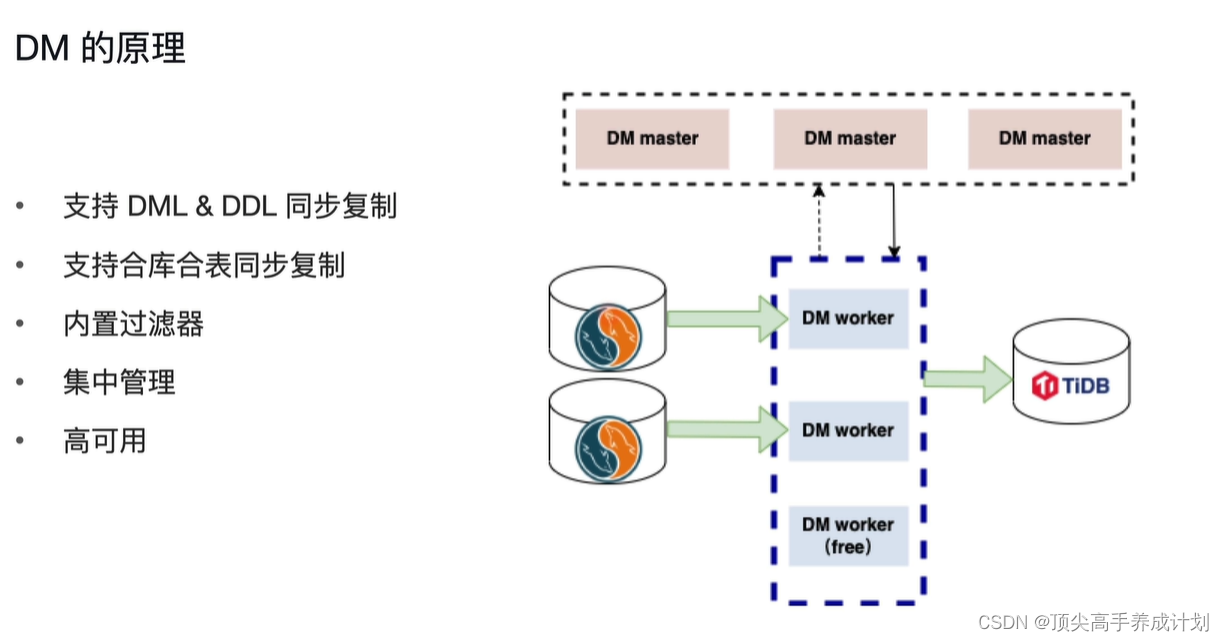

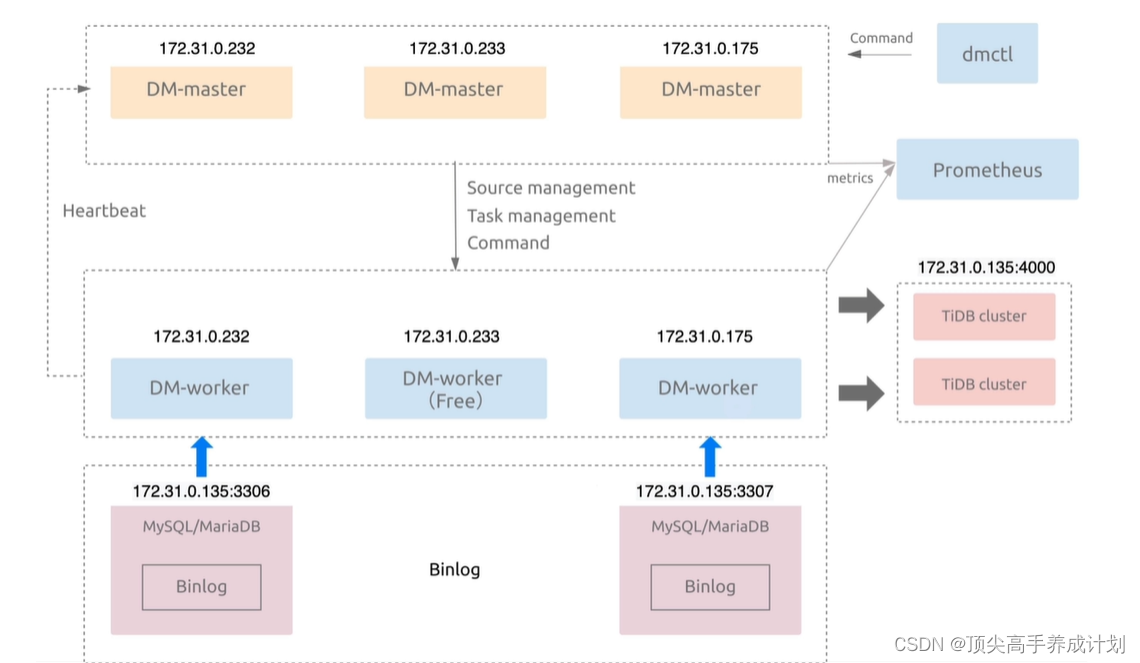

原理

- DM worker负责读取上游的binlog(它是和上游兼容MySQL协议的数据库一对一的关系)。

- DM master 监控和管理 DM worker。

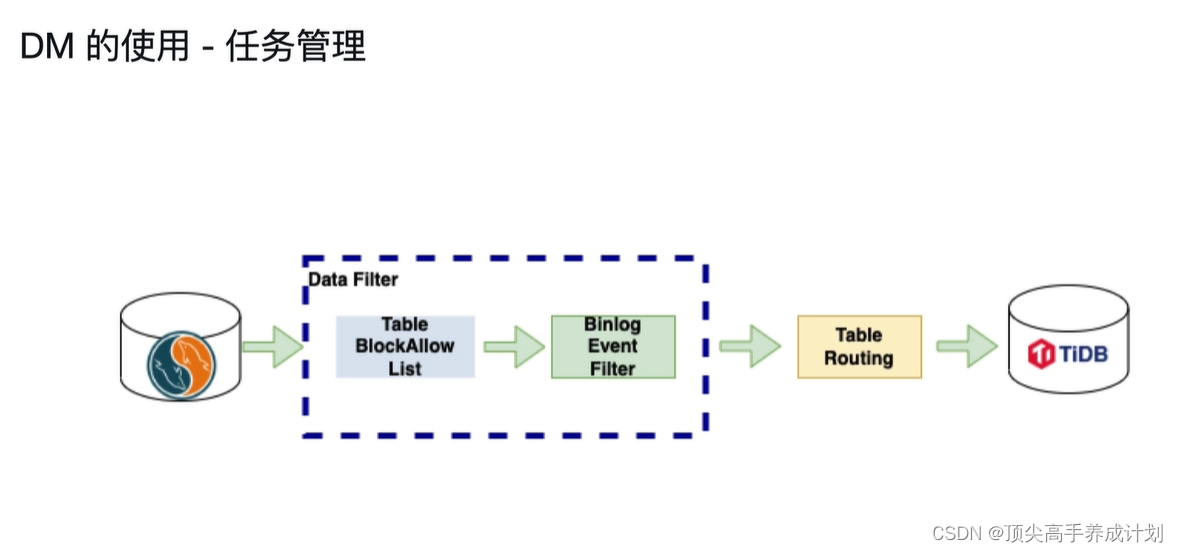

任务管理

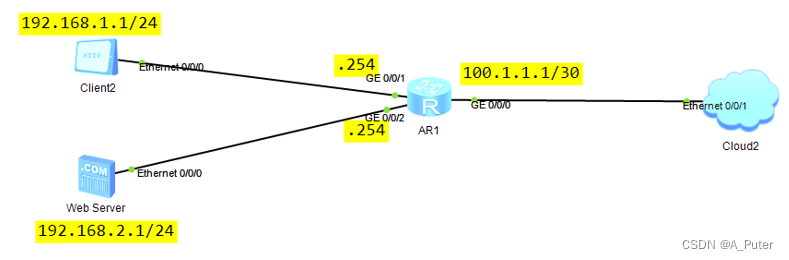

同步拓扑示例

同步拓扑示例

使用场景

全量加增量的同步。

限制

- 如果TiDB5.4之前,不支持其他的数据库的GBK同步数据过来。

- 使用DM的时候会同步表的DDL语句,如果有些MySQL比较特殊的用法,TiDB不太支持。

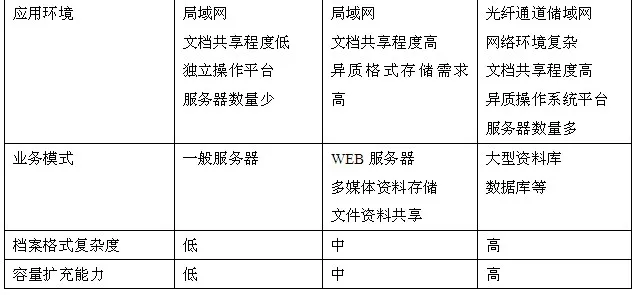

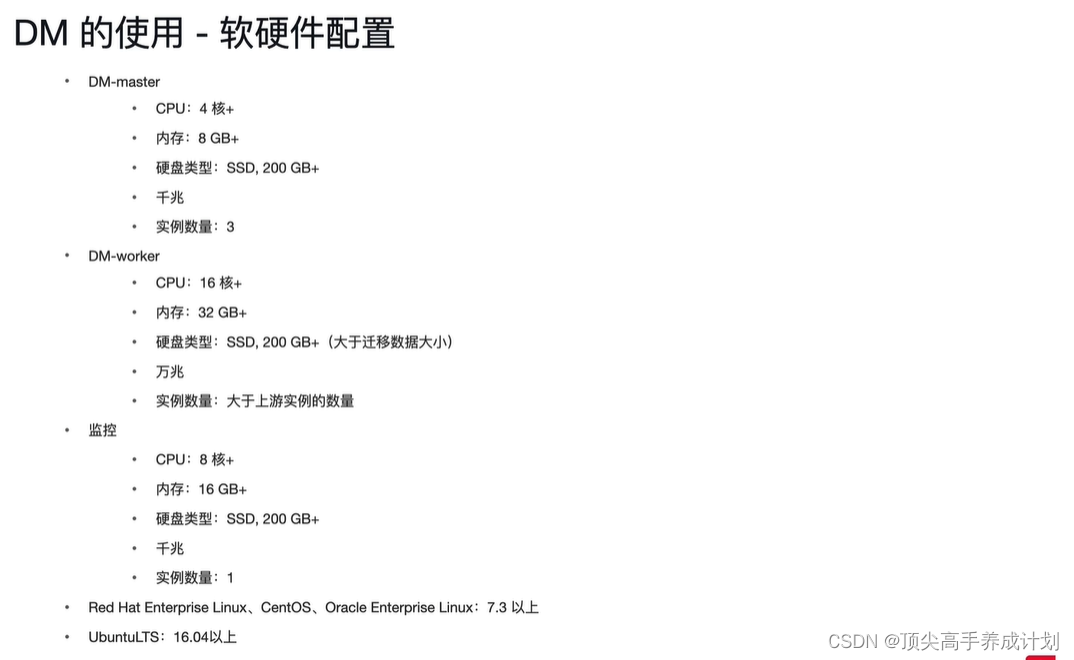

硬件配置

安装&升级

#安装

tiup install dm dmctl

#升级

tiup update --self && tiup update dm 部署

生成配置文件

生成配置文件模板

#生成配置文件

tiup dm template > topology.yaml更具自身的机器修改

# The topology template is used deploy a minimal DM cluster, which suitable

# for scenarios with only three machinescontains. The minimal cluster contains

# - 3 master nodes

# - 3 worker nodes

# You can change the hosts according your environment

---

global:

user: "root"

ssh_port: 22

deploy_dir: "/home/tidb/dm/deploy"

data_dir: "/home/tidb/dm/data"

# arch: "amd64"

master_servers:

- host: 192.168.66.10

- host: 192.168.66.20

- host: 192.168.66.21

worker_servers:

- host: 192.168.66.10

- host: 192.168.66.20

- host: 192.168.66.21

monitoring_servers:

- host: 192.168.66.10

grafana_servers:

- host: 192.168.66.20

alertmanager_servers:

- host: 192.168.66.21部署

#查找支持的版本,找一个最新稳定的就行

tiup list dm-master

tiup dm deploy dm-deploy v7.0.0 ./topology.yaml --user root -p启动集群&查看集群

#查看启动的集群

tiup dm list

#启动集群

tiup dm start dm-deploy

#查看集群状态

tiup dm display dm-deploy实战

上游数据库前提

配置mysql的相关配置

#设置mysql的相关参数

mysql -h127.0.0.1 -P3306 -uroot -proot

在mysql端先查看下是否开启了binlog

show variables like 'log_bin';

show variables like '%56%';

set global show_compatibility_56 ='ON';编写DM的MySQL相关配置

注意下面的host不能够写127.0.0.1不然加入不成功。

#密码加密,配置文件里面的password就是这个root加密以后的字符串

tiup dmctl --encrypt 'root'

#创建数据源配置

vi mysql-source-config.yaml

source-id: "mysql-replice-01"

from:

host: "192.168.66.10"

port: 3306

user: "root"

password: "7EYsHL8G+fQUQnKp6fd+1UDUe/DO"把MySQL和DM master 产生关系

#把MySQL和DM master 产生关系

tiup dmctl --master-addr=192.168.66.10:8261 operate-source create mysql-source-config.yaml打印

[root@master output]# tiup dmctl --master-addr=192.168.66.10:8261 operate-source create mysql-source-config.yaml

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.0.0/dmctl/dmctl --master-addr=192.168.66.10:8261 operate-source create mysql-source-config.yaml

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replice-01",

"worker": "dm-192.168.66.10-8262"

}

]

}

加入第二个MySQL(更具自己的情况选做)

注意加入多个MySQL的时候source-id不能够相同

vi mysql-source-config1.yaml

source-id: "mysql-replice-02"

from:

host: "192.168.66.10"

port: 3307

user: "root"

password: "7EYsHL8G+fQUQnKp6fd+1UDUe/DO"

tiup dmctl --master-addr=192.168.66.10:8261 operate-source create mysql-source-config1.yaml查看数据源的连接信息

#查看所有数据源的连接信息

tiup dmctl --master-addr=192.168.66.10:8261 operate-source show

#查看某一个数据源的连接信息

tiup dmctl --master-addr=192.168.66.10:8261 get-config source mysql-replice-02打印信息

[root@master output]# tiup dmctl --master-addr=192.168.66.10:8261 operate-source show

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.0.0/dmctl/dmctl --master-addr=192.168.66.10:8261 operate-source show

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replice-01",

"worker": "dm-192.168.66.10-8262"

},

{

"result": true,

"msg": "",

"source": "mysql-replice-02",

"worker": "dm-192.168.66.21-8262"

}

]

}

[root@master output]# tiup dmctl --master-addr=192.168.66.10:8261 get-config source mysql-replice-02

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.0.0/dmctl/dmctl --master-addr=192.168.66.10:8261 get-config source mysql-replice-02

{

"result": true,

"msg": "",

"cfg": "enable: true\nenable-gtid: false\nauto-fix-gtid: false\nrelay-dir: relay-dir\nmeta-dir: \"\"\nflavor: mysql\ncharset: \"\"\nenable-relay: false\nrelay-binlog-name: \"\"\nrelay-binlog-gtid: \"\"\nsource-id: mysql-replice-02\nfrom:\n host: 192.168.66.10\n port: 3307\n user: root\n password: '******'\n max-allowed-packet: null\n session: {}\n security: null\npurge:\n interval: 3600\n expires: 0\n remain-space: 15\nchecker:\n check-enable: true\n backoff-rollback: 5m0s\n backoff-max: 5m0s\n check-interval: 5s\n backoff-min: 1s\n backoff-jitter: true\n backoff-factor: 2\nserver-id: 429524188\ntracer: {}\ncase-sensitive: false\nfilters: []\n"

}

下游数据库前提

查看下上游要同步的数据库

#查看有哪些数据库

mysql -h127.0.0.1 -P3306 -uroot -proot

mysql -h127.0.0.1 -P3307 -uroot -proot

show databases;

#创建TiDB的目标数据库

mysql -h127.0.0.1 -P4000 -uroot -ptidb

create database hue_mysql1;

create database gmall_config_mysql2;

编写同步配置问题

vi dm-task.yaml# 任务名,多个同时运行的任务不能重名。

name: "test"

# 全量+增量 (all) 迁移模式。

task-mode: "all"

# 如果是分表分库里面就忽略自增主键了

ignore-checking-items: ["auto_increment_ID"]

target-database:

host: "192.168.66.10"

port: 4000

user: "root"

password: "2hcK5FlVHzKfM8GF6njtPECyxeRz" #tiup dmctl --encrypt 'tidb' 加密得来

mysql-instances:

-

source-id: "mysql-replice-01"

block-allow-list: "log-ignored" #对应下面的block-allow-list

mydumper-config-name: "global" #全量导入的配置

route-rules: ["sharding-route-rules-table"] #对应下面的routes

filter-rules: ["trace-filter-rule"] #对应下面的filters

-

source-id: "mysql-replice-02"

block-allow-list: "log-ignored"

mydumper-config-name: "global"

route-rules: ["sharding-route-rules-schema"]

routes:

sharding-route-rules-table:

schema-pattern: gmall

target-schema: hue_mysql1

sharding-route-rules-schema:

schema-pattern: gmall_config

target-schema: gmall_config_mysql2

block-allow-list:

log-ignored:

ignore-dbs: ["test1"] #在复制的时候忽略MySQL的test1数据库

mydumpers:

global:

threads: 4

chunk-filesize: 64

filters:

trace-filter-rule:

schema-pattern: "hue" #源库hue的desktop_settings的表下面的操作忽略

table-pattern: "desktop_settings"

events: ["truncate table","drop table","delete"]

action: Ignore启动下游任务

tiup dmctl --master-addr="192.168.66.10:8261" start-task dm-task.yaml打印

[root@master output]# tiup dmctl --master-addr="192.168.66.10:8261" start-task dm-task.yarml

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.0.0/dmctl/dmctl --master-addr=192.168.66.10:8261 start-task dm-task.yarml

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replice-01",

"worker": "dm-192.168.66.10-8262"

},

{

"result": true,

"msg": "",

"source": "mysql-replice-02",

"worker": "dm-192.168.66.21-8262"

}

],

"checkResult": "fail to check synchronization configuration with type: no errors but some warnings

detail: {

"results": [

{

"id": 22,

"name": "table structure compatibility check",

"desc": "check compatibility of table structure",

"state": "warn",

"errors": [

{

"severity": "warn",

"short_error": "table `metastore`.`SORT_COLS` Foreign Key SORT_COLS_FK1 is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `hue`.`oozie_email` Foreign Key oozie_email_node_ptr_id_b6164766_fk_oozie_node_id is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `gmall`.`base_province` primary/unique key does not exist"

},

{

"severity": "warn",

"short_error": "table `metastore`.`PARTITION_KEYS` Foreign Key PARTITION_KEYS_FK1 is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `metastore`.`SKEWED_COL_NAMES` Foreign Key SKEWED_COL_NAMES_FK1 is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `hue`.`oozie_kill` Foreign Key oozie_kill_node_ptr_id_6e3b4c7f_fk_oozie_node_id is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `metastore`.`TBL_PRIVS` Foreign Key TBL_PRIVS_FK1 is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `hue`.`oozie_bundledcoordinator` Foreign Key oozie_bundledcoordin_bundle_id_c0a51e15_fk_oozie_bun is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `hue`.`oozie_bundledcoordinator` Foreign Key oozie_bundledcoordin_coordinator_id_deb5052a_fk_oozie_coo is parsed but ignored by TiDB."

},

{

"severity": "warn",

"short_error": "table `metastore`.`SKEWED_STRING_LIST_VALUES` Foreign Key SKEWED_STRING_LIST_VALUES_FK1 is parsed but ignored by TiDB."

}

],

"instruction": "TiDB does not support foreign key constraints. See the document: https://docs.pingcap.com/tidb/stable/mysql-compatibility#unsupported-features; You need to set primary/unique keys for the table. Otherwise replication efficiency might become very low and exactly-once replication cannot be guaranteed."

}

],

"summary": {

"passed": true,

"total": 23,

"successful": 22,

"failed": 0,

"warning": 1

}

}"

}查询任务

tiup dmctl --master-addr="192.168.66.10:8261" query-status test打印

[root@master output]# tiup dmctl --master-addr="192.168.66.10:8261" query-status test

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.0.0/dmctl/dmctl --master-addr=192.168.66.10:8261 query-status test

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"sourceStatus": {

"source": "mysql-replice-01",

"worker": "dm-192.168.66.10-8262",

"result": null,

"relayStatus": null

},

"subTaskStatus": [

{

"name": "test",

"stage": "Running",

"unit": "Load",

"result": null,

"unresolvedDDLLockID": "",

"load": {

"finishedBytes": "0",

"totalBytes": "0",

"progress": "0.00 %",

"metaBinlog": "(mysql-bin.000046, 154)",

"metaBinlogGTID": "",

"bps": "0"

},

"validation": null

}

]

},

{

"result": true,

"msg": "",

"sourceStatus": {

"source": "mysql-replice-02",

"worker": "dm-192.168.66.21-8262",

"result": null,

"relayStatus": null

},

"subTaskStatus": [

{

"name": "test",

"stage": "Running",

"unit": "Sync",

"result": null,

"unresolvedDDLLockID": "",

"sync": {

"totalEvents": "0",

"totalTps": "0",

"recentTps": "0",

"masterBinlog": "(mysql-bin.000045, 154)",

"masterBinlogGtid": "",

"syncerBinlog": "(mysql-bin.000045, 154)",

"syncerBinlogGtid": "",

"blockingDDLs": [

],

"unresolvedGroups": [

],

"synced": true,

"binlogType": "remote",

"secondsBehindMaster": "0",

"blockDDLOwner": "",

"conflictMsg": "",

"totalRows": "0",

"totalRps": "0",

"recentRps": "0"

},

"validation": null

}

]

}

]

}

查看下DM状态

#查看下DM状态 发现对饮的 dm-worker 变成了Bound状态

tiup dm list

tiup dm display dm-deploy打印

[root@master output]# tiup dm display dm-deploy

tiup is checking updates for component dm ...

Starting component `dm`: /root/.tiup/components/dm/v1.12.1/tiup-dm display dm-deploy

Cluster type: dm

Cluster name: dm-deploy

Cluster version: v7.0.0

Deploy user: tidb

SSH type: builtin

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.21:9093 alertmanager 192.168.66.21 9093/9094 linux/x86_64 Up /home/tidb/dm/data/alertmanager-9093 /home/tidb/dm/deploy/alertmanager-9093

192.168.66.10:8261 dm-master 192.168.66.10 8261/8291 linux/x86_64 Healthy /home/tidb/dm/data/dm-master-8261 /home/tidb/dm/deploy/dm-master-8261

192.168.66.20:8261 dm-master 192.168.66.20 8261/8291 linux/x86_64 Healthy /home/tidb/dm/data/dm-master-8261 /home/tidb/dm/deploy/dm-master-8261

192.168.66.21:8261 dm-master 192.168.66.21 8261/8291 linux/x86_64 Healthy|L /home/tidb/dm/data/dm-master-8261 /home/tidb/dm/deploy/dm-master-8261

192.168.66.10:8262 dm-worker 192.168.66.10 8262 linux/x86_64 Bound /home/tidb/dm/data/dm-worker-8262 /home/tidb/dm/deploy/dm-worker-8262

192.168.66.20:8262 dm-worker 192.168.66.20 8262 linux/x86_64 Free /home/tidb/dm/data/dm-worker-8262 /home/tidb/dm/deploy/dm-worker-8262

192.168.66.21:8262 dm-worker 192.168.66.21 8262 linux/x86_64 Bound /home/tidb/dm/data/dm-worker-8262 /home/tidb/dm/deploy/dm-worker-8262

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /home/tidb/dm/deploy/grafana-3000

192.168.66.10:9090 prometheus 192.168.66.10 9090 linux/x86_64 Up /home/tidb/dm/data/prometheus-9090 /home/tidb/dm/deploy/prometheus-9090

Total nodes: 9

结果

MySQL1

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| dolphinscheduler |

| gmall |

| gmall_config |

| hivemetastore |

| hue |

| metastore |

| mysql |

| performance_schema |

| shishimaxwell |

| sys |

| test1 |

| uploadfile |

+--------------------+

13 rows in set (0.00 sec)

MySQL2

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| gmall_config |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

TiDB

mysql> show databases;

+---------------------+

| Database |

+---------------------+

| INFORMATION_SCHEMA |

| METRICS_SCHEMA |

| PERFORMANCE_SCHEMA |

| dm_meta |

| dolphinscheduler |

| gmall_config |

| gmall_config_mysql2 |

| hivemetastore |

| hue |

| hue_mysql1 |

| metastore |

| mysql |

| shishimaxwell |

| test |

| test1 |

| uploadfile |

+---------------------+

16 rows in set (0.00 sec)性能优化

结论

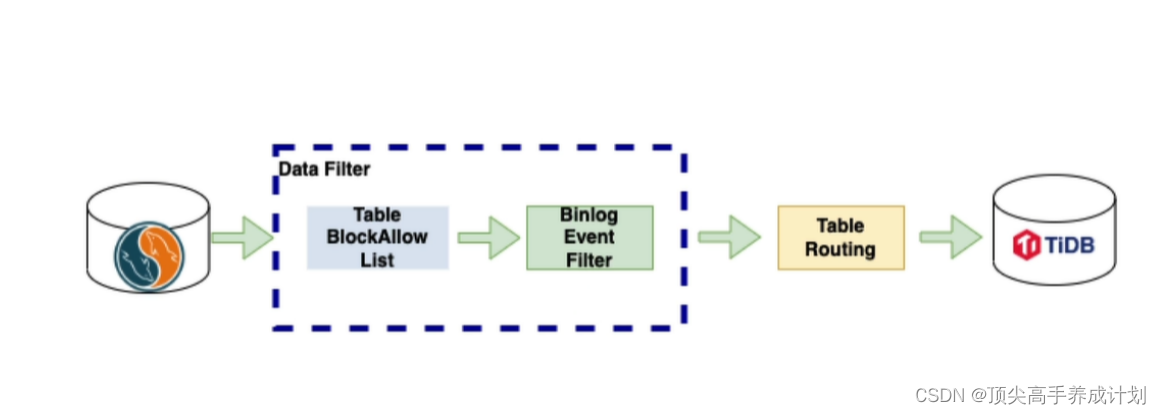

Table BlockAllow: 作用是过滤掉不同步的库。

Binlog Event Fliter: 作用是表级别的操作过滤 ,比如不监听Delete那么源删除数据,目标不会删除数据。

Table Routing: 库和表的路由配置,也就是分表分库的合表合库操作。

上面没有把MySQL的库全部配置在 Routing 里面,但是其他的库也同步过去了,配置 Routing 的库,也成功同步过去了。也就是说默认是全库同步,可以配置Table BlockAllow忽略那些库不同步。

相关任务操作

#启动

tiup dmctl --master-addr="192.168.66.10:8261" start-task dm-task.yaml

#暂停(Binlog暂时不读)

tiup dmctl --master-addr="192.168.66.10:8261" pause-task dm-task.yaml

#恢复(开始从Binlog暂停的地方读取)

tiup dmctl --master-addr="192.168.66.10:8261" resume-task dm-task.yaml

#查询

tiup dmctl --master-addr="192.168.66.10:8261" query-status dm-task.yaml

#停止任务(这个停止以后不会记录Binlog)

tiup dmctl --master-addr="192.168.66.10:8261" stop-task dm-task.yaml