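Note: this post follows the Bilibili video tutorial by 小土堆.

Video link: PyTorch Deep Learning Quick Start Tutorial (Absolutely Easy to Understand!) [小土堆]

Previous post: Deep Learning Quick Start ---- PyTorch 1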

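Table of contents

- 8. Neural networks: nonlinear activations

- 9. Neural networks: linear layers and other layers

- 10. Neural networks: fully connected layers and Sequential

- 11. Loss functions and backpropagation

- 12. Optimizers

- 13. Using and modifying existing network models

- 14. Saving and loading network models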

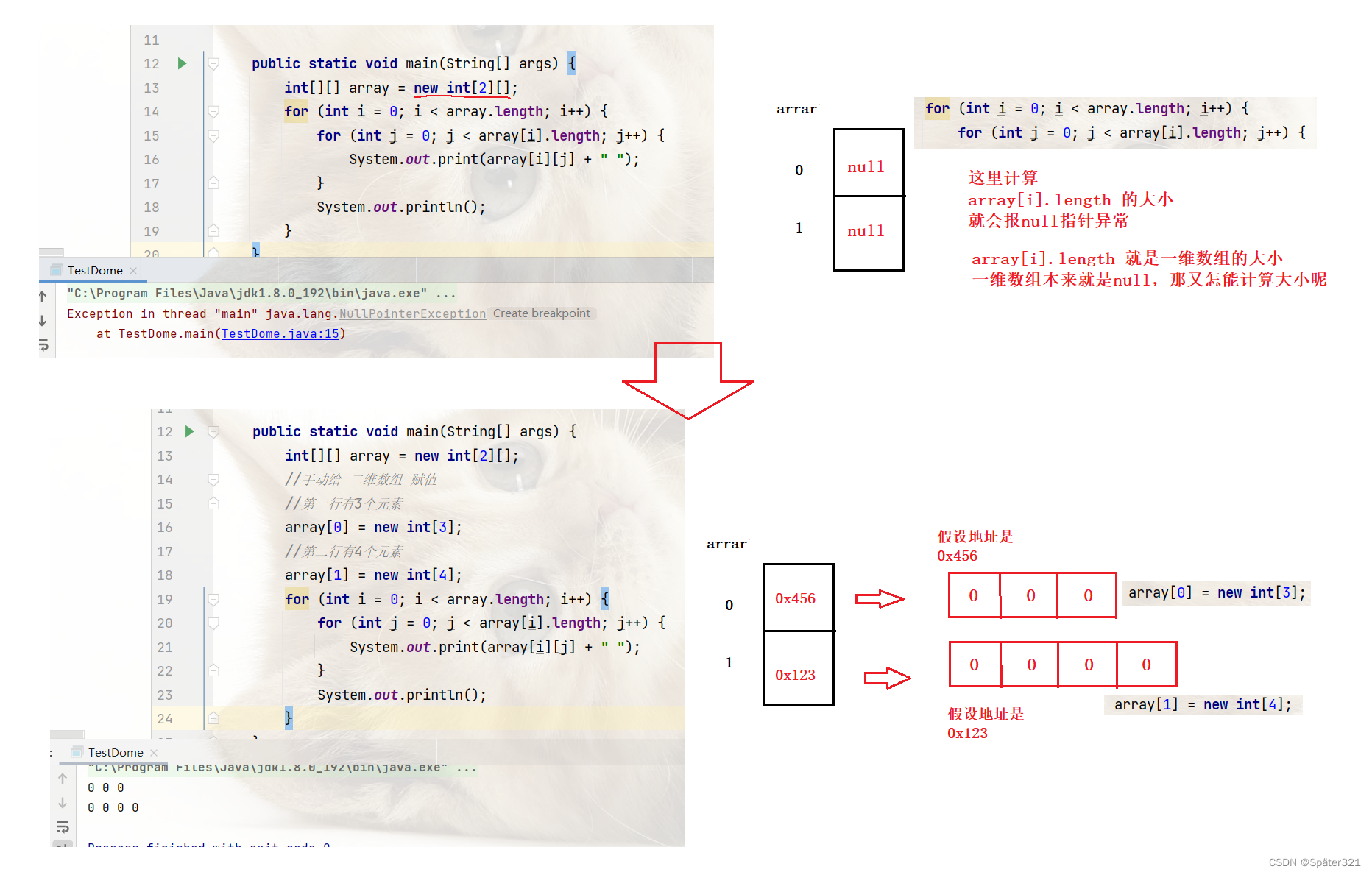

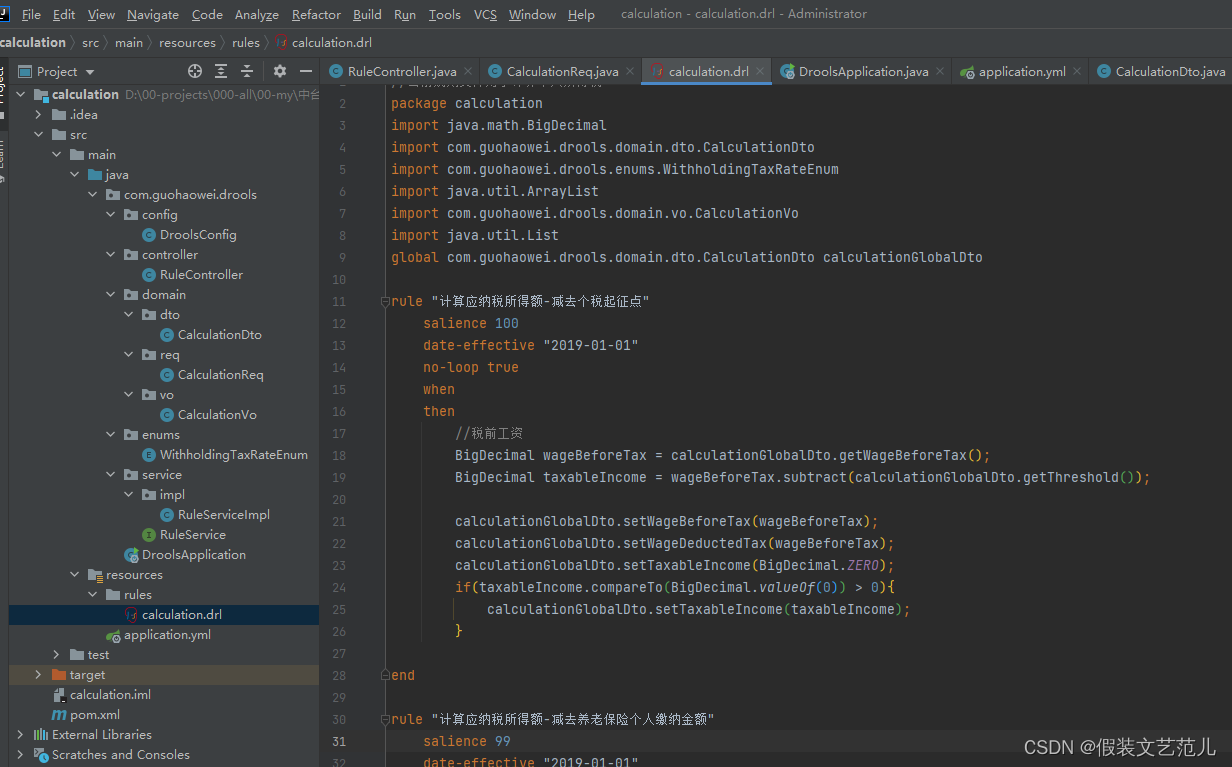

8. Neural networks: nonlinear activations

1. ReLU

2. Sigmoid

Using the sigmoid function:

    import torch
    import torchvision
    from torch import nn
    from torch.nn import ReLU, Sigmoid
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    input = torch.tensor([[1, -0.5],
                          [-1, 3]])
    input = torch.reshape(input, (-1, 1, 2, 2))
    print(input.shape)

    dataset = torchvision.datasets.CIFAR10("dataset", train=False, download=True,
                                           transform=torchvision.transforms.ToTensor())
    dataloader = DataLoader(dataset, batch_size=64)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.relu1 = ReLU()
            self.sigmoid1 = Sigmoid()

        def forward(self, input):
            output = self.sigmoid1(input)
            return output


    tudui = Tudui()

    writer = SummaryWriter("logs_relu")
    step = 0
    for data in dataloader:
        imgs, targets = data
        writer.add_images("input", imgs, global_step=step)
        output = tudui(imgs)
        writer.add_images("output", output, step)
        step += 1

    writer.close()

Result:
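
The small `input` tensor defined above is never passed through the network, so the following is a minimal sketch of what the two activations do to it. The variable names (`x`, `relu_out`, `sigmoid_out`) are my own and are not from the tutorial.

```python
import torch
from torch import nn

# Same 2x2 values as the `input` tensor in the post, reshaped to (N, C, H, W).
x = torch.tensor([[1.0, -0.5],
                  [-1.0, 3.0]]).reshape(-1, 1, 2, 2)

relu = nn.ReLU()
sigmoid = nn.Sigmoid()

relu_out = relu(x)        # negative entries become 0, positive entries pass through
sigmoid_out = sigmoid(x)  # every entry is squashed into the open interval (0, 1)

print(relu_out.reshape(2, 2))
print(sigmoid_out.reshape(2, 2))
```

ReLU leaves CIFAR10 images produced by `ToTensor()` unchanged, because their pixel values are already in [0, 1]. That is presumably why the TensorBoard demo above uses sigmoid, whose effect on the images is visible.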

9. Neural networks: linear layers and other layers

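    import torch
    import torchvision
    from torch import nn
    from torch.nn import Linear
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    # drop_last=True discards the final, smaller batch (10000 % 64 = 16 images),
    # which would flatten to fewer than 196608 values and make linear1 fail.
    dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

    # With reshape: torch.Size([64, 3, 32, 32]) --> torch.Size([1, 1, 1, 196608]) --> torch.Size([1, 1, 1, 10])
    # With flatten: torch.Size([64, 3, 32, 32]) --> torch.Size([196608]) --> torch.Size([10])


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            # 196608 = 64 * 3 * 32 * 32, i.e. one whole batch flattened into a vector
            self.linear1 = Linear(196608, 10)

        def forward(self, input):
            output = self.linear1(input)
            return output


    tudui = Tudui()

    for data in dataloader:
        imgs, targets = data
        print(imgs.shape)
        # output = torch.reshape(imgs, (1, 1, 1, -1))
        # Flatten the whole batch into a 1-D tensor
        output = torch.flatten(imgs)
        print(output.shape)
        output = tudui(output)
        print(output.shape)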

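Result:

With torch.flatten: torch.Size([64, 3, 32, 32]) --> torch.Size([196608]) --> torch.Size([10]). With the commented-out torch.reshape variant: torch.Size([64, 3, 32, 32]) --> torch.Size([1, 1, 1, 196608]) --> torch.Size([1, 1, 1, 10]).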
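
Flattening the whole batch, as above, merges all 64 images into one vector. As a point of comparison, here is a sketch of the more common per-image flatten using `start_dim=1`. It uses a random tensor as a stand-in for a CIFAR10 batch, and the names `flat_all`, `flat_per_image`, `linear_all` and `linear_per_image` are illustrative only.

```python
import torch
from torch import nn

# Random stand-in for one CIFAR10 batch: 64 RGB images of 32x32 pixels.
imgs = torch.rand(64, 3, 32, 32)

flat_all = torch.flatten(imgs)                     # merges the batch dimension too
flat_per_image = torch.flatten(imgs, start_dim=1)  # keeps one row per image

print(flat_all.shape)        # torch.Size([196608])
print(flat_per_image.shape)  # torch.Size([64, 3072])

linear_all = nn.Linear(196608, 10)     # one prediction vector for the whole batch
linear_per_image = nn.Linear(3072, 10) # one prediction vector per image

out_all = linear_all(flat_all)
out_per_image = linear_per_image(flat_per_image)
print(out_all.shape, out_per_image.shape)
```

The per-image variant does not depend on the batch size, so it also works on a final, smaller batch.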

10. Neural networks: fully connected layers and Sequential

PyTorch official documentation: Conv2d

***CIFAR10 model structure***

    import torch
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.utils.tensorboard import SummaryWriter


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                # in_channels=3, out_channels=32, kernel_size=5;
                # padding must be computed from the output-size formula
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                # Flatten: 64 channels * 4 * 4 = 1024 features per image
                Flatten(),
                # Fully connected layers
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    tudui = Tudui()
    print(tudui)

    # Check the network with a dummy batch of 64 images
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)

    # Write the computation graph to TensorBoard
    writer = SummaryWriter("logs_seq")
    writer.add_graph(tudui, input)
    writer.close()

Result:

Visualization:
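
The comment in the model says that padding has to be worked out from a formula. The sketch below applies the output-size formula from the PyTorch `Conv2d` documentation, `H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)`, to show why `padding=2` keeps a 32x32 input at 32x32 and why the first `Linear` layer takes 1024 features. The helper `conv2d_out_size` is a hypothetical name of my own, not a PyTorch function.

```python
import torch
from torch import nn


def conv2d_out_size(size, kernel_size, padding=0, stride=1, dilation=1):
    """Output height/width of Conv2d for a square input (formula from the docs)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


same_size = conv2d_out_size(32, kernel_size=5, padding=2)  # 32: size is preserved
no_pad = conv2d_out_size(32, kernel_size=5)                # 28: shrinks without padding

# Follow the spatial size through the three Conv2d + MaxPool2d(2) stages.
size = 32
for _ in range(3):
    size = conv2d_out_size(size, kernel_size=5, padding=2)  # convolution keeps the size
    size = size // 2                                        # MaxPool2d(2) halves it
flat_features = 64 * size * size  # 64 channels * 4 * 4

# Cross-check the formula against a real layer.
conv = nn.Conv2d(3, 32, 5, padding=2)
out = conv(torch.ones(1, 3, 32, 32))
print(same_size, no_pad, flat_features, out.shape)
```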

11. Loss functions and backpropagation

L1Loss & MSELoss & CrossEntropyLoss

    import torch
    from torch.nn import L1Loss
    from torch import nn

    inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
    targets = torch.tensor([1, 2, 5], dtype=torch.float32)

    inputs = torch.reshape(inputs, (1, 1, 1, 3))
    targets = torch.reshape(targets, (1, 1, 1, 3))

    # L1 loss; reduction='sum' adds up the absolute differences
    loss = L1Loss(reduction='sum')
    result = loss(inputs, targets)

    # Mean squared error
    loss_mse = nn.MSELoss()
    result_mse = loss_mse(inputs, targets)

    print(result)
    print(result_mse)

    # Cross-entropy: x holds the raw scores for 3 classes, y is the target class index
    x = torch.tensor([0.1, 0.2, 0.3])
    y = torch.tensor([1])
    x = torch.reshape(x, (1, 3))
    loss_cross = nn.CrossEntropyLoss()
    result_cross = loss_cross(x, y)
    print(result_cross)

Result:
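
To make these three numbers less opaque, the following sketch recomputes each loss by hand and compares it with the built-in module. The formulas follow the PyTorch documentation; the variable names (`manual_l1`, `manual_mse`, `manual_ce`) are my own.

```python
import torch
from torch import nn

inputs = torch.tensor([1.0, 2.0, 3.0])
targets = torch.tensor([1.0, 2.0, 5.0])

# L1 with reduction='sum': |1-1| + |2-2| + |3-5| = 2
manual_l1 = (inputs - targets).abs().sum()
# MSE (default mean reduction): (0^2 + 0^2 + 2^2) / 3 = 4/3
manual_mse = ((inputs - targets) ** 2).mean()

# Cross-entropy for one sample with target class 1:
# loss = -x[class] + log(sum_j exp(x[j]))
logits = torch.tensor([[0.1, 0.2, 0.3]])
label = torch.tensor([1])
manual_ce = -logits[0, label[0]] + torch.log(torch.exp(logits[0]).sum())

print(manual_l1, nn.L1Loss(reduction="sum")(inputs, targets))
print(manual_mse, nn.MSELoss()(inputs, targets))
print(manual_ce, nn.CrossEntropyLoss()(logits, label))
```

Note that `CrossEntropyLoss` expects raw scores (logits), not probabilities, because it applies the log-softmax itself.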

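A loss function serves two purposes: (1) it measures the gap between the actual output and the target; (2) it provides the basis for updating the model's parameters so that the output improves (backpropagation).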

    import torchvision
    from torch import nn
    from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=1)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    loss = nn.CrossEntropyLoss()
    tudui = Tudui()
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        # print(outputs)
        # print(targets)
        result_loss = loss(outputs, targets)
        print(result_loss)

Printed outputs and targets:

result_loss:
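
The loop above only computes the loss. The backpropagation step mentioned earlier is a call to `result_loss.backward()`, which fills in the `.grad` attribute of every parameter. Here is a minimal sketch, assuming a tiny stand-in model and a random image in place of the CIFAR10 network and data.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Tiny stand-in for the CIFAR10 network: flatten, then one linear layer.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
loss_fn = nn.CrossEntropyLoss()

imgs = torch.rand(1, 3, 32, 32)  # random stand-in for one image
targets = torch.tensor([3])      # arbitrary class index, for illustration only

weight = model[1].weight
grad_before = weight.grad        # None: no gradient has been computed yet

result_loss = loss_fn(model(imgs), targets)
result_loss.backward()           # backpropagation: populates .grad on each parameter

print(grad_before is None, weight.grad.shape)
```

These gradients are what an optimizer reads when it updates the parameters.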

12. Optimizers

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
    from torch.optim.lr_scheduler import StepLR
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=1)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    loss = nn.CrossEntropyLoss()
    tudui = Tudui()
    # Optimizer: stochastic gradient descent over all model parameters
    optim = torch.optim.SGD(tudui.parameters(), lr=0.01)
    for epoch in range(20):
        running_loss = 0.0
        for data in dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            result_loss = loss(outputs, targets)
            # Reset the gradients left over from the previous step to zero
            optim.zero_grad()
            # Backpropagate to compute a gradient for every parameter
            result_loss.backward()
            # Update the parameters using those gradients
            optim.step()
            # .item() accumulates a plain float instead of a tensor tied to the graph
            running_loss = running_loss + result_loss.item()
        # Total loss over the whole dataset for this epoch
        print(running_loss)
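
Training on CIFAR10 for 20 epochs takes a while, so here is a small self-contained sketch of the same `zero_grad()` / `backward()` / `step()` pattern on synthetic data. The task (fitting y = 2x + 1 with a single linear layer) and the learning rate are assumptions chosen for illustration, not part of the tutorial.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Synthetic data for the line y = 2x + 1 (illustrative only).
x = torch.linspace(-1, 1, 32).unsqueeze(1)
y = 2 * x + 1

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optim = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(50):
    loss = loss_fn(model(x), y)
    optim.zero_grad()   # clear gradients from the previous iteration
    loss.backward()     # compute fresh gradients
    optim.step()        # move the parameters against the gradient
    losses.append(loss.item())

print(losses[0], losses[-1])
```

The loss recorded at the last step should be well below the first one. The per-epoch `running_loss` in the CIFAR10 loop is meant to show the same trend.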

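13. Using and modifying existing network models

VGG16 outputs 1000 classes.

VGG was trained on the ImageNet dataset, but that dataset is too large to use here. We use CIFAR10 instead, so the VGG network structure has to be modified to output 10 classes.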

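    import torchvision
    from torch import nn

    # The ImageNet dataset is too large
    # train_data = torchvision.datasets.ImageNet("../data_image_net", split='train', download=True,
    #                                            transform=torchvision.transforms.ToTensor())

    # pretrained=False: randomly initialized parameters, not trained on any dataset
    # pretrained=True: parameters trained on ImageNet (downloaded on first use)
    # Note: newer torchvision releases deprecate `pretrained` in favor of the `weights` argument.
    vgg16_false = torchvision.models.vgg16(pretrained=False)
    vgg16_true = torchvision.models.vgg16(pretrained=True)
    # print(vgg16_true)

    train_data = torchvision.datasets.CIFAR10('dataset', train=True, transform=torchvision.transforms.ToTensor(),
                                              download=True)

    # Method 1: extend the model by appending a 1000 -> 10 linear layer to the classifier
    vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))
    # print(vgg16_true)

    # Method 2: replace the last classifier layer so it outputs 10 classes directly
    print(vgg16_false)
    vgg16_false.classifier[6] = nn.Linear(4096, 10)
    print(vgg16_false)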

Method 1: extend the network model

Method 2: change the output directly to 10 classes
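
Building VGG16 downloads or allocates a large model, so the sketch below shows the two methods on a hypothetical miniature classifier. It has the same seven-layer layout as `vgg16.classifier`, with the final layer at index 6, but tiny hidden widths (16 in place of 25088 and 4096). `make_classifier` is a helper name I made up.

```python
import torch
from torch import nn


def make_classifier():
    # Hypothetical stand-in for vgg16.classifier: same layer order, tiny widths.
    return nn.Sequential(
        nn.Linear(16, 16), nn.ReLU(), nn.Dropout(),
        nn.Linear(16, 16), nn.ReLU(), nn.Dropout(),
        nn.Linear(16, 1000),  # index 6: the 1000-class output layer
    )


features = torch.ones(4, 16)  # dummy batch of 4 feature vectors

# Method 1: append a new layer that maps the 1000 outputs to 10 classes.
appended = make_classifier()
appended.add_module("add_linear", nn.Linear(1000, 10))

# Method 2: replace the layer at index 6 so it emits 10 classes directly.
replaced = make_classifier()
replaced[6] = nn.Linear(16, 10)

print(len(appended), appended(features).shape)
print(len(replaced), replaced(features).shape)
```

Method 1 keeps the original 1000-way layer and adds an eighth module, which matters when that layer's pretrained weights should be kept. Method 2 keeps the module count at seven but discards the original output layer.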

14. Saving and loading network models

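    # model_save.py
    import torch
    import torchvision
    from torch import nn

    vgg16 = torchvision.models.vgg16(pretrained=False)

    # Save method 1: model structure + model parameters
    torch.save(vgg16, "vgg16_method1.pth")

    # Save method 2: parameters only (officially recommended);
    # the state of vgg16 is saved as a dictionary
    torch.save(vgg16.state_dict(), "vgg16_method2.pth")


    # Pitfall: saving a custom model with method 1
    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

        def forward(self, x):
            x = self.conv1(x)
            return x


    tudui = Tudui()
    torch.save(tudui, "tudui_method1.pth")


    # model_load.py
    import torch
    import torchvision
    from torch import nn
    # Makes the Tudui class definition available here (see the pitfall below)
    from model_save import *

    # Load method 1: for a file written with save method 1
    # Note: on recent PyTorch versions where torch.load defaults to weights_only=True,
    # loading a whole pickled model requires passing weights_only=False.
    model = torch.load("vgg16_method1.pth")
    # print(model)

    # Load method 2: rebuild the structure, then load the saved parameters into it
    vgg16 = torchvision.models.vgg16(pretrained=False)
    vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
    # model = torch.load("vgg16_method2.pth")
    # print(model)

    # Pitfall 1
    # class Tudui(nn.Module):
    #     def __init__(self):
    #         super(Tudui, self).__init__()
    #         self.conv1 = nn.Conv2d(3, 64, kernel_size=3)
    #
    #     def forward(self, x):
    #         x = self.conv1(x)
    #         return x

    # With method 1 the program must be able to access the custom model's class
    # definition (defined or imported in this file); otherwise torch.load raises an error
    model = torch.load('tudui_method1.pth')
    print(model)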

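Save method 1: model structure + model parameters

Save method 2: parameters only (officially recommended); the state of vgg16 is saved as a dictionary

Custom network model: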
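
For a custom model, save method 2 avoids the pickling pitfall, because only a dictionary of tensors is written and the class is instantiated explicitly on load. Here is a minimal round-trip sketch, assuming a temporary directory and the file name `tudui_method2.pth` (both are my choices, not the tutorial's).

```python
import os
import tempfile

import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

    def forward(self, x):
        return self.conv1(x)


model = Tudui()

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "tudui_method2.pth")  # illustrative file name
    torch.save(model.state_dict(), path)               # parameters only

    restored = Tudui()                                 # rebuild the structure in code
    restored.load_state_dict(torch.load(path))         # then load the parameters into it

x = torch.ones(1, 3, 8, 8)
same_output = torch.allclose(model(x), restored(x))
print(list(model.state_dict().keys()), same_output)
```

The loading script still needs the `Tudui` class, but it is used through an ordinary constructor call rather than being looked up implicitly during unpickling.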
