Production Best Practices 生产最佳实例

- 前言

- Introduction 导言

- Setting up your organization 设置您的组织

- Managing billing limits 管理计费限额

- API keys API密钥

- Staging accounts 演示账户

- Building your prototype 构建您的原型

- Additional tips 其它技巧

- Techniques for improving reliability around prompts 用于提高提示周围的可靠性的技术

- Evaluation and iteration 评估和迭代

- Evaluating language models 评估语言模型

- Automated evaluations 自动化评价

- Example procedure for evaluating a GPT-3-based system 用于评估基于GPT-3的系统的示例程序

- Scaling your solution architecture 扩展您的解决方案架构

- Managing rate limits 管理速率限制

- Improving latencies 改善延迟

- Common factors affecting latency and possible mitigation techniques 影响延迟的常见因素和可能的缓解技术

- Model 模型

- Number of completion tokens 完成令牌数

- Streaming 串流

- Infrastructure 基础设施

- Batching 批处理

- Managing costs 管理成本

- Text generation 文本生成

- MLOps strategy 机器学习操作策略

- Security and compliance 安全性和合规性

- Safety best practices 安全最佳实践

- 其它资料下载

前言

作为高级开发工程师,如果你需要开发一个使用ChatGPT的应用程序并部署到生产环境上,那么在此之前,你需要提前考虑完善各项工作。比如如何做好相应的成本控制、并发性能监控,如何持续评估和迭代机器学习模型,以及数据安全性和合规性等方面。

值得一提的是,OpenAI关于ChatGPT的生产最佳实践官方指南覆盖了以上所有内容。相信这一最佳实践指南能够帮助我们从0到1打造出一个高水平的产品。

Introduction 导言

This guide provides a comprehensive set of best practices to help you transition from prototype to production. Whether you are a seasoned machine learning engineer or a recent enthusiast, this guide should provide you with the tools you need to successfully put the platform to work in a production setting: from securing access to our API to designing a robust architecture that can handle high traffic volumes. Use this guide to help develop a plan for deploying your application as smoothly and effectively as possible.

本指南提供了一套全面的最佳实例,可帮助您从原型过渡到生产。无论您是经验丰富的机器学习工程师还是最近的爱好者,本指南都应该为您提供成功将平台投入生产环境所需的工具:从保护对我们API的访问到设计一个可以处理高流量的强大架构。使用本指南可以帮助您制定尽可能平稳有效地部署应用程序的计划。

Setting up your organization 设置您的组织

Once you log in to your OpenAI account, you can find your organization name and ID in your organization settings. The organization name is the label for your organization, shown in user interfaces. The organization ID is the unique identifier for your organization which can be used in API requests.

登录OpenAI帐户后,您可以在组织设置中找到您的组织名称和ID。组织名称是组织的标签,显示在用户界面中。组织ID是您的组织的唯一标识符,可用于API请求。

Users who belong to multiple organizations can pass a header to specify which organization is used for an API request. Usage from these API requests will count against the specified organization’s quota. If no header is provided, the default organization will be billed. You can change your default organization in your user settings.

属于多个组织的用户可以传递一个标头,以指定哪个组织用于API请求。这些API请求的使用量将计入指定组织的配额。如果未提供标题,则将对默认组织开单。您可以在用户设置中更改默认组织。

You can invite new members to your organization from the members settings page. Members can be readers or owners. Readers can make API requests and view basic organization information, while owners can modify billing information and manage members within an organization.

您可以从成员设置页面邀请新成员加入组织。成员可以是读者或所有者。读者可以发出API请求并查看基本组织信息,而所有者可以修改计费信息并管理组织内的成员。

Managing billing limits 管理计费限额

New free trial users receive an initial credit of $5 that expires after three months. Once the credit has been used or expires, you can choose to enter billing information to continue your use of the API. If no billing information is entered, you will still have login access but will be unable to make any further API requests.

新的免费试用用户将获得5美元的初始信用,三个月后到期。信用额度用完或到期后,您可以选择输入账单信息以继续使用API。如果未输入任何计费信息,您仍将具有登录访问权限,但将无法进行任何进一步的API请求。

Once you’ve entered your billing information, you will have an approved usage limit of $120 per month, which is set by OpenAI. To increase your quota beyond the $120 monthly billing limit, please submit a quota increase request.

一旦您输入了账单信息,您将获得每月120美元的批准使用限额,这是由OpenAI设置的。要将您的配额增加到超过每月120美元的账单限额,请提交配额增加请求。

If you’d like to be notified when your usage exceeds a certain amount, you can set a soft limit through the usage limits page. When the soft limit is reached, the owners of the organization will receive an email notification. You can also set a hard limit so that, once the hard limit is reached, any subsequent API requests will be rejected. Note that these limits are best effort, and there may be 5 to 10 minutes of delay between the usage and the limits being enforced.

如果您希望在使用量超过一定数量时收到通知,您可以通过使用限制页面设置软限制。当达到软限制时,组织的所有者将收到电子邮件通知。您还可以设置硬限制,以便一旦达到硬限制,将拒绝任何后续API请求。请注意,这些限制是尽力而为的,在使用和强制执行的限制之间可能有5到10分钟的延迟。

API keys API密钥

The OpenAI API uses API keys for authentication. Visit your API keys page to retrieve the API key you’ll use in your requests.

OpenAI API使用API密钥进行身份验证。访问您的API密钥页面以检索您将在请求中使用的API密钥。

This is a relatively straightforward way to control access, but you must be vigilant about securing these keys. Avoid exposing the API keys in your code or in public repositories; instead, store them in a secure location. You should expose your keys to your application using environment variables or secret management service, so that you don’t need to hard-code them in your codebase. Read more in our Best practices for API key safety.

这是控制访问的一种相对简单的方法,但您必须对保护这些密钥保持警惕。避免在代码或公共存储库中暴露API密钥;而是将它们存储在安全位置。您应该使用环境变量或秘密管理服务将密钥公开给应用程序,这样就不需要在代码库中硬编码它们。请阅读我们的API密钥安全最佳实践。

Staging accounts 演示账户

As you scale, you may want to create separate organizations for your staging and production environments. Please note that you can sign up using two separate email addresses like bob+prod@widgetcorp.com and bob+dev@widgetcorp.com to create two organizations. This will allow you to isolate your development and testing work so you don’t accidentally disrupt your live application. You can also limit access to your production organization this way.

随着扩展,您可能希望为临时环境和生产环境创建单独的组织。请注意,您可以使用两个单独的电子邮件地址(如bob+prod@widgetcorp.com和bob+dev@widgetcorp.com)注册,以创建两个组织。这将允许您隔离开发和测试工作,这样您就不会意外地中断活动应用程序。您还可以通过这种方式限制对生产组织的访问。

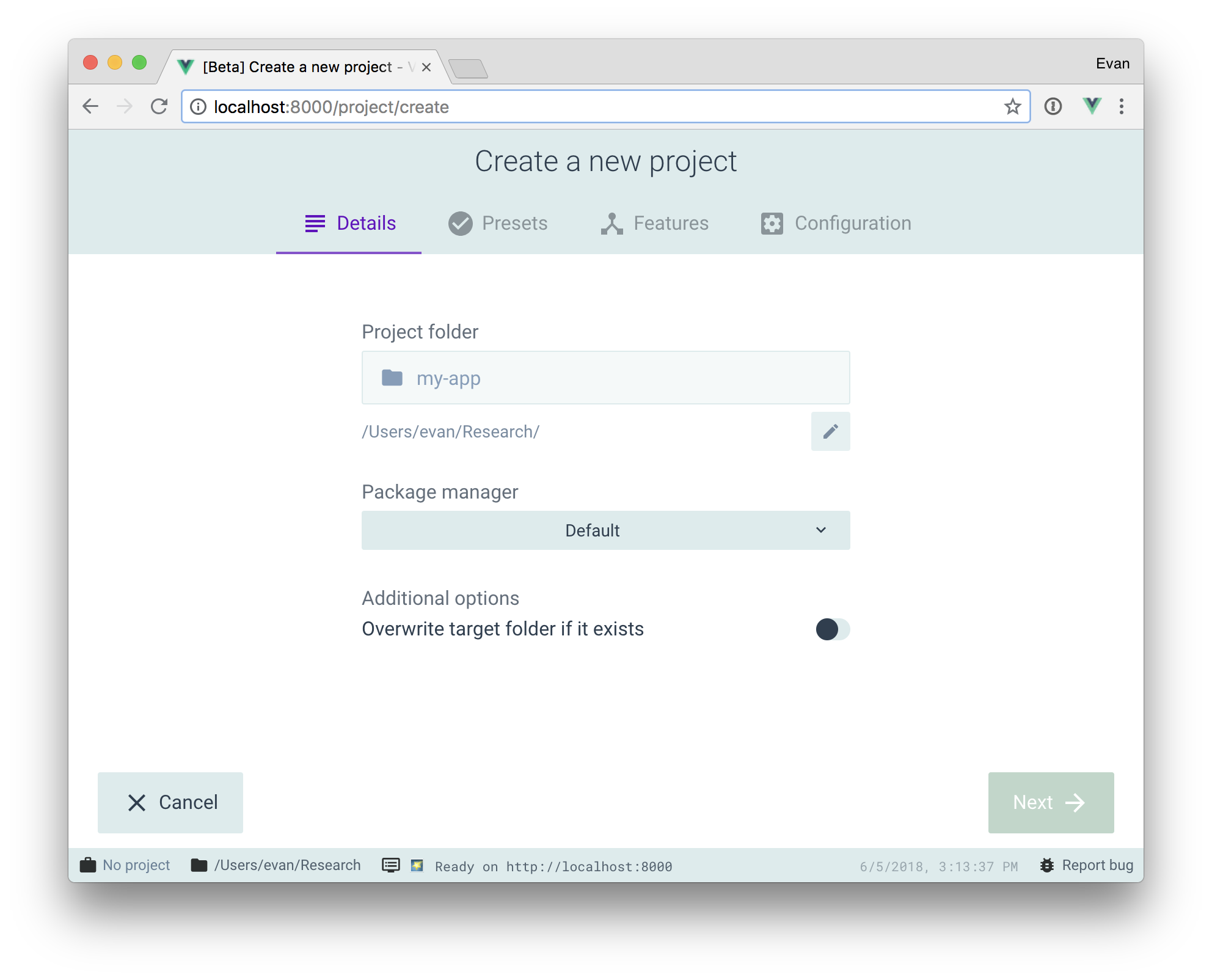

Building your prototype 构建您的原型

If you haven’t gone through the quickstart guide, we recommend you start there before diving into the rest of this guide.

如果您还没有浏览过快速入门指南,我们建议您在深入阅读本指南的其余部分之前先从快速入门指南开始。

For those new to the OpenAI API, our playground can be a great resource for exploring its capabilities. Doing so will help you learn what’s possible and where you may want to focus your efforts. You can also explore our example prompts.

对于那些OpenAI API的新手来说,我们的游乐场可以成为探索其功能的绝佳资源。这样做可以帮助你了解什么是可能的,以及你可能想把精力集中在哪里。您也可以浏览我们的示例提示。

While the playground is a great place to prototype, it can also be used as an incubation area for larger projects. The playground also makes it easy to export code snippets for API requests and share prompts with collaborators, making it an integral part of your development process.

虽然游乐场是一个很好的原型制作场所,但它也可以用作大型项目的孵化区。游乐场还可以轻松导出API请求的代码段,并与协作者共享提示,使其成为开发过程中不可或缺的一部分。

Additional tips 其它技巧

- Start by determining the core functionalities you want your application to have. Consider the types of data inputs, outputs, and processes you will need. Aim to keep the prototype as focused as possible, so that you can iterate quickly and efficiently.

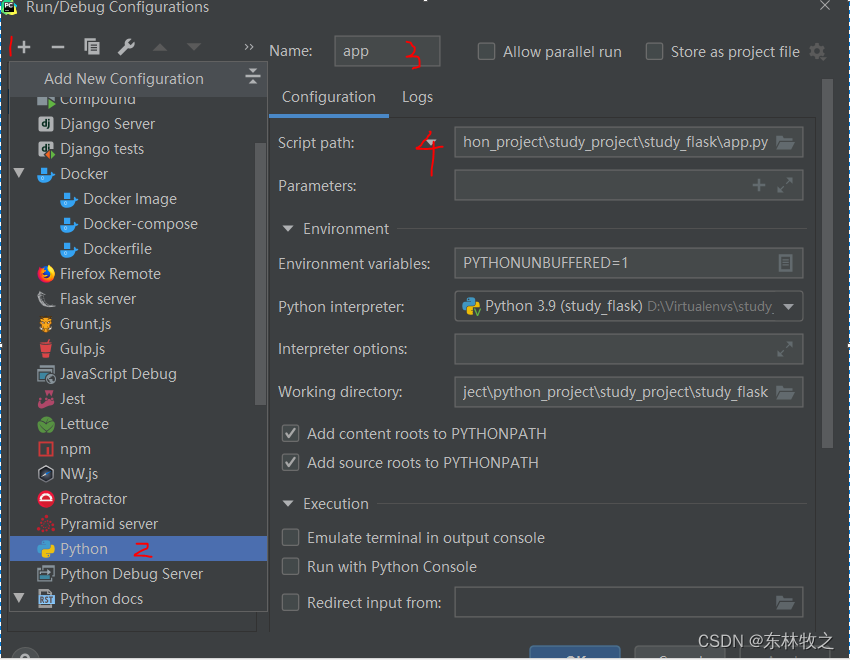

首先确定您希望应用程序具有的核心功能。考虑您将需要的数据输入、输出和处理的类型。目标是尽可能地保持原型的重点,以便您可以快速有效地迭代。 - Choose the programming language and framework that you feel most comfortable with and that best aligns with your goals for the project. Some popular options include Python, Java, and Node.js. See library support page to learn more about the library bindings maintained both by our team and by the broader developer community.

选择你觉得最舒服的编程语言和框架,并且最符合你的项目目标。一些流行的选项包括Python,Java和Node.js。请参阅库支持页面,了解有关我们团队和更广泛的开发人员社区维护的库绑定的更多信息。 - Development environment and support: Set up your development environment with the right tools and libraries and ensure you have the resources you need to train your model. Leverage our documentation, community forum and our help center to get help with troubleshooting. If you are developing using Python, take a look at this structuring your project guide (repository structure is a crucial part of your project’s architecture). In order to connect with our support engineers, simply log in to your account and use the “Help” button to start a conversation.

开发环境及支持:使用正确的工具和库设置您的开发环境,并确保您拥有训练模型所需的资源。利用我们的文档、社区论坛和帮助中心获取故障排除帮助。如果您正在使用Python进行开发,请查看此项目结构指南(存储库结构是项目架构的关键部分)。要与我们的支持工程师联系,只需登录您的帐户并使用“帮助”按钮开始对话。

Techniques for improving reliability around prompts 用于提高提示周围的可靠性的技术

Even with careful planning, it’s important to be prepared for unexpected issues when using GPT-3 in your application. In some cases, the model may fail on a task, so it’s helpful to consider what you can do to improve the reliability of your application.

即使经过仔细的规划,在应用程序中使用GPT-3时,为意外问题做好准备也很重要。在某些情况下,模型可能会在某个任务上失败,因此考虑如何提高应用程序的可靠性是很有帮助的。

If your task involves logical reasoning or complexity, you may need to take additional steps to build more reliable prompts. For some helpful suggestions, consult our Techniques to improve reliability guide. Overall the recommendations revolve around:

如果您的任务涉及逻辑推理或复杂性,则可能需要采取其他步骤来构建更可靠的提示。有关一些有用的建议,请参阅我们的提高可靠性技术指南。总的来说,这些建议围绕着:

- Decomposing unreliable operations into smaller, more reliable operations (e.g., selection-inference prompting)

将不可靠的操作分解成更小的、更可靠的操作(例如, 选择推理提示) - Using multiple steps or multiple relationships to make the system’s reliability greater than any individual component (e.g., maieutic prompting)

使用多个步骤或多个关系来使系统的可靠性大于任何单个组件(例如, 医疗提示)

Evaluation and iteration 评估和迭代

One of the most important aspects of developing a system for production is regular evaluation and iterative experimentation. This process allows you to measure performance, troubleshoot issues, and fine-tune your models to improve accuracy and efficiency. A key part of this process is creating an evaluation dataset for your functionality. Here are a few things to keep in mind:

开发用于生产的系统的最重要方面之一是定期评估和迭代实验。此过程允许您测量性能、解决问题并微调模型以提高准确性和效率。此过程的关键部分是为您的功能创建评估数据集。以下是需要牢记的几点:

- Make sure your evaluation set is representative of the data your model will be used on in the real world. This will allow you to assess your model’s performance on data it hasn’t seen before and help you understand how well it generalizes to new situations.

请确保评估集代表真实的世界中将使用模型的数据。这将允许您评估模型在以前没有见过的数据上的性能,并帮助您了解它对新情况的泛化能力。 - Regularly update your evaluation set to ensure that it stays relevant as your model evolves and as new data becomes available.

定期更新您的评估集,以确保它随着模型的发展和新数据的可用而保持相关性。 - Use a variety of metrics to evaluate your model’s performance. Depending on your application and business outcomes, this could include accuracy, precision, recall, F1 score, or mean average precision (MAP). Additionally, you can sync your fine-tunes with Weights & Biases to track experiments, models, and datasets.

使用各种指标来评估模型的性能。根据您的应用程序和业务成果,这可能包括准确度、精确度、召回率、F1分数或平均精度(MAP)。此外,您还可以使用权重和偏差同步微调,以跟踪实验、模型和数据集。 - Compare your model’s performance against baseline. This will give you a better understanding of your model’s strengths and weaknesses and can help guide your future development efforts.

将模型的性能与基线进行比较。这将给予您更好地了解模型的优点和缺点,并有助于指导您未来的开发工作。

By conducting regular evaluation and iterative experimentation, you can ensure that your GPT-powered application or prototype continues to improve over time.

通过进行定期评估和迭代实验,您可以确保GPT驱动的应用程序或原型随着时间的推移不断改进。

Evaluating language models 评估语言模型

Language models can be difficult to evaluate because evaluating the quality of generated language is often subjective, and there are many different ways to communicate the same message correctly in language. For example, when evaluating a model on the ability to summarize a long passage of text, there are many correct summaries. That being said, designing good evaluations is critical to making progress in machine learning.

语言模型可能很难评估,因为评估生成的语言的质量通常是主观的,并且有许多不同的方法可以用语言正确地传达相同的消息。例如,当评估一个模型总结一长段文本的能力时,有许多正确的总结。话虽如此,设计良好的评估对于机器学习取得进展至关重要。

An eval suite needs to be comprehensive, easy to run, and reasonably fast (depending on model size). It also needs to be easy to continue to add to the suite as what is comprehensive one month will likely be out of date in another month. We should prioritize having a diversity of tasks and tasks that identify weaknesses in the models or capabilities that are not improving with scaling.

一个eval套件需要全面、易于运行,并且相当快(取决于模型大小)。它还需要很容易继续添加到套件中,因为一个月的全面内容可能在另一个月就过时了。我们应该优先考虑任务的多样性,这些任务可以识别模型中的弱点或无法随着扩展而改进的功能。

The simplest way to evaluate your system is to manually inspect its outputs. Is it doing what you want? Are the outputs high quality? Are they consistent?

评估系统的最简单方法是手动检查其输出。它在做你想做的事吗?产出是否高质量?它们是一致的吗?

Automated evaluations 自动化评价

The best way to test faster is to develop automated evaluations. However, this may not be possible in more subjective applications like summarization tasks.

加快测试速度的最佳方法是开发自动评估。然而,这在更主观的应用(如摘要任务)中可能是不可能的。

Automated evaluations work best when it’s easy to grade a final output as correct or incorrect. For example, if you’re fine-tuning a classifier to classify text strings as class A or class B, it’s fairly simple: create a test set with example input and output pairs, run your system on the inputs, and then grade the system outputs versus the correct outputs (looking at metrics like accuracy, F1 score, cross-entropy, etc.).

当很容易将最终输出分为正确或不正确时,自动评估工作最好。例如,如果你正在微调一个分类器,将文本字符串分类为A类或B类,这相当简单:使用示例输入和输出对创建一个测试集,在输入上运行系统,然后将系统输出与正确的输出进行比较(查看准确性,F1得分,交叉熵等指标)。

If your outputs are semi open-ended, as they might be for a meeting notes summarizer, it can be trickier to define success: for example, what makes one summary better than another? Here, possible techniques include:

如果您的输出是半开放式的,就像会议记录摘要器一样,那么定义成功可能会更棘手:例如,是什么让一个总结比另一个更好?这里,可能的技术包括:

- Writing a test with ‘gold standard’ answers and then measuring some sort of similarity score between each gold standard answer and the system output (we’ve seen embeddings work decently well for this)

用“黄金标准”答案编写一个测试,然后测量每个黄金标准答案和系统输出之间的某种相似性分数(我们已经看到嵌入在这方面工作得很好) - Building a discriminator system to judge / rank outputs, and then giving that discriminator a set of outputs where one is generated by the system under test (this can even be GPT model that is asked whether the question is answered correctly by a given output)

构建一个鉴别器系统来判断/排序输出,然后给该鉴别器一组输出,其中一个输出由被测系统生成(这甚至可以是GPT模型,该模型被问及给定输出是否正确回答了问题) - Building an evaluation model that checks for the truth of components of the answer; e.g., detecting whether a quote actually appears in the piece of given text

建立一个评估模型,检查答案组成部分的真实性;例如,检测引用是否实际上出现在给定文本的片段中

For very open-ended tasks, such as a creative story writer, automated evaluation is more difficult. Although it might be possible to develop quality metrics that look at spelling errors, word diversity, and readability scores, these metrics don’t really capture the creative quality of a piece of writing. In cases where no good automated metric can be found, human evaluations remain the best method.

对于非常开放式的任务,例如创造性的故事作者,自动评估就比较困难。尽管可以开发出质量指标来衡量拼写错误、单词多样性和可读性得分,但这些指标并不能真正反映一篇文章的创造性质量。在无法找到良好的自动化指标的情况下,人工评估仍然是最佳方法。

Example procedure for evaluating a GPT-3-based system 用于评估基于GPT-3的系统的示例程序

As an example, let’s consider the case of building a retrieval-based Q&A system.

作为一个例子,让我们考虑构建一个基于检索的问答系统的情况。

A retrieval-based Q&A system has two steps. First, a user’s query is used to rank potentially relevant documents in a knowledge base. Second, GPT-3 is given the top-ranking documents and asked to generate an answer to the query.

基于检索的Q&A系统有两个步骤。首先,使用用户的查询来对知识库中的潜在相关文档进行排名。第二,GPT-3被赋予最高排名的文档,并被要求生成查询的答案。

Evaluations can be made to measure the performance of each step.

可以进行评估以测量每个步骤的性能。

For the search step, one could:

对于搜索步骤,可以:

- First, generate a test set with ~100 questions and a set of correct documents for each

首先,生成一个包含约100个问题的测试集,并为每个问题生成一组正确的文档 - The questions can be sourced from user data if you have any; otherwise, you can invent a set of questions with diverse styles and difficulty.

问题可以来源于你有的任何用户数据;否则,你可以发明一套不同风格和难度的问题。 - For each question, have a person manually search through the knowledge base and record the set of documents that contain the answer.

对于每个问题,让一个人手动搜索知识库并记录包含答案的文档集。 - Second, use the test set to grade the system’s performance

其次,使用测试集对系统的性能进行分级 - For each question, use the system to rank the candidate documents (e.g., by cosine similarity of the document embeddings with the query embedding).

对于每个问题,使用系统对候选文档进行排名(例如,通过文档嵌入与查询嵌入的余弦相似性)。 - You can score the results with a binary accuracy score of 1 if the candidate documents contain at least 1 relevant document from the answer key and 0 otherwise

如果候选文档至少包含1个答案关键字的相关文档,则可以使用二进制准确性得分1对结果进行评分,否则为0 - You can also use a continuous metric like Mean Reciprocal Rank which can help distinguish between answers that were close to being right or far from being right (e.g., a score of 1 if the correct document is rank 1, a score of ½ if rank 2, a score of ⅓ if rank 3, etc.)

您还可以使用连续指标,如平均倒数排名,它可以帮助区分接近正确或远离正确的答案(例如,如果正确的文档是等级1,则得分为1,如果等级2,则得分为1/2,如果等级3,则得分为1/2,等等)。

For the question answering step, one could:

对于问题回答步骤,可以:

- First, generate a test set with ~100 sets of {question, relevant text, correct answer}

首先,生成一个包含约100组{问题,相关文本,正确答案}的测试集

-For the questions and relevant texts, use the above data

对于问题和相关文本,使用上述数据 - For the correct answers, have a person write down ~100 examples of what a great answer looks like.

对于正确的答案,让一个人写下100个例子,说明一个伟大的答案是什么样子的。

Second, use the test set to grade the system’s performance

其次,使用测试集对系统的性能进行分级

- For each question & text pair, combine them into a prompt and submit the prompt to GPT-3

对于每个问题和文本对,将它们组合成一个提示,并将提示提交给GPT-3 - Next, compare GPT-3’s answers to the gold-standard answer written by a human

接下来,将GPT-3的答案与人类写的黄金标准答案进行比较 - This comparison can be manual, where humans look at them side by side and grade whether the GPT-3 answer is correct/high quality

这种比较可以是手动的,人类将它们并排看,并对GPT-3答案是否正确/高质量进行评分 - This comparison can also be automated, by using embedding similarity scores or another method (automated methods will likely be noisy, but noise is ok as long as it’s unbiased and equally noisy across different types of models that you’re testing against one another)

这种比较也可以通过使用嵌入相似性得分或其他方法来自动化(自动化方法可能会有噪声,但噪声是可以的,只要它是无偏差的,并且在不同类型的模型之间具有相同的噪声)

Of course,N=100is just an example, and in early stages, you might start with a smaller set that’s easier to generate, and in later stages, you might invest in a larger set that’s more costly but more statistically reliable.

当然,N=100只是一个例子,在早期阶段,你可能会从一个更容易生成的较小集合开始,在后期阶段,你可能会投资一个更大的集合,成本更高,但在统计上更可靠。

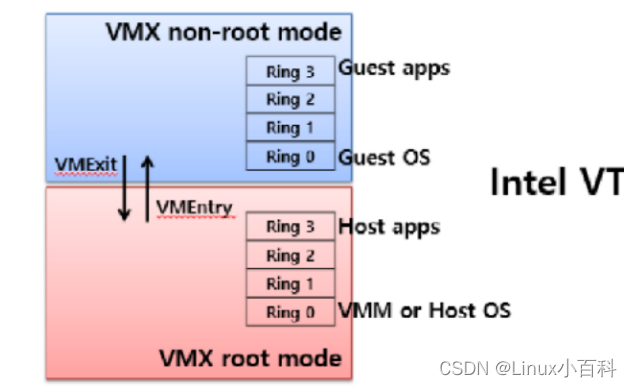

Scaling your solution architecture 扩展您的解决方案架构

When designing your application or service for production that uses our API, it’s important to consider how you will scale to meet traffic demands. There are a few key areas you will need to consider regardless of the cloud service provider of your choice:

在设计使用我们的API的生产应用或服务时,重要的是要考虑如何扩展以满足流量需求。无论您选择哪种云服务提供商,都需要考虑以下几个关键领域:

- Horizontal scaling: You may want to scale your application out horizontally to accommodate requests to your application that come from multiple sources. This could involve deploying additional servers or containers to distribute the load. If you opt for this type of scaling, make sure that your architecture is designed to handle multiple nodes and that you have mechanisms in place to balance the load between them.

水平缩放:您可能希望横向扩展应用程序,以适应来自多个源的应用程序请求。这可能涉及部署额外的服务器或容器来分配负载。如果您选择这种类型的扩展,请确保您的架构设计为处理多个节点,并且您有适当的机制来平衡它们之间的负载。 - Vertical scaling: Another option is to scale your application up vertically, meaning you can beef up the resources available to a single node. This would involve upgrading your server’s capabilities to handle the additional load. If you opt for this type of scaling, make sure your application is designed to take advantage of these additional resources.

垂直缩放:另一种选择是垂直扩展应用程序,这意味着您可以增加单个节点的可用资源。这将涉及到升级服务器的功能以处理额外的负载。如果您选择这种类型的扩展,请确保您的应用程序被设计为利用这些额外的资源。 - Caching: By storing frequently accessed data, you can improve response times without needing to make repeated calls to our API. Your application will need to be designed to use cached data whenever possible and invalidate the cache when new information is added. There are a few different ways you could do this. For example, you could store data in a database, filesystem, or in-memory cache, depending on what makes the most sense for your application.

缓存:通过存储频繁访问的数据,您可以缩短响应时间,而无需重复调用我们的API。您的应用程序需要设计为尽可能使用缓存数据,并在添加新信息时该高速缓存无效。有几种不同的方法可以做到这一点。例如,您可以将数据存储在数据库、文件系统或内存缓存中,这取决于什么对您的应用程序最有意义。 - Load balancing: Finally, consider load-balancing techniques to ensure requests are distributed evenly across your available servers. This could involve using a load balancer in front of your servers or using DNS round-robin. Balancing the load will help improve performance and reduce bottlenecks.

负载均衡:最后,考虑负载平衡技术,以确保请求在可用服务器上均匀分布。这可能涉及在服务器前使用负载平衡器或使用DNS轮询。平衡负载将有助于提高性能和减少瓶颈。

Managing rate limits 管理速率限制

When using our API, it’s important to understand and plan for rate limits.

在使用我们的API时,了解和规划速率限制非常重要。

Improving latencies 改善延迟

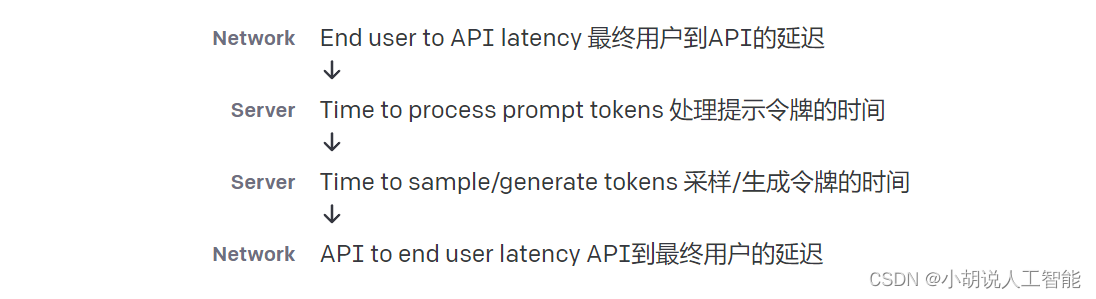

Latency is the time it takes for a request to be processed and a response to be returned. In this section, we will discuss some factors that influence the latency of our text generation models and provide suggestions on how to reduce it.

延迟是处理请求和返回响应所花费的时间。在本节中,我们将讨论影响文本生成模型延迟的一些因素,并提供有关如何减少延迟的建议。

The latency of a completion request is mostly influenced by two factors: the model and the number of tokens generated. The life cycle of a completion request looks like this:

完成请求的延迟主要受两个因素的影响:模型和生成的token的数量。完成请求的生命周期如下所示:

The bulk of the latency typically arises from the token generation step.

大部分延迟通常由token生成步骤引起。

Intuition: Prompt tokens add very little latency to completion calls. Time to generate completion tokens is much longer, as tokens are generated one at a time. Longer generation lengths will accumulate latency due to generation required for each token.

直觉:提示符token 几乎不会给完成调用增加延迟。生成完成token的时间要长得多,因为token是一次生成一个。更长的生成长度将由于每个令牌所需的生成而累积延迟。

Common factors affecting latency and possible mitigation techniques 影响延迟的常见因素和可能的缓解技术

Now that we have looked at the basics of latency, let’s take a look at various factors that can affect latency, broadly ordered from most impactful to least impactful.

现在我们已经了解了延迟的基本知识,让我们来看看可能影响延迟的各种因素,从最具影响力到最不具影响力大致排序。

Model 模型

Our API offers different models with varying levels of complexity and generality. The most capable models, such as gpt-4, can generate more complex and diverse completions, but they also take longer to process your query. Models such as gpt-3.5-turbo, can generate faster and cheaper chat completions, but they may generate results that are less accurate or relevant for your query. You can choose the model that best suits your use case and the trade-off between speed and quality.

我们的API提供不同的模型,具有不同的复杂性和通用性。功能最强大的模型(如 gpt-4 )可以生成更复杂和更多样化的补全,但它们也需要更长的时间来处理您的查询。 gpt-3.5-turbo 等模型可以生成更快、更便宜的聊天完成,但它们可能生成不太准确或与您的查询相关的结果。您可以选择最适合您的用例的模型,并在速度和质量之间进行权衡。

Number of completion tokens 完成令牌数

Requesting a large amount of generated tokens completions can lead to increased latencies:

请求大量生成的令牌完成可能会导致延迟增加:

- Lower max tokens: for requests with a similar token generation count, those that have a lower

max_tokensparameter incur less latency.

最大令牌数下限:对于具有类似令牌生成计数的请求,具有较低max_tokens参数的那些请求招致较少等待时间。 - Include stop sequences: to prevent generating unneeded tokens, add a stop sequence. For example, you can use stop sequences to generate a list with a specific number of items. In this case, by using

11.as a stop sequence, you can generate a list with only 10 items, since the completion will stop when11.is reached. Read our help article on stop sequences for more context on how you can do this.

包括终止序列:要防止生成不需要的令牌,请添加停止序列。例如,可以使用停止序列生成包含特定数量项的列表。在这种情况下,通过使用11.作为停止序列,您可以生成一个只有10个项目的列表,因为完成将在达到11.时停止。 请阅读我们关于停止序列的帮助文章,了解如何执行此操作的更多上下文。 - Generate fewer completions: lower the values of

nandbest_ofwhen possible where n refers to how many completions to generate for each prompt and best_of is used to represent the result with the highest log probability per token.

生成更少的完成:尽可能降低n和best_of的值,其中n是指为每个提示生成多少个完成,best_of用于表示每个令牌具有最高对数概率的结果。

If n and best_of both equal 1 (which is the default), the number of generated tokens will be at most, equal to max_tokens.

如果 n 和best_of 都等于1(这是默认值),则生成的令牌的数量将最多等于 max_tokens 。

If n (the number of completions returned) or best_of (the number of completions generated for consideration) are set to > 1, each request will create multiple outputs. Here, you can consider the number of generated tokens as [ max_tokens * max (n, best_of) ]

如果将 n (返回的完成数)或 best_of (生成的完成数)设置为 > 1 ,则每个请求将创建多个输出。在这里,您可以将生成的令牌数视为 [ max_tokens * max (n, best_of) ]

Streaming 串流

Setting stream: true in a request makes the model start returning tokens as soon as they are available, instead of waiting for the full sequence of tokens to be generated. It does not change the time to get all the tokens, but it reduces the time for first token for an application where we want to show partial progress or are going to stop generations. This can be a better user experience and a UX improvement so it’s worth experimenting with streaming.

在请求中设置 stream: true 会使模型在令牌可用时立即开始返回令牌,而不是等待生成完整的令牌序列。它不会改变获取所有令牌的时间,但它减少了我们想要显示部分进度或将要停止生成的应用程序的第一个令牌的时间。这可能是一个更好的用户体验和UX改进,所以值得尝试串流。

Infrastructure 基础设施

Our servers are currently located in the US. While we hope to have global redundancy in the future, in the meantime you could consider locating the relevant parts of your infrastructure in the US to minimize the roundtrip time between your servers and the OpenAI servers.

我们的服务器目前位于美国。虽然我们希望在未来实现全球冗余,但与此同时,您可以考虑将基础设施的相关部分放在美国,以最大限度地减少服务器和OpenAI服务器之间的往返时间。

Batching 批处理

Depending on your use case, batching may help. If you are sending multiple requests to the same endpoint, you can batch the prompts to be sent in the same request. This will reduce the number of requests you need to make. The prompt parameter can hold up to 20 unique prompts. We advise you to test out this method and see if it helps. In some cases, you may end up increasing the number of generated tokens which will slow the response time.

根据您的用例,批处理可能会有所帮助。如果要向同一端点发送多个请求,则可以批处理要在同一请求中发送的提示。这将减少您需要提出的请求的数量。prompt参数最多可以保存20个唯一提示。我们建议您测试一下这个方法,看看是否有帮助。在某些情况下,您最终可能会增加生成的令牌的数量,这将减慢响应时间。

Managing costs 管理成本

To monitor your costs, you can set a soft limit in your account to receive an email alert once you pass a certain usage threshold. You can also set a hard limit. Please be mindful of the potential for a hard limit to cause disruptions to your application/users. Use the usage tracking dashboard to monitor your token usage during the current and past billing cycles.

为了监控您的成本,您可以在帐户中设置软限制,以便在超过特定使用阈值时收到电子邮件提醒。您也可以设置一个硬限制。请注意硬限制可能会对您的应用程序/用户造成中断。使用使用情况跟踪仪表板监控当前和过去计费周期内的令牌使用情况。

Text generation 文本生成

One of the challenges of moving your prototype into production is budgeting for the costs associated with running your application. OpenAI offers a pay-as-you-go pricing model, with prices per 1,000 tokens (roughly equal to 750 words). To estimate your costs, you will need to project the token utilization. Consider factors such as traffic levels, the frequency with which users will interact with your application, and the amount of data you will be processing.

将原型投入生产的挑战之一是为运行应用程序的相关成本进行预算。OpenAI提供了一个按需付费的定价模型,每1,000个token(大约等于750个单词)的价格。要估计成本,您需要预测token利用率。考虑一些因素,如流量水平、用户与应用程序交互的频率以及您将处理的数据量。

One useful framework for thinking about reducing costs is to consider costs as a function of the number of tokens and the cost per token. There are two potential avenues for reducing costs using this framework. First, you could work to reduce the cost per token by switching to smaller models for some tasks in order to reduce costs. Alternatively, you could try to reduce the number of tokens required. There are a few ways you could do this, such as by using shorter prompts, fine-tuning models, or caching common user queries so that they don’t need to be processed repeatedly.

考虑降低成本的一个有用框架是将成本视为token数量和每个token成本的函数。有两个潜在的途径来降低使用该框架的成本。首先,您可以通过为某些任务切换到较小的模型来降低每个令牌的成本,以降低成本。或者,您可以尝试减少所需的令牌数量。有几种方法可以做到这一点,例如使用更短的提示,微调模型,或缓存常见的用户查询,以便它们不需要重复处理。

You can experiment with our interactive tokenizer tool to help you estimate costs. The API and playground also returns token counts as part of the response. Once you’ve got things working with our most capable model, you can see if the other models can produce the same results with lower latency and costs. Learn more in our token usage help article.

您可以尝试使用我们的交互式符分词工具来帮助您估算成本。API和playground还返回令牌计数作为响应的一部分。一旦你使用我们最强大的模型,你就可以看到其他模型是否可以以更低的延迟和成本产生相同的结果。在我们的token 使用帮助文章中了解更多信息。

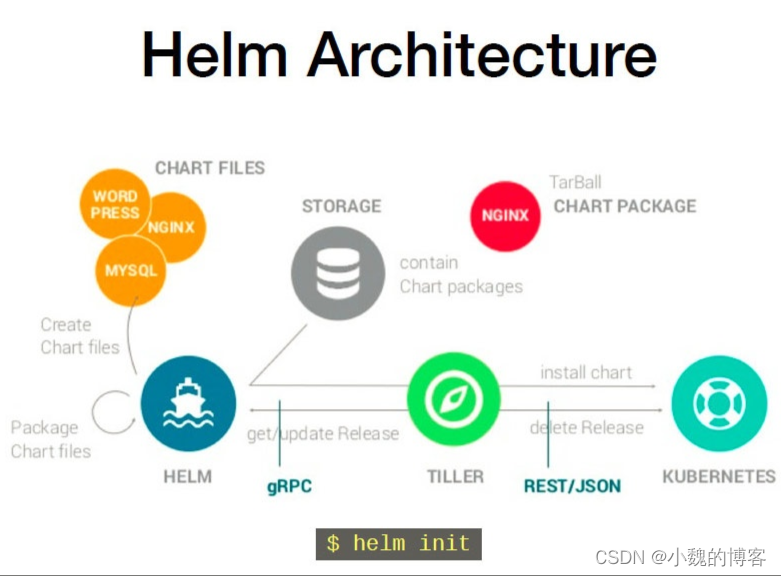

MLOps strategy 机器学习操作策略

As you move your prototype into production, you may want to consider developing an MLOps strategy. MLOps (machine learning operations) refers to the process of managing the end-to-end life cycle of your machine learning models, including any models you may be fine-tuning using our API. There are a number of areas to consider when designing your MLOps strategy. These include

当您将原型投入生产时,您可能需要考虑开发一个MLOps策略。MLOps(机器学习操作策略)是指管理机器学习模型的端到端生命周期的过程,包括您可能使用我们的API进行微调的任何模型。在设计MLOps策略时,有许多方面需要考虑。其中包括

- Data and model management: managing the data used to train or fine-tune your model and tracking versions and changes.

数据和模型管理:管理用于训练或微调模型的数据,并跟踪版本和更改。 - Model monitoring: tracking your model’s performance over time and detecting any potential issues or degradation.

模型监测:跟踪模型随时间推移的性能,并检测任何潜在问题或性能下降。 - Model retraining: ensuring your model stays up to date with changes in data or evolving requirements and retraining or fine-tuning it as needed.

模型再训练:确保您的模型保持与数据或不断变化的需求的变化同步,并根据需要对其进行重新训练或微调。 - Model deployment: automating the process of deploying your model and related artifacts into production.

模型部署:自动化将模型和相关工件部署到生产中的过程。

Thinking through these aspects of your application will help ensure your model stays relevant and performs well over time.

仔细考虑应用程序的这些方面将有助于确保您的模型保持相关性,并随着时间的推移表现良好。

Security and compliance 安全性和合规性

As you move your prototype into production, you will need to assess and address any security and compliance requirements that may apply to your application. This will involve examining the data you are handling, understanding how our API processes data, and determining what regulations you must adhere to. For reference, here is our Privacy Policy and Terms of Use.

当您将原型投入生产时,您需要评估和解决可能适用于您的应用程序的任何安全性和合规性要求。这将涉及检查您正在处理的数据,了解我们的API如何处理数据,并确定您必须遵守的法规。以下是我们的隐私政策和使用条款,供您参考。

Some common areas you’ll need to consider include data storage, data transmission, and data retention. You might also need to implement data privacy protections, such as encryption or anonymization where possible. In addition, you should follow best practices for secure coding, such as input sanitization and proper error handling.

您需要考虑的一些常见领域包括数据存储、数据传输和数据保留。您可能还需要实施数据隐私保护,例如在可能的情况下进行加密或匿名化。此外,您应该遵循安全编码的最佳实践,例如输入清理和合适的错误处理。

Safety best practices 安全最佳实践

When creating your application with our API, consider our safety best practices to ensure your application is safe and successful. These recommendations highlight the importance of testing the product extensively, being proactive about addressing potential issues, and limiting opportunities for misuse.

使用我们的API创建应用程序时,请考虑我们的安全最佳实践,以确保您的应用程序安全且成功。这些建议强调了广泛测试产品的重要性,积极主动地解决潜在问题,并限制误用的机会。

其它资料下载

如果大家想继续了解人工智能相关学习路线和知识体系,欢迎大家翻阅我的另外一篇博客《重磅 | 完备的人工智能AI 学习——基础知识学习路线,所有资料免关注免套路直接网盘下载》

这篇博客参考了Github知名开源平台,AI技术平台以及相关领域专家:Datawhale,ApacheCN,AI有道和黄海广博士等约有近100G相关资料,希望能帮助到所有小伙伴们。