注意:我这里的离线安装包是V1.20.5的,单安装一个master节点并部署服务,保证可以使用。如果安装集群也是可以的,但是需要把离线包上传到所有的node节点,导入,最后把node节点接入到K8S集群即可,本人都亲测过。

K8SV1.20.5离线安装包下载:

链接:https://pan.baidu.com/s/1gjp8gta8SLAbdLrc8e-zJA?pwd=MAQQ

提取码:MAQQ

–来自百度网盘的分享

1.系统性能优化

hostnamectl set-hostname k8s-master && bash

cat >> /etc/hosts << EOF

192.168.243.215 k8s-master

EOF

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config # 永久

setenforce 0 # 临时

swapoff -a # 临时

sed -i 's/.*swap.*/#&/' /etc/fstab # 永久

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system # 生效

2.离线安装docker

docker离线安装请参考博客

3.安装kubeadm,kubelet 和kubectl

[root@k8s-master qq]# ls

0f2a2afd740d476ad77c508847bad1f559afc2425816c1f2ce4432a62dfe0b9d-kubernetes-cni-1.2.0-0.x86_64.rpm libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

356e511f8963b4b68fdf41593e64e92f03f0b58c72aae0613aeff3e770078cf7-kubelet-1.20.5-0.x86_64.rpm libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

3f5ba2b53701ac9102ea7c7ab2ca6616a8cd5966591a77577585fde1c434ef74-cri-tools-1.26.0-0.x86_64.rpm libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

8593f28d972a6818131c1a6cd34f52b22a6acd0c4c7dcf3d7447ad53a9f24cc3-kubectl-1.20.5-0.x86_64.rpm socat-1.7.3.2-2.el7.x86_64.rpm

c2634321e0d8ebe24ba7c6f025df171f5d1707c75a90e3bdd08199ab47aac565-kubeadm-1.20.5-0.x86_64.rpm 安装说明.txt

conntrack-tools-1.4.4-7.el7.x86_64.rpm

[root@k8s-master qq]# rpm -ivh *.rpm #直接安装

4.部署Kubernetes Master

kubeadm init --apiserver-advertise-address=192.168.243.215 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.20.5 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=all

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master mqq]# kubectl get nodes #安装后看到状态是NotReady

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 33m v1.20.5

5.安装Pod 网络插件(CNI)

cat > calico.yaml << EOF

---

# Source: calico/templates/calico-config.yaml

# This ConfigMap is used to configure a self-hosted Calico installation.

kind: ConfigMap

apiVersion: v1

metadata:

name: calico-config

namespace: kube-system

data:

# Typha is disabled.

typha_service_name: "none"

# Configure the backend to use.

calico_backend: "bird"

# Configure the MTU to use

veth_mtu: "1440"

# The CNI network configuration to install on each node. The special

# values in this config will be automatically populated.

cni_network_config: |-

{

"name": "k8s-pod-network",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "calico",

"log_level": "info",

"datastore_type": "kubernetes",

"nodename": "__KUBERNETES_NODE_NAME__",

"mtu": __CNI_MTU__,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "__KUBECONFIG_FILEPATH__"

}

},

{

"type": "portmap",

"snat": true,

"capabilities": {"portMappings": true}

}

]

}

---

# Source: calico/templates/kdd-crds.yaml

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: felixconfigurations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: FelixConfiguration

plural: felixconfigurations

singular: felixconfiguration

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ipamblocks.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPAMBlock

plural: ipamblocks

singular: ipamblock

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: blockaffinities.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BlockAffinity

plural: blockaffinities

singular: blockaffinity

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ipamhandles.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPAMHandle

plural: ipamhandles

singular: ipamhandle

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ipamconfigs.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPAMConfig

plural: ipamconfigs

singular: ipamconfig

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: bgppeers.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BGPPeer

plural: bgppeers

singular: bgppeer

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: bgpconfigurations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BGPConfiguration

plural: bgpconfigurations

singular: bgpconfiguration

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ippools.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPPool

plural: ippools

singular: ippool

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: hostendpoints.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: HostEndpoint

plural: hostendpoints

singular: hostendpoint

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: clusterinformations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: ClusterInformation

plural: clusterinformations

singular: clusterinformation

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: globalnetworkpolicies.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: GlobalNetworkPolicy

plural: globalnetworkpolicies

singular: globalnetworkpolicy

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: globalnetworksets.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: GlobalNetworkSet

plural: globalnetworksets

singular: globalnetworkset

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: networkpolicies.crd.projectcalico.org

spec:

scope: Namespaced

group: crd.projectcalico.org

version: v1

names:

kind: NetworkPolicy

plural: networkpolicies

singular: networkpolicy

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: networksets.crd.projectcalico.org

spec:

scope: Namespaced

group: crd.projectcalico.org

version: v1

names:

kind: NetworkSet

plural: networksets

singular: networkset

---

# Source: calico/templates/rbac.yaml

# Include a clusterrole for the kube-controllers component,

# and bind it to the calico-kube-controllers serviceaccount.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-kube-controllers

rules:

# Nodes are watched to monitor for deletions.

- apiGroups: [""]

resources:

- nodes

verbs:

- watch

- list

- get

# Pods are queried to check for existence.

- apiGroups: [""]

resources:

- pods

verbs:

- get

# IPAM resources are manipulated when nodes are deleted.

- apiGroups: ["crd.projectcalico.org"]

resources:

- ippools

verbs:

- list

- apiGroups: ["crd.projectcalico.org"]

resources:

- blockaffinities

- ipamblocks

- ipamhandles

verbs:

- get

- list

- create

- update

- delete

# Needs access to update clusterinformations.

- apiGroups: ["crd.projectcalico.org"]

resources:

- clusterinformations

verbs:

- get

- create

- update

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-kube-controllers

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-kube-controllers

subjects:

- kind: ServiceAccount

name: calico-kube-controllers

namespace: kube-system

---

# Include a clusterrole for the calico-node DaemonSet,

# and bind it to the calico-node serviceaccount.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-node

rules:

# The CNI plugin needs to get pods, nodes, and namespaces.

- apiGroups: [""]

resources:

- pods

- nodes

- namespaces

verbs:

- get

- apiGroups: [""]

resources:

- endpoints

- services

verbs:

# Used to discover service IPs for advertisement.

- watch

- list

# Used to discover Typhas.

- get

- apiGroups: [""]

resources:

- nodes/status

verbs:

# Needed for clearing NodeNetworkUnavailable flag.

- patch

# Calico stores some configuration information in node annotations.

- update

# Watch for changes to Kubernetes NetworkPolicies.

- apiGroups: ["networking.k8s.io"]

resources:

- networkpolicies

verbs:

- watch

- list

# Used by Calico for policy information.

- apiGroups: [""]

resources:

- pods

- namespaces

- serviceaccounts

verbs:

- list

- watch

# The CNI plugin patches pods/status.

- apiGroups: [""]

resources:

- pods/status

verbs:

- patch

# Calico monitors various CRDs for config.

- apiGroups: ["crd.projectcalico.org"]

resources:

- globalfelixconfigs

- felixconfigurations

- bgppeers

- globalbgpconfigs

- bgpconfigurations

- ippools

- ipamblocks

- globalnetworkpolicies

- globalnetworksets

- networkpolicies

- networksets

- clusterinformations

- hostendpoints

- blockaffinities

verbs:

- get

- list

- watch

# Calico must create and update some CRDs on startup.

- apiGroups: ["crd.projectcalico.org"]

resources:

- ippools

- felixconfigurations

- clusterinformations

verbs:

- create

- update

# Calico stores some configuration information on the node.

- apiGroups: [""]

resources:

- nodes

verbs:

- get

- list

- watch

# These permissions are only requried for upgrade from v2.6, and can

# be removed after upgrade or on fresh installations.

- apiGroups: ["crd.projectcalico.org"]

resources:

- bgpconfigurations

- bgppeers

verbs:

- create

- update

# These permissions are required for Calico CNI to perform IPAM allocations.

- apiGroups: ["crd.projectcalico.org"]

resources:

- blockaffinities

- ipamblocks

- ipamhandles

verbs:

- get

- list

- create

- update

- delete

- apiGroups: ["crd.projectcalico.org"]

resources:

- ipamconfigs

verbs:

- get

# Block affinities must also be watchable by confd for route aggregation.

- apiGroups: ["crd.projectcalico.org"]

resources:

- blockaffinities

verbs:

- watch

# The Calico IPAM migration needs to get daemonsets. These permissions can be

# removed if not upgrading from an installation using host-local IPAM.

- apiGroups: ["apps"]

resources:

- daemonsets

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: calico-node

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-node

subjects:

- kind: ServiceAccount

name: calico-node

namespace: kube-system

---

# Source: calico/templates/calico-node.yaml

# This manifest installs the calico-node container, as well

# as the CNI plugins and network config on

# each master and worker node in a Kubernetes cluster.

kind: DaemonSet

apiVersion: apps/v1

metadata:

name: calico-node

namespace: kube-system

labels:

k8s-app: calico-node

spec:

selector:

matchLabels:

k8s-app: calico-node

updateStrategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

template:

metadata:

labels:

k8s-app: calico-node

annotations:

# This, along with the CriticalAddonsOnly toleration below,

# marks the pod as a critical add-on, ensuring it gets

# priority scheduling and that its resources are reserved

# if it ever gets evicted.

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

nodeSelector:

beta.kubernetes.io/os: linux

hostNetwork: true

tolerations:

# Make sure calico-node gets scheduled on all nodes.

- effect: NoSchedule

operator: Exists

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

- effect: NoExecute

operator: Exists

serviceAccountName: calico-node

# Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force

# deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.

terminationGracePeriodSeconds: 0

priorityClassName: system-node-critical

initContainers:

# This container performs upgrade from host-local IPAM to calico-ipam.

# It can be deleted if this is a fresh installation, or if you have already

# upgraded to use calico-ipam.

- name: upgrade-ipam

image: calico/cni:v3.11.3

command: ["/opt/cni/bin/calico-ipam", "-upgrade"]

env:

- name: KUBERNETES_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

- name: CALICO_NETWORKING_BACKEND

valueFrom:

configMapKeyRef:

name: calico-config

key: calico_backend

volumeMounts:

- mountPath: /var/lib/cni/networks

name: host-local-net-dir

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

securityContext:

privileged: true

# This container installs the CNI binaries

# and CNI network config file on each node.

- name: install-cni

image: calico/cni:v3.11.3

command: ["/install-cni.sh"]

env:

# Name of the CNI config file to create.

- name: CNI_CONF_NAME

value: "10-calico.conflist"

# The CNI network config to install on each node.

- name: CNI_NETWORK_CONFIG

valueFrom:

configMapKeyRef:

name: calico-config

key: cni_network_config

# Set the hostname based on the k8s node name.

- name: KUBERNETES_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# CNI MTU Config variable

- name: CNI_MTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# Prevents the container from sleeping forever.

- name: SLEEP

value: "false"

volumeMounts:

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

- mountPath: /host/etc/cni/net.d

name: cni-net-dir

securityContext:

privileged: true

# Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes

# to communicate with Felix over the Policy Sync API.

- name: flexvol-driver

image: calico/pod2daemon-flexvol:v3.11.3

volumeMounts:

- name: flexvol-driver-host

mountPath: /host/driver

securityContext:

privileged: true

containers:

# Runs calico-node container on each Kubernetes node. This

# container programs network policy and routes on each

# host.

- name: calico-node

image: calico/node:v3.11.3

env:

# Use Kubernetes API as the backing datastore.

- name: DATASTORE_TYPE

value: "kubernetes"

# Wait for the datastore.

- name: WAIT_FOR_DATASTORE

value: "true"

# Set based on the k8s node name.

- name: NODENAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# Choose the backend to use.

- name: CALICO_NETWORKING_BACKEND

valueFrom:

configMapKeyRef:

name: calico-config

key: calico_backend

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

# Auto-detect the BGP IP address.

- name: IP

value: "autodetect"

# Enable IPIP

- name: CALICO_IPV4POOL_IPIP

value: "Always"

# Set MTU for tunnel device used if ipip is enabled

- name: FELIX_IPINIPMTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING

value: "true"

# Set Felix endpoint to host default action to ACCEPT.

- name: FELIX_DEFAULTENDPOINTTOHOSTACTION

value: "ACCEPT"

# Disable IPv6 on Kubernetes.

- name: FELIX_IPV6SUPPORT

value: "false"

# Set Felix logging to "info"

- name: FELIX_LOGSEVERITYSCREEN

value: "info"

- name: FELIX_HEALTHENABLED

value: "true"

securityContext:

privileged: true

resources:

requests:

cpu: 250m

livenessProbe:

exec:

command:

- /bin/calico-node

- -felix-live

- -bird-live

periodSeconds: 10

initialDelaySeconds: 10

failureThreshold: 6

readinessProbe:

exec:

command:

- /bin/calico-node

- -felix-ready

- -bird-ready

periodSeconds: 10

volumeMounts:

- mountPath: /lib/modules

name: lib-modules

readOnly: true

- mountPath: /run/xtables.lock

name: xtables-lock

readOnly: false

- mountPath: /var/run/calico

name: var-run-calico

readOnly: false

- mountPath: /var/lib/calico

name: var-lib-calico

readOnly: false

- name: policysync

mountPath: /var/run/nodeagent

volumes:

# Used by calico-node.

- name: lib-modules

hostPath:

path: /lib/modules

- name: var-run-calico

hostPath:

path: /var/run/calico

- name: var-lib-calico

hostPath:

path: /var/lib/calico

- name: xtables-lock

hostPath:

path: /run/xtables.lock

type: FileOrCreate

# Used to install CNI.

- name: cni-bin-dir

hostPath:

path: /opt/cni/bin

- name: cni-net-dir

hostPath:

path: /etc/cni/net.d

# Mount in the directory for host-local IPAM allocations. This is

# used when upgrading from host-local to calico-ipam, and can be removed

# if not using the upgrade-ipam init container.

- name: host-local-net-dir

hostPath:

path: /var/lib/cni/networks

# Used to create per-pod Unix Domain Sockets

- name: policysync

hostPath:

type: DirectoryOrCreate

path: /var/run/nodeagent

# Used to install Flex Volume Driver

- name: flexvol-driver-host

hostPath:

type: DirectoryOrCreate

path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: calico-node

namespace: kube-system

---

# Source: calico/templates/calico-kube-controllers.yaml

# See https://github.com/projectcalico/kube-controllers

apiVersion: apps/v1

kind: Deployment

metadata:

name: calico-kube-controllers

namespace: kube-system

labels:

k8s-app: calico-kube-controllers

spec:

# The controllers can only have a single active instance.

replicas: 1

selector:

matchLabels:

k8s-app: calico-kube-controllers

strategy:

type: Recreate

template:

metadata:

name: calico-kube-controllers

namespace: kube-system

labels:

k8s-app: calico-kube-controllers

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

nodeSelector:

beta.kubernetes.io/os: linux

tolerations:

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

- key: node-role.kubernetes.io/master

effect: NoSchedule

serviceAccountName: calico-kube-controllers

priorityClassName: system-cluster-critical

containers:

- name: calico-kube-controllers

image: calico/kube-controllers:v3.11.3

env:

# Choose which controllers to run.

- name: ENABLED_CONTROLLERS

value: node

- name: DATASTORE_TYPE

value: kubernetes

readinessProbe:

exec:

command:

- /usr/bin/check-status

- -r

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: calico-kube-controllers

namespace: kube-system

---

# Source: calico/templates/calico-etcd-secrets.yaml

---

# Source: calico/templates/calico-typha.yaml

---

# Source: calico/templates/configure-canal.yaml

EOF

kubectl apply -f calico.yaml

[root@k8s-master manifests]# kubectl get nodes #现在看到状态是Ready就OK

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 40m v1.20.5

[root@k8s-master manifests]#

[root@k8s-master manifests]# kubectl get pods -n kube-system #全部状态是Running就OK

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6b8f6f78dc-qrw2g 1/1 Running 0 2m39s

calico-node-s5ddr 1/1 Running 0 2m39s

coredns-7f89b7bc75-b49sr 1/1 Running 0 40m

coredns-7f89b7bc75-gtft5 1/1 Running 0 40m

etcd-k8s-master 1/1 Running 0 40m

kube-apiserver-k8s-master 1/1 Running 0 40m

kube-controller-manager-k8s-master 1/1 Running 0 40m

kube-proxy-grkw8 1/1 Running 0 40m

kube-scheduler-k8s-master 1/1 Running 0 40m

[root@k8s-master manifests]#

6.单集版的k8s安装后, 无法部署服务

因为默认master不能部署pod,有污点, 需要去掉污点或者新增一个node,我这里是去除污点.

kubectl get node -o yaml | grep taint -A 5

kubectl taint nodes --all node-role.kubernetes.io/master- #执行这句就行,就是取消污点

kubectl get node -o yaml | grep taint -A 5 #什么查不到就OK拉

7.部署metrics服务

cat > metrics-server.yaml << EOF

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: system:aggregated-metrics-reader

labels:

rbac.authorization.k8s.io/aggregate-to-view: "true"

rbac.authorization.k8s.io/aggregate-to-edit: "true"

rbac.authorization.k8s.io/aggregate-to-admin: "true"

rules:

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: metrics-server:system:auth-delegator

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:auth-delegator

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: metrics-server-auth-reader

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: extension-apiserver-authentication-reader

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: apiregistration.k8s.io/v1

kind: APIService

metadata:

name: v1beta1.metrics.k8s.io

spec:

service:

name: metrics-server

namespace: kube-system

group: metrics.k8s.io

version: v1beta1

insecureSkipTLSVerify: true

groupPriorityMinimum: 100

versionPriority: 100

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: metrics-server

namespace: kube-system

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: metrics-server

namespace: kube-system

labels:

k8s-app: metrics-server

spec:

selector:

matchLabels:

k8s-app: metrics-server

template:

metadata:

name: metrics-server

labels:

k8s-app: metrics-server

spec:

serviceAccountName: metrics-server

volumes:

# mount in tmp so we can safely use from-scratch images and/or read-only containers

- name: tmp-dir

emptyDir: {}

containers:

- name: metrics-server

image: lizhenliang/metrics-server:v0.3.7

imagePullPolicy: IfNotPresent

args:

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-insecure-tls

- --kubelet-preferred-address-types=InternalIP

ports:

- name: main-port

containerPort: 4443

protocol: TCP

securityContext:

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

volumeMounts:

- name: tmp-dir

mountPath: /tmp

nodeSelector:

kubernetes.io/os: linux

kubernetes.io/arch: "amd64"

---

apiVersion: v1

kind: Service

metadata:

name: metrics-server

namespace: kube-system

labels:

kubernetes.io/name: "Metrics-server"

kubernetes.io/cluster-service: "true"

spec:

selector:

k8s-app: metrics-server

ports:

- port: 443

protocol: TCP

targetPort: main-port

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: system:metrics-server

rules:

- apiGroups:

- ""

resources:

- pods

- nodes

- nodes/stats

- namespaces

- configmaps

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:metrics-server

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:metrics-server

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

EOF

kubectl apply -f metrics-server.yaml

kubectl top nodes

[root@k8s-master manifests]# kubectl top nodes #看到CUP的%出来就OK

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 531m 17% 2035Mi 55%

[root@k8s-master manifests]#

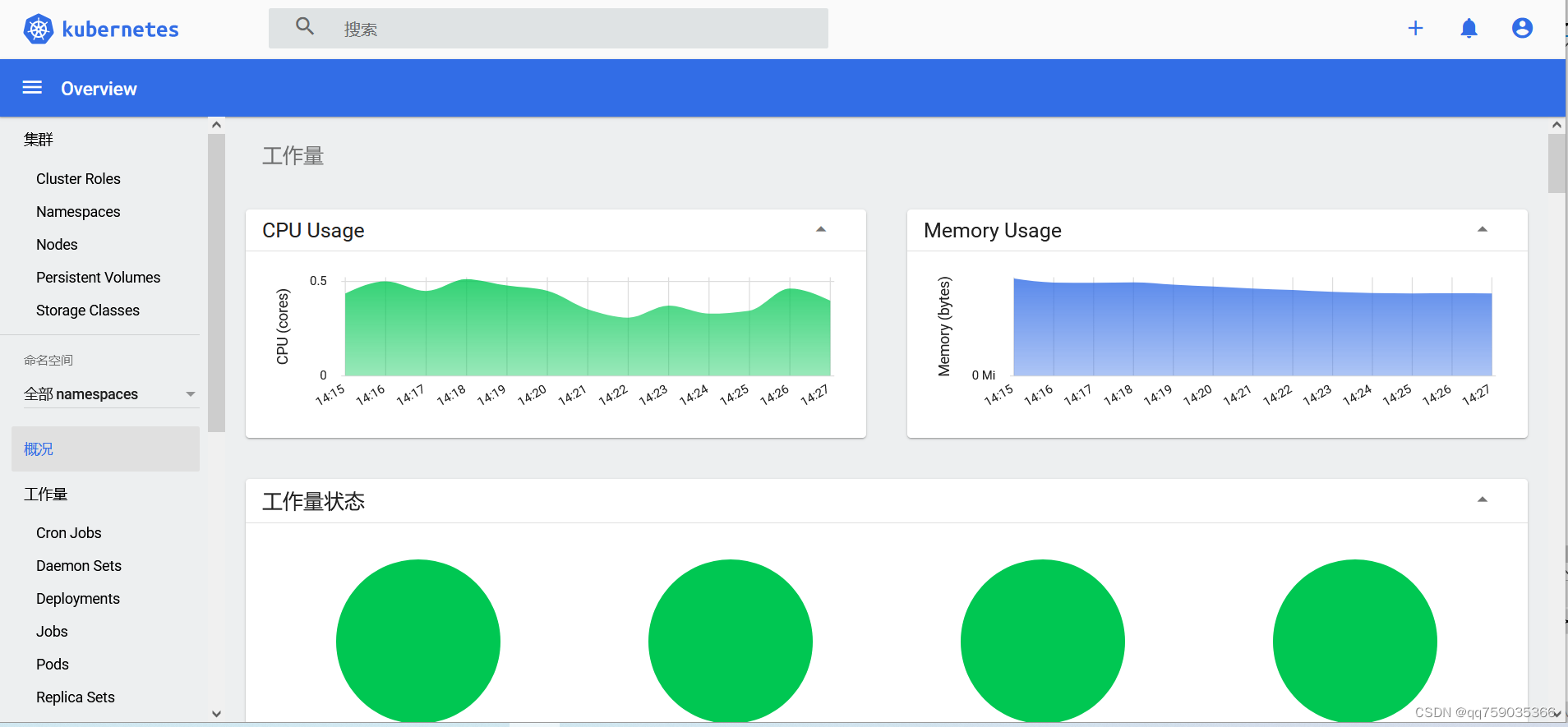

8.部署Dashboard

cat > recommended.yaml << EOF

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort #新添加的,下面有一个删除了,注意缩进了2个空格

ports:

- port: 443

targetPort: 8443

nodePort: 30001 #新添加的

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.0.0

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.4

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

EOF

kubectl apply -f recommended.yaml

[root@k8s-master manifests]# kubectl get pods -n kubernetes-dashboard #状态全部是 Running就OK

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b59f7d4df-5n42w 1/1 Running 0 50s

kubernetes-dashboard-74d688b6bc-rdw9r 1/1 Running 0 50s

[root@k8s-master manifests]#

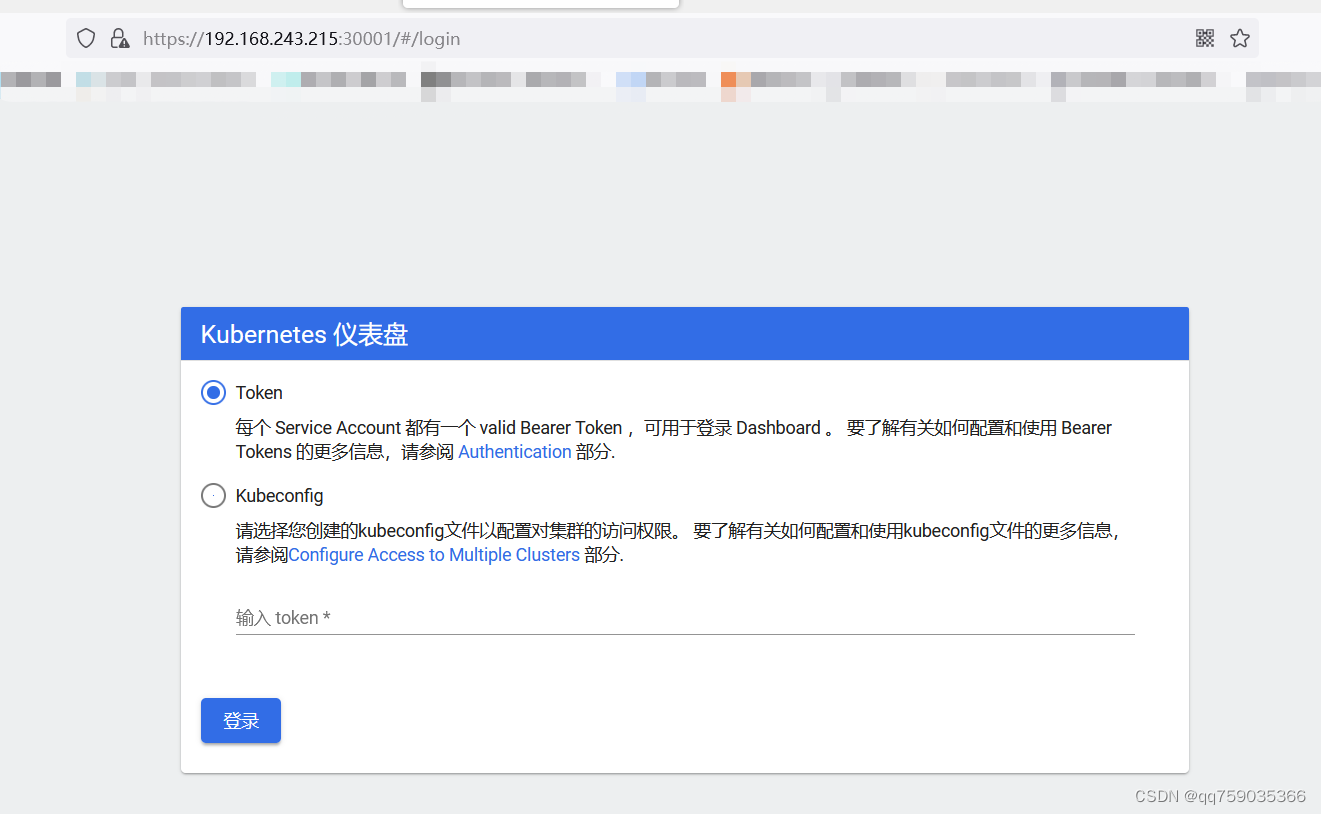

访问地址:https://192.168.0.134:30001/ #必须要用https://

创建service account并绑定默认cluster-admin管理员群集角色

使用输出的token登录Dashboard

kubectl create serviceaccount dashboard-admin -n kube-system #创建用户

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin #用户授权

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') #获取用户Token,用于页面登录

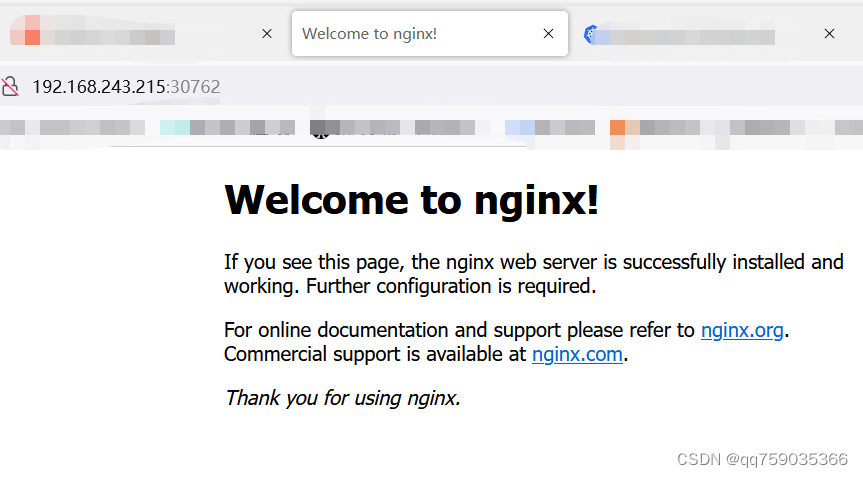

9.测试kubernetes是否正常

cat > nginx.yaml << EOF

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx

name: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- image: nginx

name: nginx

imagePullPolicy: IfNotPresent

---

apiVersion: v1

kind: Service

metadata:

labels:

app: nginx

name: nginx

spec:

type: NodePort

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: nginx

EOF

kubectl apply -f nginx.yaml

kubectl get pods,svc

[root@k8s-master mqq]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-7cf7d6dbc8-8lrzb 1/1 Running 0 46s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 76m

service/nginx NodePort 10.98.34.181 <none> 80:30762/TCP 46s

[root@k8s-master mqq]# ip:30762 去页面访问,能访问就OK