title: Sqoop系列

第一章 Sqoop理论

1.1 概述

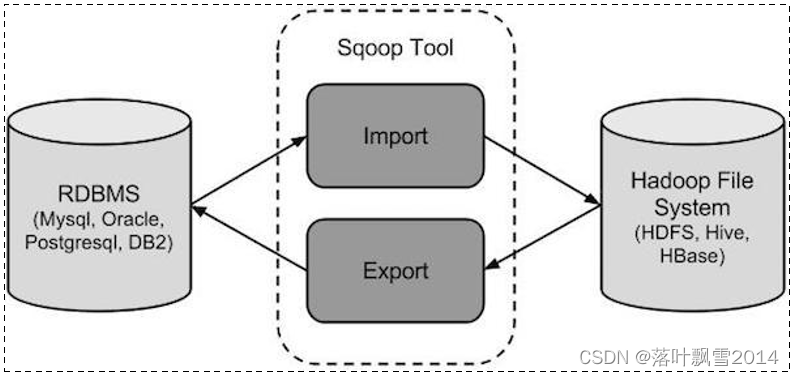

sqoop 是 apache 旗下一款“Hadoop 和关系数据库服务器之间传送数据”的工具。

导入(Import)数据:MySQL,Oracle 导入数据到 Hadoop 的 HDFS、HIVE、HBASE 等数据存储系统

导出(Export)数据:从 Hadoop 的文件系统中导出数据到关系数据库 MySQL等

Sqoop 的本质还是一个命令行工具,和 HDFS,Hive 相比,并没有什么高深的理论。

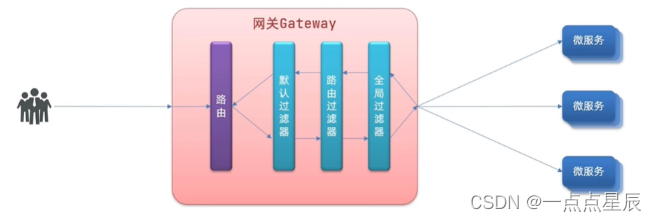

1.2 工作机制

将导入或导出命令翻译成 MapReduce 程序来实现

在翻译出的 MapReduce 中主要是对 InputFormat 和 OutputFormat 进行定制

1.3 Sqoop安装

1.3.1 Sqoop1.4.6 安装

安装 Sqoop 的前提是已经具备 Java 和 Hadoop 的环境

安装包:sqoop-1.4.6.bin_hadoop-2.0.4-alpha.tar.gz

安装过程:

1、上传解压重命名

[root@hadoop0 software]# tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

[root@hadoop0 software]# mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha sqoop

2、修改配置文件

[root@hadoop0 conf]# mv sqoop-env-template.sh sqoop-env.sh

添加如下的信息:

export HADOOP_COMMON_HOME=/software/hadoop

export HADOOP_MAPRED_HOME=/software/hadoop

export HBASE_HOME=/software/hbase

export HIVE_HOME=/software/hive

export ZOOCFGDIR=/software/zk/conf

3、 加入 MySQL 驱动包到 Sqoop的lib目录下

mysql-connector-java-5.1.40-bin.jar放到Sqoop的lib目录下

4、配置环境变量

export SQOOP_HOME=/software/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

最后配置完了之后注意source一下。

5、验证安装是否成功

[root@hadoop0 sqoop]# sqoop-version

Warning: /software/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /software/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

20/08/23 00:34:13 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

Sqoop 1.4.6

git commit id c0c5a81723759fa575844a0a1eae8f510fa32c25

Compiled by root on Mon Apr 27 14:38:36 CST 2015

1.3.2 Sqoop1.4.7 安装

官网链接:http://sqoop.apache.org/

更新比较慢了。

安装 Sqoop 的前提是已经具备 Java 和 Hadoop 的环境

安装包:sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

安装过程:

1、上传解压重命名

[root@hadoop10 software]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@hadoop10 software]# mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop

2、修改配置文件

[root@hadoop10 conf]# mv sqoop-env-template.sh sqoop-env.sh

添加如下的信息:

export HADOOP_COMMON_HOME=/software/hadoop

export HADOOP_MAPRED_HOME=/software/hadoop

export HBASE_HOME=/software/hbase

export HIVE_HOME=/software/hive

export ZOOCFGDIR=/software/zk/conf

3、 加入 MySQL 驱动包到 Sqoop的lib目录下

mysql-connector-java-5.1.40-bin.jar放到Sqoop的lib目录下

4、配置环境变量

export SQOOP_HOME=/software/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

最后配置完了之后注意source一下。

5、验证安装是否成功

[root@hadoop10 software]# sqoop-version

Warning: /software/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /software/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

2021-10-19 11:37:31,693 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

1.4 Sqoop基本命令介绍

首先,我们可以使用 sqoop help 来查看,sqoop 支持哪些命令

[root@hadoop0 sqoop]# sqoop help

Warning: /software/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /software/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

20/08/23 00:35:03 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

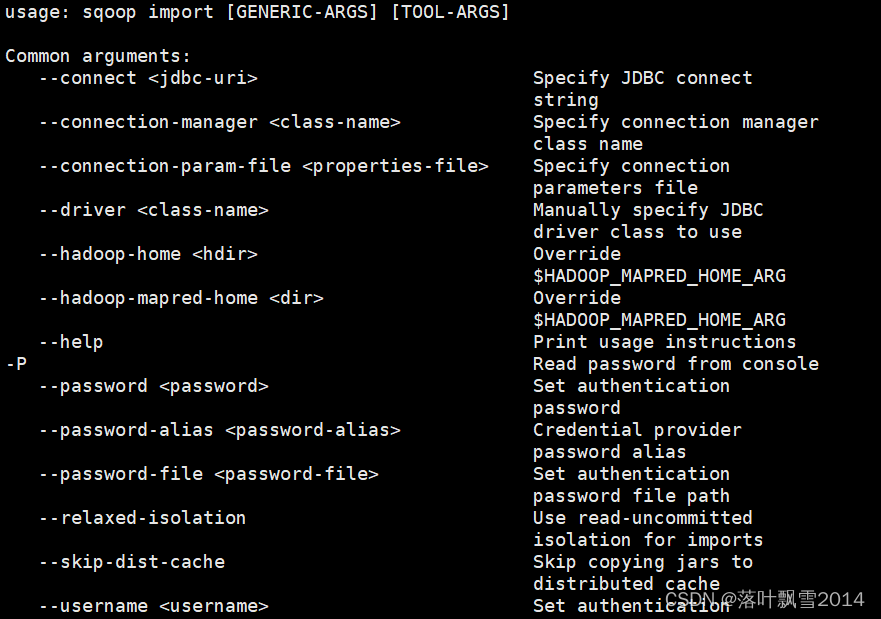

然后得到这些支持的命令之后,如果不知道使用方式,可以使用 sqoop help 命令名称 的方式来查看某条具体命令的使用方式,比如

sqoop help import

第二章 Sqoop实践

2.1 Sqoop数据导入导出

Sqoop : 数据迁入迁出的工具

RDBMS -- import -> Hadoop

Hadoop -- export -> RDBMS

删除hive表

drop table student;

drop table if exists student;

删除HBase表

disable 'student'

drop 'student'

下面是使用啦:

列出MySQL数据有哪些数据库:

sqoop list-databases \

--connect jdbc:mysql://localhost:3306/ \

--username root \

--password 111111

sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password 111111

列出MySQL中的某个数据库有哪些数据表:

sqoop list-tables \

--connect jdbc:mysql://localhost:3306/mysql \

--username root \

--password 111111

创建一张跟mysql中的help_keyword表一样的hive表hk:

使用默认的default库

sqoop create-hive-table \

--connect jdbc:mysql://localhost:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--hive-table hk

另开一个hive窗口

hive> use default;

hive> show tables;

hive> desc hk;

hive> drop table hk;

hive> show tables;

使用myhive库

sqoop create-hive-table \

--connect jdbc:mysql://localhost:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--hive-database myhive \

--hive-table hk

第一个:导入

导入MySQL表中数据到HDFS中:

// 普通导入:导入mysql库中的help_keyword的数据到HDFS上的默认路径:/user/root/help_keyword

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

-m 1

-m 1就是一个MapTask

可能会报错,给上面的localhost改成ip地址或者主机名hadoop10即可。

查看结果:hadoop fs -cat /user/root/help_keyword/p*

先删除原来的结果文件: hadoop fs -rm -r /user/root/help_keyword

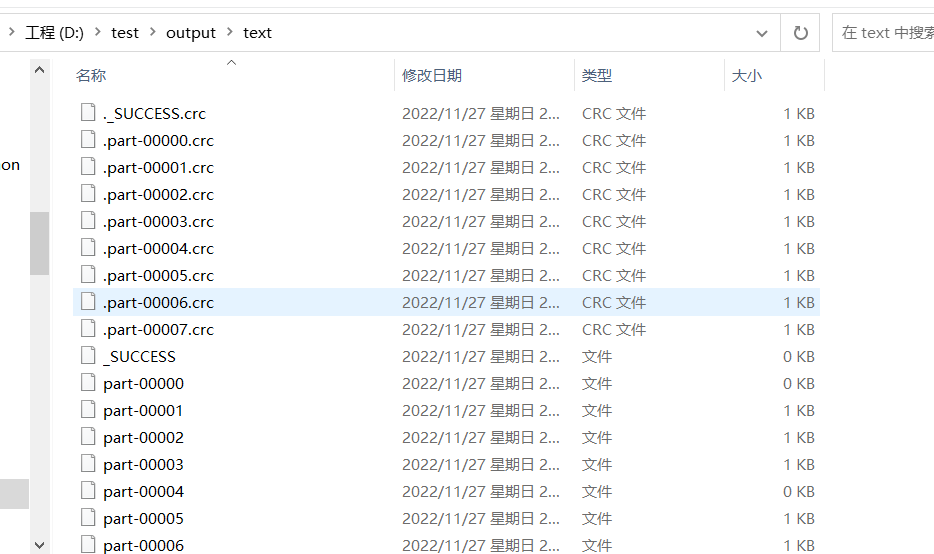

下面会生成4个文件,也就是说默认的m是4个。

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--table help_keyword

// 导入: 指定分隔符和导入路径

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--target-dir /user/root/my_help_keyword \

--fields-terminated-by '\t' \

-m 1

查看:

hadoop fs -cat /user/root/my_help_keyword/p*

// 导入数据:带where条件

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--where "name='STRING' " \

--table help_keyword \

--target-dir /sqoop/root/myoutport1 \

-m 1

查看:

hadoop fs -cat /sqoop/root/myoutport1/part-m-00000

// 导入:指定自定义查询SQL

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--target-dir /user/root/myimport \

--query 'select help_keyword_id,name from help_keyword WHERE $CONDITIONS and name = "STRING"' \

--split-by help_keyword_id \

--fields-terminated-by '\t' \

-m 1

查看:

hadoop fs -cat /user/root/myimport/*

下面是导入所有,其实没有添加条件,但是WHERE \$CONDITIONS 必须要有。

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--target-dir /user/root/myimport10 \

--query "select help_keyword_id,name from help_keyword WHERE \$CONDITIONS" \

--split-by help_keyword_id \

--fields-terminated-by '\t' \

-m 2

查看:

hadoop fs -cat /user/root/myimport10/*

下面会报错

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--target-dir /user/root/myimport222 \

--query "select help_keyword_id,name from help_keyword" \

--split-by help_keyword_id \

--fields-terminated-by '\t' \

-m 3

具体报错:

2021-10-19 15:41:56,392 ERROR tool.ImportTool: Import failed: java.io.IOException: Query [select help_keyword_id,name from help_keyword] must contain '$CONDITIONS' in WHERE clause.

在以上需要按照自定义SQL语句导出数据到HDFS的情况下:

1、引号问题,要么外层使用单引号,内层使用双引号,$CONDITIONS的$符号不用转义, 要么外层使用双引号,那么内层使用单引号,然后$CONDITIONS的$符号需要转义

2、自定义的SQL语句中必须带有WHERE \$CONDITIONS

导入MySQL数据库中的表数据到Hive中:

// 普通导入:数据存储在默认的default hive库中,表名就是对应的mysql的表名:

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--hive-import \

-m 1

先删除了前面测试的:

hadoop fs -rm -r /user/root/help_keyword

查看:

hadoop fs -cat /user/hive/warehouse/help_keyword/p* // 查看数据

hadoop fs -cat /user/root/help_keyword/p*

// 指定行分隔符和列分隔符,

指定hive-import,

指定覆盖导入,(好像没什么用)

指定自动创建hive表,

指定表名,

指定删除中间结果数据目录

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--fields-terminated-by "\t" \

--lines-terminated-by "\n" \

--hive-import \

--hive-overwrite \

--create-hive-table \

--delete-target-dir \

--hive-database myhive \

--hive-table new_help_keyword

上面的这个命令可能会因为版本不同的原因不成功。

查看:

hadoop fs -rmr /user/hive/warehouse/myhive.db/new_help_keyword

hadoop fs -cat /user/hive/warehouse/myhive.db/new_help_keyword/p*

// 增量导入 导入到hdfs

sqoop import \

--connect jdbc:mysql://hadoop10:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--target-dir /user/root/myimport \

--incremental append \

--check-column help_keyword_id \

--last-value 500 \

-m 1

查看:

hadoop fs -cat /user/root/myimport/p*

Incremental import arguments:

--check-column <column> Source column to check for incremental change

--incremental <import-type> Define an incremental import of type 'append' or 'lastmodified'

--last-value <value> Last imported value in the incremental check column

last-value 500 只导入 id > 500 的所有记录。。

概念:

1、增量导入

2、全量导入

导出:

大数据存储系统 --》RDBMS

注意:导出的RDBMS的表必须自己预先创建,不会自动创建

create database sqoopdb default character set utf8 COLLATE utf8_general_ci;

use sqoopdb;

CREATE TABLE sqoopstudent (

id INT NOT NULL PRIMARY KEY,

name VARCHAR(20),

sex VARCHAR(20),

age INT,

department VARCHAR(20)

);

// 导出HDFS数据到MySQL:

sqoop export \

--connect jdbc:mysql://hadoop10:3306/sqoopdb \

--username root \

--password 111111 \

--table sqoopstudent \

--export-dir /user/hive/warehouse/myhive.db/student \

--fields-terminated-by ','

// 导出hive数据到MySQL:

sqoop export \

--connect jdbc:mysql://localhost:3306/sqoopdb \

--username root \

--password 111111 \

--table sqoopstudent \

--export-dir /user/hive/warehouse/myhive.db/student \

--fields-terminated-by ','

一些其他操作:

列出mysql数据库中的所有数据库

sqoop list-databases \

--connect jdbc:mysql://localhost:3306/ \

-username root \

-password 111111

连接mysql并列出数据库中的表

sqoop list-tables \

--connect jdbc:mysql://localhost:3306/mysql \

-username root \

-password 111111

2.2 导入导出中可能遇到的问题总结

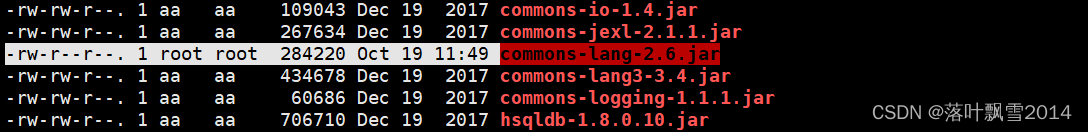

2.2.1 找不到StringUtils

执行下面的命令的时候:

[root@hadoop10 software]# sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password 111111

Warning: /software/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /software/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

2021-10-19 11:44:21,417 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

2021-10-19 11:44:21,468 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

2021-10-19 11:44:21,532 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/commons/lang/StringUtils

at org.apache.sqoop.manager.MySQLManager.initOptionDefaults(MySQLManager.java:73)

at org.apache.sqoop.manager.SqlManager.<init>(SqlManager.java:89)

at com.cloudera.sqoop.manager.SqlManager.<init>(SqlManager.java:33)

at org.apache.sqoop.manager.GenericJdbcManager.<init>(GenericJdbcManager.java:51)

at com.cloudera.sqoop.manager.GenericJdbcManager.<init>(GenericJdbcManager.java:30)

at org.apache.sqoop.manager.CatalogQueryManager.<init>(CatalogQueryManager.java:46)

at com.cloudera.sqoop.manager.CatalogQueryManager.<init>(CatalogQueryManager.java:31)

at org.apache.sqoop.manager.InformationSchemaManager.<init>(InformationSchemaManager.java:38)

at com.cloudera.sqoop.manager.InformationSchemaManager.<init>(InformationSchemaManager.java:31)

at org.apache.sqoop.manager.MySQLManager.<init>(MySQLManager.java:65)

at org.apache.sqoop.manager.DefaultManagerFactory.accept(DefaultManagerFactory.java:67)

at org.apache.sqoop.ConnFactory.getManager(ConnFactory.java:184)

at org.apache.sqoop.tool.BaseSqoopTool.init(BaseSqoopTool.java:272)

at org.apache.sqoop.tool.ListDatabasesTool.run(ListDatabasesTool.java:44)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.lang.ClassNotFoundException: org.apache.commons.lang.StringUtils

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 20 more

解决方案:将旧版的commons-lang-2.6.jar包下载并导入到sqoop目录下的lib目录下即可。

2.2.2 HIVE_CONF_DIR 问题

2021-10-19 14:32:34,277 ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_DIR is set correctly.

2021-10-19 14:32:34,278 ERROR tool.CreateHiveTableTool: Encountered IOException running create table job: java.io.IOException: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:50)

at org.apache.sqoop.hive.HiveImport.getHiveArgs(HiveImport.java:392)

at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:379)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.CreateHiveTableTool.run(CreateHiveTableTool.java:57)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:264)

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:44)

... 11 more

解决方案:

网上有的地方说是要配置hive conf,进行如下配置

export HADOOP_CLASSPATH=/software/hadoop/lib

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:/software/hive/lib/*

export HIVE_CONF_DIR=$HIVE_HOME/conf

但是我们这边,经过测试,加上上面也没有效果。

再次确认是sqoop的lib中缺少hive的依赖包。进入到sqoop的目录下,你会发现果然没有hive的依赖包。

进入到hive目录中.将hive-exec-3.1.2.jar拷到sqoop的lib目录下。

将hive的依赖包拷过来以后,成功导入数据。

[root@hadoop10 lib]# cp hive-exec-3.1.2.jar /software/sqoop/lib/

[root@hadoop10 lib]# pwd

/software/hive/lib

[root@hadoop10 lib]#

2.2.3 在命令中使用localhost问题总结(Communications link failure。0ms)

[root@hadoop10 lib]# hadoop fs -rm -r /user/root/help_keyword

Deleted /user/root/help_keyword

[root@hadoop10 lib]# sqoop import \

> --connect jdbc:mysql://localhost:3306/mysql \

> --username root \

> --password 111111 \

> --table help_keyword \

> -m 1

Warning: /software/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /software/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

2021-10-19 15:11:03,501 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

2021-10-19 15:11:03,569 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

2021-10-19 15:11:03,643 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

2021-10-19 15:11:03,643 INFO tool.CodeGenTool: Beginning code generation

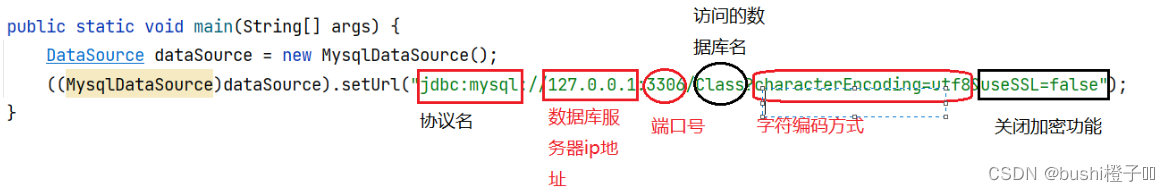

Tue Oct 19 15:11:03 CST 2021 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2021-10-19 15:11:04,093 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

2021-10-19 15:11:04,110 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

2021-10-19 15:11:04,117 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /software/hadoop

Note: /tmp/sqoop-root/compile/553aaec3a5c8ad15b4bbc9e369955b27/help_keyword.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

2021-10-19 15:11:05,134 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/553aaec3a5c8ad15b4bbc9e369955b27/help_keyword.jar

2021-10-19 15:11:05,143 WARN manager.MySQLManager: It looks like you are importing from mysql.

2021-10-19 15:11:05,143 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

2021-10-19 15:11:05,143 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

2021-10-19 15:11:05,143 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

2021-10-19 15:11:05,149 INFO mapreduce.ImportJobBase: Beginning import of help_keyword

2021-10-19 15:11:05,150 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2021-10-19 15:11:05,228 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

2021-10-19 15:11:05,586 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

2021-10-19 15:11:05,649 INFO client.RMProxy: Connecting to ResourceManager at hadoop10/192.168.22.136:8032

2021-10-19 15:11:05,920 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1634625143234_0004

Tue Oct 19 15:11:14 CST 2021 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2021-10-19 15:11:14,804 INFO db.DBInputFormat: Using read commited transaction isolation

2021-10-19 15:11:15,659 INFO mapreduce.JobSubmitter: number of splits:1

2021-10-19 15:11:16,162 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1634625143234_0004

2021-10-19 15:11:16,162 INFO mapreduce.JobSubmitter: Executing with tokens: []

2021-10-19 15:11:16,331 INFO conf.Configuration: resource-types.xml not found

2021-10-19 15:11:16,331 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2021-10-19 15:11:16,376 INFO impl.YarnClientImpl: Submitted application application_1634625143234_0004

2021-10-19 15:11:16,400 INFO mapreduce.Job: The url to track the job: http://hadoop10:8088/proxy/application_1634625143234_0004/

2021-10-19 15:11:16,400 INFO mapreduce.Job: Running job: job_1634625143234_0004

2021-10-19 15:11:25,485 INFO mapreduce.Job: Job job_1634625143234_0004 running in uber mode : false

2021-10-19 15:11:25,485 INFO mapreduce.Job: map 0% reduce 0%

2021-10-19 15:11:29,602 INFO mapreduce.Job: Task Id : attempt_1634625143234_0004_m_000000_0, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:170)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:161)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:77)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:157)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:125)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:763)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1762)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:223)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:168)

... 11 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:216)

... 12 more

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 28 more

2021-10-19 15:11:34,707 INFO mapreduce.Job: Task Id : attempt_1634625143234_0004_m_000000_1, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:170)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:161)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:77)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:157)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:125)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:763)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1762)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:223)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:168)

... 11 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:216)

... 12 more

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 28 more

2021-10-19 15:11:38,729 INFO mapreduce.Job: Task Id : attempt_1634625143234_0004_m_000000_2, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:170)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:161)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:77)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:157)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:125)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:763)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1762)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:223)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:168)

... 11 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:216)

... 12 more

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 28 more

2021-10-19 15:11:44,839 INFO mapreduce.Job: map 100% reduce 0%

2021-10-19 15:11:45,852 INFO mapreduce.Job: Job job_1634625143234_0004 failed with state FAILED due to: Task failed task_1634625143234_0004_m_000000

Job failed as tasks failed. failedMaps:1 failedReduces:0 killedMaps:0 killedReduces: 0

2021-10-19 15:11:45,898 INFO mapreduce.Job: Counters: 8

Job Counters

Failed map tasks=4

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=12619

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=12619

Total vcore-milliseconds taken by all map tasks=12619

Total megabyte-milliseconds taken by all map tasks=12921856

2021-10-19 15:11:45,905 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead

2021-10-19 15:11:45,906 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 40.3111 seconds (0 bytes/sec)

2021-10-19 15:11:45,911 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

2021-10-19 15:11:45,911 INFO mapreduce.ImportJobBase: Retrieved 0 records.

2021-10-19 15:11:45,911 ERROR tool.ImportTool: Import failed: Import job failed!

解决方案:

改成ip地址192.168.22.136或者主机名hadoop10即可访问

参考:https://www.aboutyun.com/thread-9302-1-1.html

2.3 和HBase相关的操作

导入 MySQL 数据到 HBase

先手动创建表

create 'new_help_keyword','info'

提示:sqoop1.4.6只支持HBase1.0.1之前的版本的自动创建HBase表的功能

sqoop import \

--connect jdbc:mysql://localhost:3306/mysql \

--username root \

--password 111111 \

--table help_keyword \

--columns "help_keyword_id,name" \

--column-family "info" \

--hbase-create-table \

--hbase-row-key "help_keyword_id" \

--hbase-table "new_help_keyword" \

字段解释:

--connect jdbc:mysql://localhost:3306/mysql 表示远程或者本地 Mysql 服务的 URI

--hbase-create-table 表示在 HBase 中建立表。

--hbase-table new_help_keyword 表示在 HBase 中建立表 new_help_keyword。

--hbase-row-key help_keyword_id 表示hbase表的rowkey是mysql表的help_keyword_id 字段。

--column-family "info" 表示在表 new_help_keyword 中建立列簇 info。

--username root 表示使用用户 root 连接 mysql。

--password 111111 连接 mysql 的用户密码

--table help_keyword 表示导出 mysql 数据库的 help_keyword 表。

导出 HBase 数据到 MySQL

很遗憾,现在还没有直接的命令将 HBase 的数据导出到 MySQL

一般采用如下方法:

1、将 HBase 数据,转成 HDFS 文件,然后再由 sqoop 导入

2、直接使用 HBase 的 Java API 读取表数据,直接向 mysql 导入,不需要使用 sqoop

2.4 和HBase相关的操作过程中遇到的问题

[root@hadoop10 bin]# sqoop import \

> --connect jdbc:mysql://localhost:3306/mysql \

> --username root \

> --password 111111 \

> --table help_keyword \

> --columns "help_keyword_id,name" \

> --column-family "info" \

> --hbase-create-table \

> --hbase-row-key "help_keyword_id" \

> --hbase-table "new_help_keyword" \

>

Warning: /software/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /software/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

2021-10-19 16:06:22,807 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

2021-10-19 16:06:22,868 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

2021-10-19 16:06:22,950 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

2021-10-19 16:06:22,950 INFO tool.CodeGenTool: Beginning code generation

Tue Oct 19 16:06:23 CST 2021 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2021-10-19 16:06:23,428 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

2021-10-19 16:06:23,440 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

2021-10-19 16:06:23,451 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /software/hadoop

Note: /tmp/sqoop-root/compile/410f0acf253deeca3bc21db9a3f628e3/help_keyword.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

2021-10-19 16:06:24,697 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/410f0acf253deeca3bc21db9a3f628e3/help_keyword.jar

2021-10-19 16:06:24,717 WARN manager.MySQLManager: It looks like you are importing from mysql.

2021-10-19 16:06:24,717 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

2021-10-19 16:06:24,717 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

2021-10-19 16:06:24,717 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

2021-10-19 16:06:24,819 INFO mapreduce.ImportJobBase: Beginning import of help_keyword

2021-10-19 16:06:24,820 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2021-10-19 16:06:24,968 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

2021-10-19 16:06:25,001 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hbase.client.HBaseAdmin.<init>(Lorg/apache/hadoop/conf/Configuration;)V

at org.apache.sqoop.mapreduce.HBaseImportJob.jobSetup(HBaseImportJob.java:163)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:268)

at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:692)

at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:127)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:520)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

解决方案:

Sqoop1.4.7更新很慢,和HBase2.3.6 兼容性有些问题。将HBase的版本更换成HBase1.3.1 就可以测试通估。

声明:

文章中代码及相关语句为自己根据相应理解编写,文章中出现的相关图片为自己实践中的截图和相关技术对应的图片,若有相关异议,请联系删除。感谢。转载请注明出处,感谢。

By luoyepiaoxue2014

微博地址: http://weibo.com/luoyepiaoxue2014 点击打开链接