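Table of contents

- Preface
- History and significance of semantic segmentation
- I. Preparing the dataset
- II. Building the semantic segmentation framework on MXNet
  - 1. Importing libraries
  - 2. CPU/GPU configuration
  - 3. Data normalization
  - 4. Parsing the dataset into lists
    - JSON format
    - Label-image annotation format
  - 5. Setting up the data iterators
  - 6. Building the models
    - FCN model structure
    - PSPNet model structure
    - DeepLabV3 model structure
    - DeepLabV3+ model structure
    - ICNet model structure
    - Fast-SCNN model structure
    - DANet model structure
  - 7. Model training
    - Learning rate settings
    - Optimizer settings
    - Training loop
  - 8. Model prediction
- III. Main entry point
- IV. Training screenshots
- V. Results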

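Preface

This article explains how to implement semantic segmentation with the MXNet deep learning framework. Because my earlier Chainer write-ups were cumbersome, the code here is organized like my Chainer object detection framework: each model only requires swapping the network structure. This time a single post covers both the framework and the network construction, so like-minded readers do not have to jump between articles.

Several models are implemented for semantic segmentation; choose whichever fits your situation.

Environment: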

python 3.8

mxnet 1.7.0

cuda 10.1

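History and significance of semantic segmentation

Image semantic segmentation divides an image into groups of pixels and then labels and classifies them. In particular, it tries to understand, semantically, the role of every pixel in the image.

Semantic segmentation is arguably a cornerstone of image understanding and plays a pivotal role in autonomous driving systems. An image is made up of individual pixels, and semantic segmentation groups (grouping) or segments (segmentation) those pixels according to the semantic meaning they express; in other words, every pixel is labeled with its class. Note that this does not distinguish between different instances of the same class; it only cares which class each pixel belongs to.

Segmentation is useful for many tasks, for example self-driving cars (which need to perceive their surroundings in order to adapt to existing roads) and medical image diagnosis (where machines can assist radiotherapists' analysis and speed up radiological examinations).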

I. Preparing the dataset

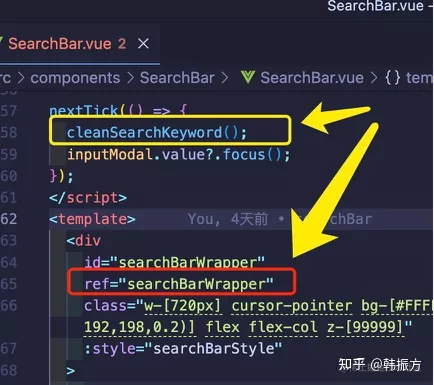

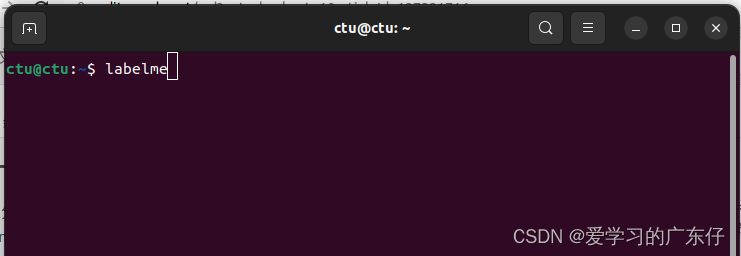

语义分割数据标注主要使用labelImg工具,python安装只需要:pip install labelme 即可,然后在命令提示符输入:labelme即可,如图:

在这里只需要修改“OpenDir“,“OpenDir“主要是存放图片需要标注的路径

选择好路径之后即可开始绘制:

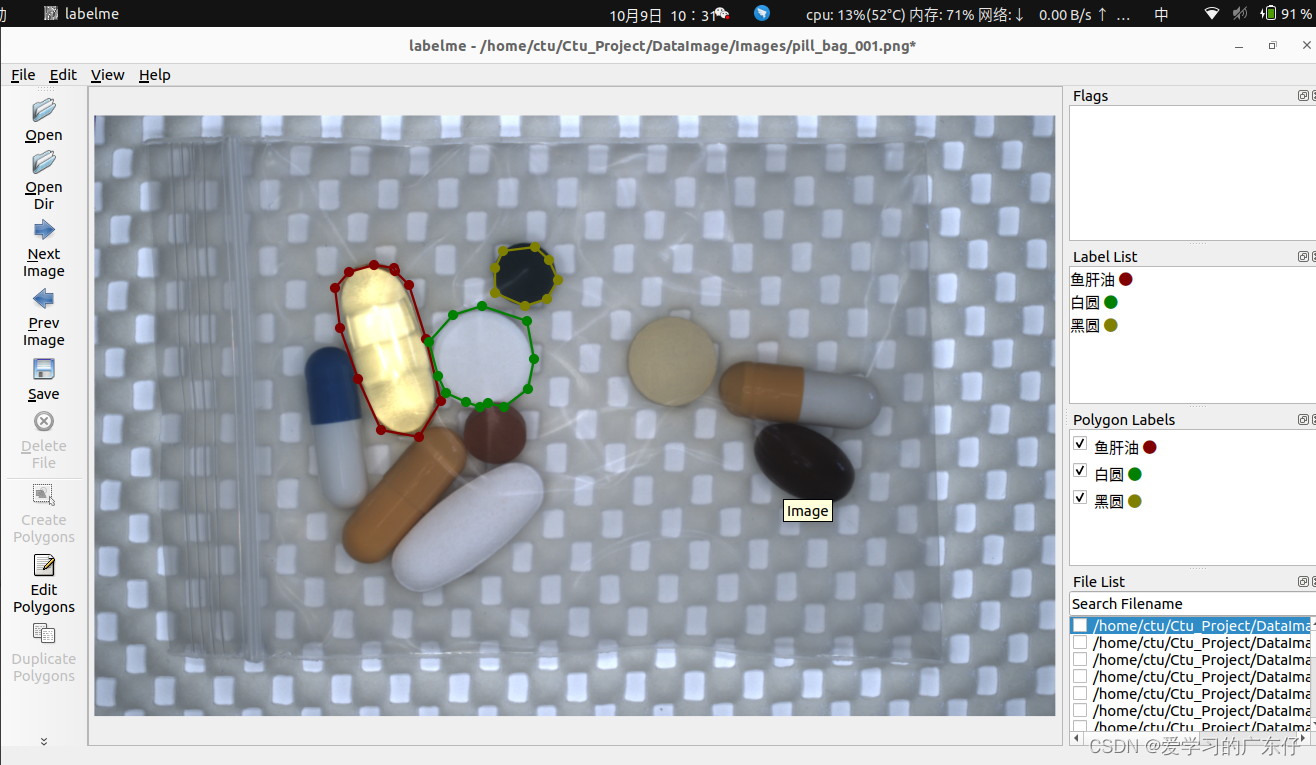

我在平时标注的时候快捷键一般只用到:

createpolygons:(ctrl+N)开始绘制

a:上一张

d:下一张

绘制过程如图:

就只需要一次把目标绘制完成即可。

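II. Building the semantic segmentation framework on MXNet

The directory structure of this semantic segmentation framework is as follows:

core: standard .py files, e.g. the evaluation computations for semantic segmentation

data: standard .py files, e.g. the data loader and iterators

nets: the network definitions, used to quickly generate pyd files

utils: preprocessing helpers for the whole project

Ctu_Segementation.py: main entry point for semantic segmentation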
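The evaluation computations mentioned for core usually boil down to pixel accuracy and mean IoU. The following is a minimal NumPy sketch of those two metrics, not the project's own SegmentationMetric class; the function name and the choice to skip classes that are absent from both prediction and ground truth are assumptions made for illustration.

```python
import numpy as np

def pixel_accuracy_and_miou(pred, target, num_classes):
    """Return (pixel accuracy, mean IoU) for two integer label maps.

    Hypothetical helper for illustration only.
    pred, target: arrays of the same shape holding class indices.
    """
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()

    # Pixel accuracy: fraction of pixels whose predicted class is correct
    pix_acc = float((pred == target).sum()) / target.size

    # IoU per class: |pred AND target| / |pred OR target|
    ious = []
    for c in range(num_classes):
        inter = np.logical_and(pred == c, target == c).sum()
        union = np.logical_or(pred == c, target == c).sum()
        if union > 0:  # assumption: ignore classes absent from both maps
            ious.append(inter / union)
    return pix_acc, float(np.mean(ious))

pred = [[0, 1], [1, 1]]
target = [[0, 1], [0, 1]]
acc, miou = pixel_accuracy_and_miou(pred, target, num_classes=2)
```

Mean IoU is generally the more informative number on imbalanced data, because a large background class can dominate pixel accuracy.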

1. Importing libraries

    # re is needed by CreateDataList below
    import os, sys, re, math, json, time, random, warnings
    import cv2
    import numpy as np
    from tqdm import tqdm
    from PIL import Image
    from functools import partial
    import mxnet as mx
    from mxnet import gluon, autograd, ndarray as nd
    from mxnet.gluon.data.vision import transforms
    from data.data_loader import VOCSegmentation
    from nets.fcn import get_fcn_Net
    from nets.pspnet import get_psp_Net
    from nets.deeplabv3 import get_deeplabv3_Net
    from nets.deeplabv3_plus import get_deeplabv3_plus_Net
    from nets.deeplabv3b_plus import get_deeplabv3b_plus_Net
    from nets.fastscnn import get_fastscnn_Net
    from nets.icnet import get_icnet_Net
    from nets.danet import get_danet_Net
    from nets.backbone.resnest import set_drop_prob
    from nets.segbase import SegEvalModel
    from core.softdog import CheckSoft
    from core.loss import ICNetLoss, SegmentationMultiLosses, MixSoftmaxCrossEntropyLoss
    from utils.parallel import DataParallelModel, DataParallelCriterion
    from utils.lr_scheduler import LRScheduler, LRSequential
    from utils.metrics import SegmentationMetric

2. CPU/GPU configuration

    # USEGPU is a string: '-1' selects the CPU, otherwise a comma-separated GPU list such as '0,1'
    if USEGPU == '-1':
        self.kvstore = 'local'
        self.ctx = [mx.cpu(0)]
        self.USEGPU = 0
        os.environ["CUDA_VISIBLE_DEVICES"] = "-1"
    else:
        self.kvstore = 'device'
        self.ctx = [mx.gpu(i) for i in range(len(USEGPU.split(',')))]
        self.USEGPU = len(USEGPU.split(','))
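One detail of the snippet above is that the GPU branch builds contexts from range(len(...)), so a string such as '2,3' yields devices 0 and 1 rather than 2 and 3, unless the visible devices are restricted elsewhere. As a hedged illustration, here is a small pure-Python parser (the name parse_devices is hypothetical and not part of the project) that keeps the ids the user actually listed; the resulting integers could then be passed to mx.gpu.

```python
def parse_devices(usegpu):
    """Parse a device string into a list of GPU ids.

    Hypothetical helper: '-1' means CPU only and returns an empty list,
    '0,2' returns [0, 2].
    """
    if usegpu.strip() == '-1':
        return []
    return [int(part) for part in usegpu.split(',') if part.strip()]

cpu_only = parse_devices('-1')
two_gpus = parse_devices('0,2')
```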

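3. Data normalization

    # ToTensor scales pixels to [0, 1] and reorders HWC to CHW;
    # Normalize then applies the ImageNet per-channel mean and std
    self.input_transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize([.485, .456, .406], [.229, .224, .225]),
    ])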
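To make the effect of this transform concrete, the sketch below reproduces the same arithmetic in plain NumPy: scale to [0, 1], move channels first, then subtract the mean and divide by the standard deviation per channel. It is an illustration of the math only, not a replacement for the Gluon transforms; the function name is an assumption.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def to_tensor_and_normalize(img_hwc_uint8):
    """Mimic ToTensor + Normalize for a single H x W x 3 uint8 RGB image."""
    # HWC uint8 in [0, 255] -> CHW float32 in [0, 1]
    chw = img_hwc_uint8.astype(np.float32).transpose(2, 0, 1) / 255.0
    # Per-channel standardization, broadcasting over H and W
    return (chw - MEAN[:, None, None]) / STD[:, None, None]

white = np.full((4, 5, 3), 255, dtype=np.uint8)
out = to_tensor_and_normalize(white)
```

The same mean and std must be used at training and prediction time, otherwise the network sees inputs from a different distribution.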

4. Parsing the dataset into lists

JSON format

    def CreateDataList(self, DataDir):
        # Collect every labelme .json file in the directory
        DataList = [os.path.join(DataDir, each) for each in os.listdir(DataDir) if re.match(r'.*\.json', each)]
        All_label_name = ['_background_']
        voc_colormap = [[0, 0, 0]]
        for each_json in DataList:
            with open(each_json, encoding='utf-8') as f:
                data = json.load(f)
            for shape in sorted(data['shapes'], key=lambda x: x['label']):
                label_name = shape['label']
                if label_name not in All_label_name:
                    All_label_name.append(label_name)
                    # Class k is encoded as the gray value [k, k, k]
                    voc_colormap.append([len(voc_colormap), len(voc_colormap), len(voc_colormap)])
        return DataList, All_label_name, voc_colormap
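For readers who have not opened a labelme file, the sketch below runs the same label-collection logic on two tiny in-memory JSON documents. Only the shapes, label, points and shape_type keys of the labelme format are used; the class names and coordinates are made up for illustration, and collect_labels is a hypothetical stand-in for the method above.

```python
import json

def collect_labels(json_texts):
    """Build the class list and gray-level colormap from labelme JSON strings."""
    names = ['_background_']
    colormap = [[0, 0, 0]]
    for text in json_texts:
        data = json.loads(text)
        for shape in sorted(data['shapes'], key=lambda s: s['label']):
            label = shape['label']
            if label not in names:
                names.append(label)
                idx = len(colormap)
                colormap.append([idx, idx, idx])  # class k -> [k, k, k]
    return names, colormap

# Invented sample annotations
doc_a = json.dumps({"shapes": [
    {"label": "pill", "points": [[1, 1], [5, 1], [5, 5]], "shape_type": "polygon"},
    {"label": "capsule", "points": [[6, 6], [9, 6], [9, 9]], "shape_type": "polygon"},
]})
doc_b = json.dumps({"shapes": [
    {"label": "pill", "points": [[2, 2], [4, 2], [4, 4]], "shape_type": "polygon"},
]})

names, colormap = collect_labels([doc_a, doc_b])
```

Because class indices are assigned in order of first appearance, the mapping depends on the order in which files are read; saving the class list next to the model keeps training and prediction consistent.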

Label-image annotation format

    def CreateDataList2(self, ImgDir, LabDir, ClassTxt, ext_file=['bmp', 'BMP', 'jpg', 'JPG', 'png', 'PNG', 'jpeg', 'JPEG']):
        ImgList = [each for each in os.listdir(ImgDir) if each.split('.')[-1] in ext_file]
        LabList = [each for each in os.listdir(LabDir) if each.split('.')[-1] in ext_file]
        DataList = []
        # Pair each image with the label image that has the same base name
        for each_img in ImgList:
            file_name = each_img.split('.')[0]
            for each_lab in LabList:
                if file_name == each_lab.split('.')[0]:
                    DataList.append([os.path.join(ImgDir, each_img), os.path.join(LabDir, each_lab)])
                    break
        All_label_name = ['_background_']
        voc_colormap = [[0, 0, 0]]
        # ClassTxt lists one class name per line (background excluded)
        with open(ClassTxt, 'r') as f_read:
            for each_class in f_read.readlines():
                c = each_class.strip()
                if c != '':
                    All_label_name.append(c)
                    voc_colormap.append([len(voc_colormap), len(voc_colormap), len(voc_colormap)])
        return DataList, All_label_name, voc_colormap
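The nested loop above compares every image with every label file. For large folders, one possible alternative is a dictionary keyed by the file stem, sketched below. This is an illustrative variant rather than the project's code: pair_by_stem is a hypothetical name, and it uses os.path.splitext, which treats "a.b.jpg" as stem "a.b" whereas the original split('.')[0] would give "a".

```python
import os

def pair_by_stem(img_names, lab_names):
    """Pair image and label file names that share the same stem."""
    lab_by_stem = {}
    for name in lab_names:
        # Keep the first label seen for a stem, like the break in the loop version
        lab_by_stem.setdefault(os.path.splitext(name)[0], name)

    pairs = []
    for img in img_names:
        stem = os.path.splitext(img)[0]
        if stem in lab_by_stem:
            pairs.append((img, lab_by_stem[stem]))
    return pairs

pairs = pair_by_stem(['a.jpg', 'b.png', 'c.jpg'], ['a.png', 'c.bmp', 'd.png'])
```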

5. Setting up the data iterators

    self.train_data = gluon.data.DataLoader(trainset, batch_size, shuffle=True, last_batch='rollover', num_workers=num_workers)
    self.eval_data = gluon.data.DataLoader(valset, batch_size, last_batch='rollover', num_workers=num_workers)

6. Building the models

Several networks are available, selected through the following dictionary:

    self.networks = {
        'fcn': get_fcn_Net,
        'pspnet': get_psp_Net,
        'deeplabv3': get_deeplabv3_Net,
        'deeplabv3_plus': get_deeplabv3_plus_Net,
        'deeplabv3b_plus': get_deeplabv3b_plus_Net,
        'icnet': get_icnet_Net,
        'fastscnn': get_fastscnn_Net,
        'danet': get_danet_Net
    }
    self.model = self.networks[self.network](self.backbone, self.classes_names, norm_layer=self.norm_layer, norm_kwargs=self.norm_kwargs, aux=self.aux, base_size=self.base_size, crop_size=self.image_size, ctx=self.ctx[0], alpha=self.alpha)

FCN model structure

    class FCN(SegBaseModel):
        def __init__(self, nclass, backbone='resnet50', aux=True, ctx=cpu(), base_size=520, crop_size=480, alpha=1, **kwargs):
            self.alpha = alpha
            super(FCN, self).__init__(nclass, aux, backbone, ctx=ctx, base_size=base_size, crop_size=crop_size, alpha=self.alpha, **kwargs)
            with self.name_scope():
                # ResNet-18/34 end with 512 channels, deeper ResNets with 2048
                if backbone == 'resnet18' or backbone == 'resnet34':
                    in_channels = 512 // self.alpha
                else:
                    in_channels = 2048 // self.alpha
                self.head = _FCNHead(in_channels, nclass, **kwargs)  # 2048
                self.head.initialize(ctx=ctx)
                self.head.collect_params().setattr('lr_mult', 10)
                if self.aux:
                    self.auxlayer = _FCNHead(1024 // self.alpha, nclass, **kwargs)  # 1024
                    self.auxlayer.initialize(ctx=ctx)
                    self.auxlayer.collect_params().setattr('lr_mult', 10)

        def hybrid_forward(self, F, x):
            c3, c4 = self.base_forward(x)
            outputs = []
            x = self.head(c4)
            # Upsample the logits back to the input resolution
            x = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            outputs.append(x)
            if self.aux:
                auxout = self.auxlayer(c3)
                auxout = F.contrib.BilinearResize2D(auxout, **self._up_kwargs)
                outputs.append(auxout)
            return tuple(outputs)
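When aux is enabled the network returns a tuple (main output, auxiliary output). A common way to train with such a pair is a weighted sum of two per-pixel softmax cross-entropy terms. The NumPy sketch below illustrates that idea only; it is not the project's MixSoftmaxCrossEntropyLoss, and the function names and the aux_weight value of 0.2 are assumptions.

```python
import numpy as np

def softmax_cross_entropy(logits, target):
    """Mean per-pixel cross-entropy.

    logits: float array of shape (C, H, W); target: int array of shape (H, W).
    """
    # Numerically stable log-softmax over the class axis
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    # Pick the log-probability of the true class at every pixel
    rows, cols = np.indices(target.shape)
    picked = log_prob[target, rows, cols]
    return float(-picked.mean())

def mixed_loss(main_logits, aux_logits, target, aux_weight=0.2):
    """Main loss plus a down-weighted auxiliary loss (aux_weight is assumed)."""
    return (softmax_cross_entropy(main_logits, target)
            + aux_weight * softmax_cross_entropy(aux_logits, target))

# With all-zero logits every class is equally likely, so each term is log(C)
logits = np.zeros((3, 2, 2), dtype=np.float64)
target = np.zeros((2, 2), dtype=np.int64)
loss = mixed_loss(logits, logits, target)
```

The auxiliary branch is only a training aid: at prediction time only the first element of the output tuple is used.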

PSPNet model structure

    class PSPNet(SegBaseModel):
        def __init__(self, nclass, backbone='resnet50', aux=True, ctx=cpu(), base_size=520, crop_size=480, alpha=1, **kwargs):
            self.alpha = alpha
            super(PSPNet, self).__init__(nclass, aux, backbone, ctx=ctx, base_size=base_size, crop_size=crop_size, alpha=self.alpha, **kwargs)
            with self.name_scope():
                if backbone == 'resnet18' or backbone == 'resnet34':
                    in_channels = 512 // self.alpha
                else:
                    in_channels = 2048 // self.alpha
                # The pyramid pooling head works on the 1/8-resolution feature map
                self.head = _PSPHead(in_channels, nclass, feature_map_height=self._up_kwargs['height'] // 8, feature_map_width=self._up_kwargs['width'] // 8, alpha=self.alpha, **kwargs)
                self.head.initialize(ctx=ctx)
                self.head.collect_params().setattr('lr_mult', 10)
                if self.aux:
                    self.auxlayer = _FCNHead(1024 // self.alpha, nclass, **kwargs)
                    self.auxlayer.initialize(ctx=ctx)
                    self.auxlayer.collect_params().setattr('lr_mult', 10)

        def hybrid_forward(self, F, x):
            c3, c4 = self.base_forward(x)
            outputs = []
            x = self.head(c4)
            x = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            outputs.append(x)
            if self.aux:
                auxout = self.auxlayer(c3)
                auxout = F.contrib.BilinearResize2D(auxout, **self._up_kwargs)
                outputs.append(auxout)
            return tuple(outputs)

        def demo(self, x):
            return self.predict(x)

        def predict(self, x):
            # Use the actual input size for the final upsampling
            h, w = x.shape[2:]
            self._up_kwargs['height'] = h
            self._up_kwargs['width'] = w
            c3, c4 = self.base_forward(x)
            outputs = []
            x = self.head.demo(c4)
            import mxnet.ndarray as F
            pred = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            return pred

DeepLabV3 model structure

    class DeepLabV3(SegBaseModel):
        def __init__(self, nclass, backbone='resnet50', aux=True, ctx=cpu(), height=None, width=None, base_size=520, crop_size=480, alpha=1, **kwargs):
            self.alpha = alpha
            super(DeepLabV3, self).__init__(nclass, aux, backbone, ctx=ctx, base_size=base_size, crop_size=crop_size, alpha=self.alpha, **kwargs)
            height = height if height is not None else crop_size
            width = width if width is not None else crop_size
            with self.name_scope():
                if backbone == 'resnet18' or backbone == 'resnet34':
                    in_channels = 512 // self.alpha
                else:
                    in_channels = 2048 // self.alpha
                self.head = _DeepLabHead(in_channels, nclass, height=height // 8, width=width // 8, alpha=self.alpha, **kwargs)
                self.head.initialize(ctx=ctx)
                self.head.collect_params().setattr('lr_mult', 10)
                if self.aux:
                    self.auxlayer = _FCNHead(1024 // self.alpha, nclass, **kwargs)
                    self.auxlayer.initialize(ctx=ctx)
                    self.auxlayer.collect_params().setattr('lr_mult', 10)
            self._up_kwargs = {'height': height, 'width': width}

        def hybrid_forward(self, F, x):
            c3, c4 = self.base_forward(x)
            outputs = []
            x = self.head(c4)
            x = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            outputs.append(x)
            if self.aux:
                auxout = self.auxlayer(c3)
                auxout = F.contrib.BilinearResize2D(auxout, **self._up_kwargs)
                outputs.append(auxout)
            return tuple(outputs)

        def demo(self, x):
            return self.predict(x)

        def predict(self, x):
            h, w = x.shape[2:]
            self._up_kwargs['height'] = h
            self._up_kwargs['width'] = w
            c3, c4 = self.base_forward(x)
            x = self.head.demo(c4)
            import mxnet.ndarray as F
            pred = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            return pred

DeepLabV3+ model structure

    class DeepLabV3Plus(HybridBlock):
        def __init__(self, nclass, backbone='xception65', aux=True, ctx=cpu(), height=None, width=None, base_size=576, crop_size=512, dilated=True, alpha=1, **kwargs):
            super(DeepLabV3Plus, self).__init__()
            self.alpha = alpha
            self.aux = aux
            height = height if height is not None else crop_size
            width = width if width is not None else crop_size
            output_stride = 8 if dilated else 32
            with self.name_scope():
                pretrained = get_xcetption(num_classes=nclass, backbone=backbone, output_stride=output_stride, ctx=ctx, alpha=self.alpha, **kwargs)
                # base network
                self.conv1 = pretrained.conv1
                self.bn1 = pretrained.bn1
                self.relu = pretrained.relu
                self.conv2 = pretrained.conv2
                self.bn2 = pretrained.bn2
                self.block1 = pretrained.block1
                self.block2 = pretrained.block2
                self.block3 = pretrained.block3
                # Middle flow
                self.midflow = pretrained.midflow
                # Exit flow
                self.block20 = pretrained.block20
                self.conv3 = pretrained.conv3
                self.bn3 = pretrained.bn3
                self.conv4 = pretrained.conv4
                self.bn4 = pretrained.bn4
                self.conv5 = pretrained.conv5
                self.bn5 = pretrained.bn5
                # deeplabv3 plus
                self.head = _DeepLabHead(nclass, height=height // 4, width=width // 4, alpha=self.alpha, **kwargs)
                self.head.initialize(ctx=ctx)
                self.head.collect_params().setattr('lr_mult', 10)
                if self.aux:
                    self.auxlayer = _FCNHead(728 // self.alpha, nclass, **kwargs)
                    self.auxlayer.initialize(ctx=ctx)
                    self.auxlayer.collect_params().setattr('lr_mult', 10)
            self._up_kwargs = {'height': height, 'width': width}
            self.base_size = base_size
            self.crop_size = crop_size

        def base_forward(self, x):
            # Entry flow
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.conv2(x)
            x = self.bn2(x)
            x = self.relu(x)
            x = self.block1(x)
            # add relu here
            x = self.relu(x)
            low_level_feat = x
            x = self.block2(x)
            x = self.block3(x)
            # Middle flow
            x = self.midflow(x)
            mid_level_feat = x
            # Exit flow
            x = self.block20(x)
            x = self.relu(x)
            x = self.conv3(x)
            x = self.bn3(x)
            x = self.relu(x)
            x = self.conv4(x)
            x = self.bn4(x)
            x = self.relu(x)
            x = self.conv5(x)
            x = self.bn5(x)
            x = self.relu(x)
            return low_level_feat, mid_level_feat, x

        def hybrid_forward(self, F, x):
            c1, c3, c4 = self.base_forward(x)
            outputs = []
            # The decoder head fuses high-level (c4) and low-level (c1) features
            x = self.head(c4, c1)
            x = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            outputs.append(x)
            if self.aux:
                auxout = self.auxlayer(c3)
                auxout = F.contrib.BilinearResize2D(auxout, **self._up_kwargs)
                outputs.append(auxout)
            return tuple(outputs)

        def demo(self, x):
            h, w = x.shape[2:]
            self._up_kwargs['height'] = h
            self._up_kwargs['width'] = w
            self.head.aspp.concurent[-1]._up_kwargs['height'] = h // 8
            self.head.aspp.concurent[-1]._up_kwargs['width'] = w // 8
            pred = self.forward(x)
            if self.aux:
                pred = pred[0]
            return pred

        def evaluate(self, x):
            return self.forward(x)[0]

ICNet model structure

    class ICNet(SegBaseModel):
        def __init__(self, nclass, backbone='resnet50', aux=False, ctx=cpu(), height=None, width=None, base_size=520, crop_size=480, lr_mult=10, alpha=1, **kwargs):
            self.alpha = alpha
            super(ICNet, self).__init__(nclass, aux=aux, backbone=backbone, ctx=ctx, base_size=base_size, crop_size=crop_size, alpha=self.alpha, **kwargs)
            height = height if height is not None else crop_size
            width = width if width is not None else crop_size
            self._up_kwargs = {'height': height, 'width': width}
            self.base_size = base_size
            self.crop_size = crop_size
            with self.name_scope():
                # Full-resolution branch: three stride-2 convolutions
                self.conv_sub1 = nn.HybridSequential()
                with self.conv_sub1.name_scope():
                    self.conv_sub1.add(ConvBnRelu(3, 32 // self.alpha, 3, 2, 1, **kwargs),
                                       ConvBnRelu(32 // self.alpha, 32 // self.alpha, 3, 2, 1, **kwargs),
                                       ConvBnRelu(32 // self.alpha, 64 // self.alpha, 3, 2, 1, **kwargs))
                self.conv_sub1.initialize(ctx=ctx)
                self.conv_sub1.collect_params().setattr('lr_mult', lr_mult)
                if backbone == 'resnet18' or backbone == 'resnet34':
                    in_channels = 512 // self.alpha
                else:
                    in_channels = 2048 // self.alpha
                self.psp_head = _PSPHead(in_channels, nclass,
                                         feature_map_height=self._up_kwargs['height'] // 32,
                                         feature_map_width=self._up_kwargs['width'] // 32,
                                         alpha=self.alpha,
                                         **kwargs)
                self.psp_head.block = self.psp_head.block[:-1]
                self.psp_head.initialize(ctx=ctx)
                self.psp_head.collect_params().setattr('lr_mult', lr_mult)
                self.head = _ICHead(nclass=nclass,
                                    height=self._up_kwargs['height'],
                                    width=self._up_kwargs['width'],
                                    alpha=self.alpha,
                                    **kwargs)
                self.head.initialize(ctx=ctx)
                self.head.collect_params().setattr('lr_mult', lr_mult)
                self.conv_sub4 = ConvBnRelu(512 // self.alpha, 256 // self.alpha, 1, **kwargs)
                self.conv_sub4.initialize(ctx=ctx)
                self.conv_sub4.collect_params().setattr('lr_mult', lr_mult)
                self.conv_sub2 = ConvBnRelu(in_channels // 4, 256 // self.alpha, 1, **kwargs)
                self.conv_sub2.initialize(ctx=ctx)
                self.conv_sub2.collect_params().setattr('lr_mult', lr_mult)

        def hybrid_forward(self, F, x):
            # Branch 1: full-resolution input
            x_sub1_out = self.conv_sub1(x)
            # Branch 2: half-resolution input through the first backbone stages
            x_sub2 = F.contrib.BilinearResize2D(x, height=self._up_kwargs['height'] // 2, width=self._up_kwargs['width'] // 2)
            x = self.conv1(x_sub2)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)
            x = self.layer1(x)
            x_sub2_out = self.layer2(x)
            # Branch 4: features resized to 1/32 before the remaining stages
            x_sub4 = F.contrib.BilinearResize2D(x_sub2_out, height=self._up_kwargs['height'] // 32, width=self._up_kwargs['width'] // 32)
            x = self.layer3(x_sub4)
            x = self.layer4(x)
            x_sub4_out = self.psp_head(x)
            x_sub4_out = self.conv_sub4(x_sub4_out)
            x_sub2_out = self.conv_sub2(x_sub2_out)
            res = self.head(x_sub1_out, x_sub2_out, x_sub4_out)
            return res

        def demo(self, x):
            return self.predict(x)

        def predict(self, x):
            h, w = x.shape[2:]
            self._up_kwargs['height'] = h
            self._up_kwargs['width'] = w
            import mxnet.ndarray as F
            x_sub1_out = self.conv_sub1(x)
            x_sub2 = F.contrib.BilinearResize2D(x, height=self._up_kwargs['height'] // 2, width=self._up_kwargs['width'] // 2)
            x = self.conv1(x_sub2)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)
            x = self.layer1(x)
            x_sub2_out = self.layer2(x)
            x_sub4 = F.contrib.BilinearResize2D(x_sub2_out, height=self._up_kwargs['height'] // 32, width=self._up_kwargs['width'] // 32)
            x = self.layer3(x_sub4)
            x = self.layer4(x)
            x_sub4_out = self.psp_head.demo(x)
            x_sub4_out = self.conv_sub4(x_sub4_out)
            x_sub2_out = self.conv_sub2(x_sub2_out)
            res = self.head.demo(x_sub1_out, x_sub2_out, x_sub4_out)
            return res[0]

Fast-SCNN model structure

    class FastSCNN(HybridBlock):
        def __init__(self, nclass, aux=True, ctx=cpu(), height=None, width=None, base_size=2048, crop_size=1024, alpha=1, **kwargs):
            super(FastSCNN, self).__init__()
            self.alpha = alpha
            height = height if height is not None else crop_size
            width = width if width is not None else crop_size
            self._up_kwargs = {'height': height, 'width': width}
            self.base_size = base_size
            self.crop_size = crop_size
            self.aux = aux
            with self.name_scope():
                self.learning_to_downsample = LearningToDownsample(32 // self.alpha, 48 // self.alpha, 64 // self.alpha, **kwargs)
                self.learning_to_downsample.initialize(ctx=ctx)
                self.global_feature_extractor = GlobalFeatureExtractor(64 // self.alpha, [64 // self.alpha, 96 // self.alpha, 128 // self.alpha], 128 // self.alpha, 6, [3, 3, 3], height=height // 32, width=width // 32, **kwargs)
                self.global_feature_extractor.initialize(ctx=ctx)
                self.feature_fusion = FeatureFusionModule(64 // self.alpha, 128 // self.alpha, 128 // self.alpha, height=height // 8, width=width // 8, **kwargs)
                self.feature_fusion.initialize(ctx=ctx)
                self.classifier = Classifer(128 // self.alpha, nclass, **kwargs)
                self.classifier.initialize(ctx=ctx)
                if self.aux:
                    self.auxlayer = _auxHead(in_channels=64 // self.alpha, channels=64 // self.alpha, nclass=nclass, **kwargs)
                    self.auxlayer.initialize(ctx=ctx)
                    self.auxlayer.collect_params().setattr('lr_mult', 10)

        def hybrid_forward(self, F, x):
            higher_res_features = self.learning_to_downsample(x)
            x = self.global_feature_extractor(higher_res_features)
            x = self.feature_fusion(higher_res_features, x)
            x = self.classifier(x)
            x = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            outputs = []
            outputs.append(x)
            if self.aux:
                auxout = self.auxlayer(higher_res_features)
                auxout = F.contrib.BilinearResize2D(auxout, **self._up_kwargs)
                outputs.append(auxout)
            return tuple(outputs)

        def demo(self, x):
            # Adapt every internal resize to the actual input size
            h, w = x.shape[2:]
            self._up_kwargs['height'] = h
            self._up_kwargs['width'] = w
            self.global_feature_extractor.ppm._up_kwargs = {'height': h // 32, 'width': w // 32}
            self.feature_fusion._up_kwargs = {'height': h // 8, 'width': w // 8}
            higher_res_features = self.learning_to_downsample(x)
            x = self.global_feature_extractor(higher_res_features)
            x = self.feature_fusion(higher_res_features, x)
            x = self.classifier(x)
            import mxnet.ndarray as F
            x = F.contrib.BilinearResize2D(x, **self._up_kwargs)
            return x

        def predict(self, x):
            return self.demo(x)

        def evaluate(self, x):
            return self.forward(x)[0]

DANet model structure

    class DANet(SegBaseModel):
        def __init__(self, nclass, backbone='resnet50', aux=False, ctx=cpu(), height=None, width=None, base_size=520, crop_size=480, dilated=True, alpha=1, **kwargs):
            self.alpha = alpha
            super(DANet, self).__init__(nclass, aux, backbone, ctx=ctx, base_size=base_size, crop_size=crop_size, alpha=self.alpha, **kwargs)
            self.aux = aux
            height = height if height is not None else crop_size
            width = width if width is not None else crop_size
            if backbone == 'resnet18' or backbone == 'resnet34':
                in_channels = 512 // self.alpha
            else:
                in_channels = 2048 // self.alpha
            with self.name_scope():
                self.head = DANetHead(in_channels, nclass, backbone, alpha=self.alpha, **kwargs)
                self.head.initialize(ctx=ctx)
            self._up_kwargs = {'height': height, 'width': width}

        def hybrid_forward(self, F, x):
            c3, c4 = self.base_forward(x)
            x = self.head(c4)
            x = list(x)
            # The head returns three outputs; each is upsampled to the input size
            x[0] = F.contrib.BilinearResize2D(x[0], **self._up_kwargs)
            x[1] = F.contrib.BilinearResize2D(x[1], **self._up_kwargs)
            x[2] = F.contrib.BilinearResize2D(x[2], **self._up_kwargs)
            outputs = [x[0]]
            outputs.append(x[1])
            outputs.append(x[2])
            return tuple(outputs)

7. Model training

Learning rate settings

    # A zero-epoch linear warm-up followed by polynomial decay over all epochs
    self.lr_scheduler = LRSequential([
        LRScheduler('linear', base_lr=0, target_lr=learning_rate,
                    nepochs=0, iters_per_epoch=len(self.train_data)),
        LRScheduler(mode='poly', base_lr=learning_rate,
                    nepochs=TrainNum-0,
                    iters_per_epoch=len(self.train_data),
                    power=0.9)
    ])
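The 'poly' mode is commonly defined as lr = target_lr + (base_lr - target_lr) * (1 - t / T)^power, where t is the current iteration and T the total number of iterations. The function below is a small sketch of that formula under the assumption that the project's LRScheduler follows this usual definition with a final learning rate of 0; poly_lr and its parameter names are hypothetical.

```python
def poly_lr(base_lr, iteration, total_iters, power=0.9, target_lr=0.0):
    """Polynomial learning-rate decay from base_lr down to target_lr."""
    progress = min(iteration, total_iters) / total_iters
    return target_lr + (base_lr - target_lr) * (1.0 - progress) ** power

# Example: 120 epochs of 50 iterations each, starting at 1e-4
total = 120 * 50
schedule = [poly_lr(1e-4, t, total) for t in (0, total // 2, total)]
```

With power below 1 the rate stays relatively high for most of training and drops quickly near the end.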

Optimizer settings

    if optim == 'sgd':
        optimizer_params = {'lr_scheduler': self.lr_scheduler,
                            'wd': 1e-4,
                            'momentum': 0.9,
                            'learning_rate': learning_rate}
    else:
        optimizer_params = {'lr_scheduler': self.lr_scheduler,
                            'wd': 1e-4,
                            'learning_rate': learning_rate}
    if self.dtype == 'float16':
        # Keep float32 master weights when training in half precision
        optimizer_params['multi_precision'] = True
    self.optimizer = gluon.Trainer(self.net.module.collect_params(), optim, optimizer_params, kvstore=kv)

Training loop

    for i, (data, target) in enumerate(tbar):
        # Allows training to be stopped from outside the loop
        if self.TrainWhileFlag == False:
            break
        with autograd.record(True):
            outputs = self.net(data.astype(self.dtype, copy=False))
            losses = self.criterion(outputs, target)
            mx.nd.waitall()
            autograd.backward(losses)
        self.optimizer.step(self.batch_size)
        # Average the loss over the per-device losses
        for loss in losses:
            train_loss += np.mean(loss.asnumpy()) / len(losses)
        tbar.set_description('Epoch: %d:%d -> training loss: %.5f' % (epoch+1, TrainNum, train_loss/(i+1)))
        mx.nd.waitall()
        self.status_Data['train_loss'] = train_loss/(i+1)
        self.status_Data['train_progress'] = (epoch*len(self.train_data) + i + 1)/(len(self.train_data)*TrainNum)
        print('Epoch: %d:%04d/%04d -> training loss: %.5f' % (epoch, i, len(self.train_data), train_loss/(i+1)))

8. Model prediction

    def predict(self, img_cv):
        if self.status_Data['can_test'] == False:
            return None
        start_time = time.time()
        base_imageSize = img_cv.shape
        # Resize to the network input size and convert OpenCV's BGR to RGB
        img_cv_ = cv2.resize(img_cv, (self.image_size, self.image_size))
        img = Image.fromarray(cv2.cvtColor(img_cv_, cv2.COLOR_BGR2RGB)).convert('RGB')
        img = mx.ndarray.array(np.array(img), self.ctx[0])
        data = self.input_transform(img)
        data = data.as_in_context(self.ctx[0])
        if len(data.shape) < 4:
            data = nd.expand_dims(data, axis=0)
        data = data.astype(self.dtype, copy=False)
        # Per-pixel class index = argmax over the class axis
        predict = self.model(data)[0]
        target = nd.argmax(predict, axis=1)
        predict = self.predict2img(target, self.colormap)
        predict_reslabel = self.predict2img(target, self.colormap_label)
        # Resize the results back to the original image size
        image_result = cv2.resize(predict, (base_imageSize[1], base_imageSize[0]))
        predict_reslabel = cv2.resize(predict_reslabel, (base_imageSize[1], base_imageSize[0]))
        img_add = cv2.addWeighted(img_cv, 1.0, image_result, 0.5, 0)
        predict_reslabel = cv2.cvtColor(predict_reslabel, cv2.COLOR_BGR2GRAY)
        DataJson = {}
        for each in range(len(self.classes_names)-1):
            DataJson[self.classes_names[each+1]] = []
            # Isolate the pixels whose gray value equals this class index
            _, thresh = cv2.threshold(predict_reslabel, each + 1, 255, cv2.THRESH_TOZERO_INV)
            _, thresh = cv2.threshold(thresh, each, 255, cv2.THRESH_BINARY_INV)
            contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
            # The first contour is skipped; the remaining ones are stored as polygons
            for i in range(len(contours) - 1):
                img_cv = cv2.drawContours(img_cv, [contours[i + 1]], -1, 0, 2)
                polygonList = []
                for each_point in range(len(contours[i + 1])):
                    polygonList.append([contours[i + 1][each_point][0][0], contours[i + 1][each_point][0][1]])
                DataJson[self.classes_names[each+1]].append(polygonList)
        result_value = {
            "classes_names": self.classes_names,
            "image_result": image_result,
            "colormap": self.colormap,
            "image_result_label": predict_reslabel,
            "polygon": DataJson,
            "polygon_img": img_cv,
            "img_add": img_add,
            "time": (time.time() - start_time) * 1000
        }
        return result_value
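The core of the prediction step is turning class scores into a color mask and blending it over the input. The NumPy sketch below shows that idea in isolation. It is an illustration rather than the project's predict2img: the function name, the toy colormap and the rounding behavior are assumptions, and the blend only approximates cv2.addWeighted(img, 1.0, mask, 0.5, 0).

```python
import numpy as np

def logits_to_overlay(logits, colormap, image_bgr, alpha=0.5):
    """Convert (C, H, W) scores into labels, a color mask and a blended overlay."""
    # Class index per pixel
    labels = logits.argmax(axis=0)
    # Look up one color per class index -> (H, W, 3)
    color = np.asarray(colormap, dtype=np.uint8)[labels]
    # image * 1.0 + mask * alpha, saturated to the uint8 range
    blended = image_bgr.astype(np.float32) + alpha * color.astype(np.float32)
    overlay = np.clip(np.rint(blended), 0, 255).astype(np.uint8)
    return labels, color, overlay

# Toy example: 2 classes on a 1 x 2 image
logits = np.array([[[1.0, 0.0]],
                   [[0.0, 1.0]]])
colormap = [[0, 0, 0], [0, 0, 255]]
image = np.full((1, 2, 3), 200, dtype=np.uint8)
labels, color, overlay = logits_to_overlay(logits, colormap, image)
```

Background pixels keep their original color because the background entry of the colormap is black, so only foreground classes are tinted.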

三、主入口

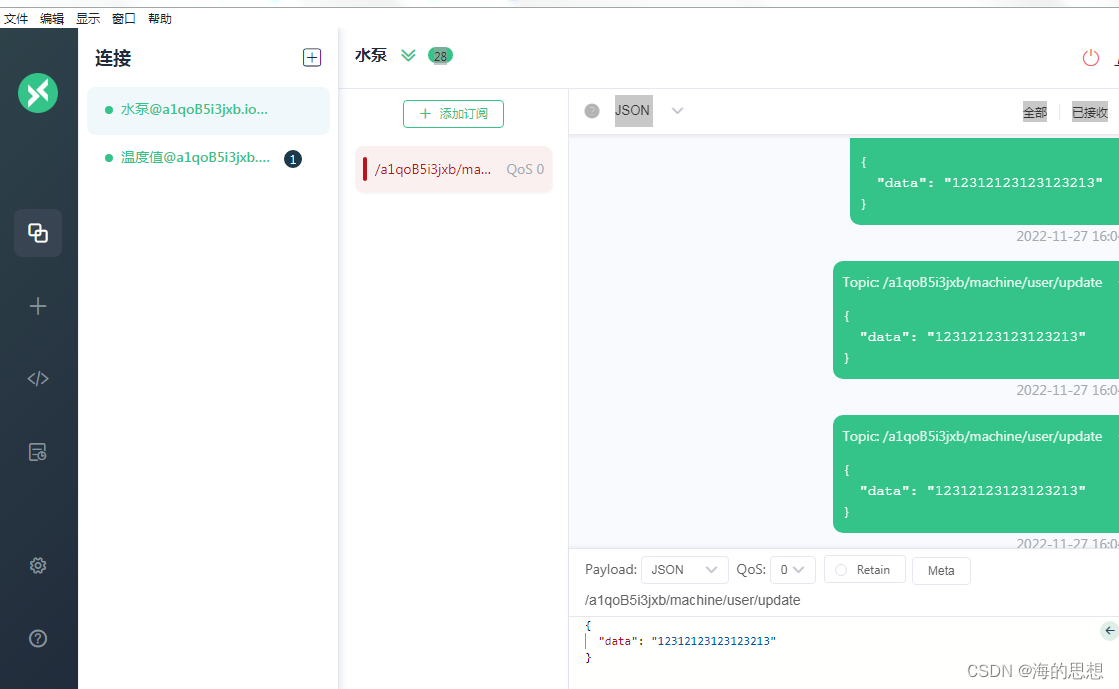

这里可以根据多个模型自定义选择,调用和修改及其方便

主入口代码:

if __name__ == '__main__':

# ctu = Ctu_Segmentation(USEGPU = '0', image_size = 512, aux=True)

# ctu.InitModel(DataDir=r'E:\DL_Project\DataSet\DataSet_Segmentation\DataSet_YaoPian\DataImage', DataLabel = None,batch_size=2,num_workers = 0, Pre_Model= None,network='fcn',backbone='resnet18',dtype='float32',alpha=1)

# ctu.train(TrainNum=120, learning_rate=0.0001,optim = 'adam', ModelPath='./Model_LSEC')

ctu = Ctu_Segmentation(USEGPU = '0')

ctu.LoadModel('./Model_LSEC_fcn')

cv2.namedWindow("result", 0)

cv2.resizeWindow("result", 640, 480)

for root, dirs, files in os.walk(r'E:\DL_Project\DataSet\DataSet_Segmentation\DataSet_YaoPian\DataImage'):

for f in files:

img_cv = ctu.read_image(os.path.join(root, f))

if img_cv is None:

continue

res = ctu.predict(img_cv)

if res is not None:

print(os.path.join(root, f))

print("耗时:" + str(res['time']) + ' ms')

# print(res['polygon'])

cv2.imshow("result", res['img_add'])

cv2.waitKey()

四、训练过程截图

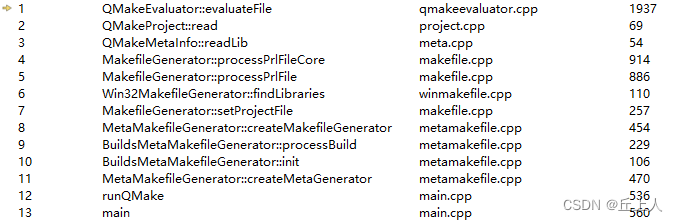

因已训练模型文件已经删除(在模型测试时占用过多空间),有序有机会会上传模型训练结果,此处为模型训练过程,可观察到逐步收敛

训练生成文件

五、效果展示