Contents

- SimGNN: A Neural Network Approach to Fast Graph Similarity Computation

- 1. Data

- 2. Model

- 2.1 What each Python file does

- 2.2 Key functions and classes

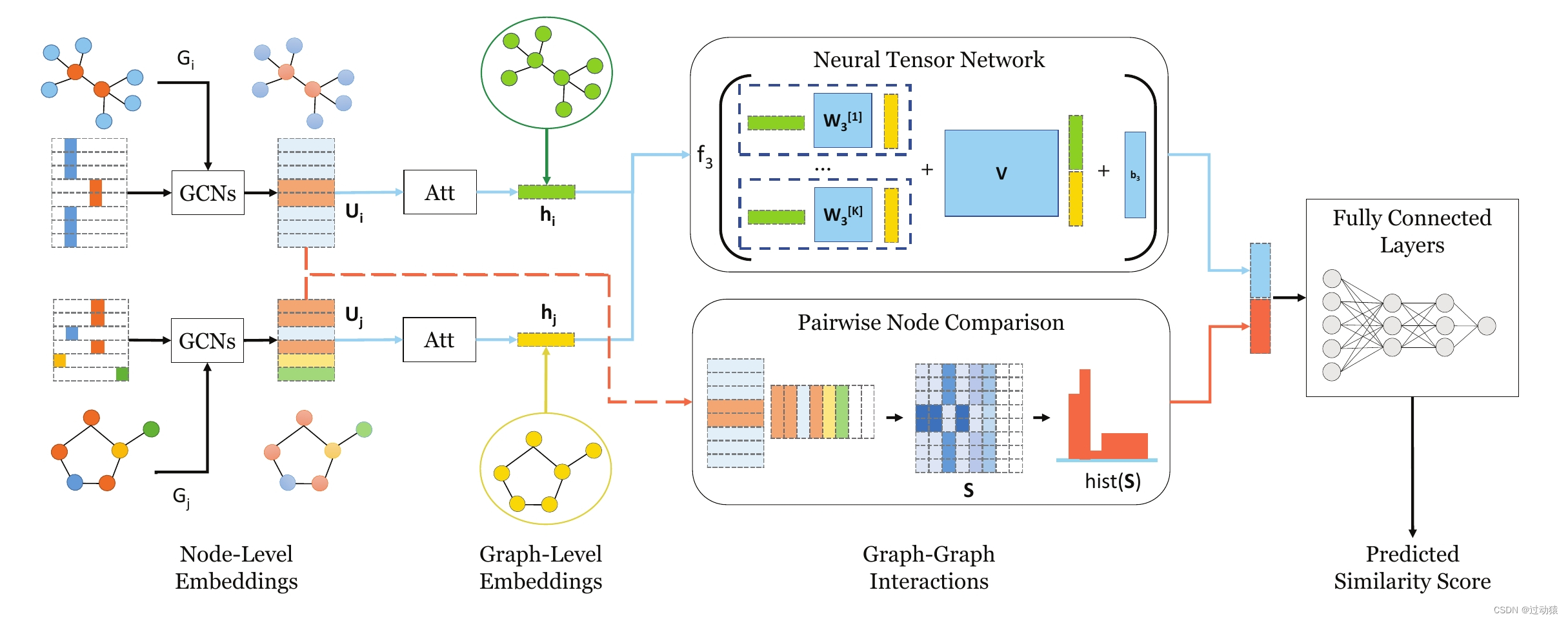

SimGNN: A Neural Network Approach to Fast Graph Similarity Computation

Paper: SimGNN: A Neural Network Approach to Fast Graph Similarity Computation

Code: https://github.com/benedekrozemberczki/SimGNN

1. Data

Example:

{"graph_1": [[0, 8], [0, 9], [0, 2], [0, 3], [0, 11], [1, 2], [1, 3], [1, 5], [1, 6], [1, 7], [2, 3], [2, 5], [2, 6], [2, 7], [2, 8], [2, 10], [2, 11], [3, 5], [3, 7], [3, 8], [3, 10], [3, 11], [4, 9], [4, 10], [4, 5], [4, 6], [4, 7], [5, 7], [5, 8], [5, 11], [6, 7], [6, 8], [6, 11], [7, 8], [7, 10], [7, 11], [8, 9], [10, 11]], "ged": 32, "graph_2": [[0, 1], [0, 2], [0, 4], [1, 8], [1, 10], [1, 2], [1, 7], [2, 4], [2, 7], [2, 9], [2, 11], [3, 10], [3, 11], [3, 5], [3, 6], [3, 7], [4, 9], [4, 11], [5, 8], [5, 9], [5, 6], [6, 9], [7, 9], [7, 10], [7, 11], [8, 9], [8, 10], [9, 10], [9, 11], [10, 11]], "labels_2": ["3", "5", "6", "5", "4", "4", "3", "6", "4", "8", "6", "6"], "labels_1": ["5", "5", "9", "8", "5", "7", "6", "9", "7", "3", "5", "7"]}

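Each sample has five fields:

graph_1: the edge list of graph 1, one [source, target] pair of node indices per edge (an edge list, not a dense adjacency matrix)

graph_2: the edge list of graph 2

labels_1: the node labels of graph 1, one per node in node-index order; they are encoded into the node feature matrix

labels_2: the node labels of graph 2

ged: the graph edit distance (GED) between the two graphs; a smaller value means more similar graphs, so this is a distance rather than a similarity score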
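The sketch below shows one way to turn such a sample into model inputs and a training target. It is an illustration under assumptions, not the repository's code: the helper names (`to_edge_index`, `one_hot`, `similarity_target`) and the tiny sample are made up, and the target follows the normalisation described in the SimGNN paper (GED divided by the mean node count, then exp(-x)).

```python
import math

# Trimmed-down sample in the same format as the example above (values are made up).
sample = {
    "graph_1": [[0, 1], [1, 2]],
    "labels_1": ["2", "3", "2"],
    "graph_2": [[0, 1]],
    "labels_2": ["2", "3"],
    "ged": 2,
}

def to_edge_index(edges):
    """Return [sources, targets] containing both directions of every edge."""
    both = edges + [[v, u] for u, v in edges]
    sources = [u for u, _ in both]
    targets = [v for _, v in both]
    return [sources, targets]

def one_hot(labels, vocabulary):
    """One row per node, one column per distinct label in the vocabulary."""
    return [[1.0 if label == known else 0.0 for known in vocabulary] for label in labels]

def similarity_target(pair):
    """Map GED into (0, 1]: normalise by the mean node count, then apply exp(-x)."""
    mean_nodes = 0.5 * (len(pair["labels_1"]) + len(pair["labels_2"]))
    return math.exp(-pair["ged"] / mean_nodes)

# The label vocabulary is shared by both graphs so the feature columns line up.
vocabulary = sorted(set(sample["labels_1"]) | set(sample["labels_2"]))
edge_index_1 = to_edge_index(sample["graph_1"])
features_1 = one_hot(sample["labels_1"], vocabulary)
target = similarity_target(sample)
```

Adding the reversed edges makes the graph undirected for the graph convolution, and squashing the GED into (0, 1] is what lets the model end with a sigmoid.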

2. Model

2.1 What each Python file does

layers.py -- the attention layer and the Neural Tensor Network layer

simgnn.py -- the SimGNN model

utils.py -- helper functions

param_parser.py -- command-line argument parsing

main.py -- entry point

The model is configured with the following command-line options:

--filters-1 INT Number of filters in 1st GCN layer. Default is 128.

--filters-2 INT Number of filters in 2nd GCN layer. Default is 64.

--filters-3 INT Number of filters in 3rd GCN layer. Default is 32.

--tensor-neurons INT Neurons in tensor network layer. Default is 16.

--bottle-neck-neurons INT Bottleneck layer neurons. Default is 16.

--bins INT Number of histogram bins. Default is 16.

--batch-size INT Number of pairs processed per batch. Default is 128.

--epochs INT Number of SimGNN training epochs. Default is 5.

--dropout FLOAT Dropout rate. Default is 0.5.

--learning-rate FLOAT Learning rate. Default is 0.001.

--weight-decay FLOAT Weight decay. Default is 10^-5.

--histogram BOOL Include histogram features. Default is False.
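A parser along the following lines would produce the args object that the model code reads from. This is a sketch built only from the option list above, not a copy of param_parser.py: `build_parser` is a hypothetical name, and treating `--histogram` as an on/off switch is an assumption.

```python
import argparse

def build_parser():
    """Hypothetical parser reproducing the options and defaults listed above."""
    parser = argparse.ArgumentParser(description="Run SimGNN.")
    parser.add_argument("--filters-1", type=int, default=128, help="Filters in 1st GCN layer.")
    parser.add_argument("--filters-2", type=int, default=64, help="Filters in 2nd GCN layer.")
    parser.add_argument("--filters-3", type=int, default=32, help="Filters in 3rd GCN layer.")
    parser.add_argument("--tensor-neurons", type=int, default=16, help="Tensor network neurons.")
    parser.add_argument("--bottle-neck-neurons", type=int, default=16, help="Bottleneck neurons.")
    parser.add_argument("--bins", type=int, default=16, help="Number of histogram bins.")
    parser.add_argument("--batch-size", type=int, default=128, help="Pairs per batch.")
    parser.add_argument("--epochs", type=int, default=5, help="Training epochs.")
    parser.add_argument("--dropout", type=float, default=0.5, help="Dropout rate.")
    parser.add_argument("--learning-rate", type=float, default=0.001, help="Learning rate.")
    parser.add_argument("--weight-decay", type=float, default=1e-5, help="Weight decay.")
    # Assumption: the flag is a switch that is off unless it is passed.
    parser.add_argument("--histogram", action="store_true", help="Include histogram features.")
    return parser

# argparse turns dashes into underscores, so --filters-3 becomes args.filters_3.
args = build_parser().parse_args([])
custom = build_parser().parse_args(["--histogram", "--bins", "8"])
```

This is why the model code below refers to attributes such as `self.args.filters_3` and `self.args.bottle_neck_neurons`.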

2.2 Key functions and classes

- Attention layer

- `__init__` (constructor)

What it does:

stores the argument object args;

creates the weight matrix of shape [self.args.filters_3, self.args.filters_3] by calling setup_weights();

initializes the weight matrix by calling init_parameters().

    def __init__(self, args):
        """
        :param args: Arguments object.
        """
        super(AttentionModule, self).__init__()
        self.args = args
        self.setup_weights()
        self.init_parameters()

- setup_weights function

    def setup_weights(self):
        """
        Defining weights.
        """
        self.weight_matrix = torch.nn.Parameter(
            torch.Tensor(self.args.filters_3, self.args.filters_3)
        )

- init_parameters function

    def init_parameters(self):
        """
        Initializing weights.
        """
        torch.nn.init.xavier_uniform_(self.weight_matrix)

- forward function

    # In attention terms: the global context vector plays the role of the query,
    # and the node embeddings serve as both keys and values.
    def forward(self, embedding):  # embedding: [graph_node_num, self.args.filters_3]
        """
        Making a forward propagation pass to create a graph level representation.
        :param embedding: Result of the GCN.
        :return representation: A graph level representation vector.
        """
        # Mean over nodes of the linearly transformed embeddings.
        global_context = torch.mean(torch.matmul(embedding, self.weight_matrix), dim=0)  # [self.args.filters_3]
        transformed_global = torch.tanh(global_context)  # [self.args.filters_3]
        # One attention weight per node (sigmoid, so the weights need not sum to 1).
        sigmoid_scores = torch.sigmoid(torch.mm(embedding, transformed_global.view(-1, 1)))  # [graph_node_num, 1]
        # Weighted sum of the node embeddings.
        representation = torch.mm(torch.t(embedding), sigmoid_scores)  # [self.args.filters_3, 1]
        return representation
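To make the arithmetic of this pooling step concrete, here is a dependency-free sketch on plain Python lists. It is only an illustration: `attention_pool` is a made-up name, and the weight matrix is fixed to the identity instead of being learned.

```python
import math

def attention_pool(embedding, weight):
    """Pool an N x F node embedding into one length-F graph vector.

    embedding: list of N rows with F values each; weight: F x F list of lists.
    """
    n, f = len(embedding), len(weight)
    # embedding @ weight, then the mean over nodes and a tanh: the global context.
    projected = [[sum(row[k] * weight[k][j] for k in range(f)) for j in range(f)]
                 for row in embedding]
    context = [math.tanh(sum(p[j] for p in projected) / n) for j in range(f)]
    # One sigmoid score per node: how well the node agrees with the context.
    scores = [1.0 / (1.0 + math.exp(-sum(row[j] * context[j] for j in range(f))))
              for row in embedding]
    # Score-weighted sum of the node embeddings.
    return [sum(scores[i] * embedding[i][j] for i in range(n)) for j in range(f)]

identity = [[1.0, 0.0], [0.0, 1.0]]
nodes = [[1.0, 0.0], [0.0, 1.0]]
pooled = attention_pool(nodes, identity)
```

Because the scores come from a sigmoid rather than a softmax, they are independent per node and do not sum to 1, so the pooled vector grows with the number of nodes that agree with the global context.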

Full code:

    import torch

    class AttentionModule(torch.nn.Module):
        """
        SimGNN Attention Module to make a pass on graph.
        """
        def __init__(self, args):
            """
            :param args: Arguments object.
            """
            super(AttentionModule, self).__init__()
            self.args = args
            self.setup_weights()
            self.init_parameters()

        def setup_weights(self):
            """
            Defining weights.
            """
            self.weight_matrix = torch.nn.Parameter(
                torch.Tensor(self.args.filters_3, self.args.filters_3)
            )

        def init_parameters(self):
            """
            Initializing weights.
            """
            torch.nn.init.xavier_uniform_(self.weight_matrix)

        def forward(self, embedding):
            """
            Making a forward propagation pass to create a graph level representation.
            :param embedding: Result of the GCN.
            :return representation: A graph level representation vector.
            """
            global_context = torch.mean(torch.matmul(embedding, self.weight_matrix), dim=0)
            transformed_global = torch.tanh(global_context)
            sigmoid_scores = torch.sigmoid(torch.mm(embedding, transformed_global.view(-1, 1)))
            representation = torch.mm(torch.t(embedding), sigmoid_scores)
            return representation

- Neural Tensor Network layer

    class TenorNetworkModule(torch.nn.Module):
        """
        SimGNN Tensor Network module to calculate similarity vector.
        """
        def __init__(self, args):
            """
            :param args: Arguments object.
            """
            super(TenorNetworkModule, self).__init__()
            self.args = args
            self.setup_weights()
            self.init_parameters()

        def setup_weights(self):
            """
            Defining weights.
            """
            # Bilinear tensor: one [filters_3, filters_3] slice per tensor neuron.
            self.weight_matrix = torch.nn.Parameter(
                torch.Tensor(self.args.filters_3, self.args.filters_3, self.args.tensor_neurons)
            )
            # Linear term applied to the concatenated pair of graph embeddings.
            self.weight_matrix_block = torch.nn.Parameter(
                torch.Tensor(self.args.tensor_neurons, 2*self.args.filters_3)
            )
            self.bias = torch.nn.Parameter(torch.Tensor(self.args.tensor_neurons, 1))

        def init_parameters(self):
            """
            Initializing weights.
            """
            torch.nn.init.xavier_uniform_(self.weight_matrix)
            torch.nn.init.xavier_uniform_(self.weight_matrix_block)
            torch.nn.init.xavier_uniform_(self.bias)

        def forward(self, embedding_1, embedding_2):
            """
            Making a forward propagation pass to create a similarity vector.
            :param embedding_1: Result of the 1st embedding after attention.
            :param embedding_2: Result of the 2nd embedding after attention.
            :return scores: A similarity score vector.
            """
            # embedding_1: [self.args.filters_3, 1]
            # embedding_2: [self.args.filters_3, 1]
            scoring = torch.mm(torch.t(embedding_1), self.weight_matrix.view(self.args.filters_3, -1))  # [1, self.args.filters_3*self.args.tensor_neurons]
            scoring = scoring.view(self.args.filters_3, self.args.tensor_neurons)  # [self.args.filters_3, self.args.tensor_neurons]
            scoring = torch.mm(torch.t(scoring), embedding_2)  # [self.args.tensor_neurons, 1]
            combined_representation = torch.cat((embedding_1, embedding_2))  # [2*self.args.filters_3, 1]
            block_scoring = torch.mm(self.weight_matrix_block, combined_representation)  # [self.args.tensor_neurons, 1]
            scores = torch.nn.functional.relu(scoring + block_scoring + self.bias)  # [self.args.tensor_neurons, 1]
            return scores
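Written out per tensor neuron k, the forward pass above computes relu(h1^T W[:, :, k] h2 + V[k] · [h1; h2] + b[k]). The following dependency-free sketch spells this out with explicit loops. The function name `ntn_scores` and the toy weights are made up for illustration; in the real layer W, V and b are learned.

```python
def ntn_scores(h1, h2, W, V, b):
    """Neural Tensor Network scores for two length-F graph embeddings.

    W: F x F x K nested list, V: K x 2F nested list, b: length-K list.
    """
    f, k_count = len(h1), len(b)
    concat = h1 + h2  # [h1; h2], length 2F
    scores = []
    for k in range(k_count):
        # Bilinear term: h1^T W[:, :, k] h2
        bilinear = sum(h1[i] * W[i][j][k] * h2[j] for i in range(f) for j in range(f))
        # Linear term on the concatenated embeddings.
        linear = sum(V[k][m] * concat[m] for m in range(2 * f))
        scores.append(max(0.0, bilinear + linear + b[k]))  # ReLU
    return scores

h1, h2 = [1.0, 2.0], [3.0, 4.0]
# Slice k=0 is the identity (plain dot product), slice k=1 is all zeros.
W = [[[1.0, 0.0], [0.0, 0.0]],
     [[0.0, 0.0], [1.0, 0.0]]]
V = [[0.0, 0.0, 0.0, 0.0],
     [1.0, 0.0, 0.0, 0.0]]
b = [0.0, -5.0]
result = ntn_scores(h1, h2, W, V, b)
```

With these toy weights the first neuron reduces to the dot product of the two embeddings, and the second is clipped to zero by the ReLU.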

- SimGNN model

- `__init__` (constructor):

What it does: stores the argument object args and the number of distinct node labels number_of_labels, then builds the model by calling setup_layers.

    def __init__(self, args, number_of_labels):
        """
        :param args: Arguments object.
        :param number_of_labels: Number of node labels.
        """
        super(SimGNN, self).__init__()
        self.args = args
        self.number_labels = number_of_labels  # number of distinct node labels
        self.setup_layers()

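- calculate_bottleneck_features function:

What it does: sets the input width of the bottleneck layer, depending on whether the histogram features (the lower branch of the architecture) are appended to the tensor network output.

    def calculate_bottleneck_features(self):
        """
        Deciding the shape of the bottleneck layer.
        """
        if self.args.histogram == True:
            self.feature_count = self.args.tensor_neurons + self.args.bins
        else:
            self.feature_count = self.args.tensor_neurons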

- setup_layers function:

What it does: builds the model, which consists of three graph convolution layers, the attention layer, the Neural Tensor Network layer, and two linear layers, the last of which outputs a single predicted value.

    def setup_layers(self):
        """
        Creating the layers.
        """
        self.calculate_bottleneck_features()
        self.convolution_1 = GCNConv(self.number_labels, self.args.filters_1)
        self.convolution_2 = GCNConv(self.args.filters_1, self.args.filters_2)
        self.convolution_3 = GCNConv(self.args.filters_2, self.args.filters_3)
        self.attention = AttentionModule(self.args)
        self.tensor_network = TenorNetworkModule(self.args)
        self.fully_connected_first = torch.nn.Linear(
            self.feature_count, self.args.bottle_neck_neurons
        )
        self.scoring_layer = torch.nn.Linear(self.args.bottle_neck_neurons, 1)

- calculate_histogram function:

    def calculate_histogram(self, abstract_features_1, abstract_features_2):
        """
        Calculate histogram from similarity matrix.
        :param abstract_features_1: Feature matrix for graph 1.
        :param abstract_features_2: Feature matrix for graph 2.
        :return hist: Histogram of similarity scores.
        """
        # abstract_features_2 arrives already transposed, so this is the
        # node-to-node similarity matrix; detach() keeps it out of backprop.
        scores = torch.mm(abstract_features_1, abstract_features_2).detach()
        scores = scores.view(-1, 1)
        hist = torch.histc(scores, bins=self.args.bins)
        hist = hist/torch.sum(hist)
        hist = hist.view(1, -1)
        return hist
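The histogram branch summarises all pairwise node similarities in a fixed number of bins, which makes the result independent of graph size. Below is a dependency-free sketch of that idea. It assumes equal-width bins between the smallest and largest score, which is what `torch.histc` does when no range is given; `similarity_histogram` is a made-up name, both inputs are given as row lists (no pre-transposed matrix), and the handling of the case where all scores are equal is an assumption of this sketch.

```python
def similarity_histogram(emb_1, emb_2, bins):
    """Normalised histogram of all pairwise dot products between two node sets."""
    scores = [sum(a * b for a, b in zip(u, v)) for u in emb_1 for v in emb_2]
    low, high = min(scores), max(scores)
    if low == high:
        # Assumption of this sketch: widen a degenerate range so the bin width is non-zero.
        low, high = low - 1.0, high + 1.0
    counts = [0] * bins
    for score in scores:
        index = int((score - low) / (high - low) * bins)
        counts[min(index, bins - 1)] += 1  # the maximum falls into the last bin
    total = sum(counts)
    return [count / total for count in counts]

nodes_1 = [[1.0, 0.0], [0.0, 1.0]]
nodes_2 = [[1.0, 0.0], [0.0, 1.0]]
hist = similarity_histogram(nodes_1, nodes_2, bins=2)
wide = similarity_histogram(nodes_1, nodes_2, bins=16)
```

Binning is not differentiable, which fits the `detach()` call in the method above: the histogram is used as an extra input feature, and no gradient flows back through it.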

- convolutional_pass function:

    def convolutional_pass(self, edge_index, features):
        """
        Making convolutional pass.
        :param edge_index: Edge indices.
        :param features: Feature matrix.
        :return features: Abstract feature matrix.
        """
        features = self.convolution_1(features, edge_index)
        features = torch.nn.functional.relu(features)
        features = torch.nn.functional.dropout(
            features, p=self.args.dropout, training=self.training
        )
        features = self.convolution_2(features, edge_index)
        features = torch.nn.functional.relu(features)
        features = torch.nn.functional.dropout(
            features, p=self.args.dropout, training=self.training
        )
        features = self.convolution_3(features, edge_index)
        return features

- forward function:

What it does: runs the network on one graph pair and returns the predicted similarity.

    def forward(self, data):
        """
        Forward pass with graphs.
        :param data: Data dictionary.
        :return score: Similarity score.
        """
        edge_index_1 = data["edge_index_1"]
        edge_index_2 = data["edge_index_2"]
        features_1 = data["features_1"]
        features_2 = data["features_2"]
        # Graph convolutions
        abstract_features_1 = self.convolutional_pass(edge_index_1, features_1)  # [graph1_num_node, self.args.filters_3]
        abstract_features_2 = self.convolutional_pass(edge_index_2, features_2)  # [graph2_num_node, self.args.filters_3]
        # Histogram of pairwise node similarities (optional)
        if self.args.histogram == True:
            hist = self.calculate_histogram(abstract_features_1,
                                            torch.t(abstract_features_2))
        # Attention pooling: one graph-level vector per graph
        pooled_features_1 = self.attention(abstract_features_1)
        pooled_features_2 = self.attention(abstract_features_2)
        # Neural Tensor Network on the two graph-level vectors
        scores = self.tensor_network(pooled_features_1, pooled_features_2)  # [self.args.tensor_neurons, 1]
        scores = torch.t(scores)  # [1, self.args.tensor_neurons]
        # Concatenate the Neural Tensor Network scores with the histogram features
        if self.args.histogram == True:
            scores = torch.cat((scores, hist), dim=1).view(1, -1)  # [1, self.feature_count]
        # Predicted score; the ground-truth target is normalized, so the output goes through a sigmoid
        scores = torch.nn.functional.relu(self.fully_connected_first(scores))  # [1, self.args.bottle_neck_neurons]
        score = torch.sigmoid(self.scoring_layer(scores))  # self.scoring_layer(scores): [1, 1]
        return score

Full code:

    import torch
    from torch_geometric.nn import GCNConv
    from layers import AttentionModule, TenorNetworkModule  # defined in layers.py

    class SimGNN(torch.nn.Module):
        """
        SimGNN: A Neural Network Approach to Fast Graph Similarity Computation
        https://arxiv.org/abs/1808.05689
        """
        def __init__(self, args, number_of_labels):
            """
            :param args: Arguments object.
            :param number_of_labels: Number of node labels.
            """
            super(SimGNN, self).__init__()
            self.args = args
            self.number_labels = number_of_labels
            self.setup_layers()

        def calculate_bottleneck_features(self):
            """
            Deciding the shape of the bottleneck layer.
            """
            if self.args.histogram == True:
                self.feature_count = self.args.tensor_neurons + self.args.bins
            else:
                self.feature_count = self.args.tensor_neurons

        def setup_layers(self):
            """
            Creating the layers.
            """
            self.calculate_bottleneck_features()
            self.convolution_1 = GCNConv(self.number_labels, self.args.filters_1)
            self.convolution_2 = GCNConv(self.args.filters_1, self.args.filters_2)
            self.convolution_3 = GCNConv(self.args.filters_2, self.args.filters_3)
            self.attention = AttentionModule(self.args)
            self.tensor_network = TenorNetworkModule(self.args)
            self.fully_connected_first = torch.nn.Linear(
                self.feature_count, self.args.bottle_neck_neurons
            )
            self.scoring_layer = torch.nn.Linear(self.args.bottle_neck_neurons, 1)

        def calculate_histogram(self, abstract_features_1, abstract_features_2):
            """
            Calculate histogram from similarity matrix.
            :param abstract_features_1: Feature matrix for graph 1.
            :param abstract_features_2: Feature matrix for graph 2.
            :return hist: Histogram of similarity scores.
            """
            scores = torch.mm(abstract_features_1, abstract_features_2).detach()
            scores = scores.view(-1, 1)
            hist = torch.histc(scores, bins=self.args.bins)
            hist = hist/torch.sum(hist)
            hist = hist.view(1, -1)
            return hist

        def convolutional_pass(self, edge_index, features):
            """
            Making convolutional pass.
            :param edge_index: Edge indices.
            :param features: Feature matrix.
            :return features: Abstract feature matrix.
            """
            features = self.convolution_1(features, edge_index)
            features = torch.nn.functional.relu(features)
            features = torch.nn.functional.dropout(
                features, p=self.args.dropout, training=self.training
            )
            features = self.convolution_2(features, edge_index)
            features = torch.nn.functional.relu(features)
            features = torch.nn.functional.dropout(
                features, p=self.args.dropout, training=self.training
            )
            features = self.convolution_3(features, edge_index)
            return features

        def forward(self, data):
            """
            Forward pass with graphs.
            :param data: Data dictionary.
            :return score: Similarity score.
            """
            edge_index_1 = data["edge_index_1"]
            edge_index_2 = data["edge_index_2"]
            features_1 = data["features_1"]
            features_2 = data["features_2"]
            # Graph convolutions
            abstract_features_1 = self.convolutional_pass(edge_index_1, features_1)  # [graph1_num_node, self.args.filters_3]
            abstract_features_2 = self.convolutional_pass(edge_index_2, features_2)  # [graph2_num_node, self.args.filters_3]
            # Histogram of pairwise node similarities (optional)
            if self.args.histogram == True:
                hist = self.calculate_histogram(abstract_features_1,
                                                torch.t(abstract_features_2))
            # Attention pooling: one graph-level vector per graph
            pooled_features_1 = self.attention(abstract_features_1)
            pooled_features_2 = self.attention(abstract_features_2)
            # Neural Tensor Network on the two graph-level vectors
            scores = self.tensor_network(pooled_features_1, pooled_features_2)  # [self.args.tensor_neurons, 1]
            scores = torch.t(scores)  # [1, self.args.tensor_neurons]
            # Concatenate the Neural Tensor Network scores with the histogram features
            if self.args.histogram == True:
                scores = torch.cat((scores, hist), dim=1).view(1, -1)  # [1, self.feature_count]
            # Predicted score; the ground-truth target is normalized, so the output goes through a sigmoid
            scores = torch.nn.functional.relu(self.fully_connected_first(scores))  # [1, self.args.bottle_neck_neurons]
            score = torch.sigmoid(self.scoring_layer(scores))  # self.scoring_layer(scores): [1, 1]
            return score