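In the previous post, while integrating K8s + SpringCloudK8s + SpringBoot + gRPC, I found that load balancing stops working when services inside K8s communicate over gRPC.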

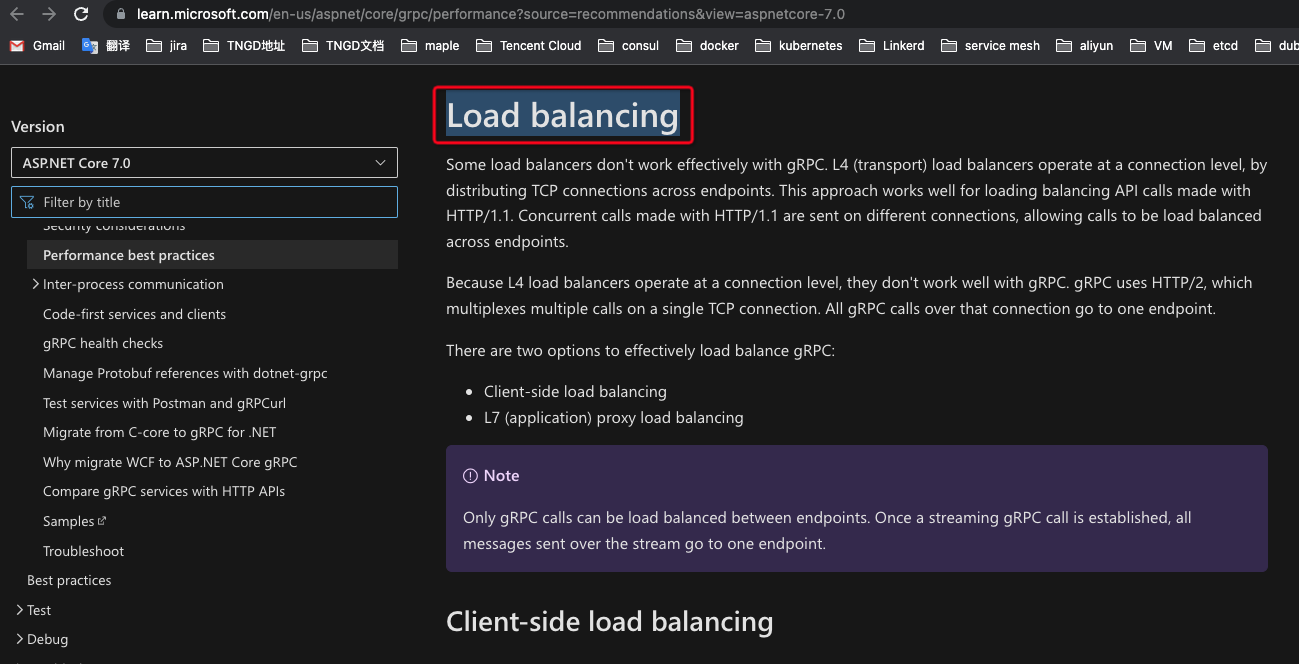

I looked up the gRPC best practices and found the following:

Load balancing

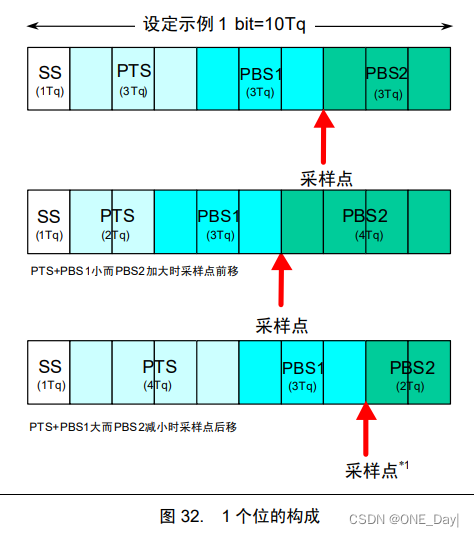

Some load balancers don't work effectively with gRPC. L4 (transport) load balancers operate at a connection level, by distributing TCP connections across endpoints. This approach works well for load balancing API calls made with HTTP/1.1. Concurrent calls made with HTTP/1.1 are sent on different connections, allowing calls to be load balanced across endpoints.

Because L4 load balancers operate at a connection level, they don't work well with gRPC. gRPC uses HTTP/2, which multiplexes multiple calls on a single TCP connection. All gRPC calls over that connection go to one endpoint.
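To make the difference concrete, here is a toy simulation in plain shell. It is only a sketch of the idea and not how any real balancer is implemented: the endpoint names `pod-a`, `pod-b`, `pod-c` and the helper functions are made up for illustration, and the balancer is assumed to hand out new connections round robin.

```shell
#!/bin/sh
# Toy model: an L4 balancer chooses an endpoint once per NEW TCP connection.
ENDPOINTS="pod-a pod-b pod-c"   # hypothetical backend pods
next=0                          # round-robin cursor kept by the balancer

# pick_endpoint: store the endpoint for a new connection in PICKED.
pick_endpoint() {
  i=0
  for ep in $ENDPOINTS; do
    if [ "$i" -eq "$next" ]; then PICKED=$ep; fi
    i=$((i + 1))
  done
  next=$(( (next + 1) % i ))
}

# HTTP/1.1 style: every concurrent call travels on its own connection.
http1_calls() {
  n=$1; out=""; c=0
  while [ "$c" -lt "$n" ]; do
    pick_endpoint               # new connection, so the balancer chooses again
    out="$out $PICKED"
    c=$((c + 1))
  done
  echo "${out# }"
}

# HTTP/2 (gRPC) style: one connection, all calls multiplexed onto it.
http2_calls() {
  n=$1; out=""; c=0
  pick_endpoint                 # the only connection, chosen exactly once
  while [ "$c" -lt "$n" ]; do
    out="$out $PICKED"
    c=$((c + 1))
  done
  echo "${out# }"
}

echo "HTTP/1.1: $(http1_calls 6)"   # pod-a pod-b pod-c pod-a pod-b pod-c
echo "HTTP/2:   $(http2_calls 6)"   # pod-a pod-a pod-a pod-a pod-a pod-a
```

In the second case the balancer itself is still working correctly. It simply never gets a second connection to distribute, which is the behaviour observed in the cluster.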

There are two options to effectively load balance gRPC:

Client-side load balancing

L7 (application) proxy load balancing

Note

Only gRPC calls can be load balanced between endpoints. Once a streaming gRPC call is established, all messages sent over the stream go to one endpoint.

Client-side load balancing

With client-side load balancing, the client knows about endpoints. For each gRPC call, it selects a different endpoint to send the call to. Client-side load balancing is a good choice when latency is important. There's no proxy between the client and the service, so the call is sent to the service directly. The downside to client-side load balancing is that each client must keep track of the available endpoints that it should use.

Lookaside client load balancing is a technique where load balancing state is stored in a central location. Clients periodically query the central location for information to use when making load balancing decisions.

For more information, see gRPC client-side load balancing.

Proxy load balancing

An L7 (application) proxy works at a higher level than an L4 (transport) proxy. L7 proxies understand HTTP/2, and are able to distribute gRPC calls multiplexed to the proxy on one HTTP/2 connection across multiple endpoints. Using a proxy is simpler than client-side load balancing, but can add extra latency to gRPC calls.

There are many L7 proxies available. Some options are:

Envoy - A popular open source proxy.

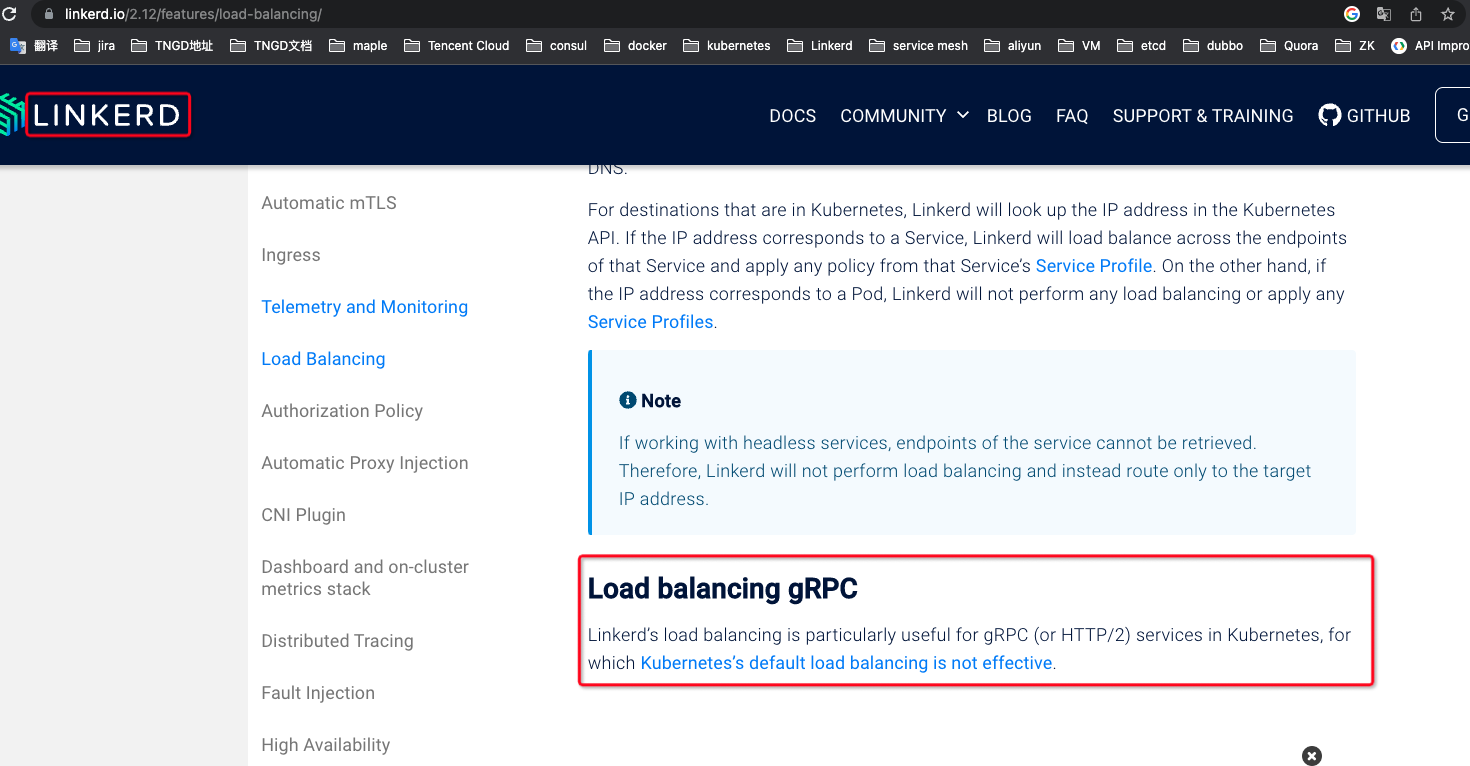

Linkerd - Service mesh for Kubernetes.

YARP: Yet Another Reverse Proxy - An open source proxy written in .NET.

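The excerpt above also lists the solutions. We go with the second option, an L7 proxy, and use Linkerd for it. Linkerd is a Service Mesh implementation similar to Istio, but more lightweight. Here we are only choosing a proxy, although we may integrate tracing and monitoring later, so it does not need a rich feature set. It should be simple, lightweight, and fast; it is a proxy, after all.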

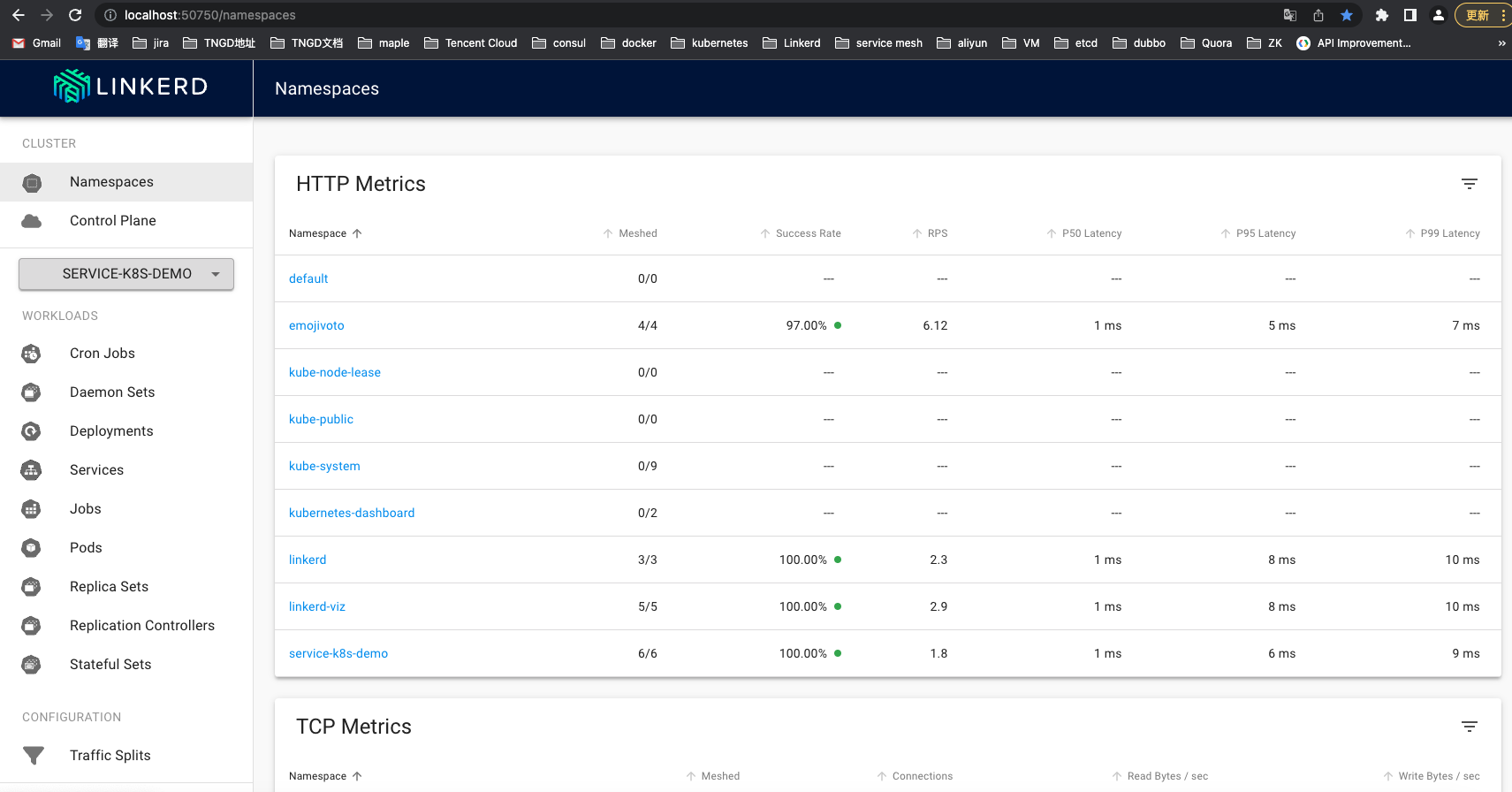

1. Install Linkerd

https://linkerd.io/2.12/getting-started/

After the installation steps in the guide are complete, the dashboard opens automatically in the browser.
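For reference, the flow in that guide looks roughly like the sketch below. It assumes `kubectl` already points at the target cluster, and the wrapper function `install_linkerd` is a name made up here so that nothing runs until it is called explicitly. The install script may serve a newer release than 2.12, in which case the guide for that release should take precedence.

```shell
#!/bin/sh
# Sketch of the install flow from the Linkerd 2.12 getting-started guide.
install_linkerd() {
  # Download the CLI and put it on PATH for this shell session.
  curl --proto '=https' --tlsv1.2 -sSfL https://run.linkerd.io/install | sh || return 1
  export PATH="$HOME/.linkerd2/bin:$PATH"

  # Check that the cluster is able to host the control plane.
  linkerd check --pre || return 1

  # Install the CRDs first, then the control plane, and wait until it is healthy.
  linkerd install --crds | kubectl apply -f - || return 1
  linkerd install | kubectl apply -f - || return 1
  linkerd check || return 1

  # The dashboard is part of the viz extension.
  linkerd viz install | kubectl apply -f - || return 1
  linkerd check || return 1

  # Opens the dashboard in the default browser; runs in the background.
  linkerd viz dashboard &
}

# Nothing runs until the function is called:
# install_linkerd
```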

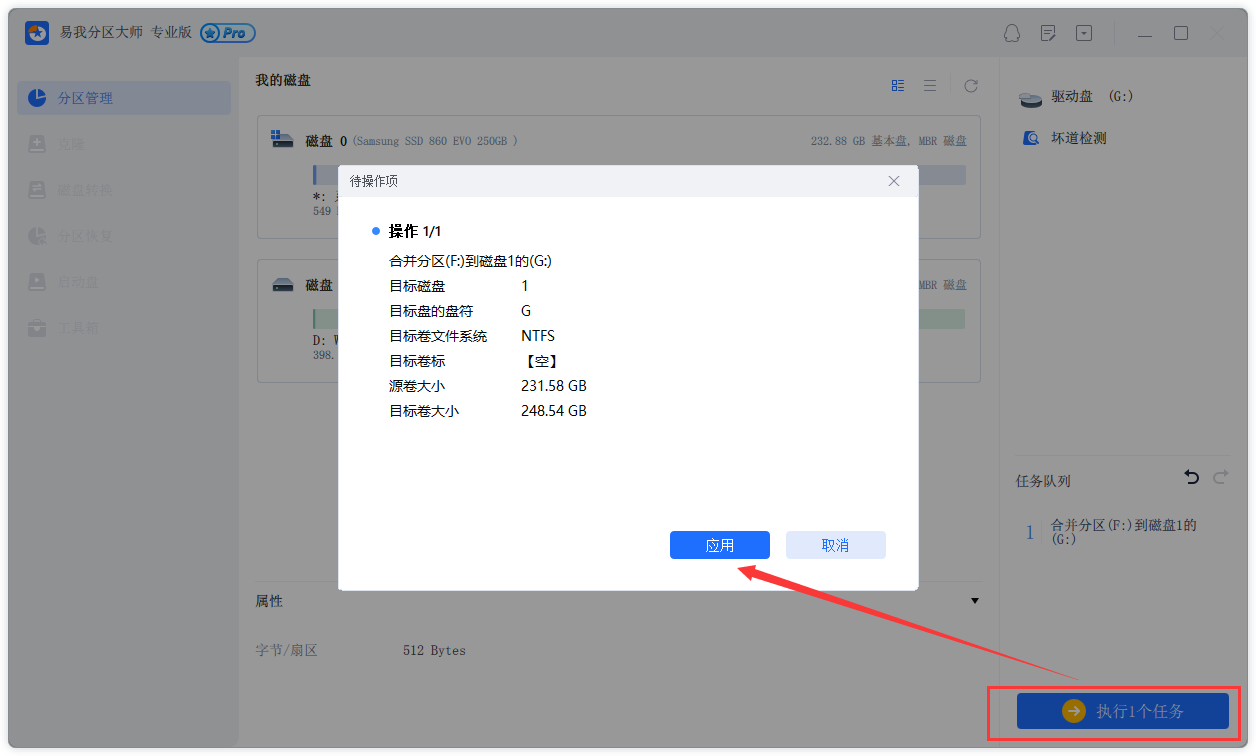

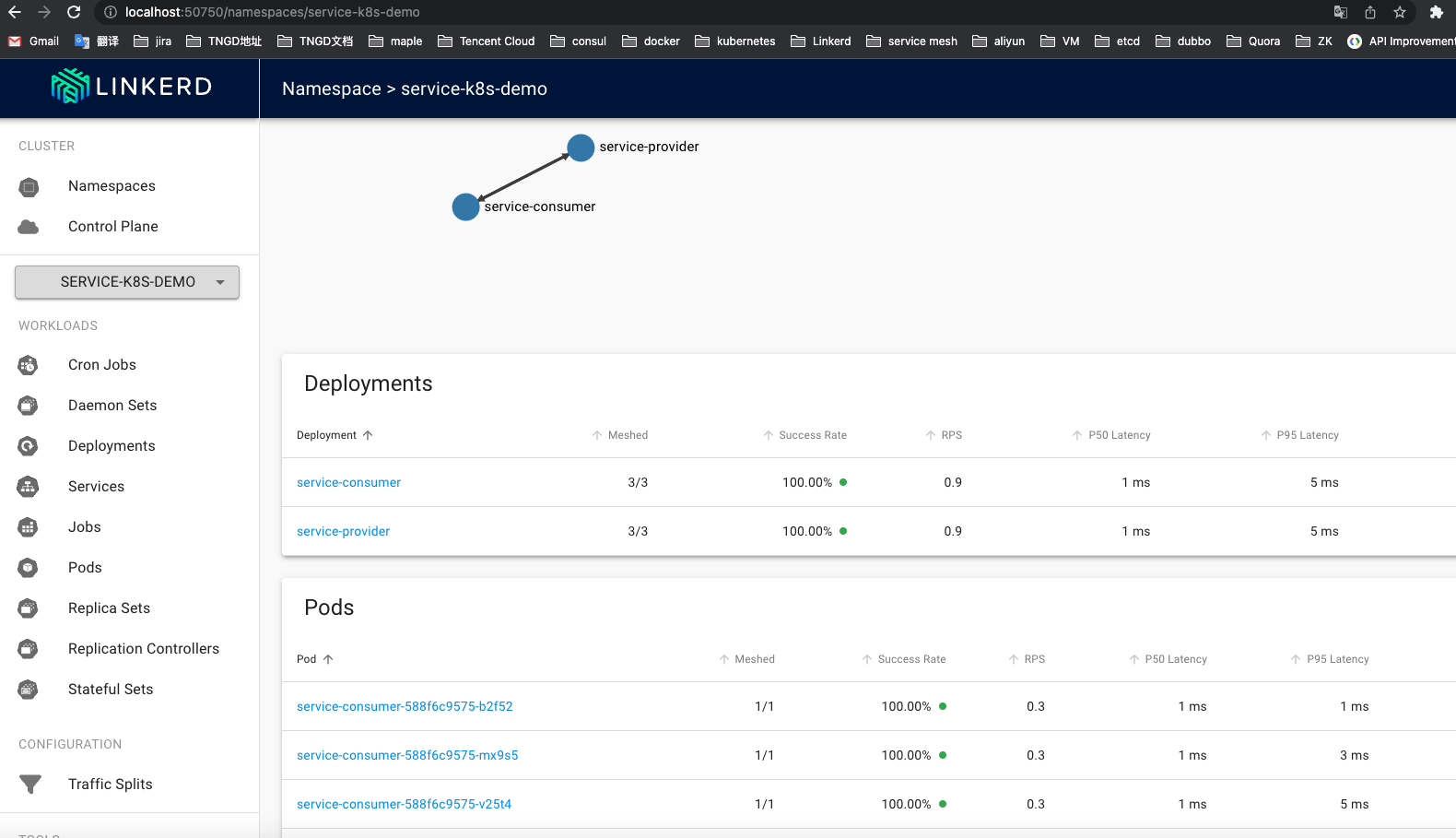

2. Hand the applications over to Linkerd

kubectl get deploy service-consumer -n service-k8s-demo -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

kubectl get deploy service-provider -n service-k8s-demo -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

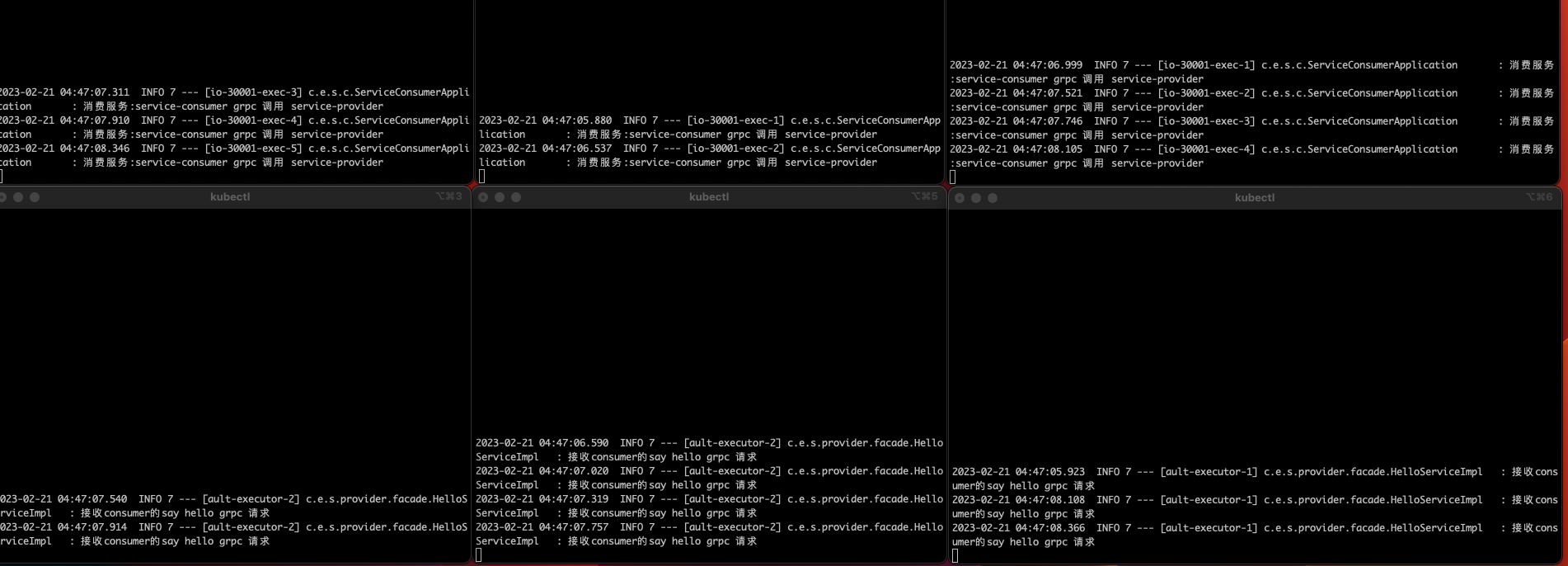

3. I have two services here, service-consumer and service-provider, each with 3 replicas. Testing load balancing again shows that it now works.
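Besides the application-level test, the mesh itself can be inspected from the command line. The sketch below is one possible way to do that, assuming the viz extension is installed. The function name `verify_mesh` is made up for illustration, and the commands only report state, so what counts as "balanced" still has to be judged from the per-pod numbers.

```shell
#!/bin/sh
NAMESPACE="service-k8s-demo"

verify_mesh() {
  # Each injected pod should list one more container than before
  # (the linkerd-proxy sidecar added by `linkerd inject`).
  kubectl get pods -n "$NAMESPACE" || return 1

  # Data-plane health checks for the proxies in this namespace.
  linkerd check --proxy -n "$NAMESPACE" || return 1

  # Request rates per deployment and per pod. While service-consumer is
  # sending calls, all three service-provider pods should show traffic.
  linkerd viz stat deploy -n "$NAMESPACE" || return 1
  linkerd viz stat po -n "$NAMESPACE"
}

# Nothing runs until the function is called:
# verify_mesh
```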