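I. Introduction to Web Crawlers

A web crawler, also called a web robot, automatically collects and processes information from the web in place of manual work.

A crawler has three parts: data collection, analysis, and storage.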
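The three parts can be sketched as three small methods chained together. This is only an offline illustration using the JDK: the class name, the method names, and the fixed HTML string returned by `collect` are assumptions made for the example, and a real crawler would download the page in that step.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class CrawlerPipelineSketch {
    private static final Pattern TITLE =
            Pattern.compile("<title>(.*?)</title>", Pattern.CASE_INSENSITIVE | Pattern.DOTALL);

    // Stage 1, collection: hypothetical stand-in that returns a fixed page
    // so the sketch runs without network access.
    static String collect(String url) {
        return "<html><head><title>Example page</title></head><body>hello</body></html>";
    }

    // Stage 2, analysis: extract the text of the <title> element, or "" if there is none.
    static String analyze(String html) {
        Matcher m = TITLE.matcher(html);
        return m.find() ? m.group(1).trim() : "";
    }

    // Stage 3, storage: keep the result in memory, keyed by URL.
    static void store(Map<String, String> db, String url, String title) {
        db.put(url, title);
    }

    // Run the three stages for every URL and return what was stored.
    static Map<String, String> crawl(String... urls) {
        Map<String, String> db = new LinkedHashMap<>();
        for (String url : urls) {
            store(db, url, analyze(collect(url)));
        }
        return db;
    }

    public static void main(String[] args) {
        System.out.println(crawl("http://example.invalid/a"));
    }
}
```

In the rest of these notes, HttpClient takes the place of `collect` and Jsoup takes the place of `analyze`.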

Adding the crawler dependency

    <!-- HttpClient dependency -->
    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>4.5.14</version>
    </dependency>

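II. Getting Started

    import java.io.IOException;
    import org.apache.http.HttpEntity;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.util.EntityUtils;

    public static void main(String[] args) throws IOException {
        // 1. "Open the browser": create the HttpClient object (try-with-resources closes it)
        try (CloseableHttpClient client = HttpClients.createDefault()) {
            // 2. "Type the address": create the HttpGet request
            HttpGet httpGet = new HttpGet("http://www.baidu.cn");
            // 3. "Press Enter": send the request with the HttpClient object
            try (CloseableHttpResponse response = client.execute(httpGet)) {
                // 4. Parse the response and read the data
                if (response.getStatusLine().getStatusCode() == 200) { // status code of the response
                    HttpEntity entity = response.getEntity(); // response body
                    String content = EntityUtils.toString(entity, "utf8"); // the static page as text
                    System.out.println(content);
                }
            }
        }
    }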

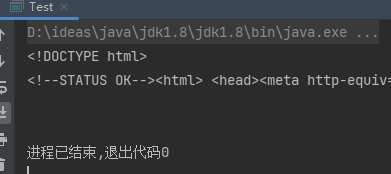

The output is the HTML of the Baidu home page.
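The second argument of `EntityUtils.toString(entity, "utf8")` is only a default: HttpClient 4.x uses it when the response does not declare a charset of its own. The JDK-only sketch below illustrates that rule by reading the charset from a `Content-Type` header value and falling back to a default. The class and method names are made up for the example, and this is not HttpClient's actual code.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

class CharsetSketch {
    // Return the charset named in a Content-Type value such as
    // "text/html; charset=utf-8", or the fallback when none is usable.
    static Charset charsetOf(String contentType, Charset fallback) {
        if (contentType == null) {
            return fallback;
        }
        for (String part : contentType.split(";")) {
            String p = part.trim();
            if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                String name = p.substring("charset=".length()).trim().replace("\"", "");
                try {
                    return Charset.forName(name);
                } catch (IllegalArgumentException e) {
                    // Unknown or malformed charset name: use the fallback instead.
                    return fallback;
                }
            }
        }
        return fallback;
    }

    public static void main(String[] args) {
        System.out.println(charsetOf("text/html; charset=utf-8", StandardCharsets.ISO_8859_1));
        System.out.println(charsetOf("text/html", StandardCharsets.UTF_8));
    }
}
```

Decoding a UTF-8 page with the wrong charset is a common cause of garbled Chinese text, which is why the examples in these notes pass "utf8" explicitly.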

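III. HttpClient Requests

(1) HttpClient is an HTTP protocol client; here it is used to fetch web page data.

(2) GET requests

1. GET request without parameters: print the length (in characters) of the static page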

public static void main(String[] args) throws IOException {

//创建HttpGet对象

HttpGet httpGet = new HttpGet("http://www.itcast.com");

//创建HttpClient对象

CloseableHttpClient httpClient = HttpClients.createDefault();

CloseableHttpResponse response = null;

try {

//使用HttpClient对象发起请求,获取response

response = httpClient.execute(httpGet);

//解析响应

if (response.getStatusLine().getStatusCode() == 200){

String content = EntityUtils.toString(response.getEntity(), "utf8");

System.out.println(content.length());

}

} catch (IOException e) {

}finally {

response.close();

httpClient.close();

}

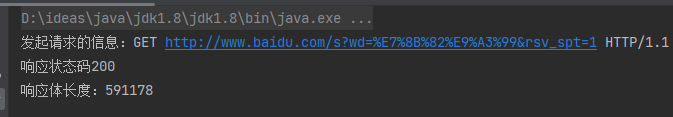

}2,带参数的HttpGet请求

    public static void main(String[] args) throws Exception {
        // Build the request URI (URIBuilder is in org.apache.http.client.utils)
        URIBuilder uriBuilder = new URIBuilder("http://www.baidu.com/s");
        uriBuilder.setParameter("wd", "狂飙")
                .setParameter("rsv_spt", "1"); // set the query parameters
        // Create the GET request for that URI
        HttpGet httpGet = new HttpGet(uriBuilder.build());
        System.out.println("Request: " + httpGet);
        // Create the HttpClient object and send the request;
        // try-with-resources closes both the client and the response, even if execute() throws
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpGet)) {
            System.out.println("Response status code: " + response.getStatusLine().getStatusCode());
            // Parse the response
            if (response.getStatusLine().getStatusCode() == 200) {
                String content = EntityUtils.toString(response.getEntity(), "utf8");
                System.out.println("Response body length: " + content.length());
            }
        }
    }

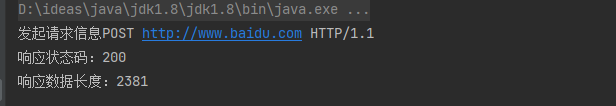

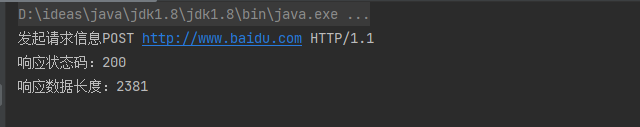
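A non-ASCII parameter such as 狂飙 cannot be sent as-is in a URL; it is percent-encoded as UTF-8 bytes first. The sketch below shows that step with the JDK's `URLEncoder`, under the assumption that form-style encoding is close enough for illustration. `QueryStringSketch`, `encode`, and `buildUrl` are names invented for the example, and URIBuilder's output may differ in details such as how a space is written.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;

class QueryStringSketch {
    // Percent-encode one name or value as UTF-8 (form encoding: a space becomes '+').
    static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always available", e);
        }
    }

    // Append the parameters to the base URL as ?name=value&name=value.
    static String buildUrl(String base, Map<String, String> params) {
        StringBuilder sb = new StringBuilder(base);
        char separator = '?';
        for (Map.Entry<String, String> p : params.entrySet()) {
            sb.append(separator).append(encode(p.getKey())).append('=').append(encode(p.getValue()));
            separator = '&';
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("wd", "\u72c2\u98d9"); // the two characters of the search term, written as escapes
        params.put("rsv_spt", "1");
        // prints http://www.baidu.com/s?wd=%E7%8B%82%E9%A3%99&rsv_spt=1
        System.out.println(buildUrl("http://www.baidu.com/s", params));
    }
}
```

The same name=value encoding is what a form POST carries in its request body, which is why the POST example with parameters also specifies a character set.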

(3) POST requests

1. HttpPost request without parameters

    public static void main(String[] args) {
        // Create the HttpPost object (HttpPost is in org.apache.http.client.methods)
        HttpPost httpPost = new HttpPost("http://www.baidu.com");
        System.out.println("Request: " + httpPost);
        // Create the HttpClient object and get the response;
        // try-with-resources closes both the client and the response
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpPost)) {
            System.out.println("Response status code: " + response.getStatusLine().getStatusCode());
            String content = EntityUtils.toString(response.getEntity());
            System.out.println("Response data length: " + content.length());
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

2. HttpPost request with parameters

    public static void main(String[] args) throws UnsupportedEncodingException {
        // Create the HttpPost object
        HttpPost httpPost = new HttpPost("http://www.baidu.com");
        System.out.println("Request: " + httpPost);
        // List that holds the request parameters
        // (NameValuePair is in org.apache.http, BasicNameValuePair in org.apache.http.message)
        List<NameValuePair> params = new ArrayList<>();
        // Add a request parameter
        params.add(new BasicNameValuePair("wd", "狂飙"));
        // Create the form entity: the constructor takes the parameter list and the character set
        UrlEncodedFormEntity formEntity = new UrlEncodedFormEntity(params, "utf8");
        // Attach the form entity to the POST request
        httpPost.setEntity(formEntity);
        // Create the HttpClient object and get the response;
        // try-with-resources closes both the client and the response
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpPost)) {
            System.out.println("Response status code: " + response.getStatusLine().getStatusCode());
            String content = EntityUtils.toString(response.getEntity());
            System.out.println("Response data length: " + content.length());
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

IV. HttpClient Connection Pool
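A connection pool enforces two limits: a total number of connections and a number of connections per route (roughly, per target host). The toy model below illustrates how the two limits interact, using semaphores from the JDK. It is not how HttpClient is implemented, the class and method names are invented for the example, and a real pool makes the caller wait for a free connection instead of returning false.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.Semaphore;

class PoolLimitSketch {
    private final Semaphore total;          // permits for the whole pool
    private final int maxPerRoute;          // permits for each host
    private final Map<String, Semaphore> perRoute = new HashMap<>();

    PoolLimitSketch(int maxTotal, int maxPerRoute) {
        this.total = new Semaphore(maxTotal);
        this.maxPerRoute = maxPerRoute;
    }

    // Try to take one connection for the host; both limits must allow it.
    synchronized boolean tryLease(String host) {
        Semaphore route = perRoute.computeIfAbsent(host, h -> new Semaphore(maxPerRoute));
        if (!route.tryAcquire()) {
            return false;                   // per-route limit reached
        }
        if (!total.tryAcquire()) {
            route.release();                // undo the route permit
            return false;                   // total limit reached
        }
        return true;
    }

    // Give a previously leased connection back to the pool.
    synchronized void release(String host) {
        Semaphore route = perRoute.get(host);
        if (route != null) {
            route.release();
            total.release();
        }
    }

    public static void main(String[] args) {
        PoolLimitSketch pool = new PoolLimitSketch(3, 2); // small limits to keep the trace short
        System.out.println(pool.tryLease("a")); // true
        System.out.println(pool.tryLease("a")); // true
        System.out.println(pool.tryLease("a")); // false: host "a" already holds 2
        System.out.println(pool.tryLease("b")); // true
        System.out.println(pool.tryLease("b")); // false: the pool already holds 3 in total
        pool.release("a");
        System.out.println(pool.tryLease("b")); // true: one slot was freed
    }
}
```

With the values used in the HttpClient code in this section (100 in total, 10 per route), a crawler that hits a single site is bounded by the per-route limit, not by the total.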

    public static void main(String[] args) {
        // Create the connection pool manager (in org.apache.http.impl.conn)
        PoolingHttpClientConnectionManager manager = new PoolingHttpClientConnectionManager();
        // Maximum number of connections in the whole pool
        manager.setMaxTotal(100);
        // Maximum number of connections per route (target host): 10
        manager.setDefaultMaxPerRoute(10);
        doGet(manager);
        doGet(manager);
    }

    public static void doGet(PoolingHttpClientConnectionManager manager) {
        // Build an HttpClient that takes its connections from the pool
        CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(manager).build();
        try {
            // Set the request path and parameters
            URIBuilder uriBuilder = new URIBuilder("http://www.baidu.com/s");
            uriBuilder.setParameter("wd", "狂飙");
            // Create the HttpGet request
            HttpGet httpGet = new HttpGet(uriBuilder.build());
            System.out.println("Request: " + httpGet);
            // Send the request; try-with-resources closes the response and releases its connection
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // Parse the response
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity entity = response.getEntity();
                    String content = EntityUtils.toString(entity);
                    System.out.println("Response data length: " + content.length());
                }
            }
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
        // Do not close httpClient here: its connections belong to the pool,
        // and closing the client would shut down the shared connection manager
    }

V. Request Parameters

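Because the network or the server may be slow or unreachable, set timeouts on the request so that it fails quickly instead of hanging.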

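    // Create the HttpGet object
    HttpGet httpGet = new HttpGet("http://www.baidu.com");
    // Configure the request (RequestConfig is in org.apache.http.client.config); all values are in ms
    RequestConfig config = RequestConfig.custom()
            .setConnectTimeout(1000)            // max time to establish the connection
            .setConnectionRequestTimeout(1000)  // max time to obtain a connection from the connection manager
            .setSocketTimeout(10 * 1000)        // max time to wait for data during transfer
            .build();
    // Apply the configuration to the GET request
    httpGet.setConfig(config);

VI. Jsoup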

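Jsoup is a Java HTML parser.

It can parse a URL or HTML text directly, and it offers a convenient API for extracting and manipulating data through DOM traversal, CSS selectors, and jQuery-like methods.

Main features:

1. Parse HTML from a URL, a file, or a string

2. Find and extract data with DOM traversal or CSS selectors

3. Manipulate HTML elements, attributes, and text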

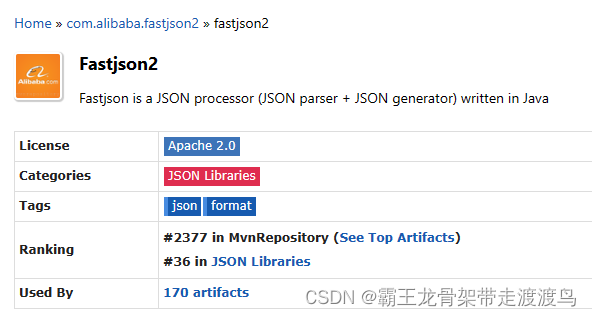

Jsoup dependency:

    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>1.10.2</version>
    </dependency>

IO utility dependency:

    <!-- https://mvnrepository.com/artifact/commons-io/commons-io -->
    <dependency>
        <groupId>commons-io</groupId>
        <artifactId>commons-io</artifactId>
        <version>2.6</version>
    </dependency>

String utility dependency:

    <!-- https://mvnrepository.com/artifact/org.apache.commons/commons-lang3 -->
    <dependency>
        <groupId>org.apache.commons</groupId>
        <artifactId>commons-lang3</artifactId>
        <version>3.7</version>
    </dependency>

(1) Parsing a URL with Jsoup

    @Test
    public void testURL() throws Exception {
        // Fetch and parse the URL, then print the title of the Baidu home page; timeout 1000 ms
        Document document = Jsoup.parse(new URL("http://www.baidu.com"), 1000);
        String title = document.getElementsByTag("title").first().text();
        System.out.println(title);
    }

(2) Parsing a string with Jsoup

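    @Test
    public void testString() throws Exception {
        // Read the file into a string (FileUtils is in org.apache.commons.io);
        // the path is left empty here and should point to a local HTML file
        String fileToString = FileUtils.readFileToString(new File(""), "utf8");
        // Parse the string with Jsoup
        Document document = Jsoup.parse(fileToString);
        // Get the title element
        Element title = document.getElementsByTag("title").first();
        System.out.println(title.text());
    }

(3) Parsing a file with Jsoup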

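    @Test
    public void testFile() throws Exception {
        // Parse the file directly; the path is left empty here and should point to a local HTML file
        Document document = Jsoup.parse(new File(""), "utf8");
        Element title = document.getElementsByTag("title").first();
        System.out.println(title.text());
    }

(4) Traversing the document with DOM methods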

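    @Test
    public void testDOM() throws Exception {
        // Fetch and parse the page to get the Document object.
        // Jsoup.parse(String) treats its argument as HTML text, so a URL must be passed as a java.net.URL
        Document document = Jsoup.parse(new URL("http://www.baidu.com/"), 1000);
        // Look up elements
        Elements title = document.getElementsByTag("title"); // by tag name
        Element elementById = document.getElementById("s_menu_mine"); // by id (null if not found)
        document.getElementsByAttribute("属性名"); // by attribute name (placeholder: "attribute name")
        document.getElementsByAttributeValue("href", "value"); // by attribute name and value
        document.getElementsByClass("类名"); // by class name (placeholder: "class name")
    }

(5) Getting data from an element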

    @Test
    public void testData() throws Exception {
        // Fetch and parse the page; Jsoup.parse(String) would treat the URL as HTML text
        Document document = Jsoup.parse(new URL("http://www.baidu.com/"), 1000);
        Element element = document.getElementById("kw"); // null if the page has no such id
        element.id(); // the element's id
        element.text(); // the text content
        element.className(); // the value of the class attribute
        element.attributes(); // all attributes of the element
        element.attr("class"); // the value of one attribute
        element.select("span"); // select by tag name
        element.select("#id"); // select by id
        element.select(".class"); // select by class name
        element.select("[name]"); // select elements that have the attribute
        element.select("[class=class_a]"); // select by attribute value
    }
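The lookups above need either network access or a local file. For an example that runs offline, the sketch below applies the same idea (find elements by tag name, then read text and attributes) to a small inline page. Note the swap: it uses the W3C DOM parser that ships with the JDK, not Jsoup, so the input must be well-formed XHTML, whereas Jsoup also accepts malformed HTML. The class name, method names, and sample markup are assumptions made for the example.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class DomLookupSketch {
    // Parse a well-formed XHTML string into a DOM document.
    static Document parse(String xhtml) {
        try {
            DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
            return builder.parse(new InputSource(new StringReader(xhtml)));
        } catch (Exception e) {
            throw new IllegalStateException("could not parse the sample page", e);
        }
    }

    // Text of the first element with the given tag name, or "" if there is none.
    static String textOfFirst(Document doc, String tag) {
        NodeList nodes = doc.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? "" : nodes.item(0).getTextContent();
    }

    // Value of an attribute on the first element with the given tag name, or "" if absent.
    static String attrOfFirst(Document doc, String tag, String attr) {
        NodeList nodes = doc.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? "" : ((Element) nodes.item(0)).getAttribute(attr);
    }

    public static void main(String[] args) {
        Document doc = parse("<html><head><title>Demo</title></head>"
                + "<body><input id=\"kw\" class=\"s_ipt\" name=\"wd\"/></body></html>");
        System.out.println(textOfFirst(doc, "title"));          // Demo
        System.out.println(attrOfFirst(doc, "input", "class")); // s_ipt
    }
}
```

With Jsoup the same two lookups would be written with `getElementsByTag` and `attr`, or with a single `select` call and a CSS selector.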