Before running the code, install Python from the Microsoft Store. The download is about 35 MB, and the installation is fully automatic.

1. Install the Python extension.

Press Ctrl+Shift+X, type python in the search box, and click the Install button.

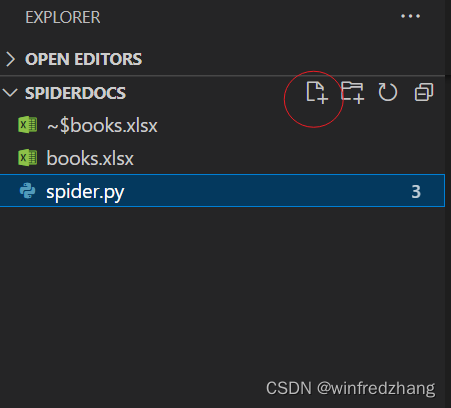

2. Create a new folder named spider.

3. In the spider folder, create the source file spider.py.

4. Problem encountered: pip needs to be upgraded.

Running

pip install request

reports the following errors:

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError(SSLEOFError(8, 'EOF occurred in violation of protocol (_ssl.c:997)'))': /simple/request/

WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError(SSLEOFError(8, 'EOF occurred in violation of protocol (_ssl.c:997)'))': /simple/request/

ERROR: Could not find a version that satisfies the requirement request (from versions: none)

ERROR: No matching distribution found for request

Solution:

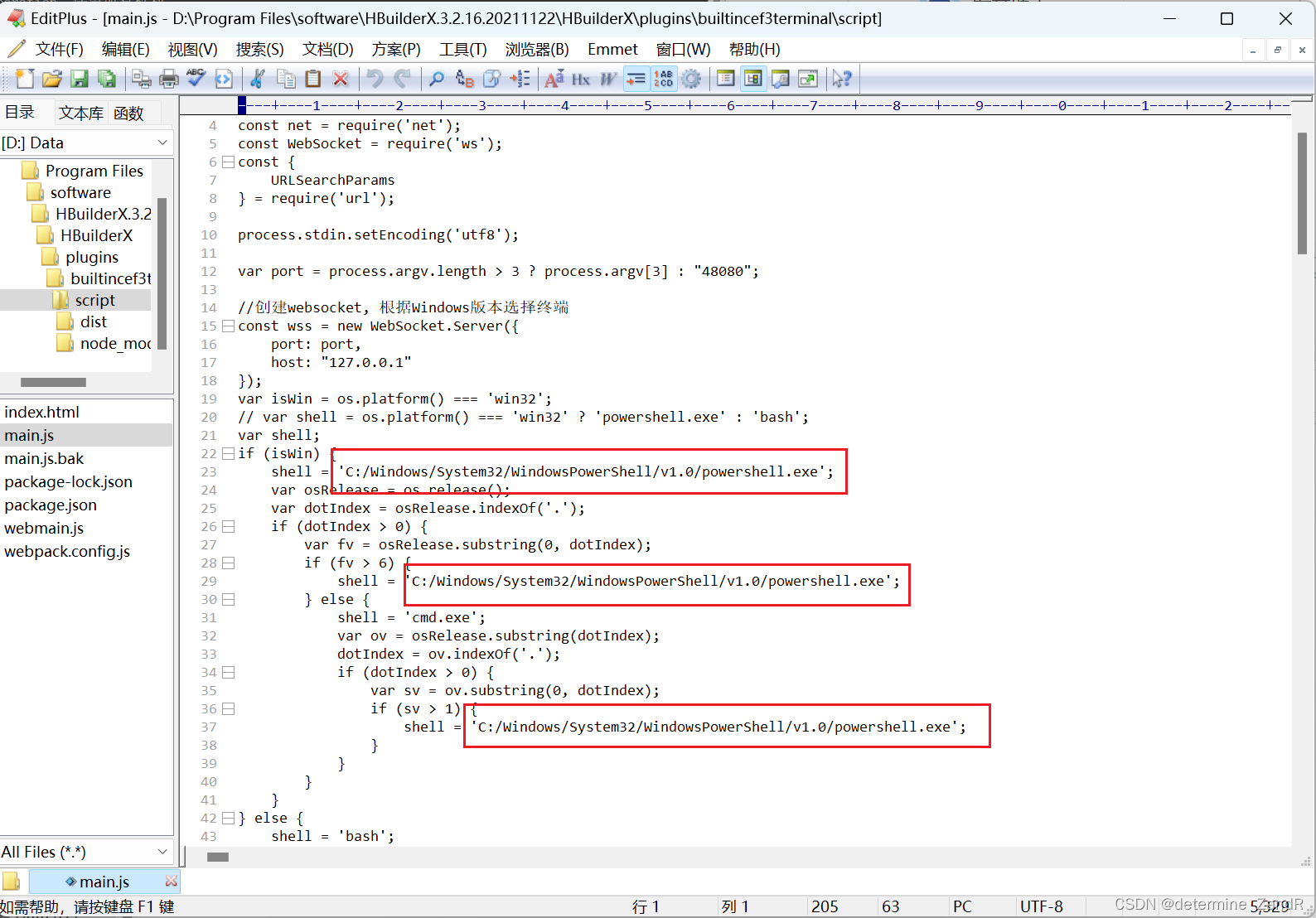

C:\Users\zhang\AppData\Local\Microsoft\WindowsApps\PythonSoftwareFoundation.Python.3.10_qbz5n2kfra8p0\python.exe -m pip install --upgrade pip

5. Problem encountered: adjusting the pip command line.

Running

pip install request

again reports the following errors:

ERROR: Could not find a version that satisfies the requirement request (from versions: none)

ERROR: No matching distribution found for request

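Solution:

Switch the download source to the Douban mirror:

pip install request-i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com

Note that the command as typed has no space between request and -i, so pip likely treats the URL itself as something to install, which would explain the simple.html error that follows.

This then fails with: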

ERROR: Cannot unpack file C:\Users\zhang\AppData\Local\Temp\pip-unpack-2ejahzt3\simple.html (downloaded from C:\Users\zhang\AppData\Local\Temp\pip-req-build-xfd621gj, content-type: text/html); cannot detect archive format

ERROR: Cannot determine archive format of C:\Users\zhang\AppData\Local\Temp\pip-req-build-xfd621gj

Solution: switch to the Tsinghua mirror and correct the package name to requests (with an s):

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple --trusted-host pypi.tuna.tsinghua.edu.cn requests

Install the bs4 and openpyxl modules the same way.
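The three installs can also be scripted. The following is a minimal sketch, assuming the Tsinghua mirror settings from the command above; the helper names `build_pip_command` and `install_all` are hypothetical and only for illustration. It runs pip through `sys.executable`, so the long python.exe path does not have to be typed by hand.

```python
import subprocess
import sys

# Mirror settings copied from the pip command in this step.
INDEX_URL = "https://pypi.tuna.tsinghua.edu.cn/simple"
TRUSTED_HOST = "pypi.tuna.tsinghua.edu.cn"


def build_pip_command(package):
    """Return the pip invocation for one package as an argument list."""
    # "python -m pip" targets the interpreter that runs this script.
    return [
        sys.executable, "-m", "pip", "install",
        "-i", INDEX_URL,
        "--trusted-host", TRUSTED_HOST,
        package,
    ]


def install_all(packages):
    """Install each package in turn; raises CalledProcessError on failure."""
    for package in packages:
        subprocess.run(build_pip_command(package), check=True)
```

Calling `install_all(["requests", "bs4", "openpyxl"])` would then run the three installs one after another and stop at the first one that fails.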

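6. Click the Run button, or enter the following command in a terminal:

python spider.py

In the end, the root cause of all these problems turned out to be a single missing s in requests.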
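Before running the script, a quick check can confirm that the modules it imports are actually installed. This is a small sketch using only the standard library; the function name `missing_modules` is an assumption made for this example.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Import names used by spider.py.
REQUIRED = ["requests", "bs4", "openpyxl"]

missing = missing_modules(REQUIRED)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are installed.")
```

A misspelled name shows up in the missing list right away, instead of surfacing later as an import error inside the script.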

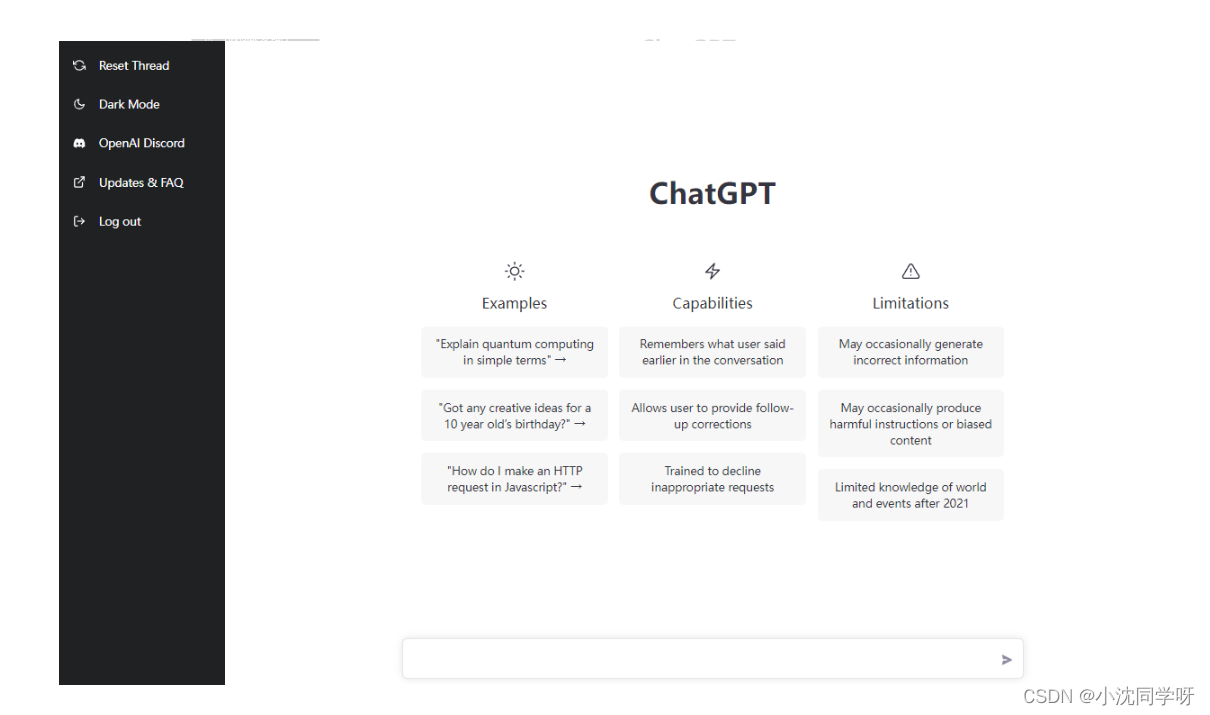

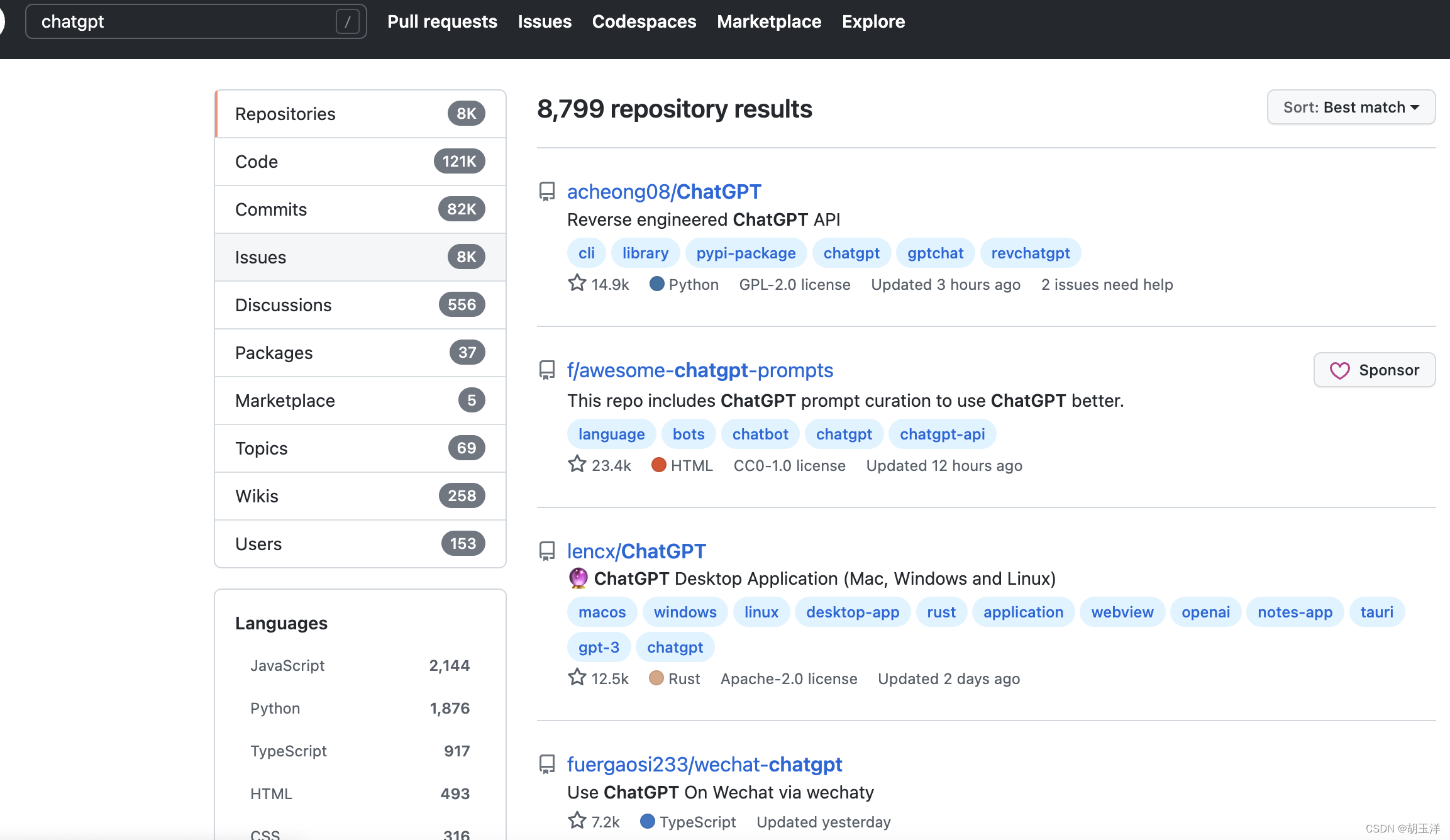

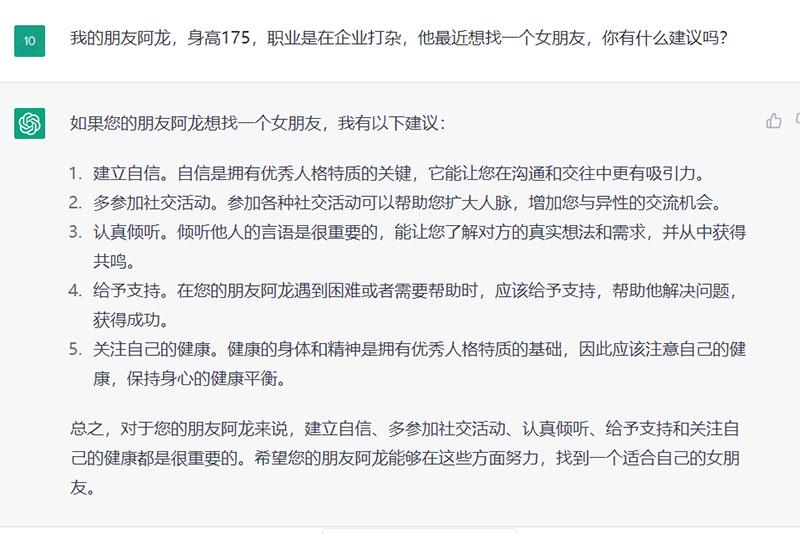

以下是chatgpt写的爬虫代码:

import requests

from bs4 import BeautifulSoup

import openpyxl

# Make the request and parse the HTML content

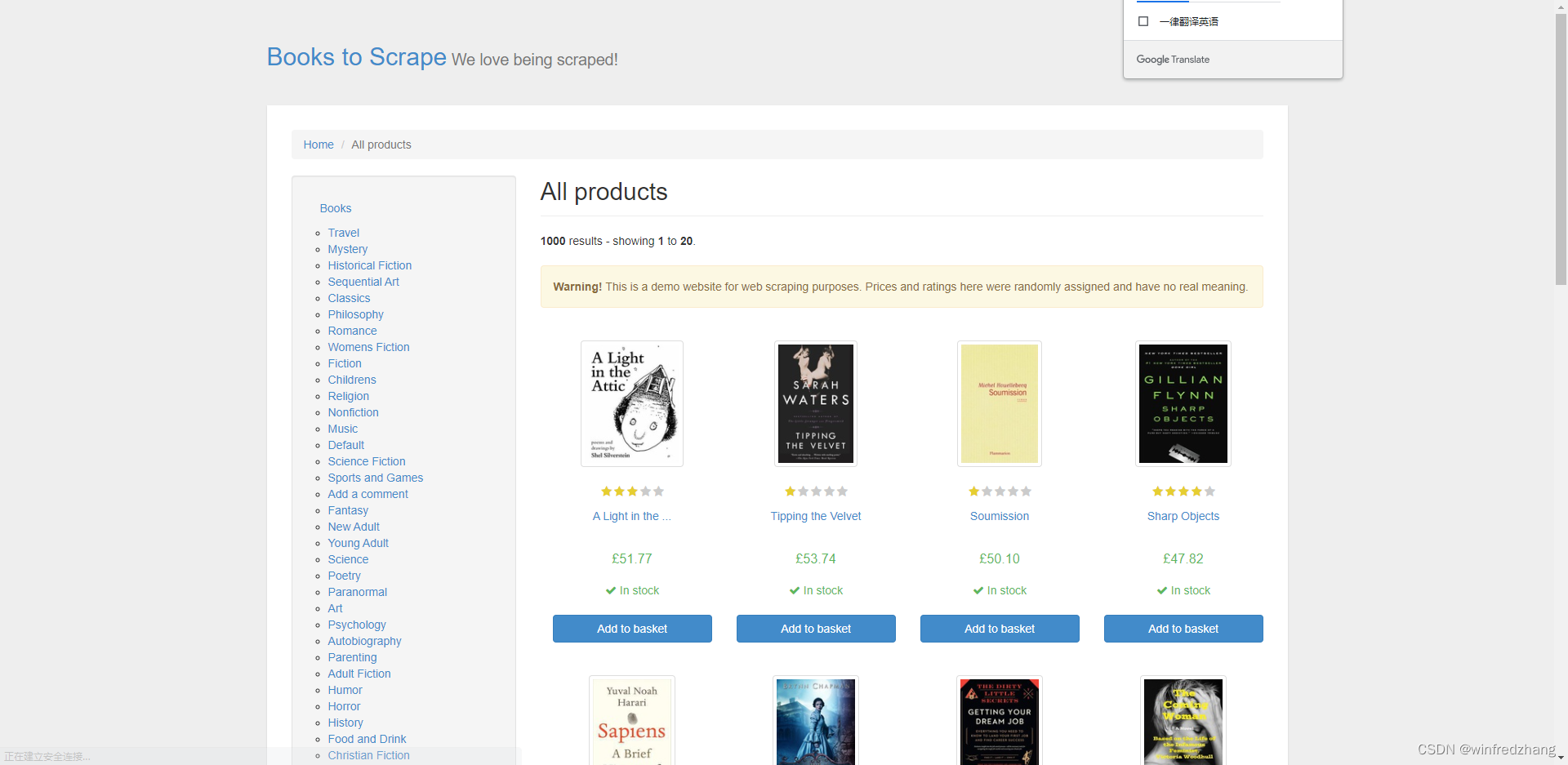

url = "https://books.toscrape.com/"

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

# Extract the book data

books = []

for article in soup.find_all("article"):

book = {}

book["title"] = article.h3.a["title"]

book["price"] = article.select_one(".price_color").get_text(strip=True)

books.append(book)

# Save the book data to an Excel file

wb = openpyxl.Workbook()

sheet = wb.active

sheet.append(["Title", "Price"])

for book in books:

sheet.append([book["title"], book["price"]])

wb.save("books.xlsx")
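The script stores each price as text such as £51.77. If the Price column should hold numbers instead, a small helper can convert the string before it is appended to the sheet. This is a sketch, not part of the original code; the name `parse_price` is hypothetical.

```python
import re


def parse_price(text):
    """Convert a price string such as '£51.77' to a float."""
    # Take the first run of digits with an optional decimal part and
    # ignore any currency symbol or stray characters around it.
    match = re.search(r"\d+(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"no number found in {text!r}")
    return float(match.group())
```

In spider.py this could be applied as `book["price"] = parse_price(...)` around the existing `get_text(strip=True)` call, so that Excel treats the column as numeric.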

Website: All products | Books to Scrape - Sandbox (https://books.toscrape.com/)

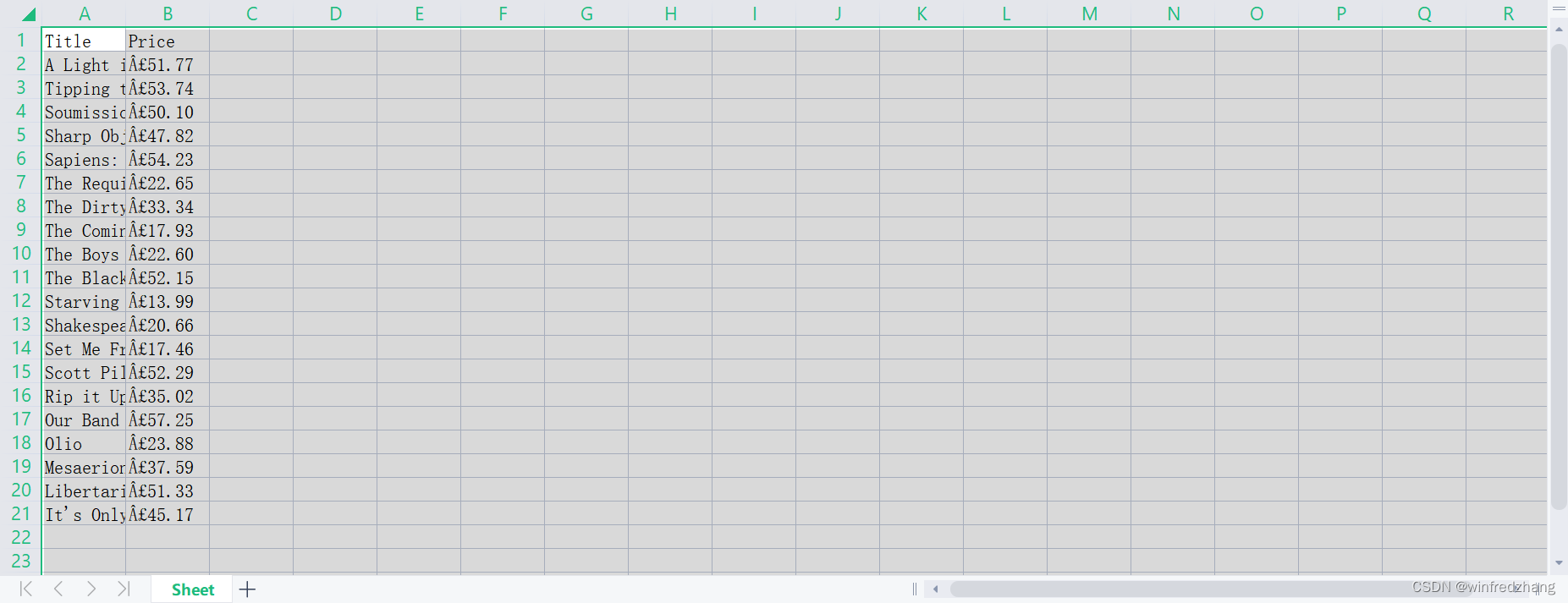

Result: