前言:

为了能够提前发现kubernetes集群的问题以及方便快捷的查询容器的各类参数,比如,某个pod的内存使用异常高企 等等这样的异常状态(虽然kubernetes有自动重启或者驱逐等等保护措施,但万一没有配置或者失效了呢),容器的内存使用量限制,过去10秒容器CPU的平均负载等等容器的运行参数,这些情况我们自然还是将kubernetes集群纳入到监控系统中好些,毕竟能够发现问题和解决问题更加的优雅嘛。

因此,我们需要能够有一个比较全面的监测容器运行的实时的监控系统,版本答案当然就是Prometheus了。Prometheus监控系统可以多维度的,方便的将我们需要的信息收集起来,然后通过Grafana做一个华丽的展示。

那么,对于容器这个对象来说,我们要使用的收集器就是cAdvisor啦,但cAdvisor这个收集器和node_exporter,mysqld_exporter 这些收集器不太一样,它是集成在kubelet这个服务内的,因此,我们不需要额外的安装cAdvisor收集器,也就是说不需要像node_exporter这样的系统信息收集器一样单独部署了,只要kubernetes的节点上有运行kubelet这个服务就可以了。

下面就kubernetes集群的Prometheus专用于容器的实时信息收集器cAdvisor如何认识它,如何部署它并集成到Prometheus内做一个详细的介绍。

一,

cAdvisor的简介

cAdvisor是一个谷歌开发的容器监控工具,它被内嵌到k8s中作为k8s的监控组件。cAdvisor对Node机器上的资源及容器进行实时监控和性能数据采集,包括CPU使用情况、内存使用情况、网络吞吐量及文件系统使用情况,由于cAdvisor是集成在Kubelet中的,因此,当kubelet启动时会自动启动cAdvisor,即一个cAdvisor仅对一台Node机器进行监控。

二,

其它的用于收集容器信息的信息收集器

heapster和Metrics server以及kube-state-metrics都可用于提供容器信息,但它们所提供的信息维度是不同的,heapster已经被Metrics server所取代 了,如果没记错的话,应该是从k8s的1.16版本后放弃了heapster。

Metrics server作为新的容器信息收集器,是从 api-server 中获取容器的 cpu、内存使用率这种监控指标,并把他们发送给存储后端,可以算作一个完整的监控系统。cAdvisor是专有的容器信息收集,是一个专有工具的地位,而kube-state-metrics是偏向于kubernetes集群内的资源对象,例如deployment,StateFulSet,daemonset等等资源,可以算作一个特定的数据源。

三,

cAdvisor的初步使用

本文以一个minikube搭建的kubernetes单实例为例子,IP地址为:192.168.217.23

A,

关于kubelet

kubelet的API:

kubelet是kubernetes集群中真正维护容器状态,负责主要的业务的一个关键组件。每个节点上都运行一个 kubelet 服务进程,默认监听 10250 端口,接收并执行 master 发来的指令,管理 Pod 及 Pod 中的容器。kubernetes的节点IP+10250端口就是kubelet的API。

几个重要的端口:

10250(kubelet API):kubelet server 与 apiserver 通信的端口,定期请求 apiserver 获取自己所应当处理的任务,通过该端口可以访问获取 node 资源以及状态。kubectl查看pod的日志和cmd命令,都是通过kubelet端口10250访问。

10248端口是什么呢?是kubelet的健康检查端口,可以通过 kubelet 的启动参数 –healthz-port 和 –healthz-bind-address 来指定监听的地址和端口。

需要注意的是,Kubernetes 1.11+ 版本以后,kubelet 就移除了 10255 端口, metrics 接口又回到了 10250 端口中,我的minikube版本是1.18.8,自然是没有10255端口了。

低版本的kubernetes还有一个4194端口,此端口是cAdvisor的web管理界面的端口,可能是出于安全漏洞的考虑,后续版本移除了此端口,因此,此端口在我这个版本内并没有开启。

kubernetes的版本查询:

[root@node3 ~]# k get no

NAME STATUS ROLES AGE VERSION

node3 Ready master 18d v1.18.8

kubelet的端口情况:

[root@node3 ~]# netstat -antup |grep kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 29681/kubelet

tcp 0 0 127.0.0.1:39937 0.0.0.0:* LISTEN 29681/kubelet

tcp 0 0 192.168.217.23:51058 192.168.217.23:8443 ESTABLISHED 29681/kubelet

tcp6 0 0 :::10250 :::* LISTEN 29681/kubelet

tcp6 0 0 192.168.217.23:10250 192.168.217.1:59295 ESTABLISHED 29681/kubelet kube-apiserver的service:

[root@node3 ~]# k get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18d

B,

API的使用

既然都说了节点IP+10250是kubelet的API了,那么,我们肯定可以从这个API里获取到一些信息了,这些信息其实就是cAdvisor收集到的,如何使用这个API呢?这个API可是需要使用证书的https哦。因此,计划建立一个sa,通过sa的token来登陆这个API

(1)

利用ServiceAccount访问API

找一个具有cluster-admin权限的ServiceAccount,其实每个集群内都很容易找到这样的sa,但为了说明问题还是新建一个任意的具有最高权限的sa吧,实际的生产中可不要这么搞哦。

新建一个sa:

kubectl create ns monitor-sa #创建一个monitor-sa的名称空间

kubectl create serviceaccount monitor -n monitor-sa #创建一个sa账号

kubectl create clusterrolebinding monitor-clusterrolebinding -n monitor-sa --clusterrole=cluster-admin --serviceaccount=monitor-sa:monitor 删除这些都会吧,我就不演示了。

查看secret:

[root@node3 ~]# k get secrets -n monitor-sa

NAME TYPE DATA AGE

default-token-fw7pq kubernetes.io/service-account-token 3 81s

monitor-token-tf48k kubernetes.io/service-account-token 3 81s

获取登录用的token:

[root@node3 ~]# k describe secrets -n monitor-sa monitor-token-tf48k

Name: monitor-token-tf48k

Namespace: monitor-sa

Labels: <none>

Annotations: kubernetes.io/service-account.name: monitor

kubernetes.io/service-account.uid: 1c902004-bd78-4aca-b37e-5fe751dfcd4a

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 10 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IllIZ2VhUzN6Q2lSUmE3MEM0ZWpJU255ZXRGRjlkam9rT2JrN0FjNEd1WDgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yLXNhIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6Im1vbml0b3ItdG9rZW4tdGY0OGsiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoibW9uaXRvciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjFjOTAyMDA0LWJkNzgtNGFjYS1iMzdlLTVmZTc1MWRmY2Q0YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yLXNhOm1vbml0b3IifQ.aUW1XRfGsHiBDWME4JtCs_8144m308ioQ9W1zOg80YIA1VZq5SKAuq_R4XUTyDW7stuSUtTpXhG_HkzoO5sxU7SW6EBIvnOhz-hp3P3S_7BTd5QgZ2PZP9PnJP46lSNS2g0VpqThqtJzXPNZWZnquRV9oHpdeKeeC9b8dcdIwMw_HYC30ydCzt15axf3YNUzVsW1xgLM9fkhTthBm1Z02kcPMqa49nXRQFS3AwVOnlh7Mn4z8OxufVuFY_f5PDkHwnYX4zRgN0PL3On5k_yZgDWgT2kh63fTi4Skmlee7i_1t_lucU-B_8JfLOqiUCUCkt9nr3W4Qj2FOxRyOJ2JtQ

使用10250这个API:

#将token保存到变量TOKEN里面,然后下面的API调试接口命令里面引用

[root@node3 ~]# TOKEN=eyJhbGciOiJSUzI1NiIsImtpZCI6IllIZ2VhUzN6Q2lSUmE3MEM0ZWpJU255ZXRGRjlkam9rT2JrN0FjNEd1WDgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yLXNhIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6Im1vbml0b3ItdG9rZW4tdGY0OGsiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoibW9uaXRvciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjFjOTAyMDA0LWJkNzgtNGFjYS1iMzdlLTVmZTc1MWRmY2Q0YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yLXNhOm1vbml0b3IifQ.aUW1XRfGsHiBDWME4JtCs_8144m308ioQ9W1zOg80YIA1VZq5SKAuq_R4XUTyDW7stuSUtTpXhG_HkzoO5sxU7SW6EBIvnOhz-hp3P3S_7BTd5QgZ2PZP9PnJP46lSNS2g0VpqThqtJzXPNZWZnquRV9oHpdeKeeC9b8dcdIwMw_HYC30ydCzt15axf3YNUzVsW1xgLM9fkhTthBm1Z02kcPMqa49nXRQFS3AwVOnlh7Mn4z8OxufVuFY_f5PDkHwnYX4zRgN0PL3On5k_yZgDWgT2kh63fTi4Skmlee7i_1t_lucU-B_8JfLOqiUCUCkt9nr3W4Qj2FOxRyOJ2JtQ

curl https://127.0.0.1:10250/metrics/cadvisor -k -H "Authorization: Bearer $TOKEN"OK,输出茫茫多,稍微截一点吧,剩下的就不贴了,具体的含义后面在说吧:

[root@node3 ~]# curl https://127.0.0.1:10250/metrics/cadvisor -k -H "Authorization: Bearer $TOKEN" |less

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0# HELP cadvisor_version_info A metric with a constant '1' value labeled by kernel version, OS version, docker version, cadvisor version & cadvisor revision.

# TYPE cadvisor_version_info gauge

cadvisor_version_info{cadvisorRevision="",cadvisorVersion="",dockerVersion="19.03.9",kernelVersion="5.16.9-1.el7.elrepo.x86_64",osVersion="CentOS Linux 7 (Core)"} 1

# HELP container_cpu_cfs_periods_total Number of elapsed enforcement period intervals.

# TYPE container_cpu_cfs_periods_total counter

container_cpu_cfs_periods_total{container="",id="/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod019fe473_4f9f_4827_bc76_526f7aa2f97d.slice",image="",name="",namespace="kube-system",pod="kube-flannel-ds-amd64-6cdl5"} 19477 1669053701394

container_cpu_cfs_periods_total{container="addon-resizer",id="/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod7f1fcd63_b032_421c_b929_5c7c905e7ef3.slice/docker-9edba1d252251d7e2886f8a278ee30bb055e73e0f07466cd6680fb13788ab755.scope",image="sha256:ee3db71672efc913209ec07e9912723dd3fc1acfbf92203743bdd847f1eb1578",name="k8s_addon-resizer_kube-state-metrics-c96857b7b-twtpz_kube-system_7f1fcd63-b032-421c-b929-5c7c905e7ef3_1",namespace="kube-system",pod="kube-state-metrics-c96857b7b-twtpz"} 4382 1669053703291

container_cpu_cfs_periods_total{container="kube-flannel",id="/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod019fe473_4f9f_4827_bc76_526f7aa2f97d.slice/docker-84a994ffae8ca838967510c7a79c8b7a5b8befb7c9be78acb4e697cb10a46953.scope",image="sha256:4e9f801d2217e98e94de72cefbcb010a7f2caccf03834dfd12a8e60abcaaecfd",name="k8s_kube-flannel_kube-flannel-ds-amd64-6cdl5_kube-system_019fe473-4f9f-4827-bc76-526f7aa2f97d_35",namespace="kube-system",pod="kube-flannel-ds-amd64-6cdl5"} 19467 1669053704667

# HELP container_cpu_cfs_throttled_periods_total Number of throttled period intervals.

(2)

将上面的命令改造一下,使用kube-apiserver 的服务来访问kube-apiserver 的API接口:

curl https://10.96.0.1/api/v1/nodes/node3/proxy/metrics -k -H "Authorization: Bearer $TOKEN"这个命令输出的也比较多,就截取一部分吧:

[root@node3 ~]# curl https://10.96.0.1/api/v1/nodes/node3/proxy/metrics -k -H "Authorization: Bearer $TOKEN" |less

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0# HELP apiserver_audit_event_total [ALPHA] Counter of audit events generated and sent to the audit backend.

# TYPE apiserver_audit_event_total counter

apiserver_audit_event_total 0

# HELP apiserver_audit_requests_rejected_total [ALPHA] Counter of apiserver requests rejected due to an error in audit logging backend.

# TYPE apiserver_audit_requests_rejected_total counter

apiserver_audit_requests_rejected_total 0

# HELP apiserver_client_certificate_expiration_seconds [ALPHA] Distribution of the remaining lifetime on the certificate used to authenticate a request.

# TYPE apiserver_client_certificate_expiration_seconds histogram

apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0

apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0

apiserver_client_certificate_expiration_seconds_bucket{le="3600"} 0

apiserver_client_certificate_expiration_seconds_bucket{le="7200"} 0

apiserver_client_certificate_expiration_seconds_bucket{le="21600"} 0

OK,以上是通过一个具有admin权限的serviceAccount账户直接连接cadvisor和kube-apiserver的API动态获得节点的pod,endpoint等等资源的各个维度的数据。

现在需要将这些集成到Prometheus server里了。

四,

二进制部署的Prometheus server 接入minikube的数据:

将上面的token内容写入/opt/k8s.token这个文件:

echo "eyJhbGciOiJSUzI1NiIsImtpZCI6IllIZ2VhUzN6Q2lSUmE3MEM0ZWpJU255ZXRGRjlkam9rT2JrN0FjNEd1WDgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yLXNhIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6Im1vbml0b3ItdG9rZW4tdGY0OGsiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoibW9uaXRvciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjFjOTAyMDA0LWJkNzgtNGFjYS1iMzdlLTVmZTc1MWRmY2Q0YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yLXNhOm1vbml0b3IifQ.aUW1XRfGsHiBDWME4JtCs_8144m308ioQ9W1zOg80YIA1VZq5SKAuq_R4XUTyDW7stuSUtTpXhG_HkzoO5sxU7SW6EBIvnOhz-hp3P3S_7BTd5QgZ2PZP9PnJP46lSNS2g0VpqThqtJzXPNZWZnquRV9oHpdeKeeC9b8dcdIwMw_HYC30ydCzt15axf3YNUzVsW1xgLM9fkhTthBm1Z02kcPMqa49nXRQFS3AwVOnlh7Mn4z8OxufVuFY_f5PDkHwnYX4zRgN0PL3On5k_yZgDWgT2kh63fTi4Skmlee7i_1t_lucU-B_8JfLOqiUCUCkt9nr3W4Qj2FOxRyOJ2JtQ" >/opt/k8s.token

Master节点发现

在二进制部署的Prometheus服务器,找出配置文件并增加下面这一段(API Serevr 节点自动发现,如果是HA高可用的apiserver):

- job_name: 'kubernetes-apiserver'

kubernetes_sd_configs:

- role: endpoints

api_server: https://192.168.217.23:8443

tls_config:

insecure_skip_verify: true

bearer_token_file: /opt/k8s.token

scheme: https

tls_config:

insecure_skip_verify: true

bearer_token_file: /opt/k8s.token

relabel_configs:

- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

action: keep

regex: default;kubernetes;https

- target_label: __address__

action: replace

replacement: 192.168.217.23:8443 # api集群地址

Node节点发现

在通用配置文件prometheus.yml,末尾添加Node节点发现:

- job_name: 'kubernetes-nodes-monitor'

kubernetes_sd_configs:

- role: node

api_server: https://192.168.217.23:8443

tls_config:

insecure_skip_verify: true

bearer_token_file: /opt/k8s.token

scheme: http

tls_config:

insecure_skip_verify: true

bearer_token_file: /opt/k8s.token

relabel_configs:

- source_labels: [__address__]

regex: '(.*):10250'

replacement: '${1}:9100'

target_label: __address__

action: replace

- source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]

regex: '(.*)'

replacement: '${1}'

action: replace

target_label: LOC

- source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]

regex: '(.*)'

replacement: 'NODE'

action: replace

target_label: Type

- source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]

regex: '(.*)'

replacement: 'K8S-test'

action: replace

target_label: Env

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

部署kube-state-metrics 到minikube:

RBAC文件:

cat >kube-state-metrics-rbac-new.yaml <<EOF

apiVersion: v1

kind: ServiceAccount

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: kube-state-metrics

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups: [""]

resources:

- configmaps

- secrets

- nodes

- pods

- services

- resourcequotas

- replicationcontrollers

- limitranges

- persistentvolumeclaims

- persistentvolumes

- namespaces

- endpoints

verbs: ["list", "watch"]

- apiGroups: ["extensions","apps"]

resources:

- daemonsets

- deployments

- replicasets

verbs: ["list", "watch"]

- apiGroups: ["apps"]

resources:

- statefulsets

verbs: ["list", "watch"]

- apiGroups: ["batch"]

resources:

- cronjobs

- jobs

verbs: ["list", "watch"]

- apiGroups: ["autoscaling"]

resources:

- horizontalpodautoscalers

verbs: ["list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: kube-state-metrics-resizer

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups: [""]

resources:

- pods

verbs: ["get"]

- apiGroups: ["extensions","apps"]

resources:

- deployments

resourceNames: ["kube-state-metrics"]

verbs: ["get", "update"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kube-state-metrics

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kube-state-metrics

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kube-state-metrics-resizer

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: kube-syste

EOFservice文件:

cat >kube-state-metrics-svc.yaml <<EOF

apiVersion: v1

kind: Service

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "kube-state-metrics"

annotations:

prometheus.io/scrape: 'true'

spec:

type: NodePort

ports:

- name: http-metrics

port: 8080

targetPort: http-metrics

protocol: TCP

nodePort: 32222

- name: telemetry

port: 8081

targetPort: telemetry

protocol: TCP

selector:

k8s-app: kube-state-metrics

EOF

deployment 文件:

cat >kube-state-metrics-deploy-new.yaml <<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

k8s-app: kube-state-metrics

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

matchLabels:

k8s-app: kube-state-metrics

replicas: 1

template:

metadata:

labels:

k8s-app: kube-state-metrics

spec:

priorityClassName: system-cluster-critical

serviceAccountName: kube-state-metrics

containers:

- name: kube-state-metrics

image: quay.io/coreos/kube-state-metrics:v1.9.0

ports:

- name: http-metrics

containerPort: 8080

- name: telemetry

containerPort: 8081

readinessProbe:

httpGet:

path: /healthz

port: 8080

initialDelaySeconds: 5

timeoutSeconds: 5

- name: addon-resizer

image: registry.cn-hangzhou.aliyuncs.com/google_containers/addon-resizer:1.8.6

resources:

limits:

cpu: 100m

memory: 30Mi

requests:

cpu: 100m

memory: 30Mi

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: config-volume

mountPath: /etc/config

command:

- /pod_nanny

- --config-dir=/etc/config

- --container=kube-state-metrics

- --cpu=100m

- --extra-cpu=1m

- --memory=100Mi

- --extra-memory=2Mi

- --threshold=5

- --deployment=kube-state-metrics

volumes:

- name: config-volume

configMap:

name: kube-state-metrics-config

---

apiVersion: v1

kind: ConfigMap

metadata:

name: kube-state-metrics-config

namespace: kube-system

labels:

k8s-app: kube-state-metrics

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

data:

NannyConfiguration: |-

apiVersion: nannyconfig/v1alpha1

kind: NannyConfiguration

EOFkube-state-metrics接入Prometheus:

- job_name: "kube-stat-metrics"

static_configs:

- targets: ["192.168.217.23:32222"]

测试环节:

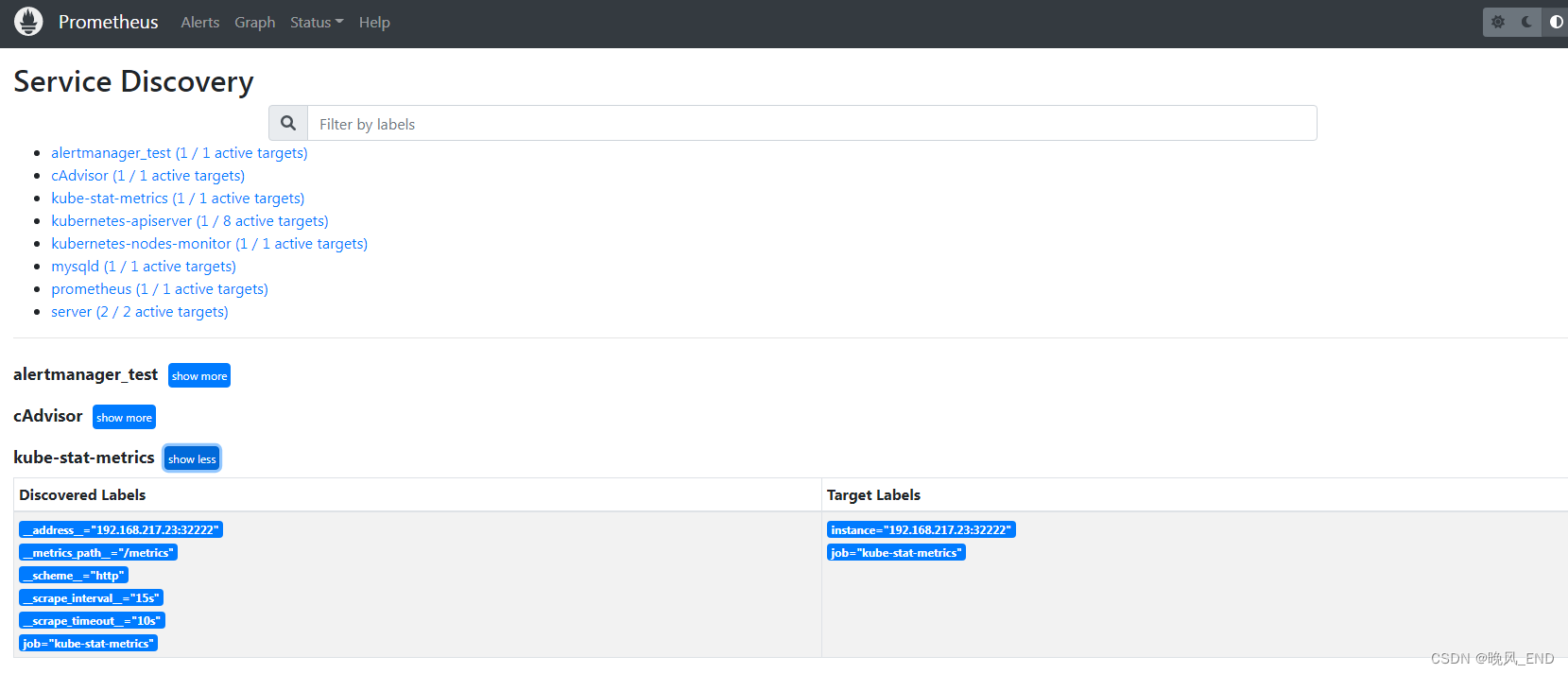

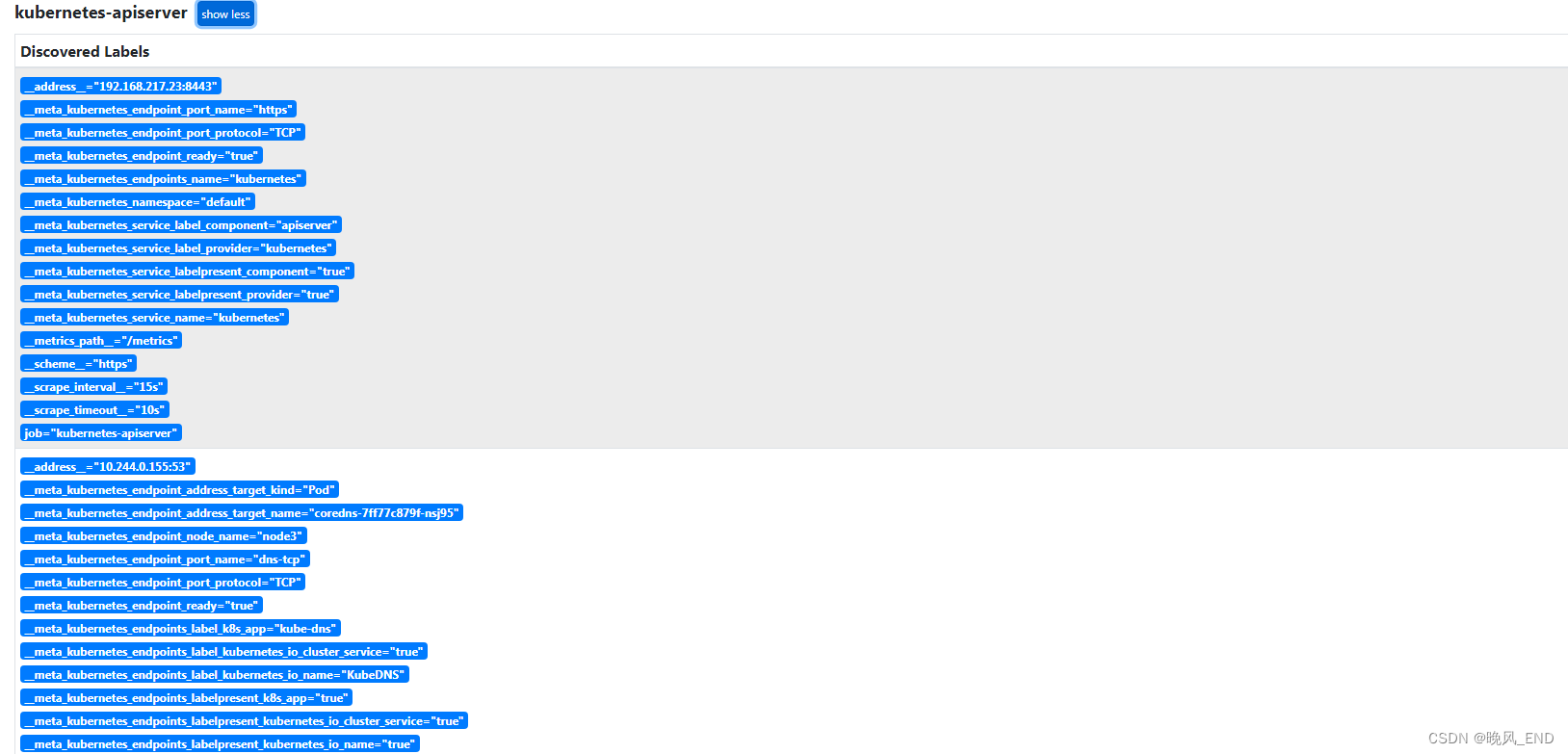

打开Prometheus的管理界面的service discovery:

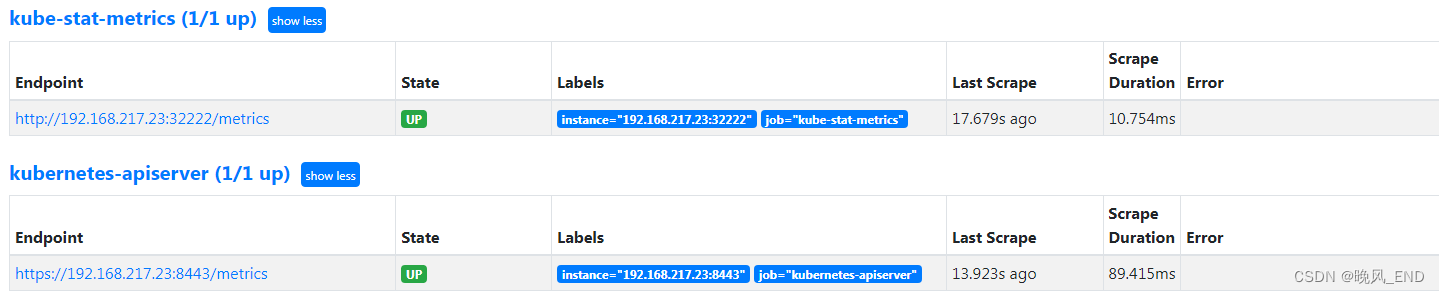

打开Prometheus的管理界面的targets:

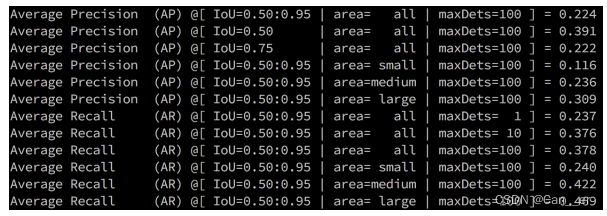

通过cadvisor采集器查询版本信息:

cadvisor_version_info

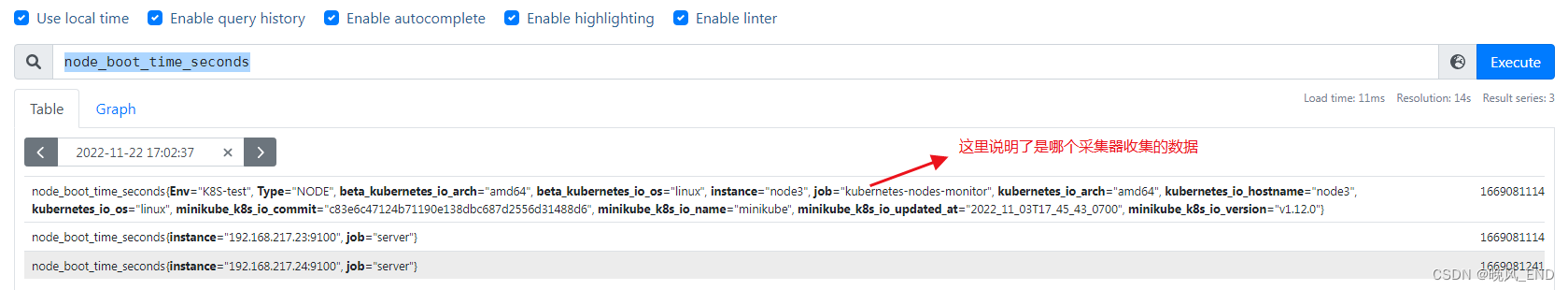

node_boot_time_seconds

node_cpu_seconds_total

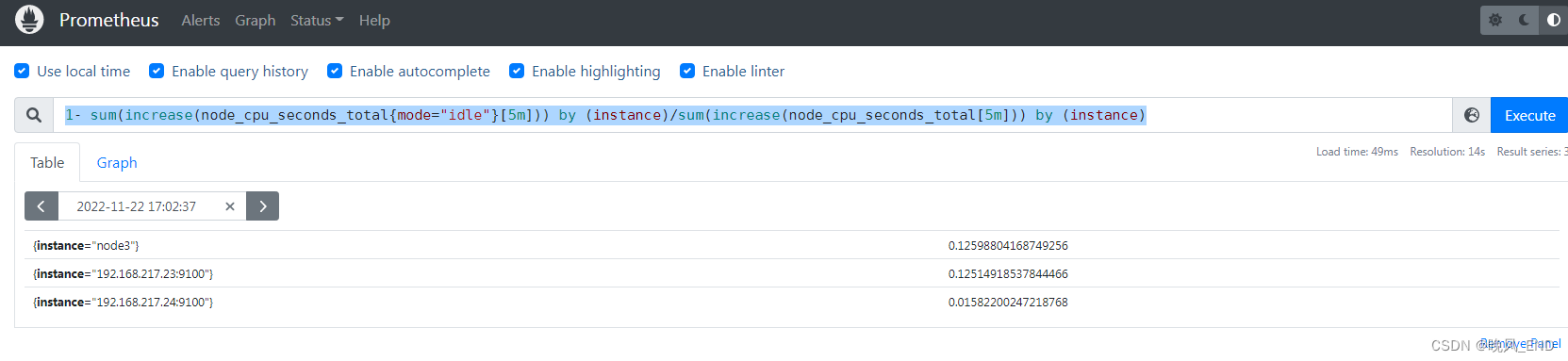

计算平均五分钟内的节点的CPU使用率:

1- sum(increase(node_cpu_seconds_total{mode="idle"}[5m])) by (instance)/sum(increase(node_cpu_seconds_total[5m])) by (instance)

查询节点的内存使用率:

(1- (node_memory_Buffers_bytes + node_memory_Cached_bytes + node_memory_MemFree_bytes) / node_memory_MemTotal_bytes) * 100

查询每个节点的总内存:

(node_memory_MemTotal_bytes)

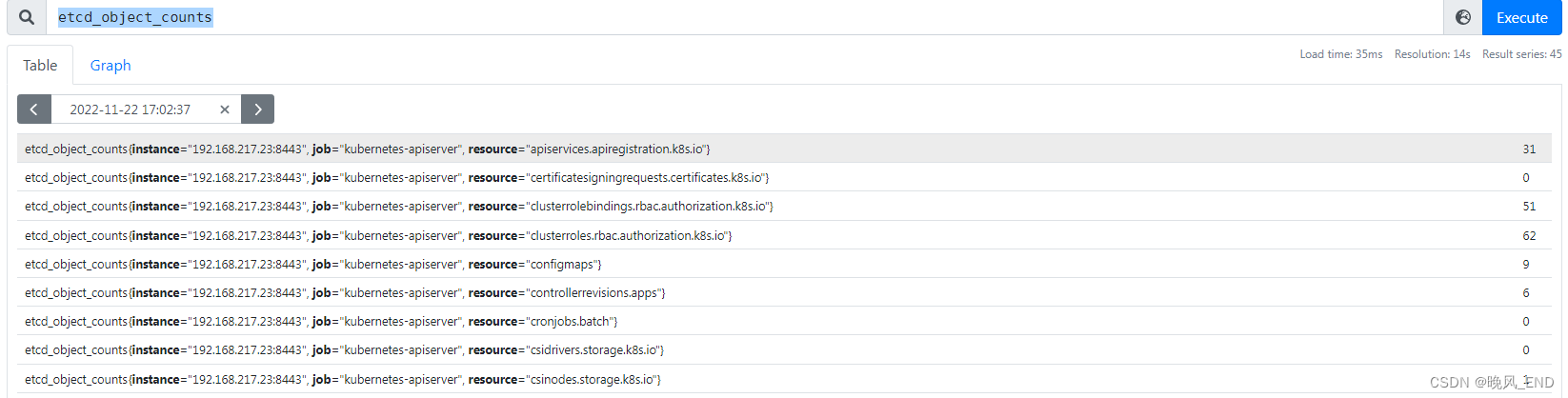

etcd存储的对象数目:

etcd_object_counts

这些都是可以验证的,比如clusterrole的数量,查询出来的是62个,在集群上实际查询也是可以验证到这个数字是对的:

[root@node3 kube-stat]# k get clusterrole |wc -l

63