Title

Comparative benchmarking of failure detection methods in medical image segmentation: Unveiling the role of confidence aggregation

01

Introduction

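Semantic segmentation is one of the most widely studied tasks in medical image analysis research, and several deep learning algorithms perform well across a variety of datasets (Isensee et al., 2021). However, when applied in real-world settings or to datasets from unseen scanners or institutions, the performance of deep learning models degrades, a phenomenon observed in segmentation as well as in other image analysis tasks (AlBadawy et al., 2018; Zech et al., 2018; Badgeley et al., 2019; Beede et al., 2020; Campello et al., 2021). Predictions can therefore be inaccurate at times and cannot be trusted blindly.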

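Although problematic segmentations can be identified by manual inspection, this becomes increasingly time-consuming as image dimensionality and the complexity of the segmented structures grow, especially for 3D radiological images. The problem is aggravated when segmentation is only one step in an automated analysis pipeline for large-scale datasets, where manual inspection is infeasible and reliable segmentation is particularly critical. Improving segmentation models and their robustness is one possible solution, but this paper focuses on a complementary approach: augmenting segmentation models with failure detection methods.

Failure detection, as defined and evaluated in this paper, aims to automatically identify segmentations that need to be excluded or manually corrected before they enter downstream tasks (e.g. volumetry, radiotherapy planning, large-scale analyses). This involves providing a scalar confidence score for each segmentation (image level) that indicates how likely the segmentation is to have failed. Class-level or pixel-level failure detection are interesting alternatives, but we focus on image-level failure detection because it is usually the most practically relevant task for the downstream analyses above: if a segmentation failure occurs at any level, a decision must be made whether to keep the whole prediction (image level) for further analysis or to reject it. In applications where partial predictions are useful, for example for a subset of pixels or classes, pixel-level or class-level failure detection may be more beneficial. From a methodological perspective, pixel-level and class-level methods remain relevant for the image-level failure detection task, but they require a suitable aggregation function, which adds complexity.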

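Failure detection in medical image segmentation motivates several lines of research, each of which approaches the task differently. Uncertainty estimation methods (Mehrtash et al., 2020) usually aim to provide calibrated probabilities for the correctness of each pixel prediction. Some studies propose aggregating these scores to the class or image level for the failure detection task (Roy et al., 2019; Jungo et al., 2020; Ng et al., 2023). Out-of-distribution (OOD) detection methods (González et al., 2022; Graham et al., 2022) aim to identify data samples that deviate from the training distribution and may therefore lead to segmentation failures. A third line of work, segmentation quality regression (Valindria et al., 2017; Robinson et al., 2018; Li et al., 2022), attempts to predict segmentation metric values directly, without access to ground-truth annotations.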

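Despite the practical importance of failure detection and the diversity of approaches, progress in segmentation failure detection is currently hampered by shortcomings in the evaluation practices of existing work. Although the approaches share the practical motivation of failure detection, their task definitions and evaluation metrics differ, which makes results hard to compare across studies:

- Proxy tasks such as OOD detection, uncertainty calibration, or segmentation quality regression are often used for evaluation instead of addressing failure detection directly (Mehrtash et al., 2020; Graham et al., 2022; Zhao et al., 2022; Ouyang et al., 2022).
- The metrics used to measure failure detection lack standardization (Valindria et al., 2017; Wang et al., 2019; Jungo et al., 2020; Kushibar et al., 2022; Ng et al., 2023), and their differing properties and weaknesses are rarely discussed.
- Evaluation usually focuses on a subset of the relevant methods. Failure detection methods can be roughly divided into pixel-level and image-level methods, but existing work typically concentrates on one of the two and overlooks the potential of aggregating pixel-level uncertainty into image-level uncertainty.
- Only a single dataset (anatomy) is used, or dataset shifts are not considered (e.g. Jungo et al., 2020; Ng et al., 2023). It is reasonable for application-specific work to focus on a single segmentation task or dataset, but this leaves open how well methods generalize to other datasets and to real-world applications, where distribution shifts are to be expected.
- Publicly available implementations are limited: few papers release their code, and baseline implementations are usually omitted (Appendix A), which hinders reproducibility and leads to unreliable baseline performance.

To address these issues, we revisit the failure detection task definition and evaluation protocol so that they align with the practical motivation of failure detection. This enables a comprehensive comparison of all relevant methods and ultimately yields a comprehensive benchmark for failure detection in medical image segmentation. Our contributions (summarized in Fig. 1) are:

- We consolidate existing evaluation protocols by dissecting their pitfalls and proposing a flexible and robust evaluation pipeline for failure detection. The pipeline is based on the risk-coverage analysis from the selective classification literature and mitigates the identified pitfalls.
- We introduce a benchmark comprising multiple publicly available radiological 3D datasets to assess how well failure detection methods generalize beyond a single dataset. Our test datasets contain realistic distribution shifts that simulate potential sources of failure, allowing a more comprehensive evaluation.
- Under the proposed benchmark, we compare a variety of methods that represent different approaches to failure detection, including image-level methods and pixel-level methods with subsequent aggregation. We find that the pairwise Dice score between ensemble predictions (Roy et al., 2019) consistently performs best among all compared methods, and we recommend it as a strong baseline for future research.

The source code for all experiments, including dataset preparation, segmentation, failure detection method implementations, and evaluation scripts, is publicly available.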

Abstract

Semantic segmentation is an essential component of medical image analysis research, with recent deep learning algorithms offering out-of-the-box applicability across diverse datasets. Despite these advancements, segmentation failures remain a significant concern for real-world clinical applications, necessitating reliable detection mechanisms. This paper introduces a comprehensive benchmarking framework aimed at evaluating failure detection methodologies within medical image segmentation. Through our analysis, we identify the strengths and limitations of current failure detection metrics, advocating for the risk-coverage analysis as a holistic evaluation approach. Utilizing a collective dataset comprising five public 3D medical image collections, we assess the efficacy of various failure detection strategies under realistic test-time distribution shifts. Our findings highlight the importance of pixel confidence aggregation and we observe superior performance of the pairwise Dice score (Roy et al., 2019) between ensemble predictions, positioning it as a simple and robust baseline for failure detection in medical image segmentation. To promote ongoing research, we make the benchmarking framework available to the community.

Method

To benchmark failure detection methods, we need concise failure detection metrics that fulfill the requirements R1–R3 from Section 2. We compare common metric candidates in Table 1 and choose to perform a risk-coverage analysis as the main evaluation, with the area under the risk-coverage curve (AURC) as a scalar failure detection performance metric, as it fulfills all requirements. The risk-coverage analysis was originally proposed by El-Yaniv and Wiener (2010) and AURC was suggested as a comprehensive failure detection metric for image classification by Jaeger et al. (2023).
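The risk-coverage analysis can be illustrated with a minimal Python sketch. It assumes that every test image comes with a scalar confidence score and a scalar risk (for example 1 - DSC), and it approximates AURC as the plain average of the selective risk over all coverage levels. The function names and this particular estimator are illustrative assumptions, not the benchmark's actual implementation.

```python
def risk_coverage_curve(confidences, risks):
    """Return (coverages, selective_risks) for image-level scores.

    Images are retained in order of decreasing confidence. At coverage k/n,
    the selective risk is the mean risk of the k most confident images.
    """
    if len(confidences) != len(risks) or not risks:
        raise ValueError("need equally sized, non-empty inputs")
    n = len(risks)
    # Stable sort: ties in confidence keep their input order (an assumption).
    order = sorted(range(n), key=lambda i: -confidences[i])
    coverages, selective_risks = [], []
    cumulative_risk = 0.0
    for k, idx in enumerate(order, start=1):
        cumulative_risk += risks[idx]
        coverages.append(k / n)
        selective_risks.append(cumulative_risk / k)
    return coverages, selective_risks


def aurc(confidences, risks):
    """Approximate AURC as the mean selective risk (lower is better)."""
    _, selective_risks = risk_coverage_curve(confidences, risks)
    return sum(selective_risks) / len(selective_risks)


def optimal_aurc(risks):
    """AURC of an oracle that ranks images exactly by their true risk."""
    return aurc([-r for r in risks], risks)


if __name__ == "__main__":
    # Hypothetical toy values: risk = 1 - DSC for four test images.
    toy_risks = [0.1, 0.5, 0.2, 0.9]
    toy_confidences = [0.9, 0.3, 0.8, 0.1]
    print("AURC:", aurc(toy_confidences, toy_risks))
    print("optimal AURC:", optimal_aurc(toy_risks))
```

With this estimator, a random confidence ranking has an expected AURC equal to the mean risk, so the optimal and random values give a rough lower and upper reference for a method's score.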

Conclusion

In conclusion, our study addresses the pitfalls in existing evaluation protocols for segmentation failure detection by proposing a flexible evaluation pipeline based on a risk-coverage analysis. Using this pipeline, we introduced a benchmark comprising multiple radiological 3D datasets to assess the generalization of many failure detection methods, and found that the pairwise Dice score between ensemble predictions consistently outperforms other methods, serving as a strong baseline for future studies.
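As a rough illustration of the pairwise Dice idea, the sketch below scores one image by the mean Dice coefficient over all pairs of ensemble member predictions. Several details are assumptions made for this example rather than taken from the paper: segmentations are represented as flat sequences of integer labels, Dice is averaged over the given foreground labels, and a class that is empty in both predictions counts as perfect agreement.

```python
from itertools import combinations


def dice(mask_a, mask_b, label):
    """Dice coefficient of one label between two flat label sequences."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a == label and b == label)
    size_a = sum(1 for a in mask_a if a == label)
    size_b = sum(1 for b in mask_b if b == label)
    if size_a + size_b == 0:
        return 1.0  # assumption: both empty counts as full agreement
    return 2.0 * intersection / (size_a + size_b)


def pairwise_dice_confidence(predictions, foreground_labels):
    """Mean Dice over all pairs of ensemble predictions (higher = more confident)."""
    if len(predictions) < 2:
        raise ValueError("pairwise Dice needs at least two predictions")
    pair_scores = []
    for pred_a, pred_b in combinations(predictions, 2):
        per_class = [dice(pred_a, pred_b, label) for label in foreground_labels]
        pair_scores.append(sum(per_class) / len(per_class))
    return sum(pair_scores) / len(pair_scores)


if __name__ == "__main__":
    # Hypothetical ensemble of three members on a four-voxel image.
    ensemble = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 1, 0, 0]]
    print(pairwise_dice_confidence(ensemble, foreground_labels=[1]))
```

Because the score needs at least two predictions, it does not apply to the output of a single network; low values indicate disagreement among ensemble members.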

Results

In the following sections, we first report the segmentation performances without failure detection in Section 5.1. Then, we describe the main benchmark results, starting with a comparison of pixel confidence aggregation methods (Section 5.2) and extending the scope towards pixel- and image-level methods (Section 5.3). In Section 5.4, we study the effect of alternative failure risk definitions. Finally, we perform a qualitative analysis of the pairwise DSC method, to understand its strengths and weaknesses (Section 5.5).

Figure

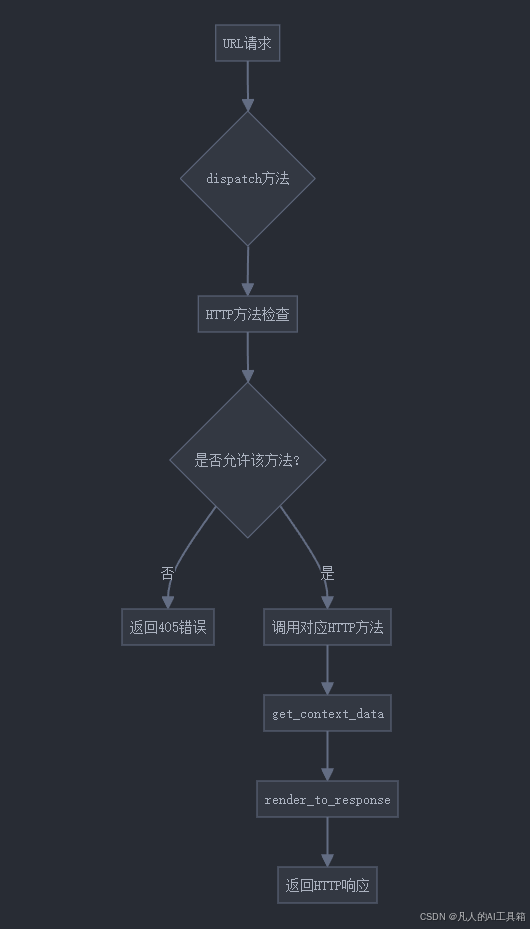

Fig. 1. Overview of the research questions and contributions of this paper. Based on a formal definition of the image-level failure detection task, we formulate requirements for the evaluation protocol. Existing failure detection metrics are compared and the risk-coverage analysis is identified as a suitable evaluation protocol. We then propose a benchmarking framework for failure detection in medical image segmentation, which includes a diverse pool of 3D medical image datasets. A wide range of relevant methods are compared, including lines of research for image-level confidence and aggregated pixel confidence, which have been mostly studied in separation so far.

Fig. 2. Segmentation performance of a single U-Net on the test sets. Boxes show the median and IQR, while whiskers extend to the 5th and 95th percentiles, respectively. Each dataset contains samples drawn from the same distribution as the training set (in-distribution, ID) and samples drawn from a different data distribution (dataset shift) with the same structures to be segmented. Usually, the performance on the in-distribution samples is higher than on the samples with distribution shift, but especially for the Kidney tumor (which lacks dataset shifts) and Covid datasets, there are also several in-distribution failure cases.

Fig. 3. Comparison of aggregation methods from Section 4.4.2 in terms of AURC scores for all datasets (lower is better). The experiments are named as "prediction model + confidence method" and each of them was repeated using 5 folds. Colored markers denote AURC values achieved by the methods, while gray marks above/below them are AURC values for random/optimal confidence rankings (which differ between the models trained on different folds; see Section 4.1). Pairwise DSC consistently scores best, but does not apply to single network outputs. Aggregation methods based on regression forests (RF) also show performance gains compared to the mean PE baseline, but fail catastrophically on the prostate dataset, possibly due to the small training set size. PE: predictive entropy. RF: regression forest.
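The mean PE baseline named in the caption of Fig. 3 can be sketched as follows: compute the predictive entropy of each pixel's class probabilities, average over all pixels, and negate the result so that higher values mean higher confidence. The flat list-of-lists input format, the natural logarithm, and the sign convention are assumptions made for this illustration; the digest does not specify how the benchmark implements the baseline.

```python
import math


def predictive_entropy(class_probs):
    """Entropy (in nats) of one pixel's class probability vector."""
    # Terms with zero probability contribute nothing to the entropy.
    return -sum(p * math.log(p) for p in class_probs if p > 0.0)


def mean_pe_confidence(pixel_probs):
    """Image-level confidence: negative mean predictive entropy over pixels.

    pixel_probs is a sequence of per-pixel probability vectors, e.g. the
    flattened softmax output of a segmentation network (hypothetical format).
    """
    if not pixel_probs:
        raise ValueError("need at least one pixel")
    entropies = [predictive_entropy(probs) for probs in pixel_probs]
    return -sum(entropies) / len(entropies)


if __name__ == "__main__":
    confident_image = [[1.0, 0.0], [0.99, 0.01]]
    uncertain_image = [[0.5, 0.5], [0.6, 0.4]]
    print(mean_pe_confidence(confident_image))
    print(mean_pe_confidence(uncertain_image))
```

A plain mean treats every pixel equally, which is one reason more elaborate aggregation functions are of interest for image-level failure detection.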

Fig. 4. Rankings by average AURC (top, lower ranks are better) and the underlying AURC scores (bottom; lower is better) for methods from Section 4.4.3 and all datasets. The experiments are named as "prediction model + confidence method" and each of them was repeated using 5 folds. In the lower diagram, colored dots denote AURC values achieved by the methods, while gray marks above/below them are AURC values for random/optimal confidence rankings (which differ between the models trained on different folds; see Section 4.1). Ensemble + pairwise DSC is the best method overall, often achieving close to optimal AURC scores. The ranking on the prostate dataset is an outlier, which could be due to the small training set size. PE: predictive entropy.

Fig. 5. Impact of the choice of segmentation metric as a risk function on the ranking stability, comparing mean DSC (left) and NSD (right). Bootstrapping (N = 500) was used to obtain a distribution of ranks for the results of each fold and the ranking distributions of all folds were accumulated. All ranks across datasets are combined in this figure, where the circle area is proportional to the rank count and the black x-markers indicate median ranks, which were also used to sort the methods. Overall, the ranking distributions are similar for mean DSC and NSD. The variance in the ranking distributions largely originates from combining the rankings across datasets, so for each dataset individually the ranking is more stable (see for example the Covid dataset in Fig. B.12).
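The bootstrapped ranking described for Fig. 5 might look roughly like the sketch below: test cases are resampled with replacement, each method's AURC is recomputed on the resample, and the methods are ranked by AURC. The helper names, the simple mean-of-selective-risks AURC estimator, and the tie handling (input order) are assumptions for illustration; the digest does not describe the benchmark's own procedure in this detail.

```python
import random


def aurc(confidences, risks):
    """Mean selective risk over all coverage levels (assumed AURC estimator)."""
    order = sorted(range(len(risks)), key=lambda i: -confidences[i])
    total, selective = 0.0, []
    for k, idx in enumerate(order, start=1):
        total += risks[idx]
        selective.append(total / k)
    return sum(selective) / len(selective)


def bootstrap_ranks(method_confidences, risks, n_boot=500, seed=0):
    """Return {method: [rank per bootstrap sample]}, rank 1 = lowest AURC.

    method_confidences maps a method name to its per-case confidence scores;
    risks holds the per-case risk (e.g. 1 - DSC) shared by all methods.
    """
    rng = random.Random(seed)
    n_cases = len(risks)
    names = list(method_confidences)
    ranks = {name: [] for name in names}
    for _ in range(n_boot):
        # Resample test cases with replacement.
        sample = [rng.randrange(n_cases) for _ in range(n_cases)]
        sampled_risks = [risks[i] for i in sample]
        scores = {
            name: aurc([method_confidences[name][i] for i in sample], sampled_risks)
            for name in names
        }
        # Stable sort: methods with equal AURC keep their input order.
        ordered = sorted(names, key=lambda name: scores[name])
        for rank, name in enumerate(ordered, start=1):
            ranks[name].append(rank)
    return ranks


if __name__ == "__main__":
    toy_risks = [0.05, 0.1, 0.3, 0.6, 0.9]
    methods = {
        "oracle": [-r for r in toy_risks],  # hypothetical perfect ranking
        "inverse": list(toy_risks),         # hypothetical worst ranking
    }
    result = bootstrap_ranks(methods, toy_risks, n_boot=50, seed=1)
    print({name: sum(r) / len(r) for name, r in result.items()})
```

Counting how often each method attains each rank across the bootstrap samples gives the kind of rank distribution that the figure visualizes.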

Fig. 6. Qualitative analysis of ensemble predictions on all datasets. For each dataset (rows), an interesting failure case is shown, consisting of (columns from left to right): the reference segmentation, the ensemble prediction and individual predictions of ensemble members (Ensemble #1–5) trained with different random seeds. True mean DSC is reported alongside the pairwise DSC scores. The ensemble predictions often disagree about test cases for which segmentation errors occur, which leads to low pairwise Dice and can be considered a detected failure (rows 1–4). However, there are also cases where the ensemble is confident about a faulty segment, which could result in a silent failure (last two rows).

Table

Table 1. Comparison of metric candidates for segmentation failure detection. Among those, AURC is the only metric that captures segmentation performance and confidence ranking, which we find necessary for the comprehensive evaluation of a failure detection system. A detailed discussion of the requirements (R1–R3) associated with each column is in Section 2. f-AUROC uses binary failure labels. MAE: mean absolute error. PC: Pearson correlation. SC: Spearman correlation.

Table 2. Summary of datasets used in this study. The #Testing column contains case numbers for each subset of the test set separated by a comma, starting with the in-distribution test split and followed by the shifted "domains". The number of classes includes one count for background.