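Introduction: There are plenty of Flink articles, but most focus on one specific aspect. Few start from a simple example and walk through the whole flow of coding, building, and deploying. I wrote this article as a record for my own reference and to share with others. It uses a simple MySQL CDC job as the running example.

Environment: MySQL 5.7; Flink 1.14.0; Hadoop 3.0.0; OS: CentOS 7.6; JDK 1.8.0_401.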

1.MySQL

1.1 Create the database and test data

Database script:

CREATE DATABASE `flinktest`;

USE `flinktest`;

CREATE TABLE `products` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `name` varchar(255) NOT NULL,
  `description` varchar(512) DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=9 DEFAULT CHARSET=utf8mb4;

insert into `products`(`id`,`name`,`description`) values
(1,'aaa','aaaa'),
(2,'ccc','ccc'),
(3,'dd','ddd'),
(4,'eeee','eee'),
(5,'ffff','ffff'),
(6,'hhhh','hhhh'),
(7,'iiii','iiii'),
(8,'jjjj','jjjj');

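The root account is sufficient for this test.

1.2 Enable the binlog

Reference: https://core815.blog.csdn.net/article/details/144233298

Pitfall: during testing, MySQL 9.0 never delivered updated rows to the job, so I ended up using 5.7.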

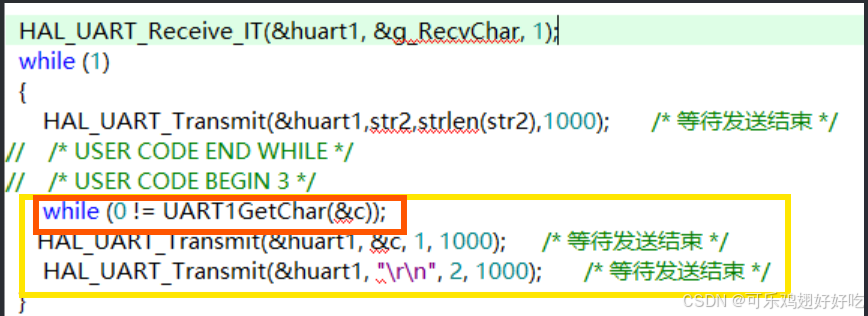
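Before starting the job, it can help to confirm that the server configuration actually enables the binlog. The following is a minimal sketch, not part of the original project: it assumes that the relevant settings live in the [mysqld] section of my.cnf and that CDC needs log_bin, a server_id, and binlog_format=ROW. The class name BinlogConfigCheck and its messages are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Sketch: checks a my.cnf text for the binlog settings a CDC job relies on. */
final class BinlogConfigCheck {

    /** Collects key=value pairs of the [mysqld] section; '-' and '_' in keys are treated alike. */
    static Map<String, String> parseMysqld(String myCnf) {
        Map<String, String> settings = new HashMap<>();
        boolean inMysqld = false;
        for (String raw : myCnf.split("\\R")) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#") || line.startsWith(";")) {
                continue; // skip blank lines and comments
            }
            if (line.startsWith("[")) {
                inMysqld = line.equalsIgnoreCase("[mysqld]");
                continue;
            }
            if (!inMysqld) {
                continue; // settings of other sections do not affect the server
            }
            int eq = line.indexOf('=');
            String key = (eq < 0 ? line : line.substring(0, eq)).trim()
                    .toLowerCase(Locale.ROOT).replace('-', '_');
            String value = eq < 0 ? "" : line.substring(eq + 1).trim();
            settings.put(key, value);
        }
        return settings;
    }

    /** Returns one message per missing or unsuitable setting; an empty list means the file looks fine. */
    static List<String> problems(String myCnf) {
        Map<String, String> s = parseMysqld(myCnf);
        List<String> problems = new ArrayList<>();
        if (!s.containsKey("log_bin")) {
            problems.add("log_bin is not set");
        }
        if (!"ROW".equalsIgnoreCase(s.get("binlog_format"))) {
            problems.add("binlog_format is not explicitly ROW");
        }
        if (!s.containsKey("server_id")) {
            problems.add("server_id is not set");
        }
        return problems;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "[mysqld]", "server-id=1", "log-bin=mysql-bin", "binlog_format=ROW");
        System.out.println(problems(sample)); // prints []
    }
}
```

The check is deliberately strict about binlog_format: recent 5.7 releases default to ROW, so a file without that line may still work, but stating it explicitly removes one variable when debugging.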

2.Coding

2.1 Main implementation

package com.zl;

import com.ververica.cdc.connectors.mysql.source.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
// Configuration and RestOptions are only needed by the commented-out Web UI block below.
import org.apache.flink.configuration.Configuration;
import org.apache.flink.configuration.RestOptions;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Arrays;
import java.util.List;

public class MysqlExample {

    public static void main(String[] args) throws Exception {
        // Tables to capture, in "database.table" form.
        List<String> SYNC_TABLES = Arrays.asList("flinktest.products");

        MySqlSource<String> mySqlSource = MySqlSource.<String>builder()
                .hostname("10.86.37.169")
                .port(3306)
                .databaseList("flinktest")
                .tableList(String.join(",", SYNC_TABLES))
                .username("root")
                .password("pwd")
                // Take an initial snapshot first, then continue from the binlog.
                .startupOptions(StartupOptions.initial())
                // Emit every change event as a JSON string.
                .deserializer(new JsonDebeziumDeserializationSchema())
                .build();

        /// Flink Web UI configuration: start
        /* Configuration config = new Configuration();
        // Enable the Web UI; it is served at http://ip:port
        config.setBoolean("web.ui.enabled", true);
        config.setString(RestOptions.BIND_PORT, "8081");
        // This works when the jar is run directly. When the job is submitted to YARN it fails,
        // so use getExecutionEnvironment() there instead.
        StreamExecutionEnvironment env = StreamExecutionEnvironment
                .createLocalEnvironmentWithWebUI(config);*/
        /// Flink Web UI configuration: end

        StreamExecutionEnvironment env = StreamExecutionEnvironment
                .getExecutionEnvironment();
        env.setParallelism(1);

        /// Checkpoint storage: start (comment this block out if it is not needed)
        // Hadoop deployment: https://core815.blog.csdn.net/article/details/144022938
        // The HDFS address comes from /home/hadoop-3.3.3/etc/hadoop/core-site.xml
        env.getCheckpointConfig()
                .setCheckpointStorage("hdfs://10.86.97.191:9000" + "/flinktest/");
        // Checkpoint every 3000 ms.
        env.getCheckpointConfig().setCheckpointInterval(3000);
        /// Checkpoint storage: end

        // If the MySQL binlog cannot be read:
        // (1) the binlog may not be enabled, or the MySQL version may not be supported
        //     (this job works against MySQL 5.7.20);
        // (2) the database IP, port, account, or password may be wrong.
        env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source")
                .setParallelism(1).print();

        env.execute("Print MySQL Snapshot + Binlog");
    }
}
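The job prints every change as a JSON string in the Debezium envelope, where the `op` field tells snapshot reads ("r") apart from inserts ("c"), updates ("u"), and deletes ("d"). As a rough illustration of how a downstream step could classify those strings, here is a dependency-free sketch. The class name ChangeEventOp is hypothetical, the sample record is hand-written and abbreviated rather than captured from a run, and the regular expression is a shortcut: a real job would more likely use a JSON library, since a table column that is itself named `op` would confuse this match.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch: classifies a Debezium-style JSON change event by its "op" field. */
final class ChangeEventOp {

    // Matches the first "op":"<letter>" pair in the string.
    private static final Pattern OP = Pattern.compile("\"op\"\\s*:\\s*\"([a-z])\"");

    /** Returns a readable name for the operation, or empty if no known "op" value is found. */
    static Optional<String> describe(String json) {
        Matcher m = OP.matcher(json);
        if (!m.find()) {
            return Optional.empty();
        }
        switch (m.group(1)) {
            case "r": return Optional.of("snapshot read");
            case "c": return Optional.of("insert");
            case "u": return Optional.of("update");
            case "d": return Optional.of("delete");
            default:  return Optional.empty();
        }
    }

    public static void main(String[] args) {
        // Hand-written, abbreviated sample; real events also carry "source" and "ts_ms".
        String sample = "{\"before\":null,"
                + "\"after\":{\"id\":1,\"name\":\"aaa\",\"description\":\"aaaa\"},"
                + "\"op\":\"r\"}";
        System.out.println(describe(sample).orElse("unknown")); // prints snapshot read
    }
}
```

With StartupOptions.initial(), the eight seed rows should arrive as snapshot reads first; later edits to the table then show up as inserts, updates, or deletes.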

2.2 Dependencies

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.zl.flinkcdc</groupId>
    <artifactId>FlickCDC</artifactId>
    <packaging>jar</packaging>
    <version>1.0-SNAPSHOT</version>
    <name>FlickCDC</name>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
        <flink-version>1.14.0</flink-version>
        <flink-cdc-version>2.4.0</flink-cdc-version>
        <hadoop.version>3.0.0</hadoop.version>
        <slf4j.version>1.7.25</slf4j.version>
        <log4j.version>2.16.0</log4j.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>3.8.1</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>${flink-version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_2.11</artifactId>
            <version>${flink-version}</version>
        </dependency>
        <dependency>
            <groupId>com.ververica</groupId>
            <artifactId>flink-connector-mysql-cdc</artifactId>
            <version>${flink-cdc-version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-shaded-guava</artifactId>
            <version>30.1.1-jre-15.0</version>
        </dependency>
        <!--<dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-shaded-guava</artifactId>
            <version>18.0-13.0</version>
        </dependency>-->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-base</artifactId>
            <version>${flink-version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients_2.11</artifactId>
            <version>${flink-version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-common</artifactId>
            <version>${flink-version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-runtime-web_2.11</artifactId>
            <version>${flink-version}</version>
        </dependency>

        <!-- Hadoop dependencies -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
            <scope>provided</scope>
            <exclusions>
                <exclusion>
                    <artifactId>commons-cli</artifactId>
                    <groupId>commons-cli</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-compress</artifactId>
                    <groupId>org.apache.commons</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>guava</artifactId>
                    <groupId>com.google.guava</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-annotations</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-core</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-databind</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>slf4j-api</artifactId>
                    <groupId>org.slf4j</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop.version}</version>
            <scope>provided</scope>
            <exclusions>
                <exclusion>
                    <artifactId>asm</artifactId>
                    <groupId>org.ow2.asm</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>avro</artifactId>
                    <groupId>org.apache.avro</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-cli</artifactId>
                    <groupId>commons-cli</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-codec</artifactId>
                    <groupId>commons-codec</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-compress</artifactId>
                    <groupId>org.apache.commons</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-io</artifactId>
                    <groupId>commons-io</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-lang3</artifactId>
                    <groupId>org.apache.commons</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-logging</artifactId>
                    <groupId>commons-logging</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-math3</artifactId>
                    <groupId>org.apache.commons</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>guava</artifactId>
                    <groupId>com.google.guava</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-databind</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jaxb-api</artifactId>
                    <groupId>javax.xml.bind</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>log4j</artifactId>
                    <groupId>log4j</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>nimbus-jose-jwt</artifactId>
                    <groupId>com.nimbusds</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>slf4j-api</artifactId>
                    <groupId>org.slf4j</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>slf4j-log4j12</artifactId>
                    <groupId>org.slf4j</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>zookeeper</artifactId>
                    <groupId>org.apache.zookeeper</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jsr305</artifactId>
                    <groupId>com.google.code.findbugs</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>gson</artifactId>
                    <groupId>com.google.code.gson</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${hadoop.version}</version>
            <scope>provided</scope>
            <exclusions>
                <exclusion>
                    <artifactId>commons-cli</artifactId>
                    <groupId>commons-cli</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>guava</artifactId>
                    <groupId>com.google.guava</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-databind</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
            </exclusions>
        </dependency>

        <dependency>
            <groupId>commons-cli</groupId>
            <artifactId>commons-cli</artifactId>
            <version>1.5.0</version>
        </dependency>
        <!--mvn install:install-file -Dfile=D:/maven/flink-shaded-hadoop-3-uber-3.1.1.7.2.9.0-173-9.0.jar -DgroupId=org.apache.flink -DartifactId=flink-shaded-hadoop-3 -Dversion=3.1.1.7.2.9.0-173-9.0 -Dpackaging=jar-->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-shaded-hadoop-3</artifactId>
            <version>3.1.1.7.2.9.0-173-9.0</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <version>3.0.0</version>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                    <archive>
                        <manifest>
                            <addClasspath>true</addClasspath>
                            <mainClass>com.zl.MysqlExample</mainClass>
                        </manifest>
                    </archive>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>

Full code: https://gitee.com/core815/flink-cdc-mysql

3.Packaging

Maven version: 3.5.4.

Go to the directory that contains pom.xml and run "mvn package".

Packaging result:

4.Running the jar directly

java -jar FlickCDC-1.0-SNAPSHOT-jar-with-dependencies.jar

5.Running on YARN with Flink

For the Hadoop, Flink, and YARN environment, see: https://core815.blog.csdn.net/article/details/144022938

Put FlickCDC-1.0-SNAPSHOT-jar-with-dependencies.jar under "/home".

Run the following command:

flink run-application -t yarn-application -Dparallelism.default=1 -Denv.java.opts=" -Dfile.encoding=UTF-8 -Dsun.jnu.encoding=UTF-8" -Dtaskmanager.memory.process.size=1g -Dyarn.application.name="FlinkCdcMysql" -Dtaskmanager.numberOfTaskSlots=1 -c com.zl.MysqlExample /home/FlickCDC-1.0-SNAPSHOT-jar-with-dependencies.jar
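If the submission needs to be triggered from another Java program, for example a small scheduler, the same command line can be assembled as an argument list. The sketch below only rebuilds the options already shown in the command above. The class name YarnSubmitCommand is hypothetical, and it assumes the `flink` executable is on the PATH of the machine that runs it.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Sketch: assembles the "flink run-application" argument list for YARN application mode. */
final class YarnSubmitCommand {

    /** Builds the argument list; each entry of dynamicProps becomes one -Dkey=value argument. */
    static List<String> build(Map<String, String> dynamicProps, String mainClass, String jarPath) {
        List<String> cmd = new ArrayList<>();
        cmd.add("flink");
        cmd.add("run-application");
        cmd.add("-t");
        cmd.add("yarn-application");
        for (Map.Entry<String, String> e : dynamicProps.entrySet()) {
            cmd.add("-D" + e.getKey() + "=" + e.getValue());
        }
        cmd.add("-c");
        cmd.add(mainClass);
        cmd.add(jarPath);
        return cmd;
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the options in the order they were added.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("parallelism.default", "1");
        props.put("env.java.opts", " -Dfile.encoding=UTF-8 -Dsun.jnu.encoding=UTF-8");
        props.put("taskmanager.memory.process.size", "1g");
        props.put("yarn.application.name", "FlinkCdcMysql");
        props.put("taskmanager.numberOfTaskSlots", "1");
        List<String> cmd = build(props, "com.zl.MysqlExample",
                "/home/FlickCDC-1.0-SNAPSHOT-jar-with-dependencies.jar");
        // Print one argument per line; no shell quoting is involved at this level.
        cmd.forEach(System.out::println);
    }
}
```

Passing the list to `new ProcessBuilder(cmd).inheritIO().start()` would launch the submission. Because each list element is handed over as a single argument, values that contain spaces, such as env.java.opts, need no extra quotes.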

The console prints the following:

YARN management page:

Steps to view the run log:

The full log is then visible:

6.Common problems

6.1 Problem 1

Log error:

The MySQL server has a timezone offset (0 seconds ahead of UTC) which does not match the configured timezone Asia/Shanghai. Specify the right server-time-zone to avoid inconsistencies for time-related fields.

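Solution:

Edit the my.cnf file. A named zone such as 'Asia/Shanghai' requires the server's time zone tables to be loaded; if they are not, the offset form '+08:00' can be used instead.

[mysqld]

default-time-zone='Asia/Shanghai'

Restart the MySQL service.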
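The error message compares two numbers: the UTC offset the server reports (0 seconds here) and the offset of the time zone configured on the connector side (Asia/Shanghai). The sketch below reproduces that comparison with plain java.time to show why the two disagree. It is an illustration under that reading of the message, not the connector's actual code, and the class name TimeZoneOffsetCheck is hypothetical.

```java
import java.time.Instant;
import java.time.ZoneId;

/** Sketch: compares a server's UTC offset with the offset of a configured time zone. */
final class TimeZoneOffsetCheck {

    /** Returns true when the configured zone has exactly the given offset at the given instant. */
    static boolean matches(int serverOffsetSeconds, String configuredZone, Instant at) {
        int configuredOffset = ZoneId.of(configuredZone)
                .getRules()
                .getOffset(at)
                .getTotalSeconds();
        return configuredOffset == serverOffsetSeconds;
    }

    public static void main(String[] args) {
        Instant now = Instant.now();
        // Server running on UTC while the job expects Asia/Shanghai: mismatch.
        System.out.println(matches(0, "Asia/Shanghai", now));        // prints false
        // Server moved to UTC+8 (8 * 3600 = 28800 seconds): the two agree.
        System.out.println(matches(28800, "Asia/Shanghai", now));    // prints true
    }
}
```

Changing my.cnf moves the server side of the comparison. The message itself points at the other side, a server-time-zone setting on the connector, which may be preferable when the MySQL server cannot be restarted.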

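6.2 Problem 2: HDFS

Log error:

Permission denied: user=PC2023, access=WRITE, inode="/":root:supergroup:drwxr-xr-x

Solution:

Temporary workaround (it makes the entire HDFS tree writable by every user, so use it only on a test cluster):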

hadoop fs -chmod -R 777 /
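The log line already contains everything needed to see why the write was refused: the directory "/" belongs to root:supergroup with mode drwxr-xr-x, and the job ran as PC2023, which is neither the owner nor, presumably, a member of supergroup. The sketch below walks through that owner/group/other decision. It is a simplified model that ignores the HDFS superuser and ACLs, and the class name HdfsWriteCheck is hypothetical.

```java
import java.util.Collections;
import java.util.Set;

/** Sketch: evaluates the write bit of a POSIX-style mode string such as "drwxr-xr-x". */
final class HdfsWriteCheck {

    /**
     * Picks the owner, group, or other permission triple for the user and
     * reports whether its write bit is set.
     */
    static boolean canWrite(String mode, String owner, String group,
                            String user, Set<String> userGroups) {
        int writeBit;
        if (user.equals(owner)) {
            writeBit = 2; // owner triple occupies positions 1..3
        } else if (userGroups.contains(group)) {
            writeBit = 5; // group triple occupies positions 4..6
        } else {
            writeBit = 8; // other triple occupies positions 7..9
        }
        return mode.charAt(writeBit) == 'w';
    }

    public static void main(String[] args) {
        Set<String> noGroups = Collections.emptySet();
        // The situation from the log: PC2023 falls into "other", which has r-x only.
        System.out.println(canWrite("drwxr-xr-x", "root", "supergroup", "PC2023", noGroups)); // prints false
        // The owner has rwx, so the same write succeeds as root.
        System.out.println(canWrite("drwxr-xr-x", "root", "supergroup", "root", noGroups));   // prints true
    }
}
```

Seen this way, two narrower alternatives to chmod 777 on "/" suggest themselves: run the job as the directory owner (on clusters with simple authentication, the Hadoop client reads the user name from the HADOOP_USER_NAME environment variable), or open up only the checkpoint directory /flinktest/.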

6.3 Problem 3: conflict between Guava 30 and Guava 18

Analysis:

The combination of Flink 1.13 and CDC 2.3 is prone to this problem.

Solution:

Reference: https://developer.aliyun.com/ask/574901

Use Flink 1.14.0 together with CDC 2.4.0.

6.4 Problem 4

Log error:

/user/root/.flink/application_1733492706887_0002/log4j.properties could only be written to 0 of the 1 minReplication nodes

Solution:

https://www.pianshen.com/article/1554707968/