Overview

Common ways to get a video thumbnail on Android:

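- ContentResolver: query the system media database (MediaStore).

- MediaMetadataRetriever: an Android class for retrieving frames and metadata from local and network media files.

- ThumbnailUtils: added in Android 2.2 (API 8); it provides three static methods for creating thumbnails.

- MediaExtractor: similar to MediaMetadataRetriever, except that it returns demuxed, typically encoded samples that still have to be decoded (e.g. with MediaCodec).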

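However, each of these has limitations. For example, ContentResolver only works once MediaScanner has added the video to the system database, and MediaMetadataRetriever does not support some formats. For common formats such as MP4 and MKV, these APIs work well.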

For formats these APIs handle poorly, such as AVI, a more capable approach is needed. So I tried extracting video frames as thumbnails with OpenGL ES offscreen rendering + MediaPlayer.
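The trade-off above suggests trying the cheap system APIs first and only falling back to the heavier MediaPlayer + OpenGL ES approach when they fail. The sketch below models that fallback chain in plain Java. ThumbnailSource and FallbackThumbnailer are hypothetical names, not part of any Android API; on a device each source would wrap one real call (a MediaStore query, MediaMetadataRetriever.getFrameAtTime(), or the extractor class below), and T would be android.graphics.Bitmap. A generic T is used here only so the sketch runs on a plain JVM.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Hypothetical: one way of producing a thumbnail. Returns empty when the file
// is not supported by this source; it may also throw.
interface ThumbnailSource<T> {
    Optional<T> tryCreate(String path);
}

// Hypothetical: tries each source in the order added and returns the first result.
final class FallbackThumbnailer<T> {
    private final List<ThumbnailSource<T>> sources = new ArrayList<>();

    FallbackThumbnailer<T> add(ThumbnailSource<T> source) {
        sources.add(source);
        return this;
    }

    Optional<T> create(String path) {
        for (ThumbnailSource<T> source : sources) {
            try {
                Optional<T> result = source.tryCreate(path);
                if (result.isPresent()) {
                    return result;
                }
            } catch (RuntimeException e) {
                // Treat a failure like "unsupported" and move on to the next source.
            }
        }
        return Optional.empty();
    }
}
```

Putting the OpenGL ES source last keeps the common MP4/MKV case cheap, since that source has to start a player and an EGL context.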

Reference code

Adapted from ExtractMpegFramesTest.java, using MediaPlayer in place of MediaExtractor + MediaCodec. In principle, frames can be extracted from any video that MediaPlayer can play.

import android.annotation.SuppressLint;
import android.graphics.Bitmap;
import android.graphics.SurfaceTexture;
import android.media.MediaPlayer;
import android.opengl.EGL14;
import android.opengl.EGLConfig;
import android.opengl.EGLContext;
import android.opengl.EGLDisplay;
import android.opengl.EGLSurface;
import android.opengl.GLES10;
import android.opengl.GLES11Ext;
import android.opengl.GLES20;
import android.os.Build;
import android.util.Log;
import android.view.Surface;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.IntBuffer;
import javax.microedition.khronos.opengles.GL10;

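public class VideoFrameExtractorGL extends Thread implements MediaPlayer.OnSeekCompleteListener {

    final String TAG = "VFEGL";
    // volatile: release() sets it to null on another thread while the render loop polls it
    private volatile MediaPlayer mediaPlayer;
    private Surface surface;
    private int bitmapWidth, bitmapHeight;
    private int textureId;
    private SurfaceTexture surfaceTexture;
    // Guards rendering; onFrameAvailable() and release() notify it to wake the render loop
    final Object drawLock = new Object();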

    // Shader code. The EGL context is created as OpenGL ES 2.0 (EGL_CONTEXT_CLIENT_VERSION 2),
    // so the shaders use GLSL ES 1.00 (attribute/varying/texture2D/gl_FragColor).
    // "#version 300 es" shaders are not guaranteed to compile in a 2.0 context.
    private String vertexShaderCode =
            "attribute vec4 position;\n" +
            "attribute vec2 texCoord;\n" +
            "uniform mat4 uSTMatrix;\n" +
            "varying vec2 vTexCoord;\n" +
            "\n" +
            "void main() {\n" +
            "    gl_Position = position;\n" +
            "    vTexCoord = (uSTMatrix * vec4(texCoord, 0.0, 1.0)).xy;\n" +
            "}\n";

    // Samples the external (OES) texture that MediaPlayer renders into
    private String fragmentShaderCode =
            "#extension GL_OES_EGL_image_external : require\n" +
            "precision mediump float;\n" +
            "\n" +
            "varying vec2 vTexCoord;\n" +
            "uniform samplerExternalOES sTexture;\n" +
            "\n" +
            "void main() {\n" +
            "    gl_FragColor = texture2D(sTexture, vTexCoord);\n" +
            "}\n";

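    protected float[] mSTMatrix = new float[16];
    protected int mProgram;
    private int mPositionHandle;
    private int mTexCoordHandle;
    private int mSTMatrixHandle;

    // Vertex positions: a full-viewport quad drawn as a triangle strip
    private final float[] squareCoords = {
            -1.0f,  1.0f, // top left
             1.0f,  1.0f, // top right
            -1.0f, -1.0f, // bottom left
             1.0f, -1.0f  // bottom right
    };

    // Texture coordinates. v is flipped relative to the usual mapping (v = 0 at the top vertex),
    // so the frame is drawn upside down in GL; glReadPixels() returns rows bottom-up,
    // which makes the resulting Bitmap upright.
    private final float[] textureCoords = {
            0.0f, 0.0f, // top left
            1.0f, 0.0f, // top right
            0.0f, 1.0f, // bottom left
            1.0f, 1.0f  // bottom right
    };

    private FloatBuffer vertexBuffer;
    private FloatBuffer textureBuffer;

    // EGL state
    private EGLDisplay eglDisplay;
    private EGLContext eglContext;
    private EGLSurface eglSurface;

    // volatile: written in onPrepared() (another thread) and polled by extract()
    volatile boolean isPrepared = false;
    long videoDuration = 0;
    int videoWidth, videoHeight;
    String mPath;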

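    // Constructor: stores the video path, the output Bitmap size and the listener.
    // The MediaPlayer and the EGL/GL state are created later in run(), on this thread.
    public VideoFrameExtractorGL(String videoFile, int bitmapWidth, int bitmapHeight, OnFrameExtractListener l) {
        this.bitmapWidth = bitmapWidth;
        this.bitmapHeight = bitmapHeight;
        mPath = videoFile;
        setOnFrameExtractListener(l);
    }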

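    @Override
    public void run() {
        mediaPlayer = new MediaPlayer();
        mediaPlayer.setVolume(0, 0);
        mediaPlayer.setOnSeekCompleteListener(this);
        initializeEGL();    // set up EGL and make the context current on this thread
        initializeOpenGL(); // texture, SurfaceTexture/Surface and shader program
        try {
            mediaPlayer.setDataSource(mPath);
            mediaPlayer.setOnPreparedListener(new MediaPlayer.OnPreparedListener() {
                @Override
                public void onPrepared(MediaPlayer mp) {
                    Log.d(TAG, "onPrepared start playing");
                    videoDuration = mp.getDuration();
                    videoWidth = mp.getVideoWidth();
                    videoHeight = mp.getVideoHeight();
                    isPrepared = true; // set last: extract(float) reads videoDuration once this is true
                    mp.start();
                }
            });
            mediaPlayer.prepareAsync();
        } catch (Exception e) {
            // e.g. an invalid path: isPrepared stays false and extract() keeps waiting
            e.printStackTrace();
        }
        // Give onPrepared() up to ~600 ms (20 x 30 ms). The render loop below also copes with a
        // slower prepare, because it only draws when a frame arrives.
        int timeout = 20;
        while (!isPrepared && timeout-- > 0) {
            try {
                sleep(30);
            } catch (InterruptedException ignore) {}
        }
        if (!isPrepared) {
            Log.w(TAG, "MediaPlayer not prepared yet, entering the render loop anyway");
        }
        while (mediaPlayer != null) {
            drawFrameLoop();
        }
        // release() was called: free the SurfaceTexture on the thread that owns it
        if (surfaceTexture != null) {
            surfaceTexture.release();
        }
    }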

    // Set up EGL: display, config, OpenGL ES 2.0 context and an offscreen (pbuffer) surface
    @SuppressLint("NewApi")
    private void initializeEGL() {
        Log.d(TAG, "initializeEGL");
        // 1. Get the default EGL display
        eglDisplay = EGL14.eglGetDisplay(EGL14.EGL_DEFAULT_DISPLAY);
        if (eglDisplay == EGL14.EGL_NO_DISPLAY) {
            throw new RuntimeException("Unable to get EGL14 display");
        }
        // 2. Initialize EGL
        int[] version = new int[2];
        if (!EGL14.eglInitialize(eglDisplay, version, 0, version, 1)) {
            throw new RuntimeException("Unable to initialize EGL14");
        }
        // 3. Choose a config. EGL_SURFACE_TYPE defaults to EGL_WINDOW_BIT, so EGL_PBUFFER_BIT
        //    must be requested explicitly because a pbuffer surface is created below.
        int[] configAttributes = {
                EGL14.EGL_RED_SIZE, 8,
                EGL14.EGL_GREEN_SIZE, 8,
                EGL14.EGL_BLUE_SIZE, 8,
                EGL14.EGL_ALPHA_SIZE, 8,
                EGL14.EGL_RENDERABLE_TYPE, EGL14.EGL_OPENGL_ES2_BIT,
                EGL14.EGL_SURFACE_TYPE, EGL14.EGL_PBUFFER_BIT,
                EGL14.EGL_NONE
        };
        EGLConfig[] eglConfigs = new EGLConfig[1];
        int[] numConfigs = new int[1];
        if (!EGL14.eglChooseConfig(eglDisplay, configAttributes, 0, eglConfigs, 0, 1, numConfigs, 0)
                || numConfigs[0] == 0) {
            throw new IllegalArgumentException("No matching EGL configs");
        }
        EGLConfig eglConfig = eglConfigs[0];
        // 4. Create an OpenGL ES 2.0 context
        int[] contextAttributes = {
                EGL14.EGL_CONTEXT_CLIENT_VERSION, 2,
                EGL14.EGL_NONE
        };
        eglContext = EGL14.eglCreateContext(eglDisplay, eglConfig, EGL14.EGL_NO_CONTEXT, contextAttributes, 0);
        // On failure eglCreateContext() returns EGL_NO_CONTEXT rather than null
        if (eglContext == null || eglContext == EGL14.EGL_NO_CONTEXT) {
            throw new RuntimeException("Failed to create EGL context");
        }
        // 5. Create the offscreen pbuffer surface, sized to the output Bitmap
        int[] surfaceAttributes = {
                EGL14.EGL_WIDTH, bitmapWidth,
                EGL14.EGL_HEIGHT, bitmapHeight,
                EGL14.EGL_NONE
        };
        eglSurface = EGL14.eglCreatePbufferSurface(eglDisplay, eglConfig, surfaceAttributes, 0);
        if (eglSurface == null || eglSurface == EGL14.EGL_NO_SURFACE) {
            throw new RuntimeException("Failed to create EGL Surface");
        }
        // 6. Make the context current on this thread
        if (!EGL14.eglMakeCurrent(eglDisplay, eglSurface, eglSurface, eglContext)) {
            throw new RuntimeException("Failed to bind EGL context");
        }
    }

    // Set up the OES texture, the SurfaceTexture/Surface that MediaPlayer renders into,
    // the shader program and the vertex buffers. Drawing targets the pbuffer from initializeEGL().
    private void initializeOpenGL() {
        Log.d(TAG, "initializeOpenGL");
        // Create the texture that receives the video frames
        int[] textureIds = new int[1];
        GLES20.glGenTextures(1, textureIds, 0);
        textureId = textureIds[0];
        // Bind it as an external (OES) texture and set its sampling parameters
        GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textureId);
        GLES20.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_NEAREST);
        GLES20.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);
        GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_CLAMP_TO_EDGE);
        GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_CLAMP_TO_EDGE);
        surfaceTexture = new SurfaceTexture(textureId);
        surfaceTexture.setOnFrameAvailableListener(new SurfaceTexture.OnFrameAvailableListener() {
            @Override
            public void onFrameAvailable(SurfaceTexture st) {
                Log.d(TAG, "onFrameAvailable");
                // Only wake the render loop; updateTexImage() must run on the GL thread
                synchronized (drawLock) {
                    drawLock.notifyAll();
                }
            }
        });
        // Wrap the SurfaceTexture in a Surface and set it as MediaPlayer's output
        surface = new Surface(surfaceTexture);
        mediaPlayer.setSurface(surface);
        // Compile and link the shaders
        createProgram(vertexShaderCode, fragmentShaderCode);
        // Copy the vertex and texture coordinates into direct, native-order buffers
        vertexBuffer = ByteBuffer.allocateDirect(squareCoords.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer()
                .put(squareCoords);
        vertexBuffer.position(0);
        textureBuffer = ByteBuffer.allocateDirect(textureCoords.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer()
                .put(textureCoords);
        textureBuffer.position(0);
    }

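    // Compile both shaders, link the program and look up attribute/uniform locations
    private void createProgram(String vertexSource, String fragmentSource) {
        int vertexShader = loadShader(GLES20.GL_VERTEX_SHADER, vertexSource);
        int fragmentShader = loadShader(GLES20.GL_FRAGMENT_SHADER, fragmentSource);
        mProgram = GLES20.glCreateProgram();
        GLES20.glAttachShader(mProgram, vertexShader);
        GLES20.glAttachShader(mProgram, fragmentShader);
        GLES20.glLinkProgram(mProgram);
        int[] linkStatus = new int[1];
        GLES20.glGetProgramiv(mProgram, GLES20.GL_LINK_STATUS, linkStatus, 0);
        if (linkStatus[0] != GLES20.GL_TRUE) {
            // Without this check a failed link silently produces black thumbnails
            Log.e(TAG, "Could not link program: " + GLES20.glGetProgramInfoLog(mProgram));
        }
        GLES20.glUseProgram(mProgram);
        mPositionHandle = GLES20.glGetAttribLocation(mProgram, "position");
        mTexCoordHandle = GLES20.glGetAttribLocation(mProgram, "texCoord");
        mSTMatrixHandle = GLES20.glGetUniformLocation(mProgram, "uSTMatrix");
    }

    // Compile one shader and log the compiler output on failure
    private int loadShader(int type, String shaderSource) {
        int shader = GLES20.glCreateShader(type);
        GLES20.glShaderSource(shader, shaderSource);
        GLES20.glCompileShader(shader);
        int[] compiled = new int[1];
        GLES20.glGetShaderiv(shader, GLES20.GL_COMPILE_STATUS, compiled, 0);
        if (compiled[0] == 0) {
            Log.e(TAG, "Could not compile shader " + type + ": " + GLES20.glGetShaderInfoLog(shader));
        }
        return shader;
    }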

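    final Object extractLock = new Object();
    // volatile: written by extract()/onSeekComplete() and read by the render thread
    volatile int targetPosOfVideo;
    volatile boolean seeking = false;
    // Set by extract(); the render loop only delivers frames while it is true.
    // Without it, ignoreTimeCheck mode would deliver frames as soon as playback starts.
    volatile boolean extracting = false;

    // Seek to posOfVideoInMs and block until the render thread has delivered a frame.
    // Do not call this on the main thread: MediaPlayer's callbacks are delivered there,
    // so blocking it would wait forever.
    @SuppressLint("WrongConstant")
    public void extract(int posOfVideoInMs) {
        synchronized (extractLock) {
            targetPosOfVideo = posOfVideoInMs;
            seeking = true;
            extracting = true;
            if (!isPrepared) {
                Log.w(TAG, "extract " + posOfVideoInMs + " ms: MediaPlayer is not ready yet, waiting");
            }
            while (!isPrepared) {
                try {
                    Thread.sleep(15);
                } catch (InterruptedException ignore) {}
            }
            if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.O) {
                // SEEK_NEXT_SYNC jumps to the next sync (key) frame at or after the target;
                // SEEK_CLOSEST is frame-exact but slower
                mediaPlayer.seekTo(posOfVideoInMs, MediaPlayer.SEEK_NEXT_SYNC);
            } else {
                // The one-argument seekTo() may land on an earlier sync frame; the render
                // loop then waits until playback passes the target position
                mediaPlayer.seekTo(posOfVideoInMs);
            }
            try {
                Log.d(TAG, "extract " + posOfVideoInMs + " ms, and start extractLock wait");
                // Woken by drawFrameLoop() once a frame has been passed to the listener
                extractLock.wait();
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }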

    // Extract the frame at a fraction (0..1) of the video duration
    public void extract(float posRatio) {
        while (!isPrepared) {
            try {
                sleep(10);
            } catch (InterruptedException e) {
                throw new RuntimeException(e);
            }
        }
        int pos = (int) (posRatio * videoDuration);
        extract(pos);
    }

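    // One pass of the render loop: draw the latest video frame into the pbuffer and, if an
    // extraction is pending, read it back and hand it to the listener.
    private void drawFrameLoop() {
        synchronized (drawLock) {
            MediaPlayer mp = mediaPlayer;
            if (mp == null) {
                return; // released from another thread
            }
            long pos;
            try {
                pos = mp.getCurrentPosition();
            } catch (IllegalStateException e) {
                return; // the player was released concurrently
            }
            Log.d(TAG, "drawFrameLoop at " + pos + " ms");
            surfaceTexture.updateTexImage();
            surfaceTexture.getTransformMatrix(mSTMatrix);
            GLES20.glViewport(0, 0, bitmapWidth, bitmapHeight);
            GLES20.glClearColor(color[0], color[1], color[2], 1.0f); // background color from setColor(), black by default
            GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT);
            GLES20.glUseProgram(mProgram);
            // Vertex positions
            vertexBuffer.position(0);
            GLES20.glEnableVertexAttribArray(mPositionHandle);
            GLES20.glVertexAttribPointer(mPositionHandle, 2, GLES20.GL_FLOAT, false, 0, vertexBuffer);
            // Texture coordinates
            textureBuffer.position(0);
            GLES20.glEnableVertexAttribArray(mTexCoordHandle);
            GLES20.glVertexAttribPointer(mTexCoordHandle, 2, GLES20.GL_FLOAT, false, 0, textureBuffer);
            // SurfaceTexture transform matrix
            GLES20.glUniformMatrix4fv(mSTMatrixHandle, 1, false, mSTMatrix, 0);
            // Draw the quad (4 vertices as a triangle strip)
            GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, vertexBuffer.limit() / 2);
            if (extracting && !seeking) {
                // Unless ignoreTimeCheck is set, wait until playback reaches the target
                // (this also works for a target of 0 ms)
                boolean notifyCallback = ignoreTimeCheck || pos >= targetPosOfVideo;
                if (notifyCallback) {
                    if (!ignoreTimeCheck) {
                        extracting = false; // one frame per extract() call
                    }
                    // Read the pixels back only when a frame is actually delivered
                    Bitmap bm = toBitmap();
                    if (oel != null) oel.onFrameExtract(this, bm);
                    synchronized (extractLock) {
                        Log.d(TAG, "drawFrameLoop notify extractLock");
                        extractLock.notifyAll();
                    }
                }
            }
            // Sleep until the next onFrameAvailable() or release(). Skip the wait if the listener
            // just called release(), otherwise this thread would wait forever.
            if (mediaPlayer != null) {
                try {
                    drawLock.wait();
                } catch (InterruptedException ignore) {}
            }
        }
    }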

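    // True once playback has reached the most recently requested position
    public boolean isDone() {
        MediaPlayer mp = mediaPlayer;
        return mp != null && targetPosOfVideo <= mp.getCurrentPosition();
    }

    // Read back the current pbuffer contents. Only valid on the render thread
    // (e.g. inside onFrameExtract()), where the EGL context is current.
    public Bitmap getBitmap() {
        return toBitmap();
    }

    IntBuffer pixelBuffer;

    private Bitmap toBitmap() {
        // Read the pixels of the current surface (RGBA bytes, rows bottom-up)
        if (pixelBuffer == null) {
            pixelBuffer = IntBuffer.allocate(bitmapWidth * bitmapHeight);
        } else {
            pixelBuffer.rewind();
        }
        GLES20.glReadPixels(0, 0, bitmapWidth, bitmapHeight, GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, pixelBuffer);
        // Create a Bitmap and copy the raw bytes into it; ARGB_8888 keeps its pixels as
        // R, G, B, A bytes in memory, the same order glReadPixels() wrote
        Bitmap bitmap = Bitmap.createBitmap(bitmapWidth, bitmapHeight, Bitmap.Config.ARGB_8888);
        pixelBuffer.position(0);
        bitmap.copyPixelsFromBuffer(pixelBuffer);
        return bitmap;
    }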

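    // Release resources: stop the player so the render loop exits, then tear down GL/EGL.
    @SuppressLint("NewApi")
    public void release() {
        if (mediaPlayer != null) {
            mediaPlayer.release();
        }
        mediaPlayer = null;
        // Wake the render loop so it sees mediaPlayer == null and leaves run()
        synchronized (drawLock) {
            try {
                drawLock.wait(100);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            drawLock.notifyAll();
        }
        if (surface != null) {
            surface.release();
            surface = null;
        }
        // Delete the texture before the context is destroyed. This only takes effect when
        // the context is current on the calling thread (e.g. release() from onFrameExtract()).
        GLES20.glDeleteTextures(1, new int[]{textureId}, 0);
        if (eglDisplay != null && eglDisplay != EGL14.EGL_NO_DISPLAY) {
            // Detach the context from the calling thread before destroying it
            EGL14.eglMakeCurrent(eglDisplay, EGL14.EGL_NO_SURFACE, EGL14.EGL_NO_SURFACE, EGL14.EGL_NO_CONTEXT);
            if (eglSurface != null) {
                EGL14.eglDestroySurface(eglDisplay, eglSurface);
            }
            if (eglContext != null) {
                EGL14.eglDestroyContext(eglDisplay, eglContext);
            }
            EGL14.eglTerminate(eglDisplay);
        }
        // Guard against a second release()
        eglSurface = EGL14.EGL_NO_SURFACE;
        eglContext = EGL14.EGL_NO_CONTEXT;
        eglDisplay = EGL14.EGL_NO_DISPLAY;
    }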

    // Background color (RGB, 0..1) used by glClear()
    float[] color = {0, 0, 0, 1f};

    public void setColor(float r, float g, float b) {
        color[0] = r; color[1] = g; color[2] = b;
    }

    OnFrameExtractListener oel;

    public void setOnFrameExtractListener(OnFrameExtractListener l) {
        oel = l;
    }

    @Override
    public void onSeekComplete(MediaPlayer mp) {
        Log.d(TAG, "onSeekComplete pos=" + mp.getCurrentPosition());
        seeking = false;
    }

    // Called on the render thread for every delivered frame
    public interface OnFrameExtractListener {
        void onFrameExtract(VideoFrameExtractorGL vfe, Bitmap bm);
    }

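    // Extract one frame per timestamp (ms). Blocks the calling thread, so do not call it
    // on the main thread.
    public static Bitmap[] extractBitmaps(int[] targetMs, String path, int bmw, int bmh) {
        final int len = targetMs.length;
        if (len == 0) {
            return new Bitmap[0]; // nothing to do; avoids starting a thread that is never released
        }
        final Bitmap[] bms = new Bitmap[len];
        VideoFrameExtractorGL vfe = new VideoFrameExtractorGL(path, bmw, bmh, new OnFrameExtractListener() {
            int count = 0;

            @Override
            public void onFrameExtract(VideoFrameExtractorGL vfe, Bitmap bm) {
                Log.d("VFEGL", "extractBitmaps");
                if (count >= len) {
                    return; // ignore frames after the last one
                }
                bms[count] = bm;
                count++;
                if (count >= len) {
                    vfe.release();
                }
            }
        });
        vfe.start();
        // Each extract() call returns after its frame has been passed to the listener
        for (int pos : targetMs) {
            vfe.extract(pos);
        }
        Log.d("VFEGL", "extractBitmaps done");
        return bms;
    }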

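    // When true, every frame rendered after extract() is delivered (consecutive frames)
    // instead of one frame per extract() call at or after the target time.
    volatile boolean ignoreTimeCheck = false;

    // Extract bitmapCount consecutive frames starting at durationRatio (0..1) of the video.
    // Blocks the calling thread, so do not call it on the main thread.
    public static Bitmap[] extractBitmaps(String path, int bmw, int bmh,
                                          final float durationRatio, final int bitmapCount) {
        if (bitmapCount <= 0) {
            return new Bitmap[0];
        }
        final Bitmap[] bms = new Bitmap[bitmapCount];
        // extract() returns after the FIRST frame only, so wait for the rest with a latch
        final java.util.concurrent.CountDownLatch latch = new java.util.concurrent.CountDownLatch(bitmapCount);
        VideoFrameExtractorGL vfe = new VideoFrameExtractorGL(path, bmw, bmh, new OnFrameExtractListener() {
            int count = 0;

            @Override
            public void onFrameExtract(VideoFrameExtractorGL vfe, Bitmap bm) {
                Log.d("VFEGL", "extractBitmaps");
                if (count >= bitmapCount) {
                    return; // ignore frames after the last one
                }
                bms[count] = bm;
                count++;
                latch.countDown();
                if (count >= bitmapCount) {
                    vfe.release();
                }
            }
        });
        vfe.ignoreTimeCheck = true; // set before start() so the render thread sees it
        vfe.start();
        vfe.extract(durationRatio);
        try {
            // The 5 s bound is an arbitrary safety timeout; slots not filled in time stay null
            latch.await(5, java.util.concurrent.TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        Log.d("VFEGL", "extractBitmaps done");
        return bms;
    }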

}

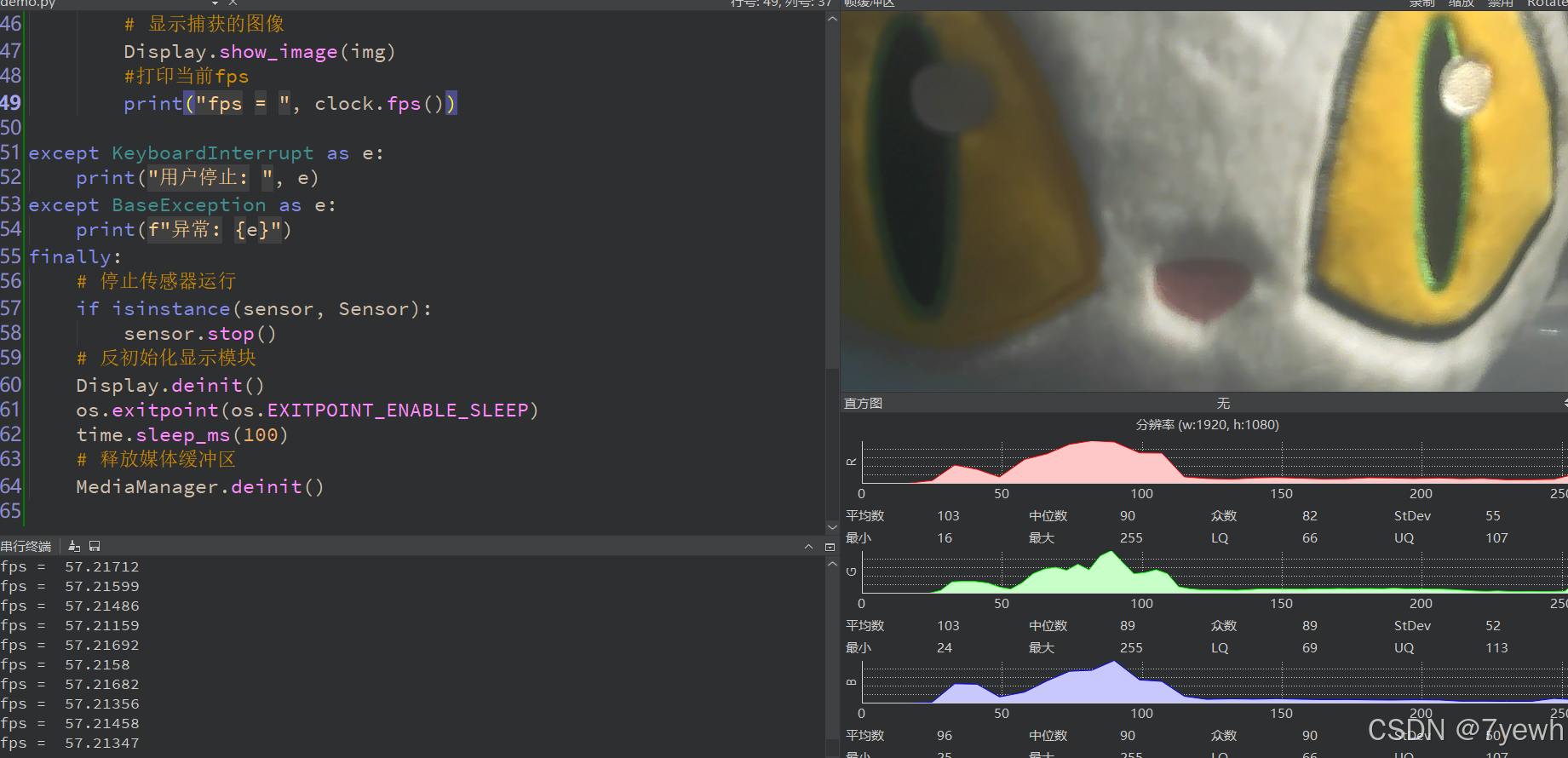

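The basic flow:

- Create a MediaPlayer to play the video.

- Initialize the OpenGL ES environment (EGL context and pbuffer surface), and bind a texture to a SurfaceTexture.

- Create a Surface from the SurfaceTexture and set it as the MediaPlayer's output, so decoded frames are drawn into the texture.

- In the SurfaceTexture's setOnFrameAvailableListener callback, wake the render thread to draw the frame.

- Read the pixels back from OpenGL with glReadPixels and fill a Bitmap with them.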
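glReadPixels returns rows starting from the bottom of the framebuffer, while a Bitmap stores rows top-down. The class above avoids a CPU-side flip by drawing the frame upside down (its texture coordinates are flipped). If the texture coordinates were changed to the usual orientation, the rows would have to be reversed after reading them back. The sketch below shows that step on a plain row-major int[] with one packed pixel per int; PixelRows.flipVertically is a hypothetical helper, not part of the original code.

```java
// Hypothetical helper: reverses the row order of a row-major pixel array in place,
// turning bottom-up rows (as returned by glReadPixels) into top-down rows.
final class PixelRows {
    private PixelRows() {}

    static void flipVertically(int[] pixels, int width, int height) {
        if (pixels.length != width * height) {
            throw new IllegalArgumentException("pixels.length must equal width * height");
        }
        int[] tmp = new int[width];
        for (int top = 0, bottom = height - 1; top < bottom; top++, bottom--) {
            int topOffset = top * width;
            int bottomOffset = bottom * width;
            // Swap row `top` with row `bottom` through the temporary row
            System.arraycopy(pixels, topOffset, tmp, 0, width);
            System.arraycopy(pixels, bottomOffset, pixels, topOffset, width);
            System.arraycopy(tmp, 0, pixels, bottomOffset, width);
        }
    }
}
```

On a device the array could come from the heap IntBuffer filled by glReadPixels (via array()), and the flipped pixels could then be copied with bitmap.copyPixelsFromBuffer(IntBuffer.wrap(pixels)). Flipping on the GPU, as the class does, saves this extra pass.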

Usage

Bitmap[] bms = VideoFrameExtractorGL.extractBitmaps(path, 128, 72, 0.5f, bmCount);
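extractBitmaps stretches every frame to exactly the requested size (128 x 72 here, a 16:9 shape), and the int[] overload expects explicit timestamps in milliseconds. The two helpers below, both hypothetical and not part of the original class, sketch how those arguments could be prepared: one spreads N timestamps over the duration, taking the midpoint of each equal segment so no frame lands exactly on 0 ms or the end, and the other fits the video's aspect ratio into a bounding box using integer arithmetic. On a device, the duration and video size would have to come from somewhere else first, for example MediaMetadataRetriever.

```java
// Hypothetical helpers for choosing extractBitmaps() arguments.
final class ThumbnailParams {
    private ThumbnailParams() {}

    // `count` timestamps (ms), each at the midpoint of one of `count` equal segments.
    static int[] evenlySpacedMs(long durationMs, int count) {
        if (count <= 0 || durationMs <= 0) {
            return new int[0];
        }
        int[] result = new int[count];
        for (int i = 0; i < count; i++) {
            // (2i + 1) / (2 * count) of the duration is the midpoint of segment i
            result[i] = (int) (durationMs * (2L * i + 1) / (2L * count));
        }
        return result;
    }

    // Largest {width, height} inside maxW x maxH that keeps the videoW:videoH ratio.
    static int[] fitInside(int videoW, int videoH, int maxW, int maxH) {
        if (videoW <= 0 || videoH <= 0) {
            return new int[]{maxW, maxH}; // unknown size: fall back to the box itself
        }
        // Compare videoW/videoH with maxW/maxH by cross-multiplying (no floating point)
        if ((long) videoW * maxH >= (long) videoH * maxW) {
            // Relatively wider than the box: width is the limit
            int h = (int) Math.max(1, (long) maxW * videoH / videoW);
            return new int[]{maxW, h};
        }
        // Relatively taller than the box: height is the limit
        int w = (int) Math.max(1, (long) maxH * videoW / videoH);
        return new int[]{w, maxH};
    }
}
```

For example, a 1920 x 1080 video fitted into 128 x 128 gives 128 x 72, the size used in the call above, while a portrait 1080 x 1920 video fitted into 128 x 72 gives 40 x 72 instead of being squashed.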

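Notes:

- Mind the OpenGL ES version: 1.x does not support offscreen rendering, and 2.x requires shaders to draw the image. The shader language version must also match the context: a context created with EGL_CONTEXT_CLIENT_VERSION 2 is only guaranteed to accept GLSL ES 1.00 shaders, not "#version 300 es" ones.

- Creating the OpenGL environment and rendering must happen on the same thread, because an EGL context can be current on only one thread at a time.

- Do not call extract() or extractBitmaps() on the main thread. The MediaPlayer is created on a thread without a Looper, so its callbacks (onPrepared, onSeekComplete) are posted to the main thread; blocking the main thread while waiting for them never returns.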
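Both notes come down to thread ownership: the EGL context, updateTexImage() and glReadPixels() belong to one render thread, and callers only hand it requests and wait for results. The plain-Java sketch below models that hand-off between a waiting caller (the role of extract()) and a delivering render thread (the role of drawFrameLoop()). FrameHandOff and its methods are hypothetical. Unlike a single extractLock.wait(), it waits in a loop on a delivery counter, which tolerates spurious wake-ups, and it uses a timeout so a player that never becomes ready yields null instead of a hang.

```java
// Hypothetical model of the extract() / drawFrameLoop() hand-off.
final class FrameHandOff<T> {
    private final Object lock = new Object();
    private long delivered = 0; // frames delivered so far
    private T lastFrame;

    // Render-thread side: publish a frame and wake any waiting caller.
    void deliver(T frame) {
        synchronized (lock) {
            lastFrame = frame;
            delivered++;
            lock.notifyAll();
        }
    }

    // Caller side: run `request` (e.g. a seek) and wait for the next delivered frame.
    // Returns null on timeout or interruption.
    T requestAndAwait(Runnable request, long timeoutMs) {
        synchronized (lock) {
            long before = delivered;
            request.run();
            long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
            // Loop on the counter, not on a single wait(), so spurious wake-ups are harmless
            while (delivered == before) {
                long remainingMs = (deadline - System.nanoTime()) / 1_000_000L;
                if (remainingMs <= 0) {
                    return null; // timed out
                }
                try {
                    lock.wait(remainingMs);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // keep the interrupt status
                    return null;
                }
            }
            return lastFrame;
        }
    }
}
```

Here a single-thread executor can stand in for the render thread: the request submits a task to it that calls deliver(), and the caller returns once that task has run.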

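References

- Getting thumbnails of local Android videos in Kotlin (Kotlin拿Android本地视频缩略图)

- Getting video and image thumbnails on Android (Android视频图片缩略图的获取)

- An optimized approach to getting Android video thumbnails (per-frame data) (Android 获取视频缩略图(获取视频每帧数据)的优化方案)

- 37.4 OpenGL offscreen rendering environment setup (37.4 OpenGL离屏渲染环境配置)

- ExtractMpegFramesTest.java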
