This project is for learning purposes only.

1 Scrapy code

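The crawl logic is simple: page through the listing pages by URL, collect the detail-page links on each listing page, scrape the content of each detail page, and finally write the data to an Excel file.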

In testing, the spider collected 11,299 Chinese medicinal material records in total.
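The pagination and link-resolution steps can be tried without running a crawl. The sketch below uses only the standard library: it rebuilds the 26 listing URLs from the pattern used in the spider and resolves a link against a listing page, which is roughly what `response.urljoin` does in Scrapy. The helper names `listing_urls` and `absolute_detail_url` and the sample `href` value are illustrative assumptions, not part of the original project.

```python
from urllib.parse import urljoin

# Base of the listing pages, taken from start_urls in the spider.
BASE = "https://www.zysj.com.cn/zhongyaocai/"


def listing_urls(first_page=1, last_page=26):
    """Build the paginated listing URLs: index__1.html ... index__26.html."""
    return [f"{BASE}index__{i}.html" for i in range(first_page, last_page + 1)]


def absolute_detail_url(listing_url, href):
    """Resolve a (possibly relative) href against the page it was found on."""
    return urljoin(listing_url, href)


urls = listing_urls()
print(len(urls), urls[0], urls[-1])

# Hypothetical href, for illustration only; real values come from the listing page.
print(absolute_detail_url(urls[0], "/zhongyaocai/example/index.html"))
```

Because the page range is hard-coded, the spider needs updating if the site adds listing pages; following a "next page" link would avoid that, assuming the site provides one.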

import pandas as pd
import scrapy


class ZhongyaoSpider(scrapy.Spider):
    name = "zhongyao"
    # Listing pages 1 through 26
    start_urls = [f"https://www.zysj.com.cn/zhongyaocai/index__{i}.html" for i in range(1, 27)]

    def __init__(self, *args, **kwargs):
        # Let scrapy.Spider finish its own setup before adding state
        super().__init__(*args, **kwargs)
        self.data = []

    def parse(self, response):
        for li in response.css('div#list-content ul li'):
            a_tag = li.css('a')
            title = a_tag.css('::attr(title)').get()
            href = a_tag.css('::attr(href)').get()
            if title and href:
                # Build the absolute detail-page URL
                detail_url = response.urljoin(href)
                yield scrapy.Request(detail_url, callback=self.parse_detail, meta={'title': title})

    # Detail-page parsing; each field keeps only the first matching text node
    def parse_detail(self, response):
        title = response.meta['title']
        pinyin = response.css('div.item.pinyin_name_phonetic div.item-content::text').get(default='').strip()
        alias = response.css('div.item.alias div.item-content p::text').get(default='').strip()
        english_name = response.css('div.item.english_name div.item-content::text').get(default='').strip()
        # NOTE: this reuses the alias selector, probably a copy-paste slip;
        # check the page for the class that actually holds the source.
        source = response.css('div.item.alias div.item-content p::text').get(default='').strip()
        # Nature and flavor
        flavor = response.css('div.item.flavor div.item-content p::text').get(default='').strip()
        # NOTE: this reuses the flavor selector, probably a copy-paste slip;
        # check the page for the class that actually holds the indications.
        functional_indications = response.css('div.item.flavor div.item-content p::text').get(default='').strip()
        usage = response.css('div.item.usage div.item-content p::text').get(default='').strip()
        excerpt = response.css('div.item.excerpt div.item-content::text').get(default='').strip()
        # Habitat
        habitat = response.css('div.item.habitat div.item-content p::text').get(default='').strip()
        # Provenance
        provenance = response.css('div.item.provenance div.item-content p::text').get(default='').strip()
        # Physical characteristics
        shape_properties = response.css('div.item.shape_properties div.item-content p::text').get(default='').strip()
        # Channel tropism
        attribution = response.css('div.item.attribution div.item-content p::text').get(default='').strip()
        # Original plant morphology
        prototype = response.css('div.item.prototype div.item-content p::text').get(default='').strip()
        # Commentary by noted physicians
        discuss = response.css('div.item.discuss div.item-content p::text').get(default='').strip()
        # Chemical composition
        chemical_composition = response.css('div.item.chemical_composition div.item-content p::text').get(default='').strip()

        item = {
            'title': title,
            'pinyin': pinyin,
            'alias': alias,
            'source': source,
            'english_name': english_name,
            'habitat': habitat,
            'flavor': flavor,
            'functional_indications': functional_indications,
            'usage': usage,
            'excerpt': excerpt,
            'provenance': provenance,
            'shape_properties': shape_properties,
            'attribution': attribution,
            'prototype': prototype,
            'discuss': discuss,
            'chemical_composition': chemical_composition,
        }
        self.data.append(item)
        yield item

    def closed(self, reason):
        # When the spider closes, save the collected data to an Excel file
        # (to_excel needs an Excel writer such as openpyxl to be installed)
        df = pd.DataFrame(self.data)
        df.to_excel('zhongyao_data.xlsx', index=False)
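Since almost every field in `parse_detail` is extracted with the same `response.css(...).get(default='').strip()` call, the repetition could be replaced by a table of field names and selectors. The following is a minimal sketch of that idea, not the project's actual code: `FIELD_SELECTORS` and `extract_fields` are hypothetical names, and only four of the selectors from the spider are listed to keep it short.

```python
# Field name -> CSS selector, copied from a few of the fields in parse_detail.
FIELD_SELECTORS = {
    "pinyin": "div.item.pinyin_name_phonetic div.item-content::text",
    "alias": "div.item.alias div.item-content p::text",
    "flavor": "div.item.flavor div.item-content p::text",
    "habitat": "div.item.habitat div.item-content p::text",
}


def extract_fields(response, selectors=FIELD_SELECTORS):
    """Return {field: first matching text, stripped, or '' when nothing matches}.

    `response` is expected to behave like a Scrapy response, i.e. to offer
    response.css(selector).get(default=...).
    """
    return {
        field: response.css(css).get(default="").strip()
        for field, css in selectors.items()
    }
```

Inside the spider, the item could then be built as `{'title': response.meta['title'], **extract_fields(response)}`. A side benefit of keeping each selector in one place is that a duplicated selector is easier to spot.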

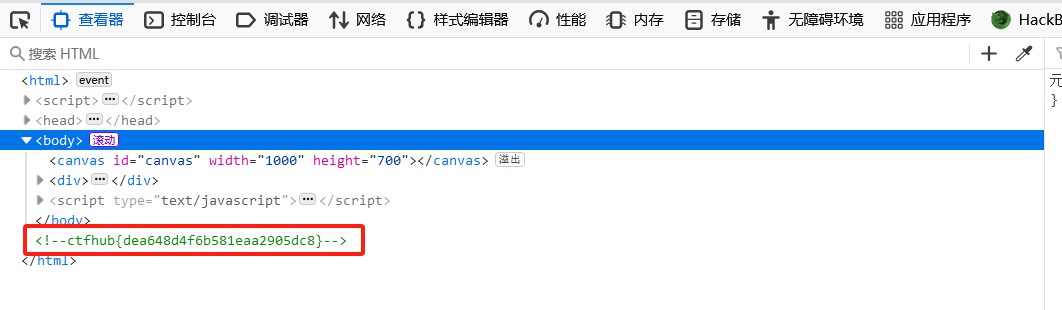

2 Crawl screenshot

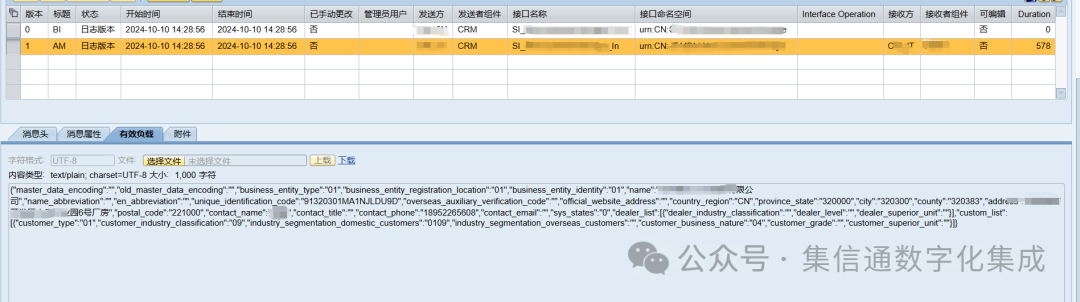

3 Screenshot of the crawled data