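This article walks through building a RAG application over an Angular book in PDF format, using LangChain, NestJS, and Gemma 2. HTMX and the Handlebars template engine then render the responses as a list. The application uses LangChain and its built-in PDF loader to load the PDF book and split the document into small chunks. LangChain then uses the Gemini text embedding model to represent the chunks as vectors and persists the vectors in a vector database. The vector store retriever supplies the large language model (LLM) with context, so the model can look up information in the book's content when it generates a response.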

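Set up environment variables

Go to https://aistudio.google.com/app/apikey, sign in, and create a new API key. Use it as the value of GEMINI_API_KEY.

Go to Groq Cloud: https://console.groq.com/, sign up for an account, and create a new API key. Use it as the value of GROQ_API_KEY.

Go to Huggingface: https://huggingface.co/join, sign up for an account, and create a new access token. Use it as the value of HUGGINGFACE_API_KEY.

Go to Qdrant: https://cloud.qdrant.io/, sign up for an account, and create a Qdrant cluster. Use its URL as the value of QDRANT_URL and its API key as the value of QDRANT_API_KEY.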
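
A missing or blank key usually only shows up later as an authentication error from one of the services, so it can help to check the variables at startup. The following is a minimal sketch, not part of the original application: findMissingEnvKeys is a hypothetical helper, and the environment is passed in as a plain object so the function does not depend on process.env. Depending on which embedding model and vector store you select, not every key may be needed.

```typescript
// Names of the environment variables listed in the steps above.
const REQUIRED_ENV_KEYS: string[] = [
  'GEMINI_API_KEY',
  'GROQ_API_KEY',
  'HUGGINGFACE_API_KEY',
  'QDRANT_URL',
  'QDRANT_API_KEY',
];

// Hypothetical helper: returns the keys that are undefined or blank.
// The environment is an explicit argument, so this works with any key/value map.
function findMissingEnvKeys(
  env: Record<string, string | undefined>,
  requiredKeys: string[] = REQUIRED_ENV_KEYS,
): string[] {
  return requiredKeys.filter((key) => {
    const value = env[key];
    return value === undefined || value.trim() === '';
  });
}

// Example with placeholder values (not real credentials).
const missingKeys = findMissingEnvKeys({
  GEMINI_API_KEY: 'placeholder-gemini-key',
  GROQ_API_KEY: 'placeholder-groq-key',
  QDRANT_URL: 'http://localhost:6333',
  QDRANT_API_KEY: '   ',
});
// missingKeys is ['HUGGINGFACE_API_KEY', 'QDRANT_API_KEY']
```

In a NestJS application the same check could run against process.env before the application is bootstrapped.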

Install dependencies

Define the application configuration

Create a src/configs folder and add a configuration.ts file to it.

export default () => ({

port: parseInt(process.env.PORT, 10) || 3000,

groq: {

apiKey: process.env.GROQ_API_KEY || '',

model: process.env.GROQ_MODEL || 'gemma2-9b-it',

},

gemini: {

apiKey: process.env.GEMINI_API_KEY || '',

embeddingModel: process.env.GEMINI_TEXT_EMBEDDING_MODEL || 'text-embedding-004',

},

huggingface: {

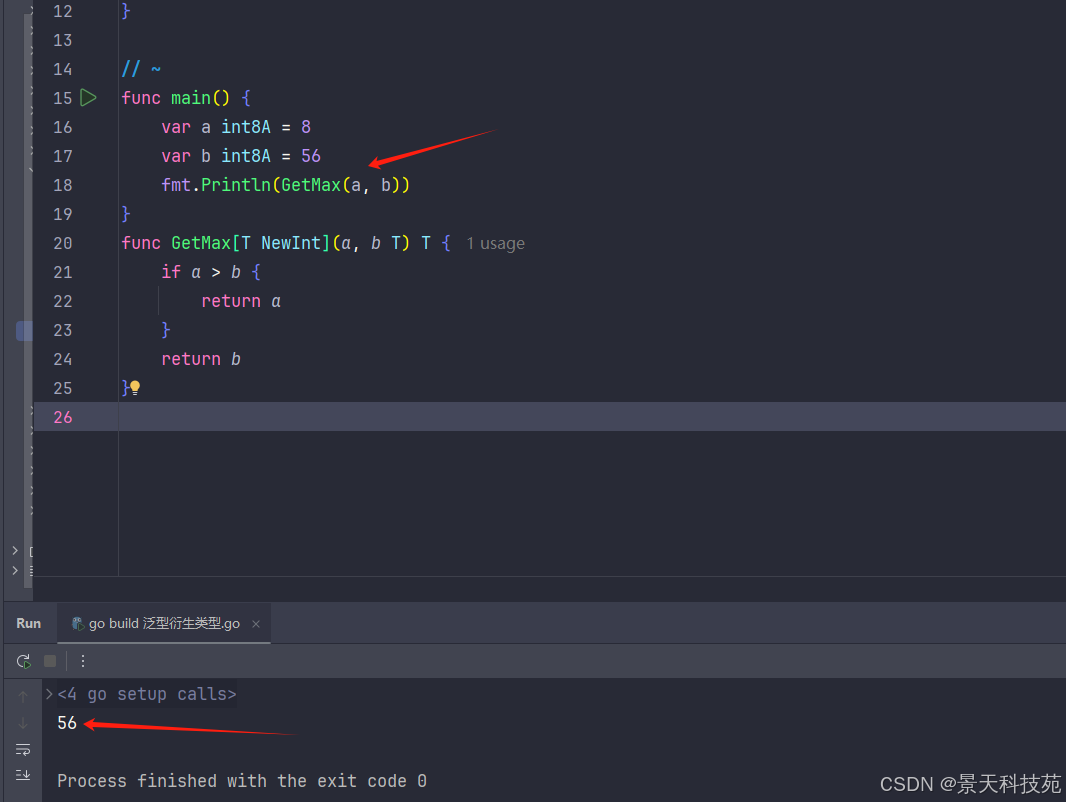

apiKey: process.env.HUGGINGFACE_API_KEY || '',

embeddingModel: process.env.HUGGINGFACE_EMBEDDING_MODEL || 'BAAI/bge-small-en-v1.5',

},

qdrant: {

url: process.env.QDRANT_URL || 'http://localhost:6333',

apiKey: process.env.QDRANT_API_KEY || '',

},

});

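Create the Groq module

Generate the Groq module, controller, and service.

Add a chat model

Define the Groq configuration type in the module at application/types/groq-config.type.ts. The configuration service converts the configuration values into a custom object.

Add a custom provider that supplies an instance of GroqChatModel. Create a groq.constant.ts file under the application/constants folder.

Test the Groq chat model in the controller

GroqService has one method that runs a query and asks the model to generate a text response.

Export the chat model from the module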

import { Module } from '@nestjs/common';

import { GroqChatModelProvider } from './application/providers/groq-chat-model.provider';

import { GroqService } from './application/groq.service';

import { GroqController } from './presenters/http/groq.controller';

@Module({

providers: [GroqChatModelProvider, GroqService],

controllers: [GroqController],

exports: [GroqChatModelProvider],

})

export class GroqModule {}

Create the vector store module

nest g mo vectorStore

nest g s application/vectorStore --flat

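Add configuration types

Define the configuration types under the application/types folder.

One type describes the embedding model configuration. The application supports both the Gemini text embedding model and the Huggingface inference embedding model.

The application supports an in-memory vector store and a Qdrant vector store, so it also has a Qdrant configuration.

This configuration holds the split documents, the vector database type, and the embedding model.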

export type VectorDatabasesType = 'MEMORY' | 'QDRANT';
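
The type definitions themselves are not listed in this article, so the following is only a sketch of what they might look like, inferred from how the services later destructure them (apiKey and embeddingModel, url and apiKey, docs and embeddings). DocumentLike and EmbeddingsLike are stand-ins so the snippet compiles on its own; the real application would presumably use the Document and Embeddings types from @langchain/core. The isVectorDatabasesType guard is a hypothetical addition.

```typescript
// Stand-ins for the LangChain Document and Embeddings types (assumption).
interface DocumentLike {
  pageContent: string;
  metadata: Record<string, unknown>;
}

interface EmbeddingsLike {
  embedQuery(text: string): Promise<number[]>;
  embedDocuments(texts: string[]): Promise<number[][]>;
}

// Shape read from the 'gemini' and 'huggingface' configuration sections.
type EmbeddingModelConfig = { apiKey: string; embeddingModel: string };

// Shape read from the 'qdrant' configuration section.
type QdrantDatabaseConfig = { url: string; apiKey: string };

// The two supported vector stores.
type VectorDatabasesType = 'MEMORY' | 'QDRANT';

// Argument passed to the init method of a vector database service.
// Only docs and embeddings are visible in the code shown in this article.
type DatabaseConfig = { docs: DocumentLike[]; embeddings: EmbeddingsLike };

// Hypothetical guard for validating a value that arrives as a plain string,
// for example from an environment variable.
function isVectorDatabasesType(value: string): value is VectorDatabasesType {
  return value === 'MEMORY' || value === 'QDRANT';
}
```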

Create a configurable embedding model

export type EmbeddingModels = 'GEMINI_AI' | 'HUGGINGFACE_INFERENCE';

import { TaskType } from '@google/generative-ai';

import { HuggingFaceInferenceEmbeddings } from '@langchain/community/embeddings/hf';

import { Embeddings } from '@langchain/core/embeddings';

import { GoogleGenerativeAIEmbeddings } from '@langchain/google-genai';

import { InternalServerErrorException } from '@nestjs/common';

import { ConfigService } from '@nestjs/config';

import { EmbeddingModelConfig } from '../types/embedding-model-config.type';

import { EmbeddingModels } from '../types/embedding-models.type';

function createGeminiTextEmbeddingModel(configService: ConfigService) {

const { apiKey, embeddingModel: model } = configService.get<EmbeddingModelConfig>('gemini');

return new GoogleGenerativeAIEmbeddings({

apiKey,

model,

taskType: TaskType.RETRIEVAL_DOCUMENT,

title: 'Angular Book',

});

}

function createHuggingfaceInferenceEmbeddingModel(configService: ConfigService) {

const { apiKey, embeddingModel: model } = configService.get<EmbeddingModelConfig>('huggingface');

return new HuggingFaceInferenceEmbeddings({

apiKey,

model,

});

}

export function createTextEmbeddingModel(configService: ConfigService, embeddingModel: EmbeddingModels): Embeddings {

if (embeddingModel === 'GEMINI_AI') {

return createGeminiTextEmbeddingModel(configService);

} else if (embeddingModel === 'HUGGINGFACE_INFERENCE') {

return createHuggingfaceInferenceEmbeddingModel(configService);

} else {

throw new InternalServerErrorException('Invalid type of embedding model.');

}

}

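The createGeminiTextEmbeddingModel function instantiates and returns the Gemini text embedding model. Similarly, createHuggingfaceInferenceEmbeddingModel instantiates and returns the Huggingface inference embedding model. Finally, createTextEmbeddingModel is a factory function that creates the embedding model selected by the embedding model flag.

Create a configurable vector store retriever

Define the vector database service interface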

// application/interfaces/vector-database.interface.ts

import { VectorStore, VectorStoreRetriever } from '@langchain/core/vectorstores';

import { DatabaseConfig } from '../types/vector-store-config.type';

export interface VectorDatabase {

init(config: DatabaseConfig): Promise<void>;

asRetriever(): VectorStoreRetriever<VectorStore>;

}

import { VectorStore, VectorStoreRetriever } from '@langchain/core/vectorstores';

import { Injectable, Logger } from '@nestjs/common';

import { MemoryVectorStore } from 'langchain/vectorstores/memory';

import { VectorDatabase } from '../interfaces/vector-database.interface';

import { DatabaseConfig } from '../types/vector-store-config.type';

@Injectable()

export class MemoryVectorDBService implements VectorDatabase {

private readonly logger = new Logger(MemoryVectorDBService.name);

private vectorStore: VectorStore;

async init({ docs, embeddings }: DatabaseConfig): Promise<void> {

this.logger.log('MemoryVectorStoreService init called');

this.vectorStore = await MemoryVectorStore.fromDocuments(docs, embeddings);

}

asRetriever(): VectorStoreRetriever<VectorStore> {

return this.vectorStore.asRetriever();

}

}

MemoryVectorDBService implements the interface, stores the vectors in an in-memory store, and returns a vector store retriever.
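
To make the idea of an in-memory vector store and its retriever more concrete, here is a small sketch that keeps precomputed vectors in an array and ranks them by cosine similarity, the same distance the Qdrant service in this article configures. It is not how MemoryVectorStore is implemented; StoredVector, cosineSimilarity, and retrieveTopK are hypothetical names, and the three-dimensional vectors are toy values rather than real embeddings.

```typescript
// One stored chunk: its text and its (precomputed) embedding vector.
type StoredVector = { text: string; vector: number[] };

// Cosine similarity of two vectors of equal length; 0 if either has zero norm.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) {
    return 0;
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the texts of the k stored vectors most similar to the query vector.
function retrieveTopK(store: StoredVector[], queryVector: number[], k: number): string[] {
  return store
    .map((entry) => ({ text: entry.text, score: cosineSimilarity(entry.vector, queryVector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.text);
}

// Toy data: the first and third vectors point in nearly the same direction.
const toyStore: StoredVector[] = [
  { text: 'Signals', vector: [1, 0, 0] },
  { text: 'Standalone components', vector: [0, 1, 0] },
  { text: 'Signal inputs', vector: [0.9, 0.1, 0] },
];

const topTwo = retrieveTopK(toyStore, [1, 0, 0], 2);
// topTwo is ['Signals', 'Signal inputs']
```

A retriever does the same thing conceptually: it embeds the question, finds the nearest stored chunks, and hands their text to the LLM as context.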

import { VectorStore, VectorStoreRetriever } from '@langchain/core/vectorstores';

import { QdrantVectorStore } from '@langchain/qdrant';

import { Injectable, InternalServerErrorException, Logger } from '@nestjs/common';

import { ConfigService } from '@nestjs/config';

import { QdrantClient } from '@qdrant/js-client-rest';

import { VectorDatabase } from '../interfaces/vector-database.interface';

import { QdrantDatabaseConfig } from '../types/qdrant-database-config.type';

import { DatabaseConfig } from '../types/vector-store-config.type';

const COLLECTION_NAME = 'angular_evolution_collection';

@Injectable()

export class QdrantVectorDBService implements VectorDatabase {

private readonly logger = new Logger(QdrantVectorDBService.name);

private vectorStore: VectorStore;

constructor(private configService: ConfigService) {}

async init({ docs, embeddings }: DatabaseConfig): Promise<void> {

this.logger.log('QdrantVectorStoreService init called');

const { url, apiKey } = this.configService.get<QdrantDatabaseConfig>('qdrant');

const client = new QdrantClient({ url, apiKey });

const { exists: isCollectionExists } = await client.collectionExists(COLLECTION_NAME);

if (isCollectionExists) {

const isDeleted = await client.deleteCollection(COLLECTION_NAME);

if (!isDeleted) {

throw new InternalServerErrorException(`Unable to delete ${COLLECTION_NAME}`);

}

this.logger.log(`QdrantVectorStoreService deletes ${COLLECTION_NAME}. Result -> ${isDeleted}`);

}

const size = (await embeddings.embedQuery('test')).length;

const isSuccess = await client.createCollection(COLLECTION_NAME, {

vectors: { size, distance: 'Cosine' },

});

if (!isSuccess) {

throw new InternalServerErrorException(`Unable to create collection ${COLLECTION_NAME}`);

}

this.vectorStore = await QdrantVectorStore.fromDocuments(docs, embeddings, {

client,

collectionName: COLLECTION_NAME,

});

}

asRetriever(): VectorStoreRetriever<VectorStore> {

return this.vectorStore.asRetriever();

}

}

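QdrantVectorDBService implements the interface, persists the vectors in the Qdrant vector database, and returns a vector store retriever. Each init call drops the existing collection, if any, and recreates it with a vector size that matches the embedding model.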

// application/vector-databases/create-vector-database.t

import { InternalServerErrorException } from '@nestjs/common';

import { ConfigService } from '@nestjs/config';

import { VectorDatabasesType } from '../types/vector-databases.type';

import { MemoryVectorDBService } from './memory-vector-db.service';

import { QdrantVectorDBService } from './qdrant-vector-db.service';

export function createVectorDatabase(type: VectorDatabasesType, configService: ConfigService) {

if (type === 'MEMORY') {

return new MemoryVectorDBService();

} else if (type === 'QDRANT') {

return new QdrantVectorDBService(configService);

}

throw new InternalServerErrorException(`Invalid vector store type: ${type}`);

}

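The createVectorDatabase function instantiates the database service that matches the database type.

Create document chunks from the Angular PDF book

Copy the book to the assets folder

The loadPdf function uses the PDF loader to load the PDF file and splits the document into small chunks.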
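
The loadPdf function is not listed in this article, and in the real application the splitting is presumably delegated to a LangChain text splitter. The sketch below only illustrates the underlying idea of fixed-size chunks with overlap on a plain string. splitIntoChunks and the chunkSize and chunkOverlap values are assumptions for illustration.

```typescript
// Hypothetical stand-in for a text splitter: fixed-size windows that overlap,
// so a sentence cut at a boundary still appears intact in one of the chunks.
function splitIntoChunks(text: string, chunkSize: number, chunkOverlap: number): string[] {
  if (chunkSize <= 0 || chunkOverlap < 0 || chunkOverlap >= chunkSize) {
    throw new Error('chunkSize must be positive and larger than chunkOverlap');
  }
  const chunks: string[] = [];
  const step = chunkSize - chunkOverlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once the current window already reaches the end of the text.
    if (start + chunkSize >= text.length) {
      break;
    }
  }
  return chunks;
}

const sampleChunks = splitIntoChunks('abcdefghij', 4, 1);
// sampleChunks is ['abcd', 'defg', 'ghij']
```

Smaller chunks give the retriever more precise matches, while the overlap reduces the chance that an answer is split across two chunks.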

import { Embeddings } from '@langchain/core/embeddings';

import { VectorStore, VectorStoreRetriever } from '@langchain/core/vectorstores';

import { Inject, Injectable, Logger } from '@nestjs/common';

import path from 'path';

import { appConfig } from '~configs/root-path.config';

import { ANGULAR_EVOLUTION_BOOK, TEXT_EMBEDDING_MODEL, VECTOR_DATABASE } from './constants/rag.constant';

import { VectorDatabase } from './interfaces/vector-database.interface';

import { loadPdf } from './loaders/pdf-loader';

@Injectable()

export class VectorStoreService {

private readonly logger = new Logger(VectorStoreService.name);

constructor(

@Inject(TEXT_EMBEDDING_MODEL) embeddings: Embeddings,

@Inject(VECTOR_DATABASE) private dbService: VectorDatabase,

) {

this.createDatabase(embeddings, this.dbService);

}

private async createDatabase(embeddings: Embeddings, dbService: VectorDatabase) {

const docs = await this.loadDocuments();

await dbService.init({ docs, embeddings });

}

private async loadDocuments() {

const bookFullPath = path.join(appConfig.rootPath, ANGULAR_EVOLUTION_BOOK);

const docs = await loadPdf(bookFullPath);

this.logger.log(`number of docs -> ${docs.length}`);

return docs;

}

asRetriever(): VectorStoreRetriever<VectorStore> {

this.logger.log(`return vector retriever`);

return this.dbService.asRetriever();

}

}

VectorStoreService stores the PDF book in the vector database and returns a vector store retriever.

Make the module a dynamic module

import { DynamicModule, Module } from '@nestjs/common';

import { ConfigService } from '@nestjs/config';

import { TEXT_EMBEDDING_MODEL, VECTOR_DATABASE, VECTOR_STORE_TYPE } from './application/constants/rag.constant';

import { createTextEmbeddingModel } from './application/embeddings/create-embedding-model';

import { EmbeddingModels } from './application/types/embedding-models.type';

import { VectorDatabasesType } from './application/types/vector-databases.type';

import { createVectorDatabase, MemoryVectorDBService, QdrantVectorDBService } from './application/vector-databases';

import { VectorStoreTestService } from './application/vector-store-test.service';

import { VectorStoreService } from './application/vector-store.service';

import { VectorStoreController } from './presenters/http/vector-store.controller';

@Module({

providers: [VectorStoreService, VectorStoreTestService, MemoryVectorDBService, QdrantVectorDBService],

controllers: [VectorStoreController],

exports: [VectorStoreService],

})

export class VectorStoreModule {

static register(embeddingModel: EmbeddingModels, vectorStoreType: VectorDatabasesType): DynamicModule {

return {

module: VectorStoreModule,

providers: [

{

provide: TEXT_EMBEDDING_MODEL,

useFactory: (configService: ConfigService) => createTextEmbeddingModel(configService, embeddingModel),

inject: [ConfigService],

},

{

provide: VECTOR_STORE_TYPE,

useValue: vectorStoreType,

},

{

provide: VECTOR_DATABASE,

useFactory: (type: VectorDatabasesType, configService: ConfigService) =>

createVectorDatabase(type, configService),

inject: [VECTOR_STORE_TYPE, ConfigService],

},

],

};

}

}

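VectorStoreModule is a dynamic module. Both the embedding model and the vector database are configurable. The static register method creates the text embedding model and the vector database according to its arguments.

Create the RAG module

The RAG module is responsible for creating the LangChain chain that asks the model to generate a response.

Create the RAG service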

// application/constants/prompts.constant.ts

import { ChatPromptTemplate, MessagesPlaceholder } from '@langchain/core/prompts';

const qaSystemPrompt = `You are an assistant for question-answering tasks.

Use the following pieces of retrieved context to answer the question.

If you don't know the answer, just say that you don't know.

{context}`;

export const qaPrompt = ChatPromptTemplate.fromMessages([

['system', qaSystemPrompt],

new MessagesPlaceholder('chat_history'),

['human', '{question}'],

]);

const contextualizeQSystemPrompt = `Given a chat history and the latest user question

which might reference context in the chat history, formulate a standalone question

which can be understood without the chat history. Do NOT answer the question,

just reformulate it if needed and otherwise return it as is.`;

export const contextualizeQPrompt = ChatPromptTemplate.fromMessages([

['system', contextualizeQSystemPrompt],

new MessagesPlaceholder('chat_history'),

['human', '{question}'],

]);

This constants file stores the prompts used by the LangChain chains.

import { StringOutputParser } from '@langchain/core/output_parsers';

import { ChatGroq } from '@langchain/groq';

import { contextualizeQPrompt } from '../constants/prompts.constant';

export function createContextualizedQuestion(llm: ChatGroq) {

const contextualizeQChain = contextualizeQPrompt.pipe(llm).pipe(new StringOutputParser());

return (input: Record<string, unknown>) => {

if ('chat_history' in input) {

return contextualizeQChain;

}

return input.question;

};

}

This function creates a chain that reformulates the latest question so that it can be understood without the chat history.

import { BaseMessage } from '@langchain/core/messages';

import { Runnable, RunnablePassthrough, RunnableSequence } from '@langchain/core/runnables';

import { ChatGroq } from '@langchain/groq';

import { Inject, Injectable } from '@nestjs/common';

import { formatDocumentsAsString } from 'langchain/util/document';

import { GROQ_CHAT_MODEL } from '~groq/application/constants/groq.constant';

import { VectorStoreService } from '~vector-store/application/vector-store.service';

import { createContextualizedQuestion } from './chain-with-history/create-contextual-chain';

import { qaPrompt } from './constants/prompts.constant';

import { ConversationContent } from './types/conversation-content.type';

@Injectable()

export class RagService {

private chat_history: BaseMessage[] = [];

constructor(

@Inject(GROQ_CHAT_MODEL) private model: ChatGroq,

private vectorStoreService: VectorStoreService,

) {}

async ask(question: string): Promise<ConversationContent[]> {

const contextualizedQuestion = createContextualizedQuestion(this.model);

const retriever = this.vectorStoreService.asRetriever();

try {

const ragChain = RunnableSequence.from([

RunnablePassthrough.assign({

context: (input: Record<string, unknown>) => {

if ('chat_history' in input) {

const chain = contextualizedQuestion(input);

return (chain as Runnable).pipe(retriever).pipe(formatDocumentsAsString);

}

return '';

},

}),

qaPrompt,

this.model,

]);

const aiMessage = await ragChain.invoke({ question, chat_history: this.chat_history });

this.chat_history = this.chat_history.concat(aiMessage);

if (this.chat_history.length > 10) {

this.chat_history.shift();

}

return [

{

role: 'Human',

content: question,

},

{

role: 'Assistant',

content: (aiMessage.content as string) || '',

},

];

} catch (ex) {

console.error(ex);

throw ex;

}

}

}

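RagService is straightforward. The ask method submits the input to the chain and receives the response. It extracts the content from the response, appends the AI message to an in-memory chat history that is capped at 10 entries, and returns the conversation to the template engine for rendering.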
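
The history handling in the ask method (append, then drop the oldest entry once there are more than 10) can be isolated into a small pure function. This is a sketch under assumptions: ChatTurn and appendWithLimit are hypothetical names, and plain objects stand in for LangChain's BaseMessage.

```typescript
// Plain stand-in for a chat message (assumption; the service uses BaseMessage).
type ChatTurn = { role: 'Human' | 'Assistant'; content: string };

// The same limit the service uses for its history.
const MAX_MESSAGES = 10;

// Returns a new array with the message appended, keeping only the newest
// `limit` entries. The input array is not modified.
function appendWithLimit(
  messages: ChatTurn[],
  next: ChatTurn,
  limit: number = MAX_MESSAGES,
): ChatTurn[] {
  const combined = messages.concat(next);
  return combined.length > limit ? combined.slice(combined.length - limit) : combined;
}

// Append 12 answers; only the newest 10 survive.
let turns: ChatTurn[] = [];
for (let i = 1; i <= 12; i++) {
  turns = appendWithLimit(turns, { role: 'Assistant', content: `answer ${i}` });
}
// turns.length is 10 and turns[0].content is 'answer 3'
```

Capping the history keeps the prompt sent to the model from growing without bound over a long conversation.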

Add the RAG controller

The RAG controller submits the query to the chain, gets the result, and sends HTML back to the template engine for rendering.
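
The controller code is not listed in this article. As a rough sketch of the rendering step only, the function below turns the conversation returned by RagService into HTML rows that could be appended to the chat list. The markup mirrors the Role and Result columns of the chat list used in this article, but toChatRows, escapeHtml, and the exact class names are assumptions, and the ConversationContent shape is inferred from the role and content fields the service returns.

```typescript
// Inferred from the objects returned by the ask method (assumption).
type ConversationContent = { role: string; content: string };

// Escapes characters that are significant in HTML, so model output
// cannot inject markup into the page.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Renders each conversation entry as one two-column row.
function toChatRows(conversation: ConversationContent[]): string {
  return conversation
    .map(
      (entry) =>
        `<div class="flex">` +
        `<span class="w-1/5 p-1 border border-solid border-[#464646]">${escapeHtml(entry.role)}</span>` +
        `<span class="w-4/5 p-1 border border-solid border-[#464646]">${escapeHtml(entry.content)}</span>` +
        `</div>`,
    )
    .join('');
}
```

A POST handler for /rag could pass the question to RagService, call a function like this on the result, and return the string as the response body for HTMX to append.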

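Import the modules into the RAG module

Import RagModule into AppModule

Modify the application controller to render the Handlebars template

HTMX and the Handlebars template engine

This is a simple interface for displaying the conversation.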

default.hbs

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<meta name="description" content="Angular tech book RAG powered by Gemma 2 LLM." />

<meta name="author" content="Connie Leung" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>{{{ title }}}</title>

<style>

*, *::before, *::after {

padding: 0;

margin: 0;

box-sizing: border-box;

}

</style>

<script src="https://cdn.tailwindcss.com?plugins=forms,typography"></script>

</head>

<body class="p-4 w-screen h-screen min-h-full">

<script src="https://unpkg.com/htmx.org@2.0.1" integrity="sha384-QWGpdj554B4ETpJJC9z+ZHJcA/i59TyjxEPXiiUgN2WmTyV5OEZWCD6gQhgkdpB/" crossorigin="anonymous"></script>

<div class="h-full grid grid-rows-[auto_1fr_40px] grid-cols-[1fr]">

{{> header }}

{{{ body }}}

{{> footer }}

</div>

</body>

</html>

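Above is the default layout with a header, a footer, and a body. The body ultimately displays the conversation between the AI and the human. The head element loads Tailwind to style the HTML elements, and a script tag at the top of the body element loads HTMX to interact with the server.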

<div>

<div class="mb-2 p-1 border border-solid border-[#464646] rounded-lg">

<p class="text-[1.25rem] mb-2 text-[#464646] underline">Architecture</p>

<ul>

<li class="text-[1rem]">Chat Model: Groq</li>

<li class="text-[1rem]">LLM: Gemma 2</li>

<li class="text-[1rem]">Embeddings: Gemini AI Embedding / HuggingFace Embedding</li>

<li class="text-[1rem]">Vector Store: Memory Vector Store / Qdrant Vector Store</li>

<li class="text-[1rem]">Retriever: Vector Store Retriever</li>

</ul>

</div>

<div id="chat-list" class="mb-4 h-[300px] overflow-y-auto overflow-x-auto">

<div class="flex text-[#464646] text-[1.25rem] italic underline">

<span class="w-1/5 p-1 border border-solid border-[#464646]">Role</span>

<span class="w-4/5 p-1 border border-solid border-[#464646]">Result</span>

</div>

</div>

<form id="rag-form" hx-post="/rag" hx-target="#chat-list" hx-swap="beforeend swap:1s">

<div>

<label>

<span class="text-[1rem] mr-1 w-1/5 mb-2 text-[#464646]">Question: </span>

<input type="text" name="query" class="mb-4 w-4/5 rounded-md p-2"

placeholder="Ask me something"

aria-placeholder="Placeholder to ask question to RAG"></input>

</label>

</div>

<button type="submit" class="bg-blue-500 hover:bg-blue-700 text-white p-2 text-[1rem] flex justify-center items-center rounded-lg">

<span class="mr-1">Send</span><img class="w-4 h-4 htmx-indicator" src="/images/spinner.gif">

</button>

</form>

</div>

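The user types a question in the text box and clicks the Send button. The form sends a POST request to /rag and appends the conversation to the list.

That completes the LangChain RAG application, which uses the Gemma 2 model to generate responses.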
