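1. Introduction

This article documents a YOLOv10 object detection improvement based on the Monte Carlo attention (MCAttn) module. MCAttn is used to strengthen the cross-scale feature extraction of the C2f module, so that the model passes on and fuses multi-scale features more effectively and pays more attention to small objects.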

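Contents

- 1. Introduction
- 2. The MCAttn module
- 2.1 Design motivation
- 2.2 How MCAttn works
- 2.2.1 Pooling based on random sampling
- 2.2.2 Computing the attention map
- 2.3 Characteristics
- 3. MCAttn implementation code
- 4. Improvement points
- 4.1 Improvement 1
- 4.2 Improvement 2 ⭐
- 5. Integration steps
- 5.1 Modify ultralytics/nn/modules/block.py
- 5.2 Modify ultralytics/nn/modules/__init__.py
- 5.3 Modify ultralytics/nn/tasks.py
- 6. YAML model files
- 6.1 Improved model, version 1
- 6.2 Improved model, version 2 ⭐
- 7. Successful run output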

2. The MCAttn module

Exploiting Scale-Variant Attention for Segmenting Small Medical Objects

2.1 设计出发点

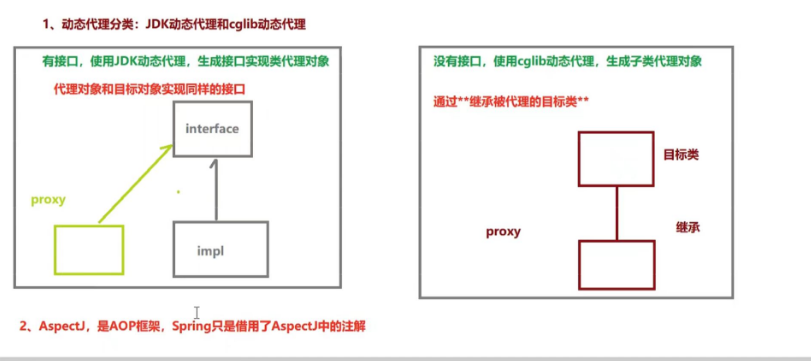

- 解决传统注意力机制的局限性:传统的深度学习注意力机制在处理医学图像时存在一些局限性。例如,一些方法如squeeze - excitation(SE)采用全局 - 平均池化来获取 1 × 1 1×1 1×1输出张量,这种方式虽然有助于校准通道间的相互依赖关系,但在利用跨尺度相关性方面能力有限。对于医学图像中的小对象分割,需要一种能够更好地捕捉不同尺度信息的注意力机制。

- 适应医学图像小对象分割需求:医学图像中的小对象具有独特的挑战,它们不仅尺寸小,而且形态和位置信息难以准确把握。传统的注意力机制产生的固定维度注意力图往往不足以分析医学图像,因为它们可能忽略了背景中的丰富上下文信息以及小对象自身的多尺度特征。因此,需要设计一种专门针对医学小对象分割的注意力模块,能够更好地适应小对象的特点,提高分割的准确性。

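2.2 How MCAttn works

2.2.1 Pooling based on random sampling

The MCAttn module uses a pooling operation based on random sampling to generate scale-agnostic attention maps. From pooled tensors at three scales ($3\times3$, $2\times2$, and $1\times1$), it randomly selects a single $1\times1$ attention map.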
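The pooled tensors described above can be illustrated with a minimal sketch, assuming PyTorch is available. The input shape and the variable names (`x`, `pools`, `pooled`) are hypothetical and chosen only for illustration; this is not the authors' code.

```python
import torch
from torch import nn

# Hypothetical feature map: batch of 2, 16 channels, 20x20 spatial size
x = torch.randn(2, 16, 20, 20)

# One adaptive average pool per output scale (1x1, 2x2, 3x3)
pools = {k: nn.AdaptiveAvgPool2d(k) for k in (1, 2, 3)}
pooled = {k: pool(x) for k, pool in pools.items()}

# Each pooled tensor keeps the batch and channel dimensions
for k, t in pooled.items():
    print(k, tuple(t.shape))
```

The $1\times1$ pool is ordinary global average pooling, as used by SE; the $2\times2$ and $3\times3$ pools expose coarser regional statistics from which a single cell can later be drawn.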

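2.2.2 Computing the attention map

Given an input tensor $x$, the output attention map $A_{m}(x)$ of MCAttn is computed as

$$A_{m}(x)=\sum_{i=1}^{n}P_{1}(x,i)\,f(x,i),$$

where $i$ denotes the output size of the attention map, $f(x,i)$ is the average pooling function, and $n$ is the number of output pooled tensors (set to $3$ in this study). The association probability $P_{1}(x,i)$ satisfies $\sum_{i=1}^{n}P_{1}(x,i)=1$ and $\prod_{i=1}^{n}P_{1}(x,i)=0$, which ensures that the generated attention maps are generic and generalizable. Taken together, the two conditions say that the probabilities are normalized and that at least one scale receives zero probability in any draw. In the implementation in section 3, exactly one pooling scale is selected per forward pass during training.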
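The formula can be sketched as follows, under the assumption that $P_{1}$ is a one-hot vector drawn uniformly at random, which is one simple way to satisfy both constraints. The helper name `mc_attention_map` is hypothetical, and the sketch omits the spatial shuffling used in the full implementation.

```python
import random

import torch
import torch.nn.functional as F


def mc_attention_map(x, pool_sizes=(1, 2, 3)):
    """Hypothetical helper: draw one 1x1 attention map from a random scale."""
    n = len(pool_sizes)
    keep = random.randrange(n)
    # One-hot association probabilities: they sum to 1 and their product is 0
    probs = [1.0 if i == keep else 0.0 for i in range(n)]

    out = x.new_zeros(x.shape[0], x.shape[1], 1, 1)
    for p, k in zip(probs, pool_sizes):
        if p == 0.0:
            continue  # scales with zero probability contribute nothing
        pooled = F.adaptive_avg_pool2d(x, k).flatten(2)  # (B, C, k*k)
        cell = random.randrange(pooled.shape[-1])        # pick one pooled cell
        out = out + p * pooled[:, :, cell, None, None]   # (B, C, 1, 1)
    return out, probs


x = torch.randn(2, 8, 12, 12)
attn, probs = mc_attention_map(x)
print(tuple(attn.shape), probs)
```

Whatever scale is drawn, the result is always a $1\times1$ map per channel, so the layers that consume it do not need to know which scale was sampled.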

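2.3 Characteristics

- Cross-scale information capture: by randomly selecting the attention map from three different scales, MCAttn captures information at different scales and strengthens the network's ability to recognize small medical objects. This cross-scale property lets it adapt to small objects of different sizes and shapes in medical images, which conventional attention mechanisms handle poorly.

- More attention to small objects: in experiments, using MCAttn in MCBottleneck improves the ability to discern the shape and precise location of tiny and small medical objects, compared with not using it.

- Stronger feature learning: the attention maps generated by MCAttn guide the network toward the features of small objects and improve contextual feature learning. They help the network model the relationship between a small object and its surroundings, as well as how the object's features change across scales, which improves the overall segmentation performance.

Paper: https://arxiv.org/abs/2407.07720

Source code: https://github.com/anthonyweidai/SvANet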

3. MCAttn implementation code

The MCAttn module is implemented as follows:

import math  # used by initWeight
from typing import Any, Callable, Optional, Union

import numpy as np
import torch
from torch import nn, Tensor

def pair(Val):
    # Turn a scalar into a (Val, Val) pair; leave tuples and lists unchanged
    return Val if isinstance(Val, (tuple, list)) else (Val, Val)


NormLayerTuple = (
    nn.BatchNorm1d,
    nn.BatchNorm2d,
    nn.SyncBatchNorm,
    nn.LayerNorm,
    nn.InstanceNorm1d,
    nn.InstanceNorm2d,
    nn.GroupNorm,
    nn.BatchNorm3d,
)


def initWeight(Module):
    # init conv, norm, and linear layers
    ## empty module
    if Module is None:
        return
    ## conv layer
    elif isinstance(Module, (nn.Conv2d, nn.Conv3d, nn.ConvTranspose2d)):
        nn.init.kaiming_uniform_(Module.weight, a=math.sqrt(5))
        if Module.bias is not None:
            fan_in, _ = nn.init._calculate_fan_in_and_fan_out(Module.weight)
            if fan_in != 0:
                bound = 1 / math.sqrt(fan_in)
                nn.init.uniform_(Module.bias, -bound, bound)
    ## norm layer
    elif isinstance(Module, NormLayerTuple):
        if Module.weight is not None:
            nn.init.ones_(Module.weight)
        if Module.bias is not None:
            nn.init.zeros_(Module.bias)
    ## linear layer
    elif isinstance(Module, nn.Linear):
        nn.init.kaiming_uniform_(Module.weight, a=math.sqrt(5))
        if Module.bias is not None:
            fan_in, _ = nn.init._calculate_fan_in_and_fan_out(Module.weight)
            bound = 1 / math.sqrt(fan_in) if fan_in > 0 else 0
            nn.init.uniform_(Module.bias, -bound, bound)
    ## containers: recurse into their children
    elif isinstance(Module, (nn.Sequential, nn.ModuleList)):
        for m in Module:
            initWeight(m)
    elif list(Module.children()):
        for m in Module.children():
            initWeight(m)

class BaseConv2d(nn.Module):
    # Conv2d followed by optional BatchNorm and optional activation
    def __init__(
        self,
        in_channels: int,
        out_channels: int,
        kernel_size: int,
        stride: Optional[int]=1,
        padding: Optional[int]=None,
        groups: Optional[int]=1,
        bias: Optional[bool]=None,
        BNorm: bool=False,
        # norm_layer: Optional[Callable[..., nn.Module]]=nn.BatchNorm2d,
        ActLayer: Optional[Callable[..., nn.Module]]=None,
        dilation: int=1,
        Momentum: Optional[float]=0.1,
        **kwargs: Any
    ) -> None:
        super(BaseConv2d, self).__init__()
        if padding is None:
            # "same"-style padding for odd kernel sizes
            padding = int((kernel_size - 1) // 2 * dilation)

        if bias is None:
            # a conv bias is redundant when BatchNorm follows
            bias = not BNorm

        self.in_channels = in_channels
        self.out_channels = out_channels
        self.kernel_size = kernel_size
        self.stride = stride
        self.padding = padding
        self.groups = groups
        self.bias = bias

        self.Conv = nn.Conv2d(in_channels, out_channels,
                              kernel_size, stride, padding, dilation, groups, bias, **kwargs)

        self.Bn = nn.BatchNorm2d(out_channels, eps=0.001, momentum=Momentum) if BNorm else nn.Identity()

        if ActLayer is not None:
            # nn.Sigmoid does not accept an inplace argument
            if isinstance(list(ActLayer().named_modules())[0][1], nn.Sigmoid):
                self.Act = ActLayer()
            else:
                self.Act = ActLayer(inplace=True)
        else:
            self.Act = None

        self.apply(initWeight)

    def forward(self, x: Tensor) -> Tensor:
        x = self.Conv(x)
        x = self.Bn(x)
        if self.Act is not None:
            x = self.Act(x)
        return x

    def profileModule(self, Input: Tensor):
        if Input.dim() != 4:
            print('Conv2d requires 4-dimensional Input (BxCxHxW). Provided Input has shape: {}'.format(Input.size()))

        BatchSize, in_channels, in_h, in_w = Input.size()
        assert in_channels == self.in_channels, '{}!={}'.format(in_channels, self.in_channels)

        k_h, k_w = pair(self.kernel_size)
        stride_h, stride_w = pair(self.stride)
        pad_h, pad_w = pair(self.padding)
        groups = self.groups

        out_h = (in_h - k_h + 2 * pad_h) // stride_h + 1
        out_w = (in_w - k_w + 2 * pad_w) // stride_w + 1

        # compute MACs
        MACs = (k_h * k_w) * (in_channels * self.out_channels) * (out_h * out_w) * 1.0
        MACs /= groups

        if self.bias:
            MACs += self.out_channels * out_h * out_w

        # compute parameters
        Params = sum([p.numel() for p in self.parameters()])

        Output = torch.zeros(size=(BatchSize, self.out_channels, out_h, out_w), dtype=Input.dtype, device=Input.device)
        # print(MACs)
        return Output, Params, MACs

class AdaptiveAvgPool2d(nn.AdaptiveAvgPool2d):
    def __init__(self, output_size: Union[int, tuple]=1):
        super(AdaptiveAvgPool2d, self).__init__(output_size=output_size)

    def profileModule(self, Input: Tensor):
        Output = self.forward(Input)
        return Output, 0.0, 0.0


def setMethod(self, ElementName, ElementValue):
    # module-level helper: attach an attribute (here a pooling layer) by name
    return setattr(self, ElementName, ElementValue)


def shuffleTensor(Feature: Tensor, Mode: int=1) -> list:
    # shuffle multiple tensors with the same indexes
    # all tensors must have the same shape
    # returns a list of tensors, even for a single input tensor
    if isinstance(Feature, Tensor):
        Feature = [Feature]

    Indexs = None
    Output = []
    for f in Feature:
        # not in-place operation, should update output
        B, C, H, W = f.shape
        if Mode == 1:
            # fully shuffle
            f = f.flatten(2)
            if Indexs is None:
                Indexs = torch.randperm(f.shape[-1], device=f.device)
            f = f[:, :, Indexs.to(f.device)]
            f = f.reshape(B, C, H, W)
        else:
            # shuffle along y and then x axis
            if Indexs is None:
                Indexs = [torch.randperm(H, device=f.device),
                          torch.randperm(W, device=f.device)]
            f = f[:, :, Indexs[0].to(f.device)]
            f = f[:, :, :, Indexs[1].to(f.device)]
        Output.append(f)
    return Output


def callMethod(self, ElementName):
    # module-level helper: fetch an attribute (here a pooling layer) by name
    return getattr(self, ElementName)


def makeDivisible(v: float, divisor: int, min_value: Optional[int] = None) -> int:
    """
    This function is taken from the original tf repo.
    It ensures that all layers have a channel number that is divisible by 8
    It can be seen here:
    https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.Py
    """
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v

class MoCAttention(nn.Module):
    # Monte carlo attention
    def __init__(
        self,
        InChannels: int,
        HidChannels: int=None,
        SqueezeFactor: int=4,
        PoolRes: tuple=(1, 2, 3),  # immutable default instead of a shared list
        Act: Callable[..., nn.Module]=nn.ReLU,
        ScaleAct: Callable[..., nn.Module]=nn.Sigmoid,
        MoCOrder: bool=True,
        **kwargs: Any,
    ) -> None:
        super().__init__()
        if HidChannels is None:
            HidChannels = max(makeDivisible(InChannels // SqueezeFactor, 8), 32)

        PoolRes = list(PoolRes)
        # the 1x1 pool is always needed because inference uses it
        AllPoolRes = PoolRes + [1] if 1 not in PoolRes else PoolRes
        for k in AllPoolRes:
            Pooling = AdaptiveAvgPool2d(k)
            setMethod(self, 'Pool%d' % k, Pooling)

        # SE-style excitation: squeeze to HidChannels, then scale back with a sigmoid gate
        self.SELayer = nn.Sequential(
            BaseConv2d(InChannels, HidChannels, 1, ActLayer=Act),
            BaseConv2d(HidChannels, InChannels, 1, ActLayer=ScaleAct),
        )

        self.PoolRes = PoolRes
        self.MoCOrder = MoCOrder

    def monteCarloSample(self, x: Tensor) -> Tensor:
        if self.training:
            # training: pick one pooling scale at random
            PoolKeep = np.random.choice(self.PoolRes)
            x1 = shuffleTensor(x)[0] if self.MoCOrder else x
            AttnMap: Tensor = callMethod(self, 'Pool%d' % PoolKeep)(x1)
            if AttnMap.shape[-1] > 1:
                # keep a single randomly chosen cell of the pooled map
                AttnMap = AttnMap.flatten(2)
                AttnMap = AttnMap[:, :, torch.randperm(AttnMap.shape[-1])[0]]
                AttnMap = AttnMap[:, :, None, None]  # restore the two spatial dims (B, C, 1, 1)
        else:
            # inference: deterministic global average pooling
            AttnMap: Tensor = callMethod(self, 'Pool%d' % 1)(x)

        return AttnMap

    def forward(self, x: Tensor) -> Tensor:
        AttnMap = self.monteCarloSample(x)
        return x * self.SELayer(AttnMap)

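4. Improvement points

4.1 Improvement 1

Improvement method 1️⃣: add the MoCAttention module directly.

The position where the MoCAttention module is inserted is shown in the YAML file in section 6.1.

Note ❗: the module name to declare in sections 5.2 and 5.3 is MoCAttention.

4.2 Improvement 2 ⭐

Improvement method 2️⃣: a C2f block based on the MoCAttention module.

Compared with inserting an attention module directly as in method 1, using the attention module to modify another block, such as a convolutional block, is somewhat more novel and may reach higher accuracy after training.

The second method modifies the C2f module of YOLOv10. The cross-scale feature extraction of MoCAttention enriches the feature information handled by C2f, and C2f in turn feeds its fused output features into MCAttn, so the network gains a more complete view of the target objects and stronger overall feature extraction.

The improved code is as follows: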

# Conv and Bottleneck are the classes already available in ultralytics/nn/modules/block.py
class C2f_MCAttn(nn.Module):
    """Faster Implementation of CSP Bottleneck with 2 convolutions."""

    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):
        """Initialize CSP bottleneck layer with two convolutions with arguments ch_in, ch_out, number, shortcut, groups,
        expansion.
        """
        super().__init__()
        self.c = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, 2 * self.c, 1, 1)
        self.cv2 = Conv((2 + n) * self.c, c2, 1)  # optional act=FReLU(c2)
        self.m = nn.ModuleList(Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n))
        self.att = MoCAttention(c2)  # attention applied to the fused output

    def forward(self, x):
        """Forward pass through C2f layer."""
        y = list(self.cv1(x).chunk(2, 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.att(self.cv2(torch.cat(y, 1)))

    def forward_split(self, x):
        """Forward pass using split() instead of chunk()."""
        y = list(self.cv1(x).split((self.c, self.c), 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.att(self.cv2(torch.cat(y, 1)))

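Note ❗: the module name to declare in sections 5.2 and 5.3 is C2f_MCAttn.

5. Integration steps

5.1 Modify ultralytics/nn/modules/block.py

The file to modify here is ultralytics/nn/modules/block.py.

block.py defines the general-purpose building blocks of the network, so adding a new module only requires placing its code in this file.

Add the MoCAttention code (together with the helper functions and classes from section 3) and the C2f_MCAttn code to this file.

5.2 Modify ultralytics/nn/modules/__init__.py

The file to modify here is ultralytics/nn/modules/__init__.py.

__init__.py collects the modules that the package exposes, so the new module names from block.py only need to be added to the corresponding import.

MoCAttention and C2f_MCAttn are implemented in block.py, so they are added to the from .block import statement: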

from .block import (
    C1,
    C2,
    ...
    MoCAttention,
    C2f_MCAttn
)

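5.3 Modify ultralytics/nn/tasks.py

In tasks.py, the module class names need to be added in two places.

First, add MoCAttention and C2f_MCAttn to the import statement at the top of the file.

Second, register MoCAttention and C2f_MCAttn in the parse_model function.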
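Registration in parse_model matters because that is where the channel and repeat values written in the YAML are scaled to the chosen model size. The following is a simplified sketch of that scaling, assuming the rule matches the one used for the built-in modules; `scale_layer` is a hypothetical helper, not an Ultralytics function. Its results agree with the layer arguments printed in section 7.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scale_layer(c2, n, depth, width, max_channels):
    """Hypothetical helper: scale one YAML row's output channels and repeats."""
    c2 = make_divisible(min(c2, max_channels) * width, 8)
    n = max(round(n * depth), 1) if n > 1 else n
    return c2, n


# The 'm' scale from the YAML files in section 6: [depth, width, max_channels]
depth, width, max_channels = 0.67, 0.75, 768

print(scale_layer(1024, 1, depth, width, max_channels))  # (576, 1)
print(scale_layer(128, 3, depth, width, max_channels))   # (96, 2)
print(scale_layer(256, 6, depth, width, max_channels))   # (192, 4)
```

This is why a row written as [1024] in the YAML ends up with 576 channels in the printed model: the value is capped at 768 and then multiplied by the width factor 0.75.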

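6. YAML model files

6.1 Improved model, version 1

Once the code is in place, configure the model YAML file.

Taking ultralytics/cfg/models/v10/yolov10m.yaml as an example, create a model file named yolov10m-MoCAtt.yaml in the same directory for training on your own dataset.

Copy the contents of yolov10m.yaml into yolov10m-MoCAtt.yaml and set nc to the number of object classes in your dataset.

Add the MoCAttention module to the backbone. It takes a single argument, the number of channels.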

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 1 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f, [256, True]]
  - [-1, 1, SCDown, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f, [512, True]]
  - [-1, 1, SCDown, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2fCIB, [1024, True]]
  - [-1, 1, MoCAttention, [1024]] # 9
  - [-1, 1, SPPF, [1024, 5]] # 10
  - [-1, 1, PSA, [1024]] # 11

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, C2f, [512]] # 14

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, C2f, [256]] # 17 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 14], 1, Concat, [1]] # cat head P4
  - [-1, 3, C2fCIB, [512, True]] # 20 (P4/16-medium)

  - [-1, 1, SCDown, [512, 3, 2]]
  - [[-1, 11], 1, Concat, [1]] # cat head P5
  - [-1, 3, C2fCIB, [1024, True]] # 23 (P5/32-large)

  - [[17, 20, 23], 1, v10Detect, [nc]] # Detect(P3, P4, P5)
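Inserting a layer into the backbone shifts the index of every later layer by one, so the absolute from-indices in the head have to be updated as well. The bookkeeping can be sketched as below; `shift_refs` is a hypothetical helper written for illustration, and `head_refs` lists the from-indices of an unmodified head with SPPF at index 9 and PSA at index 10 (the same indices used in section 6.2).

```python
def shift_refs(layers, insert_at):
    """Hypothetical helper: fix absolute 'from' indices after inserting one layer."""

    def shift(f):
        # Negative values are relative references and stay unchanged;
        # absolute indices at or after the insertion point move up by one
        return f + 1 if f >= insert_at else f

    shifted = []
    for frm, *rest in layers:
        frm = [shift(f) for f in frm] if isinstance(frm, list) else shift(frm)
        shifted.append([frm, *rest])
    return shifted


# Rows of the head that reference other layers, before the insertion
head_refs = [
    [[-1, 6], "Concat"],
    [[-1, 4], "Concat"],
    [[-1, 13], "Concat"],
    [[-1, 10], "Concat"],
    [[16, 19, 22], "v10Detect"],
]

# MoCAttention is inserted as backbone layer 9
new_refs = shift_refs(head_refs, insert_at=9)
for row in new_refs:
    print(row)
```

The references to layers 6 and 4 are unchanged because they sit before the insertion point, while 13, 10 and the three detection inputs become 14, 11 and 17, 20, 23, matching the YAML above.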

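6.2 Improved model, version 2 ⭐

Again taking ultralytics/cfg/models/v10/yolov10m.yaml as an example, create a model file named yolov10m-C2f_MCAttn.yaml in the same directory for training on your own dataset.

Copy the contents of yolov10m.yaml into yolov10m-C2f_MCAttn.yaml and set nc to the number of object classes in your dataset.

📌 The change is to replace every C2f module in the backbone with C2f_MCAttn.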

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 1 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f_MCAttn, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f_MCAttn, [256, True]]
  - [-1, 1, SCDown, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f_MCAttn, [512, True]]
  - [-1, 1, SCDown, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2fCIB, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 1, PSA, [1024]] # 10

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, C2f, [512]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, C2f, [256]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 3, C2fCIB, [512, True]] # 19 (P4/16-medium)

  - [-1, 1, SCDown, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 3, C2fCIB, [1024, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, v10Detect, [nc]] # Detect(P3, P4, P5)

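7. Successful run output

Printing each network shows that the MoCAttention module and the C2f_MCAttn module have been added to the model, which is now ready for training.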

YOLOv10m-MCAttn:

             from  n   params  module                                     arguments
  0            -1  1     1392  ultralytics.nn.modules.conv.Conv           [3, 48, 3, 2]
  1            -1  1    41664  ultralytics.nn.modules.conv.Conv           [48, 96, 3, 2]
  2            -1  2   111360  ultralytics.nn.modules.block.C2f           [96, 96, 2, True]
  3            -1  1   166272  ultralytics.nn.modules.conv.Conv           [96, 192, 3, 2]
  4            -1  4   813312  ultralytics.nn.modules.block.C2f           [192, 192, 4, True]
  5            -1  1    78720  ultralytics.nn.modules.block.SCDown        [192, 384, 3, 2]
  6            -1  4  3248640  ultralytics.nn.modules.block.C2f           [384, 384, 4, True]
  7            -1  1   228672  ultralytics.nn.modules.block.SCDown        [384, 576, 3, 2]
  8            -1  2  1689984  ultralytics.nn.modules.block.C2fCIB        [576, 576, 2, True]
  9            -1  1   664704  ultralytics.nn.modules.block.MoCAttention  [576, 576]
 10            -1  1   831168  ultralytics.nn.modules.block.SPPF          [576, 576, 5]
 11            -1  1  1253088  ultralytics.nn.modules.block.PSA           [576, 576]
 12            -1  1        0  torch.nn.modules.upsampling.Upsample       [None, 2, 'nearest']
 13       [-1, 6]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 14            -1  2  1993728  ultralytics.nn.modules.block.C2f           [960, 384, 2]
 15            -1  1        0  torch.nn.modules.upsampling.Upsample       [None, 2, 'nearest']
 16       [-1, 4]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 17            -1  2   517632  ultralytics.nn.modules.block.C2f           [576, 192, 2]
 18            -1  1   332160  ultralytics.nn.modules.conv.Conv           [192, 192, 3, 2]
 19      [-1, 14]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 20            -1  2   831744  ultralytics.nn.modules.block.C2fCIB        [576, 384, 2, True]
 21            -1  1   152448  ultralytics.nn.modules.block.SCDown        [384, 384, 3, 2]
 22      [-1, 11]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 23            -1  2  1911168  ultralytics.nn.modules.block.C2fCIB        [960, 576, 2, True]
 24  [17, 20, 23]  1  2282134  ultralytics.nn.modules.head.v10Detect      [1, [192, 384, 576]]

YOLOv10m-MCAttn summary: 511 layers, 17149990 parameters, 17149974 gradients, 64.0 GFLOPs
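The parameter count printed for layer 9 can be checked by hand. The sketch below assumes that MoCAttention's parameters come only from the two 1x1 convolutions of its SELayer, each with a bias and no BatchNorm, as in the code in section 3; the helper names are hypothetical.

```python
def conv1x1_params(c_in, c_out, bias=True):
    """Weights plus optional bias of a 1x1 convolution."""
    return c_in * c_out + (c_out if bias else 0)


def moc_attention_params(in_ch, hid_ch):
    """SELayer: in_ch -> hid_ch -> in_ch, two 1x1 convs with bias."""
    return conv1x1_params(in_ch, hid_ch) + conv1x1_params(hid_ch, in_ch)


# Layer 9 is built with arguments [576, 576], i.e. HidChannels = 576
print(moc_attention_params(576, 576))  # 664704

# For comparison: the default hidden width for 576 input channels would be 144
print(moc_attention_params(576, 144))  # 166608
```

The first value matches the table. The arguments [576, 576] mean the hidden width equals the input width, so the layer does no squeezing in this configuration; leaving HidChannels unset would give a hidden width of 144 and roughly a quarter of the parameters.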

YOLOv10m-C2f_MCAttn:

             from  n   params  module                                     arguments
  0            -1  1     1392  ultralytics.nn.modules.conv.Conv           [3, 48, 3, 2]
  1            -1  1    41664  ultralytics.nn.modules.conv.Conv           [48, 96, 3, 2]
  2            -1  2   142720  ultralytics.nn.modules.block.C2f_MCAttn    [96, 96, True]
  3            -1  1   166272  ultralytics.nn.modules.conv.Conv           [96, 192, 3, 2]
  4            -1  4  1111488  ultralytics.nn.modules.block.C2f_MCAttn    [192, 192, True]
  5            -1  1    78720  ultralytics.nn.modules.block.SCDown        [192, 384, 3, 2]
  6            -1  4  4434816  ultralytics.nn.modules.block.C2f_MCAttn    [384, 384, True]
  7            -1  1   228672  ultralytics.nn.modules.block.SCDown        [384, 576, 3, 2]
  8            -1  2  1689984  ultralytics.nn.modules.block.C2fCIB        [576, 576, 2, True]
  9            -1  1   831168  ultralytics.nn.modules.block.SPPF          [576, 576, 5]
 10            -1  1  1253088  ultralytics.nn.modules.block.PSA           [576, 576]
 11            -1  1        0  torch.nn.modules.upsampling.Upsample       [None, 2, 'nearest']
 12       [-1, 6]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 13            -1  2  1993728  ultralytics.nn.modules.block.C2f           [960, 384, 2]
 14            -1  1        0  torch.nn.modules.upsampling.Upsample       [None, 2, 'nearest']
 15       [-1, 4]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 16            -1  2   517632  ultralytics.nn.modules.block.C2f           [576, 192, 2]
 17            -1  1   332160  ultralytics.nn.modules.conv.Conv           [192, 192, 3, 2]
 18      [-1, 13]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 19            -1  2   831744  ultralytics.nn.modules.block.C2fCIB        [576, 384, 2, True]
 20            -1  1   152448  ultralytics.nn.modules.block.SCDown        [384, 384, 3, 2]
 21      [-1, 10]  1        0  ultralytics.nn.modules.conv.Concat         [1]
 22            -1  2  1911168  ultralytics.nn.modules.block.C2fCIB        [960, 576, 2, True]
 23  [16, 19, 22]  1  2282134  ultralytics.nn.modules.head.v10Detect      [1, [192, 384, 576]]

YOLOv10m-C2f_MCAttn summary: 687 layers, 18000998 parameters, 18000982 gradients, 70.7 GFLOPs
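The C2f_MCAttn rows can be checked the same way. The sketch below assumes the usual Ultralytics Conv layout (a bias-free convolution followed by BatchNorm with weight and bias) and a Bottleneck made of two 3x3 Conv layers; all helper names are hypothetical. It reproduces the printed counts when each row is read as n stacked C2f_MCAttn blocks with one bottleneck each.

```python
def conv_bn(c_in, c_out, k=1):
    """Bias-free k x k convolution plus BatchNorm weight and bias."""
    return c_in * c_out * k * k + 2 * c_out


def hidden_channels(c, squeeze=4):
    """Default MoCAttention hidden width, following makeDivisible from section 3."""
    v = c // squeeze
    rounded = max(8, int(v + 4) // 8 * 8)
    if rounded < 0.9 * v:
        rounded += 8
    return max(rounded, 32)


def moc_attention(c):
    hid = hidden_channels(c)
    return (c * hid + hid) + (hid * c + c)  # two 1x1 convs with bias


def c2f_mcattn(c1, c2, n=1, e=0.5):
    """Parameters of one C2f_MCAttn block with n bottlenecks."""
    c = int(c2 * e)
    cv1 = conv_bn(c1, 2 * c)
    cv2 = conv_bn((2 + n) * c, c2)
    bottlenecks = n * 2 * conv_bn(c, c, k=3)
    return cv1 + cv2 + bottlenecks + moc_attention(c2)


# (channels, repeats) for layers 2, 4 and 6 of the table
for channels, repeats in [(96, 2), (192, 4), (384, 4)]:
    print(channels, repeats * c2f_mcattn(channels, channels))
```

This prints 142720, 1111488 and 4434816, matching the table. One consequence worth noting: the printed arguments [96, 96, True] contain no repeat count, so True occupies the n position of the constructor (True counts as 1) and shortcut keeps its default of False. If residual shortcuts inside the bottlenecks are wanted, the way C2f_MCAttn is registered in parse_model may need to mirror how C2f is handled.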