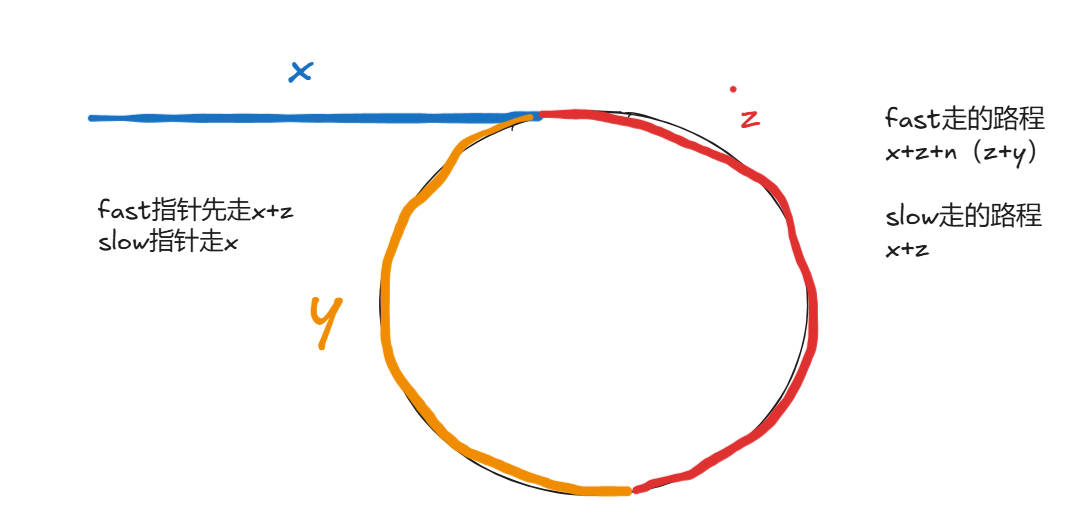

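Multithreaded Scraping of Eastmoney (Guba) User Information

Building on the previous post about scraping Guba posts, this version adds multithreading, which speeds the crawl up by a factor of ten or more. The content being scraped is shown below:

The final scraped results are shown below:

The complete code follows (once the environment is ready, for example the third-party Python libraries are installed, it can be run directly):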

```python
import csv
import random
import re
import threading
import concurrent.futures
from datetime import datetime
from urllib.parse import urljoin

import chardet
import pandas as pd
import requests
from bs4 import BeautifulSoup
from selenium import webdriver
from tqdm import tqdm
```

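```python
from selenium.webdriver.chrome.service import Service

# Browser options (all options must be set before the driver is created)
chrome_options = webdriver.ChromeOptions()
# Add other options here, such as your user agent
# ...
chrome_options.add_argument('--headless')  # headless mode, which can speed up crawling
chrome_options.add_argument('blink-settings=imagesEnabled=false')  # do not load images

# Path to the Chrome WebDriver. Selenium 4 takes it through a Service object;
# older releases used webdriver.Chrome(executable_path=...).
# Note: the functions below fetch pages with requests and never use this driver.
driver = webdriver.Chrome(service=Service('/usr/local/bin/chromedriver'), options=chrome_options)
```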

```python
from random import choice


def get_time():
    """Return a random delay between 3 and 6, rounded to one decimal place."""
    return round(random.uniform(3, 6), 1)


def get_user_agent():
    """Return a random user agent."""
    user_agents = [
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        # "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
        # "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
        # "Mozilla/5.0 (iPod; U; CPU iPhone OS 2_1 like Mac OS X; ja-jp) AppleWebKit/525.18.1 (KHTML, like Gecko) Version/3.1.1 Mobile/5F137 Safari/525.20",
        # "Mozilla/5.0 (Linux;u;Android 4.2.2;zh-cn;) AppleWebKit/534.46 (KHTML,like Gecko) Version/5.1 Mobile Safari/10600.6.3 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)",
        "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)"
    ]
    # Pick one user agent at random from the list to impersonate a browser
    return choice(user_agents)
```

```python
def get_page(list_url):
    """Return the largest number shown in elements with class 'nump' on a list page
    (intended as the total page count), or None if nothing is found or the request fails."""
    headers = {'User-Agent': get_user_agent()}
    # Make the request using the requests library
    response = requests.get(list_url, headers=headers)
    if response.status_code != 200:
        print(f"Error: {response.status_code}")
        return None

    # Parse the HTML with BeautifulSoup
    soup = BeautifulSoup(response.text, 'html.parser')
    nump_elements = soup.find_all(class_='nump')
    # Extract the numbers and convert them to integers, skipping non-numeric text
    nump_numbers = [int(e.text) for e in nump_elements if e.text.strip().isdigit()]
    # Return the largest number; None is the default when the list is empty
    return max(nump_numbers) if nump_numbers else None
```

```python
def generate_urls(base_url, page_number, total_pages):
    """Build list-page URLs from page 2 up to total_pages, stepping by page_number."""
    urls = []
    for page in range(2, total_pages + 1, page_number):
        url = f"{base_url},f_{page}.html"
        urls.append(url)
    return urls
```
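To make the URL pattern concrete, here is a small self-contained sketch of what `generate_urls` produces. The function is repeated so the snippet runs on its own, and the stock code 600000 is only an illustrative value, not one taken from this post.

```python
def generate_urls(base_url, page_number, total_pages):
    """Build list-page URLs for pages 2..total_pages, stepping by page_number."""
    return [f"{base_url},f_{page}.html"
            for page in range(2, total_pages + 1, page_number)]


# 600000 is an illustrative stock code (an assumption for this example).
base = "https://guba.eastmoney.com/list,600000.html".replace(".html", "")
urls = generate_urls(base, 1, 4)
for url in urls:
    print(url)
```

Because the range starts at 2, the first page (the bare list URL) is not among the generated URLs; it has to be added separately if it is needed.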

```python
def get_detail_urls_by_keyword(urls):
    """Fetch every list page in its own thread and collect reply counts,
    author links, read counts and dates."""
    comment, link, reads, date = [], [], [], []
    # Progress bar: one tick per URL
    progress_bar = tqdm(total=len(urls), desc='Processing URLs', position=0, leave=True)
    # Lock that guards the shared lists and the progress bar
    lock = threading.Lock()
    # Matches dates such as "12-31 23:59"
    date_pattern = re.compile(r'\d{1,2}-\d{1,2} \d{2}:\d{2}')

    def process_url(url):
        """Download one list page and append its data to the shared lists."""
        headers = {'User-Agent': get_user_agent()}
        # Make the request using the requests library
        response = requests.get(url, headers=headers)
        if response.status_code != 200:
            print(f"Error: {response.status_code}")
            return

        # Detect the encoding and decode the response body
        encoding = chardet.detect(response.content)['encoding'] or 'utf-8'
        html_content = response.content.decode(encoding, errors='replace')
        # Parse the HTML with BeautifulSoup
        soup = BeautifulSoup(html_content, 'html.parser')

        with lock:
            # Author links: convert protocol-relative URLs to absolute ones
            for element in soup.select('div.author a'):
                href = element.get('href')
                if href:
                    link.append(urljoin('https:', href))
            # Reply counts
            for element in soup.select('div.reply'):
                comment.append(element.text.strip().split(':')[-1])
            # Dates: extend the shared list instead of rebinding it, so that
            # results from other threads are not overwritten
            date.extend(date_pattern.findall(html_content))
            # Read counts
            for element in soup.select('div.read'):
                reads.append(element.text.strip().split(':')[-1])
            # Update the progress bar
            progress_bar.update(1)

    # Start one thread per URL
    threads = []
    for url in urls:
        thread = threading.Thread(target=process_url, args=(url,))
        thread.start()
        threads.append(thread)

    # Wait for all threads to complete
    for thread in threads:
        thread.join()

    # Close the progress bar
    progress_bar.close()
    return comment, link, reads, date
```
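The pattern used here, several threads appending to shared lists under one lock, can be tried without any network access. The following is a minimal sketch under stated assumptions: `collect_with_lock` and its `worker` are hypothetical names, and upper-casing strings stands in for downloading and parsing a page.

```python
import threading


def collect_with_lock(pages):
    """Run one thread per page and merge each page's items into one shared list."""
    merged = []
    lock = threading.Lock()

    def worker(page_items):
        # Stand-in for "download and parse one page" (hypothetical, no network access).
        parsed = [item.upper() for item in page_items]
        # Extend the shared list in place while holding the lock; rebinding the
        # name (merged = parsed) would silently drop the other threads' results.
        with lock:
            merged.extend(parsed)

    threads = [threading.Thread(target=worker, args=(items,)) for items in pages]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return merged


result = collect_with_lock([["a", "b"], ["c"], ["d", "e"]])
print(sorted(result))
```

The order in which pages arrive in the merged list depends on which thread finishes first, so anything that relies on page order should sort or index the results afterwards.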

```python
def extract_and_combine(url):
    """Return the first six-digit number (the stock code) in the URL, or None."""
    match = re.search(r'\d{6}', url)
    return match.group() if match else None
```

```python
def process_dates(date_list, current_year=2023):
    """Turn 'MM-DD HH:MM' strings into datetime objects by inferring the year.

    Assumes the list is ordered from newest to oldest and starts in current_year.
    """
    processed_dates = []
    for date_str in date_list:
        try:
            # Adjust the format string based on the actual format of your data
            date_obj = datetime.strptime(date_str, "%m-%d %H:%M")
            # Going back in time, the month only increases (e.g. 01 -> 12)
            # when the listing crosses into the previous year
            if processed_dates and date_obj.month > processed_dates[-1].month:
                current_year -= 1
            # Replace the year in date_obj with the updated current_year
            processed_dates.append(date_obj.replace(year=current_year))
        except ValueError as e:
            print(f"Error processing date '{date_str}': {e}")
    return processed_dates
```
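Since the list pages only show month and day, the year has to be inferred. Below is a minimal sketch of one way to do that, assuming the dates are listed from newest to oldest; `assign_years` and `start_year` are hypothetical names introduced for this example.

```python
from datetime import datetime


def assign_years(date_strings, start_year):
    """Attach a year to 'MM-DD HH:MM' strings listed from newest to oldest."""
    result = []
    year = start_year
    for text in date_strings:
        parsed = datetime.strptime(text, "%m-%d %H:%M")
        # Newest-first order: a month larger than the previous one means the
        # listing has crossed back over a New Year boundary.
        if result and parsed.month > result[-1].month:
            year -= 1
        result.append(parsed.replace(year=year))
    return result


dates = assign_years(["01-02 09:30", "12-30 15:00", "12-01 10:00"], 2023)
print([d.strftime("%Y-%m-%d") for d in dates])
```

The heuristic only holds while the input stays in newest-first order, so dates merged from several pages should be put back into page order before it is applied.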

```python
def write_to_csv_file(comment, link, reads, date, result):
    """
    Write the post data to a CSV file.

    Parameters:
    comment (list): reply counts
    link (list): links
    reads (list): read counts
    date (list): dates
    result (str): identifier used as the file-name prefix

    Returns:
    None
    """
    # Path of the CSV file ("评论" means "comments")
    csv_file_path = result + "_评论.csv"
    # Write the data to the CSV file
    with open(csv_file_path, 'w', newline='', encoding='utf-8') as csv_file:
        csv_writer = csv.writer(csv_file)
        # Header row: reply count, link, read count, date
        csv_writer.writerow(['评论数', '链接', '阅读数', '日期'])
        # Data rows; zip stops at the shortest list
        csv_writer.writerows(zip(comment, link, reads, date))
    print(f"CSV file written: {csv_file_path}")
```

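```python
def filter_and_append_links(comment, link):
    """
    Keep the links whose reply count is greater than or equal to 0.
    The first four entries are skipped (the loop starts at index 4).

    Parameters:
    comment (list): reply counts
    link (list): links

    Returns:
    final_link (list): the filtered links
    """
    final_link = []
    # Stop at the shorter list so that comment[i] cannot run out of range
    for i in range(4, min(len(link), len(comment))):
        if int(comment[i]) >= 0:
            final_link.append(link[i])
    return final_link
```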

```python
def remove_duplicates(input_list):
    """Return the items of input_list without repeats, keeping first occurrences."""
    unique_list = []
    for item in input_list:
        if item not in unique_list:
            unique_list.append(item)
    return unique_list
```
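For long link lists, the `item not in unique_list` test scans the whole list on every iteration. A possible alternative, sketched here with the hypothetical name `remove_duplicates_fast`, relies on `dict` keeping insertion order (Python 3.7+); it assumes the items are hashable, which holds for URL strings. The URLs are illustrative.

```python
def remove_duplicates_fast(items):
    """Drop repeated items while keeping the first occurrence of each."""
    # dict keys are unique and preserve insertion order (Python 3.7+).
    return list(dict.fromkeys(items))


# Illustrative links, not taken from a real crawl.
links = [
    "https://i.eastmoney.com/1",
    "https://i.eastmoney.com/2",
    "https://i.eastmoney.com/1",
]
print(remove_duplicates_fast(links))
```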

```python
def process_result_links(links):
    """Deduplicate the links and drop those that contain the substring 'list'."""
    # Remove duplicates first
    result_link = remove_duplicates(links)
    # Keep only the links that do not contain 'list'
    return [item for item in result_link if 'list' not in item]
```

```python
def get_information_for_url(url):
    """Fetch one user page and return (influence, age, location, fan) lists,
    or None if the request fails."""
    influence, age, location, fan = [], [], [], []
    headers = {'User-Agent': get_user_agent()}
    # Make the request using the requests library
    response = requests.get(url, headers=headers)
    if response.status_code != 200:
        print(f"Error: {response.status_code}")
        return None

    # Parse the HTML with BeautifulSoup
    soup = BeautifulSoup(response.text, 'html.parser')

    # Influence: no extraction is implemented, so this list stays empty

    # Account age ("吧龄"). :-soup-contains replaces the deprecated :contains
    # selector and needs soupsieve 2.1 or later.
    for element in soup.select('div.others_level p:-soup-contains("吧龄") span'):
        age.append(element.text.strip())

    # IP location
    for element in soup.select('p.ip_info'):
        match = re.search(r':([^?]+)\?', element.text.strip())
        if match:
            location.append(match.group(1))

    # Follower count
    for element in soup.select('div.others_fans a#tafansa span.num'):
        fan.append(element.text.strip().split(':')[-1])

    return influence, age, location, fan
```

```python
def get_information(urls):
    """Fetch all user pages with a thread pool and merge the results."""
    influence, age, location, fan = [], [], [], []
    with concurrent.futures.ThreadPoolExecutor() as executor:
        results = list(tqdm(executor.map(get_information_for_url, urls), total=len(urls), desc="Processing URLs"))
    for result in results:
        # get_information_for_url returns None when a request fails
        if result is None:
            continue
        influence.extend(result[0])
        age.extend(result[1])
        location.extend(result[2])
        fan.extend(result[3])
    return age, location, fan
```
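Two properties of `executor.map` matter for this step: results come back in the same order as the input URLs, and a worker that returns `None` has to be skipped before its result is indexed. The sketch below illustrates both without network access; `fake_fetch` and `gather` are hypothetical stand-ins, not functions from this post.

```python
import concurrent.futures


def fake_fetch(url):
    """Hypothetical stand-in for a page fetch: returns None for 'bad' URLs."""
    if "bad" in url:
        return None
    return ([url + "#age"], [url + "#loc"])


def gather(urls):
    ages, locations = [], []
    with concurrent.futures.ThreadPoolExecutor(max_workers=4) as executor:
        # executor.map yields results in the same order as the input URLs.
        for result in executor.map(fake_fetch, urls):
            if result is None:  # skip failed requests instead of indexing None
                continue
            ages.extend(result[0])
            locations.extend(result[1])
    return ages, locations


ages, locations = gather(["u1", "bad", "u2"])
print(ages, locations)
```

Skipping failed pages keeps the crawl running, but the merged lists then no longer line up one-to-one with the input URLs, which matters when they are later zipped together with the link list.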

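```python
def write_to_csv(result_link, age, location, fan, result):
    """Write the user data to a CSV file."""
    # Build the CSV file name ("用户" means "users")
    csv_filename = result + "_用户.csv"
    # Wrap the data in a list of dicts; the keys are link, account age, location, followers.
    # zip stops at the shortest list.
    data = [
        {"链接": link, "吧龄": a, "属地": loc, "粉丝": f}
        for link, a, loc, f in zip(result_link, age, location, fan)
    ]
    # Create the CSV file with the csv module and write the data with named columns
    with open(csv_filename, 'w', newline='', encoding='utf-8') as csvfile:
        fieldnames = ["链接", "吧龄", "属地", "粉丝"]
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        # Write the header row
        writer.writeheader()
        # Write the data rows
        writer.writerows(data)
    print(f"Data has been written to {csv_filename}")
```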

```python
def convert_to_guba_link(file_path):
    """
    Read the six-digit numbers in an Excel file and convert them to Guba links.

    Parameters:
    file_path (str): path of the Excel file

    Returns:
    guba_links (list): the resulting Guba links
    """
    guba_links = []
    try:
        # Read the Excel file
        df = pd.read_excel(file_path)
        # Take the data in the first column
        six_digit_numbers = df.iloc[:, 0]
        # Convert each number to a Guba link
        for number in six_digit_numbers:
            # Build the link with an f-string; :06d requires an integer value
            link = f"https://guba.eastmoney.com/list,{number:06d}.html"
            guba_links.append(link)
    except Exception as e:
        print(f"Error: {e}")
    return guba_links
```
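The `:06d` format spec only accepts integers, while a spreadsheet column may also arrive as floats or strings depending on how the cells are stored. A minimal sketch of a more tolerant conversion follows; `to_stock_code` and `to_guba_link` are hypothetical helpers, and empty cells are not handled.

```python
def to_stock_code(value):
    """Normalise a spreadsheet cell (int, float or str) to a six-digit string."""
    # int(float(...)) tolerates values such as 1, 1.0 or "000001".
    return str(int(float(value))).zfill(6)


def to_guba_link(value):
    """Build the list-page URL for one stock code."""
    return f"https://guba.eastmoney.com/list,{to_stock_code(value)}.html"


for cell in (1, 600000.0, "000651"):
    print(to_guba_link(cell))
```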

```python
def main():
    """Main entry point."""
    list_urls = convert_to_guba_link('number.xlsx')
    print('Crawler started --->')
    # i counts the stocks finished so far, starting from 1
    for i, list_url in enumerate(list_urls, start=1):
        page = 3  # hard-coded number of pages to crawl per stock
        print("Total pages:", page)
        page_number = 1  # step between pages
        url_without_html = list_url.replace(".html", "")
        urls = generate_urls(url_without_html, page_number, page)
        print(urls)
        comment, link, reads, date = get_detail_urls_by_keyword(urls)
        print(comment)
        print(link)
        print(reads)
        print(date)
        date = process_dates(date)
        result = extract_and_combine(list_url)
        write_to_csv_file(comment, link, reads, date, result)
        link = process_result_links(link)
        age, location, fan = get_information(link)
        print(age)
        print(location)
        print(fan)
        write_to_csv(link, age, location, fan, result)
        print('Stocks finished:', i)


if __name__ == '__main__':
    # Run the main function
    main()
```
