I. Qwen2.5 & Dataset

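Qwen2.5 is the latest series of Qwen large language models, with parameter counts ranging from 0.5B to 72B.

Compared with Qwen2, Qwen2.5 brings the following improvements:

- Significantly more knowledge, along with much stronger coding and mathematics capabilities.

- Significant improvements in instruction following, long-text generation (over 8K tokens), understanding structured data (e.g., tables), and generating structured output (especially JSON). It is also more resilient to diverse system prompts, which improves role-play and condition-setting for chatbots.

- Long-context support for up to 128K tokens, with generation of up to 8K tokens.

- Multilingual support for more than 29 languages, including Chinese, English, French, Spanish, Portuguese, German, Italian, Russian, Japanese, Korean, Vietnamese, Thai, and Arabic.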

Qwen2.5 on ModelScope:

https://modelscope.cn/models/Qwen/Qwen2.5-0.5B-Instruct

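This article fine-tunes Qwen2.5-0.5B on a named entity recognition (NER) task, using the CLUENER 2020 dataset from CLUE (the Chinese Language Understanding Evaluation benchmark):

Download the dataset from the following link:

https://www.cluebenchmarks.com/introduce.html

The data covers 10 label categories: address, book, company, game, government, movie, name, organization, position, and scene.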
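As a quick sanity check, the ten categories can be written down as a constant and used to tally annotated mentions per category. This is a minimal sketch: the names `LABELS` and `count_mentions` are illustrative and not part of any CLUENER tooling, and the two records are copied from the dataset samples in this article.

```python
from collections import Counter

# The ten CLUENER categories listed above
LABELS = {"address", "book", "company", "game", "government",
          "movie", "name", "organization", "position", "scene"}


def count_mentions(records):
    """Count annotated spans per category, rejecting unknown categories."""
    counts = Counter()
    for record in records:
        for category, entities in record["label"].items():
            if category not in LABELS:
                raise ValueError(f"unknown category: {category}")
            # Each entity string maps to a list of [start, end] spans
            counts[category] += sum(len(spans) for spans in entities.values())
    return counts


records = [
    {"text": "生生不息CSOL生化狂潮让你填弹狂扫",
     "label": {"game": {"CSOL": [[4, 7]]}}},
    {"text": "此数据换算成亚洲盘罗马客场可让平半低水。",
     "label": {"organization": {"罗马": [[9, 10]]}}},
]
print(count_mentions(records))
```

A check like this catches misspelled category names before they reach training.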

Sample records:

{"text": "浙商银行企业信贷部叶老桂博士则从另一个角度对五道门槛进行了解读。叶老桂认为,对目前国内商业银行而言,", "label": {"name": {"叶老桂": [[9, 11]]}, "company": {"浙商银行": [[0, 3]]}}}

{"text": "生生不息CSOL生化狂潮让你填弹狂扫", "label": {"game": {"CSOL": [[4, 7]]}}}

{"text": "那不勒斯vs锡耶纳以及桑普vs热那亚之上呢?", "label": {"organization": {"那不勒斯": [[0, 3]], "锡耶纳": [[6, 8]], "桑普": [[11, 12]], "热那亚": [[15, 17]]}}}

{"text": "加勒比海盗3:世界尽头》的去年同期成绩死死甩在身后,后者则即将赶超《变形金刚》,", "label": {"movie": {"加勒比海盗3:世界尽头》": [[0, 11]], "《变形金刚》": [[33, 38]]}}}

{"text": "布鲁京斯研究所桑顿中国中心研究部主任李成说,东亚的和平与安全,是美国的“核心利益”之一。", "label": {"address": {"美国": [[32, 33]]}, "organization": {"布鲁京斯研究所桑顿中国中心": [[0, 12]]}, "name": {"李成": [[18, 19]]}, "position": {"研究部主任": [[13, 17]]}}}

{"text": "目前主赞助商暂时空缺,他们的球衣上印的是“unicef”(联合国儿童基金会),是公益性质的广告;", "label": {"organization": {"unicef": [[21, 26]], "联合国儿童基金会": [[29, 36]]}}}

{"text": "此数据换算成亚洲盘罗马客场可让平半低水。", "label": {"organization": {"罗马": [[9, 10]]}}}

{"text": "你们是最棒的!#英雄联盟d学sanchez创作的原声王", "label": {"game": {"英雄联盟": [[8, 11]]}}}

{"text": "除了吴湖帆时现精彩,吴待秋、吴子深、冯超然已然归入二三流了,", "label": {"name": {"吴湖帆": [[2, 4]], "吴待秋": [[10, 12]], "吴子深": [[14, 16]], "冯超然": [[18, 20]]}}}

{"text": "在豪门被多线作战拖累时,正是他们悄悄追赶上来的大好时机。重新找回全队的凝聚力是拉科赢球的资本。", "label": {"organization": {"拉科": [[39, 40]]}}}

train.json contains 10,748 records and dev.json contains 1,343 records; the latter can be used as the validation set.
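In the samples above, each span is a pair of character offsets `[start, end]` into `text`, with `end` inclusive. The sketch below checks that reading against one of the sample records; `check_spans` is a hypothetical helper name.

```python
import json


def check_spans(record):
    """Return True if every [start, end] span (end inclusive) matches its entity string."""
    text = record["text"]
    for entities in record["label"].values():
        for entity, spans in entities.items():
            for start, end in spans:
                if text[start:end + 1] != entity:
                    return False
    return True


line = ('{"text": "生生不息CSOL生化狂潮让你填弹狂扫", '
        '"label": {"game": {"CSOL": [[4, 7]]}}}')
record = json.loads(line)
print(check_spans(record))
```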

This experiment does not need the model to output entity positions, so the dataset is converted to a simpler format:

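import json
import os


def trans(file_path, save_path):
    """Convert CLUENER records to {"text": ..., "label": {category: [entities]}}."""
    # Create the output directory if it does not exist yet
    os.makedirs(os.path.dirname(save_path) or ".", exist_ok=True)
    # Open in "w" mode so that re-running the script does not append duplicates
    with open(save_path, "w", encoding="utf-8") as w, \
            open(file_path, "r", encoding="utf-8") as r:
        for line in r:
            if not line.strip():
                continue  # skip blank lines
            record = json.loads(line)
            # Keep only the entity strings per category and drop the position spans
            trans_label = {key: list(items.keys()) for key, items in record["label"].items()}
            converted = {
                "text": record["text"],
                "label": trans_label
            }
            w.write(json.dumps(converted, ensure_ascii=False) + "\n")


if __name__ == '__main__':
    trans("ner_data_origin/train.json", "ner_data/train.json")
    trans("ner_data_origin/dev.json", "ner_data/val.json")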

Examples of the converted format:

{"text": "彭小军认为,国内银行现在走的是台湾的发卡模式,先通过跑马圈地再在圈的地里面选择客户,", "label": {"address": ["台湾"], "name": ["彭小军"]}}

{"text": "温格的球队终于又踢了一场经典的比赛,2比1战胜曼联之后枪手仍然留在了夺冠集团之内,", "label": {"organization": ["曼联"], "name": ["温格"]}}

{"text": "突袭黑暗雅典娜》中Riddick发现之前抓住他的赏金猎人Johns,", "label": {"game": ["突袭黑暗雅典娜》"], "name": ["Riddick", "Johns"]}}

{"text": "郑阿姨就赶到文汇路排队拿钱,希望能将缴纳的一万余元学费拿回来,顺便找校方或者教委要个说法。", "label": {"address": ["文汇路"]}}

{"text": "我想站在雪山脚下你会被那巍峨的雪山所震撼,但你一定要在自己身体条件允许的情况下坚持走到牛奶海、", "label": {"scene": ["牛奶海", "雪山"]}}

{"text": "吴三桂演义》小说的想像,说是为牛金星所毒杀。……在小说中加插一些历史背景,", "label": {"book": ["吴三桂演义》"], "name": ["牛金星"]}}

{"text": "看来各支一二流的国家队也开始走出欧洲杯后低迷,从本期对阵情况看,似乎冷门度也不太高,你认为呢?", "label": {"organization": ["欧洲杯"]}}

{"text": "就天涯网推出彩票服务频道是否是业内人士所谓的打政策“擦边球”,记者近日对此事求证彩票监管部门。", "label": {"organization": ["彩票监管部门"], "company": ["天涯网"], "position": ["记者"]}}

{"text": "市场仍存在对网络销售形式的需求,网络购彩前景如何?为此此我们采访业内专家程阳先生。", "label": {"name": ["程阳"], "position": ["专家"]}}

{"text": "组委会对中国区预选赛进行了抽签分组,并且对本次抽签进行了全程直播。", "label": {"government": ["组委会"]}}
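The conversion step can also be expressed on a single in-memory record, which is convenient for testing. This is a minimal sketch with `convert_record` as an illustrative name; the input is one of the original samples shown earlier.

```python
import json


def convert_record(record):
    """Drop the position spans and keep only the entity strings per category."""
    return {
        "text": record["text"],
        "label": {category: list(entities)
                  for category, entities in record["label"].items()},
    }


original = {
    "text": "那不勒斯vs锡耶纳以及桑普vs热那亚之上呢?",
    "label": {"organization": {"那不勒斯": [[0, 3]], "锡耶纳": [[6, 8]],
                               "桑普": [[11, 12]], "热那亚": [[15, 17]]}},
}
print(json.dumps(convert_record(original), ensure_ascii=False))
```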

The token distribution of the full train.json dataset is computed as follows:

import json

import matplotlib.pyplot as plt
import numpy as np
from transformers import AutoTokenizer

# SimHei is only needed if the plot labels contain Chinese characters
plt.rcParams['font.sans-serif'] = ['SimHei']


def get_token_distribution(file_path, tokenizer):
    """Return (min, max, mean) token counts for the inputs and for the outputs."""
    input_num_tokens, output_num_tokens = [], []
    with open(file_path, "r", encoding="utf-8") as r:
        for line in r:
            line = json.loads(line)
            text = line['text']
            # Serialize the label dict the same way it is used as the training target
            label = json.dumps(line['label'], ensure_ascii=False)
            input_num_tokens.append(len(tokenizer(text).input_ids))
            output_num_tokens.append(len(tokenizer(label).input_ids))
    return (min(input_num_tokens), max(input_num_tokens), np.mean(input_num_tokens),
            min(output_num_tokens), max(output_num_tokens), np.mean(output_num_tokens))


def main():
    model_path = "model/Qwen2.5-0.5B-Instruct"
    train_data_path = "ner_data/train.json"
    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    i_min, i_max, i_avg, o_min, o_max, o_avg = get_token_distribution(train_data_path, tokenizer)
    print(i_min, i_max, i_avg, o_min, o_max, o_avg)

    plt.figure(figsize=(8, 6))
    bars = plt.bar([
        "input_min_token",
        "input_max_token",
        "input_avg_token",
        "output_min_token",
        "output_max_token",
        "output_avg_token",
    ], [
        i_min, i_max, i_avg, o_min, o_max, o_avg
    ])
    plt.title('Token distribution of the training set')
    plt.ylabel('Count')
    for bar in bars:
        yval = bar.get_height()
        # Annotate each bar with its value, truncated to an integer
        plt.text(bar.get_x() + bar.get_width() / 2, yval, int(yval), va='bottom')
    plt.show()


if __name__ == '__main__':
    main()

The longest input is 50 tokens and the longest output is 69 tokens.
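These counts cover only the raw text and the serialized label. During training and inference the text is additionally wrapped in the chat template together with the system prompt, so the tokenized prompt is longer than the raw text alone. The sketch below hand-builds a ChatML-style prompt to make the extra material visible. The layout is an assumption based on the ChatML format used by Qwen chat models; the authoritative string is whatever `tokenizer.apply_chat_template` returns. `build_chatml_prompt` is a hypothetical helper, and the system prompt is the one used in this article's training code.

```python
SYSTEM_PROMPT = "你的任务是做Ner任务提取, 根据用户输入提取出完整的实体信息, 并以JSON格式输出。"


def build_chatml_prompt(system, user):
    """Assumed ChatML layout: system turn, user turn, then an open assistant turn."""
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{user}<|im_end|>\n"
        f"<|im_start|>assistant\n"
    )


text = "证券时报记者肖渔"
prompt = build_chatml_prompt(SYSTEM_PROMPT, text)
print(prompt)
# Number of characters added around the raw text by the wrapper and the system prompt
print(len(prompt) - len(text))
```

Measuring token lengths on the full prompt, rather than on the raw text, gives a safer value for the source-length limit.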

II. Fine-Tuning

Parse the data and build the Dataset:

ner_dataset.py

# -*- coding: utf-8 -*-
import json

import torch
from torch.utils.data import Dataset


class NerDataset(Dataset):
    def __init__(self, data_path, tokenizer, max_source_length, max_target_length) -> None:
        super().__init__()
        self.tokenizer = tokenizer
        # max_source_length must cover the whole chat-formatted prompt
        # (chat template + system prompt + user text), not just the raw text
        self.max_source_length = max_source_length
        self.max_target_length = max_target_length
        self.max_seq_length = self.max_source_length + self.max_target_length
        self.data = []
        if data_path:
            with open(data_path, "r", encoding='utf-8') as f:
                for line in f:
                    # Lines keep their trailing newline, so strip before testing for emptiness
                    if not line.strip():
                        continue
                    json_line = json.loads(line)
                    text = json_line["text"]
                    label = json.dumps(json_line["label"], ensure_ascii=False)
                    self.data.append({
                        "text": text,
                        "label": label
                    })
        print("data loaded, size:", len(self.data))

    def preprocess(self, text, label):
        messages = [
            # System prompt, kept in Chinese to match the data. In English: "Your task is NER
            # extraction: extract the complete entity information from the user input and output it as JSON."
            {"role": "system",
             "content": "你的任务是做Ner任务提取, 根据用户输入提取出完整的实体信息, 并以JSON格式输出。"},
            {"role": "user", "content": text}
        ]
        prompt = self.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
        # Tokenize without padding so that no pad tokens sit between the prompt and the answer
        instruction = self.tokenizer(prompt, add_special_tokens=False,
                                     max_length=self.max_source_length, truncation=True)
        response = self.tokenizer(label, add_special_tokens=False,
                                  max_length=self.max_target_length, truncation=True)
        # The pad token doubles as the end-of-answer marker
        end_id = self.tokenizer.pad_token_id
        input_ids = instruction["input_ids"] + response["input_ids"] + [end_id]
        attention_mask = [1] * len(input_ids)
        # -100 excludes the prompt from the loss; only the answer and the end marker are learned
        labels = [-100] * len(instruction["input_ids"]) + response["input_ids"] + [end_id]
        # Right-pad every sample to the same length so the default collate function can batch them
        pad_len = self.max_seq_length + 1 - len(input_ids)
        input_ids += [self.tokenizer.pad_token_id] * pad_len
        attention_mask += [0] * pad_len
        labels += [-100] * pad_len
        return input_ids, attention_mask, labels

    def __getitem__(self, index):
        item_data = self.data[index]
        input_ids, attention_mask, labels = self.preprocess(**item_data)
        return {
            "input_ids": torch.tensor(input_ids, dtype=torch.long),
            "attention_mask": torch.tensor(attention_mask, dtype=torch.long),
            "labels": torch.tensor(labels, dtype=torch.long)
        }

    def __len__(self):
        return len(self.data)
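The key idea in `preprocess` is that the loss should only be computed on the answer tokens: prompt positions get the label -100, which the cross-entropy loss used by Hugging Face causal language models ignores. The sketch below shows one common way to lay this out with plain Python lists, so it runs without a tokenizer. The token ids are made up, and `build_example` is a hypothetical helper rather than the code of `NerDataset`.

```python
IGNORE_INDEX = -100  # label value skipped by the cross-entropy loss


def build_example(prompt_ids, answer_ids, end_id, pad_id, max_length):
    """Concatenate prompt and answer, mask the prompt in the labels, then right-pad."""
    input_ids = prompt_ids + answer_ids + [end_id]
    if len(input_ids) > max_length:
        raise ValueError("sequence longer than max_length")
    attention_mask = [1] * len(input_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + answer_ids + [end_id]
    pad_len = max_length - len(input_ids)
    input_ids = input_ids + [pad_id] * pad_len
    attention_mask = attention_mask + [0] * pad_len
    labels = labels + [IGNORE_INDEX] * pad_len
    return input_ids, attention_mask, labels


ids, mask, labels = build_example(
    prompt_ids=[11, 12, 13], answer_ids=[21, 22], end_id=2, pad_id=0, max_length=8)
print(ids)     # [11, 12, 13, 21, 22, 2, 0, 0]
print(mask)    # [1, 1, 1, 1, 1, 1, 0, 0]
print(labels)  # [-100, -100, -100, 21, 22, 2, -100, -100]
```

Padding positions are masked in both `attention_mask` and `labels`, so they influence neither the forward pass nor the loss.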

Fine-tuning. Full-parameter fine-tuning is used here:

# -*- coding: utf-8 -*-
import sys
import time

import torch
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from tqdm import tqdm
from transformers import AutoModelForCausalLM, AutoTokenizer

from ner_dataset import NerDataset


def train_model(model, train_loader, val_loader, optimizer,
                device, num_epochs, model_output_dir, writer):
    batch_step = 0
    for epoch in range(num_epochs):
        time1 = time.time()
        model.train()
        for index, data in enumerate(tqdm(train_loader, file=sys.stdout, desc="Train Epoch: " + str(epoch))):
            input_ids = data['input_ids'].to(device, dtype=torch.long)
            attention_mask = data['attention_mask'].to(device, dtype=torch.long)
            labels = data['labels'].to(device, dtype=torch.long)

            optimizer.zero_grad()
            outputs = model(
                input_ids=input_ids,
                attention_mask=attention_mask,
                labels=labels,
            )
            loss = outputs.loss
            loss.backward()
            optimizer.step()
            # Log the scalar value rather than the tensor itself
            writer.add_scalar('Loss/train', loss.item(), batch_step)
            batch_step += 1
            # Print the loss every 100 steps
            if index % 100 == 0 or index == len(train_loader) - 1:
                time2 = time.time()
                tqdm.write(
                    f"{index}, epoch: {epoch} -loss: {loss.item()} ; "
                    f"each step's time spent: {(time2 - time1) / (index + 1)}")
        # Validation
        model.eval()
        val_loss = validate_model(model, device, val_loader)
        writer.add_scalar('Loss/val', val_loss, epoch)
        print(f"val loss: {val_loss} , epoch: {epoch}")
        # Each epoch overwrites the previous checkpoint in the same directory
        print("Save Model To ", model_output_dir)
        model.save_pretrained(model_output_dir)


def validate_model(model, device, val_loader):
    running_loss = 0.0
    with torch.no_grad():
        for _, data in enumerate(tqdm(val_loader, file=sys.stdout, desc="Validation Data")):
            input_ids = data['input_ids'].to(device, dtype=torch.long)
            attention_mask = data['attention_mask'].to(device, dtype=torch.long)
            labels = data['labels'].to(device, dtype=torch.long)
            outputs = model(
                input_ids=input_ids,
                attention_mask=attention_mask,
                labels=labels,
            )
            loss = outputs.loss
            running_loss += loss.item()
    # Mean loss over validation batches
    return running_loss / len(val_loader)


def main():
    # Base model location
    model_name = "model/Qwen2.5-0.5B-Instruct"
    # Training set
    train_json_path = "ner_data/train.json"
    # Validation set
    val_json_path = "ner_data/val.json"
    # The source budget must fit the whole prompt (chat template + system prompt + text),
    # so it is set well above the 50-token maximum of the raw text
    max_source_length = 128
    max_target_length = 140
    epochs = 30
    batch_size = 15
    lr = 1e-4
    model_output_dir = "output_ner"
    logs_dir = "logs"
    # Device
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    # Load the tokenizer and the model
    tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(model_name, trust_remote_code=True)

    print("Start Load Train Data...")
    train_params = {
        "batch_size": batch_size,
        "shuffle": True,
        "num_workers": 4,
    }
    training_set = NerDataset(train_json_path, tokenizer, max_source_length, max_target_length)
    training_loader = DataLoader(training_set, **train_params)

    print("Start Load Validation Data...")
    val_params = {
        "batch_size": batch_size,
        "shuffle": False,
        "num_workers": 4,
    }
    val_set = NerDataset(val_json_path, tokenizer, max_source_length, max_target_length)
    val_loader = DataLoader(val_set, **val_params)

    # Logging
    writer = SummaryWriter(logs_dir)
    # Optimizer
    optimizer = torch.optim.AdamW(params=model.parameters(), lr=lr)
    model = model.to(device)
    # Start training
    print("Start Training...")
    train_model(
        model=model,
        train_loader=training_loader,
        val_loader=val_loader,
        optimizer=optimizer,
        device=device,
        num_epochs=epochs,
        model_output_dir=model_output_dir,
        writer=writer
    )
    writer.close()


if __name__ == '__main__':
    main()
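When `labels` is passed to the model, the returned `outputs.loss` is the average next-token cross-entropy over positions whose label is not -100: the prediction at position t is scored against the label at position t + 1. The sketch below reproduces that computation in plain Python on toy probabilities. It illustrates the idea only and is not the library's implementation; `masked_next_token_loss` and the toy numbers are assumptions.

```python
import math

IGNORE_INDEX = -100


def masked_next_token_loss(probs, labels):
    """probs[t] is the predicted distribution for token t + 1; -100 labels are skipped."""
    total, count = 0.0, 0
    # Shift: the prediction at position t is compared with the label at position t + 1
    for t in range(len(labels) - 1):
        target = labels[t + 1]
        if target == IGNORE_INDEX:
            continue
        total += -math.log(probs[t][target])
        count += 1
    return total / count if count else 0.0


# Vocabulary of 3 tokens; 4 positions, of which the first two belong to the prompt
probs = [
    [0.2, 0.5, 0.3],    # position 0 predicts position 1 (ignored: prompt)
    [0.1, 0.1, 0.8],    # position 1 predicts position 2 (target token 2)
    [0.25, 0.5, 0.25],  # position 2 predicts position 3 (target token 1)
    [0.3, 0.3, 0.4],    # the last position has nothing left to predict
]
labels = [IGNORE_INDEX, IGNORE_INDEX, 2, 1]
print(masked_next_token_loss(probs, labels))
```

Only the two answer positions contribute here, so the result is the mean of -log(0.8) and -log(0.5).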

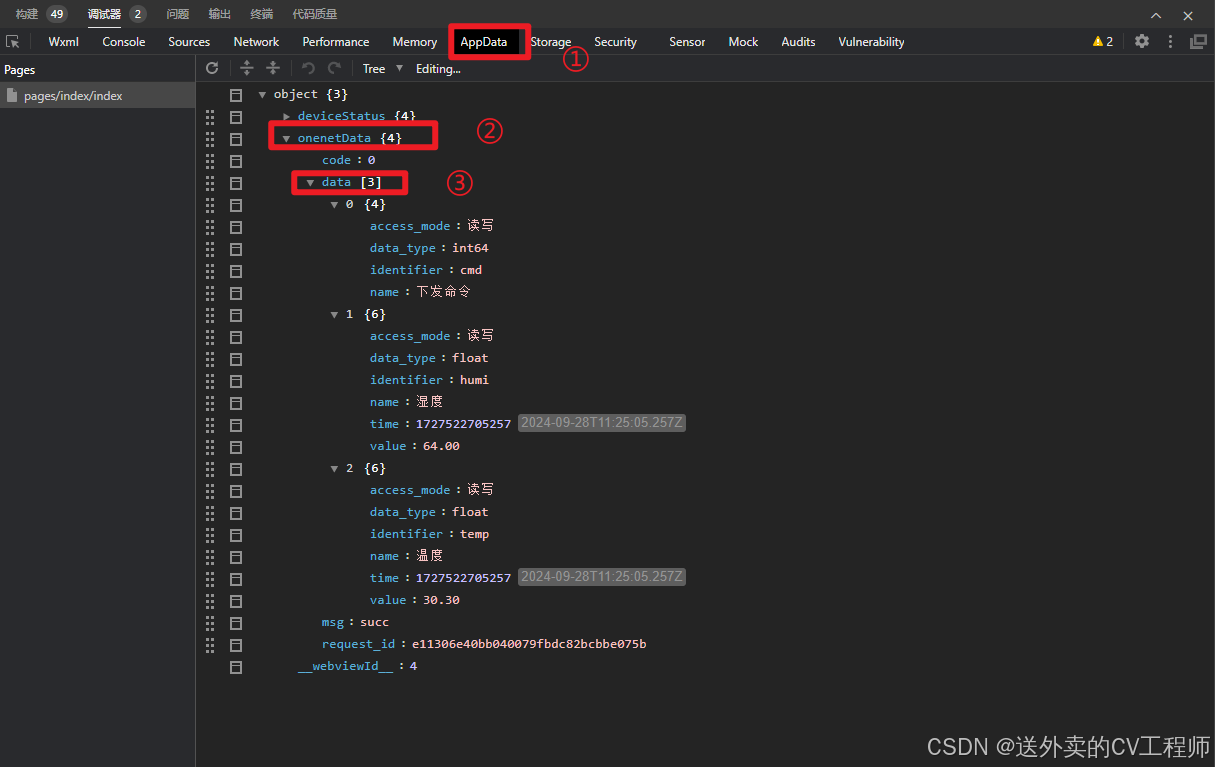

训练过程:

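After training finishes, you can inspect the loss curves in TensorBoard:

tensorboard --logdir=logs --bind_all

Then open http://<ip>:6006/ in a browser, where <ip> is the address of the training machine.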

III. Model Testing

# -*- coding: utf-8 -*-
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer


def main():
    # The tokenizer comes from the base model; the weights come from the fine-tuned output
    model_path = "model/Qwen2.5-0.5B-Instruct"
    train_model_path = "output_ner"
    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(train_model_path, trust_remote_code=True)
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model.to(device)
    model.eval()

    test_case = [
        "三星WCG2011北京赛区魔兽争霸3最终名次",
        "新华网孟买3月10日电(记者聂云)印度国防部10日说,印度政府当天批准",
        "证券时报记者肖渔"
    ]
    for case in test_case:
        messages = [
            # Same system prompt as in training
            {"role": "system",
             "content": "你的任务是做Ner任务提取, 根据用户输入提取出完整的实体信息, 并以JSON格式输出。"},
            {"role": "user", "content": case}
        ]
        text = tokenizer.apply_chat_template(
            messages,
            tokenize=False,
            add_generation_prompt=True
        )
        model_inputs = tokenizer([text], return_tensors="pt").to(device)
        # Pass the attention mask together with the input ids;
        # top_k=1 keeps only the most likely token, so decoding is effectively greedy
        generated_ids = model.generate(
            **model_inputs,
            max_new_tokens=140,
            top_k=1
        )
        # Strip the prompt tokens so that only the newly generated answer is decoded
        generated_ids = [
            output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
        ]
        response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
        print("----------------------------------")
        print(f"input: {case}\nresult: {response}")


if __name__ == '__main__':
    main()
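The script prints the raw generations. To quantify quality on the validation set, the JSON output can be parsed and compared with the gold labels at the entity level. The following is a minimal sketch of one possible evaluation and is not part of the original workflow: `parse_prediction` and `entity_f1` are hypothetical helpers, and malformed output is simply treated as predicting no entities.

```python
import json


def parse_prediction(response):
    """Parse model output into a set of (category, entity) pairs; malformed output yields an empty set."""
    try:
        data = json.loads(response)
    except json.JSONDecodeError:
        return set()
    if not isinstance(data, dict):
        return set()
    pairs = set()
    for category, entities in data.items():
        if isinstance(entities, list):
            pairs.update((category, e) for e in entities if isinstance(e, str))
    return pairs


def entity_f1(predictions, golds):
    """Micro-averaged precision, recall and F1 over (category, entity) pairs."""
    tp = fp = fn = 0
    for pred, gold in zip(predictions, golds):
        tp += len(pred & gold)
        fp += len(pred - gold)
        fn += len(gold - pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [{("name", "温格"), ("organization", "曼联")}]
pred = [parse_prediction('{"name": ["温格"], "organization": ["枪手"]}')]
print(entity_f1(pred, gold))  # (0.5, 0.5, 0.5)
```

In this toy case one of the two predicted entities is correct and one of the two gold entities is found, so precision, recall and F1 are all 0.5.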