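Bayesian methods are fundamental and important, and earlier posts have covered them on and off. If you are interested, see:

"The Beauty of Mathematics, Extra: The Ordinary yet Magical Bayesian Method"

"Bayesian Deep Learning: Variational Inference with PyMC3"

"Bayesian Optimization in Practice with bayesian-optimization, a Handy Tool for Model Tuning"

In the last of these posts we implemented Bayesian optimization with the bayesian-optimization library. This post applies Bayesian optimization again, this time with the skopt module (scikit-optimize).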

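Consider the objective function f:

    import numpy as np

    noise_level = 0.1

    def f(x, noise_level=noise_level):
        # Noisy 1-D objective; x is a one-element sequence
        return np.sin(5 * x[0]) * (1 - np.tanh(x[0] ** 2)) \
            + np.random.randn() * noise_level

We can first plot the outline of f, as follows: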

    import matplotlib.pyplot as plt

    # Evaluate the noise-free function on a dense grid
    x = np.linspace(-2, 2, 400).reshape(-1, 1)
    fx = [f(x_i, noise_level=0.0) for x_i in x]
    plt.plot(x, fx, "r--", label="True (unknown)")
    # Shade the band of +/- 1.96 * noise_level around the true function
    plt.fill(np.concatenate([x, x[::-1]]),
             np.concatenate(([fx_i - 1.9600 * noise_level for fx_i in fx],
                             [fx_i + 1.9600 * noise_level for fx_i in fx[::-1]])),
             alpha=.45, fc="g", ec="None")
    plt.legend()
    plt.title("Function Contours")
    plt.show()

The result is shown below:

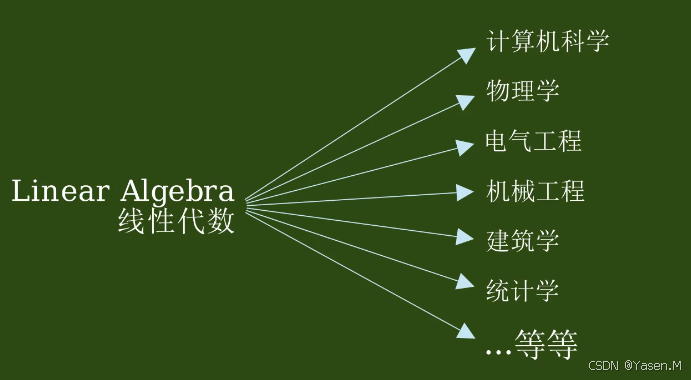

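Bayesian optimization is built on Gaussian processes. When every function evaluation is expensive, for example when the parameters are the hyperparameters of a neural network and one evaluation is the mean 10-fold cross-validation score, optimizing the hyperparameters with a standard optimization routine would take forever!

The idea is to approximate the function with a Gaussian process (GP). In other words, the function values are assumed to follow a multivariate Gaussian distribution, and the covariance of the function values is given by a GP kernel evaluated between the parameters. The next parameter to evaluate is then chosen by an acquisition function over the Gaussian prior, which is much cheaper to evaluate than the objective itself.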
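As a rough illustration of what "covariance given by the GP kernel" means, the sketch below evaluates a Matern kernel with `nu=2.5` and `length_scale=1`, the kernel family that shows up in the `models` output of this post. It is a hand-written closed form using only the standard library, not skopt's or scikit-learn's implementation, and the helper names `matern52` and `covariance_matrix` are made up for this example.

```python
import math

def matern52(x1, x2, length_scale=1.0):
    """Matern kernel with nu = 2.5 for two scalar inputs (illustrative helper)."""
    r = abs(x1 - x2) / length_scale
    s = math.sqrt(5.0) * r
    # k(r) = (1 + sqrt(5) r + 5 r^2 / 3) * exp(-sqrt(5) r)
    return (1.0 + s + s * s / 3.0) * math.exp(-s)

def covariance_matrix(points, length_scale=1.0):
    """Prior covariance between the function values at the given points."""
    return [[matern52(a, b, length_scale) for b in points] for a in points]

# Two neighbouring points and one far away (values chosen only for illustration)
points = [-0.4, -0.3, 1.5]
K = covariance_matrix(points)
for row in K:
    print(["%.3f" % v for v in row])
```

Under this prior, the function values at the two neighbouring points are strongly correlated, while the distant point is only weakly correlated with them. That is why one observation tells the GP a lot about nearby parameters and little about far-away ones.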

    from skopt import gp_minimize

    res = gp_minimize(f,                  # the function to minimize
                      [(-2.0, 2.0)],      # the bounds on each dimension of x
                      acq_func="EI",      # the acquisition function
                      n_calls=15,         # the number of evaluations of f
                      n_random_starts=5,  # the number of random initialization points
                      noise=0.1**2,       # the noise level (optional)
                      random_state=1234)  # the random seed
    print(res)

The output of the run is shown below:

    fun: -1.0079192525206238
    func_vals: array([ 0.03716044,  0.00673852,  0.63515442, -0.16042062,  0.10695907,
           -0.24436726, -0.58630532,  0.05238726, -1.00791925, -0.98466748,
           -0.86259916,  0.18102445, -0.10782771,  0.00815673, -0.79756401])
    models: [GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5) + WhiteKernel(noise_level=0.01),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775)]
    random_state: RandomState(MT19937) at 0x1BC23E3DDB0
    space: Space([Real(low=-2.0, high=2.0, prior='uniform', transform='normalize')])
    specs: {'args': {'model_queue_size': None, 'n_jobs': 1, 'kappa': 1.96, 'xi': 0.01, 'n_restarts_optimizer': 5, 'n_points': 10000, 'callback': None, 'verbose': False, 'random_state': RandomState(MT19937) at 0x1BC23E3DDB0, 'y0': None, 'x0': None, 'acq_optimizer': 'auto', 'acq_func': 'EI', 'initial_point_generator': 'random', 'n_initial_points': 10, 'n_random_starts': 5, 'n_calls': 15, 'base_estimator': GaussianProcessRegressor(kernel=1**2 * Matern(length_scale=1, nu=2.5),
                 n_restarts_optimizer=2, noise=0.010000000000000002,
                 normalize_y=True, random_state=822569775), 'dimensions': Space([Real(low=-2.0, high=2.0, prior='uniform', transform='normalize')]), 'func': <function f at 0x000001BBC7401E18>}, 'function': 'base_minimize'}
    x: [-0.3551841563751006]
    x_iters: [[-0.009345334109402526], [1.2713537644662787], [0.4484475787090836], [1.0854396754496047], [1.4426790855107496], [0.9579248468740365], [-0.4515808656827538], [-0.6859481043867404], [-0.3551841563751006], [-0.29315378760492994], [-0.3209941584981484], [-2.0], [2.0], [-1.3373741960111043], [-0.24784229191660678]]

The convergence process can be visualized as well:

    from skopt.plots import plot_convergence

    plot_convergence(res)

The result is shown below:
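The curve that `plot_convergence` draws is the best objective value found after each call, that is, the running minimum of `func_vals`. As a minimal sketch, it can be recomputed by hand from the numbers in the printed result. The lists below are copied from that output (`func_vals` at the printed precision), and the variable names `best_so_far` and `best_index` are only illustrative.

```python
from itertools import accumulate

# Values copied from the printed result of this post
func_vals = [0.03716044, 0.00673852, 0.63515442, -0.16042062, 0.10695907,
             -0.24436726, -0.58630532, 0.05238726, -1.00791925, -0.98466748,
             -0.86259916, 0.18102445, -0.10782771, 0.00815673, -0.79756401]
x_iters = [-0.009345334109402526, 1.2713537644662787, 0.4484475787090836,
           1.0854396754496047, 1.4426790855107496, 0.9579248468740365,
           -0.4515808656827538, -0.6859481043867404, -0.3551841563751006,
           -0.29315378760492994, -0.3209941584981484, -2.0, 2.0,
           -1.3373741960111043, -0.24784229191660678]

# Running minimum: best objective value seen after each call
best_so_far = list(accumulate(func_vals, min))

# Index of the call that produced the overall minimum
best_index = func_vals.index(min(func_vals))

for n_call, best in enumerate(best_so_far, start=1):
    print(n_call, best)
print("best call:", best_index + 1, "x =", x_iters[best_index])
```

The running minimum stops improving at the 9th call, where x is about -0.355. This matches the `fun` and `x` fields of the printed result.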

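Next we can inspect the run further by plotting: 1. the approximation of the original function by the fitted GP model; 2. the acquisition values that determine the next point to query.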
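To make "acquisition value" concrete, here is a minimal sketch of Expected Improvement (EI), the acquisition function selected by `acq_func="EI"`, written for minimization. It assumes the GP posterior at a candidate point is summarized by a mean `mu` and a standard deviation `sigma`. The function names are hypothetical, and the default `xi=0.01` simply mirrors the `xi` entry in the printed `specs`. This is the textbook closed form, not skopt's internal code.

```python
import math

def normal_pdf(z):
    """Density of the standard normal distribution."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(mu, sigma, y_best, xi=0.01):
    """EI for minimization at a point with posterior mean mu and std sigma.

    y_best is the lowest objective value observed so far; xi is a small
    margin that an improvement has to exceed.
    """
    if sigma <= 0.0:
        # No uncertainty left at this point, so no improvement is expected
        return 0.0
    improvement = y_best - mu - xi
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)

y_best = -1.0  # illustrative incumbent value
print(expected_improvement(mu=-1.0, sigma=0.1, y_best=y_best))  # near the incumbent, low uncertainty
print(expected_improvement(mu=-1.0, sigma=0.5, y_best=y_best))  # same mean, higher uncertainty
print(expected_improvement(mu=-1.2, sigma=0.1, y_best=y_best))  # lower predicted mean
```

EI grows when the predicted mean is lower (exploitation) and when the uncertainty is higher (exploration). The next query point is the candidate with the largest EI.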

Now plot the five iterations that follow the five random points:

    from skopt.plots import plot_gaussian_process

    def f_wo_noise(x):
        # Noise-free version of the objective, used to draw the true function
        return f(x, noise_level=0)

    for n_iter in range(5):
        # Plot true function.
        plt.subplot(5, 2, 2*n_iter+1)
        show_legend = (n_iter == 0)  # only the first row gets a legend
        ax = plot_gaussian_process(res, n_calls=n_iter,
                                   objective=f_wo_noise,
                                   noise_level=noise_level,
                                   show_legend=show_legend, show_title=False,
                                   show_next_point=False, show_acq_func=False)
        ax.set_ylabel("")
        ax.set_xlabel("")

        # Plot EI(x)
        plt.subplot(5, 2, 2*n_iter+2)
        ax = plot_gaussian_process(res, n_calls=n_iter,
                                   show_legend=show_legend, show_title=False,
                                   show_mu=False, show_acq_func=True,
                                   show_observations=False,
                                   show_next_point=True)
        ax.set_ylabel("")
        ax.set_xlabel("")

    plt.show()

The visualization is shown below:

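The first column shows: 1. the true function; 2. the approximation of the original function by the Gaussian process model; 3. how certain the GP is about that approximation.

The second column shows the acquisition function values after each surrogate model is fit. We may end up choosing a local minimum rather than the global minimum, depending on the minimizer used to minimize the acquisition function. Close to points that have already been evaluated, the variance drops to zero. Finally, as the number of points increases, the GP model gets closer to the actual function. The last few points cluster around the minimum because the GP gains nothing more from further exploration: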