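This article covers what LCEL is and how it works.

LCEL (LangChain Expression Language) abstracts a few interesting Python concepts into a format that provides a "minimalist" code layer for building chains of LangChain components.

LCEL comes with strong support for:

- Rapid development of chains

- Advanced features such as streaming, async, and parallel execution

- Easy integration with LangSmith and LangServe

In this article, we will learn what LCEL is, how it works, and the essentials of LCEL chains, pipes, and Runnables.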

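LCEL Syntax

We start by installing all the prerequisite libraries needed for this walkthrough. Note that you can also follow along in our Jupyter notebook.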

!pip install -qU \
    langchain==0.0.345 \
    anthropic==0.7.7 \
    cohere==4.37 \
    docarray==0.39.1

To understand the LCEL syntax, let's first build a simple chain using LangChain's traditional syntax. We will initialize a simple LLMChain using Claude 2.1.

from langchain.chat_models import ChatAnthropic
from langchain.prompts import ChatPromptTemplate
from langchain.schema.output_parser import StrOutputParser

ANTHROPIC_API_KEY = "<<YOUR_ANTHROPIC_API_KEY>>"

prompt = ChatPromptTemplate.from_template(
    "Give me small report about {topic}"
)
model = ChatAnthropic(
    model="claude-2.1",
    max_tokens_to_sample=512,
    anthropic_api_key=ANTHROPIC_API_KEY
)  # swap Anthropic for OpenAI with `ChatOpenAI` and `openai_api_key`
output_parser = StrOutputParser()

Using this chain, we can generate a small report about a particular topic (for example, "Artificial Intelligence") by calling the chain.run method on an LLMChain.

from langchain.chains import LLMChain

chain = LLMChain(
    prompt=prompt,
    llm=model,
    output_parser=output_parser
)

# and run
out = chain.run(topic="Artificial Intelligence")
print(out)

Here is a brief report on some key aspects of artificial intelligence (AI):

Introduction

- AI refers to computer systems that are designed to perform tasks that would otherwise require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

Major AI Techniques

- Machine learning uses statistical techniques and neural networks to enable systems to improve at tasks with experience. Common techniques include deep learning, reinforcement learning, and supervised learning.

- Computer vision focuses on extracting information from digital images and videos. It powers facial recognition, self-driving vehicles, and other visual AI tasks.

- Natural language processing enables computers to understand, interpret, and generate human languages. Key applications include machine translation, search engines, and voice assistants like Siri.

Current Capabilities

- AI programs have matched or exceeded human capabilities in narrow or well-defined tasks like playing chess and Go, identifying objects in images, and transcribing speech.

- However, general intelligence comparable to humans across different areas remains an unsolved challenge, often referred to as artificial general intelligence (AGI).

Future Directions

- Ongoing AI research is focused on developing stronger machine learning techniques, achieving explainability and transparency in AI decision-making, and addressing potential ethical issues like bias.

- If achieved, AGI could have significant societal and economic impacts, potentially enhancing and automating intellectual work. However safety, control and alignment with human values remain active research priorities.

I hope this brief overview of some major aspects of the current state of AI technology and research provides useful context and information. Let me know if you would like me to elaborate on or clarify anything further.

With LCEL, we create the chain differently, using the pipe operator (|) rather than Chains:

lcel_chain = prompt | model | output_parser

# and run
out = lcel_chain.invoke({"topic": "Artificial Intelligence"})
print(out)

Here is a brief report on artificial intelligence:

Artificial intelligence (AI) refers to computer systems that can perform human-like cognitive functions such as learning, reasoning, and self-correction. AI has advanced significantly in recent years due to increases in computing power and the availability of large datasets and open source machine learning libraries.

Some key highlights about the current state of AI:

- Applications of AI - AI is being utilized in a wide variety of industries including finance, healthcare, transportation, criminal justice, and social media platforms. Use cases include personalized recommendations, predictive analytics, automated customer service agents, medical diagnosis, self-driving vehicles, and content moderation.

- Machine Learning - The ability for AI systems to learn from data without explicit programming is driving much of the recent progress. Machine learning methods like deep learning neural networks have achieved new breakthroughs in areas like computer vision, speech recognition, and natural language processing.

- Limitations - While AI has made great strides, current systems still have major limitations compared to human intelligence including lack of general world knowledge, difficulties dealing with novelty, bias issues from flawed datasets, and lack of skills for complex reasoning, empathy, creativity, etc. Ensuring the safety and controllability of AI systems remains an ongoing challenge.

- Future Outlook - Experts predict key areas for AI advancement to include gaining contextual understanding and reasoning skills, achieving more human-like communication abilities, algorithmic fairness and transparency, as well as advances in specialized fields like robotics, autonomous vehicles, and human-AI collaboration. Careful management of risks posed by more advanced AI systems remains crucial. Global competition for AI talent and computing resources continues to intensify.

That covers some of the key trends, strengths and limitations, and future trajectories for artificial intelligence technology based on the current landscape. Please let me know if you would like me to elaborate on any part of this overview.

The syntax here is not typical for Python, but it uses nothing beyond native Python features. The pipe operator (|) simply takes the output from the left and feeds it into the function on the right.
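To make that left-to-right data flow concrete, the sketch below uses three stand-in functions and shows the nested-call shape that a three-step pipe corresponds to conceptually. The names fake_prompt, fake_model, and fake_parser are hypothetical and invented for this illustration; they are not LangChain objects, and nothing here calls an API.

```python
# Stand-ins for the three chain components; none of these are LangChain classes.
def fake_prompt(inputs: dict) -> str:
    # Fill the template, as the prompt component would.
    return f"Give me small report about {inputs['topic']}"


def fake_model(prompt_text: str) -> dict:
    # Pretend to be a chat model: wrap a canned reply in a message-like dict.
    return {"content": f"[reply to: {prompt_text}]"}


def fake_parser(message: dict) -> str:
    # Pull the plain string out of the message, as an output parser would.
    return message["content"]


# Conceptually, prompt | model | output_parser feeds each output to the next step:
out = fake_parser(fake_model(fake_prompt({"topic": "Artificial Intelligence"})))
print(out)
```

Each step only needs to accept whatever the previous step returns, which is the contract the pipe syntax relies on.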

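How the Pipe Operator Works

To understand what is happening with LCEL and the pipe operator, we will create our own pipe-compatible functions.

When the Python interpreter sees the | operator between two objects (as in a | b), it attempts to pass object b into the __or__ method of object a. That means these patterns are equivalent: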

# object approach
chain = a.__or__(b)
chain("some input")

# pipe approach
chain = a | b
chain("some input")

With that in mind, we can build a Runnable class that takes a function and turns it into one that can be chained with other functions using the pipe operator (|).

class Runnable:
    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        def chained_func(*args, **kwargs):
            # the other func consumes the result of this func
            return other(self.func(*args, **kwargs))
        return Runnable(chained_func)

    def __call__(self, *args, **kwargs):
        return self.func(*args, **kwargs)

Let's put this to work: take the value 3, add 5 (giving 8), then multiply by 2, which gives 16.

def add_five(x):
    return x + 5

def multiply_by_two(x):
    return x * 2

# wrap the functions with Runnable
add_five = Runnable(add_five)
multiply_by_two = Runnable(multiply_by_two)

# run them using the object approach
chain = add_five.__or__(multiply_by_two)
chain(3)  # should return 16

16

Using __or__ directly, we get the correct answer. Now let's try chaining them with the pipe operator:

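chain = add_five | multiply_by_two
chain(3)

16

With either approach we get the correct response. In essence, this is the pipe logic that LCEL uses to chain components together. That is not all there is to LCEL, though; there is more to it.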
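The Runnable class above only works when the left-hand operand is already wrapped. As a sketch of how that restriction could be relaxed, the variant below wraps plain callables on the fly and also defines __ror__, which Python falls back to when the left operand does not implement __or__ for the pair. PipeRunnable is a hypothetical name chosen for this illustration, and the code is not LangChain's implementation.

```python
class PipeRunnable:
    """Hypothetical variant of the article's Runnable; not LangChain's class."""

    def __init__(self, func):
        self.func = func

    @staticmethod
    def _wrap(obj):
        # Accept either an already-wrapped step or a plain callable.
        return obj if isinstance(obj, PipeRunnable) else PipeRunnable(obj)

    def __or__(self, other):
        # self | other: run self first, then hand the result to other.
        other = PipeRunnable._wrap(other)
        return PipeRunnable(lambda *a, **kw: other(self.func(*a, **kw)))

    def __ror__(self, other):
        # other | self, reached when `other` is a plain function without __or__.
        return PipeRunnable._wrap(other) | self

    def __call__(self, *args, **kwargs):
        return self.func(*args, **kwargs)


def add_five(x):
    return x + 5


def multiply_by_two(x):
    return x * 2


wrapped_left = PipeRunnable(add_five) | multiply_by_two    # __or__ wraps the right side
wrapped_right = add_five | PipeRunnable(multiply_by_two)   # falls back to __ror__
three_steps = PipeRunnable(add_five) | multiply_by_two | add_five

print(wrapped_left(3), wrapped_right(3), three_steps(3))
```

Only one operand in each pair needs to be wrapped for the pipe to work, which keeps the calling code short at the cost of a little more logic inside the class.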

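LCEL Deep Dive

Now that we understand what the LCEL syntax is doing under the hood, let's explore it in the context of LangChain and look at some of the additional methods provided to maximize flexibility when working with LCEL.

Runnables

When working with LCEL, we may find that we need to modify the flow of values, or the values themselves, as they are passed between components. For this we use runnables. Let's begin by initializing a couple of simple vector store components.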

from langchain.embeddings import CohereEmbeddings
from langchain.vectorstores import DocArrayInMemorySearch

COHERE_API_KEY = "<<COHERE_API_KEY>>"

embedding = CohereEmbeddings(
    model="embed-english-light-v3.0",
    cohere_api_key=COHERE_API_KEY
)

vecstore_a = DocArrayInMemorySearch.from_texts(
    ["half the info will be here", "James' birthday is the 7th December"],
    embedding=embedding
)
vecstore_b = DocArrayInMemorySearch.from_texts(
    ["and half here", "James was born in 1994"],
    embedding=embedding
)

We initialize two local vector stores and split two essential pieces of information between them. We will see why shortly; for now we only need one of them. Let's try passing a question through a RAG pipeline using vecstore_a.

from langchain_core.runnables import (

RunnableParallel,

RunnablePassthrough

)

retriever_a = vecstore_a.as_retriever()

retriever_b = vecstore_b.as_retriever()

prompt_str = """Answer the question below using the context:

Context: {context}

Question: {question}

Answer: """

prompt = ChatPromptTemplate.from_template(prompt_str)

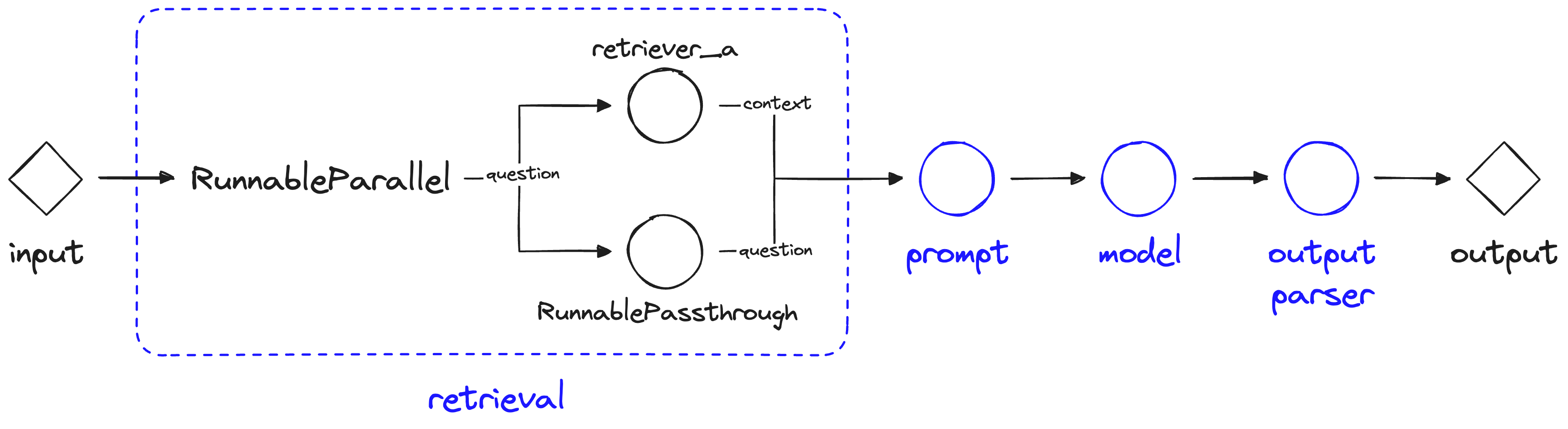

retrieval = RunnableParallel(

{"context": retriever_a, "question": RunnablePassthrough()}

)

chain = retrieval | prompt | model | output_parser我们在这里使用两个新的对象,RunnableParallel和RunnablePasstrhrough。RunnableParalle对象允许我们定义多个值和操作,并将他们全部并行运行。在这里,我们使用chain的输入调用retriever_a,然后通过“context”参数将retriever_a的结果传递给链中的下一个组件。

LCEL流使用RunnableParallel和RunnablePassthrough

RunnablePassthrough对象被用作一种“传递(直通)”机制,他接受当前组件(检索组件)的任何输入,并允许我们通过“question”键将他提供给组件。

out = chain.invoke("when was James born?")
print(out)

Unfortunately I do not have enough context to definitively state when James was born. The only potentially relevant information is "James' birthday is the 7th December", but this does not specify the year he was born. To answer the question of when specifically James was born, I would need more details or context such as his current age or the year associated with his birthday.

Using this information, the model gets close to answering the question, but it does not have enough to go on: it is missing the information we stored in retriever_b. Fortunately, we can set up multiple parallel information streams with the RunnableParallel object.

prompt_str = """Answer the question below using the context:

Context:
{context_a}
{context_b}

Question: {question}

Answer: """
prompt = ChatPromptTemplate.from_template(prompt_str)

retrieval = RunnableParallel(
    {
        "context_a": retriever_a, "context_b": retriever_b,
        "question": RunnablePassthrough()
    }
)

chain = retrieval | prompt | model | output_parser

Here we pass two sets of context to our prompt component via context_a and context_b. This gives the LLM more information to work with (although, oddly, the LLM does not manage to put the two pieces of information together).

out = chain.invoke("when was James born?")
print(out)

Based on the context provided, James was born in 1994. This is stated in the second document with the page content "James was born in 1994". Therefore, the answer to the question "when was James born?" is 1994.

out = chain.invoke("what date exactly was James born?")
print(out)

Unfortunately, the given context does not provide definitive information to answer the question "what date exactly was James born?". The context includes:

- James' birthday is the 7th December (no year specified)

- James was born in 1994

While it states James was born in 1994, there is no additional detail provided about the exact date. The context only specifies that his birthday, referring to the day and month he was born, is December 7th. But without the specific year provided with his birthday, there is not enough information to determine the exact date he was born.

Since an exact date of James' birth is not able to be determined from the given context, I do not have enough information to provide an answer specifying his exact birth date. The context provides his year of birth but does not include the required detail about the day and month in order for me to state his complete exact date of birth.

With this approach, we can run several operations in parallel and build more complex chains fairly easily.
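As a rough mental model of what the retrieval step does, the sketch below fans one input out to several functions on a thread pool and collects the results under their keys. The names run_parallel, passthrough, fake_retriever_a, and fake_retriever_b are hypothetical, and the retrievers return canned strings. This approximates the behaviour described above; it is not LangChain's RunnableParallel.

```python
from concurrent.futures import ThreadPoolExecutor


def passthrough(value):
    # Return the input unchanged, like the "question" branch of the chain.
    return value


def fake_retriever_a(query):
    # Canned stand-in for vecstore_a; a real retriever would run a similarity search.
    return ["James' birthday is the 7th December"]


def fake_retriever_b(query):
    # Canned stand-in for vecstore_b.
    return ["James was born in 1994"]


def run_parallel(steps, value):
    """Call every function in `steps` with the same input and gather a dict."""
    with ThreadPoolExecutor() as pool:
        futures = {key: pool.submit(func, value) for key, func in steps.items()}
        return {key: future.result() for key, future in futures.items()}


prompt_inputs = run_parallel(
    {
        "context_a": fake_retriever_a,
        "context_b": fake_retriever_b,
        "question": passthrough,
    },
    "when was James born?",
)
print(prompt_inputs)
```

The resulting dict carries the keys that the prompt template expects (context_a, context_b, and question), which is why the retrieval step can sit directly in front of the prompt.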

Runnable Lambdas

RunnableLambda is LangChain's abstraction for turning Python functions into pipe-compatible functions, similar to the Runnable class we built earlier in this article.

from langchain_core.runnables import RunnableLambda

def add_five(x):
    return x + 5

def multiply_by_two(x):
    return x * 2

# wrap the functions with RunnableLambda
add_five = RunnableLambda(add_five)
multiply_by_two = RunnableLambda(multiply_by_two)

Let's try it out with our add_five and multiply_by_two functions from earlier.

chain = add_five | multiply_by_two

Unlike our Runnable abstraction, we cannot run a RunnableLambda chain by calling it directly. Instead, we must call chain.invoke:

chain.invoke(3)

16

As before, we get the same answer. Naturally, we can use this approach to insert custom functions into our chains. Let's try a shorter chain and see where we might want to insert a custom function:

prompt_str = "Tell me a short fact about {topic}"
prompt = ChatPromptTemplate.from_template(prompt_str)

chain = prompt | model | output_parser

Let's run this chain a few times to see what kind of answers it returns:

chain.invoke({"topic": "Artificial Intelligence"})

" Here's a short fact about artificial intelligence:\n\nAI systems can analyze huge amounts of data and detect patterns that humans may miss. For example, AI is helping doctors diagnose diseases earlier by processing medical images and spotting subtle signs that a human might not notice."

chain.invoke({"topic": "Artificial Intelligence"})

" Here's a short fact about artificial intelligence:\n\nAI systems are able to teach themselves over time. Through machine learning, algorithms can analyze large amounts of data and improve their own processes and decision making without needing to be manually updated by humans. This self-learning ability is a key attribute enabling AI progress."

The returned text often begins with a "Here's a short fact about ...\n\n" preamble. Let's add a function that splits on the double newline "\n\n" and returns only the fact itself.

def extract_fact(x):
    if "\n\n" in x:
        return "\n".join(x.split("\n\n")[1:])
    else:
        return x

get_fact = RunnableLambda(extract_fact)

chain = prompt | model | output_parser | get_fact

Now let's try invoking our chain again:

chain.invoke({"topic": "Artificial Intelligence"})

'Most AI systems today are narrow AI, meaning they are focused on and trained for a specific task like computer vision, natural language processing or playing chess. General artificial intelligence that has human-level broad capabilities across many domains does not yet exist. AI has made tremendous progress in recent years thanks to advances in deep learning, big data and computing power, but still has limitations and scientists are working to make AI systems safer and more beneficial to humanity.'

chain.invoke({"topic": "Artificial Intelligence"})

'AI systems can analyze massive amounts of data and detect patterns that humans may miss. This ability to find insights in large datasets is one of the key strengths of AI and enables many practical applications like personalized recommendations, fraud detection, and medical diagnosis.'

With the get_fact function in place, we now get well-formatted responses.
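Because extract_fact is plain Python, its behaviour can be checked without calling the model. The sketch below copies the function from above and runs it on a few made-up strings; the sample texts are invented for illustration and are not real model output.

```python
def extract_fact(x):
    # Drop everything before the first blank line; keep the rest.
    if "\n\n" in x:
        return "\n".join(x.split("\n\n")[1:])
    else:
        return x


with_preamble = "Here's a short fact about AI:\n\nAI can spot patterns in data."
without_preamble = "AI can spot patterns in data."

print(extract_fact(with_preamble))     # preamble removed
print(extract_fact(without_preamble))  # returned unchanged

# Caveat: the split is purely positional, so a reply whose first paragraph
# is the fact itself would lose that paragraph.
multi = "Fact paragraph.\n\nExtra detail."
print(extract_fact(multi))
```

If the model stops emitting a preamble, a positional rule like this one would cut real content, so a stricter check (for example, only stripping a first paragraph that ends with a colon) may be worth considering.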

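That covers the essentials you need to start building with LCEL. With it, we can put chains together easily, and the LangChain team is currently focused on further development of and support for LCEL.

LCEL has a mix of pros and cons. Those who like it tend to focus on its minimalist code style, its support for streaming, parallel operations, and async, and how well it fits LangChain's focus on chaining components together.

Some people do not like LCEL. They typically point out that LangChain is already a very abstract library and LCEL is yet another layer of abstraction on top of it, that the syntax is confusing and runs against the Zen of Python, and that it takes too much effort to learn a new (and uncommon) syntax.

Both viewpoints are entirely valid. LCEL is a very different approach, with clear advantages and clear drawbacks. Either way, if you are willing to spend some time learning the syntax, it lets you develop quickly, and with that in mind it is well worth learning.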
