1. Configure GDAL

1.1. Download from the official site

The win64 package is chosen here because the computer runs 64-bit Windows; pick the build that matches your own system.

Download this package, then unzip it:

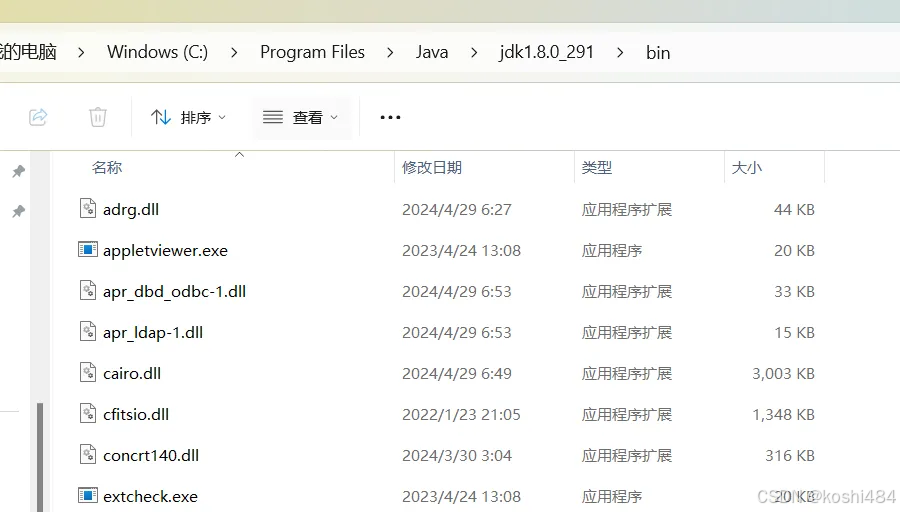
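The GDAL DLLs must match the bitness of the JVM that loads them: a 64-bit DLL cannot be loaded by a 32-bit JVM, and vice versa. A minimal sketch for checking this from Java, assuming the standard os.arch property (it describes the running JVM, so a 32-bit JVM on 64-bit Windows reports x86); the class and method names are illustrative:

```java
public class JvmArchCheck {

    // Treats any architecture name containing "64" (amd64, x86_64, aarch64) as 64-bit
    static boolean is64Bit(String osArch) {
        return osArch != null && osArch.contains("64");
    }

    public static void main(String[] args) {
        // os.arch reports the architecture of the running JVM, e.g. "amd64" for a 64-bit JVM on Windows
        String arch = System.getProperty("os.arch");
        System.out.println("os.name = " + System.getProperty("os.name"));
        System.out.println("os.arch = " + arch);
        System.out.println("64-bit JVM: " + is64Bit(arch));
    }
}
```

If this prints false, either switch to a 64-bit JDK or download the 32-bit GDAL build instead.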

1.2. Copy the DLL files from the archive

Sort the files by type so you can select all the DLLs at once, then copy them into your Java JDK folder.
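If you would rather script the copy than select files by hand, here is a minimal sketch using java.nio. It copies only the *.dll files located directly inside one folder (no subfolders) into a target folder, overwriting files with the same name. Which unzipped GDAL folder holds the DLLs and which JDK folder to target are passed as arguments; the class name is illustrative. Writing into a JDK under Program Files usually requires administrator rights.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

public class CopyDlls {

    // Copies every *.dll directly inside sourceDir into targetDir (non-recursive),
    // replacing existing files with the same name. Returns the number of files copied.
    static int copyDlls(Path sourceDir, Path targetDir) throws IOException {
        Files.createDirectories(targetDir);
        int copied = 0;
        try (DirectoryStream<Path> dlls = Files.newDirectoryStream(sourceDir, "*.dll")) {
            for (Path dll : dlls) {
                Files.copy(dll, targetDir.resolve(dll.getFileName()), StandardCopyOption.REPLACE_EXISTING);
                copied++;
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.out.println("usage: java CopyDlls <folder containing the GDAL DLLs> <JDK bin folder>");
            return;
        }
        int n = copyDlls(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("Copied " + n + " DLL file(s)");
    }
}
```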

1.3. Locate your Java JDK folder

If you are not sure where Java is installed, you can look it up this way.
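One way to find it is to ask the running JVM: the standard java.home system property holds the installation folder of the runtime your code runs on (on JDK 8 this is usually the jre subfolder of the JDK; on JDK 9 and later it is the JDK folder itself). A minimal sketch with illustrative names. On Windows, running where java in a Command Prompt also lists java.exe locations, though it may find a different Java than the one your IDE uses.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

public class FindJavaHome {

    // Installation folder of the Java runtime executing this code
    static Path javaHome() {
        return Paths.get(System.getProperty("java.home"));
    }

    // The bin folder of that runtime (where java.exe lives)
    static Path javaBin() {
        return javaHome().resolve("bin");
    }

    public static void main(String[] args) {
        System.out.println("java.home  = " + javaHome());
        System.out.println("bin folder = " + javaBin());
    }
}
```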

1.4. Copy the files

Copy these files into this folder:

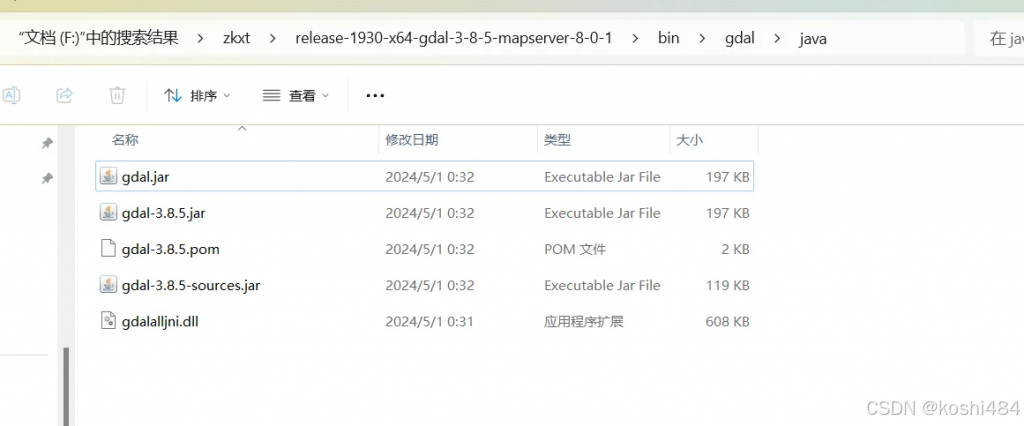

1.5. Select release-1930-x64-gdal-3-8-5-mapserver-8-0-1\bin\gdal\java


Copy its contents into java/bin and java/jre/java as well, and also add them to your project.
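After copying, a quick sanity check can confirm what the JVM actually sees. The sketch below (class name GdalSetupCheck is illustrative) checks whether the org.gdal.gdal.gdal class from gdal.jar is on the classpath without initializing it (initializing it would trigger loading the native library), and prints java.library.path, the directories the JVM searches when System.loadLibrary loads a DLL. On Windows this path typically includes the JDK's bin folder and the PATH entries, which is why copying the DLLs into java/bin is one way to make them loadable.

```java
import java.io.File;
import java.util.Arrays;
import java.util.List;

public class GdalSetupCheck {

    // True if the class is on the classpath; initialize=false skips static initializers
    static boolean isClassPresent(String className) {
        try {
            Class.forName(className, false, GdalSetupCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    // Directories the JVM searches for native libraries (DLLs on Windows)
    static List<String> libraryPathEntries() {
        return Arrays.asList(System.getProperty("java.library.path", "").split(File.pathSeparator));
    }

    public static void main(String[] args) {
        System.out.println("gdal.jar on classpath: " + isClassPresent("org.gdal.gdal.gdal"));
        System.out.println("Native library search path:");
        for (String dir : libraryPathEntries()) {
            System.out.println("  " + dir);
        }
    }
}
```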

2. Parse a TIF file

```java
package org.example.flink_springboot.geop;

import org.gdal.gdal.Band;
import org.gdal.gdal.Dataset;
import org.gdal.gdal.Driver;
import org.gdal.gdal.gdal;
import org.gdal.gdalconst.gdalconst;

public class GDALTest {

    public static void main(String[] args) {
        // Register all raster drivers
        gdal.AllRegister();

        // Open the image read-only
        Dataset poDataset = gdal.Open("F:\\learn\\flink_springboot\\src\\main\\resources\\srtm_60_05.tif", gdalconst.GA_ReadOnly);
        if (poDataset == null) {
            System.out.println("The image could not be read: " + gdal.GetLastErrorMsg());
            return;
        }
        System.out.println("The image could be read.");

        // Print the file format (driver name)
        Driver hDriver = poDataset.GetDriver();
        System.out.println("File format: " + hDriver.GetDescription());
        // System.out.println("File format: " + hDriver.getShortName() + "/" + hDriver.getLongName());

        // Print the image size and the number of bands
        System.out.println("size is: x:" + poDataset.getRasterXSize() + ", y:" + poDataset.getRasterYSize()
                + ", band count:" + poDataset.getRasterCount());

        // Print the projection as WKT; an empty string means no projection is set
        String projection = poDataset.GetProjectionRef();
        if (projection != null && !projection.isEmpty()) {
            System.out.println("Projection is " + projection);
        }

        // Print the origin and pixel size from the geotransform
        double[] adfGeoTransform = new double[6];
        poDataset.GetGeoTransform(adfGeoTransform);
        System.out.println("origin: " + adfGeoTransform[0] + "," + adfGeoTransform[3]);
        System.out.println("pixel size: " + adfGeoTransform[1] + "," + adfGeoTransform[5]);

        // For each band (GDAL band indices start at 1): size, stored min/max, colour table, sample pixels
        for (int band = 1; band <= poDataset.getRasterCount(); band++) {
            Band poBand = poDataset.GetRasterBand(band);
            System.out.println("Band " + band + ": size x:" + poBand.getXSize() + ", y:" + poBand.getYSize());

            // GetMinimum/GetMaximum leave null when the file stores no statistics
            Double[] min = new Double[1];
            Double[] max = new Double[1];
            poBand.GetMinimum(min);
            poBand.GetMaximum(max);
            if (min[0] != null && max[0] != null) {
                System.out.println("Min=" + min[0] + ", Max=" + max[0]);
            } else {
                System.out.println("No Min/Max values stored in raster.");
            }

            if (poBand.GetColorTable() != null) {
                System.out.println("Band " + band + " has a color table with "
                        + poBand.GetColorTable().GetCount() + " entries.");
            }

            // Read up to 3 rows, one line at a time, and print up to the first 3 values of each
            int width = poDataset.getRasterXSize();
            int[] buf = new int[width];
            int rows = Math.min(3, poDataset.getRasterYSize());
            for (int i = 0; i < rows; i++) {
                poBand.ReadRaster(0, i, width, 1, buf);
                for (int j = 0; j < Math.min(3, width); j++) {
                    System.out.print(buf[j] + ", ");
                }
                System.out.println();
            }
        }

        // Release the native dataset handle
        poDataset.delete();
    }
}
```
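The program above prints only the origin (adfGeoTransform[0], [3]) and the pixel size ([1], [5]). GDAL's geotransform is an affine mapping from pixel/line positions to georeferenced coordinates: Xgeo = GT[0] + col * GT[1] + row * GT[2] and Ygeo = GT[3] + col * GT[4] + row * GT[5]. A minimal sketch of applying it, and of inverting it for the common north-up case (GT[2] = GT[4] = 0), in plain Java with no GDAL calls; the class name and the example geotransform values are illustrative:

```java
public class GeoTransformUtil {

    // Pixel/line position -> georeferenced coordinate using the 6-element geotransform
    static double[] pixelToGeo(double[] gt, double col, double row) {
        return new double[] {
                gt[0] + col * gt[1] + row * gt[2],
                gt[3] + col * gt[4] + row * gt[5]
        };
    }

    // Inverse for north-up images only (no rotation terms)
    static double[] geoToPixel(double[] gt, double x, double y) {
        if (gt[2] != 0 || gt[4] != 0) {
            throw new IllegalArgumentException("rotated geotransforms are not handled in this sketch");
        }
        return new double[] { (x - gt[0]) / gt[1], (y - gt[3]) / gt[5] };
    }

    public static void main(String[] args) {
        // Illustrative geotransform: origin (100, 200), 0.5 units per pixel, north-up (negative y step)
        double[] gt = {100.0, 0.5, 0.0, 200.0, 0.0, -0.5};
        double[] geo = pixelToGeo(gt, 10, 20);
        System.out.println(geo[0] + "," + geo[1]); // 105.0,190.0
        double[] px = geoToPixel(gt, 105.0, 190.0);
        System.out.println(px[0] + "," + px[1]);   // 10.0,20.0
    }
}
```

(col, row) = (0, 0) is the top-left corner of the top-left pixel; pass col + 0.5 and row + 0.5 to get a pixel's centre.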

3. Parse SHP files

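```java
package org.example.flink_springboot.shape;

import org.gdal.gdal.gdal;
import org.gdal.ogr.*;
import org.gdal.osr.SpatialReference;

import java.util.LinkedHashMap;
import java.util.Map;

public class GdalDemo_shp1 {

    public void openShp(String strVectorFile) {
        // Register all vector drivers
        ogr.RegisterAll();
        // Support Chinese (non-ASCII) file paths
        gdal.SetConfigOption("GDAL_FILENAME_IS_UTF8", "YES");
        // Read DBF attribute values as CP936 (GBK) so Chinese attribute values display correctly
        gdal.SetConfigOption("SHAPE_ENCODING", "CP936");

        // Look up the ESRI Shapefile driver by name
        String strDriverName = "ESRI Shapefile";
        org.gdal.ogr.Driver oDriver = ogr.GetDriverByName(strDriverName);
        if (oDriver == null) {
            System.out.println(strDriverName + " driver is not available!");
            return;
        }

        // Open read-only (update = 0)
        DataSource dataSource = oDriver.Open(strVectorFile, 0);
        if (dataSource == null) {
            System.out.println("Could not open " + strVectorFile);
            return;
        }

        // List every layer; a shapefile normally contains exactly one
        for (int i = 0; i < dataSource.GetLayerCount(); i++) {
            System.out.println("Layer name: <==> " + dataSource.GetLayer(i).GetName());
        }
        // Layer layer = dataSource.GetLayer("test");
        Layer layer = dataSource.GetLayer(0);
        System.out.println("Layer name: " + layer.GetName());

        // Spatial reference, e.g. "EPSG:4326"; null when the .prj file is missing
        SpatialReference spatialReference = layer.GetSpatialRef();
        if (spatialReference != null) {
            System.out.println("Spatial reference: " + spatialReference.GetAttrValue("AUTHORITY", 0)
                    + ":" + spatialReference.GetAttrValue("AUTHORITY", 1));
        }

        // The extent is returned as {minX, maxX, minY, maxY}
        double[] layerExtent = layer.GetExtent();
        System.out.println("Layer extent: minx:" + layerExtent[0] + ", maxx:" + layerExtent[1]
                + ", miny:" + layerExtent[2] + ", maxy:" + layerExtent[3]);

        // Field name -> field type name, keyed by name so fields sharing a type do not overwrite each other
        FeatureDefn featureDefn = layer.GetLayerDefn();
        Map<String, String> fieldMap = new LinkedHashMap<>();
        for (int i = 0; i < featureDefn.GetFieldCount(); i++) {
            FieldDefn fieldDefn = featureDefn.GetFieldDefn(i);
            fieldMap.put(fieldDefn.GetName(), fieldDefn.GetFieldTypeName(fieldDefn.GetFieldType()));
        }
        System.out.println();
        System.out.println("fieldMap:");
        System.out.println(fieldMap);

        // Read up to 12 features sequentially. GetFeature(fid) returns null for FIDs that
        // do not exist (shapefile FIDs start at 0), so sequential reading is safer
        layer.ResetReading();
        Feature feature;
        int count = 0;
        while (count < 12 && (feature = layer.GetNextFeature()) != null) {
            Geometry geometry = feature.GetGeometryRef();
            // Print the geometry of the first 3 features as GeoJSON
            if (count < 3 && geometry != null) {
                System.out.println(geometry.ExportToJson());
            }
            for (String fieldName : fieldMap.keySet()) {
                System.out.println(" Field name: " + fieldName + ", value: " + feature.GetFieldAsString(fieldName));
            }
            feature.delete();
            count++;
        }
        dataSource.delete();
    }

    public static void main(String[] args) {
        GdalDemo_shp1 shp = new GdalDemo_shp1();
        String strVectorFile = "F:\\learn\\flink_springboot\\src\\main\\resources\\中华人民共和国.shp";
        shp.openShp(strVectorFile);
    }
}
```

The SHP class below writes, updates, and removes shapefile features. Unlike the class above it uses GeoTools (with JTS geometries) rather than GDAL, so the GeoTools shapefile and CQL modules must be on the classpath.

```java
package org.example.flink_springboot.shape;

// Imports assume GeoTools before version 30 (org.opengis.*); GeoTools 30+ moved these types to org.geotools.api.*
import org.geotools.data.DefaultTransaction;
import org.geotools.data.FeatureWriter;
import org.geotools.data.Transaction;
import org.geotools.data.shapefile.ShapefileDataStore;
import org.geotools.data.shapefile.ShapefileDataStoreFactory;
import org.geotools.data.simple.SimpleFeatureStore;
import org.geotools.feature.simple.SimpleFeatureTypeBuilder;
import org.geotools.filter.text.ecql.ECQL;
import org.geotools.referencing.crs.DefaultGeographicCRS;
import org.locationtech.jts.geom.Geometry;
import org.locationtech.jts.geom.LineString;
import org.locationtech.jts.geom.MultiLineString;
import org.locationtech.jts.geom.MultiPoint;
import org.locationtech.jts.geom.MultiPolygon;
import org.locationtech.jts.geom.Point;
import org.locationtech.jts.geom.Polygon;
import org.locationtech.jts.io.WKTReader;
import org.opengis.feature.simple.SimpleFeature;
import org.opengis.feature.simple.SimpleFeatureType;
import org.opengis.feature.type.AttributeDescriptor;
import org.opengis.feature.type.GeometryDescriptor;
import org.opengis.filter.Filter;

import java.io.File;
import java.io.IOException;
import java.io.Serializable;
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class SHP {

    /** Hypothetical stand-in for the ShpFiled class used here but not shown: a field name plus a type name. */
    public static class ShpFiled {
        private final String filedname;
        private final String type;

        public ShpFiled(String filedname, String type) {
            this.filedname = filedname;
            this.type = type;
        }

        public String getFiledname() { return filedname; }

        public String getType() { return type; }
    }

    /** Hypothetical helper (the getClass(...) helper is not shown): maps a type name to a Java class. */
    private static Class<?> typeFor(String type) {
        switch (type) {
            case "Point": return Point.class;
            case "MultiPoint": return MultiPoint.class;
            case "LineString": return LineString.class;
            case "MultiLineString": return MultiLineString.class;
            case "Polygon": return Polygon.class;
            case "MultiPolygon": return MultiPolygon.class;
            case "Integer": return Integer.class;
            case "Long": return Long.class;
            case "Double": return Double.class;
            default: return String.class;
        }
    }

    /** Hypothetical helper (getFileds is not shown): names of the non-geometry attributes. */
    private static List<String> getFileds(SimpleFeatureType featureType) {
        List<String> names = new ArrayList<>();
        for (AttributeDescriptor descriptor : featureType.getAttributeDescriptors()) {
            if (!(descriptor instanceof GeometryDescriptor)) {
                names.add(descriptor.getLocalName());
            }
        }
        return names;
    }

    /**
     * Create a shapefile.
     *
     * @param shpPath  output shapefile path, including the file name
     * @param encode   attribute encoding, e.g. "UTF-8" or "GBK"
     * @param geoType  geometry type, e.g. Point or Polygon
     * @param shpKey   key in each data row whose value is the geometry as WKT
     * @param attrKeys attribute field definitions
     * @param data     rows of geometry and attribute values
     */
    public void write2Shape(String shpPath, String encode, String geoType, String shpKey,
                            List<ShpFiled> attrKeys, List<Map<String, Object>> data) {
        WKTReader reader = new WKTReader();
        ShapefileDataStore ds = null;
        try {
            // Create the shapefile data store
            File file = new File(shpPath);
            Map<String, Serializable> params = new HashMap<>();
            params.put(ShapefileDataStoreFactory.URLP.key, file.toURI().toURL());
            ds = (ShapefileDataStore) new ShapefileDataStoreFactory().createNewDataStore(params);

            // Define the geometry column and attribute columns (DBF field names are limited to 10 characters)
            SimpleFeatureTypeBuilder tb = new SimpleFeatureTypeBuilder();
            tb.setCRS(DefaultGeographicCRS.WGS84);
            tb.setName("sx_test");
            tb.add("the_geom", typeFor(geoType));
            for (ShpFiled field : attrKeys) {
                tb.add(field.getFiledname().toUpperCase(), typeFor(field.getType()));
            }
            // Set the encoding before the schema and features are written
            ds.setCharset(Charset.forName(encode));
            ds.createSchema(tb.buildFeatureType());

            // Append one feature per row; write() must be called once for every feature
            FeatureWriter<SimpleFeatureType, SimpleFeature> writer =
                    ds.getFeatureWriterAppend(ds.getTypeNames()[0], Transaction.AUTO_COMMIT);
            try {
                for (Map<String, Object> row : data) {
                    SimpleFeature feature = writer.next();
                    Geometry geom = reader.read(row.get(shpKey).toString());
                    feature.setAttribute("the_geom", geom);
                    for (ShpFiled field : attrKeys) {
                        // Missing values are stored as null
                        feature.setAttribute(field.getFiledname().toUpperCase(), row.get(field.getFiledname()));
                    }
                    writer.write();
                }
            } finally {
                writer.close();
            }
            // Add to a zip archive
            // zipShapeFile(shpPath);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (ds != null) {
                ds.dispose();
            }
        }
    }

    /**
     * Update shapefile data.
     *
     * @param path     file path
     * @param datalist rows with an ECQL "where" filter, an optional "geom" WKT, and attribute values
     * @param code     attribute encoding
     */
    public static void updateFeature(String path, List<Map<String, Object>> datalist, String code) {
        ShapefileDataStore dataStore = null;
        Transaction transaction = new DefaultTransaction("handle");
        try {
            dataStore = new ShapefileDataStore(new File(path).toURI().toURL());
            dataStore.setCharset(Charset.forName(code));
            String typeName = dataStore.getTypeNames()[0];
            SimpleFeatureStore store = (SimpleFeatureStore) dataStore.getFeatureSource(typeName);
            // Attribute (non-geometry) field names
            List<String> fileds = getFileds(store.getSchema());
            store.setTransaction(transaction);

            WKTReader reader = new WKTReader();
            for (Map<String, Object> data : datalist) {
                // modifyFeatures rejects a null filter, so rows without "where" are skipped
                if (data.get("where") == null) {
                    System.out.println("Skipping row without a \"where\" filter: " + data);
                    continue;
                }
                Filter filter = ECQL.toFilter(data.get("where").toString());

                List<String> names = new ArrayList<>();
                List<Object> values = new ArrayList<>();
                if (data.get("geom") != null) {
                    names.add("the_geom");
                    values.add(reader.read(data.get("geom").toString()));
                }
                for (String name : fileds) {
                    if (data.get(name) != null) {
                        names.add(name);
                        values.add(data.get(name));
                    }
                }
                if (!names.isEmpty()) {
                    store.modifyFeatures(names.toArray(new String[0]), values.toArray(), filter);
                }
            }
            transaction.commit();
            System.out.println("========updateFeature====end====");
        } catch (Exception e) {
            e.printStackTrace();
            try {
                transaction.rollback();
            } catch (IOException re) {
                re.printStackTrace();
            }
        } finally {
            try {
                transaction.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
            if (dataStore != null) {
                dataStore.dispose();
            }
        }
    }

    /**
     * Remove features from a shapefile.
     *
     * @param path  file path
     * @param ids   values of the field to match
     * @param filed field name
     * @param code  attribute encoding
     */
    public static void removeFeature(String path, List<String> ids, String filed, String code) {
        if (ids == null || ids.isEmpty()) {
            return; // nothing to remove
        }
        ShapefileDataStore dataStore = null;
        Transaction transaction = new DefaultTransaction("handle");
        try {
            dataStore = new ShapefileDataStore(new File(path).toURI().toURL());
            dataStore.setCharset(Charset.forName(code));
            String typeName = dataStore.getTypeNames()[0];
            SimpleFeatureStore store = (SimpleFeatureStore) dataStore.getFeatureSource(typeName);
            store.setTransaction(transaction);

            // e.g. "ID in (1,2,3)"; values are inserted unquoted, so this suits numeric fields
            String join = filed + " in (" + String.join(",", ids) + ")";
            System.out.println(join);
            store.removeFeatures(ECQL.toFilter(join));
            transaction.commit();
            System.out.println("======removeFeature== done ========");
        } catch (Exception e) {
            e.printStackTrace();
            try {
                transaction.rollback();
            } catch (IOException re) {
                re.printStackTrace();
            }
        } finally {
            try {
                transaction.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
            if (dataStore != null) {
                dataStore.dispose();
            }
        }
    }
}
```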
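removeFeature joins the ids directly into "filed in (...)", which works for numeric fields; for text fields ECQL expects quoted string literals. A minimal sketch of building the predicate with quoting, where a single quote inside a value is escaped by doubling it as CQL requires. The class and method names are illustrative, not part of GeoTools:

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

public class CqlInFilter {

    // Builds "field IN (v1,v2,...)"; with quoteValues, each value becomes 'value' with ' doubled to ''
    static String inFilter(String field, List<String> ids, boolean quoteValues) {
        StringJoiner joiner = new StringJoiner(",", field + " IN (", ")");
        for (String id : ids) {
            joiner.add(quoteValues ? "'" + id.replace("'", "''") + "'" : id);
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        System.out.println(inFilter("ID", Arrays.asList("1", "2", "3"), false));        // ID IN (1,2,3)
        System.out.println(inFilter("NAME", Arrays.asList("Beijing", "O'Hare"), true)); // NAME IN ('Beijing','O''Hare')
    }
}
```

The resulting string can be passed to ECQL.toFilter in place of the hand-built join.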