Iris Dataset

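(1) Download link [reference]: Iris dataset download

(2) Characteristics of the Iris dataset

The Iris dataset contains 150 sample records divided into 3 classes of 50 samples each. Each record has 4 features (sepal length, sepal width, petal length, petal width).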

Loading the Iris Dataset

dataLoader.py

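```python
# Import the required modules
import os

import numpy as np
import pandas as pd
import torch
from torch.utils.data import Dataset  # base class for custom datasets usable with DataLoader


# IrisDataset inherits from torch.utils.data.Dataset,
# which marks it as a PyTorch dataset class
class IrisDataset(Dataset):
    def __init__(self, data_path):
        # Store the path to the dataset file
        self.data_path = data_path

        # Use assert to check that data_path exists; raise an error if the path is invalid
        assert os.path.exists(data_path), "Dataset does not exist."

        # Read the CSV data with pandas, naming the columns [0, 1, 2, 3, 4]
        df = pd.read_csv(self.data_path, names=[0, 1, 2, 3, 4])

        # Use map to convert the three iris classes (setosa, versicolor, virginica)
        # into the numeric labels 0, 1, 2.
        # Assumes the label column holds exactly these strings; any other value maps to NaN.
        label_mapping = {"setosa": 0, "versicolor": 1, "virginica": 2}
        df[4] = df[4].map(label_mapping)

        # Extract the feature columns (first 4 columns)
        features = df.iloc[:, :4]

        # Extract the class labels (last column), shape (N, 1)
        labels = df.iloc[:, 4:]

        # Standardization (Z-score normalization), computed per feature column:
        # subtract the column mean first, then divide by the column standard deviation
        features = (features - features.mean()) / features.std()

        # Convert the data to PyTorch tensors
        self.features = torch.from_numpy(np.array(features, dtype="float32"))
        # nn.CrossEntropyLoss expects int64 (Long) class labels
        self.labels = torch.from_numpy(np.array(labels, dtype=np.int64))

        # Store the number of samples in the dataset
        self.dataset_size = len(labels)
        print(f"Dataset size: {self.dataset_size}")

    # Return the length of the dataset
    def __len__(self):
        return self.dataset_size

    # Return the sample at the given index
    def __getitem__(self, index):
        return self.features[index], self.labels[index]


# nn.py imports this class under the name iris_dataloader, so expose that alias
iris_dataloader = IrisDataset
```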
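The standardization step is easy to get wrong through operator precedence. Writing `x - mean / std` divides only the mean and never rescales the data. The sketch below uses NumPy to apply column-wise z-score standardization, z = (x - μ) / σ, one feature column at a time. Some assumptions:

* The function name `zscore` and the toy matrix are illustrative only.
* `ddof=1` is chosen to match the pandas default for `DataFrame.std()`.

```python
import numpy as np


def zscore(x, ddof=1):
    """Standardize each column of a 2-D array: (x - column mean) / column std."""
    x = np.asarray(x, dtype=np.float64)
    mean = x.mean(axis=0)            # one mean per feature column
    std = x.std(axis=0, ddof=ddof)   # one standard deviation per feature column
    return (x - mean) / std          # parentheses: subtract first, then divide


# Toy 4-sample x 2-feature matrix (illustrative values, not Iris data)
x = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0],
              [4.0, 40.0]])

z = zscore(x)
print(z.mean(axis=0))          # close to 0 for every column
print(z.std(axis=0, ddof=1))   # close to 1 for every column

# The precedence bug: this only subtracts mean/std and does not standardize
wrong = x - x.mean(axis=0) / x.std(axis=0, ddof=1)
print(np.allclose(wrong, z))   # False
```

Standardizing per column keeps features with larger numeric ranges from dominating the others.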

Implementing the training and evaluation pipeline in PyTorch

nn.py

```python
import os.path
import sys

import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from tqdm import tqdm

# Import the custom dataset class iris_dataloader from dataLoader.py to load the Iris dataset
from dataLoader import iris_dataloader


# Define a class named NeuralNetwork that inherits from nn.Module
# and builds a three-layer fully connected network
class NeuralNetwork(nn.Module):
    # Constructor: one input layer, two hidden layers, and one output layer
    def __init__(self, input_dim, hidden_dim1, hidden_dim2, output_dim):
        super().__init__()
        self.layer1 = nn.Linear(input_dim, hidden_dim1)
        self.layer2 = nn.Linear(hidden_dim1, hidden_dim2)
        self.layer3 = nn.Linear(hidden_dim2, output_dim)

    def forward(self, x):
        # No activation between layers, so the stack is still a linear (affine) model
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        # Returns raw logits; nn.CrossEntropyLoss applies log-softmax internally
        return x
```
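As written, `forward` applies the three `nn.Linear` layers back to back with no activation function. The whole network is therefore still a single affine map of its input. The NumPy sketch below checks this numerically:

* It stacks three affine layers with the same shapes as `NeuralNetwork(4, 12, 6, 3)`.
* It compares their output with a single layer `W x + b`.

The random weights are illustrative only. Inserting a nonlinearity such as `torch.relu` between the layers would break this collapse. That change is a hypothetical modification, not part of the original code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Affine layers with the shapes used in the article: 4 -> 12 -> 6 -> 3
W1, b1 = rng.normal(size=(12, 4)), rng.normal(size=12)
W2, b2 = rng.normal(size=(6, 12)), rng.normal(size=6)
W3, b3 = rng.normal(size=(3, 6)), rng.normal(size=3)


def stacked(x):
    """Three linear layers applied in sequence with no activation, like the original forward."""
    return W3 @ (W2 @ (W1 @ x + b1) + b2) + b3


# Collapse the stack into one affine map:
# W3(W2(W1 x + b1) + b2) + b3 = (W3 W2 W1) x + (W3 (W2 b1 + b2) + b3)
W = W3 @ W2 @ W1
b = W3 @ (W2 @ b1 + b2) + b3

x = rng.normal(size=4)
print(np.allclose(stacked(x), W @ x + b))  # True: the network is still a linear model
```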

# 设置计算设备,如有GPU则使用CUDA,否则使用CPU

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# print(device)

# 加载和划分数据集

# 加载鸢尾花数据集,并划分为训练集、验证集和测试集

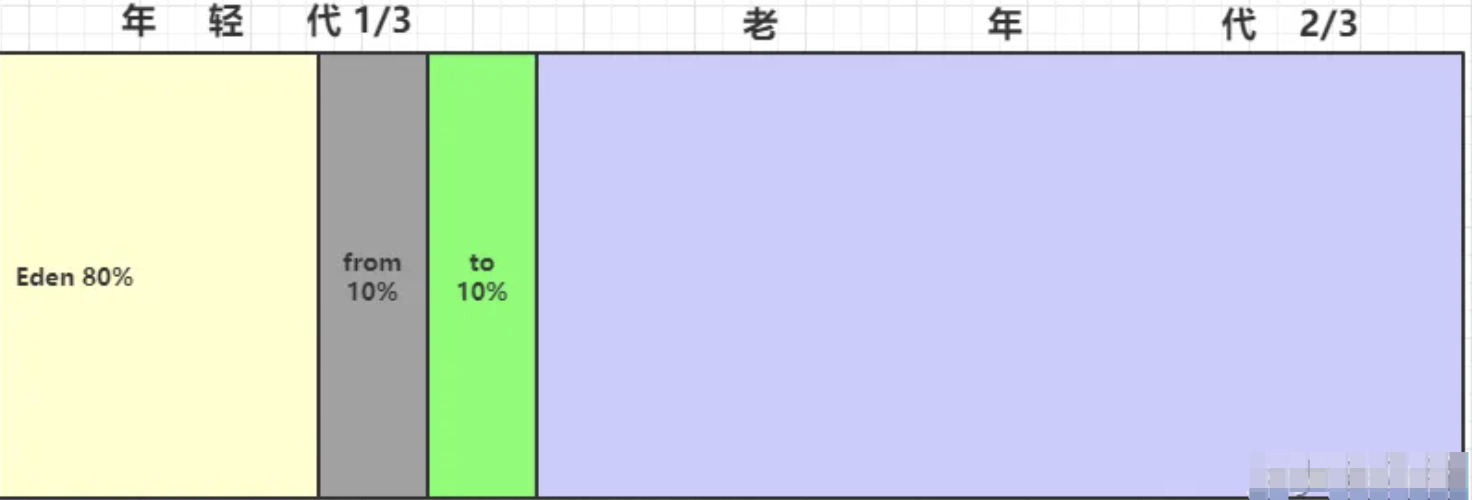

iris_dataset = iris_dataloader("./iris.txt")

train_size = int(len(iris_dataset) * 0.7)

val_size = int(len(iris_dataset) * 0.2)

test_size = len(iris_dataset) - train_size - val_size

# random_split 根据比例 70%/20%/10% 将数据集划分为训练集、验证集和测试集。

train_dataset, val_dataset, test_dataset = torch.utils.data.random_split(

iris_dataset, [train_size, val_size, test_size]

)

# DataLoader 用于加载数据,并设置批次大小和是否随机打乱数据。

train_loader = DataLoader(train_dataset, batch_size=16, shuffle=True)

val_loader = DataLoader(val_dataset, batch_size=1, shuffle=False)

test_loader = DataLoader(test_dataset, batch_size=1, shuffle=False)

print(f"训练集大小: {len(train_loader) * 16}, 验证集大小: {len(val_loader)}, 测试集大小: {len(test_loader)}")
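With 150 samples, the 70%/20% ratios give `int(150 * 0.7) = 105` training samples and `int(150 * 0.2) = 30` validation samples. That leaves 150 - 105 - 30 = 15 samples for testing.

Unless a seed is set, `random_split` produces a different split on each run. If a reproducible split is wanted, `random_split` accepts a `generator` argument, for example `torch.Generator().manual_seed(42)`.

The sketch below mirrors the same size arithmetic with a seeded shuffle-then-slice over NumPy index arrays. `split_indices` and the seed value are hypothetical names chosen for illustration, not part of `torch.utils.data`.

```python
import numpy as np


def split_indices(n, train_ratio=0.7, val_ratio=0.2, seed=42):
    """Shuffle 0..n-1 with a fixed seed and cut it into train/val/test index arrays."""
    train_size = int(n * train_ratio)
    val_size = int(n * val_ratio)
    test_size = n - train_size - val_size  # the remainder goes to the test set

    perm = np.random.default_rng(seed).permutation(n)
    train_idx = perm[:train_size]
    val_idx = perm[train_size:train_size + val_size]
    test_idx = perm[train_size + val_size:]
    assert len(test_idx) == test_size
    return train_idx, val_idx, test_idx


train_idx, val_idx, test_idx = split_indices(150)
print(len(train_idx), len(val_idx), len(test_idx))  # 105 30 15
```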

```python
# Inference function: compute the model's accuracy on a dataset
def evaluate(model, data_loader, device):
    model.eval()
    correct_predictions = 0
    # torch.no_grad() disables gradient tracking, which speeds up inference and saves memory
    with torch.no_grad():
        for inputs, labels in data_loader:
            # Labels arrive with shape (batch, 1); flatten to (batch,) to compare element-wise
            labels = labels.squeeze(-1).to(device)
            outputs = model(inputs.to(device))
            # Take the class with the highest score in each row of the (batch, classes) output
            predicted_labels = torch.argmax(outputs, dim=1)
            correct_predictions += torch.eq(predicted_labels, labels).sum().item()
    # Divide by the number of samples, not the number of batches
    accuracy = correct_predictions / len(data_loader.dataset)
    return accuracy
```
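Two details matter when computing accuracy:

* Each label batch comes out of the dataset with shape `(batch, 1)`, while the predictions have shape `(batch,)`.
* The denominator should be the number of samples, not the number of batches (`len(data_loader)`). The two only coincide when `batch_size=1`.

The NumPy sketch below shows what goes wrong when the shapes are compared without squeezing. `torch.eq` follows the same broadcasting rules. The logits and labels are made-up values.

```python
import numpy as np

# Made-up logits for 4 samples and 3 classes, with labels shaped (4, 1) as the dataset returns them
logits = np.array([[2.0, 0.1, 0.3],
                   [0.2, 1.5, 0.1],
                   [0.3, 0.2, 0.9],
                   [1.2, 0.4, 0.1]])
labels = np.array([[0], [1], [2], [1]])

predicted = logits.argmax(axis=1)          # shape (4,): [0, 1, 2, 0]

# Pitfall: comparing (4,) with (4, 1) broadcasts to a (4, 4) matrix of pairwise comparisons
wrong = (predicted == labels)
print(wrong.shape, wrong.sum())            # (4, 4) 5

# Fix: flatten labels to (4,) and divide by the number of samples
correct = (predicted == labels.squeeze(-1)).sum()
accuracy = correct / len(labels)
print(accuracy)                            # 0.75
```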

```python
# Main function: train and validate the model
def main(lr=0.005, epochs=20):
    model = NeuralNetwork(4, 12, 6, 3).to(device)
    loss_function = nn.CrossEntropyLoss()
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = optim.Adam(params, lr=lr)

    save_path = os.path.join(os.getcwd(), "results/weights")
    os.makedirs(save_path, exist_ok=True)

    # Training: at the start of each epoch, switch the model to training mode (model.train()),
    # compute the loss, and update the weights via backpropagation
    for epoch in range(epochs):
        model.train()
        correct_predictions = 0
        total_samples = 0
        train_progress = tqdm(train_loader, file=sys.stdout, ncols=100)
        for inputs, labels in train_progress:
            inputs = inputs.to(device)
            labels = labels.squeeze(-1).to(device)  # shape (batch,), as CrossEntropyLoss expects
            # Accumulate the sample count over all batches, not just the last one
            total_samples += inputs.shape[0]

            optimizer.zero_grad()
            outputs = model(inputs)
            predicted_labels = torch.argmax(outputs, dim=1)
            correct_predictions += torch.eq(predicted_labels, labels).sum().item()

            loss = loss_function(outputs, labels)
            loss.backward()
            optimizer.step()

            train_progress.set_description(f"train epoch[{epoch + 1}/{epochs}] loss:{loss.item():.3f}")

        # Plain Python float, so it can be formatted with :.3f
        train_accuracy = correct_predictions / total_samples

        # Validation: at the end of each epoch, compute validation accuracy with evaluate
        val_accuracy = evaluate(model, val_loader, device)
        print(f"train epoch[{epoch + 1}/{epochs}] loss:{loss.item():.3f} "
              f"train_acc:{train_accuracy:.3f} val_acc:{val_accuracy:.3f}")

        # Save the model weights at the end of every epoch (overwrites the previous file)
        torch.save(model.state_dict(), os.path.join(save_path, "nn.pth"))

    print("Training completed.")

    test_accuracy = evaluate(model, test_loader, device)
    print(f"test_acc: {test_accuracy}")


if __name__ == "__main__":
    main(lr=0.005, epochs=20)
```
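`nn.CrossEntropyLoss` takes the raw logits returned by `forward` together with integer class labels. Internally it applies log-softmax, then averages the negative log-likelihood of the correct class over the batch (with the default `reduction='mean'`).

Below is a minimal NumPy sketch of that computation, for intuition only. The function name and inputs are illustrative, and options such as `weight` and `label_smoothing` are omitted.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean negative log-likelihood of the true class after a numerically stable log-softmax."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract the row max for stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    n = logits.shape[0]
    return -log_probs[np.arange(n), labels].mean()


# Equal logits over 3 classes give each class probability 1/3, so the loss is log(3)
print(cross_entropy([[0.0, 0.0, 0.0]], [2]))  # about 1.0986
```

A confident correct prediction drives the loss toward 0, and a confident wrong one makes it large. Minimizing this loss is what pushes the logits of the true class up.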

Run results