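OpenEBS Storage

- 1. Introduction to OpenEBS

- 2. Volume types

- 3. Local volume storage engines

- 4. Replicated volume storage engines

- 4.1 How replicated volumes work

- 4.2 Advantages of replicated volumes

- 5. Choosing an OpenEBS storage engine

- 6. Deploying OpenEBS on Kubernetes

- 6.1 Download the YAML and create the resources

- 6.2 Check the status of the created resources

- 7. Local PV hostpath dynamic provisioning

- 7.1 Create a hostpath test pod

- 7.2 Check pod, PV, and PVC status

- 8. Local PV device dynamic provisioning

- 8.1 Configure the device StorageClass

- 8.2 Create the storage resources

- 8.3 Prepare an unused disk and label it

- 8.4 Create a device test pod

- 8.5 Check pod and PVC status

- 9. ZFS Local PV

- 9.1 Install ZFS on the Kubernetes storage nodes

- 9.2 Create a storage pool from a disk (all nodes)

- 9.3 Deploy the openebs-zfs service on Kubernetes

- 9.4 Create the StorageClass

- 9.5 Create a test PVC

- 9.6 Check the PVC binding status

- 10. LVM Local PV

- 10.1 Create a volume group from a disk

- 10.2 Deploy the openebs-lvm service on Kubernetes

- 10.3 Check the service status

- 10.4 Create the StorageClass

- 10.5 Create a test pod that uses the LVM PVC

- 10.6 Check the PVC binding status

- 11. Rawfile Local PV

- 12. Mayastor

- 12.1 Set up Helm

- 12.2 Install Mayastor

- 13. cStor

- 13.1 Install the iSCSI service on the Kubernetes storage nodes (all storage nodes)

- 13.2 Check the OpenEBS NDM component

- 13.3 Install the cStor operators and CSI driver components

- 13.4 Check that the block devices were created

- 13.5 Create the storage pool

- 13.6 Create the StorageClass

- 13.7 Create a test pod and PVC

- 14. Jiva

- 14.1 Install the iSCSI service and load the kernel module (all storage nodes)

- 14.2 Deploy Jiva on Kubernetes

- 14.3 Jiva volume policy parameters

- 14.4 Create the StorageClass

- 14.5 Create a test pod that uses the PVC

- 14.6 Verify the volume status

- 14.7 Check pod and PVC status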

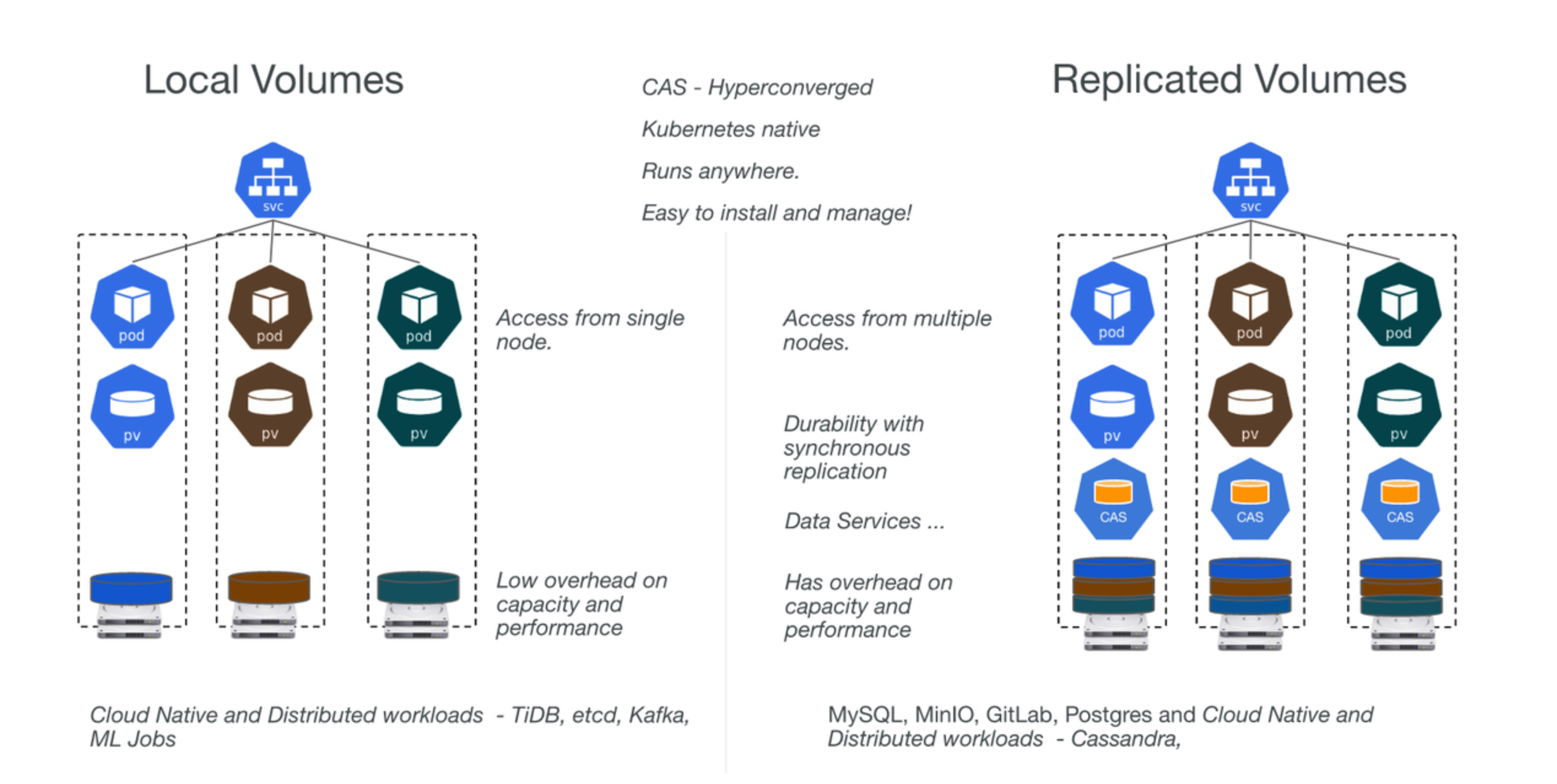

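1. Introduction to OpenEBS

OpenEBS is a set of storage engines that turn any storage available on worker nodes into local or distributed Kubernetes persistent volumes. At a high level, OpenEBS supports two broad categories of volumes: local volumes and replicated volumes.

OpenEBS is a Kubernetes-native hyperconverged storage solution. It manages the local storage available on nodes and provides local or highly available distributed persistent volumes for stateful workloads. Another advantage of being fully Kubernetes-native is that administrators and developers can interact with and manage OpenEBS using the tools already available for Kubernetes, such as kubectl, Helm, Prometheus, Grafana, and Weave Scope, and can deploy Kubernetes stateful workloads that need fast, highly durable, reliable, and scalable storage.

2. Volume types

Local volumes: a local volume exposes a data disk attached to a worker node directly as a persistent volume, which pods on that same node can mount.

Replicated volumes: OpenEBS uses its internal engines to create a microservice for each replicated volume. Stateful services write data to the OpenEBS engine, which synchronously replicates it to multiple nodes in the cluster, providing high availability.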

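3. Local volume storage engines

Local PV hostpath

Uses a specified local directory.

Local PV device

Defines which devices may be used to create local PVs by configuring disk identification information.

ZFS Local PV (Kubernetes 1.20+)

Creates storage pools through ZFS and configures volumes such as striped or mirrored volumes.

LVM Local PV (Kubernetes 1.20+)

Creates storage volume groups through LVM.

Rawfile Local PV (Kubernetes 1.21+)

4. Replicated volume storage engines

Mayastor

cStor (Kubernetes 1.21+)

Jiva (stable from 3.2.0, for Kubernetes 1.21+)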

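4.1 How replicated volumes work

OpenEBS uses one of its engines (Mayastor, cStor, or Jiva) to create a microservice for each distributed persistent volume.

A pod writes data to the OpenEBS engine, and the engine synchronously replicates the data to multiple nodes in the cluster.

The OpenEBS engine itself is deployed as pods and orchestrated by Kubernetes. When the node running a pod fails, the pod is rescheduled onto another node in the cluster, and OpenEBS serves the data from the replicas available on the other nodes.

4.2 Advantages of replicated volumes

OpenEBS distributed block volumes are called replicated volumes to avoid confusion with traditional distributed block storage, which tends to spread data across many nodes in the cluster. Replicated volumes are designed for cloud-native stateful workloads that need a large number of volumes, each with a capacity that a single node can usually provide, rather than a single large volume sharded across multiple nodes in the cluster.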

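5. Choosing an OpenEBS storage engine

| Application requirements | Storage type | OpenEBS volume type |
|---|---|---|
| Low latency, high availability, synchronous replication, snapshots, clones, thin provisioning | SSDs / cloud volumes | OpenEBS Mayastor |
| High availability, synchronous replication, snapshots, clones, thin provisioning | HDDs / SSDs / cloud volumes | OpenEBS cStor |
| High availability, synchronous replication, thin provisioning | Hostpath or externally mounted storage | OpenEBS Jiva |
| Low latency, local PV | Hostpath or externally mounted storage | Dynamic Local PV - Hostpath, Dynamic Local PV - Rawfile |
| Low latency, local PV | Local block devices (HDDs / SSDs / cloud volumes) | Dynamic Local PV - Device |
| Low latency, local PV, snapshots, clones | Local block devices (HDDs / SSDs / cloud volumes) | OpenEBS Dynamic Local PV - ZFS, OpenEBS Dynamic Local PV - LVM |

Summary:

Multi-node environment with extra block devices (not the system disk) available as data disks: choose OpenEBS Mayastor or OpenEBS cStor.

Multi-node environment with no extra block devices, only the single system disk: choose OpenEBS Jiva.

Single-node environment: local paths are recommended (Dynamic Local PV - Hostpath or Dynamic Local PV - Rawfile), since single-node setups are mostly used for testing and have lower data reliability requirements.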
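The three rules of thumb in the summary can be written down as a small decision function. This is only a sketch: `choose_engine` and its two arguments (node count, and `yes`/`no` for whether spare non-system block devices exist) are hypothetical names introduced here, and the function encodes nothing beyond the summary itself.

```shell
#!/usr/bin/env bash
# choose_engine NODE_COUNT HAS_EXTRA_DISK
# Hypothetical helper (not part of OpenEBS). HAS_EXTRA_DISK is "yes" or "no".
# Prints the engine family suggested by the summary for that environment.
choose_engine() {
  local nodes="$1" extra_disk="$2"
  if [ "$nodes" -le 1 ]; then
    # Single node: usually a test setup, local paths are enough.
    echo "Dynamic Local PV - Hostpath / Rawfile"
  elif [ "$extra_disk" = "yes" ]; then
    # Several nodes with dedicated data disks.
    echo "Mayastor / cStor"
  else
    # Several nodes, but only the system disk is available.
    echo "Jiva"
  fi
}

choose_engine 3 yes
```

The table above still matters for the finer choices (for example snapshots and clones), which this sketch deliberately ignores.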

6. Deploying OpenEBS on Kubernetes

6.1 Download the YAML and create the resources

mkdir openebs && cd openebs

wget https://openebs.github.io/charts/openebs-operator.yaml

kubectl apply -f openebs-operator.yaml

To change the default hostpath storage path, edit the openebs-hostpath StorageClass in openebs-operator.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-hostpath
  annotations:
    openebs.io/cas-type: local
    cas.openebs.io/config: |
      # hostpath type will create a PV by
      # creating a sub-directory under the
      # BASEPATH provided below.
      - name: StorageType
        value: "hostpath"
      # Specify the location (directory) where
      # PV (volume) data will be saved.
      # A sub-directory with pv-name will be
      # created. When the volume is deleted,
      # the PV sub-directory will be deleted.
      # Default value is /var/openebs/local
      - name: BasePath
        value: "/var/openebs/local/"  # default storage path

6.2 Check the status of the created resources

kubectl get storageclasses,pod,svc -n openebs

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

storageclass.storage.k8s.io/local-storage kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 372d

storageclass.storage.k8s.io/nfs nfs Delete Immediate true 311d

storageclass.storage.k8s.io/nfs-storageclass (default) fuseim.pri/ifs Retain Immediate true 314d

storageclass.storage.k8s.io/openebs-device openebs.io/local Delete WaitForFirstConsumer false 3m41s

storageclass.storage.k8s.io/openebs-hostpath openebs.io/local Delete WaitForFirstConsumer false 3m41s

NAME READY STATUS RESTARTS AGE

pod/openebs-localpv-provisioner-6779c77b9d-gr9gn 1/1 Running 0 3m41s

pod/openebs-ndm-cluster-exporter-5bbbcd59d4-4nmjl 1/1 Running 0 3m41s

pod/openebs-ndm-llc54 1/1 Running 0 3m41s

pod/openebs-ndm-node-exporter-698gw 1/1 Running 0 3m41s

pod/openebs-ndm-node-exporter-z522n 1/1 Running 0 3m41s

pod/openebs-ndm-operator-d8797fff9-jzrp7 1/1 Running 0 3m41s

pod/openebs-ndm-prk5g 1/1 Running 0 3m41s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/openebs-ndm-cluster-exporter-service ClusterIP None <none> 9100/TCP 3m41s

service/openebs-ndm-node-exporter-service ClusterIP None <none> 9101/TCP 3m41s

# Optional: make the hostpath local-volume StorageClass the default

#kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
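Reading the pod list by eye works for a handful of pods. For a scripted check, the sketch below counts pods that are not yet `Running`. `count_not_running` is a hypothetical helper introduced here, not a kubectl or OpenEBS command. It assumes the default column order NAME READY STATUS RESTARTS AGE that `kubectl get pod --no-headers` prints.

```shell
#!/usr/bin/env bash
# count_not_running: hypothetical helper.
# Reads `kubectl get pod --no-headers` output on stdin
# (columns: NAME READY STATUS RESTARTS AGE) and prints the number
# of pods whose STATUS column is anything other than "Running".
count_not_running() {
  awk 'NF >= 3 && $3 != "Running" { n++ } END { print n + 0 }'
}

# Demo on captured text instead of a live cluster (second line is made up):
sample='openebs-localpv-provisioner-6779c77b9d-gr9gn 1/1 Running 0 3m41s
openebs-ndm-llc54 0/1 ContainerCreating 0 5s'
printf '%s\n' "$sample" | count_not_running   # prints 1
```

Against a real cluster this would be used as `kubectl get pod -n openebs --no-headers | count_not_running`. Note that pods in `Completed` state are also counted as not running.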

7. Local PV hostpath dynamic provisioning

7.1 Create a hostpath test pod

kubectl apply -f demo-openebs-hostpath.yaml

Contents of demo-openebs-hostpath.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    name: nginx
  namespace: openebs
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - resources:
          limits:
            cpu: 0.5
        name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: http
        volumeMounts:
        - mountPath: /usr/share/nginx/html/
          name: demo-nginx
      volumes:
      - name: demo-nginx
        persistentVolumeClaim:
          claimName: demo-vol1-claim
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  namespace: openebs
  name: demo-vol1-claim
spec:
  storageClassName: openebs-hostpath
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1G
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: openebs  # same namespace as the Deployment, so the selector can match its pods
  labels:
    name: nginx
spec:
  ports:
  - port: 80
    targetPort: 80
  selector:
    name: nginx

7.2 Check pod, PV, and PVC status

kubectl get pod,pv,pvc -n openebs

NAME READY STATUS RESTARTS AGE

pod/nginx-5b56fb9c85-jl4wl 1/1 Running 0 106s

persistentvolume/pvc-658df0cf-932a-49bf-a237-c4fadcbd143b 1G RWO Delete Bound openebs/demo-vol1-claim openebs-hostpath 96s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/demo-vol1-claim Bound pvc-658df0cf-932a-49bf-a237-c4fadcbd143b 1G RWO openebs-hostpath 26s

8. Local PV device dynamic provisioning

8.1 Configure the device StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-device
  annotations:
    openebs.io/cas-type: local
    # cas.openebs.io/config: |
    #   - name: StorageType
    #     value: "device"
parameters:
  devname: "test-device"  # storage is taken from block devices that carry this disk label
provisioner: openebs.io/local
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete

8.2 Create the storage resources

kubectl apply -f openebs-device.yaml

kubectl get storageclasses.storage.k8s.io

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

openebs-device openebs.io/local Delete WaitForFirstConsumer false 5m35s

openebs-hostpath openebs.io/local Delete WaitForFirstConsumer false 23m

8.3 Prepare an unused disk and label it

parted /dev/sdb mklabel gpt

parted /dev/sdb mkpart test-device 1MiB 10MiB

8.4 Create a device test pod

apiVersion: v1
kind: Pod
metadata:
  name: hello-local-device-pod
spec:
  volumes:
  - name: local-storage
    persistentVolumeClaim:
      claimName: local-device-pvc
  containers:
  - name: hello-container
    image: busybox
    command:
    - sh
    - -c
    - 'while true; do echo "`date` [`hostname`] Hello from OpenEBS Local PV." >> /mnt/store/greet.txt; sleep $(($RANDOM % 5 + 300)); done'
    volumeMounts:
    - mountPath: /mnt/store
      name: local-storage
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: local-device-pvc
spec:
  storageClassName: openebs-device  # must match the StorageClass name; see kubectl get storageclasses.storage.k8s.io
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5G

8.5 Check pod and PVC status

[root@master1 openebs]# kubectl get pod,pvc

NAME READY STATUS RESTARTS AGE

pod/hello-local-device-pod 1/1 Running 0 14m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/local-device-pvc Bound pvc-2551b29f-3056-4b32-9004-75f52557a766 5G RWO openebs-device 19m

9. ZFS Local PV

9.1 Install ZFS on the Kubernetes storage nodes

cat /etc/redhat-release    # on CentOS, adjust el7_6 below to match the release shown here

yum install http://download.zfsonlinux.org/epel/zfs-release.el7_6.noarch.rpm -y

vi /etc/yum.repos.d/zfs.repo

# In the [zfs-kmod] section, change enabled=0 to enabled=1 (it is disabled by default)
[zfs-kmod]
name=ZFS on Linux for EL7 - kmod
baseurl=http://download.zfsonlinux.org/epel/7.6/kmod/$basearch/
enabled=1
metadata_expire=7d
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-zfsonlinux

yum -y install zfs

9.2 Create a storage pool from a disk (all nodes)

zpool create zfspv-pool /dev/sdc

zpool list    # list the storage pools

NAME SIZE ALLOC FREE EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zfspv-pool 19.9G 106K 19.9G - 0% 0% 1.00x ONLINE -
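Because the pool has to exist on every storage node, the health check is worth repeating on each one. The sketch below filters the script-friendly output of `zpool list -H -o name,health` (tab-separated, no header) and prints any pool that is not `ONLINE`. The helper name `unhealthy_pools` is hypothetical and introduced only for this illustration.

```shell
#!/usr/bin/env bash
# unhealthy_pools: hypothetical helper.
# Reads `zpool list -H -o name,health` output on stdin, i.e. one pool per
# line as "NAME<TAB>HEALTH", and prints the name of every pool whose
# health is not ONLINE. Prints nothing when all pools are healthy.
unhealthy_pools() {
  awk -F '\t' 'NF >= 2 && $2 != "ONLINE" { print $1 }'
}

# Demo on made-up input; "scratch" is an invented pool name:
printf 'zfspv-pool\tONLINE\nscratch\tDEGRADED\n' | unhealthy_pools   # prints scratch
```

On a node, the real invocation would be `zpool list -H -o name,health | unhealthy_pools`.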

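9.3 Deploy the openebs-zfs service on Kubernetes

wget https://openebs.github.io/charts/zfs-operator.yaml

sed -i 's/registry.k8s.io\/sig-storage/registry.aliyuncs.com\/google_containers/' zfs-operator.yaml    # pull images from a mirror registry reachable from mainland China

kubectl apply -f zfs-operator.yaml

kubectl get pods -n kube-system -l role=openebs-zfs    # check that the pods are running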

NAME READY STATUS RESTARTS AGE

openebs-zfs-controller-0 5/5 Running 0 3m13s

openebs-zfs-node-h5t6b 2/2 Running 0 2m29s

9.4 Create the StorageClass

kubectl apply -f zfs-storage.yaml

Contents of zfs-storage.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-zfspv
parameters:
  recordsize: "4k"
  compression: "off"
  dedup: "off"
  fstype: "zfs"
  poolname: "zfspv-pool"  # name of the storage pool created earlier
provisioner: zfs.csi.openebs.io

9.5 Create a test PVC

kubectl apply -f zfs-pvc.yaml

Contents of zfs-pvc.yaml:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: csi-zfspv
  namespace: openebs
spec:
  storageClassName: openebs-zfspv
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi

9.6 Check the PVC binding status

kubectl get pvc csi-zfspv -n openebs

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

csi-zfspv Bound pvc-32775579-e3bd-4fda-a98c-5161d8970528 4Gi RWO openebs-zfspv 103m

10. LVM Local PV

10.1 Create a volume group from a disk

pvcreate /dev/sdc

vgcreate lvmvg /dev/sdc

10.2 Deploy the openebs-lvm service on Kubernetes

wget https://openebs.github.io/charts/lvm-operator.yaml

sed -i 's/registry.k8s.io\/sig-storage/registry.aliyuncs.com\/google_containers/' lvm-operator.yaml

kubectl apply -f lvm-operator.yaml

10.3 Check the service status

kubectl get pod -A -l role=openebs-lvm

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system openebs-lvm-controller-0 5/5 Running 0 6m19s

kube-system openebs-lvm-node-7qn5p 2/2 Running 4 (6m40s ago) 7m

10.4 Create the StorageClass

kubectl apply -f lvm-storage.yaml

cat lvm-storage.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-lvmpv
parameters:
  storage: "lvm"
  volgroup: "lvmvg"  # name of the volume group created earlier
provisioner: local.csi.openebs.io
#allowedTopologies:
#- matchLabelExpressions:
#  - key: kubernetes.io/hostname
#    values:
#    - lvmpv-node1
#    - lvmpv-node2
# If enabled, the allowedTopologies block above declares that "lvmvg" is available only on nodes lvmpv-node1 and lvmpv-node2; the LVM driver then creates volumes only on those nodes.

10.5 Create a test pod that uses the LVM PVC

apiVersion: v1
kind: Pod
metadata:
  name: fio
spec:
  restartPolicy: Never
  containers:
  - name: perfrunner
    image: openebs/tests-fio
    command: ["/bin/bash"]
    args: ["-c", "while true ;do sleep 50; done"]
    volumeMounts:
    - mountPath: /datadir
      name: fio-vol
    tty: true
  volumes:
  - name: fio-vol
    persistentVolumeClaim:
      claimName: csi-lvmpv
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: csi-lvmpv
spec:
  storageClassName: openebs-lvmpv
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi

10.6 Check the PVC binding status

kubectl get pvc csi-lvmpv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

csi-lvmpv Bound pvc-2ddb8407-03f6-4af0-9d82-f95e567c3b32 4Gi RWO openebs-lvmpv 9s

11. Rawfile Local PV

12. Mayastor

Requirements:

Helm 3.7 or later

Linux kernel 5.13 or later

Kernel modules loaded: nvme-tcp, ext4, and optionally XFS

Minimum resources: 1 CPU core and 2 GB of memory
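The kernel requirement is easy to miss on older distributions, so it may help to check it before installing. The sketch below compares two version strings with GNU `sort -V`. The function name `version_at_least` is hypothetical, and the approach assumes GNU coreutils is available on the node.

```shell
#!/usr/bin/env bash
# version_at_least HAVE WANT
# Hypothetical helper: succeeds (exit 0) when version HAVE >= WANT.
# It sorts the two strings in version order and checks that WANT comes first.
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Demo with fixed strings:
if version_at_least "5.15.0" "5.13"; then
  echo "5.15.0 satisfies the 5.13 minimum"
fi
if ! version_at_least "3.10.0" "5.13"; then
  echo "3.10.0 is too old"
fi
```

On a node, the running kernel can be tested with `version_at_least "$(uname -r | cut -d- -f1)" 5.13`. The stock CentOS 7 kernel series is 3.10, so nodes like the ones used in the ZFS section would need a newer kernel first.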

12.1 Set up Helm

wget https://get.helm.sh/helm-v3.9.4-linux-amd64.tar.gz

tar xf helm-v3.9.4-linux-amd64.tar.gz

cp linux-amd64/helm /usr/bin/

12.2 Install Mayastor

helm repo add openebs https://openebs.github.io/charts

helm repo update

helm repo list

helm install openebs --namespace openebs openebs/openebs --set mayastor.enabled=true --create-namespace

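13. cStor

13.1 Install the iSCSI service on the Kubernetes storage nodes (all storage nodes)

Running cStor on at least 3 nodes is recommended for high availability.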

yum install iscsi-initiator-utils -y

systemctl enable iscsid.service --now

#sudo systemctl stop zfs-import-scan.service

#sudo systemctl disable zfs-import-scan.service

13.2 Check the OpenEBS NDM component

The NDM pods are created automatically by the OpenEBS deployment; one runs on each node.

kubectl -n openebs get pods -l openebs.io/component-name=ndm -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

openebs-ndm-dq958 1/1 Running 0 4m5s 10.98.4.2 node1.ale.org <none> <none>

openebs-ndm-qntzn 1/1 Running 0 4m5s 10.98.4.3 node2.ale.org <none> <none>

openebs-ndm-wbv22 1/1 Running 0 54s 10.98.4.1 master1.ale.org <none> <none>

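13.3 Install the cStor operators and CSI driver components

Either Helm or the plain YAML manifest can be used (the YAML manifest is recommended). The Helm commands are shown first; the YAML alternative follows, commented out.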

helm repo add openebs-cstor https://openebs.github.io/cstor-operators

helm repo update

helm install openebs-cstor openebs-cstor/cstor -n openebs --create-namespace

#wget https://openebs.github.io/charts/cstor-operator.yaml

#kubectl apply -f cstor-operator.yaml

kubectl get pod -n openebs

NAME READY STATUS RESTARTS AGE

openebs-cstor-admission-server-6bf8f59b76-kcb5p 1/1 Running 0 18m

openebs-cstor-csi-controller-0 6/6 Running 0 51s

openebs-cstor-csi-node-24xv6 2/2 Running 0 18m

openebs-cstor-csi-node-pf4tt 2/2 Running 0 18m

openebs-cstor-csi-node-pvmhv 2/2 Running 0 18m

openebs-cstor-cspc-operator-54c867b7b5-vqmnm 1/1 Running 0 18m

openebs-cstor-cvc-operator-5557b65896-t4r7b 1/1 Running 0 18m

openebs-cstor-openebs-ndm-2k449 1/1 Running 0 18m

openebs-cstor-openebs-ndm-7rfmr 1/1 Running 0 18m

openebs-cstor-openebs-ndm-gvc7v 1/1 Running 0 18m

openebs-cstor-openebs-ndm-operator-667cc5bb79-486jc 1/1 Running 0 18m

openebs-localpv-provisioner-64478448d5-27d9t 1/1 Running 0 24m

openebs-ndm-cluster-exporter-5bbbcd59d4-tvfqp 1/1 Running 0 24m

openebs-ndm-dq958 1/1 Running 0 24m

openebs-ndm-node-exporter-4k9lm 1/1 Running 0 24m

openebs-ndm-node-exporter-7gjdh 1/1 Running 0 24m

openebs-ndm-node-exporter-v6zwv 1/1 Running 0 21m

openebs-ndm-operator-5b4b976495-4pm46 1/1 Running 0 24m

openebs-ndm-qntzn 1/1 Running 0 24m

openebs-ndm-wbv22 1/1 Running 0 21m

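13.4 Check that the block devices were created

OpenEBS discovers every disk on the underlying nodes except the system installation disk.

On master1, sdb and sdc appear as BlockDevice (bd) resources.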

[root@master1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─centos-root 253:0 0 47G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm

sdb 8:16 0 20G 0 disk

└─sdb1 8:17 0 20G 0 part

sdc 8:32 0 20G 0 disk

└─sdc1 8:33 0 20G 0 part

sr0 11:0 1 1024M 0 rom

[root@master1 ~]# kubectl get bd -A

NAMESPACE NAME NODENAME SIZE CLAIMSTATE STATUS AGE

openebs blockdevice-132b97bf1539798a611ebb52ba751f9b node1.ale.org 21473771008 Claimed Active 17h

openebs blockdevice-210cdc3db63530e8f31d613eba0a8e9a master1.ale.org 21473771008 Unclaimed Active 21s

openebs blockdevice-bc67cbdd43719bd9fa8ae6adc16fb2e6 node2.ale.org 21473771008 Claimed Active 17h

openebs blockdevice-ff23509cea3e5f6ee8679d38f8b33595 master1.ale.org 21473771008 Claimed Active 17h

kubectl get bd -n openebs

NAME NODENAME SIZE CLAIMSTATE STATUS AGE

blockdevice-132b97bf1539798a611ebb52ba751f9b node1.ale.org 21473771008 Unclaimed Inactive 23m

blockdevice-bc67cbdd43719bd9fa8ae6adc16fb2e6 node2.ale.org 21473771008 Unclaimed Active 24m

blockdevice-ff23509cea3e5f6ee8679d38f8b33595 master1.ale.org 21473771008 Unclaimed Inactive 20m
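When picking devices for a pool, the rows of interest are the ones that are both `Unclaimed` and `Active`. This is a working assumption for the sketch; the OpenEBS cStor documentation has the exact prerequisites. The helper below filters the `kubectl get bd` listing accordingly. `unclaimed_active_bds` is a hypothetical name, and it relies on the column order NAME NODENAME SIZE CLAIMSTATE STATUS AGE shown above.

```shell
#!/usr/bin/env bash
# unclaimed_active_bds: hypothetical helper.
# Reads `kubectl get bd -n openebs --no-headers` output on stdin
# (columns: NAME NODENAME SIZE CLAIMSTATE STATUS AGE) and prints
# "NODENAME NAME" for each device that is Unclaimed and Active.
unclaimed_active_bds() {
  awk '$4 == "Unclaimed" && $5 == "Active" { print $2, $1 }'
}

# Demo on the three rows from the listing above:
sample='blockdevice-132b97bf1539798a611ebb52ba751f9b node1.ale.org 21473771008 Unclaimed Inactive 23m
blockdevice-bc67cbdd43719bd9fa8ae6adc16fb2e6 node2.ale.org 21473771008 Unclaimed Active 24m
blockdevice-ff23509cea3e5f6ee8679d38f8b33595 master1.ale.org 21473771008 Unclaimed Inactive 20m'
printf '%s\n' "$sample" | unclaimed_active_bds
```

With a live cluster the input would come from `kubectl get bd -n openebs --no-headers`. Printing the node name first makes it easy to pair each device with the matching `nodeSelector` hostname.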

13.5 Create the storage pool

kubectl apply -f cspc-single.yaml

Contents of cspc-single.yaml:

apiVersion: cstor.openebs.io/v1
kind: CStorPoolCluster
metadata:
  name: cstor-storage
  namespace: openebs
spec:
  pools:
    - nodeSelector:
        kubernetes.io/hostname: "master1.ale.org"  # node label selector
      dataRaidGroups:
        - blockDevices:
            - blockDeviceName: "blockdevice-ff23509cea3e5f6ee8679d38f8b33595"  # name of the bd resource on this node
      poolConfig:
        dataRaidGroupType: "stripe"
    - nodeSelector:
        kubernetes.io/hostname: "node1.ale.org"
      dataRaidGroups:
        - blockDevices:
            - blockDeviceName: "blockdevice-132b97bf1539798a611ebb52ba751f9b"
      poolConfig:
        dataRaidGroupType: "stripe"
    - nodeSelector:
        kubernetes.io/hostname: "node2.ale.org"
      dataRaidGroups:
        - blockDevices:
            - blockDeviceName: "blockdevice-bc67cbdd43719bd9fa8ae6adc16fb2e6"
      poolConfig:
        dataRaidGroupType: "stripe"

# Check the status of the storage pool cluster and its pool instances

kubectl get cspc -n openebs

NAME HEALTHYINSTANCES PROVISIONEDINSTANCES DESIREDINSTANCES AGE

cstor-storage 3 3 14s

kubectl get cspi -n openebs

NAME HOSTNAME FREE CAPACITY READONLY PROVISIONEDREPLICAS HEALTHYREPLICAS STATUS AGE

cstor-storage-cx7k node1.ale.org 0 0 false 0 0 80s

cstor-storage-nmws node2.ale.org 0 0 false 0 0 79s

cstor-storage-zntk master1.ale.org 0 0 false 0 0 81s

13.6 Create the StorageClass

kubectl apply -f csi-cspc-sc.yaml

Contents of csi-cspc-sc.yaml:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: cstor-csi  # StorageClass name
provisioner: cstor.csi.openebs.io
allowVolumeExpansion: true  # enable volume expansion
parameters:
  cas-type: cstor
  # cstorPoolCluster should have the name of the CSPC
  cstorPoolCluster: cstor-storage  # storage pool name
  # replicaCount should be <= no. of CSPI
  replicaCount: "3"
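The comment in the manifest states that `replicaCount` must not exceed the number of pool instances (CSPI). A quick pre-flight check for that rule can be sketched as follows. `replica_count_ok` is a hypothetical helper that counts the rows of `kubectl get cspi -n openebs --no-headers` and compares the total with the desired replica count.

```shell
#!/usr/bin/env bash
# replica_count_ok REPLICAS
# Hypothetical helper. Reads `kubectl get cspi -n openebs --no-headers`
# output on stdin (one pool instance per non-empty line) and succeeds
# only when REPLICAS is less than or equal to the number of instances.
replica_count_ok() {
  local replicas="$1" pools
  pools=$(awk 'NF > 0 { n++ } END { print n + 0 }')
  [ "$replicas" -le "$pools" ]
}

# Demo: three pool instances, as in the cluster above
sample='cstor-storage-cx7k node1.ale.org 0 0 false 0 0 80s
cstor-storage-nmws node2.ale.org 0 0 false 0 0 79s
cstor-storage-zntk master1.ale.org 0 0 false 0 0 81s'
if printf '%s\n' "$sample" | replica_count_ok 3; then
  echo "replicaCount 3 fits the available pool instances"
fi
```

Against a live cluster: `kubectl get cspi -n openebs --no-headers | replica_count_ok 3`.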

13.7 Create a test pod and PVC

kubectl apply -f csi-cstor-pvc.yaml

Contents of csi-cstor-pvc.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - command:
    - sh
    - -c
    - 'date >> /mnt/openebs-csi/date.txt; hostname >> /mnt/openebs-csi/hostname.txt; sync; sleep 5; sync; tail -f /dev/null;'
    image: busybox
    imagePullPolicy: Always
    name: busybox
    volumeMounts:
    - mountPath: /mnt/openebs-csi
      name: demo-vol
  volumes:
  - name: demo-vol
    persistentVolumeClaim:
      claimName: demo-cstor-vol
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: demo-cstor-vol
spec:
  storageClassName: cstor-csi
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

# Check that the pod is running and the PVC is bound

kubectl get pod,pvc

NAME READY STATUS RESTARTS AGE

pod/busybox 1/1 Running 0 16m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/demo-cstor-vol Bound pvc-2e7af54f-e8cd-40c8-9486-1399591706e2 5Gi RWO cstor-csi 16m

# Check that the volume and its replicas are in the Healthy state

kubectl get cstorvolume -n openebs

NAME CAPACITY STATUS AGE

pvc-2e7af54f-e8cd-40c8-9486-1399591706e2 5Gi Healthy 16h

kubectl get cstorvolumereplica -n openebs

NAME ALLOCATED USED STATUS AGE

pvc-2e7af54f-e8cd-40c8-9486-1399591706e2-cstor-storage-cx7k 73K 5.07M Healthy 16h

pvc-2e7af54f-e8cd-40c8-9486-1399591706e2-cstor-storage-nmws 73K 5.07M Healthy 16h

pvc-2e7af54f-e8cd-40c8-9486-1399591706e2-cstor-storage-zntk 73K 5.07M Healthy 16h

# View the data written by the pod

kubectl exec -it busybox -- cat /mnt/openebs-csi/date.txt

Wed May 31 10:13:20 UTC 2023

Thu Jun 1 01:32:24 UTC 2023

# Default location of the storage pool on the node

ll /var/openebs/cstor-pool/cstor-storage/

total 4

-rw-r--r-- 1 root root 1472 Jun 1 09:31 pool.cache

-rw-r--r-- 1 root root 0 May 31 18:11 zrepl.lock

14. Jiva

14.1 Install the iSCSI service and load the kernel module (all storage nodes)

sudo yum install iscsi-initiator-utils -y

sudo systemctl enable --now iscsid

modprobe iscsi_tcp

echo iscsi_tcp >/etc/modules-load.d/iscsi-tcp.conf
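After `modprobe`, it can be useful to confirm on each storage node that the module is really loaded. The sketch below scans `/proc/modules`-style input, where the module name is the first field of each line. `module_loaded` is a hypothetical helper name. A module compiled directly into the kernel would not appear in that file, so a negative result is a hint rather than proof.

```shell
#!/usr/bin/env bash
# module_loaded NAME
# Hypothetical helper. Reads /proc/modules-style text on stdin (module
# name in the first column) and exits 0 if NAME is listed, 1 otherwise.
module_loaded() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# Demo on sample lines in /proc/modules format (values are illustrative):
sample='iscsi_tcp 20480 0 - Live 0x0000000000000000
libiscsi 57344 1 iscsi_tcp, Live 0x0000000000000000'
if printf '%s\n' "$sample" | module_loaded iscsi_tcp; then
  echo "iscsi_tcp is loaded"
fi
```

On a node, run it as `module_loaded iscsi_tcp < /proc/modules`.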

14.2 Deploy Jiva on Kubernetes

mkdir openebs && cd openebs

wget https://openebs.github.io/charts/openebs-operator.yaml

kubectl apply -f openebs-operator.yaml

kubectl apply -f https://openebs.github.io/charts/hostpath-operator.yaml

wget https://openebs.github.io/charts/jiva-operator.yaml

sed -i 's/k8s.gcr.io\/sig-storage/registry.aliyuncs.com\/google_containers/' jiva-operator.yaml

kubectl apply -f jiva-operator.yaml

# Check the pod status

kubectl get pod -n openebs

NAME READY STATUS RESTARTS AGE

jiva-operator-7747864f88-scxb9 1/1 Running 0 11m

openebs-jiva-csi-controller-0 5/5 Running 0 35s

openebs-jiva-csi-node-7rpp5 3/3 Running 0 4m39s

openebs-jiva-csi-node-8gnbx 3/3 Running 0 5m20s

openebs-jiva-csi-node-9fkdf 3/3 Running 0 5m20s

openebs-localpv-provisioner-846d97c485-xfktf 1/1 Running 0 12m

14.3 Jiva volume policy parameters

kubectl apply -f JivaVolumePolicy.yaml

Contents of JivaVolumePolicy.yaml:

apiVersion: openebs.io/v1alpha1
kind: JivaVolumePolicy
metadata:
  name: example-jivavolumepolicy
  namespace: openebs
spec:
  replicaSC: openebs-hostpath
  target:
    replicationFactor: 1
    # disableMonitor: false
    # auxResources:
    # tolerations:
    # resources:
    # affinity:
    # nodeSelector:
    # priorityClassName:
  # replica:
    # tolerations:
    # resources:
    # affinity:
    # nodeSelector:
    # priorityClassName:

14.4 Create the StorageClass

By default, volume data is stored on the node under /var/openebs/<pvc-*>.

kubectl apply -f jiva-sc.yaml

Contents of jiva-sc.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-jiva-csi-sc
provisioner: jiva.csi.openebs.io
allowVolumeExpansion: true
parameters:
  cas-type: "jiva"
  policy: "example-jivavolumepolicy"

[root@master1 ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

openebs-jiva-csi-sc jiva.csi.openebs.io Delete Immediate true 4s

14.5 Create a test pod that uses the PVC

kubectl apply -f jiva-pod.yaml

Contents of jiva-pod.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: fio
spec:
  selector:
    matchLabels:
      name: fio
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: fio
    spec:
      containers:
      - name: perfrunner
        image: openebs/tests-fio
        command: ["/bin/bash"]
        args: ["-c", "while true ;do sleep 50; done"]
        volumeMounts:
        - mountPath: /datadir
          name: fio-vol
      volumes:
      - name: fio-vol
        persistentVolumeClaim:
          claimName: example-jiva-csi-pvc
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: example-jiva-csi-pvc
spec:
  storageClassName: openebs-jiva-csi-sc
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi

14.6 Verify the volume status

kubectl get jivavolume -n openebs

NAME REPLICACOUNT PHASE STATUS

pvc-264e18d6-782f-41db-80f0-e2d7b2fa0d04 1 Ready RW

14.7 Check pod and PVC status

kubectl get pod,pvc

NAME READY STATUS RESTARTS AGE

pod/fio-5bd64c6b49-krwh6 1/1 Running 0 28m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/example-jiva-csi-pvc Bound pvc-264e18d6-782f-41db-80f0-e2d7b2fa0d04 4Gi RWO openebs-jiva-csi-sc 28m